GaitGAN: Invariant Gait Feature Extraction Using Generative Adversarial Networks

Shiqi Yu, Haifeng Chen

Computer Vision Institute, College of Computer Science and Software Engineering, Shenzhen University, China.

shiqi.yu@szu.edu.cn, chenhaifeng@email.szu.edu.cn

Edel B. García Reyes

Advanced Technologies Application Center, 7ma A 21406, Playa, Havana, Cuba.

egarcia@cenatav.co.cu

Norman Poh

Department of Computer Science, University of Surrey, Guildford, Surrey, GU2 7XH, United Kingdom.

n.poh@surrey.ac.uk

Abstract

The performance of gait recognition can be adversely affected by many sources of variation, such as view angle, clothing, the presence and type of bag, posture and occlusion, among others. To extract invariant gait features, we propose a method named GaitGAN, which is based on generative adversarial networks (GAN). In the proposed method, a GAN model is taken as a regressor to generate invariant gait images, that is, side-view images with normal clothing and without carrying bags. A unique advantage of this approach is that the view angle and other variations do not need to be known before generating the invariant gait images. The most important computational challenge, however, is how to retain useful identity information when generating the invariant gait images. To this end, our approach differs from the traditional GAN, which has only one discriminator, in that GaitGAN contains two discriminators. One is a fake/real discriminator, which encourages the generated gait images to be realistic. The other is an identification discriminator, which ensures that the generated gait images contain human identification information. Experimental results show that GaitGAN can achieve state-of-the-art performance. To the best of our knowledge, this is the first gait recognition method based on GAN with encouraging results. Nevertheless, we have identified several research directions to further improve GaitGAN.

1. Introduction

Gait is a behavioural biometric modality with great potential for person identification because of its unique advantages: it is contactless, hard to fake and passive in nature, requiring no explicit cooperation from the subjects. Furthermore, gait features can be captured at a distance in uncontrolled scenarios. Gait recognition therefore has potentially wide application in video surveillance. Indeed, many surveillance cameras have been installed in major cities around the world. With improved accuracy, gait recognition technology will certainly become another useful tool for crime prevention and forensic identification. Gait recognition is therefore an increasingly important research topic in the computer vision community.

Unfortunately, automatic gait recognition remains a challenging task because many variations, such as view, clothing and carried objects, can alter human appearance drastically and greatly affect recognition accuracy. Among these variations, view is one of the most common, because the walking directions of subjects cannot be controlled in real applications; it is the central focus of our work here.

Early work reported in [9] uses static body parameters measured from gait images as a kind of view-invariant feature. Kale et al. [10] used the perspective projection model to generate side-view features from arbitrary views. Unfortunately, the relation between two views is hard to model with a simple linear model such as the perspective projection model.

Some other researchers employed more complex models to handle this problem. The most commonly used model is the view transformation model (VTM), which transforms gait features from one view to another. Makihara et al. [15] designed a VTM named FD-VTM, which works in the frequency domain. Different from FD-VTM, RSVD-VTM, proposed in [11], operates in the spatial domain. It uses reduced SVD to construct a VTM and optimizes Gait Energy Image (GEI) feature vectors based on linear discriminant analysis (LDA), and has achieved a relatively good improvement. Motivated by the capability of robust principal component analysis (RPCA) for feature extraction, Zheng et al. [23] established a robust VTM via RPCA for view-invariant feature extraction. Kusakunniran et al. [12] considered view transformation as a regression problem and used sparse regression based on the elastic net as the regression function. Bashir et al. [1] formulated a Gaussian process classification framework to estimate the view angle in the probe set, and then used canonical correlation analysis (CCA) to model the correlation of gait sequences from different, arbitrary views. Luo et al. [14] proposed a gait recognition method based on partitioning and CCA: they separated the GEI into 5 non-overlapping parts and used CCA to model the correlation of each part. In [19], Xing et al. also used CCA, but they reformulated the traditional CCA to deal with high-dimensional matrices, reducing the computational burden of view-invariant feature extraction. Lu et al. [13] proposed a method that handles arbitrary walking directions by cluster-based averaged gait images. However, if there are no views with a similar walking direction in the gallery set, the recognition rate decreases.

In most VTM-related methods [2, 3, 11, 12, 15, 23], one view transformation model can only transform one specific view angle to another. The model therefore depends heavily on the accuracy of view angle estimation, and transforming gait images from arbitrary angles to a specific view requires many models. Some other researchers have tried to achieve view invariance using only one model, such as Hu et al. [8], who proposed a method named ViDP that extracts view-invariant features using a linear transform. Wu et al. [18] trained deep convolutional neural networks for any view pairs and achieved high accuracies.

Besides view variations, clothing can also change the appearance and shape of the human body greatly. Some clothes, such as long overcoats, can occlude the leg motion. Carrying condition is another factor that can affect feature extraction, since it is not easy to segment the carried object from the human body in images. Unlike their view-invariant counterparts, few methods in the literature handle clothing invariance in gait recognition. In [7], clothing invariance is achieved by dividing the human body into 8 parts, each of which is subject to discrimination analysis. In [5], Guan et al. proposed a random subspace method (RSM) for clothing-invariant gait recognition by combining multiple inductive biases for classification. A recent method named SPAE [21] can extract invariant gait features using only one model.

In this paper, we propose to use generative adversarial networks (GAN) to solve the problems of variations due to view, clothing and carrying condition simultaneously using only one model. GAN, developed by Goodfellow et al. [4] in 2014, is inspired by the two-person zero-sum game in game theory and is composed of one generative model G and one discriminative model D. The generative model captures the distribution of the training data, and the discriminative model is a classifier that determines whether its input is real or generated. The optimization of these two models is a minimax two-player game. The generative model produces a realistic image from an input random vector z. However, the early GAN model is too unconstrained in what it generates. In [16], Mirza et al. fed a conditional parameter y into both the discriminator and the generator as an additional input layer to increase the constraint. Meanwhile, Denton et al. proposed a method using a cascade of convolutional networks within a Laplacian pyramid framework to generate images in a coarse-to-fine fashion. In practice, GANs are hard to train and the generators often produce nonsensical outputs. Radford et al. proposed Deep Convolutional GAN [17], which contains a series of strategies, such as fractional-strided convolutions and batch normalization, to make GAN training more stable. Recently, Yoo et al. [20] presented an image-conditional generation model containing a vital component named the domain discriminator, which ensures that a generated image is relevant to its input image. Furthermore, this method performs domain transfer using GANs at the pixel level and is therefore known as pixel-level domain transfer GAN, or PixelDTGAN [20].

In the proposed method, GAN is taken as a regressor. Gait data captured with multiple variations can be transformed into the side view without knowing the specific view angle, clothing type or carried object. This gives the method great potential in real-world scenarios.

The rest of the paper is organized as follows. Section 2 describes the proposed method. Experiments and evaluation are presented in Section 3. The last section, Section 4, gives the conclusions and identifies future work.

2. Proposed method

To reduce the effect of variations, GAN is employed as a regressor to generate invariant gait images, that is, side-view gait images with normal clothing and without carried objects. Gait images at arbitrary views are converted to the side view because side-view data contain more dynamic information. While this is intuitively appealing, a key challenge that must be addressed is to preserve the human identification information in the generated gait images.

The GaitGAN model is trained, using data from the training set, to generate gait images at the side view with normal clothing and without carried objects. In the test phase, gait images are fed to the GAN model, and invariant gait images containing human identification information are generated. The difference between the proposed method and most other GAN-related methods is that the generated images here help to improve the discriminant capability, rather than merely looking realistic. The most challenging aspect of the proposed method is to preserve human identification when generating realistic gait images.

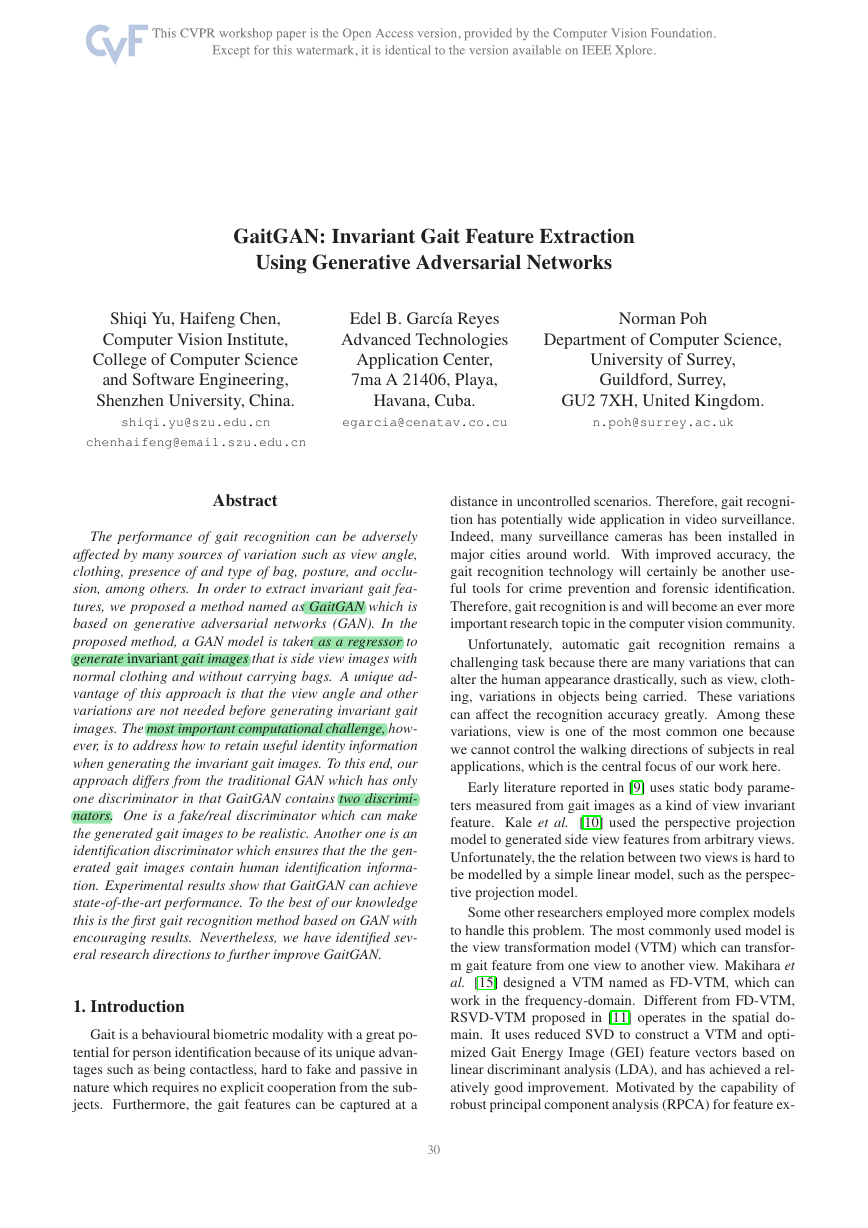

2.1. Gait energy image

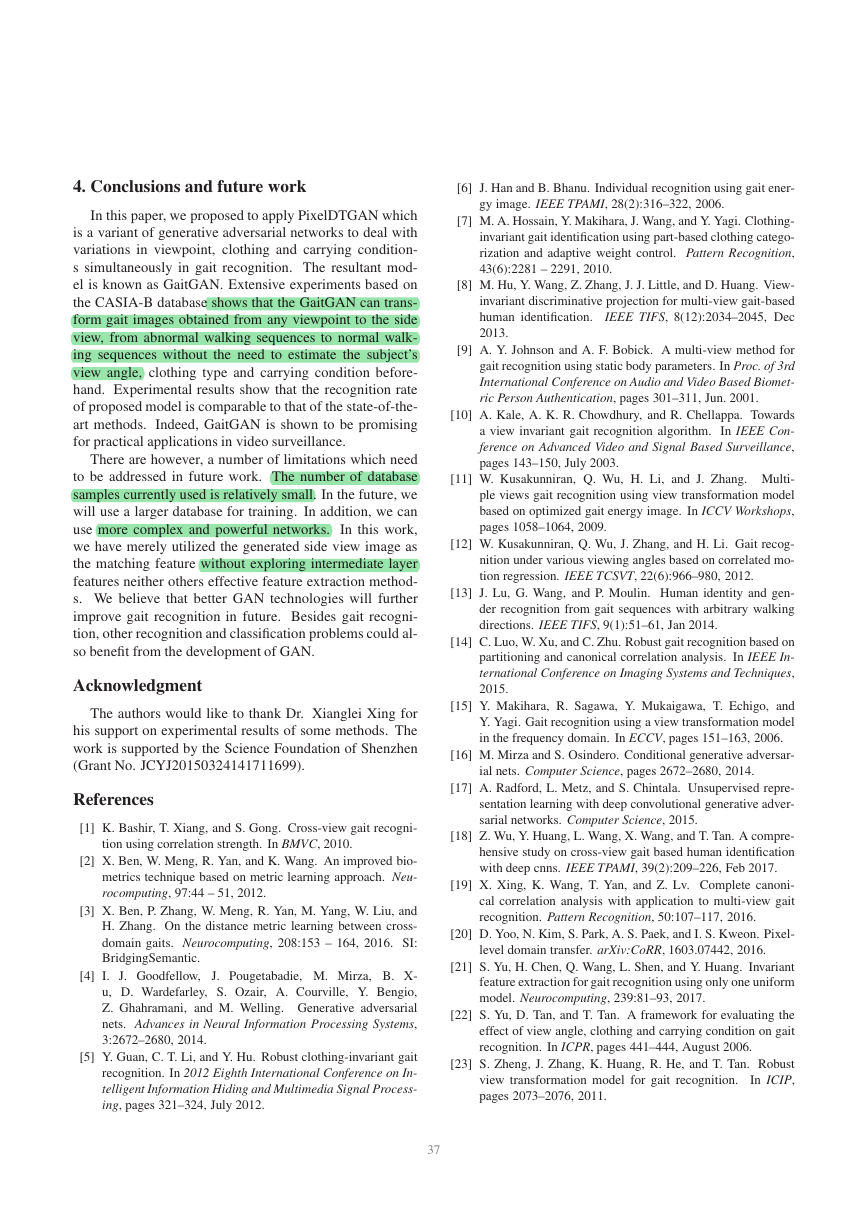

The gait energy image (GEI) [6] is a popular gait feature, produced by averaging the silhouettes in one gait cycle of a gait sequence, as illustrated in Figure 1. GEI is well known for its robustness to noise and its efficient computation. The pixel values in a GEI can be interpreted as the probability of the corresponding pixel positions being occupied by a human body over one gait cycle. Given the success of GEI in gait recognition, we take GEI as the input and target image of our method. The silhouettes and energy images used in the experiments are produced in the same way as those described in [22].
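As a minimal illustration of the averaging just described, the Python sketch below builds a GEI from binary silhouettes stored as nested lists. It assumes the silhouettes have already been extracted, size-normalised, aligned and cut to exactly one gait cycle (the preprocessing attributed to [22] is not reproduced); the function name `gait_energy_image` and the toy frames are illustrative.

```python
from typing import List

Silhouette = List[List[int]]   # binary mask: 1 = body pixel, 0 = background
EnergyImage = List[List[float]]


def gait_energy_image(cycle: List[Silhouette]) -> EnergyImage:
    """Average aligned binary silhouettes from one gait cycle into a GEI.

    Assumes all silhouettes are already cropped, size-normalised and
    aligned, and that `cycle` covers exactly one gait cycle.
    """
    if not cycle:
        raise ValueError("need at least one silhouette")
    height, width = len(cycle[0]), len(cycle[0][0])
    if any(len(s) != height or any(len(row) != width for row in s) for s in cycle):
        raise ValueError("all silhouettes must share the same size")
    n = len(cycle)
    # Each GEI pixel is the fraction of frames in which that pixel is foreground.
    return [
        [sum(s[y][x] for s in cycle) / n for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    # Three toy 2x3 silhouettes standing in for the frames of one cycle.
    frames = [
        [[0, 1, 0], [1, 1, 0]],
        [[0, 1, 0], [0, 1, 1]],
        [[0, 1, 1], [0, 1, 0]],
    ]
    for row in gait_energy_image(frames):
        print([round(v, 2) for v in row])
```

Each output pixel is the fraction of frames in which that pixel is foreground, which matches the occupancy-probability reading of GEI values given above.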

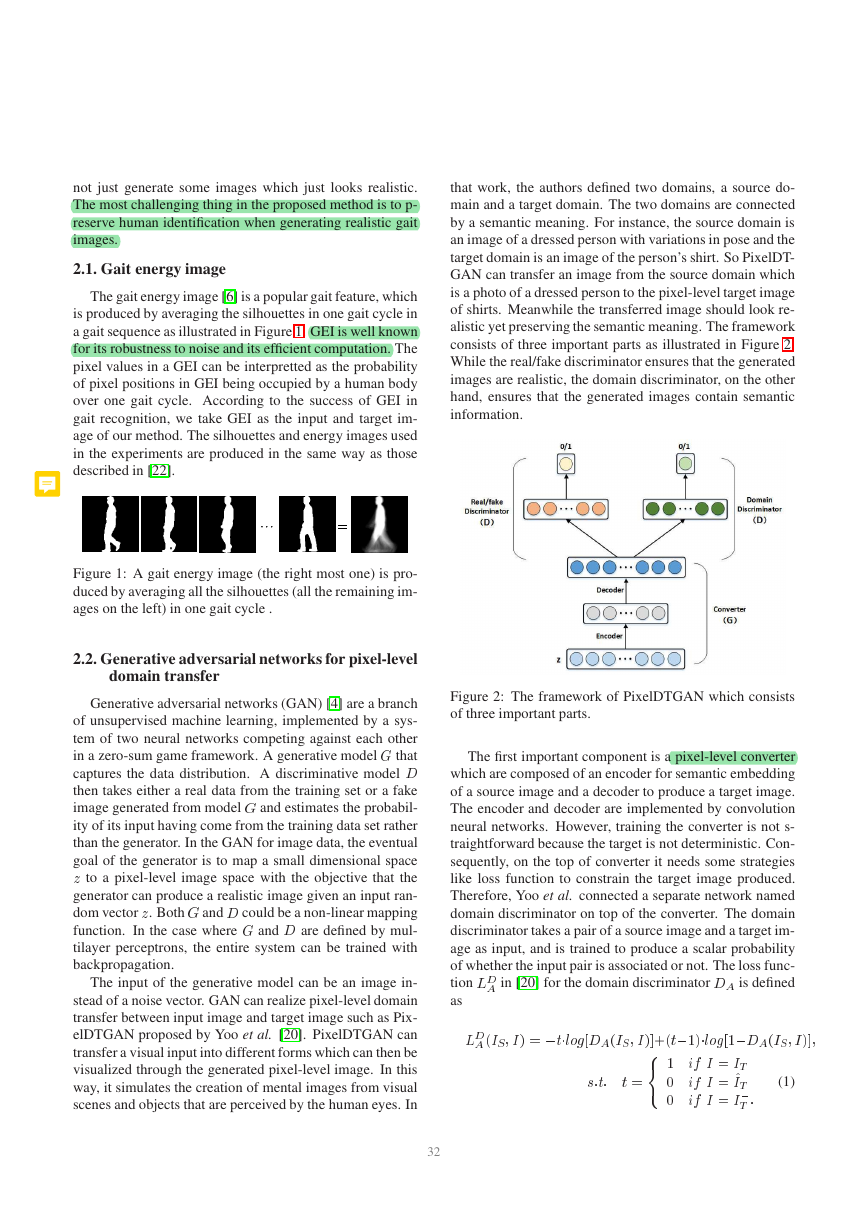

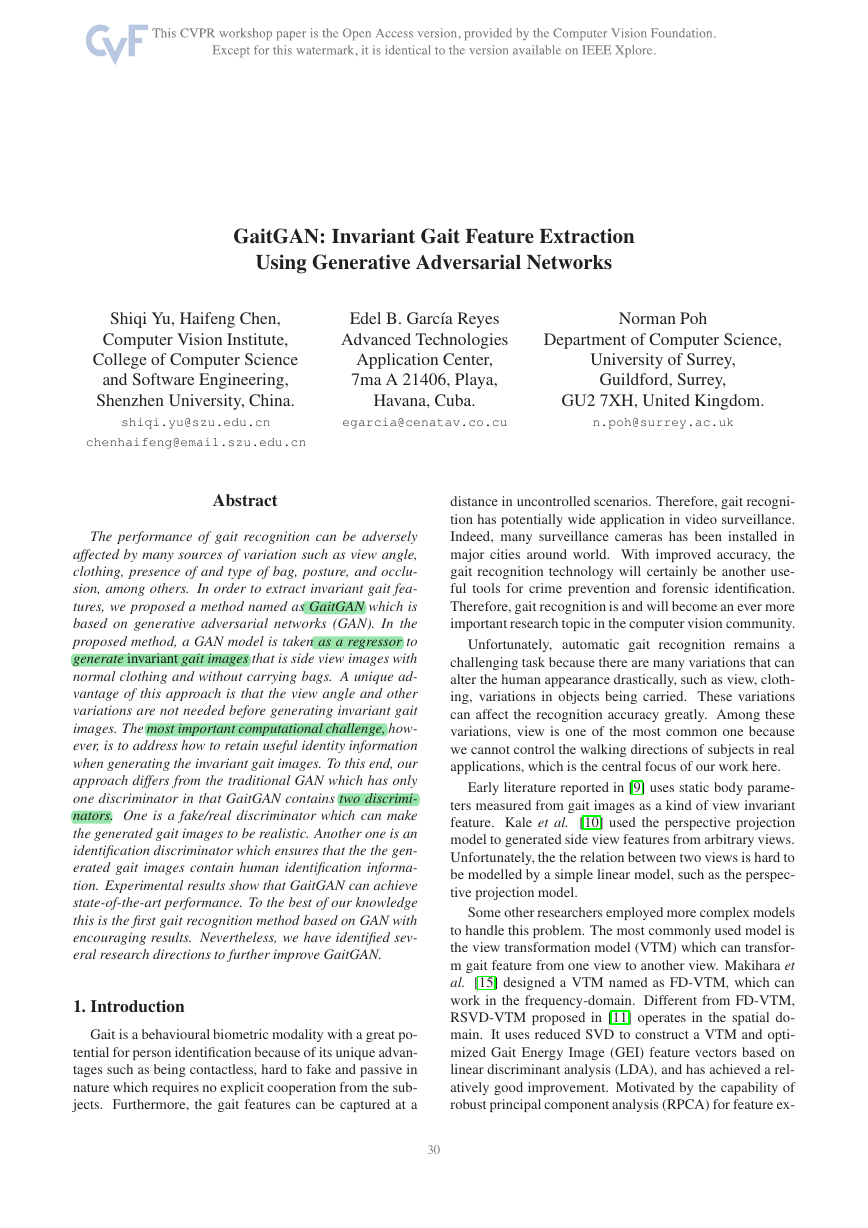

Figure 1: A gait energy image (the rightmost one) is produced by averaging all the silhouettes (all the remaining images on the left) in one gait cycle.

2.2. Generative adversarial networks for pixel-level domain transfer

Generative adversarial networks (GAN) [4] are a branch of unsupervised machine learning, implemented by a system of two neural networks competing against each other in a zero-sum game framework. A generative model G captures the data distribution. A discriminative model D then takes either real data from the training set or a fake image generated by G, and estimates the probability that its input came from the training data rather than from the generator. In a GAN for image data, the eventual goal of the generator is to map a low-dimensional space z to the pixel-level image space, so that the generator can produce a realistic image given an input random vector z. Both G and D can be non-linear mapping functions. When G and D are defined by multilayer perceptrons, the entire system can be trained with backpropagation.

The input of the generative model can also be an image instead of a noise vector. A GAN can then realize pixel-level domain transfer between an input image and a target image, as in PixelDTGAN proposed by Yoo et al. [20]. PixelDTGAN can transfer a visual input into a different form, which can then be visualized through the generated pixel-level image. In this way, it simulates the creation of mental images from visual scenes and objects perceived by human eyes. In that work, the authors defined two domains, a source domain and a target domain, which are connected by a semantic meaning. For instance, the source domain is an image of a dressed person with variations in pose, and the target domain is an image of that person's shirt. PixelDTGAN can thus transfer an image from the source domain (a photo of a dressed person) to a pixel-level target image of the shirt. Meanwhile, the transferred image should look realistic while preserving the semantic meaning. The framework consists of three important parts, as illustrated in Figure 2. While the real/fake discriminator ensures that the generated images are realistic, the domain discriminator ensures that the generated images contain the semantic information.

Figure 2: The framework of PixelDTGAN, which consists of three important parts.
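For reference, the minimax two-player game between G and D described at the start of this subsection is written in [4] as the value function

$$
\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right],
$$

where $p_{\text{data}}$ is the training-data distribution and $p_z$ is the prior over the input noise vector $z$. D is pushed to assign high probability to real samples and low probability to generated ones, while G is pushed to make $D(G(z))$ large. In the image-conditional setting above, the noise vector is replaced by a source image.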

The first important component is a pixel-level converter, which is composed of an encoder for the semantic embedding of a source image and a decoder to produce a target image. The encoder and decoder are implemented by convolutional neural networks. However, training the converter is not straightforward because the target is not deterministic. Consequently, additional constraints, such as a loss function, are needed on top of the converter to constrain the target image it produces. Therefore, Yoo et al. connected a separate network, named the domain discriminator, on top of the converter. The domain discriminator takes a pair consisting of a source image and a target image as input, and is trained to produce a scalar probability of whether the input pair is associated or not. The loss function $\mathcal{L}^{A}_{D}$ for the domain discriminator $D_A$ is defined in [20] as

$$
\mathcal{L}^{A}_{D}(I_S, I) = -t \cdot \log\left[D_A(I_S, I)\right] + (t - 1) \cdot \log\left[1 - D_A(I_S, I)\right],
\qquad \text{s.t.}\quad
t = \begin{cases}
1 & \text{if } I = I_T,\\
0 & \text{if } I = \hat{I}_T,\\
0 & \text{if } I = \bar{I}_T,
\end{cases}
\tag{1}
$$

where $I_S$ is the source image, $I_T$ is the ground-truth target, $\bar{I}_T$ is an irrelevant target, and $\hat{I}_T$ is the image generated by the converter.
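The loss in Eq. (1) is an ordinary binary cross-entropy whose target t depends on which kind of image is paired with the source. A minimal Python sketch, assuming the discriminator output is already a probability in (0, 1); the dictionary keys and the clamping constant `eps` are illustrative choices rather than details taken from [20]:

```python
import math

# Target label t from Eq. (1), keyed by the kind of image paired with I_S.
TARGET = {
    "associated": 1,   # I = I_T, the ground-truth target for this source
    "generated": 0,    # I = hat(I)_T, produced by the converter
    "irrelevant": 0,   # I = bar(I)_T, a target that belongs to another source
}


def domain_disc_loss(d_out: float, pair_kind: str, eps: float = 1e-12) -> float:
    """Eq. (1): -t*log D_A(I_S, I) + (t - 1)*log(1 - D_A(I_S, I)).

    `d_out` is the discriminator's probability that the pair is associated;
    `eps` keeps the probability away from 0 and 1 so that log() stays finite.
    """
    t = TARGET[pair_kind]
    p = min(max(d_out, eps), 1.0 - eps)
    return -t * math.log(p) + (t - 1) * math.log(1.0 - p)


if __name__ == "__main__":
    for kind in ("associated", "generated", "irrelevant"):
        print(kind, round(domain_disc_loss(0.9, kind), 4))
```

With an output of 0.9, the associated pair costs about 0.105 while either negative pair costs about 2.303, so the discriminator is penalised for calling generated or irrelevant pairs associated.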

Another component is the real/fake discriminator which

similar to traditional GAN in that it is supervised by the

labels of real or fake, in order for the entire network to pro-

duce realistic images. Here, the discriminator produces a

scalar probability to indicate if the image is a real one or

not. The discriminator ’s loss function �

, according to

[20], takes the form of binary cross entropy:

D

R

Figure 4: The structure of the converter which transform the

source images to a target one as shown in Figure 3.

D

�

�� � = �� � ��g [D

�� �℄ � �� � 1� � ��g [1 � D

�� �℄;

R

R

R

1 if � 2 f�

g

i

�:�:

� =

0 if � 2 f

�

g:

i

^

(2)

normal walking, the discriminator will output 1. Othervise,

it will output 0. The structure of the real/fake discriminator

is shown in Figure 5.

i

where f�

fake images produced by the generator.

g contains real training images and f

i

^

�

g contains

Labels are given to the two discriminators, and they su-

pervise the converter to produce images that are realistic

while keeping the semantic meaning.

2.3. GaitGAN: GAN for gait gecognition

Inspired by the pixel-level domain transfer in PixelDT-

GAN, we propose GaitGAN to transform the gait data from

any view, clothing and carrying conditions to the invariant

view that contains side view with normal clothing and with-

out carrying objects. Additionally, identification informa-

tion is preserved.

We set the GEIs at all the viewpoints with clothing and

carrying variations as the source and the GEIs of normal

Æ (side view) as the target, as shown in Fig-

walking at 90

ure 3. The converter contains an encoder and a decoder as

shown in Figure 4.

Figure 3: The source images and the target image. ’NM’

stands for the normal condition, ’BG’ is for carrying a bag

and ’CL’ is for dressing in a coat as defined in CASIA-B

database.

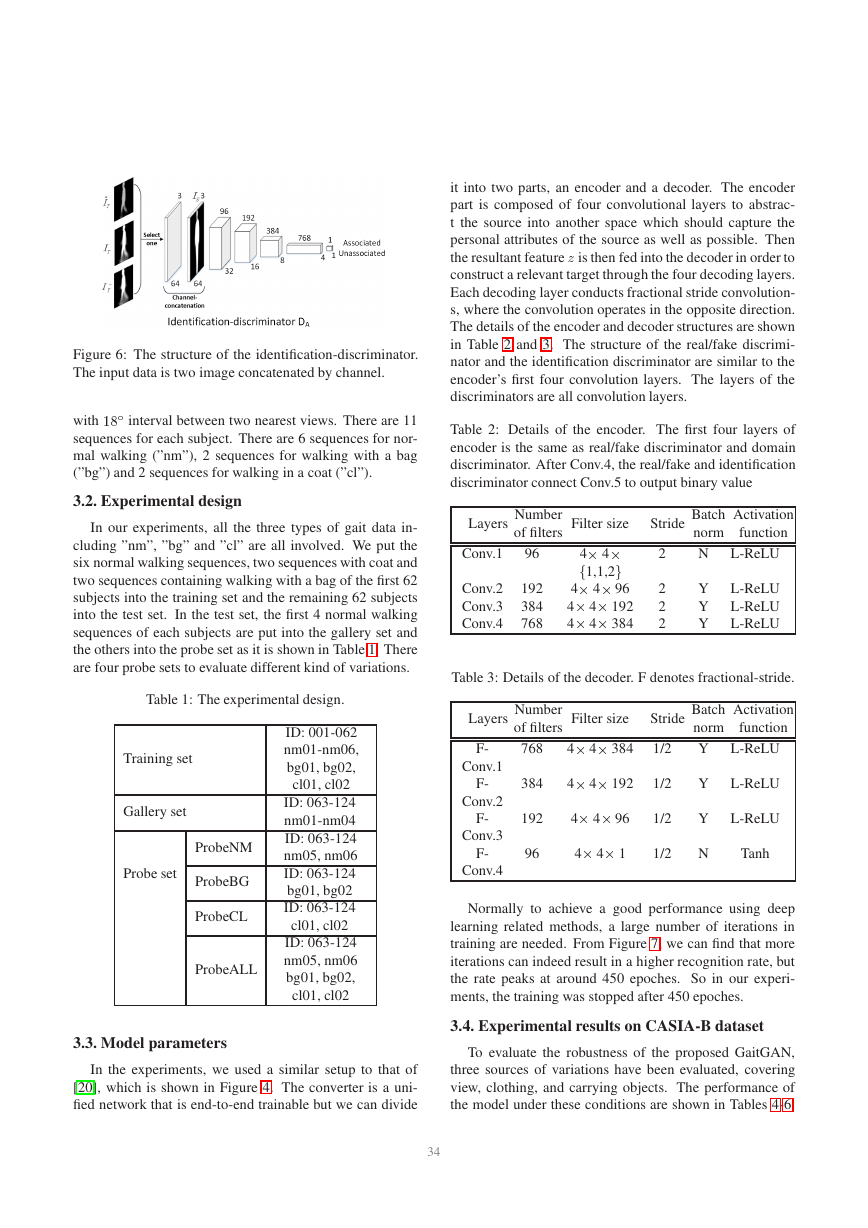

There are two discriminators. The first one is a real/fake

discriminator which is trained to predict whether an image

Æ view in

is real. If the input GEI is from real gait data at 90

433

Figure 5: The structure of the Real/fake-discriminator. The

input data is target image or generated image.
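The converter (Figure 4) and the discriminators are specified layer by layer in Tables 2 and 3. The Python sketch below walks the spatial size of a GEI through those layers. It assumes a 64×64 input, a padding of 1 on every 4×4 layer and a 4×4, stride-1, unpadded Conv.5; none of these values is stated in the text. It also reads the last dimension of each filter size in Table 3 as that layer's output depth, and treats a fractional stride of 1/2 as a transposed convolution with stride 2. All names are illustrative.

```python
from typing import List, Tuple

KERNEL = 4    # 4x4 filters, as in Tables 2 and 3
STRIDE = 2    # stride 2 in the encoder; fractional stride 1/2 in the decoder
PADDING = 1   # assumption: padding is not given in the tables


def conv_out(size: int, k: int = KERNEL, s: int = STRIDE, p: int = PADDING) -> int:
    """Spatial size after a strided convolution."""
    return (size + 2 * p - k) // s + 1


def deconv_out(size: int, k: int = KERNEL, s: int = STRIDE, p: int = PADDING) -> int:
    """Spatial size after a fractional-stride (transposed) convolution."""
    return (size - 1) * s - 2 * p + k


def converter_shapes(input_size: int = 64) -> List[Tuple[str, int, int]]:
    """(layer, spatial size, channels) through the encoder and decoder."""
    shapes = [("input GEI", input_size, 1)]
    size = input_size
    for i, channels in enumerate((96, 192, 384, 768), start=1):   # Table 2
        size = conv_out(size)
        shapes.append((f"Conv.{i}", size, channels))
    for i, channels in enumerate((384, 192, 96, 1), start=1):     # Table 3
        size = deconv_out(size)
        shapes.append((f"F-Conv.{i}", size, channels))
    return shapes


def discriminator_output_size(input_size: int = 64) -> int:
    """Conv.1-Conv.4 as in the encoder, then an assumed 4x4, stride-1, unpadded Conv.5."""
    size = input_size
    for _ in range(4):
        size = conv_out(size)
    return conv_out(size, k=4, s=1, p=0)


if __name__ == "__main__":
    for name, size, channels in converter_shapes():
        print(f"{name:10s} {size}x{size}x{channels}")
    print("discriminator output size:", discriminator_output_size())
```

Under these assumptions the encoder reduces a 64×64 GEI to a 4×4×768 code, the decoder restores a 64×64×1 image, and Conv.5 collapses the 4×4 map of a discriminator to a single score.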

With the real/fake discriminator alone, we can only generate side-view GEIs that look realistic; the identification information of the subjects may be lost. To preserve the identification information, another discriminator, named the identification discriminator, which is similar to the domain discriminator in [20], is introduced. The identification discriminator takes a source image and a target image as input, and is trained to produce a scalar probability of whether the input pair belongs to the same person. If the source image and the target image are from the same subject, the output should be 1. If they belong to two different subjects, the output should be 0. Likewise, if the target image is generated by the converter, the output should be 0. The structure of the identification discriminator is shown in Figure 6.
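The labelling rules above can be written down directly. The Python sketch below builds one positive and two negative training pairs for a single source GEI. It assumes that a real target is the side-view normal-walking GEI of a subject and that a channel-first 2×H×W layout corresponds to the channel concatenation of Figure 6; the helper names and subject IDs are hypothetical.

```python
from typing import List, Tuple

GEI = List[List[float]]     # one H x W gait energy image
PairInput = List[GEI]       # 2 x H x W, channel-first (an assumption)


def concat_by_channel(source: GEI, target: GEI) -> PairInput:
    """Stack a source GEI and a target GEI as the two input channels."""
    same_shape = len(source) == len(target) and all(
        len(a) == len(b) for a, b in zip(source, target)
    )
    if not same_shape:
        raise ValueError("source and target GEIs must have the same size")
    return [source, target]


def identification_label(source_id: str, target_id: str, target_is_generated: bool) -> int:
    """Target output of the identification discriminator."""
    if target_is_generated:                      # converter output -> 0
        return 0
    return 1 if source_id == target_id else 0    # same subject -> 1, different -> 0


def training_pairs(source: GEI, source_id: str,
                   real_target: GEI, other_target: GEI, other_id: str,
                   generated_target: GEI) -> List[Tuple[PairInput, int]]:
    """One positive and two negative examples for a single source GEI."""
    return [
        (concat_by_channel(source, real_target),
         identification_label(source_id, source_id, False)),
        (concat_by_channel(source, other_target),
         identification_label(source_id, other_id, False)),
        (concat_by_channel(source, generated_target),
         identification_label(source_id, source_id, True)),
    ]


if __name__ == "__main__":
    blank = [[0.0] * 4 for _ in range(4)]        # toy 4x4 GEIs
    pairs = training_pairs(blank, "063", blank, blank, "064", blank)
    print([label for _, label in pairs])
```

The three pairs mirror the three cases of Eq. (1): an associated target, an irrelevant target from another subject, and a converter output.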

3. Experiments and analysis

3.1. Dataset

CASIA-B gait dataset [22] is one of the largest public

gait databases, which was created by the Institute of Au-

tomation, Chinese Academy of Sciences in January 2005.

It consists of 124 subjects (31 females and 93 males) cap-

tured from 11 views. The view range is from 0

Æ to 180

Æ

�

it into two parts, an encoder and a decoder. The encoder

part is composed of four convolutional layers to abstrac-

t the source into another space which should capture the

personal attributes of the source as well as possible. Then

the resultant feature z is then fed into the decoder in order to

construct a relevant target through the four decoding layers.

Each decoding layer conducts fractional stride convolution-

s, where the convolution operates in the opposite direction.

The details of the encoder and decoder structures are shown

in Table 2 and 3. The structure of the real/fake discrimi-

nator and the identification discriminator are similar to the

encoder’s first four convolution layers. The layers of the

discriminators are all convolution layers.

Table 2: Details of the encoder. The first four layers of

encoder is the same as real/fake discriminator and domain

discriminator. After Conv.4, the real/fake and identification

discriminator connect Conv.5 to output binary value

Layers

Number

of filters

Conv.1

96

Conv.2

Conv.3

Conv.4

192

384

768

Filter size

Stride

Batch

norm

Activation

function

4� 4�

f1,1,2g

4� 4� 96

4� 4� 192

4� 4� 384

2

2

2

2

N

Y

Y

Y

L-ReLU

L-ReLU

L-ReLU

L-ReLU

Table 3: Details of the decoder. F denotes fractional-stride.

Layers

Number

of filters

Filter size

Stride

Batch

norm

Activation

function

F-

768

4� 4� 384

1/2

Conv.1

F-

384

4� 4� 192

1/2

Conv.2

F-

192

4� 4� 96

1/2

Conv.3

F-

96

4� 4� 1

1/2

Conv.4

Y

Y

Y

N

L-ReLU

L-ReLU

L-ReLU

Tanh

Normally to achieve a good performance using deep

learning related methods, a large number of iterations in

training are needed. From Figure 7, we can find that more

iterations can indeed result in a higher recognition rate, but

the rate peaks at around 450 epoches. So in our experi-

ments, the training was stopped after 450 epoches.

3.4. Experimental results on CASIA-B dataset

To evaluate the robustness of the proposed GaitGAN,

three sources of variations have been evaluated, covering

view, clothing, and carrying objects. The performance of

the model under these conditions are shown in Tables 4-6.

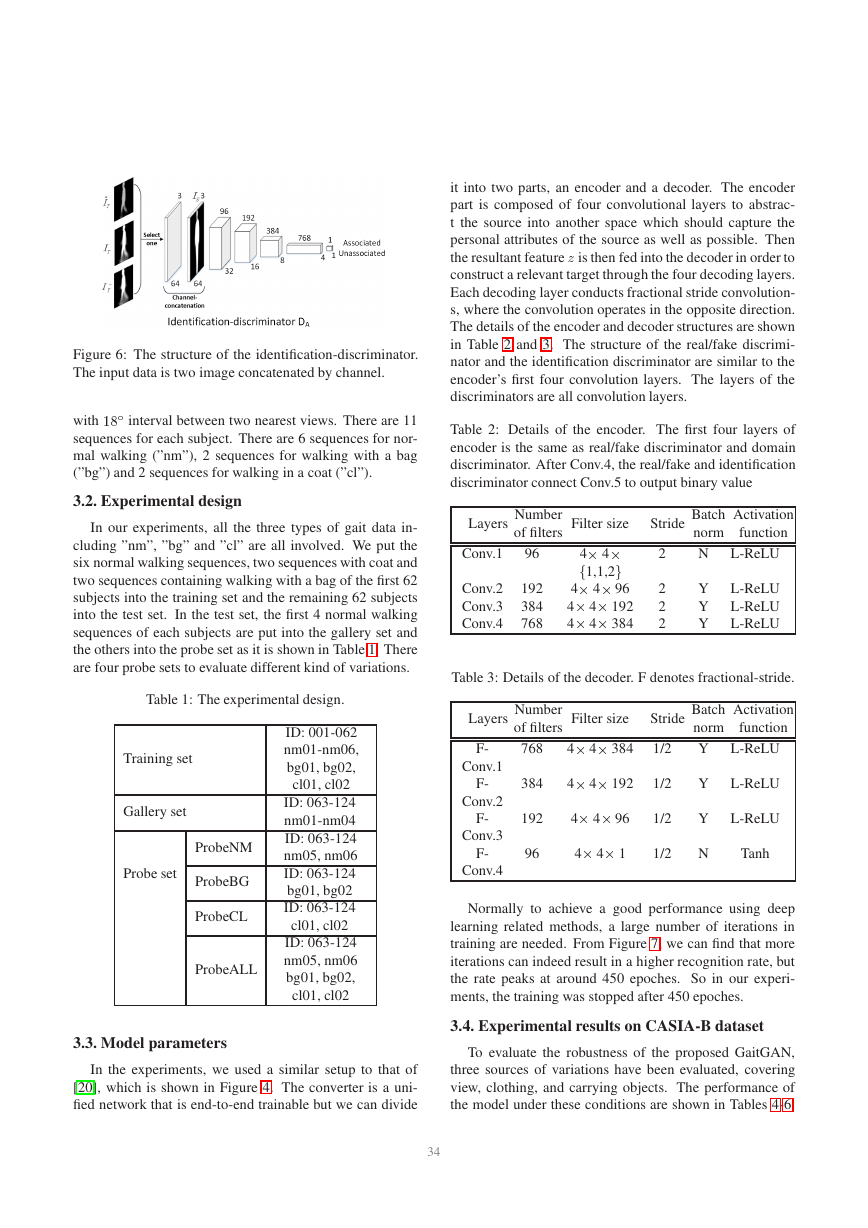

Figure 6: The structure of the identification-discriminator.

The input data is two image concatenated by channel.

with 18

Æ interval between two nearest views. There are 11

sequences for each subject. There are 6 sequences for nor-

mal walking (”nm”), 2 sequences for walking with a bag

(”bg”) and 2 sequences for walking in a coat (”cl”).

3.2. Experimental design

In our experiments, all the three types of gait data in-

cluding ”nm”, ”bg” and ”cl” are all involved. We put the

six normal walking sequences, two sequences with coat and

two sequences containing walking with a bag of the first 62

subjects into the training set and the remaining 62 subjects

into the test set. In the test set, the first 4 normal walking

sequences of each subjects are put into the gallery set and

the others into the probe set as it is shown in Table 1. There

are four probe sets to evaluate different kind of variations.

Table 1: The experimental design.

Training set

Gallery set

Probe set

ProbeNM

ProbeBG

ProbeCL

ProbeALL

ID: 001-062

nm01-nm06,

bg01, bg02,

cl01, cl02

ID: 063-124

nm01-nm04

ID: 063-124

nm05, nm06

ID: 063-124

bg01, bg02

ID: 063-124

cl01, cl02

ID: 063-124

nm05, nm06

bg01, bg02,

cl01, cl02

3.3. Model parameters

In the experiments, we used a similar setup to that of

[20], which is shown in Figure 4. The converter is a uni-

fied network that is end-to-end trainable but we can divide

Since there are 11 views in the database, there are 121 gallery-probe view combinations. In each table, each row corresponds to a view angle of the gallery set, whereas each column corresponds to a view angle of the probe set. The recognition rates of these combinations for ProbeNM are listed in Table 4. The results in Table 5 differ from those in Table 4 mainly in the probe set: the probe data contain images of people carrying bags, so the carrying condition differs from that of the gallery set. The probe sets for Table 6 contain gait data of people wearing coats.
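For reference, the protocol of Table 1 and the 11×11 gallery-probe grid behind Tables 4-6 can be captured as plain data. This is an illustrative Python sketch: the dictionary layout and function names are hypothetical, and only the subject ranges, sequence names and view angles come from the text.

```python
from typing import Dict, List, Tuple

VIEWS = list(range(0, 181, 18))            # 0, 18, ..., 180 degrees: 11 views
NM = [f"nm{i:02d}" for i in range(1, 7)]   # nm01..nm06
BG = ["bg01", "bg02"]
CL = ["cl01", "cl02"]


def ids(first: int, last: int) -> List[str]:
    """Zero-padded CASIA-B subject IDs, e.g. 1 -> '001'."""
    return [f"{i:03d}" for i in range(first, last + 1)]


# Table 1 as a lookup: set name -> (subject IDs, sequence names).
PROTOCOL: Dict[str, Tuple[List[str], List[str]]] = {
    "train":    (ids(1, 62),   NM + BG + CL),
    "gallery":  (ids(63, 124), NM[:4]),
    "ProbeNM":  (ids(63, 124), NM[4:]),
    "ProbeBG":  (ids(63, 124), BG),
    "ProbeCL":  (ids(63, 124), CL),
    "ProbeALL": (ids(63, 124), NM[4:] + BG + CL),
}


def view_combinations() -> List[Tuple[int, int]]:
    """(gallery view, probe view) cells of Tables 4-6: rows = gallery, columns = probe."""
    return [(g, p) for g in VIEWS for p in VIEWS]


if __name__ == "__main__":
    print(len(view_combinations()), "view combinations")
    for name, (subjects, seqs) in PROTOCOL.items():
        print(f"{name:8s} {subjects[0]}-{subjects[-1]}: {', '.join(seqs)}")
```

Keeping training and test subjects disjoint (001-062 versus 063-124) means every recognition rate is measured on people the model never saw during training.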

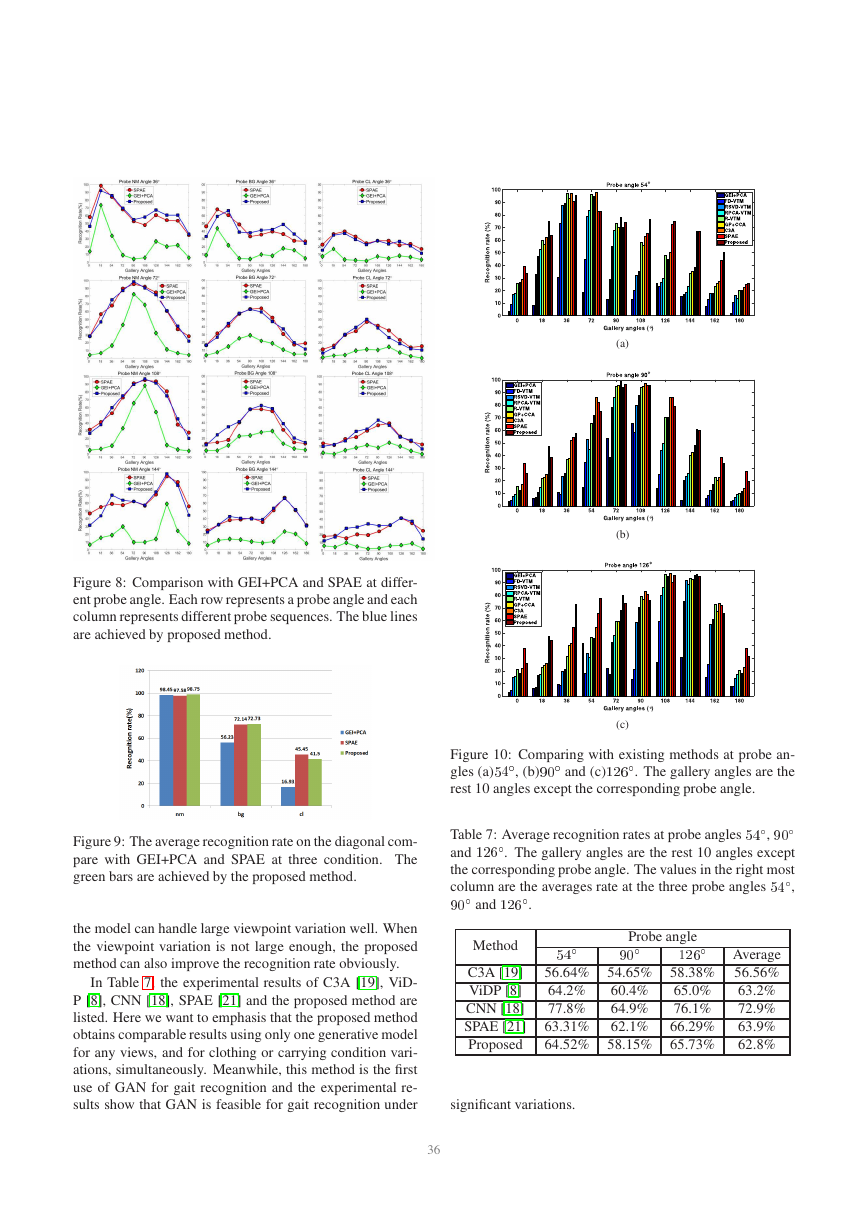

3.5. Comparisons with GEI+PCA and SPAE

Since GEIs are used as input to extract invariant features, we first compare our method with GEI+PCA [6] and SPAE [21]. The experimental protocols in terms of the gallery and probe sets for GEI+PCA and SPAE are exactly the same as those presented in Table 1. Due to limited space, we only list 4 probe angles with a 36° interval: 36°, 72°, 108° and 144°. Each row in Figure 8 represents a probe angle. The first column of Figure 8 compares the recognition rates of the proposed GaitGAN with GEI+PCA and SPAE at different probe angles for normal walking sequences. The second column shows the comparison under different carrying conditions, and the third shows the comparison under different clothing. As illustrated in Figure 8, the proposed method outperforms GEI+PCA at all pairs of probe and gallery angles. Meanwhile, its performance is comparable to that of SPAE and better than SPAE at many points. The results show that the proposed method can produce features that are as robust as those of state-of-the-art methods in the presence of view, clothing and carrying condition variations.

We also compared the recognition rates without view variation. This can be done by taking the average of the rates on the diagonals of Table 4, Table 5 and Table 6. The corresponding average rates of GEI+PCA and SPAE are obtained in the same manner. The results are shown in Figure 9. When there is no variation, the proposed method achieves a high recognition rate, better than those of GEI+PCA and SPAE. When variation exists, the proposed method outperforms GEI+PCA by a large margin.
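The same-view averages described above can be computed from the diagonal entries of Tables 4-6. The Python sketch below performs only that arithmetic; it says nothing about how Figure 9 itself was produced, and the names are illustrative.

```python
from statistics import mean

# Diagonal entries (gallery view == probe view) of Tables 4-6, for views 0..180 degrees.
DIAGONALS = {
    "ProbeNM (Table 4)": [100.0, 99.19, 97.58, 95.97, 100.0, 98.39, 97.58, 99.19, 99.19, 99.19, 100.0],
    "ProbeBG (Table 5)": [79.03, 76.61, 75.81, 68.55, 65.32, 64.52, 69.35, 73.39, 73.39, 76.61, 77.42],
    "ProbeCL (Table 6)": [25.81, 37.90, 45.16, 55.65, 43.55, 48.39, 47.58, 45.97, 43.55, 35.48, 27.42],
}


def same_view_average(diagonal):
    """Mean recognition rate over the 11 views when gallery and probe share a view."""
    if len(diagonal) != 11:
        raise ValueError("CASIA-B has 11 views")
    return mean(diagonal)


if __name__ == "__main__":
    for name, diag in DIAGONALS.items():
        print(f"{name}: {same_view_average(diag):.2f}%")
```

Run as a script, it should print about 98.75%, 72.73% and 41.50% for ProbeNM, ProbeBG and ProbeCL respectively.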

Figure 7: The recognition rate as a function of the number of iterations. The blue, red and green curves correspond to normal walking (ProbeNM), walking with a bag (ProbeBG) and walking in a coat (ProbeCL) sequences in the probe set, respectively.

Table 4: Recognition rates (%) of ProbeNM in experiment 2, training with sequences containing all three conditions. Rows give the gallery set view and columns the probe set view (normal walking, nm05, nm06), in degrees.

| Gallery / Probe (°) | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 100.0 | 79.03 | 45.97 | 33.87 | 28.23 | 25.81 | 26.61 | 25.81 | 31.45 | 54.84 | 72.58 |
| 18 | 78.23 | 99.19 | 91.94 | 63.71 | 46.77 | 38.71 | 37.90 | 44.35 | 43.55 | 65.32 | 58.06 |
| 36 | 56.45 | 88.71 | 97.58 | 95.97 | 75.00 | 57.26 | 59.68 | 72.58 | 70.16 | 60.48 | 35.48 |
| 54 | 33.87 | 53.23 | 85.48 | 95.97 | 87.10 | 75.00 | 75.00 | 77.42 | 63.71 | 37.10 | 22.58 |
| 72 | 27.42 | 41.13 | 69.35 | 83.06 | 100.0 | 96.77 | 89.52 | 73.39 | 62.10 | 37.10 | 17.74 |
| 90 | 22.58 | 37.10 | 54.84 | 74.19 | 98.39 | 98.39 | 96.77 | 75.81 | 57.26 | 35.48 | 21.77 |
| 108 | 20.16 | 32.26 | 58.06 | 76.61 | 90.32 | 95.97 | 97.58 | 95.97 | 74.19 | 38.71 | 22.58 |
| 126 | 29.84 | 37.90 | 66.94 | 75.00 | 81.45 | 79.03 | 91.13 | 99.19 | 97.58 | 59.68 | 37.10 |
| 144 | 28.23 | 45.97 | 60.48 | 66.94 | 61.29 | 59.68 | 75.00 | 95.16 | 99.19 | 79.84 | 45.97 |
| 162 | 48.39 | 63.71 | 60.48 | 50.00 | 41.13 | 33.87 | 45.16 | 65.32 | 83.06 | 99.19 | 71.77 |
| 180 | 73.39 | 56.45 | 36.29 | 25.81 | 21.77 | 19.35 | 20.16 | 31.45 | 44.35 | 72.58 | 100.0 |

Table 5: Recognition rates (%) of ProbeBG in experiment 2, training with sequences containing all three conditions. Rows give the gallery set view and columns the probe set view (walking with a bag, bg01, bg02), in degrees.

| Gallery / Probe (°) | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 79.03 | 45.97 | 33.06 | 14.52 | 16.13 | 14.52 | 11.29 | 15.32 | 22.58 | 33.87 | 41.13 |
| 18 | 54.84 | 76.61 | 58.87 | 31.45 | 26.61 | 16.13 | 24.19 | 29.84 | 32.26 | 41.94 | 32.26 |
| 36 | 36.29 | 58.87 | 75.81 | 53.23 | 44.35 | 30.65 | 34.68 | 46.77 | 42.74 | 34.68 | 20.16 |
| 54 | 25.00 | 45.16 | 66.13 | 68.55 | 57.26 | 42.74 | 41.13 | 45.97 | 40.32 | 20.16 | 13.71 |
| 72 | 20.16 | 24.19 | 38.71 | 41.94 | 65.32 | 56.45 | 57.26 | 51.61 | 39.52 | 16.94 | 8.87 |
| 90 | 15.32 | 27.42 | 37.90 | 38.71 | 62.10 | 64.52 | 62.10 | 61.29 | 38.71 | 20.97 | 12.10 |
| 108 | 16.13 | 25.00 | 41.13 | 42.74 | 58.87 | 58.06 | 69.35 | 70.16 | 53.23 | 24.19 | 11.29 |
| 126 | 19.35 | 29.84 | 41.94 | 45.16 | 46.77 | 52.42 | 58.06 | 73.39 | 66.13 | 41.13 | 22.58 |
| 144 | 26.61 | 32.26 | 48.39 | 37.90 | 37.10 | 36.29 | 38.71 | 67.74 | 73.39 | 50.00 | 32.26 |
| 162 | 29.03 | 34.68 | 36.29 | 25.00 | 19.35 | 16.13 | 20.16 | 37.90 | 51.61 | 76.61 | 41.94 |
| 180 | 42.74 | 28.23 | 24.19 | 12.90 | 11.29 | 11.29 | 14.52 | 21.77 | 30.65 | 49.19 | 77.42 |

Table 6: Recognition rates (%) of ProbeCL in experiment 2, training with sequences containing all three conditions. Rows give the gallery set view and columns the probe set view (walking wearing a coat, cl01, cl02), in degrees.

| Gallery / Probe (°) | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 25.81 | 16.13 | 15.32 | 12.10 | 6.45 | 6.45 | 9.68 | 7.26 | 12.10 | 11.29 | 15.32 |
| 18 | 17.74 | 37.90 | 34.68 | 20.97 | 13.71 | 8.87 | 12.10 | 19.35 | 16.94 | 24.19 | 19.35 |
| 36 | 13.71 | 24.19 | 45.16 | 43.55 | 30.65 | 19.35 | 16.94 | 22.58 | 28.23 | 20.16 | 10.48 |
| 54 | 2.42 | 19.35 | 37.10 | 55.65 | 39.52 | 22.58 | 29.03 | 29.84 | 29.84 | 16.94 | 8.06 |
| 72 | 4.84 | 12.10 | 29.03 | 40.32 | 43.55 | 34.68 | 32.26 | 28.23 | 33.87 | 12.90 | 8.06 |
| 90 | 4.03 | 10.48 | 22.58 | 31.45 | 50.00 | 48.39 | 43.55 | 36.29 | 31.45 | 13.71 | 8.06 |
| 108 | 4.03 | 12.90 | 27.42 | 27.42 | 38.71 | 44.35 | 47.58 | 38.71 | 32.26 | 15.32 | 4.84 |
| 126 | 10.48 | 10.48 | 23.39 | 27.42 | 26.61 | 25.81 | 37.10 | 45.97 | 41.13 | 15.32 | 10.48 |
| 144 | 8.87 | 13.71 | 26.61 | 22.58 | 18.55 | 19.35 | 21.77 | 35.48 | 43.55 | 20.97 | 12.90 |
| 162 | 14.52 | 18.55 | 20.97 | 17.74 | 12.10 | 12.10 | 17.74 | 21.77 | 37.10 | 35.48 | 21.77 |
| 180 | 17.74 | 13.71 | 11.29 | 6.45 | 10.48 | 5.65 | 6.45 | 5.65 | 14.52 | 29.03 | 27.42 |

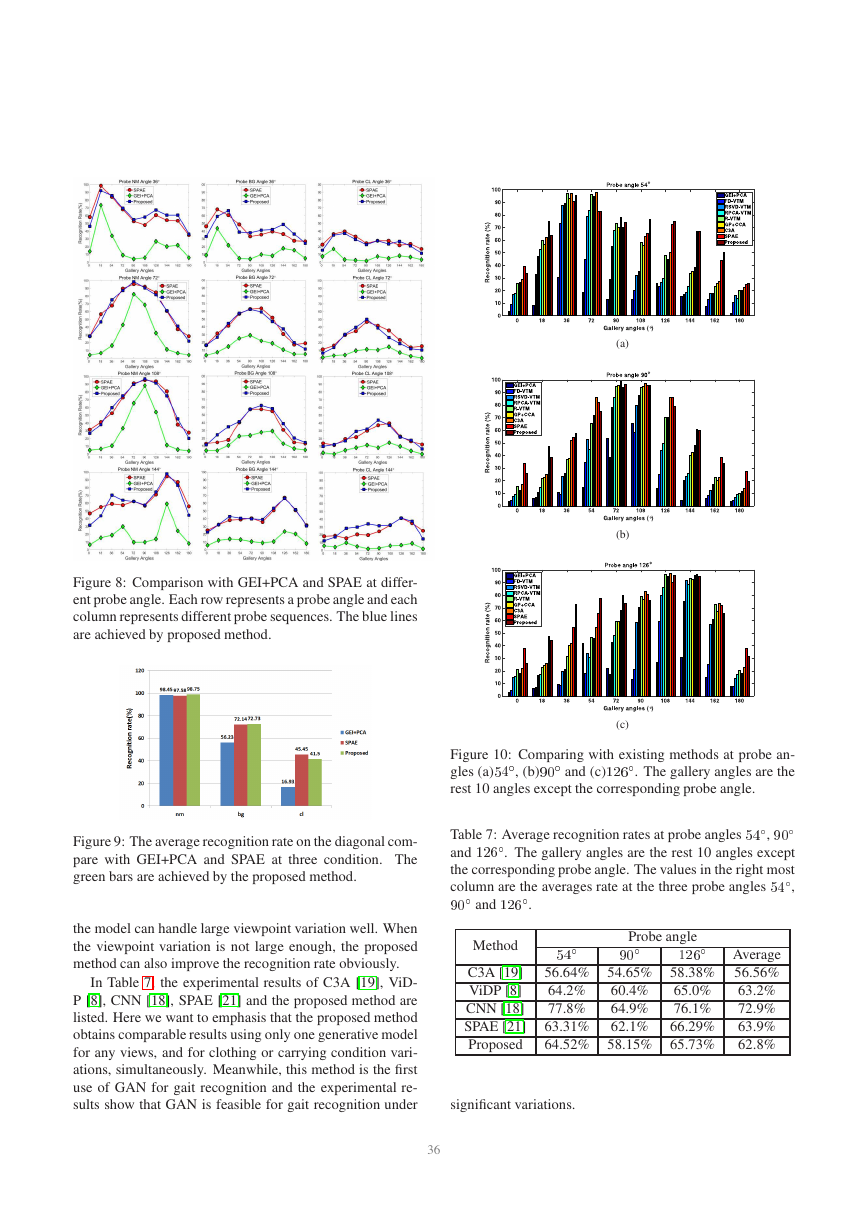

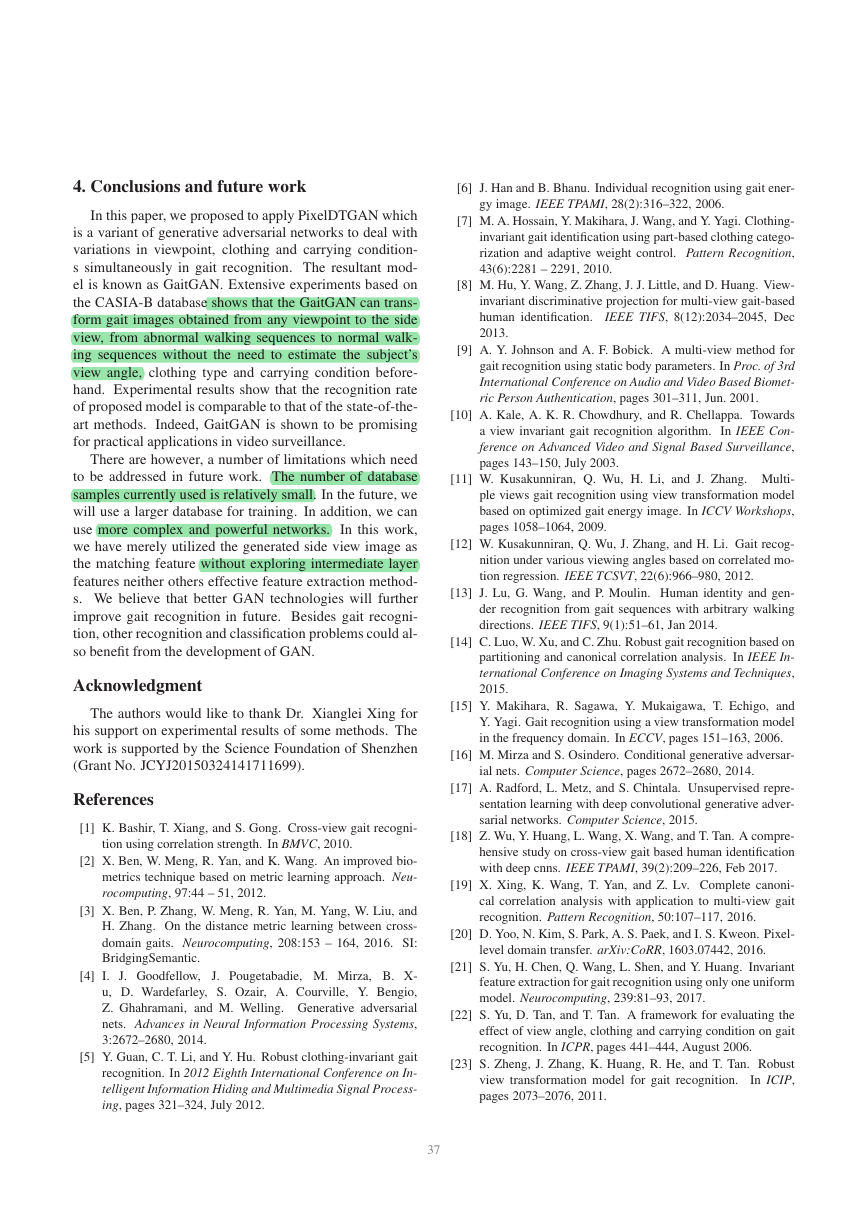

3.6. Comparisons with state-of-the-art

To analyse the performance of the proposed method further, we compare GaitGAN with additional state-of-the-art methods, including FD-VTM [15], RSVD-VTM [11], RPCA-VTM [23], R-VTM [12], GP+CCA [1], C3A [19] and SPAE [21].

For Table 4, the first four normal sequences at a specific view are put into the gallery set, and the last two normal sequences at another view are put into the probe set.

The probe angles selected are 54°, 90° and 126°, as in the experiments of those methods. The experimental results are shown in Figure 10. The results show that the proposed method outperforms the others when the angle difference between the gallery and the probe is large, which indicates that the model handles large viewpoint variation well. When the viewpoint variation is small, the proposed method can also improve the recognition rate noticeably.

Figure 10: Comparison with existing methods at probe angles (a) 54°, (b) 90° and (c) 126°. The gallery angles are the remaining 10 angles except the corresponding probe angle.

Figure 8: Comparison with GEI+PCA and SPAE at different probe angles. Each row represents a probe angle and each column represents different probe sequences. The blue lines are achieved by the proposed method.

Figure 9: The average recognition rates on the diagonal compared with GEI+PCA and SPAE under three conditions. The green bars are achieved by the proposed method.

Table 7: Average recognition rates at probe angles 54°, 90° and 126°. The gallery angles are the remaining 10 angles except the corresponding probe angle. The values in the rightmost column are the average rates over the three probe angles.

| Method | 54° | 90° | 126° | Average |
|---|---|---|---|---|
| C3A [19] | 56.64% | 54.65% | 58.38% | 56.56% |
| ViDP [8] | 64.2% | 60.4% | 65.0% | 63.2% |
| CNN [18] | 77.8% | 64.9% | 76.1% | 72.9% |
| SPAE [21] | 63.31% | 62.1% | 66.29% | 63.9% |
| Proposed | 64.52% | 58.15% | 65.73% | 62.8% |

Table 7 lists the results of C3A [19], ViDP [8], CNN [18], SPAE [21] and the proposed method. We want to emphasise that the proposed method obtains comparable results using only one generative model for all views, and for clothing and carrying condition variations, simultaneously. Meanwhile, this is the first use of GAN for gait recognition, and the experimental results show that GAN is feasible for gait recognition under significant variations.
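The Proposed row of Table 7 can be cross-checked against Table 4. Assuming each entry is the plain mean over the 10 gallery views that differ from the probe view, as the Table 7 caption describes, the Python sketch below recomputes it from the ProbeNM matrix; the names are illustrative.

```python
from statistics import mean

VIEWS = [0, 18, 36, 54, 72, 90, 108, 126, 144, 162, 180]

# Table 4 (ProbeNM): rows = gallery view, columns = probe view, both in VIEWS order.
TABLE4 = [
    [100.0, 79.03, 45.97, 33.87, 28.23, 25.81, 26.61, 25.81, 31.45, 54.84, 72.58],
    [78.23, 99.19, 91.94, 63.71, 46.77, 38.71, 37.90, 44.35, 43.55, 65.32, 58.06],
    [56.45, 88.71, 97.58, 95.97, 75.00, 57.26, 59.68, 72.58, 70.16, 60.48, 35.48],
    [33.87, 53.23, 85.48, 95.97, 87.10, 75.00, 75.00, 77.42, 63.71, 37.10, 22.58],
    [27.42, 41.13, 69.35, 83.06, 100.0, 96.77, 89.52, 73.39, 62.10, 37.10, 17.74],
    [22.58, 37.10, 54.84, 74.19, 98.39, 98.39, 96.77, 75.81, 57.26, 35.48, 21.77],
    [20.16, 32.26, 58.06, 76.61, 90.32, 95.97, 97.58, 95.97, 74.19, 38.71, 22.58],
    [29.84, 37.90, 66.94, 75.00, 81.45, 79.03, 91.13, 99.19, 97.58, 59.68, 37.10],
    [28.23, 45.97, 60.48, 66.94, 61.29, 59.68, 75.00, 95.16, 99.19, 79.84, 45.97],
    [48.39, 63.71, 60.48, 50.00, 41.13, 33.87, 45.16, 65.32, 83.06, 99.19, 71.77],
    [73.39, 56.45, 36.29, 25.81, 21.77, 19.35, 20.16, 31.45, 44.35, 72.58, 100.0],
]


def cross_view_average(table, probe_view):
    """Mean rate at one probe view over the other 10 gallery views (diagonal excluded)."""
    p = VIEWS.index(probe_view)
    return mean(row[p] for g, row in enumerate(table) if g != p)


if __name__ == "__main__":
    rates = {v: cross_view_average(TABLE4, v) for v in (54, 90, 126)}
    for v, r in rates.items():
        print(f"probe {v:3d} deg: {r:.2f}%")
    print(f"average: {mean(rates.values()):.2f}%")
```

With the Table 4 values this gives roughly 64.52%, 58.15% and 65.73% (the last digit is subject to floating-point rounding), and their mean is about 62.8%, consistent with the Proposed row of Table 7.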

4. Conclusions and future work

In this paper, we proposed to apply PixelDTGAN, a variant of generative adversarial networks, to deal with variations in viewpoint, clothing and carrying conditions simultaneously in gait recognition. The resultant model is named GaitGAN. Extensive experiments on the CASIA-B database show that GaitGAN can transform gait images obtained from any viewpoint to the side view, and from abnormal walking sequences to normal walking sequences, without the need to estimate the subject's view angle, clothing type or carrying condition beforehand. Experimental results show that the recognition rate of the proposed model is comparable to that of state-of-the-art methods. GaitGAN is thus shown to be promising for practical applications in video surveillance.

There are however, a number of limitations which need

to be addressed in future work. The number of database

samples currently used is relatively small. In the future, we

will use a larger database for training. In addition, we can

use more complex and powerful networks.

In this work, we have merely used the generated side-view image as the matching feature, without exploring intermediate-layer features or other effective feature extraction methods. We believe that better GAN technologies will further improve gait recognition in the future. Beyond gait recognition, other recognition and classification problems could also benefit from advances in GANs.
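To make concrete what "using the generated side-view image as the matching feature" can mean, the sketch below flattens each generated image into a pixel vector, assigns every probe the identity of its nearest gallery sample, and reports the rank-1 recognition rate. This is a minimal illustration under stated assumptions: the Euclidean distance, the nearest-neighbour rule and the helper names `euclidean` and `rank1_accuracy` are our own choices for illustration, not necessarily the exact matching protocol used in the experiments above.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two flattened images of equal size."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank1_accuracy(
    gallery: Sequence[Sequence[float]],
    gallery_ids: Sequence[str],
    probes: Sequence[Sequence[float]],
    probe_ids: Sequence[str],
) -> float:
    """Fraction of probes whose nearest gallery sample has the correct subject ID.

    Assumption (hypothetical preprocessing): every sample is a generated
    side-view gait image already flattened into a list of pixel values.
    """
    if not probes:
        raise ValueError("at least one probe is required")
    correct = 0
    for probe, true_id in zip(probes, probe_ids):
        # Index of the gallery sample closest to this probe (nearest neighbour).
        best = min(range(len(gallery)), key=lambda i: euclidean(probe, gallery[i]))
        if gallery_ids[best] == true_id:
            correct += 1
    return correct / len(probes)


if __name__ == "__main__":
    # Toy 4-pixel "images"; real generated images would be much larger.
    gallery = [[0.0, 0.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
    gallery_ids = ["s001", "s002"]
    probes = [[0.1, 0.0, 0.9, 1.0], [0.9, 1.0, 0.2, 0.0], [0.0, 0.1, 1.0, 0.8]]
    probe_ids = ["s001", "s002", "s002"]  # the third probe is deliberately mismatched
    acc = rank1_accuracy(gallery, gallery_ids, probes, probe_ids)
    print(f"rank-1 accuracy: {acc:.3f}")  # prints: rank-1 accuracy: 0.667
```

Raw pixel distance is the simplest possible matcher; the intermediate-layer features mentioned above would simply replace the flattened pixel vectors passed to `rank1_accuracy`.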

Acknowledgment

The authors would like to thank Dr. Xianglei Xing for his support with the experimental results of some of the methods. This work is supported by the Science Foundation of Shenzhen (Grant No. JCYJ20150324141711699).

References

[1] K. Bashir, T. Xiang, and S. Gong. Cross-view gait recognition using correlation strength. In BMVC, 2010.

[2] X. Ben, W. Meng, R. Yan, and K. Wang. An improved biometrics technique based on metric learning approach. Neurocomputing, 97:44–51, 2012.

[3] X. Ben, P. Zhang, W. Meng, R. Yan, M. Yang, W. Liu, and H. Zhang. On the distance metric learning between cross-domain gaits. Neurocomputing, 208:153–164, 2016. SI: BridgingSemantic.

[4] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

[5] Y. Guan, C. T. Li, and Y. Hu. Robust clothing-invariant gait recognition. In 2012 Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, pages 321–324, July 2012.

[6] J. Han and B. Bhanu. Individual recognition using gait energy image. IEEE TPAMI, 28(2):316–322, 2006.

[7] M. A. Hossain, Y. Makihara, J. Wang, and Y. Yagi. Clothing-invariant gait identification using part-based clothing categorization and adaptive weight control. Pattern Recognition, 43(6):2281–2291, 2010.

[8] M. Hu, Y. Wang, Z. Zhang, J. J. Little, and D. Huang. View-invariant discriminative projection for multi-view gait-based human identification. IEEE TIFS, 8(12):2034–2045, December 2013.

[9] A. Y. Johnson and A. F. Bobick. A multi-view method for gait recognition using static body parameters. In Proc. of 3rd International Conference on Audio and Video Based Biometric Person Authentication, pages 301–311, June 2001.

[10] A. Kale, A. K. R. Chowdhury, and R. Chellappa. Towards a view invariant gait recognition algorithm. In IEEE Conference on Advanced Video and Signal Based Surveillance, pages 143–150, July 2003.

[11] W. Kusakunniran, Q. Wu, H. Li, and J. Zhang. Multiple views gait recognition using view transformation model based on optimized gait energy image. In ICCV Workshops, pages 1058–1064, 2009.

[12] W. Kusakunniran, Q. Wu, J. Zhang, and H. Li. Gait recognition under various viewing angles based on correlated motion regression. IEEE TCSVT, 22(6):966–980, 2012.

[13] J. Lu, G. Wang, and P. Moulin. Human identity and gender recognition from gait sequences with arbitrary walking directions. IEEE TIFS, 9(1):51–61, January 2014.

[14] C. Luo, W. Xu, and C. Zhu. Robust gait recognition based on partitioning and canonical correlation analysis. In IEEE International Conference on Imaging Systems and Techniques, 2015.

[15] Y. Makihara, R. Sagawa, Y. Mukaigawa, T. Echigo, and Y. Yagi. Gait recognition using a view transformation model in the frequency domain. In ECCV, pages 151–163, 2006.

[16] M. Mirza and S. Osindero. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784, 2014.

[17] A. Radford, L. Metz, and S. Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434, 2015.

[18] Z. Wu, Y. Huang, L. Wang, X. Wang, and T. Tan. A comprehensive study on cross-view gait based human identification with deep CNNs. IEEE TPAMI, 39(2):209–226, February 2017.

[19] X. Xing, K. Wang, T. Yan, and Z. Lv. Complete canonical correlation analysis with application to multi-view gait recognition. Pattern Recognition, 50:107–117, 2016.

[20] D. Yoo, N. Kim, S. Park, A. S. Paek, and I. S. Kweon. Pixel-level domain transfer. arXiv preprint arXiv:1603.07442, 2016.

[21] S. Yu, H. Chen, Q. Wang, L. Shen, and Y. Huang. Invariant feature extraction for gait recognition using only one uniform model. Neurocomputing, 239:81–93, 2017.

[22] S. Yu, D. Tan, and T. Tan. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. In ICPR, pages 441–444, August 2006.

[23] S. Zheng, J. Zhang, K. Huang, R. He, and T. Tan. Robust view transformation model for gait recognition. In ICIP, pages 2073–2076, 2011.
