Introduction to Bayesian Optimization

Javier González

Masterclass, 7 February 2017 @Lancaster University


Big picture

“Civilization advances by extending the number of important operations which we can perform without thinking of them.”

(Alfred North Whitehead)

We are interested in optimizing data science pipelines:

Automatic model configuration.

Automate the design of physical experiments.


Agenda of the day

9:00-11:00, Introduction to Bayesian Optimization:

What is BayesOpt and why does it work?

Relevant things to know.

11:30-13:00, Connections, extensions and applications:

Extensions to multi-task problems, constrained domains, early stopping, high dimensions.

Connections to multi-armed bandits and approximate Bayesian computation (ABC).

An application in genetics.

14:00-16:00, GPyOpt LAB!: Bring your own problem!

16:30-15:30, Hot topics and current challenges:

Parallelization.

Non-myopic methods.

Interactive Bayesian Optimization.


Section I: Introduction to Bayesian Optimization

What is BayesOpt and why does it work?

Relevant things to know.


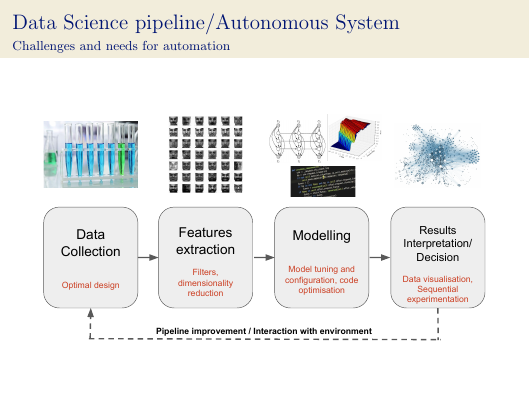

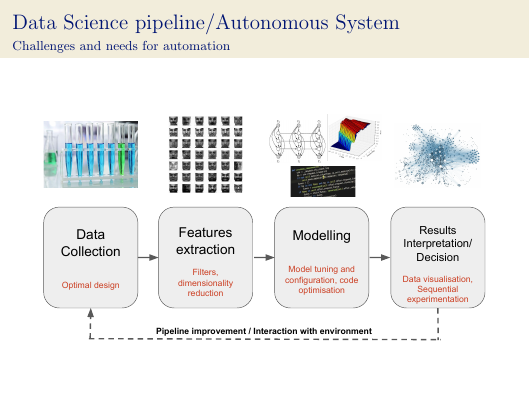

Data Science pipeline/Autonomous System

Challenges and needs for automation

Data collection: optimal design.

Feature extraction: filters, dimensionality reduction.

Modelling: model tuning and configuration, code optimisation.

Results interpretation/decision: data visualisation, sequential experimentation.

Pipeline improvement: interaction with environment.

Experimental Design - Uncertainty Quantification

Can we automate/simplify the process of designing complex experiments?

Emulator - Simulator - Physical system


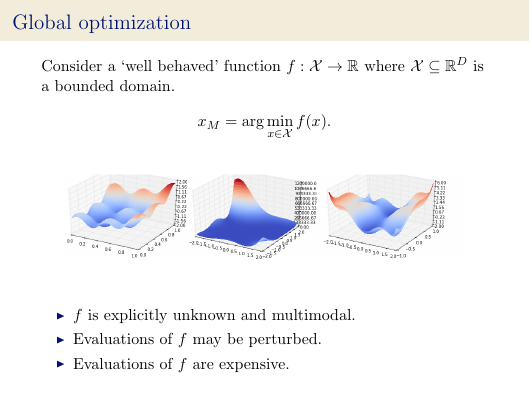

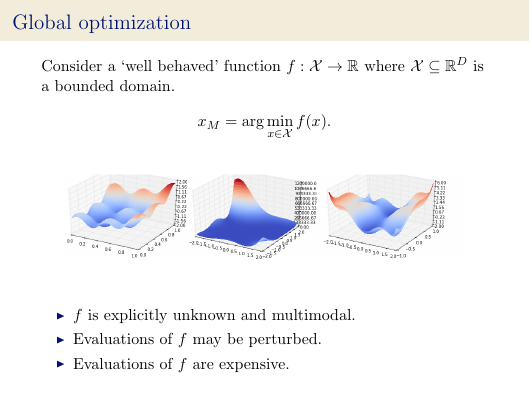

Global optimization

Consider a ‘well behaved’ function $f : \mathcal{X} \to \mathbb{R}$, where $\mathcal{X} \subseteq \mathbb{R}^D$ is a bounded domain.

$$x_M = \arg\min_{x \in \mathcal{X}} f(x).$$

f is explicitly unknown and multimodal.

Evaluations of f may be perturbed.

Evaluations of f are expensive.
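
To make the setting concrete, here is a minimal sketch of such a problem in one dimension. It is an illustration only, not taken from the slides: the objective is the Forrester test function on the bounded domain [0, 1], used here as a stand-in for an unknown f, and the names `f`, `noise_sd` and `grid_argmin` are hypothetical. The brute-force grid search below spends 1001 evaluations, which is affordable only because this toy f is cheap.

```python
import math
import random


def f(x, noise_sd=0.0, rng=None):
    """Toy multimodal objective on [0, 1] (Forrester function), optionally noisy."""
    value = (6.0 * x - 2.0) ** 2 * math.sin(12.0 * x - 4.0)
    if noise_sd > 0.0:
        rng = rng or random
        # Perturbed evaluation: add zero-mean Gaussian noise.
        value += rng.gauss(0.0, noise_sd)
    return value


def grid_argmin(objective, lower, upper, n_points):
    """Approximate arg min over [lower, upper] using n_points evaluations."""
    best_x, best_y = None, math.inf
    for i in range(n_points):
        x = lower + (upper - lower) * i / (n_points - 1)
        y = objective(x)
        if y < best_y:
            best_x, best_y = x, y
    return best_x, best_y


# Noise-free search over a dense grid.
x_min, y_min = grid_argmin(f, 0.0, 1.0, 1001)
print(f"approximate minimiser: x = {x_min:.3f}, f(x) = {y_min:.3f}")

# Two noisy evaluations at the same input generally differ.
rng = random.Random(0)
print(f(0.5, noise_sd=0.1, rng=rng), f(0.5, noise_sd=0.1, rng=rng))
```

When each evaluation is expensive, a budget of this size is out of reach, which is the motivation for choosing evaluation points more carefully.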


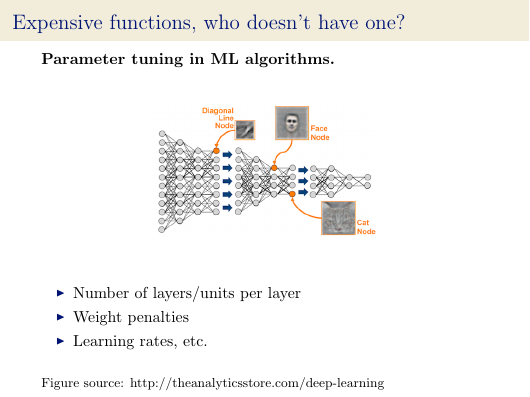

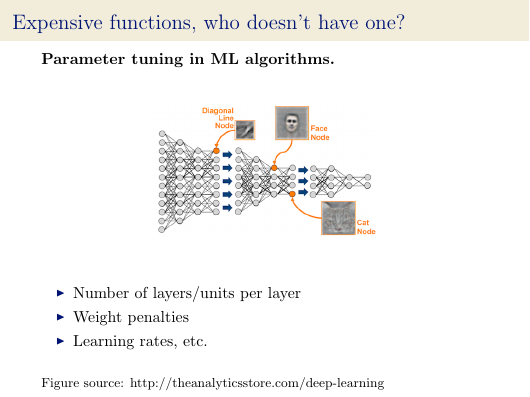

Expensive functions, who doesn’t have one?

Parameter tuning in ML algorithms.

Number of layers/units per layer

Weight penalties

Learning rates, etc.

Figure source: http://theanalyticsstore.com/deep-learning
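
As a rough illustration of why parameter tuning fits the global optimization setting, the sketch below treats the learning rate of plain gradient descent as the input of a black-box function whose output is the final training loss. Everything here is an assumption made for the example: the tiny dataset, the one-parameter linear model, and the name `loss_after_gd` are hypothetical and do not come from the slides.

```python
# Toy data for a one-parameter model y ≈ w * x (hypothetical values).
XS = [0.0, 0.5, 1.0, 1.5, 2.0]
YS = [0.1, 0.9, 2.1, 2.9, 4.1]


def mse(w):
    """Mean squared error of the model y = w * x on the toy data."""
    return sum((w * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def loss_after_gd(learning_rate, n_steps=20):
    """Black-box objective: training loss after n_steps of gradient descent from w = 0."""
    w = 0.0
    for _ in range(n_steps):
        # Gradient of the mean squared error with respect to w.
        grad = 2.0 * sum(x * (w * x - y) for x, y in zip(XS, YS)) / len(XS)
        w -= learning_rate * grad
    return mse(w)


# Each call trains a model from scratch; for a real network one call could take hours.
for lr in (0.001, 0.1, 1.0):
    print(f"learning rate {lr}: final loss {loss_after_gd(lr):.4g}")
```

In this toy case a very small learning rate barely moves the parameter, a moderate one converges, and a large one diverges, so the map from hyperparameter to loss is neither monotone nor known in closed form to the person tuning it.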
