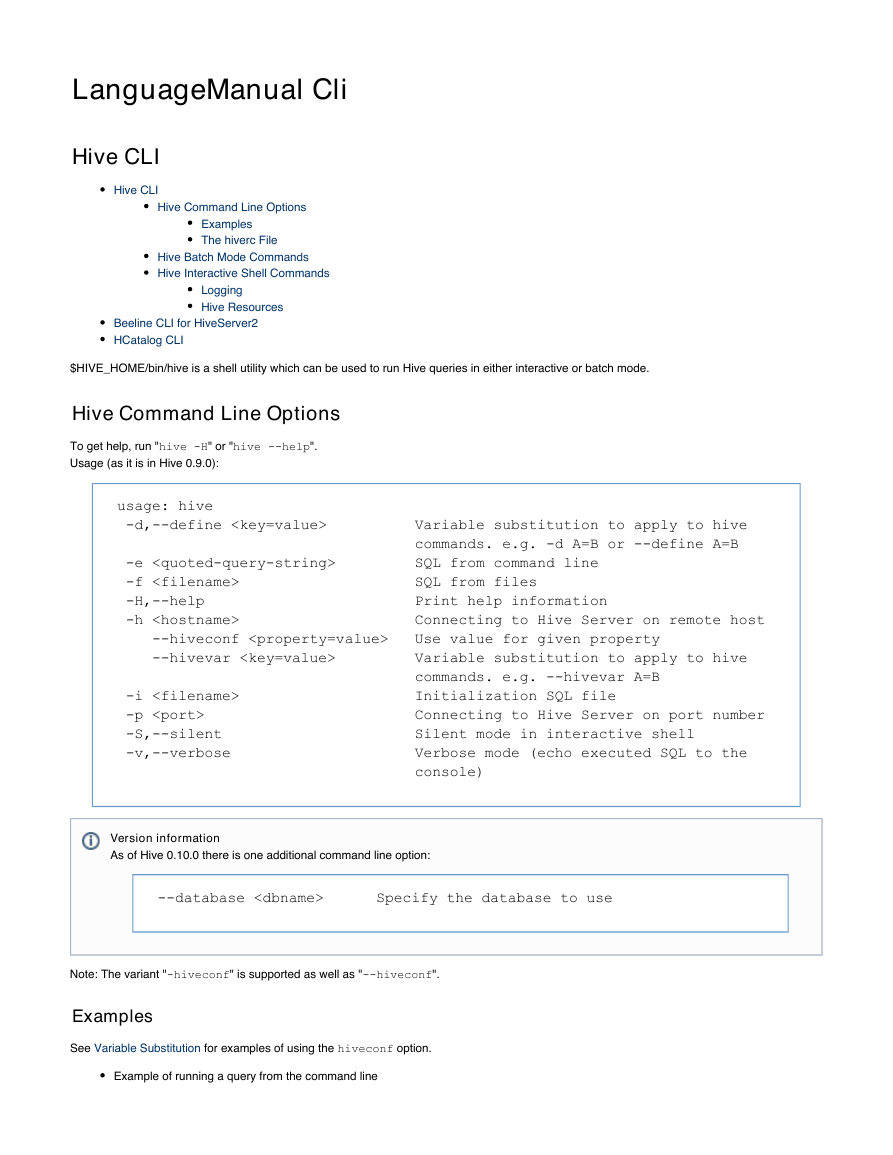

LanguageManual Cli

Hive CLI

Hive CLI

Hive Command Line Options

Examples

The hiverc File

Hive Batch Mode Commands

Hive Interactive Shell Commands

Logging

Hive Resources

Beeline CLI for HiveServer2

HCatalog CLI

$HIVE_HOME/bin/hive is a shell utility which can be used to run Hive queries in either interactive or batch mode.

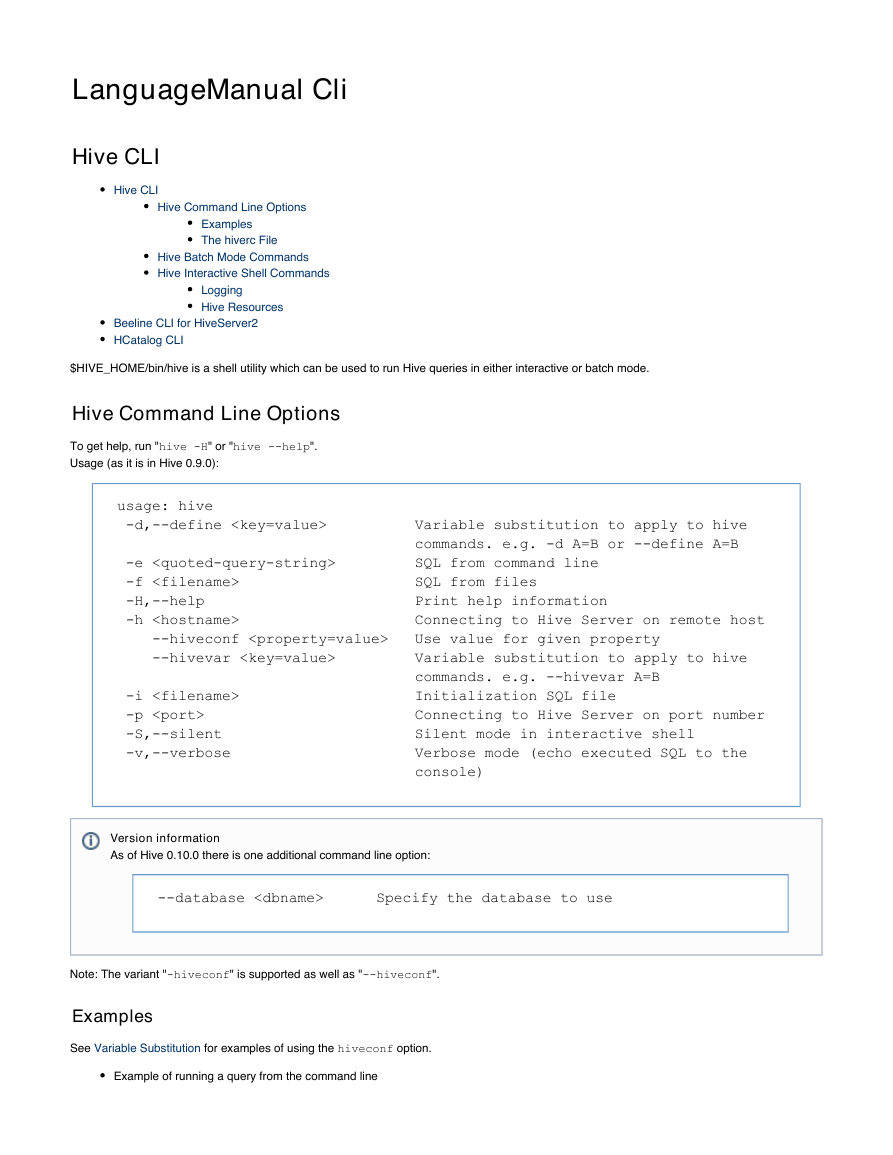

Hive Command Line Options

To get help, run "

Usage (as it is in Hive 0.9.0):

hive -H

" or "

hive --help

".

usage: hive

-d,--define

Variable substitution to apply to hive

commands. e.g. -d A=B or --define A=B

-e SQL from command line

-f SQL from files

-H,--help Print help information

-h Connecting to Hive Server on remote host

--hiveconf Use value for given property

--hivevar Variable substitution to apply to hive

commands. e.g. --hivevar A=B

-i Initialization SQL file

-p Connecting to Hive Server on port number

-S,--silent Silent mode in interactive shell

-v,--verbose Verbose mode (echo executed SQL to the

console)

Version information

As of Hive 0.10.0 there is one additional command line option:

--database Specify the database to use

Note: The variant "

-hiveconf

" is supported as well as "

--hiveconf

".

Examples

See

Variable Substitution

for examples of using the

hiveconf

option.

Example of running a query from the command line

�$HIVE_HOME/bin/hive -e 'select a.col from tab1 a'

Example of setting Hive configuration variables

$HIVE_HOME/bin/hive -e 'select a.col from tab1 a' --hiveconf

hive.exec.scratchdir=/home/my/hive_scratch --hiveconf

mapred.reduce.tasks=32

Example of dumping data out from a query into a file using silent mode

$HIVE_HOME/bin/hive -S -e 'select a.col from tab1 a' > a.txt

Example of running a script non-interactively

$HIVE_HOME/bin/hive -f /home/my/hive-script.sql

Example of running an initialization script before entering interactive mode

$HIVE_HOME/bin/hive -i /home/my/hive-init.sql

The hiverc File

The CLI when invoked without the

-i

option will attempt to load $HIVE_HOME/bin/.hiverc and $HOME/.hiverc as initialization files.

Hive Batch Mode Commands

When

$HIVE_HOME/bin/hive

is run with the

-e

or

-f

option, it executes SQL commands in batch mode.

hive -e '' executes the query string.

hive -f executes one or more SQL queries from a file.

Examples are shown above.

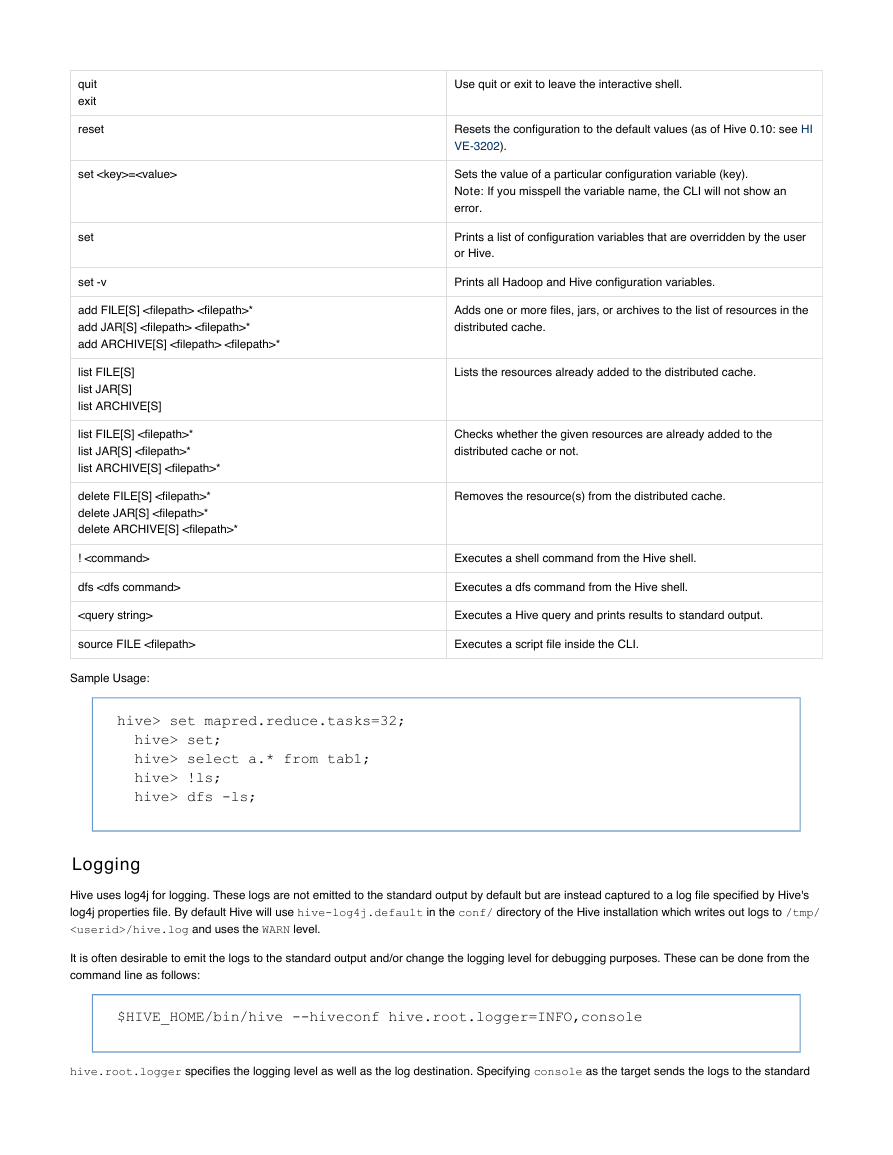

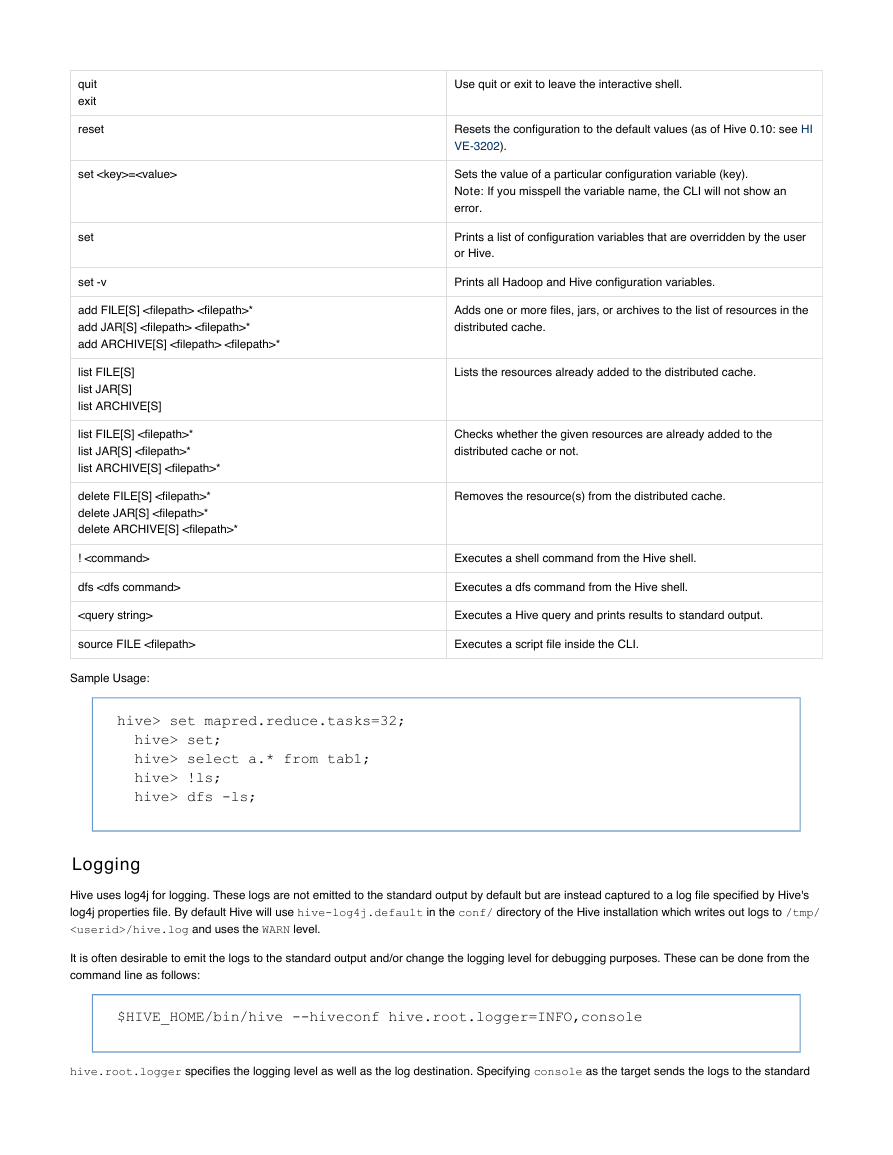

Hive Interactive Shell Commands

When

$HIVE_HOME/bin/hive

is run without either the

-e

or

-f

option, it enters interactive shell mode.

Use ";" (semicolon) to terminate commands. Comments in scripts can be specified using the "--" prefix.

Command

Description

�

Use quit or exit to leave the interactive shell.

Resets the configuration to the default values (as of Hive 0.10: see HI

VE-3202

).

Sets the value of a particular configuration variable (key).

Note:

error.

If you misspell the variable name, the CLI will not show an

Prints a list of configuration variables that are overridden by the user

or Hive.

Prints all Hadoop and Hive configuration variables.

Adds one or more files, jars, or archives to the list of resources in the

distributed cache.

Lists the resources already added to the distributed cache.

Checks whether the given resources are already added to the

distributed cache or not.

Removes the resource(s) from the distributed cache.

Executes a shell command from the Hive shell.

Executes a dfs command from the Hive shell.

Executes a Hive query and prints results to standard output.

Executes a script file inside the CLI.

quit

exit

reset

set =

set

set -v

add FILE[S] *

add JAR[S] *

add ARCHIVE[S] *

list FILE[S]

list JAR[S]

list ARCHIVE[S]

list FILE[S] *

list JAR[S] *

list ARCHIVE[S] *

delete FILE[S] *

delete JAR[S] *

delete ARCHIVE[S] *

!

dfs

source FILE

Sample Usage:

hive> set mapred.reduce.tasks=32;

hive> set;

hive> select a.* from tab1;

hive> !ls;

hive> dfs -ls;

Logging

Hive uses log4j for logging. These logs are not emitted to the standard output by default but are instead captured to a log file specified by Hive's

log4j properties file. By default Hive will use

/hive.log

directory of the Hive installation which writes out logs to

hive-log4j.default

in the

and uses the

WARN

level.

conf/

/tmp/

It is often desirable to emit the logs to the standard output and/or change the logging level for debugging purposes. These can be done from the

command line as follows:

$HIVE_HOME/bin/hive --hiveconf hive.root.logger=INFO,console

hive.root.logger specifies the logging level as well as the log destination. Specifying

console

as the target sends the logs to the standard

�

error (instead of the log file).

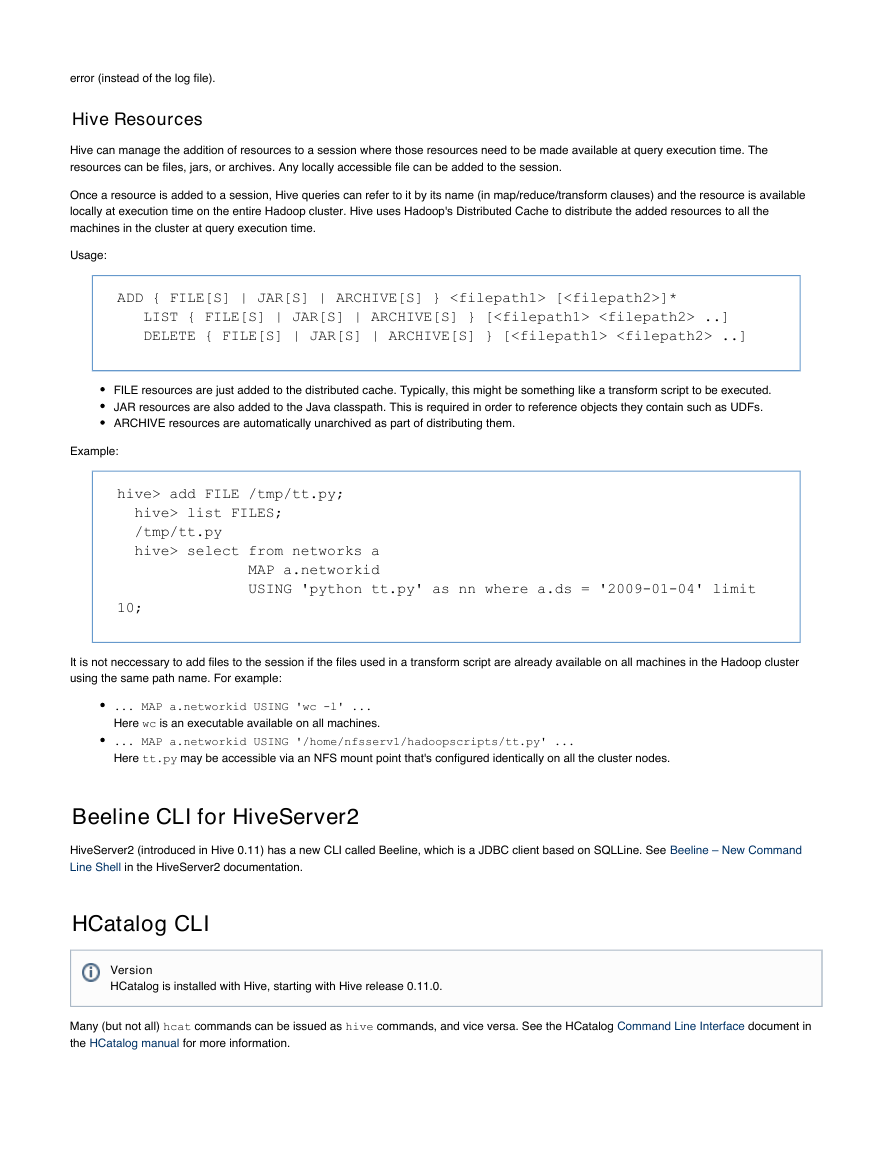

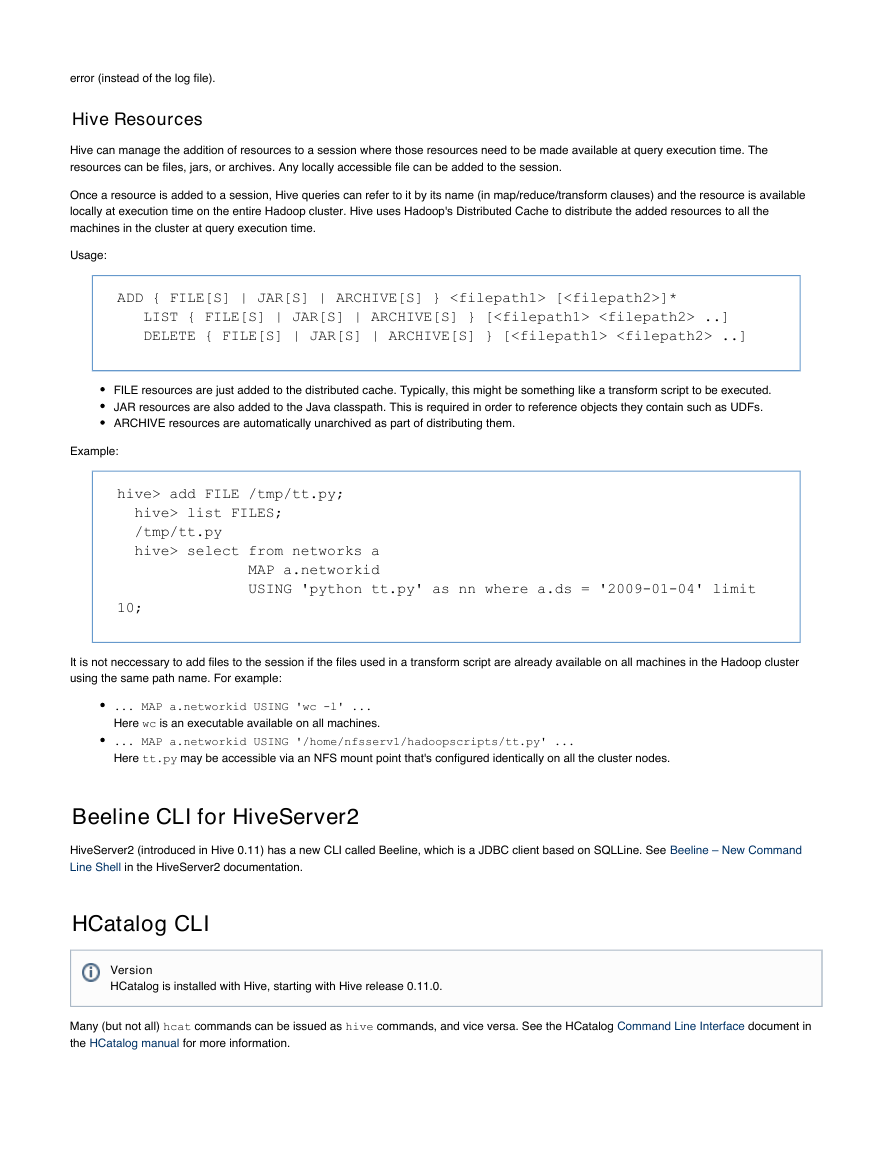

Hive Resources

Hive can manage the addition of resources to a session where those resources need to be made available at query execution time. The

resources can be files, jars, or archives. Any locally accessible file can be added to the session.

Once a resource is added to a session, Hive queries can refer to it by its name (in map/reduce/transform clauses) and the resource is available

locally at execution time on the entire Hadoop cluster. Hive uses Hadoop's Distributed Cache to distribute the added resources to all the

machines in the cluster at query execution time.

Usage:

ADD { FILE[S] | JAR[S] | ARCHIVE[S] } []*

LIST { FILE[S] | JAR[S] | ARCHIVE[S] } [ ..]

DELETE { FILE[S] | JAR[S] | ARCHIVE[S] } [ ..]

FILE resources are just added to the distributed cache. Typically, this might be something like a transform script to be executed.

JAR resources are also added to the Java classpath. This is required in order to reference objects they contain such as UDFs.

ARCHIVE resources are automatically unarchived as part of distributing them.

Example:

hive> add FILE /tmp/tt.py;

hive> list FILES;

/tmp/tt.py

hive> select from networks a

MAP a.networkid

USING 'python tt.py' as nn where a.ds = '2009-01-04' limit

10;

It is not neccessary to add files to the session if the files used in a transform script are already available on all machines in the Hadoop cluster

using the same path name. For example:

... MAP a.networkid USING 'wc -l' ...

Here

is an executable available on all machines.

... MAP a.networkid USING '/home/nfsserv1/hadoopscripts/tt.py' ...

Here

may be accessible via an NFS mount point that's configured identically on all the cluster nodes.

wc

tt.py

Beeline CLI for HiveServer2

HiveServer2 (introduced in Hive 0.11) has a new CLI called Beeline, which is a JDBC client based on SQLLine. See Beeline – New Command

Line Shell

in the HiveServer2 documentation.

HCatalog CLI

Version

HCatalog is installed with Hive, starting with Hive release 0.11.0.

Many (but not all)

the

HCatalog manual

for more information.

hcat

commands can be issued as

hive

commands, and vice versa. See the HCatalog

Command Line Interface

document in

�

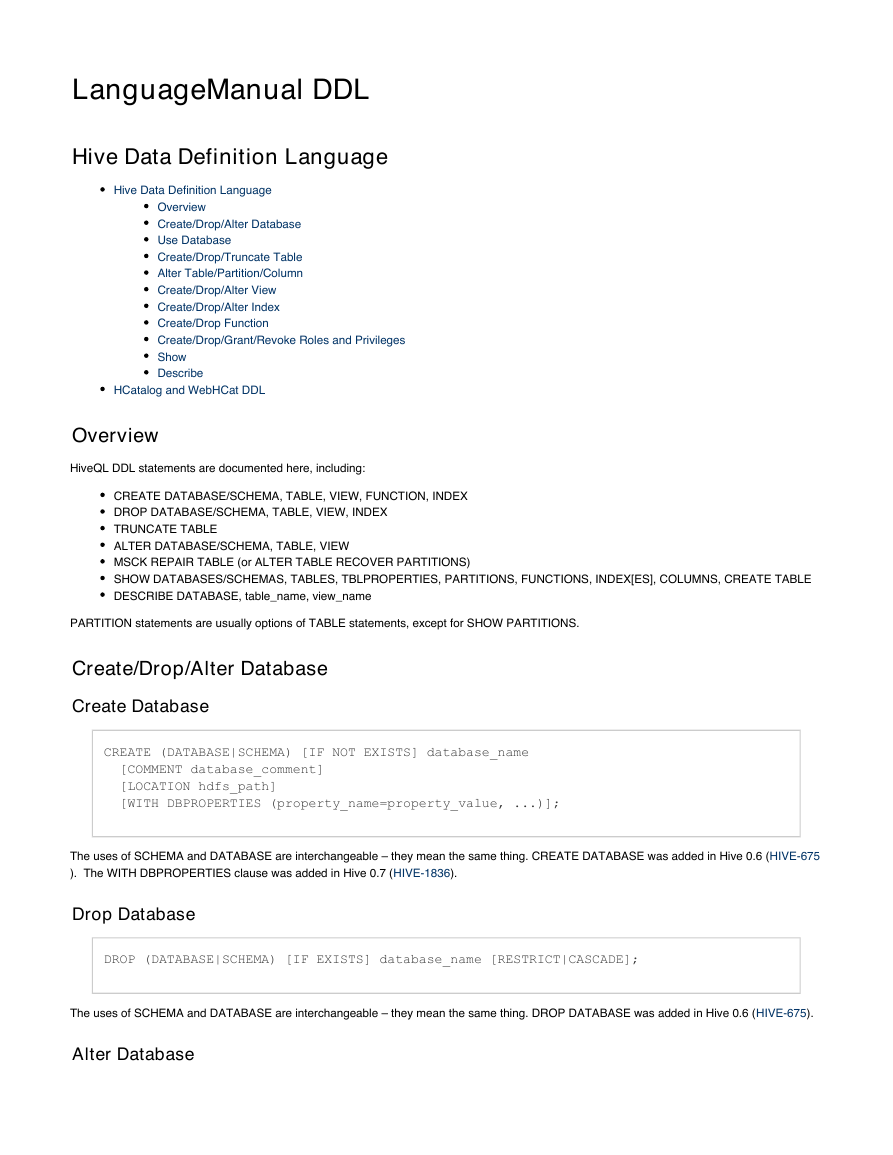

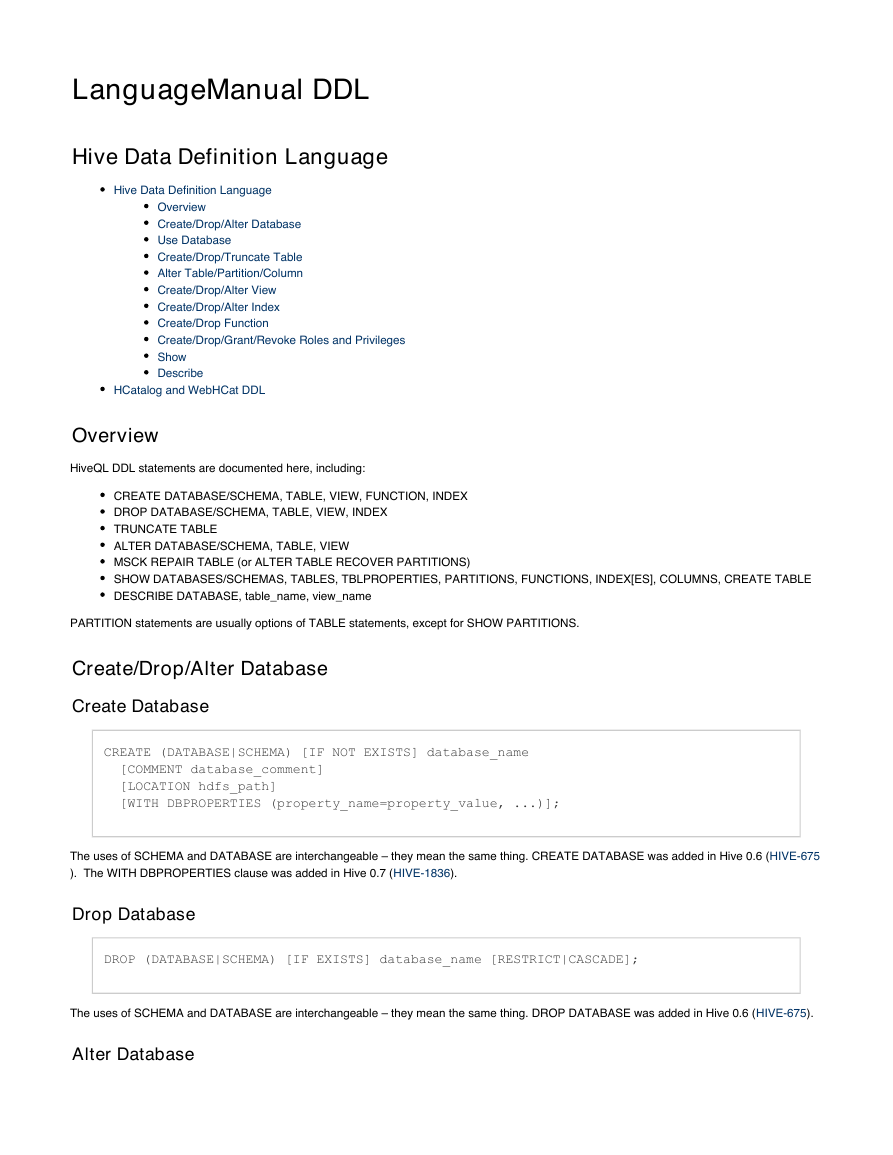

LanguageManual DDL

Hive Data Definition Language

Hive Data Definition Language

Overview

Create/Drop/Alter Database

Use Database

Create/Drop/Truncate Table

Alter Table/Partition/Column

Create/Drop/Alter View

Create/Drop/Alter Index

Create/Drop Function

Create/Drop/Grant/Revoke Roles and Privileges

Show

Describe

HCatalog and WebHCat DDL

Overview

HiveQL DDL statements are documented here, including:

CREATE DATABASE/SCHEMA, TABLE, VIEW, FUNCTION, INDEX

DROP DATABASE/SCHEMA, TABLE, VIEW, INDEX

TRUNCATE TABLE

ALTER DATABASE/SCHEMA, TABLE, VIEW

MSCK REPAIR TABLE (or ALTER TABLE RECOVER PARTITIONS)

SHOW DATABASES/SCHEMAS, TABLES, TBLPROPERTIES, PARTITIONS, FUNCTIONS, INDEX[ES], COLUMNS, CREATE TABLE

DESCRIBE DATABASE, table_name, view_name

PARTITION statements are usually options of TABLE statements, except for SHOW PARTITIONS.

Create/Drop/Alter Database

Create Database

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

[COMMENT database_comment]

[LOCATION hdfs_path]

[WITH DBPROPERTIES (property_name=property_value, ...)];

The uses of SCHEMA and DATABASE are interchangeable – they mean the same thing. CREATE DATABASE was added in Hive 0.6 ( HIVE-675

). The WITH DBPROPERTIES clause was added in Hive 0.7 (

HIVE-1836

).

Drop Database

DROP (DATABASE|SCHEMA) [IF EXISTS] database_name [RESTRICT|CASCADE];

The uses of SCHEMA and DATABASE are interchangeable – they mean the same thing. DROP DATABASE was added in Hive 0.6 (

HIVE-675

).

Alter Database

�

ALTER DATABASE database_name SET DBPROPERTIES (property_name=property_value, ...);

ALTER DATABASE database_name SET OWNER [USER|ROLE] user_or_role; (Hive 0.13.0 and

later)

No other metadata about a database can be changed.

Currently the SCHEMA keyword cannot be used as an alternative for DATABASE (see

HIVE-6601

).

Use Database

USE database_name;

USE DEFAULT;

USE sets the current database for all subsequent HiveQL statements. To revert to the default database, use the keyword "

database name.

default

" instead of a

USE database_name was added in Hive 0.6 (

HIVE-675

).

Create/Drop/Truncate Table

Create Table

Row Format, Storage Format, and SerDe

Partitioned Tables

External Tables

Create Table As Select (CTAS)

Create Table Like

Bucketed Sorted Tables

Skewed Tables

Temporary Tables

Drop Table

Truncate Table

Create Table

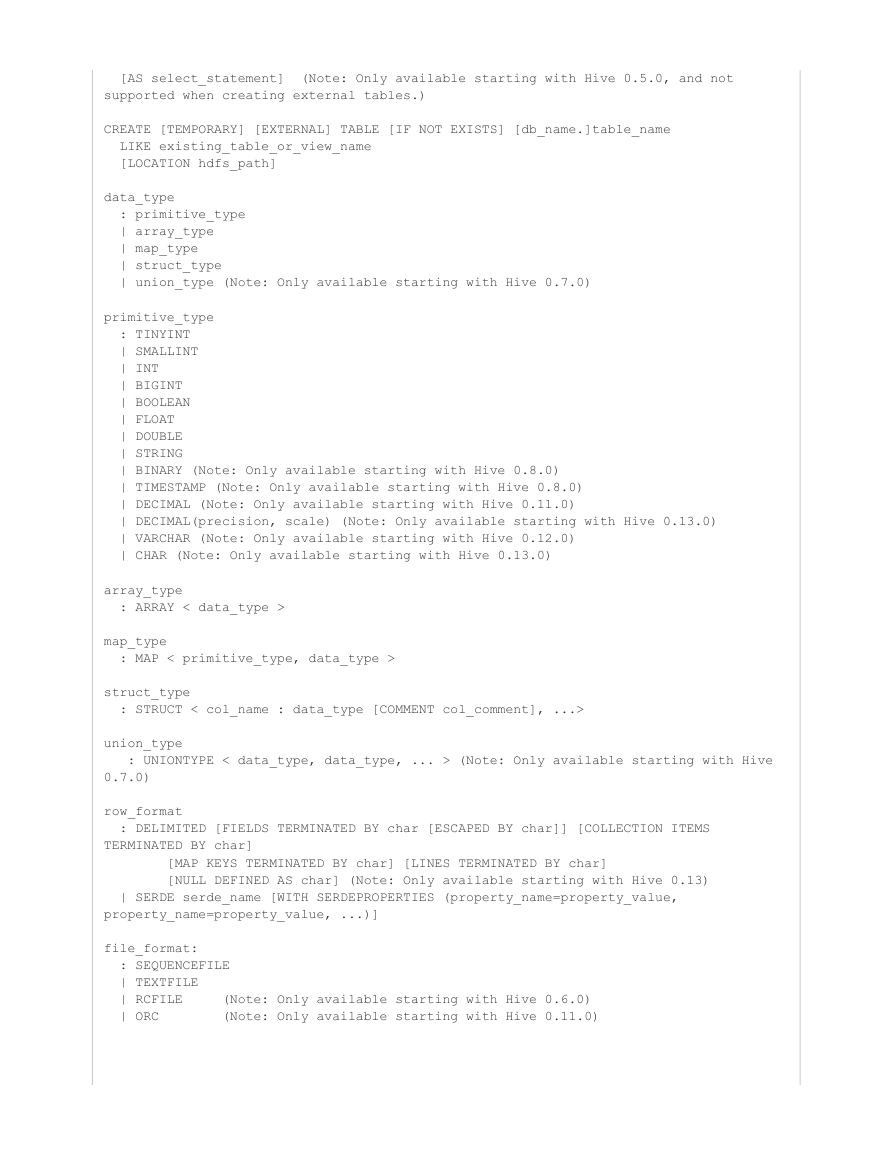

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name (Note:

TEMPORARY available starting with Hive 0.14.0)

[(col_name data_type [COMMENT col_comment], ...)]

[COMMENT table_comment]

[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]

[CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO

num_buckets BUCKETS]

[SKEWED BY (col_name, col_name, ...) ON ([(col_value, col_value, ...),

...|col_value, col_value, ...])

[STORED AS DIRECTORIES] (Note: Only available

starting with Hive 0.10.0)]

[

[ROW FORMAT row_format] [STORED AS file_format]

| STORED BY 'storage.handler.class.name' [WITH SERDEPROPERTIES (...)] (Note: Only

available starting with Hive 0.6.0)

]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)] (Note: Only available starting

with Hive 0.6.0)

�

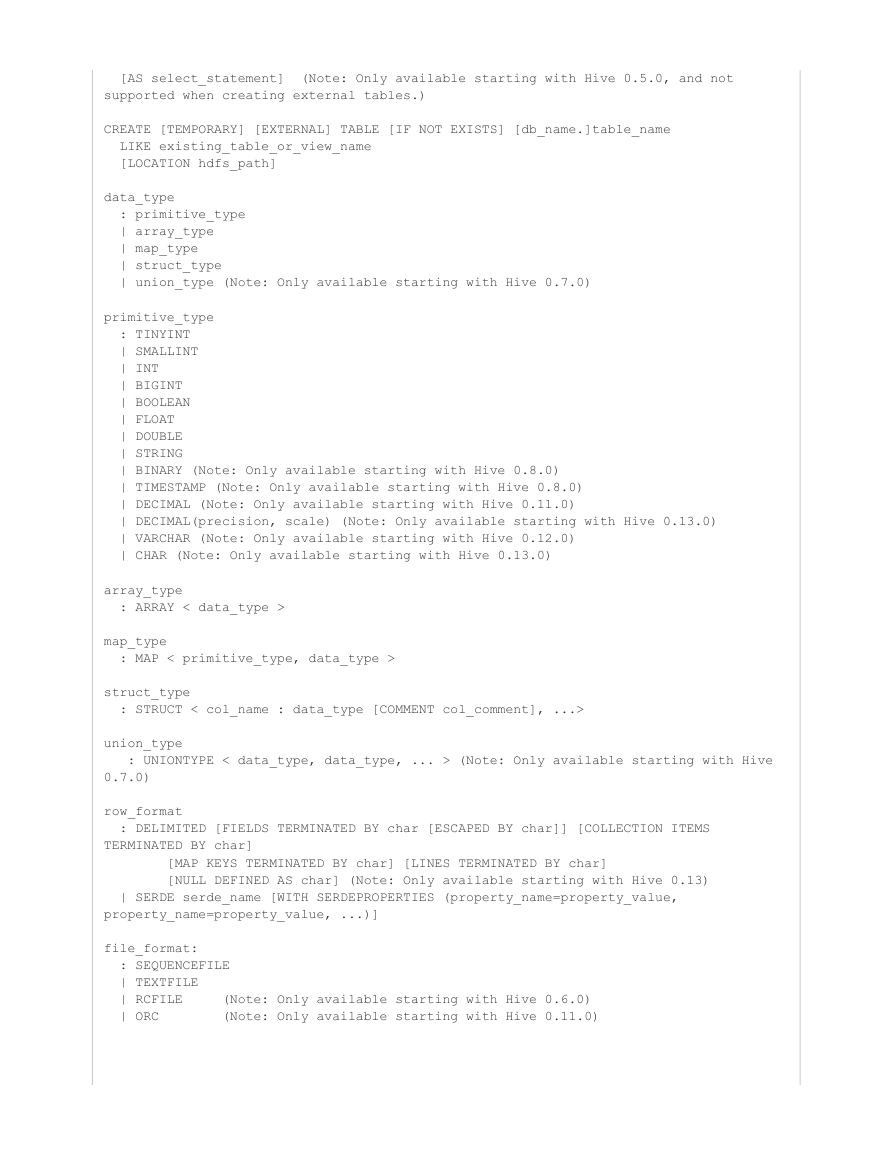

[AS select_statement] (Note: Only available starting with Hive 0.5.0, and not

supported when creating external tables.)

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name

LIKE existing_table_or_view_name

[LOCATION hdfs_path]

data_type

: primitive_type

| array_type

| map_type

| struct_type

| union_type (Note: Only available starting with Hive 0.7.0)

primitive_type

: TINYINT

| SMALLINT

| INT

| BIGINT

| BOOLEAN

| FLOAT

| DOUBLE

| STRING

| BINARY (Note: Only available starting with Hive 0.8.0)

| TIMESTAMP (Note: Only available starting with Hive 0.8.0)

| DECIMAL (Note: Only available starting with Hive 0.11.0)

| DECIMAL(precision, scale) (Note: Only available starting with Hive 0.13.0)

| VARCHAR (Note: Only available starting with Hive 0.12.0)

| CHAR (Note: Only available starting with Hive 0.13.0)

array_type

: ARRAY < data_type >

map_type

: MAP < primitive_type, data_type >

struct_type

: STRUCT < col_name : data_type [COMMENT col_comment], ...>

union_type

: UNIONTYPE < data_type, data_type, ... > (Note: Only available starting with Hive

0.7.0)

row_format

: DELIMITED [FIELDS TERMINATED BY char [ESCAPED BY char]] [COLLECTION ITEMS

TERMINATED BY char]

[MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char]

[NULL DEFINED AS char] (Note: Only available starting with Hive 0.13)

| SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value,

property_name=property_value, ...)]

file_format:

: SEQUENCEFILE

| TEXTFILE

| RCFILE (Note: Only available starting with Hive 0.6.0)

| ORC (Note: Only available starting with Hive 0.11.0)

�

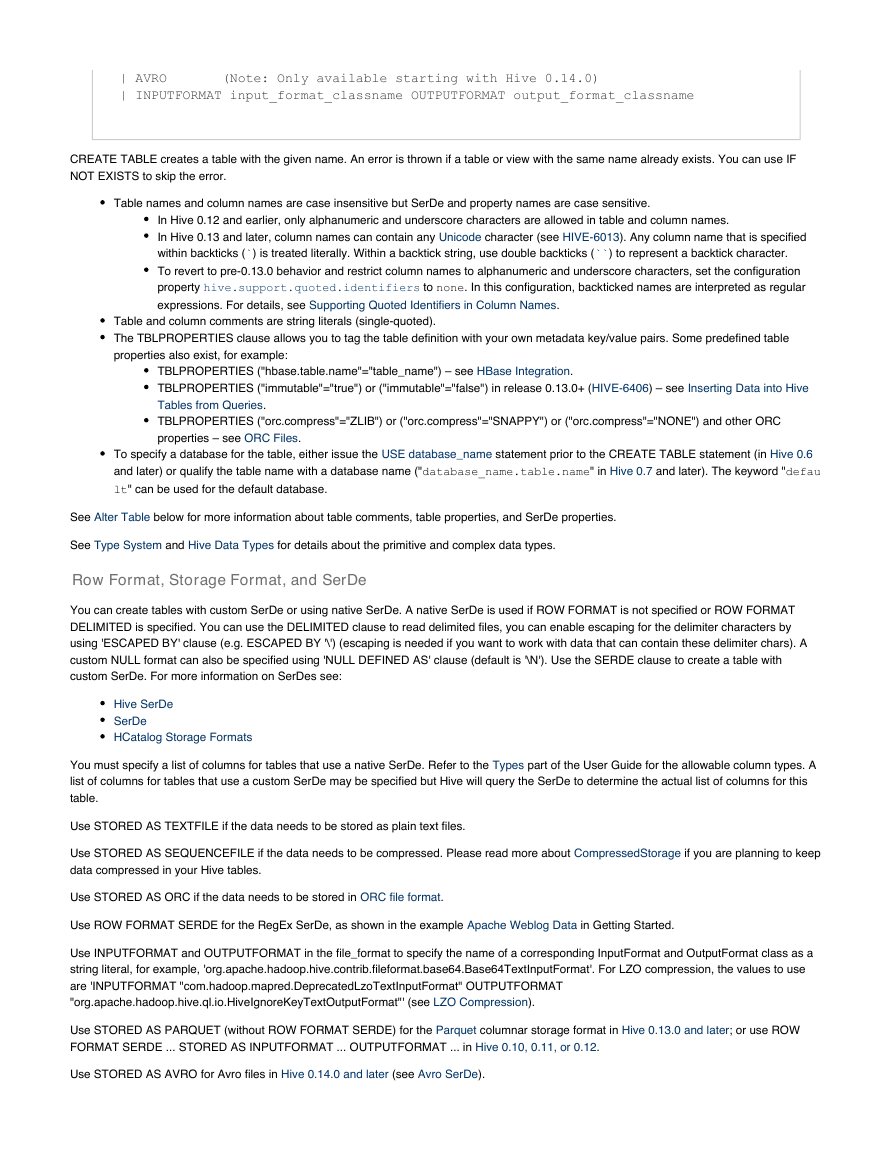

| AVRO (Note: Only available starting with Hive 0.14.0)

| INPUTFORMAT input_format_classname OUTPUTFORMAT output_format_classname

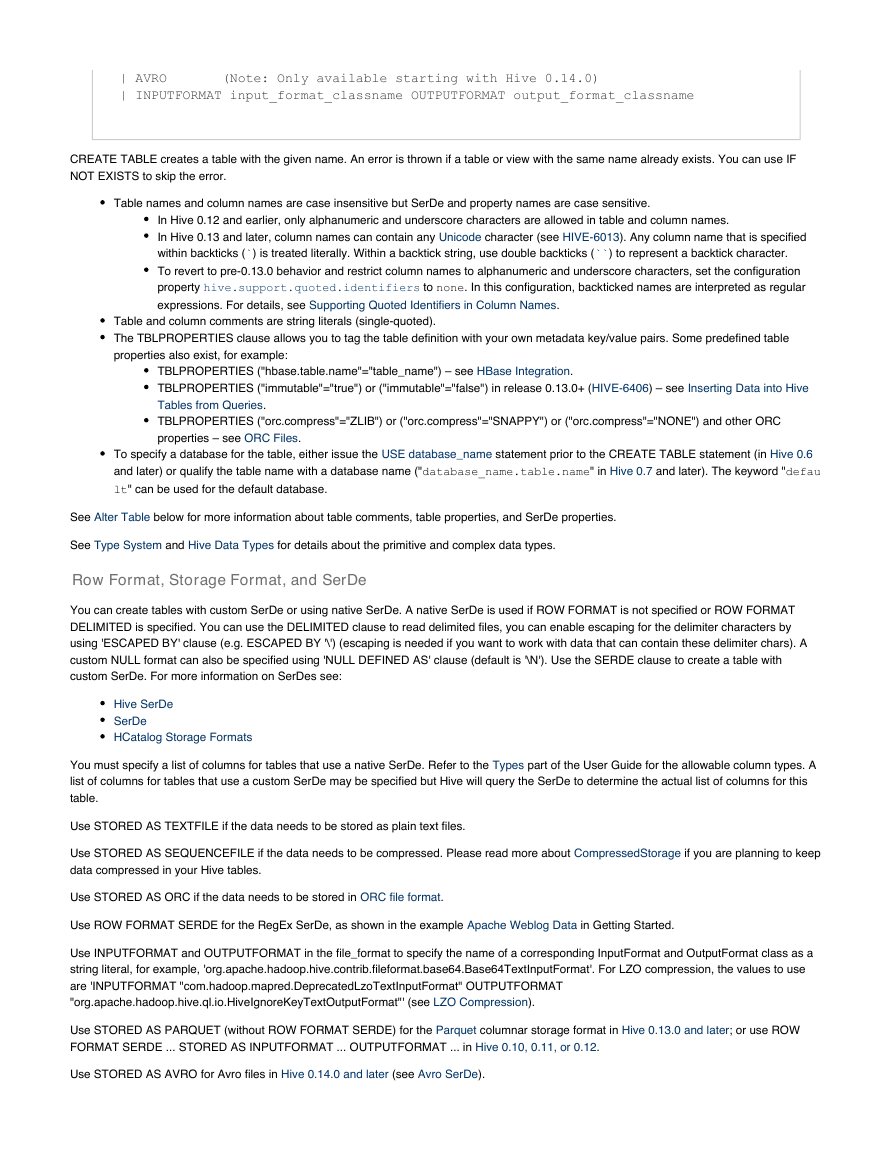

CREATE TABLE creates a table with the given name. An error is thrown if a table or view with the same name already exists. You can use IF

NOT EXISTS to skip the error.

Table names and column names are case insensitive but SerDe and property names are case sensitive.

In Hive 0.12 and earlier, only alphanumeric and underscore characters are allowed in table and column names.

In Hive 0.13 and later, column names can contain any

within backticks ( ) is treated literally. Within a backtick string, use double backticks (

To revert to pre-0.13.0 behavior and restrict column names to alphanumeric and underscore characters, set the configuration

property

. In this configuration, backticked names are interpreted as regular

expressions. For details, see

Supporting Quoted Identifiers in Column Names

hive.support.quoted.identifiers

). Any column name that is specified

) to represent a backtick character.

character (see

HIVE-6013

Unicode

none

to

``

`

.

Table and column comments are string literals (single-quoted).

The TBLPROPERTIES clause allows you to tag the table definition with your own metadata key/value pairs. Some predefined table

properties also exist, for example:

TBLPROPERTIES ("hbase.table.name"="

TBLPROPERTIES ("immutable"="true") or ("immutable"="false") in release 0.13.0+ (

Tables from Queries

TBLPROPERTIES ("orc.compress"="ZLIB") or ("orc.compress"="SNAPPY") or ("orc.compress"="NONE") and other ORC

properties – see

HBase Integration

table_name

HIVE-6406

ORC Files

") – see

) – see

.

.

.

Inserting Data into Hive

To specify a database for the table, either issue the

and later) or qualify the table name with a database name ("

lt

" can be used for the default database.

USE database_name

statement prior to the CREATE TABLE statement (in

database_name.table.name

" in

Hive 0.7

and later). The keyword "

Hive 0.6

defau

See

Alter Table

below for more information about table comments, table properties, and SerDe properties.

See

Type System

and

Hive Data Types

for details about the primitive and complex data types.

Row Format, Storage Format, and SerDe

You can create tables with custom SerDe or using native SerDe. A native SerDe is used if ROW FORMAT is not specified or ROW FORMAT

DELIMITED is specified. You can use the DELIMITED clause to read delimited files, you can enable escaping for the delimiter characters by

using 'ESCAPED BY' clause (e.g. ESCAPED BY '\') (escaping is needed if you want to work with data that can contain these delimiter chars). A

custom NULL format can also be specified using 'NULL DEFINED AS' clause (default is '\N'). Use the SERDE clause to create a table with

custom SerDe. For more information on SerDes see:

Hive SerDe

SerDe

HCatalog Storage Formats

You must specify a list of columns for tables that use a native SerDe. Refer to the

list of columns for tables that use a custom SerDe may be specified but Hive will query the SerDe to determine the actual list of columns for this

table.

part of the User Guide for the allowable column types. A

Types

Use STORED AS TEXTFILE if the data needs to be stored as plain text files.

Use STORED AS SEQUENCEFILE if the data needs to be compressed. Please read more about

data compressed in your Hive tables.

CompressedStorage

if you are planning to keep

Use STORED AS ORC if the data needs to be stored in

ORC file format

.

Use ROW FORMAT SERDE for the RegEx SerDe, as shown in the example

Apache Weblog Data

in Getting Started.

Use INPUTFORMAT and OUTPUTFORMAT in the file_format to specify the name of a corresponding InputFormat and OutputFormat class as a

string literal, for example, 'org.apache.hadoop.hive.contrib.fileformat.base64.Base64TextInputFormat'. For LZO compression, the values to use

are 'INPUTFORMAT "com.hadoop.mapred.DeprecatedLzoTextInputFormat" OUTPUTFORMAT

"org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat"' (see

LZO Compression

).

Use STORED AS PARQUET (without ROW FORMAT SERDE) for the

Parquet

FORMAT SERDE ... STORED AS INPUTFORMAT ... OUTPUTFORMAT ... in

columnar storage format in

Hive 0.10, 0.11, or 0.12

.

Hive 0.13.0 and later

; or use ROW

Use STORED AS AVRO for Avro files in

Hive 0.14.0 and later

(see

Avro SerDe

).

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc