Gaussian Processes for Regression:

A Quick Introduction

M. Ebden, August 2008

Comments to mark.ebden@eng.ox.ac.uk

1 MOTIVATION

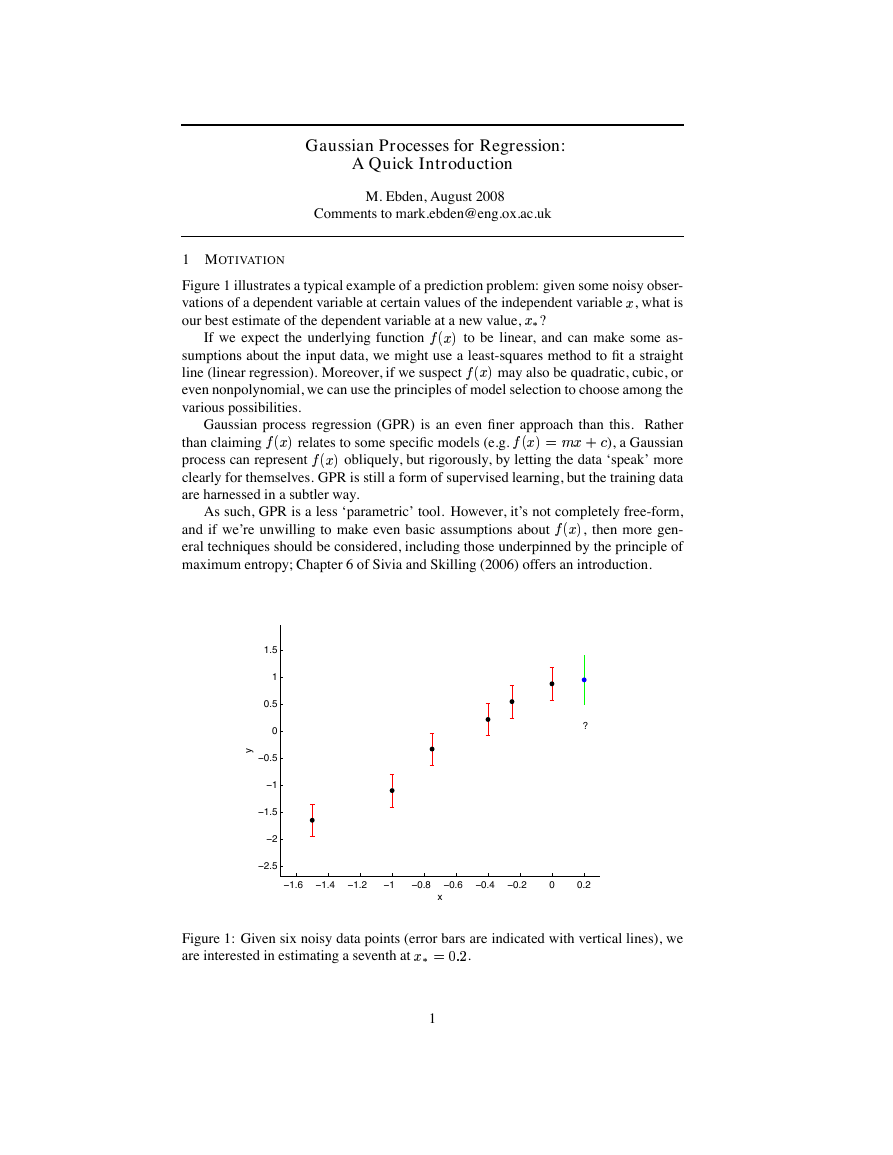

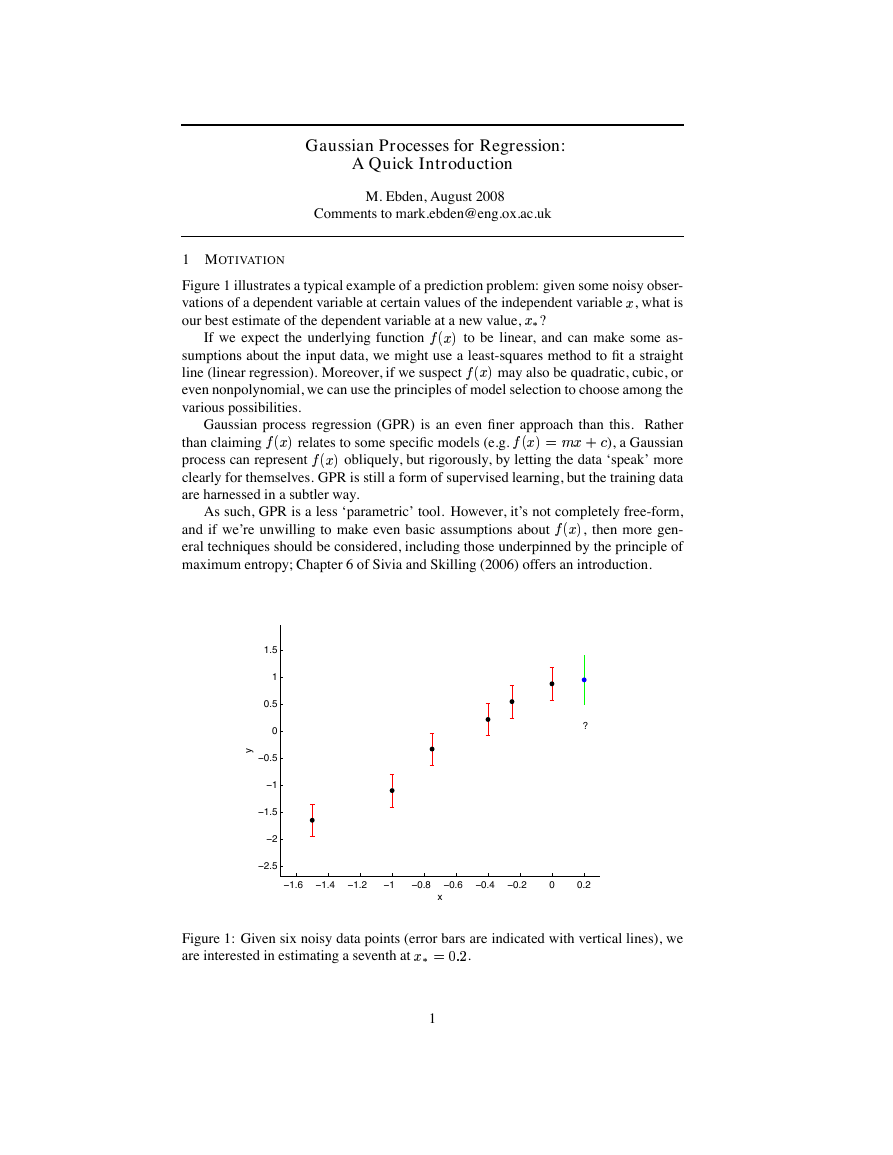

Figure 1 illustrates a typical example of a prediction problem: given some noisy obser-

, what is

Gaussian process regression (GPR) is an even finer approach than this. Rather

to be linear, and can make some as-

sumptions about the input data, we might use a least-squares method to fit a straight

even nonpolynomial, we can use the principles of model selection to choose among the

various possibilities.

vations of a dependent variable at certain values of the independent variable�

our best estimate of the dependent variable at a new value,��� ?

If we expect the underlying function���

���

line (linear regression). Moreover, if we suspect���

��� may also be quadratic, cubic, or

than claiming���

��� relates to some specific models (e.g.���

��� ��

���

�� ), a Gaussian

process can represent���

��� obliquely, but rigorously, by letting the data ‘speak’ more

and if we’re unwilling to make even basic assumptions about���

��� , then more gen-

eral techniques should be considered, including those underpinned by the principle of

maximum entropy; Chapter 6 of Sivia and Skilling (2006) offers an introduction.

clearly for themselves. GPR is still a form of supervised learning, but the training data

are harnessed in a subtler way.

As such, GPR is a less ‘parametric’ tool. However, it’s not completely free-form,

1.5

1

0.5

0

y

−0.5

−1

−1.5

−2

−2.5

?

−1.6

−1.4

−1.2

−1

−0.8

−0.6

−0.4

−0.2

0

0.2

x

Figure 1: Given six noisy data points (error bars are indicated with vertical lines), we

are interested in estimating a seventh at�

������� .

1

�

�

2 DEFINITION OF A GAUSSIAN PROCESS

A popular choice is the ‘squared exponential’,

is distant from

there is much flexibility built into (1).

through a Gaussian noise model:

Very often, it’s assumed that the mean of this partner GP is zero everywhere. What

Not quite enough flexibility though: the data are often noisy as well, from measure-

Gaussian processes (GPs) extend multivariate Gaussian distributions to infinite dimen-

sionality. Formally, a Gaussian process generates data located throughout some domain

such that any finite subset of the range follows a multivariate Gaussian distribution.

bution, after enough thought. Hence, working backwards, this data set can be partnered

with a GP. Thus GPs are as universal as they are simple.

��������� �!� �"�#�%$'& , can always be

Now, the� observations in an arbitrary data set,�

imagined as a single point sampled from some multivariate (� -variate) Gaussian distri-

�)�*�'+,� .

relates one observation to another in such cases is just the covariance function,(

��+,�

�.�0/21

�-�#�

3.4 57698;:

�%<

where the maximum allowable covariance is defined as/

3 — this should be high for

functions which cover a broad range on the� axis. If�?>��'+ , then(

�-�*��+,� approaches

is nearly perfectly correlated with���

this maximum, meaning���

�'+@� . This is good:

���

for our function to look smooth, neighbours must be alike. Now if�

�'+ , we have instead(

�-�*��+A�B>C� , i.e. the two points cannot ‘see’ each other. So, for

example, during interpolation at new� values, distant observations will have negligible

effect. How much effect this separation has will depend on the length parameter,< , so

ment errors and so on. Each observation� can be thought of as related to an underlying

function���

���

���

�D�

���-

FE

�G�#/

�H�

gression is the search for���

��� . Purely for simplicity of exposition in the next page, we

�-�#�'+,� , by writing

take the novel approach of folding the noise into(

�'+,�

L/

�.��/

�)�*�

�I�

�-�#�

$7M

4I576J8

�K<

�-�#�'+,�

whereM

�-�*��+,� . However, our redefinition of(

processes, they keep/G$

�)�*��+@�

separate from(

is equally suitable for working with problems of the sort posed in Figure 1. So, given�

� , not the ‘actual’�

, our objective is to predict�

� ; their expected values

observations�

� , and of�

process. e.g. in Figure 1, the expected value of�

� , is the dot at�

� .)

VXW

�2�;�#��� �

�2�;�#�'$��

�TS S!S

���;�*�

�#��� �

�*�

�TS S!S

�#�'$��

�PO

�'$2�#�'$��

�'$2�#���I�

�US S!S

��$��*�

���%�*�

�\S!S S

���K�*��$G�^]

�[Z

�_�

Confirm for yourself that the diagonal elements ofN

`/

off-diagonal elements tend to zero when�

are identical according to (2), but their variances differ owing to the observational noise

something which should look familiar to those who’ve done regression before. Re-

To prepare for GPR, we calculate the covariance function, (3), among all possible

combinations of these points, summarizing our findings in three matrices:

spans a large enough domain.

, and that its extreme

is the Kronecker delta function.

(When most people use Gaussian

...

...

...

(1)

(2)

(3)

(4)

(5)

...

2

are/

���%�*�2� �

�*�

�I�

�

(

�

+

�

�

:

1

1

=

�

1

�

�

�

1

$

�

(

�

+

1

3

:

�

�

:

1

1

=

1

�

+

�

�

�

N

Q

Q

Q

R

(

�

(

�

1

(

�

(

�

�

1

(

�

�

1

1

(

�

�

1

(

�

(

�

1

(

�

W

W

Y

N

�

(

�

(

�

1

(

�

N

�

(

�

�

�

�

1

3

1

$

�

3 HOW TO REGRESS USING GAUSSIAN PROCESSES

Since the key assumption in GP modelling is that our data can be represented as a

sample from a multivariate Gaussian distribution, we have that

�a�

=cb

Nhg

�i�

=kj

Nqp

�H�

is the mean of this distribution:

We’re now ready to tackle the data in Figure 1.

�i�

NLp

�_�

, at

(6)

� ?”. As

(7)

(8)

(9)

and the uncertainty in our estimate is captured in its variance:

on this later), we have enough to calculate a covariance matrix using (4):

indicates matrix transposition. We are of course interested in the conditional

explained more slowly in the Appendix, the probability follows a Gaussian distribution:

wherel

� : “given the data, how likely is a certain prediction for�

�on

probabilitym

���

Our best estimate for�

EedGf-�

NLp

���!�.�

r;sKt

�vu observations�

1. There are�

�o�

�o�

�G�

{��

�G�

�oy|�G�

y%�

�G��z%y

wh�

:Bx

:Bx

from the error bars. With judicious choices of/

We know/G$D���G�

VXW

~�z|���zK�����y

{a�

��zK�

���

��zK�

}K{

���

�z

{a�

���

y%u

y%u

���

��y

{��

��zK�

xx

xTx

{o~

��yK

�z;�

}%{

~az

�G�

{a�

�G��z%�

��yKu

��yK

�z;�

xx

az

�G�

�z;�

{o~

��yKu

�G�

xx

From (5) we also haveN

��zK� and

�i��

��yK~

{ou

}oy

�%}

}o~T�G��zK

�G�

�.�v�����

�y andr;sKt

�0�G�

2. From (8) and (9),�

x .

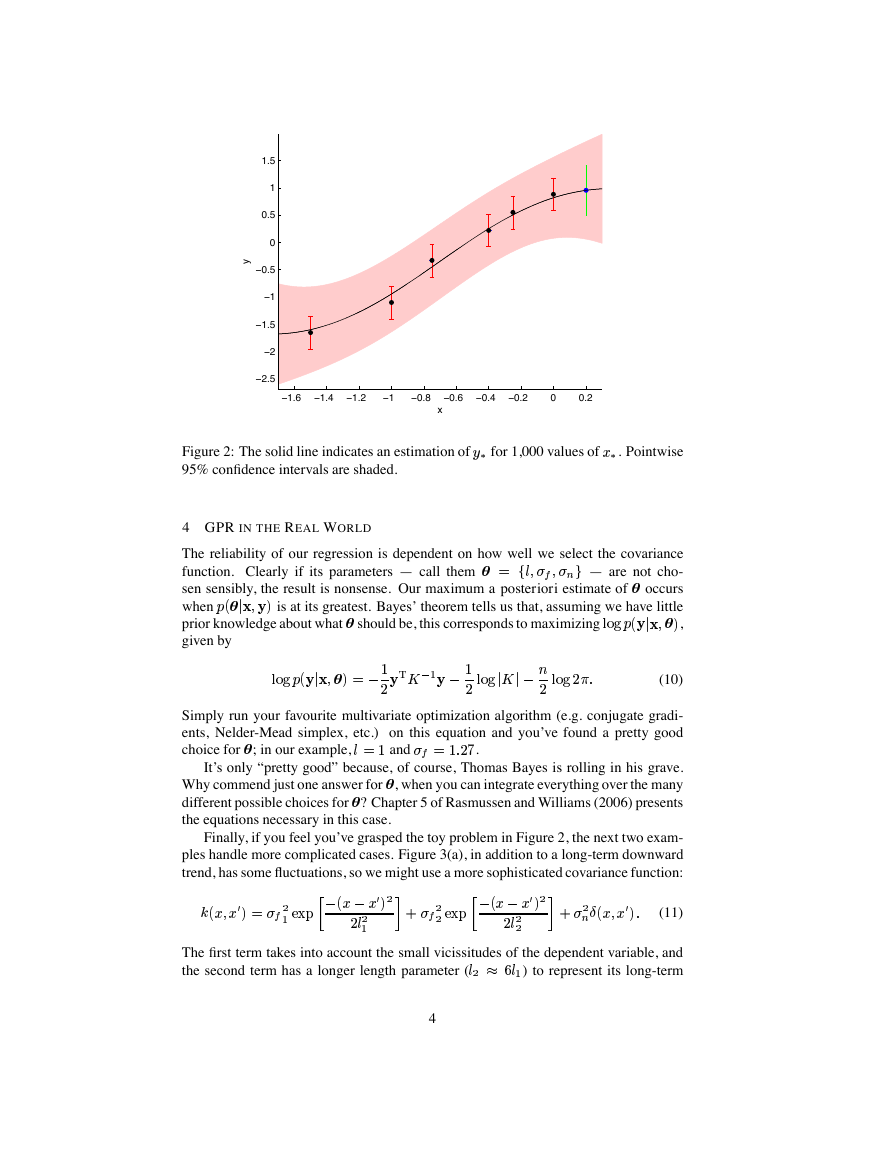

� .

estimation of the dependent variable at���

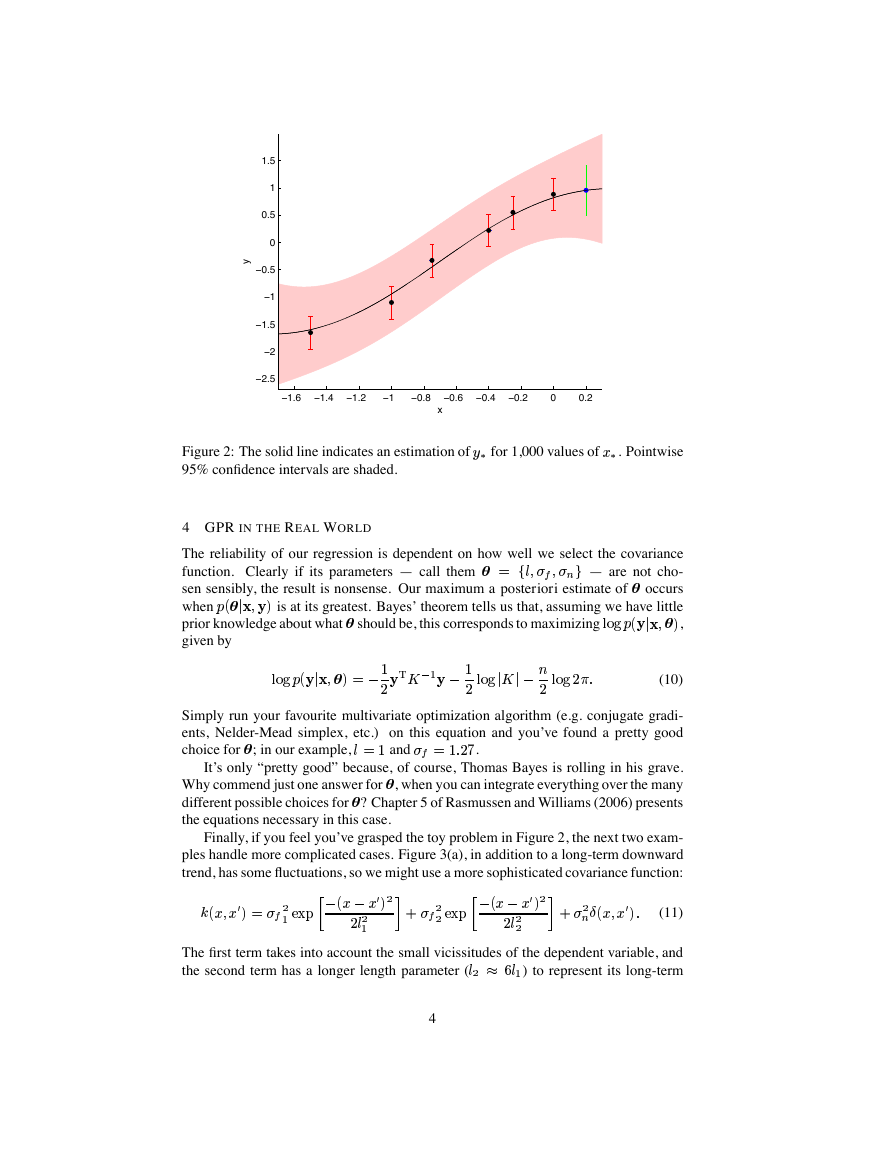

�0�G�

of the� axis, as shown in Figure 2. (In fact, equivalently, we could avoid the repetition

by performing the above procedure once with suitably largerN

�_� matrices.

In this case, since there are 1,000 test points spread over the�

�_� would be

of size 1,000 1,000.) Rather than plotting simple error bars, we’ve decided to plot

� , giving a 95% confidence interval.

%uG

r;sKt

�.

]��

3 and< (more

We can repeat the above procedure for various other points spread over some portion

3. Figure 1 shows a data point with a question mark underneath, representing the

]�

� andN

axis,N

3

8

�

8

N

�

N

�

N

�

�

�

�

n

�

b

E

�

N

�

�

�

�

N

:

N

�

�

N

g

�

�

�

�

�

N

�

�

�

�

�

N

:

N

�

N

p

�

N

g

�

�

Z

�

�

:

:

:

}

N

�

O

Q

Q

Q

Q

Q

Q

R

x

x

�

x

�

�

x

x

x

�

x

x

�

x

�

x

x

x

�

�

�

x

x

�

x

x

x

�

x

�

y

x

x

x

x

�

x

�

�

�

x

x

x

y

x

x

�

�

�

x

x

W

W

W

W

W

Y

�

x

N

�

�

Z

x

�

x

�

x

�

x

�

�

�

�

�

x

�

�

�

�

�

1.5

1

0.5

0

y

−0.5

−1

−1.5

−2

−2.5

−1.6

−1.4

−1.2

−1

−0.8

−0.6

−0.4

−0.2

0

0.2

x

is at its greatest. Bayes’ theorem tells us that, assuming we have little

w�

given by

o"m

95% confidence intervals are shaded.

4 GPR IN THE REAL WORLD

The reliability of our regression is dependent on how well we select the covariance

Simply run your favourite multivariate optimization algorithm (e.g. conjugate gradi-

ents, Nelder-Mead simplex, etc.) on this equation and you’ve found a pretty good

� for 1,000 values of�

��<�i/

Figure 2: The solid line indicates an estimation of�

� . Pointwise

�#/G$�& — are not cho-

function. Clearly if its parameters — call them

sen sensibly, the result is nonsense. Our maximum a posteriori estimate of occurs

whenm

w�

� ,

prior knowledge about what should be, this corresponds to maximizing%"m

NLp

w�

�.�

�K�

%

%

���oz .

x and/

choice for ; in our example,<-�

Why commend just one answer for

different possible choices for ? Chapter 5 of Rasmussen and Williams (2006) presents

�'+,�

�'+,�

�I�

�-�*�

4I576J8

�K<

�K<

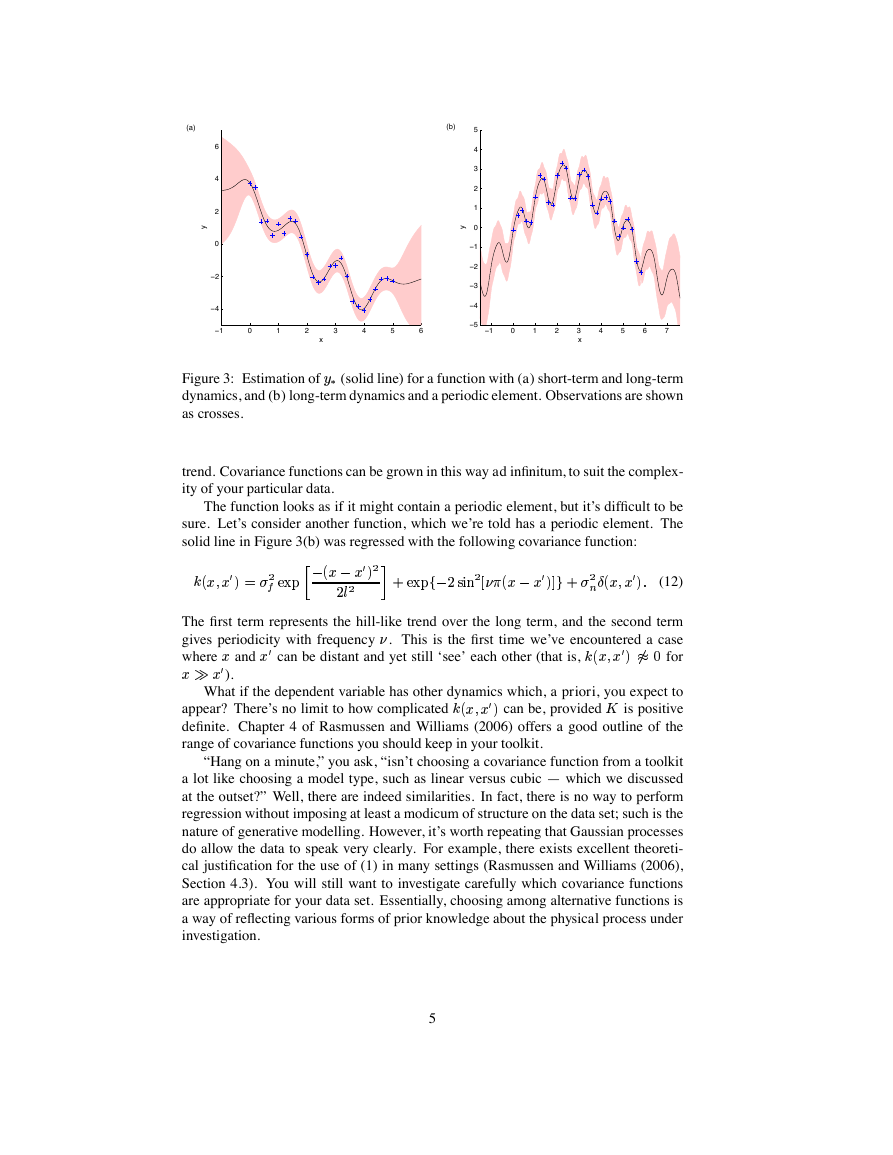

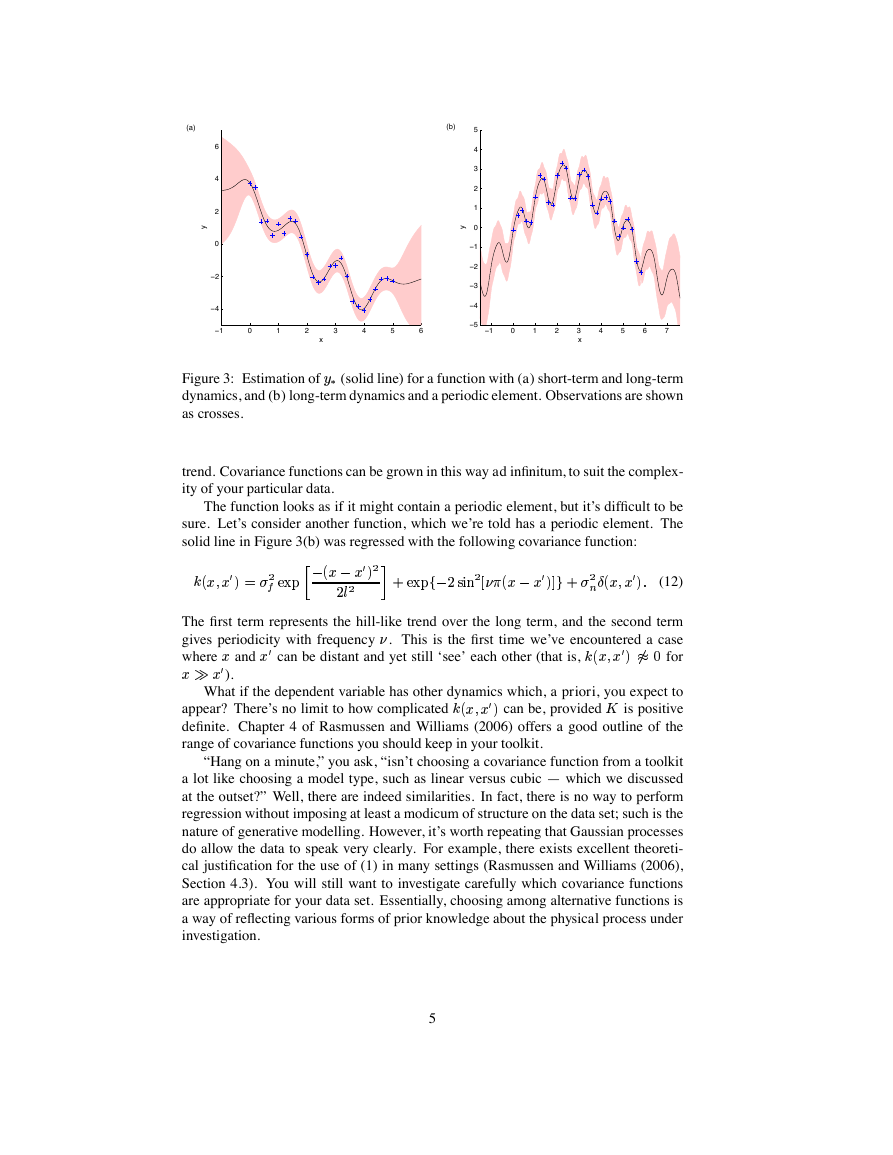

>u%<� ) to represent its long-term

the second term has a longer length parameter (<

Finally, if you feel you’ve grasped the toy problem in Figure 2, the next two exam-

ples handle more complicated cases. Figure 3(a), in addition to a long-term downward

trend, has some fluctuations, so we might use a more sophisticated covariance function:

It’s only “pretty good” because, of course, Thomas Bayes is rolling in his grave.

, when you can integrate everything over the many

The first term takes into account the small vicissitudes of the dependent variable, and

�.��/

��4I5768

(10)

(11)

the equations necessary in this case.

/

4

L/

�-�#�

3

�

n

�

�

�

�

n

�

�

n

:

x

�

�

g

�

�

:

x

�

n

N

n

:

�

�

3

�

x

(

�

+

3

1

:

�

�

:

1

1

�

=

3

1

1

:

�

�

:

1

1

1

=

1

$

M

�

+

1

�

(a)

y

6

4

2

0

−2

−4

−1

0

1

2

3

4

5

6

x

(b)

y

5

4

3

2

1

0

−1

−2

−3

−4

−5

−1

0

1

2

4

5

6

7

3

x

Figure 3: Estimation of�

dynamics, and (b) long-term dynamics and a periodic element. Observations are shown

as crosses.

� (solid line) for a function with (a) short-term and long-term

trend. Covariance functions can be grown in this way ad infinitum, to suit the complex-

ity of your particular data.

The function looks as if it might contain a periodic element, but it’s difficult to be

sure. Let’s consider another function, which we’re told has a periodic element. The

solid line in Figure 3(b) was regressed with the following covariance function:

The first term represents the hill-like trend over the long term, and the second term

. This is the first time we’ve encountered a case

for

�'+,�

�^&

L/

a

�#71%

�)�*�

�I�

�)�*�

$�M

4I576

�K<

gives periodicity with frequency

where� and�'+ can be distant and yet still ‘see’ each other (that is,(

�-�*��+,�?

>¡�

��¢£��+ ).

�)�*�'+,� can be, providedN

appear? There’s no limit to how complicated(

What if the dependent variable has other dynamics which, a priori, you expect to

is positive

definite. Chapter 4 of Rasmussen and Williams (2006) offers a good outline of the

range of covariance functions you should keep in your toolkit.

“Hang on a minute,” you ask, “isn’t choosing a covariance function from a toolkit

a lot like choosing a model type, such as linear versus cubic — which we discussed

at the outset?” Well, there are indeed similarities. In fact, there is no way to perform

regression without imposing at least a modicum of structure on the data set; such is the

nature of generative modelling. However, it’s worth repeating that Gaussian processes

do allow the data to speak very clearly. For example, there exists excellent theoreti-

cal justification for the use of (1) in many settings (Rasmussen and Williams (2006),

Section 4.3). You will still want to investigate carefully which covariance functions

are appropriate for your data set. Essentially, choosing among alternative functions is

a way of reflecting various forms of prior knowledge about the physical process under

investigation.

�.�v/

4I576J8

(12)

5

(

�

+

1

3

:

�

�

:

1

1

=

�

:

�

�

:

�

+

1

�

+

�

�

�

5 DISCUSSION

We’ve presented a brief outline of the mathematics of GPR, but practical implementa-

tion of the above ideas requires the solution of a few algorithmic hurdles as opposed

to those of data analysis. If you aren’t a good computer programmer, then the code

for Figures 1 and 2 is at ftp://ftp.robots.ox.ac.uk/pub/outgoing/mebden/misc/GPtut.zip,

and more general code can be found at http://www.gaussianprocess.org/gpml.

We’ve merely scratched the surface of a powerful technique (MacKay, 1998). First,

although the focus has been on one-dimensional inputs, it’s simple to accept those of

higher dimension. Whereas� would then change from a scalar to a vector,(

can be replaced with functions of�

would remain a scalar and so the maths overall would be virtually unchanged. Second,

the zero vector representing the mean of the multivariate Gaussian distribution in (6)

. Third, in addition to their use in regression, GPs

are applicable to integration, global optimization, mixture-of-experts models, unsuper-

vised learning models, and more — see Chapter 9 of Rasmussen and Williams (2006).

The next tutorial will focus on their use in classification.

�)�*�

REFERENCES

MacKay, D. (1998).

In C.M. Bishop (Ed.), Neural networks and machine learning.

(NATO ASI Series, Series F, Computer and Systems Sciences, Vol. 168, pp. 133-

166.) Dordrecht: Kluwer Academic Press.

Rasmussen, C. and C. Williams (2006). Gaussian Processes for Machine Learning.

MIT Press.

Sivia, D. and J. Skilling (2006). Data Analysis: A Bayesian Tutorial (second ed.).

Oxford Science Publications.

APPENDIX

6

same as writing

taken from some multivariate Gaussian distribution with zero

arbitrarily into two

Imagine a data sample¤

. Now decompose¤

mean and a covariance given by matrix¥

f-�

� would be the

consecutive subvectors¦ and§ — in other words, writing¤

Eed�f"�

8¨

=Db

=«j

are the corresponding bits and pieces that make up¥

where¨

, and©

,ª

Interestingly, the conditional distribution of§ given¦

If the covariance matrix¥ were diagonal or even block diagonal, then knowing¦

f"�

� . On the other hand, if

: specifically,§

wouldn’t tell us anything about§

© were nonzero, then some matrix algebra leads us to

©¬¨

©¬¨

The mean,©¬¨

in¥

ance,ª

, is the ‘Schur complement of¨

©¬¨

In summary, if we know some of¤

the rest of¤ might be, thanks to the revealing off-diagonal elements of¥

, is known as the ‘matrix of regression coefficients’, and the vari-

, we can use that to inform our estimate of what

is itself Gaussian-distributed.

�H�

’.

(13)

(14)

.

.

�

+

�

b

E

�

¥

8

¦

§

©

g

©

ª

�

n

¦

b

E

�

ª

§

n

¦

b

E

�

p

�

¦

�

ª

:

p

�

©

g

p

�

¦

:

p

�

©

g

�

Gaussian Processes for Classification:

A Quick Introduction

M. Ebden, August 2008

Prerequisite reading: Gaussian Processes for Regression

1 OVERVIEW

:Bx

steps instead of one:

Writing these two steps schematically,

, are linked to

involves two

for the other. In principle, we could try fitting a

As mentioned in the previous document, GPs can be applied to problems other than

represent the probability of a data point belonging to one of say two types, and voil`a,

we can ascertain classifications. This is the subject of the current document.

, it can

���

regression. For example, if the output of a GP is squashed onto the range

The big difference between GPR and GPC is how the output data,�

the underlying function outputs, . They are no longer connected simply via a noise

process as in (2) in the previous document, but are instead now discrete: say�L�

precisely for one class and��

GP that produces an output of approximatelyx for some values of� and approximately

:Bx for others, simulating this discretization. Instead, we interpose the GP between the

data and a squashing function; then, classification of a new data point�

1. Evaluate a ‘latent function’� which models qualitatively how the likelihood of

one class versus the other changes over the� axis. This is the GP.

2. Squash the output of this latent function onto

using any sigmoidal function,

�®�

�.�

� .

�®�

latent function,�

data,�

class probability,

�%n

:H:�:�:*¯

�®�

� . Although other forms will do, here

Before we get started, a quick note on

�®�

� . This± -shaped

we will prescribe it to be the cumulative Gaussian distribution,°

�®�

�®�

function satisfies our needs, mapping high�

x , and low�

into

into

�²>

�«>�� .

self that, if there were no noise (/G$D�v� ), the two equations could be rewritten as

f-�

�_�

=j

NLp

�H�

The next section will walk you through more slowly how such a classifier operates.

Section 3 explains how to train the classifier, so perhaps we’re presenting things in

reverse order! Section 4 handles classification when there are more than two classes.

A second quick note, revisiting (6) and (7) in the first document: confirm for your-

prob�

�D�

GP:7¯

.

(1)

(2)

�G�

sigmoid

and

NLp

�_�

1

x

x

�

x

x

n

�

�

�

�

�

�

8

�

�

=

b

E

d

8

N

N

g

�

N

�

N

�

�

n

b

E

�

N

�

�

�

N

:

N

�

�

N

g

�

�

2 USING THE CLASSIFIER

, and their corresponding expert-

. And suppose that in the process we formed some GP outputs

Suppose we’ve trained a classifier from�

labelled output data,�

corresponding to these data, which have some uncertainty but mean values given by

. We’re now ready to input a new data point,��� , in the left side of our schematic, in

order to determine at the other end the probability2� of its class membership.

In the first step, finding the probabilitym

�®�

NLp

�I�

�²�E

(N

+ will be explained soon, but for now consider it to be very similar toN

second step, we squash�

�®�

� � �

to find the probability of class membership,��¬�´

�®�

� � . The expected value is

�®�

�´µ¶

This is the integral of a cumulative Gaussian times a Gaussian, which can be solved

analytically. By Section 3.9 of Rasmussen and Williams (2006), the solution is:

is similar to GPR, i.e. we adapt (2):

.) In the

(3)

(4)

(5)

input data,w

�®�

�i�

�®�

�%n

r;sKt

�®�

�*·

�!�;j

An example is depicted in Figure 1.

3 TRAINING THE GP IN THE CLASSIFIER

Let’s focus on the two factors in the numerator. Assuming the data set is i.i.d.,

Among the many GPs which could be partnered with our data set, naturally we’d

ducing (3), the first step of the classifier. The second step of the classifier does not

require training as it’s a fixed sigmoidal function.

GP, how likely they are to be appropriate for the training data can be decomposed using

Bayes’ theorem:

Our objective now is to find³

andN

+ , so that we know everything about the GP pro-

like to compare their usefulness quantitatively. Considering the outputs of a certain

w-�

��

w�

�²�

�I�

¹º

Dropping the subscripts in the product,m

�®�

�®�

� . Specifically,m

� by definition, and to complete the picture,

is

���

�?�

� . A terse way of combining these two cases is to write

:Bx

x»:

�.�

� .

w-� . This is related to the output of the

The second factor in the numerator ism

w-�

first step of our schematic drawing, but first we’re interested in the value ofm

w�

� . This occurs when the derivative

which maximizes the posterior probabilitym

is informed by our sigmoid function,

�´�

�®�

w-�

(6)

(7)

2

³

�

n

�

m

�

n

�

N

�

�

³

�

N

:

N

�

�

N

+

�

p

�

N

g

�

�

°

�

�

�

m

�

�

�

�

�

°

d

�

�

x

�

m

�

n

�

m

�

�

n

�

m

�

n

m

�

�

n

�

m

�

�

n

$

¸

�

m

�

�

¹

n

�

¹

�

�

n

�

�

�

x

n

�

�

m

�

n

�

m

�

�

n

�

°

�

�

�

�

n

�

n

�

n

�

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc