See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/2985446

Gradient-Based Learning Applied to Document Recognition

READS

12,451

Y. Bengio

Université de Montréal

680 PUBLICATIONS 91,012 CITATIONS

SEE PROFILE

Article in Proceedings of the IEEE · December 1998

DOI: 10.1109/5.726791 · Source: IEEE Xplore

CITATIONS

10,313

4 authors, including:

Yann Lecun

New York University

522 PUBLICATIONS 49,788 CITATIONS

SEE PROFILE

Patrick Haffner

Interactions LLC

81 PUBLICATIONS 14,069 CITATIONS

SEE PROFILE

Some of the authors of this publication are also working on these related projects:

MoDeep View project

Parsing View project

All content following this page was uploaded by Yann Lecun on 23 May 2013.

The user has requested enhancement of the downloaded file.

�

YannLeCun,LeonBottou,YoshuaBengio,andPatrickHaner

I.Introduction

PROC.OFTHEIEEE,NOVEMBER� �

�

Gradient-BasedLearningAppliedtoDocument

Recognition

Abstract|

MultilayerNeuralNetworkstrainedwiththebackpropa-

gationalgorithmconstitutethebestexampleofasuccessful

Overthelastseveralyears,machinelearningtechniques,

Gradient-BasedLearningtechnique.Givenanappropriate

particularlywhenappliedtoneuralnetworks,haveplayed

networkarchitecture,Gradient-BasedLearningalgorithms

anincreasinglyimportantroleinthedesignofpattern

canbeusedtosynthesizeacomplexdecisionsurfacethatcan

recognitionsystems.Infact,itcouldbearguedthatthe

classifyhigh-dimensionalpatternssuchashandwrittenchar-

acters,withminimalpreprocessing.Thispaperreviewsvar-

availabilityoflearningtechniqueshasbeenacrucialfac-

iousmethodsappliedtohandwrittencharacterrecognition

torintherecentsuccessofpatternrecognitionapplica-

andcomparesthemonastandardhandwrittendigitrecog-

tionssuchascontinuousspeechrecognitionandhandwrit-

nitiontask.ConvolutionalNeuralNetworks,thatarespecif-

icallydesignedtodealwiththevariabilityof�Dshapes,are

ingrecognition.

showntooutperformallothertechniques.

Themainmessageofthispaperisthatbetterpattern

Real-lifedocumentrecognitionsystemsarecomposed

recognitionsystemscanbebuiltbyrelyingmoreonauto-

ofmultiplemodulesincludingeldextraction,segmenta-

tion,recognition,andlanguagemodeling.Anewlearning

maticlearning,andlessonhand-designedheuristics.This

paradigm,calledGraphTransformerNetworks(GTN),al-

ismadepossiblebyrecentprogressinmachinelearning

lowssuchmulti-modulesystemstobetrainedgloballyusing

andcomputertechnology.Usingcharacterrecognitionas

Gradient-Basedmethodssoastominimizeanoverallper-

acasestudy,weshowthathand-craftedfeatureextrac-

formancemeasure.

Twosystemsforon-linehandwritingrecognitionarede-

tioncanbeadvantageouslyreplacedbycarefullydesigned

scribed.Experimentsdemonstratetheadvantageofglobal

learningmachinesthatoperatedirectlyonpixelimages.

training,andtheexibilityofGraphTransformerNetworks.

Usingdocumentunderstandingasacasestudy,weshow

AGraphTransformerNetworkforreadingbankcheckis

thatthetraditionalwayofbuildingrecognitionsystemsby

alsodescribed.ItusesConvolutionalNeuralNetworkchar-

acterrecognizerscombinedwithglobaltrainingtechniques

manuallyintegratingindividuallydesignedmodulescanbe

toprovidesrecordaccuracyonbusinessandpersonalchecks.

replacedbyauniedandwell-principleddesignparadigm,

Itisdeployedcommerciallyandreadsseveralmillionchecks

calledGraphTransformerNetworks,thatallowstraining

perday.

allthemodulestooptimizeaglobalperformancecriterion.

Keywords|NeuralNetworks,OCR,DocumentRecogni-

tion,MachineLearning,Gradient-BasedLearning,Convo-

Sincetheearlydaysofpatternrecognitionithasbeen

lutionalNeuralNetworks,GraphTransformerNetworks,Fi-

knownthatthevariabilityandrichnessofnaturaldata,

niteStateTransducers.Nomenclature

beitspeech,glyphs,orothertypesofpatterns,makeit

almostimpossibletobuildanaccuraterecognitionsystem

entirelybyhand.Consequently,mostpatternrecognition

systemsarebuiltusingacombinationofautomaticlearn-

GTGraphtransformer.

ingtechniquesandhand-craftedalgorithms.Theusual

GTNGraphtransformernetwork.

methodofrecognizingindividualpatternsconsistsindivid-

HMMHiddenMarkovmodel.

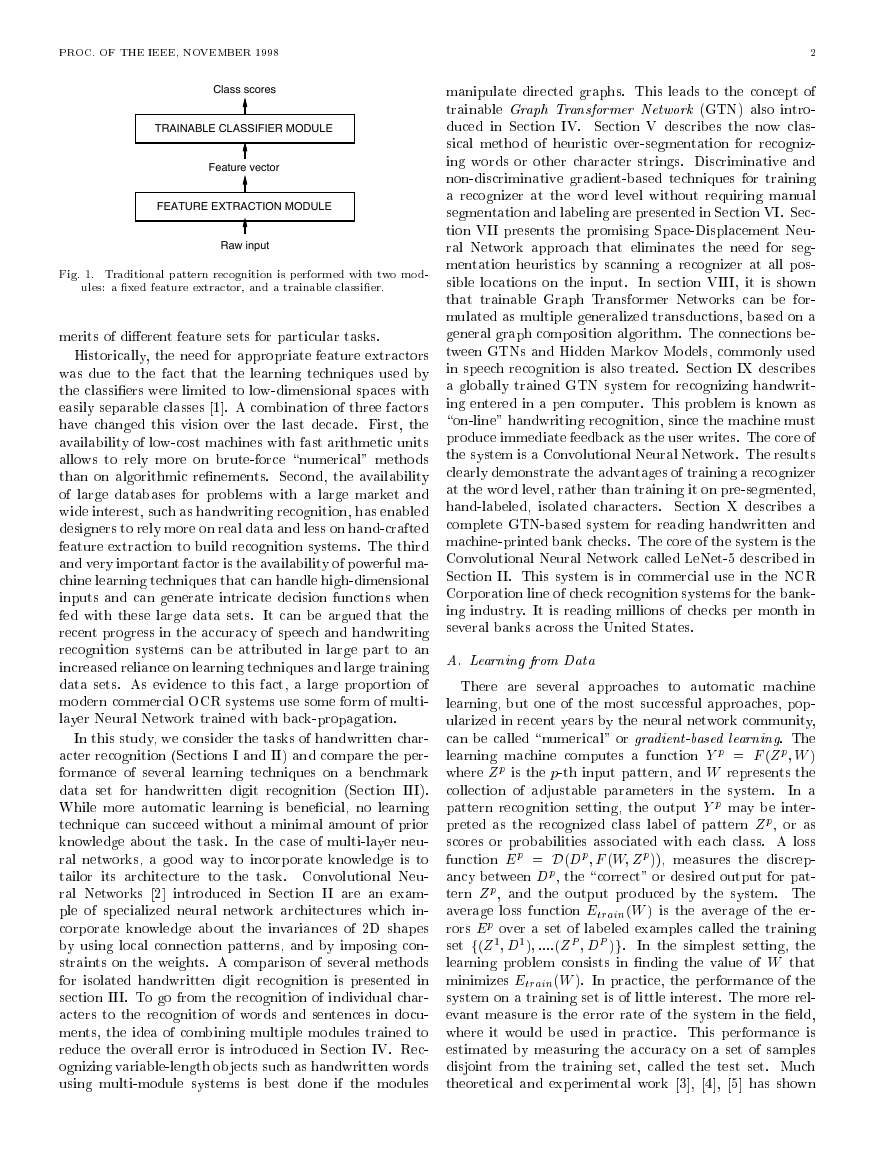

ingthesystemintotwomainmodulesshowningure�.

HOSHeuristicoversegmentation.

Therstmodule,calledthefeatureextractor,transforms

K-NNK-nearestneighbor.

theinputpatternssothattheycanberepresentedbylow-

NNNeuralnetwork.

dimensionalvectorsorshortstringsofsymbolsthat(a)can

OCROpticalcharacterrecognition.

beeasilymatchedorcompared,and(b)arerelativelyin-

PCAPrincipalcomponentanalysis.

variantwithrespecttotransformationsanddistortionsof

RBFRadialbasisfunction.

theinputpatternsthatdonotchangetheirnature.The

RS-SVMReduced-setsupportvectormethod.

featureextractorcontainsmostofthepriorknowledgeand

SDNNSpacedisplacementneuralnetwork.

isratherspecictothetask.Itisalsothefocusofmostof

SVMSupportvectormethod.

thedesigneort,becauseitisoftenentirelyhand-crafted.

TDNNTimedelayneuralnetwork.

Theclassier,ontheotherhand,isoftengeneral-purpose

V-SVMVirtualsupportvectormethod.

andtrainable.Oneofthemainproblemswiththisap-

proachisthattherecognitionaccuracyislargelydeter-

ImagePro-

arewith

Speech

the

The

authors

and

minedbytheabilityofthedesignertocomeupwithan

cessing

Services

Research

Laboratory,

AT&T

Labs-

appropriatesetoffeatures.Thisturnsouttobeadaunt-

Research,���SchulzDriveRedBank,NJ�����.

E-mail:

fyann,leonb,yoshua,hanerg@research.att.com.

YoshuaBengio

ingtaskwhich,unfortunately,mustberedoneforeachnew

isalsowiththeDepartementd'InformatiqueetdeRecherche

problem.Alargeamountofthepatternrecognitionliter-

Operationelle,UniversitedeMontreal,C.P.����Succ.Centre-Ville,

� ��ChemindelaTour,Montreal,Quebec,CanadaH�C�J�.

atureisdevotedtodescribingandcomparingtherelative

�

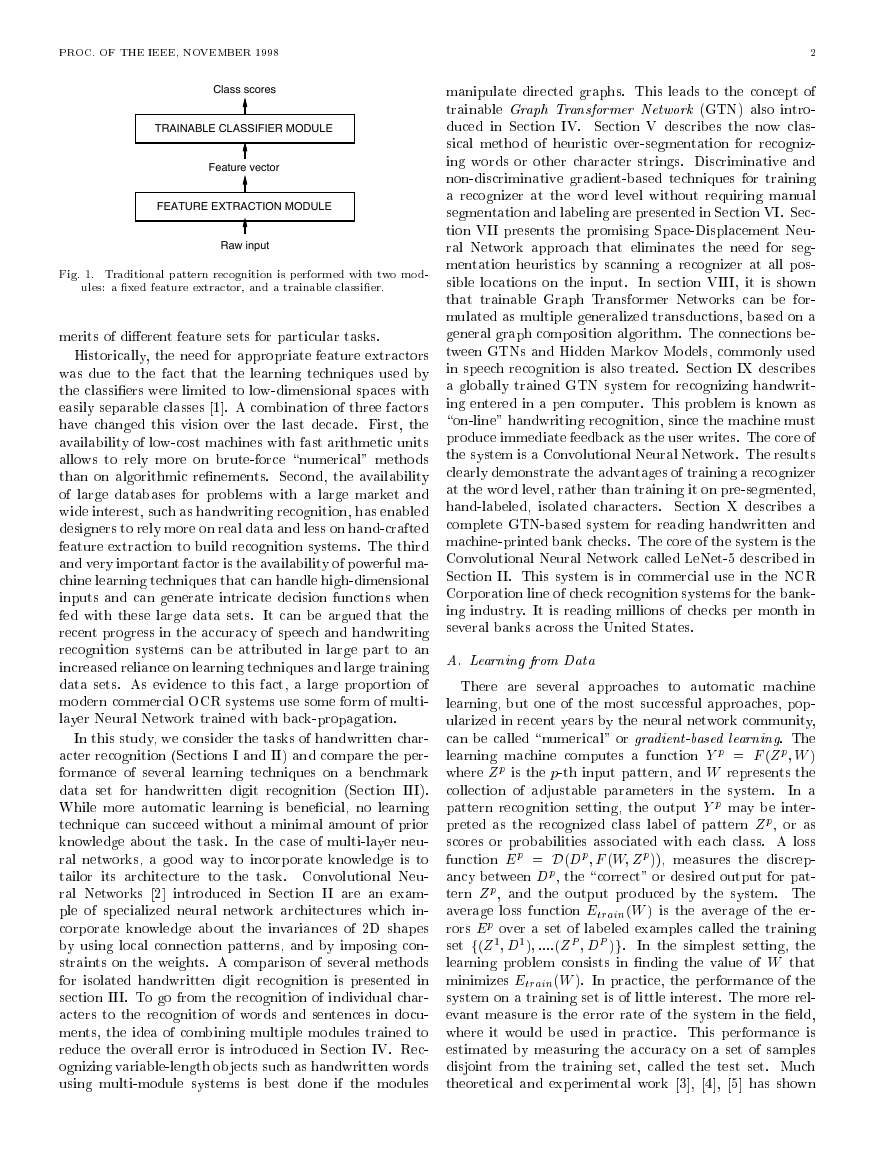

Class scores

Feature vector

Raw input

FEATURE EXTRACTION MODULE

TRAINABLE CLASSIFIER MODULE

PROC.OFTHEIEEE,NOVEMBER� �

Fig.�.Traditionalpatternrecognitionisperformedwithtwomod-

ules:axedfeatureextractor,andatrainableclassier.

meritsofdierentfeaturesetsforparticulartasks.

Historically,theneedforappropriatefeatureextractors

wasduetothefactthatthelearningtechniquesusedby

theclassierswerelimitedtolow-dimensionalspaceswith

easilyseparableclasses[�].Acombinationofthreefactors

havechangedthisvisionoverthelastdecade.First,the

availabilityoflow-costmachineswithfastarithmeticunits

allowstorelymoreonbrute-force\numerical"methods

thanonalgorithmicrenements.Second,theavailability

oflargedatabasesforproblemswithalargemarketand

wideinterest,suchashandwritingrecognition,hasenabled

designerstorelymoreonrealdataandlessonhand-crafted

featureextractiontobuildrecognitionsystems.Thethird

andveryimportantfactoristheavailabilityofpowerfulma-

chinelearningtechniquesthatcanhandlehigh-dimensional

inputsandcangenerateintricatedecisionfunctionswhen

fedwiththeselargedatasets.Itcanbearguedthatthe

recentprogressintheaccuracyofspeechandhandwriting

recognitionsystemscanbeattributedinlargeparttoan

increasedrelianceonlearningtechniquesandlargetraining

datasets.Asevidencetothisfact,alargeproportionof

moderncommercialOCRsystemsusesomeformofmulti-

layerNeuralNetworktrainedwithback-propagation.

Inthisstudy,weconsiderthetasksofhandwrittenchar-

acterrecognition(SectionsIandII)andcomparetheper-

formanceofseverallearningtechniquesonabenchmark

datasetforhandwrittendigitrecognition(SectionIII).

Whilemoreautomaticlearningisbenecial,nolearning

techniquecansucceedwithoutaminimalamountofprior

knowledgeaboutthetask.Inthecaseofmulti-layerneu-

ralnetworks,agoodwaytoincorporateknowledgeisto

tailoritsarchitecturetothetask.ConvolutionalNeu-

ralNetworks[�]introducedinSectionIIareanexam-

pleofspecializedneuralnetworkarchitectureswhichin-

corporateknowledgeabouttheinvariancesof�Dshapes

byusinglocalconnectionpatterns,andbyimposingcon-

straintsontheweights.Acomparisonofseveralmethods

forisolatedhandwrittendigitrecognitionispresentedin

sectionIII.Togofromtherecognitionofindividualchar-

acterstotherecognitionofwordsandsentencesindocu-

ments,theideaofcombiningmultiplemodulestrainedto

reducetheoverallerrorisintroducedinSectionIV.Rec-

ognizingvariable-lengthobjectssuchashandwrittenwords

usingmulti-modulesystemsisbestdoneifthemodules

�

manipulatedirectedgraphs.Thisleadstotheconceptof

trainableGraphTransformerNetwork(GTN)alsointro-

ducedinSectionIV.SectionVdescribesthenowclas-

sicalmethodofheuristicover-segmentationforrecogniz-

ingwordsorothercharacterstrings.Discriminativeand

non-discriminativegradient-basedtechniquesfortraining

arecognizeratthewordlevelwithoutrequiringmanual

segmentationandlabelingarepresentedinSectionVI.Sec-

tionVIIpresentsthepromisingSpace-DisplacementNeu-

ralNetworkapproachthateliminatestheneedforseg-

mentationheuristicsbyscanningarecognizeratallpos-

siblelocationsontheinput.InsectionVIII,itisshown

thattrainableGraphTransformerNetworkscanbefor-

mulatedasmultiplegeneralizedtransductions,basedona

generalgraphcompositionalgorithm.Theconnectionsbe-

tweenGTNsandHiddenMarkovModels,commonlyused

inspeechrecognitionisalsotreated.SectionIXdescribes

agloballytrainedGTNsystemforrecognizinghandwrit-

ingenteredinapencomputer.Thisproblemisknownas

\on-line"handwritingrecognition,sincethemachinemust

produceimmediatefeedbackastheuserwrites.Thecoreof

thesystemisaConvolutionalNeuralNetwork.Theresults

clearlydemonstratetheadvantagesoftrainingarecognizer

atthewordlevel,ratherthantrainingitonpre-segmented,

hand-labeled,isolatedcharacters.SectionXdescribesa

completeGTN-basedsystemforreadinghandwrittenand

machine-printedbankchecks.Thecoreofthesystemisthe

ConvolutionalNeuralNetworkcalledLeNet-�describedin

SectionII.ThissystemisincommercialuseintheNCR

Corporationlineofcheckrecognitionsystemsforthebank-

ingindustry.Itisreadingmillionsofcheckspermonthin

severalbanksacrosstheUnitedStates.

A.LearningfromData

Thereareseveralapproachestoautomaticmachine

learning,butoneofthemostsuccessfulapproaches,pop-

ularizedinrecentyearsbytheneuralnetworkcommunity,

canbecalled\numerical"orgradient-basedlearning.The

learningmachinecomputesafunctionYp=F(Zp;W)

whereZpisthep-thinputpattern,andWrepresentsthe

collectionofadjustableparametersinthesystem.

Ina

patternrecognitionsetting,theoutputYpmaybeinter-

pretedastherecognizedclasslabelofpatternZp,oras

scoresorprobabilitiesassociatedwitheachclass.Aloss

functionEp=D(Dp;F(W;Zp)),measuresthediscrep-

ancybetweenDp,the\correct"ordesiredoutputforpat-

ternZp,andtheoutputproducedbythesystem.The

averagelossfunctionEtrain(W)istheaverageoftheer-

rorsEpoverasetoflabeledexamplescalledthetraining

setf(Z�;D�);::::(ZP;DP)g.

Inthesimplestsetting,the

learningproblemconsistsinndingthevalueofWthat

minimizesEtrain(W).Inpractice,theperformanceofthe

systemonatrainingsetisoflittleinterest.Themorerel-

evantmeasureistheerrorrateofthesystemintheeld,

whereitwouldbeusedinpractice.Thisperformanceis

estimatedbymeasuringtheaccuracyonasetofsamples

disjointfromthetrainingset,calledthetestset.Much

theoreticalandexperimentalwork[�],[�],[�]hasshown

�

PROC.OFTHEIEEE,NOVEMBER� �

thatthegapbetweentheexpectederrorrateonthetest

setEtestandtheerrorrateonthetrainingsetEtrainde-

creaseswiththenumberoftrainingsamplesapproximately

as

EtestEtrain=k(h=P)

(�)

wherePisthenumberoftrainingsamples,hisameasureof

\eectivecapacity"orcomplexityofthemachine[�],[�],

isanumberbetween�:�and�:�,andkisaconstant.This

gapalwaysdecreaseswhenthenumberoftrainingsamples

increases.Furthermore,asthecapacityhincreases,Etrain

decreases.Therefore,whenincreasingthecapacityh,there

isatrade-obetweenthedecreaseofEtrainandthein-

creaseofthegap,withanoptimalvalueofthecapacityh

thatachievesthelowestgeneralizationerrorEtest.Most

learningalgorithmsattempttominimizeEtrainaswellas

someestimateofthegap.Aformalversionofthisiscalled

structuralriskminimization[�],[�],andisbasedonden-

ingasequenceoflearningmachinesofincreasingcapacity,

correspondingtoasequenceofsubsetsoftheparameter

spacesuchthateachsubsetisasupersetoftheprevious

subset.Inpracticalterms,StructuralRiskMinimization

isimplementedbyminimizingEtrain+H(W),wherethe

functionH(W)iscalledaregularizationfunction,andis

aconstant.H(W)ischosensuchthatittakeslargeval-

uesonparametersWthatbelongtohigh-capacitysubsets

oftheparameterspace.MinimizingH(W)ineectlim-

itsthecapacityoftheaccessiblesubsetoftheparameter

space,therebycontrollingthetradeobetweenminimiz-

ingthetrainingerrorandminimizingtheexpectedgap

betweenthetrainingerrorandtesterror.

B.Gradient-BasedLearning

Thegeneralproblemofminimizingafunctionwithre-

specttoasetofparametersisattherootofmanyissuesin

computerscience.Gradient-BasedLearningdrawsonthe

factthatitisgenerallymucheasiertominimizeareason-

ablysmooth,continuousfunctionthanadiscrete(combi-

natorial)function.Thelossfunctioncanbeminimizedby

estimatingtheimpactofsmallvariationsoftheparame-

tervaluesonthelossfunction.Thisismeasuredbythe

gradientofthelossfunctionwithrespecttotheparam-

eters.Ecientlearningalgorithmscanbedevisedwhen

thegradientvectorcanbecomputedanalytically(asop-

posedtonumericallythroughperturbations).Thisisthe

basisofnumerousgradient-basedlearningalgorithmswith

continuous-valuedparameters.Intheproceduresdescribed

inthisarticle,thesetofparametersWisareal-valuedvec-

tor,withrespecttowhichE(W)iscontinuous,aswellas

dierentiablealmosteverywhere.Thesimplestminimiza-

tionprocedureinsuchasettingisthegradientdescent

algorithmwhereWisiterativelyadjustedasfollows:

Wk=Wk�@E(W)

(�)

@W:

Inthesimplestcase,isascalarconstant.Moresophisti-

catedproceduresusevariable,orsubstituteitforadiag-

onalmatrix,orsubstituteitforanestimateoftheinverse

�

HessianmatrixasinNewtonorQuasi-Newtonmethods.

TheConjugateGradientmethod[�]canalsobeused.

However,AppendixBshowsthatdespitemanyclaims

tothecontraryintheliterature,theusefulnessofthese

second-ordermethodstolargelearningmachinesisvery

limited.Apopularminimizationprocedureisthestochasticgra-

dientalgorithm,alsocalledtheon-lineupdate.Itconsists

inupdatingtheparametervectorusinganoisy,orapprox-

imated,versionoftheaveragegradient.Inthemostcom-

moninstanceofit,Wisupdatedonthebasisofasingle

sample:

Wk=Wk�@Epk(W)

(�)

@W

Withthisproceduretheparametervectoructuates

aroundanaveragetrajectory,butusuallyconvergesconsid-

erablyfasterthanregulargradientdescentandsecondor-

dermethodsonlargetrainingsetswithredundantsamples

(suchasthoseencounteredinspeechorcharacterrecogni-

tion).ThereasonsforthisareexplainedinAppendixB.

Thepropertiesofsuchalgorithmsappliedtolearninghave

beenstudiedtheoreticallysincethe� ��'s[ ],[��],[��],

butpracticalsuccessesfornon-trivialtasksdidnotoccur

untilthemideighties.

C.GradientBack-Propagation

Gradient-BasedLearningprocedureshavebeenused

sincethelate� ��'s,buttheyweremostlylimitedtolin-

earsystems[�].Thesurprisingusefulnessofsuchsim-

plegradientdescenttechniquesforcomplexmachinelearn-

ingtaskswasnotwidelyrealizeduntilthefollowingthree

eventsoccurred.Thersteventwastherealizationthat,

despiteearlywarningstothecontrary[��],thepresence

oflocalminimainthelossfunctiondoesnotseemto

beamajorprobleminpractice.Thisbecameapparent

whenitwasnoticedthatlocalminimadidnotseemto

beamajorimpedimenttothesuccessofearlynon-linear

gradient-basedLearningtechniquessuchasBoltzmannma-

chines[��],[��].Thesecondeventwasthepopularization

byRumelhart,HintonandWilliams[��]andothersofa

simpleandecientprocedure,theback-propagational-

gorithm,tocomputethegradientinanon-linearsystem

composedofseverallayersofprocessing.Thethirdevent

wasthedemonstrationthattheback-propagationproce-

dureappliedtomulti-layerneuralnetworkswithsigmoidal

unitscansolvecomplicatedlearningtasks.Thebasicidea

ofback-propagationisthatgradientscanbecomputede-

cientlybypropagationfromtheoutputtotheinput.This

ideawasdescribedinthecontroltheoryliteratureofthe

earlysixties[��],butitsapplicationtomachinelearning

wasnotgenerallyrealizedthen.

Interestingly,theearly

derivationsofback-propagationinthecontextofneural

networklearningdidnotusegradients,but\virtualtar-

gets"forunitsinintermediatelayers[��],[��],orminimal

disturbancearguments[� ].TheLagrangeformalismused

inthecontroltheoryliteratureprovidesperhapsthebest

rigorousmethodforderivingback-propagation[��],andfor

derivinggeneralizationsofback-propagationtorecurrent

�

PROC.OFTHEIEEE,NOVEMBER� �

networks[��],andnetworksofheterogeneousmodules[��].

Asimplederivationforgenericmulti-layersystemsisgiven

inSectionI-E.

Thefactthatlocalminimadonotseemtobeaproblem

formulti-layerneuralnetworksissomewhatofatheoretical

mystery.Itisconjecturedthatifthenetworkisoversized

forthetask(asisusuallythecaseinpractice),thepresence

of\extradimensions"inparameterspacereducestherisk

ofunattainableregions.Back-propagationisbyfarthe

mostwidelyusedneural-networklearningalgorithm,and

probablythemostwidelyusedlearningalgorithmofany

form.D.LearninginRealHandwritingRecognitionSystems

Isolatedhandwrittencharacterrecognitionhasbeenex-

tensivelystudiedintheliterature(see[��],[��]forreviews),

andwasoneoftheearlysuccessfulapplicationsofneural

networks[��].Comparativeexperimentsonrecognitionof

individualhandwrittendigitsarereportedinSectionIII.

TheyshowthatneuralnetworkstrainedwithGradient-

BasedLearningperformbetterthanallothermethods

testedhereonthesamedata.Thebestneuralnetworks,

calledConvolutionalNetworks,aredesignedtolearnto

extractrelevantfeaturesdirectlyfrompixelimages(see

SectionII).

Oneofthemostdicultproblemsinhandwritingrecog-

nition,however,isnotonlytorecognizeindividualcharac-

ters,butalsotoseparateoutcharactersfromtheirneigh-

borswithinthewordorsentence,aprocessknownasseg-

mentation.Thetechniquefordoingthisthathasbecome

the\standard"iscalledHeuristicOver-Segmentation.It

consistsingeneratingalargenumberofpotentialcuts

betweencharactersusingheuristicimageprocessingtech-

niques,andsubsequentlyselectingthebestcombinationof

cutsbasedonscoresgivenforeachcandidatecharacterby

therecognizer.Insuchamodel,theaccuracyofthesys-

temdependsuponthequalityofthecutsgeneratedbythe

heuristics,andontheabilityoftherecognizertodistin-

guishcorrectlysegmentedcharactersfrompiecesofchar-

acters,multiplecharacters,orotherwiseincorrectlyseg-

mentedcharacters.Trainingarecognizertoperformthis

taskposesamajorchallengebecauseofthedicultyincre-

atingalabeleddatabaseofincorrectlysegmentedcharac-

ters.Thesimplestsolutionconsistsinrunningtheimages

ofcharacterstringsthroughthesegmenter,andthenman-

uallylabelingallthecharacterhypotheses.Unfortunately,

notonlyisthisanextremelytediousandcostlytask,itis

alsodiculttodothelabelingconsistently.Forexample,

shouldtherighthalfofacutup�belabeledasa�oras

anon-character?shouldtherighthalfofacutup�be

labeledasa�?

Therstsolution,describedinSectionVconsistsin

trainingthesystematthelevelofwholestringsofchar-

acters,ratherthanatthecharacterlevel.Thenotionof

Gradient-BasedLearningcanbeusedforthispurpose.The

systemistrainedtominimizeanoveralllossfunctionwhich

measurestheprobabilityofanerroneousanswer.SectionV

exploresvariouswaystoensurethatthelossfunctionisdif-

�

ferentiable,andthereforelendsitselftotheuseofGradient-

BasedLearningmethods.SectionVintroducestheuseof

directedacyclicgraphswhosearcscarrynumericalinfor-

mationasawaytorepresentthealternativehypotheses,

andintroducestheideaofGTN.

ThesecondsolutiondescribedinSectionVIIistoelim-

inatesegmentationaltogether.Theideaistosweepthe

recognizerovereverypossiblelocationontheinputimage,

andtorelyonthe\characterspotting"propertyoftherec-

ognizer,i.e.itsabilitytocorrectlyrecognizeawell-centered

characterinitsinputeld,eveninthepresenceofother

charactersbesidesit,whilerejectingimagescontainingno

centeredcharacters[��],[��].Thesequenceofrecognizer

outputsobtainedbysweepingtherecognizeroverthein-

putisthenfedtoaGraphTransformerNetworkthattakes

linguisticconstraintsintoaccountandnallyextractsthe

mostlikelyinterpretation.ThisGTNissomewhatsimilar

toHiddenMarkovModels(HMM),whichmakestheap-

proachreminiscentoftheclassicalspeechrecognition[��],

[� ].Whilethistechniquewouldbequiteexpensivein

thegeneralcase,theuseofConvolutionalNeuralNetworks

makesitparticularlyattractivebecauseitallowssignicant

savingsincomputationalcost.

E.GloballyTrainableSystems

Asstatedearlier,mostpracticalpatternrecognitionsys-

temsarecomposedofmultiplemodules.Forexample,a

documentrecognitionsystemiscomposedofaeldlocator,

whichextractsregionsofinterest,aeldsegmenter,which

cutstheinputimageintoimagesofcandidatecharacters,a

recognizer,whichclassiesandscoreseachcandidatechar-

acter,andacontextualpost-processor,generallybasedon

astochasticgrammar,whichselectsthebestgrammatically

correctanswerfromthehypothesesgeneratedbytherecog-

nizer.Inmostcases,theinformationcarriedfrommodule

tomoduleisbestrepresentedasgraphswithnumericalin-

formationattachedtothearcs.Forexample,theoutput

oftherecognizermodulecanberepresentedasanacyclic

graphwhereeacharccontainsthelabelandthescoreof

acandidatecharacter,andwhereeachpathrepresenta

alternativeinterpretationoftheinputstring.Typically,

eachmoduleismanuallyoptimized,orsometimestrained,

outsideofitscontext.Forexample,thecharacterrecog-

nizerwouldbetrainedonlabeledimagesofpre-segmented

characters.Thenthecompletesystemisassembled,and

asubsetoftheparametersofthemodulesismanuallyad-

justedtomaximizetheoverallperformance.Thislaststep

isextremelytedious,time-consuming,andalmostcertainly

suboptimal.

Abetteralternativewouldbetosomehowtraintheen-

tiresystemsoastominimizeaglobalerrormeasuresuchas

theprobabilityofcharactermisclassicationsatthedocu-

mentlevel.Ideally,wewouldwanttondagoodminimum

ofthisgloballossfunctionwithrespecttoalltheparam-

etersinthesystem.IfthelossfunctionEmeasuringthe

performancecanbemadedierentiablewithrespecttothe

system'stunableparametersW,wecanndalocalmin-

imumofEusingGradient-BasedLearning.However,at

�

PROC.OFTHEIEEE,NOVEMBER� �

rstglance,itappearsthatthesheersizeandcomplexity

ofthesystemwouldmakethisintractable.

ToensurethatthegloballossfunctionEp(Zp;W)isdif-

ferentiable,theoverallsystemisbuiltasafeed-forwardnet-

workofdierentiablemodules.Thefunctionimplemented

byeachmodulemustbecontinuousanddierentiableal-

mosteverywherewithrespecttotheinternalparametersof

themodule(e.g.theweightsofaNeuralNetcharacterrec-

ognizerinthecaseofacharacterrecognitionmodule),and

withrespecttothemodule'sinputs.Ifthisisthecase,a

simplegeneralizationofthewell-knownback-propagation

procedurecanbeusedtoecientlycomputethegradients

ofthelossfunctionwithrespecttoalltheparametersin

thesystem[��].Forexample,letusconsiderasystem

builtasacascadeofmodules,eachofwhichimplementsa

functionXn=Fn(Wn;Xn�),whereXnisavectorrep-

resentingtheoutputofthemodule,Wnisthevectorof

tunableparametersinthemodule(asubsetofW),and

Xn�isthemodule'sinputvector(aswellastheprevious

module'soutputvector).TheinputX�totherstmodule

istheinputpatternZp.IfthepartialderivativeofEpwith

respecttoXnisknown,thenthepartialderivativesofEp

withrespecttoWnandXn�canbecomputedusingthe

backwardrecurrence

@Ep@Wn= @F@W(Wn;Xn�)@Ep@Xn

@Ep

@Xn�=@F@X(Wn;Xn�)@Ep@Xn

(�)

where@F@W(Wn;Xn�)istheJacobianofFwithrespectto

Wevaluatedatthepoint(Wn;Xn�),and@F@X(Wn;Xn�)

istheJacobianofFwithrespecttoX.TheJacobianof

avectorfunctionisamatrixcontainingthepartialderiva-

tivesofalltheoutputswithrespecttoalltheinputs.

Therstequationcomputessometermsofthegradient

ofEp(W),whilethesecondequationgeneratesaback-

wardrecurrence,asinthewell-knownback-propagation

procedureforneuralnetworks.Wecanaveragethegradi-

entsoverthetrainingpatternstoobtainthefullgradient.

Itisinterestingtonotethatinmanyinstancesthereis

noneedtoexplicitlycomputetheJacobianmatrix.The

aboveformulausestheproductoftheJacobianwithavec-

torofpartialderivatives,anditisofteneasiertocompute

thisproductdirectlywithoutcomputingtheJacobianbe-

forehand.InByanalogywithordinarymulti-layerneural

networks,allbutthelastmodulearecalledhiddenlayers

becausetheiroutputsarenotobservablefromtheoutside.

morecomplexsituationsthanthesimplecascadeofmod-

ulesdescribedabove,thepartialderivativenotationbe-

comessomewhatambiguousandawkward.Acompletely

rigorousderivationinmoregeneralcasescanbedoneusing

Lagrangefunctions[��],[��],[��].

Traditionalmulti-layerneuralnetworksareaspecialcase

oftheabovewherethestateinformationXnisrepresented

withxed-sizedvectors,andwherethemodulesareal-

ternatedlayersofmatrixmultiplications(theweights)and

component-wisesigmoidfunctions(theneurons).However,

asstatedearlier,thestateinformationincomplexrecogni-

�

tionsystemisbestrepresentedbygraphswithnumerical

informationattachedtothearcs.Inthiscase,eachmodule,

calledaGraphTransformer,takesoneormoregraphsas

input,andproducesagraphasoutput.Networksofsuch

modulesarecalledGraphTransformerNetworks(GTN).

SectionsIV,VIandVIIIdeveloptheconceptofGTNs,

andshowthatGradient-BasedLearningcanbeusedto

trainalltheparametersinallthemodulessoastomini-

mizeagloballossfunction.Itmayseemparadoxicalthat

gradientscanbecomputedwhenthestateinformationis

representedbyessentiallydiscreteobjectssuchasgraphs,

butthatdicultycanbecircumvented,asshownlater.

II.ConvolutionalNeuralNetworksfor

IsolatedCharacterRecognition

Theabilityofmulti-layernetworkstrainedwithgradi-

entdescenttolearncomplex,high-dimensional,non-linear

mappingsfromlargecollectionsofexamplesmakesthem

obviouscandidatesforimagerecognitiontasks.Inthetra-

ditionalmodelofpatternrecognition,ahand-designedfea-

tureextractorgathersrelevantinformationfromtheinput

andeliminatesirrelevantvariabilities.Atrainableclassier

thencategorizestheresultingfeaturevectorsintoclasses.

Inthisscheme,standard,fully-connectedmulti-layernet-

workscanbeusedasclassiers.Apotentiallymoreinter-

estingschemeistorelyonasmuchaspossibleonlearning

inthefeatureextractoritself.

Inthecaseofcharacter

recognition,anetworkcouldbefedwithalmostrawin-

puts(e.g.size-normalizedimages).Whilethiscanbedone

withanordinaryfullyconnectedfeed-forwardnetworkwith

somesuccessfortaskssuchascharacterrecognition,there

areproblems.

Firstly,typicalimagesarelarge,oftenwithseveralhun-

dredvariables(pixels).Afully-connectedrstlayerwith,

sayonehundredhiddenunitsintherstlayer,wouldal-

readycontainseveraltensofthousandsofweights.Such

alargenumberofparametersincreasesthecapacityofthe

systemandthereforerequiresalargertrainingset.Inad-

dition,thememoryrequirementtostoresomanyweights

mayruleoutcertainhardwareimplementations.But,the

maindeciencyofunstructurednetsforimageorspeech

applicationsisthattheyhavenobuilt-ininvariancewith

respecttotranslations,orlocaldistortionsoftheinputs.

Beforebeingsenttothexed-sizeinputlayerofaneural

net,characterimages,orother�Dor�Dsignals,mustbe

approximatelysize-normalizedandcenteredintheinput

eld.Unfortunately,nosuchpreprocessingcanbeperfect:

handwritingisoftennormalizedatthewordlevel,which

cancausesize,slant,andpositionvariationsforindividual

characters.This,combinedwithvariabilityinwritingstyle,

willcausevariationsinthepositionofdistinctivefeatures

ininputobjects.Inprinciple,afully-connectednetworkof

sucientsizecouldlearntoproduceoutputsthatarein-

variantwithrespecttosuchvariations.However,learning

suchataskwouldprobablyresultinmultipleunitswith

similarweightpatternspositionedatvariouslocationsin

theinputsoastodetectdistinctivefeatureswhereverthey

appearontheinput.Learningtheseweightcongurations

�

PROC.OFTHEIEEE,NOVEMBER� �

requiresaverylargenumberoftraininginstancestocover

thespaceofpossiblevariations.Inconvolutionalnetworks,

describedbelow,shiftinvarianceisautomaticallyobtained

byforcingthereplicationofweightcongurationsacross

space.Secondly,adeciencyoffully-connectedarchitecturesis

thatthetopologyoftheinputisentirelyignored.Thein-

putvariablescanbepresentedinany(xed)orderwithout

aectingtheoutcomeofthetraining.Onthecontrary,

images(ortime-frequencyrepresentationsofspeech)have

astrong�Dlocalstructure:variables(orpixels)thatare

spatiallyortemporallynearbyarehighlycorrelated.Local

correlationsarethereasonsforthewell-knownadvantages

ofextractingandcombininglocalfeaturesbeforerecogniz-

ingspatialortemporalobjects,becausecongurationsof

neighboringvariablescanbeclassiedintoasmallnumber

ofcategories(e.g.edges,corners...).ConvolutionalNet-

worksforcetheextractionoflocalfeaturesbyrestricting

thereceptiveeldsofhiddenunitstobelocal.

A.ConvolutionalNetworks

ConvolutionalNetworkscombinethreearchitectural

ideastoensuresomedegreeofshift,scale,anddistor-

tioninvariance:

localreceptiveelds,sharedweights(or

weightreplication),andspatialortemporalsub-sampling.

Atypicalconvolutionalnetworkforrecognizingcharacters,

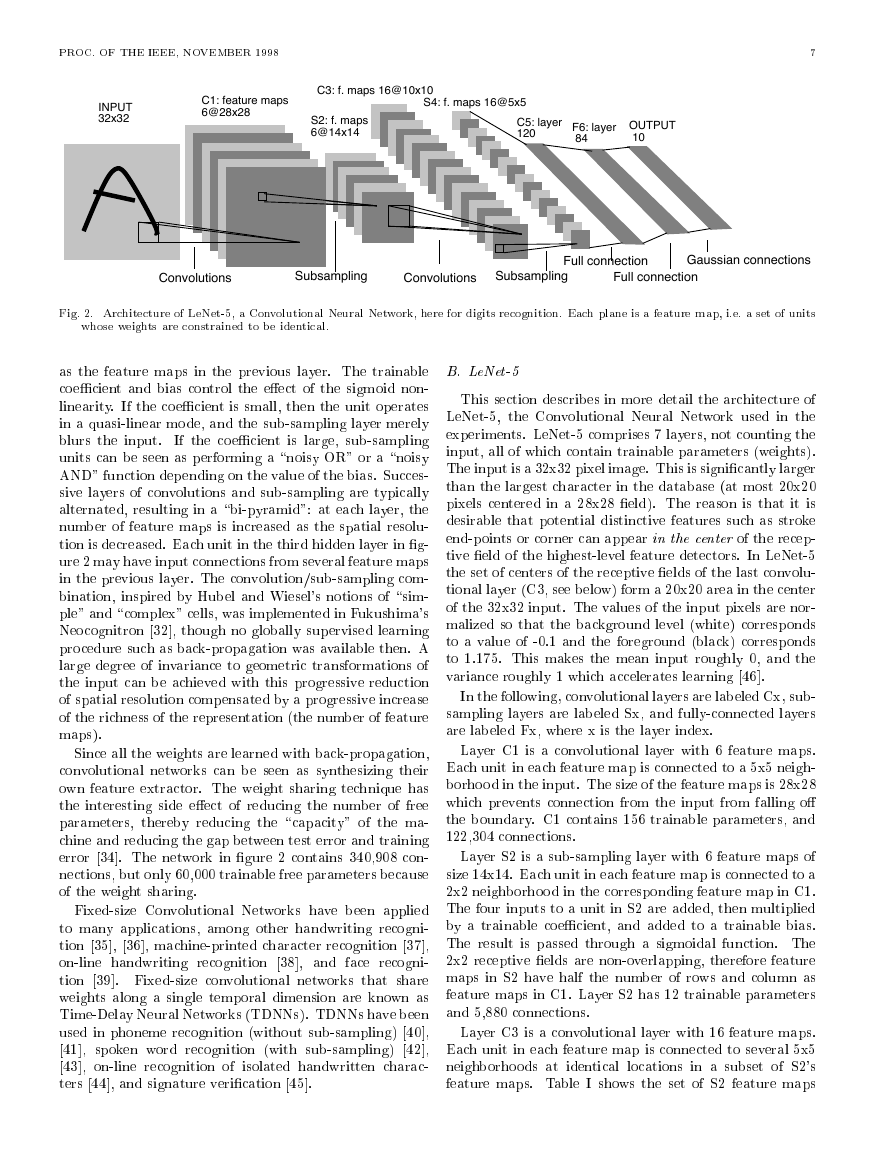

dubbedLeNet-�,isshowningure�.Theinputplane

receivesimagesofcharactersthatareapproximatelysize-

normalizedandcentered.Eachunitinalayerreceivesin-

putsfromasetofunitslocatedinasmallneighborhood

inthepreviouslayer.Theideaofconnectingunitstolocal

receptiveeldsontheinputgoesbacktothePerceptronin

theearly��s,andwasalmostsimultaneouswithHubeland

Wiesel'sdiscoveryoflocally-sensitive,orientation-selective

neuronsinthecat'svisualsystem[��].Localconnections

havebeenusedmanytimesinneuralmodelsofvisuallearn-

[��],

[��],[��],

ing[��],

[��],

[�].Withlocalreceptive

elds,neuronscanextractelementaryvisualfeaturessuch

asorientededges,end-points,corners(orsimilarfeaturesin

othersignalssuchasspeechspectrograms).Thesefeatures

arethencombinedbythesubsequentlayersinordertode-

tecthigher-orderfeatures.Asstatedearlier,distortionsor

shiftsoftheinputcancausethepositionofsalientfeatures

tovary.Inaddition,elementaryfeaturedetectorsthatare

usefulononepartoftheimagearelikelytobeusefulacross

theentireimage.Thisknowledgecanbeappliedbyforcing

asetofunits,whosereceptiveeldsarelocatedatdierent

placesontheimage,tohaveidenticalweightvectors[��],

[��],[��].Unitsinalayerareorganizedinplaneswithin

whichalltheunitssharethesamesetofweights.Theset

ofoutputsoftheunitsinsuchaplaneiscalledafeature

map.Unitsinafeaturemapareallconstrainedtoper-

formthesameoperationondierentpartsoftheimage.

Acompleteconvolutionallayeriscomposedofseveralfea-

turemaps(withdierentweightvectors),sothatmultiple

featurescanbeextractedateachlocation.Aconcreteex-

ampleofthisistherstlayerofLeNet-�showninFigure�.

UnitsinthersthiddenlayerofLeNet-�areorganizedin�

�

planes,eachofwhichisafeaturemap.Aunitinafeature

maphas��inputsconnectedtoa�by�areaintheinput,

calledthereceptiveeldoftheunit.Eachunithas��in-

puts,andtherefore��trainablecoecientsplusatrainable

bias.Thereceptiveeldsofcontiguousunitsinafeature

maparecenteredoncorrespondinglycontiguousunitsin

thepreviouslayer.Thereforereceptiveeldsofneighbor-

ingunitsoverlap.Forexample,inthersthiddenlayer

ofLeNet-�,thereceptiveeldsofhorizontallycontiguous

unitsoverlapby�columnsand�rows.Asstatedearlier,

alltheunitsinafeaturemapsharethesamesetof��

weightsandthesamebiassotheydetectthesamefeature

atallpossiblelocationsontheinput.Theotherfeature

mapsinthelayerusedierentsetsofweightsandbiases,

therebyextractingdierenttypesoflocalfeatures.Inthe

caseofLeNet-�,ateachinputlocationsixdierenttypes

offeaturesareextractedbysixunitsinidenticallocations

inthesixfeaturemaps.Asequentialimplementationof

afeaturemapwouldscantheinputimagewithasingle

unitthathasalocalreceptiveeld,andstorethestates

ofthisunitatcorrespondinglocationsinthefeaturemap.

Thisoperationisequivalenttoaconvolution,followedby

anadditivebiasandsquashingfunction,hencethename

convolutionalnetwork.Thekerneloftheconvolutionisthe

setofconnectionweightsusedbytheunitsinthefeature

map.Aninterestingpropertyofconvolutionallayersisthat

iftheinputimageisshifted,thefeaturemapoutputwill

beshiftedbythesameamount,butwillbeleftunchanged

otherwise.Thispropertyisatthebasisoftherobustness

ofconvolutionalnetworkstoshiftsanddistortionsofthe

input.Onceafeaturehasbeendetected,

itsexactlocation

becomeslessimportant.Onlyitsapproximateposition

relativetootherfeaturesisrelevant.Forexample,once

weknowthattheinputimagecontainstheendpointofa

roughlyhorizontalsegmentintheupperleftarea,acorner

intheupperrightarea,andtheendpointofaroughlyver-

ticalsegmentinthelowerportionoftheimage,wecantell

theinputimageisa�.Notonlyistheprecisepositionof

eachofthosefeaturesirrelevantforidentifyingthepattern,

itispotentiallyharmfulbecausethepositionsarelikelyto

varyfordierentinstancesofthecharacter.Asimpleway

toreducetheprecisionwithwhichthepositionofdistinc-

tivefeaturesareencodedinafeaturemapistoreducethe

spatialresolutionofthefeaturemap.Thiscanbeachieved

withaso-calledsub-samplinglayerswhichperformsalocal

averagingandasub-sampling,reducingtheresolutionof

thefeaturemap,andreducingthesensitivityoftheoutput

toshiftsanddistortions.ThesecondhiddenlayerofLeNet-

�isasub-samplinglayer.Thislayercomprisessixfeature

maps,oneforeachfeaturemapinthepreviouslayer.The

receptiveeldofeachunitisa�by�areaintheprevious

layer'scorrespondingfeaturemap.Eachunitcomputesthe

averageofitsfourinputs,multipliesitbyatrainablecoef-

cient,addsatrainablebias,andpassestheresultthrough

asigmoidfunction.Contiguousunitshavenon-overlapping

contiguousreceptiveelds.Consequently,asub-sampling

layerfeaturemaphashalfthenumberofrowsandcolumns

�

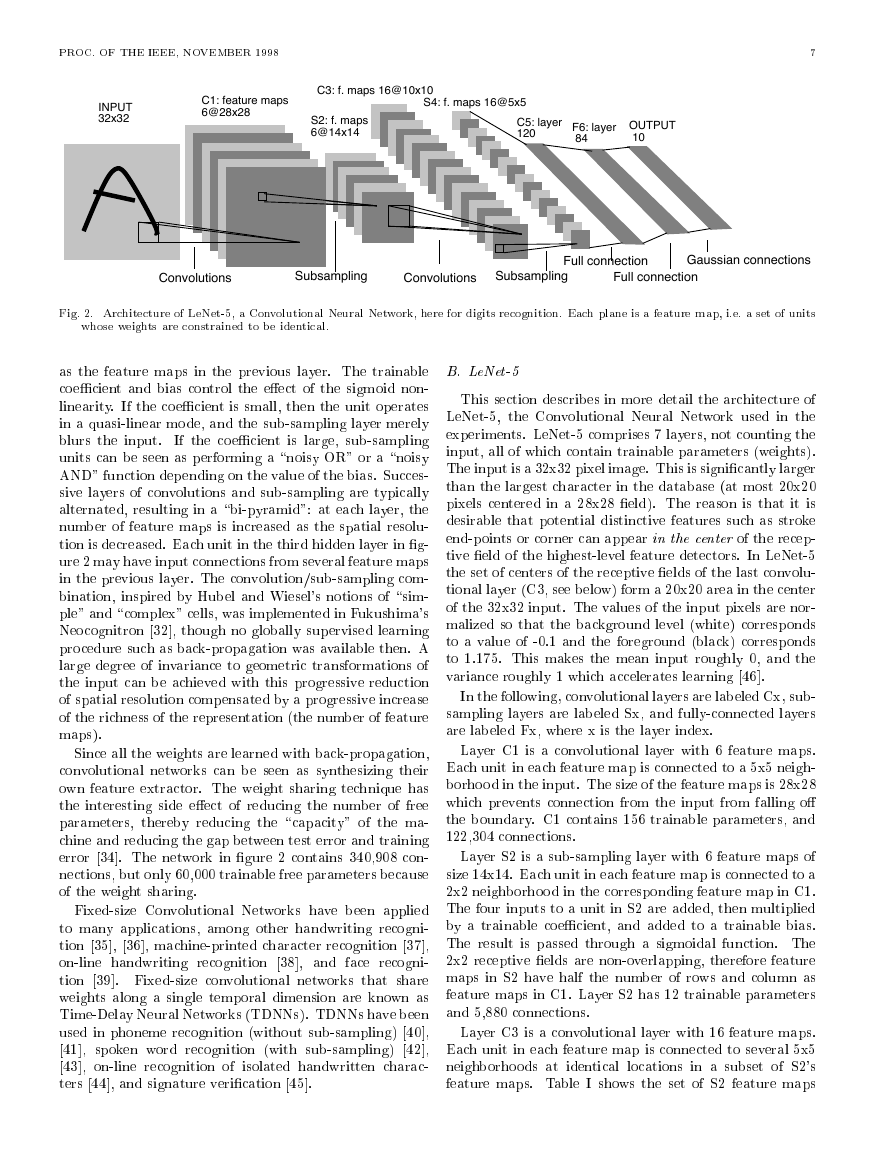

INPUT

32x32

Convolutions

Subsampling

Convolutions

Subsampling

Full connection

Full connection

C5: layer

120

S4: f. maps 16@5x5

S2: f. maps

6@14x14

C3: f. maps 16@10x10

F6: layer

84

OUTPUT

10

Gaussian connections

C1: feature maps

6@28x28

PROC.OFTHEIEEE,NOVEMBER� �

�

Fig.�.ArchitectureofLeNet-�,aConvolutionalNeuralNetwork,herefordigitsrecognition.Eachplaneisafeaturemap,i.e.asetofunits

whoseweightsareconstrainedtobeidentical.

asthefeaturemapsinthepreviouslayer.Thetrainable

B.LeNet-�

coecientandbiascontroltheeectofthesigmoidnon-

Thissectiondescribesinmoredetailthearchitectureof

linearity.Ifthecoecientissmall,thentheunitoperates

LeNet-�,theConvolutionalNeuralNetworkusedinthe

inaquasi-linearmode,andthesub-samplinglayermerely

experiments.LeNet-�comprises�layers,notcountingthe

blurstheinput.

Ifthecoecientislarge,sub-sampling

input,allofwhichcontaintrainableparameters(weights).

unitscanbeseenasperforminga\noisyOR"ora\noisy

Theinputisa��x��pixelimage.Thisissignicantlylarger

AND"functiondependingonthevalueofthebias.Succes-

thanthelargestcharacterinthedatabase(atmost��x��

sivelayersofconvolutionsandsub-samplingaretypically

pixelscenteredina��x��eld).Thereasonisthatitis

alternated,resultingina\bi-pyramid":ateachlayer,the

desirablethatpotentialdistinctivefeaturessuchasstroke

numberoffeaturemapsisincreasedasthespatialresolu-

end-pointsorcornercanappearinthecenteroftherecep-

tionisdecreased.Eachunitinthethirdhiddenlayering-

tiveeldofthehighest-levelfeaturedetectors.InLeNet-�

ure�mayhaveinputconnectionsfromseveralfeaturemaps

thesetofcentersofthereceptiveeldsofthelastconvolu-

inthepreviouslayer.Theconvolution/sub-samplingcom-

tionallayer(C�,seebelow)forma��x��areainthecenter

bination,inspiredbyHubelandWiesel'snotionsof\sim-

ofthe��x��input.Thevaluesoftheinputpixelsarenor-

ple"and\complex"cells,wasimplementedinFukushima's

malizedsothatthebackgroundlevel(white)corresponds

Neocognitron[��],thoughnogloballysupervisedlearning

toavalueof-�.�andtheforeground(black)corresponds

proceduresuchasback-propagationwasavailablethen.A

to�.���.Thismakesthemeaninputroughly�,andthe

largedegreeofinvariancetogeometrictransformationsof

varianceroughly�whichaccelerateslearning[��].

theinputcanbeachievedwiththisprogressivereduction

Inthefollowing,convolutionallayersarelabeledCx,sub-

ofspatialresolutioncompensatedbyaprogressiveincrease

samplinglayersarelabeledSx,andfully-connectedlayers

oftherichnessoftherepresentation(thenumberoffeature

arelabeledFx,wherexisthelayerindex.

maps).

LayerC�isaconvolutionallayerwith�featuremaps.

Sincealltheweightsarelearnedwithback-propagation,

Eachunitineachfeaturemapisconnectedtoa�x�neigh-

convolutionalnetworkscanbeseenassynthesizingtheir

borhoodintheinput.Thesizeofthefeaturemapsis��x��

ownfeatureextractor.Theweightsharingtechniquehas

whichpreventsconnectionfromtheinputfromfallingo

theinterestingsideeectofreducingthenumberoffree

theboundary.C�contains���trainableparameters,and

parameters,therebyreducingthe\capacity"ofthema-

���,���connections.

chineandreducingthegapbetweentesterrorandtraining

LayerS�isasub-samplinglayerwith�featuremapsof

error[��].Thenetworkingure�contains���, ��con-

size��x��.Eachunitineachfeaturemapisconnectedtoa

nections,butonly��,���trainablefreeparametersbecause

�x�neighborhoodinthecorrespondingfeaturemapinC�.

oftheweightsharing.

ThefourinputstoaunitinS�areadded,thenmultiplied

Fixed-sizeConvolutionalNetworkshavebeenapplied

byatrainablecoecient,andaddedtoatrainablebias.

tomanyapplications,amongotherhandwritingrecogni-

Theresultispassedthroughasigmoidalfunction.The

tion[��],[��],machine-printedcharacterrecognition[��],

�x�receptiveeldsarenon-overlapping,thereforefeature

on-linehandwritingrecognition[��],andfacerecogni-

mapsinS�havehalfthenumberofrowsandcolumnas

tion[� ].Fixed-sizeconvolutionalnetworksthatshare

featuremapsinC�.LayerS�has��trainableparameters

weightsalongasingletemporaldimensionareknownas

and�,���connections.

Time-DelayNeuralNetworks(TDNNs).TDNNshavebeen

usedinphonemerecognition(withoutsub-sampling)[��],

LayerC�isaconvolutionallayerwith��featuremaps.

[��],spokenwordrecognition(withsub-sampling)[��],

Eachunitineachfeaturemapisconnectedtoseveral�x�

[��],on-linerecognitionofisolatedhandwrittencharac-

neighborhoodsatidenticallocationsinasubsetofS�'s

ters[��],andsignatureverication[��].

featuremaps.TableIshowsthesetofS�featuremaps

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc