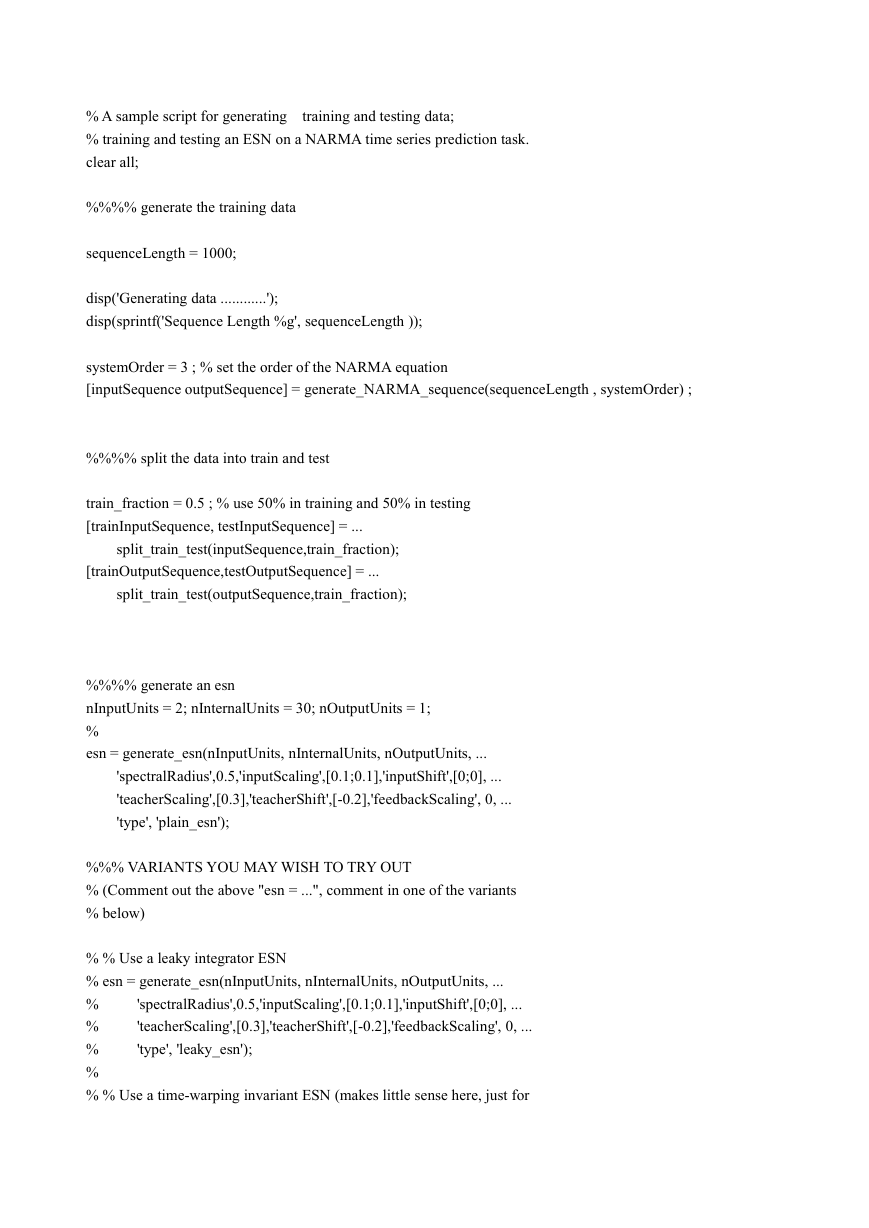

% A sample script for generating

% training and testing an ESN on a NARMA time series prediction task.

clear all;

training and testing data;

%%%% generate the training data

sequenceLength = 1000;

disp('Generating data ............');

disp(sprintf('Sequence Length %g', sequenceLength ));

systemOrder = 3 ; % set the order of the NARMA equation

[inputSequence outputSequence] = generate_NARMA_sequence(sequenceLength , systemOrder) ;

%%%% split the data into train and test

train_fraction = 0.5 ; % use 50% in training and 50% in testing

[trainInputSequence, testInputSequence] = ...

split_train_test(inputSequence,train_fraction);

[trainOutputSequence,testOutputSequence] = ...

split_train_test(outputSequence,train_fraction);

%%%% generate an esn

nInputUnits = 2; nInternalUnits = 30; nOutputUnits = 1;

%

esn = generate_esn(nInputUnits, nInternalUnits, nOutputUnits, ...

'spectralRadius',0.5,'inputScaling',[0.1;0.1],'inputShift',[0;0], ...

'teacherScaling',[0.3],'teacherShift',[-0.2],'feedbackScaling', 0, ...

'type', 'plain_esn');

%%% VARIANTS YOU MAY WISH TO TRY OUT

% (Comment out the above "esn = ...", comment in one of the variants

% below)

% % Use a leaky integrator ESN

% esn = generate_esn(nInputUnits, nInternalUnits, nOutputUnits, ...

%

%

%

%

% % Use a time-warping invariant ESN (makes little sense here, just for

'spectralRadius',0.5,'inputScaling',[0.1;0.1],'inputShift',[0;0], ...

'teacherScaling',[0.3],'teacherShift',[-0.2],'feedbackScaling', 0, ...

'type', 'leaky_esn');

�

% % demo's sake)

% esn = generate_esn(nInputUnits, nInternalUnits, nOutputUnits, ...

%

%

%

'spectralRadius',0.5,'inputScaling',[0.1;0.1],'inputShift',[0;0], ...

'teacherScaling',[0.3],'teacherShift',[-0.2],'feedbackScaling', 0, ...

'type', 'twi_esn');

% % Do online RLS learning instead of batch learning.

% esn = generate_esn(nInputUnits, nInternalUnits, nOutputUnits, ...

%

%

%

%

'spectralRadius',0.4,'inputScaling',[0.1;0.5],'inputShift',[0;1], ...

'teacherScaling',[0.3],'teacherShift',[-0.2],'feedbackScaling',0, ...

'learningMode', 'online' , 'RLS_lambda',0.9999995 , 'RLS_delta',0.000001, ...

'noiseLevel' , 0.00000000) ;

esn.internalWeights = esn.spectralRadius * esn.internalWeights_UnitSR;

%%%% train the ESN

nForgetPoints = 100 ; % discard the first 100 points

[trainedEsn stateMatrix] = ...

train_esn(trainInputSequence, trainOutputSequence, esn, nForgetPoints) ;

%%%% save the trained ESN

% save_esn(trainedEsn, 'esn_narma_demo_1');

%%%% plot the internal states of 4 units

nPoints = 200 ;

plot_states(stateMatrix,[1 2 3 4], nPoints, 1, 'traces of first 4 reservoir units') ;

% compute the output of the trained ESN on the training and testing data,

% discarding the first nForgetPoints of each

nForgetPoints = 100 ;

predictedTrainOutput = test_esn(trainInputSequence, trainedEsn, nForgetPoints);

predictedTestOutput = test_esn(testInputSequence,

trainedEsn, nForgetPoints) ;

% create input-output plots

nPlotPoints = 100 ;

plot_sequence(trainOutputSequence(nForgetPoints+1:end,:), predictedTrainOutput, nPlotPoints,...

'training: teacher sequence (red) vs predicted sequence (blue)');

plot_sequence(testOutputSequence(nForgetPoints+1:end,:), predictedTestOutput, nPlotPoints, ...

'testing: teacher sequence (red) vs predicted sequence (blue)') ;

%%%%compute NRMSE training error

trainError = compute_NRMSE(predictedTrainOutput, trainOutputSequence);

disp(sprintf('train NRMSE = %s', num2str(trainError)))

�

%%%%compute NRMSE testing error

testError = compute_NRMSE(predictedTestOutput, testOutputSequence);

disp(sprintf('test NRMSE = %s', num2str(testError)))

function [inputSequence, outputSequence] = generate_NARMA_sequence(sequenceLength, memoryLength)

%%%% create input

inputSequence = [ones(sequenceLength,1) rand(sequenceLength,1)];

% use the input sequence to drive a NARMA equation

outputSequence = 0.1*ones(sequenceLength,1);

for i = memoryLength + 1 : sequenceLength

% insert suitable NARMA equation on r.h.s., this is just an ad hoc

% example

outputSequence(i,1) = 0.7 * inputSequence(i-memoryLength,2) + 0.1 ...

+ (1 - outputSequence(i-1,1)) * outputSequence(i-1,1);

end

function [train,test] = split_train_test(sample, trainPercentage)

nSamplePoints = size(sample, 1) ;

nTrainPoints = floor(nSamplePoints * trainPercentage) ;

train = sample(1:nTrainPoints,:) ;

test = sample(nTrainPoints+1:end,:) ;

function esn = generate_esn(nInputUnits, nInternalUnits, nOutputUnits, varargin)

%%%% set the number of units

esn.nInternalUnits = nInternalUnits;

esn.nInputUnits = nInputUnits;

esn.nOutputUnits = nOutputUnits;

connectivity = min([10/nInternalUnits 1]);

nTotalUnits = nInternalUnits + nInputUnits + nOutputUnits;

esn.internalWeights_UnitSR = generate_internal_weights(nInternalUnits, ...

connectivity);

esn.nTotalUnits = nTotalUnits;

% input weight matrix has weight vectors per input unit in colums

esn.inputWeights = 2.0 * rand(nInternalUnits, nInputUnits)- 1.0;

% output weight matrix has weights for output units in rows

% includes weights for input-to-output connections

�

esn.outputWeights = zeros(nOutputUnits, nInternalUnits + nInputUnits);

%output feedback weight matrix has weights in columns

esn.feedbackWeights = (2.0 * rand(nInternalUnits, nOutputUnits)- 1.0);

%init default parameters

if nInputUnits > 0

esn.inputScaling = ones(nInputUnits, 1);

esn.inputShift

= zeros(nInputUnits, 1);

else

esn.inputScaling = []; esn.inputShift = [];

end

if nOutputUnits > 0

esn.teacherScaling = ones(nOutputUnits, 1); esn.teacherShift = zeros(nOutputUnits, 1);

else

esn.teacherScaling = []; esn.teacherShift = [];

end

esn.noiseLevel = 0.0 ;

esn.reservoirActivationFunction = 'tanh';

esn.outputActivationFunction = 'identity' ; % options: identity or tanh or sigmoid01

esn.methodWeightCompute = 'pseudoinverse' ; % options: pseudoinverse and wiener_hopf

esn.inverseOutputActivationFunction = 'identity' ; % options:

% identity or

% atanh or sigmoid01_inv

esn.spectralRadius = 1 ;

esn.feedbackScaling = zeros(nOutputUnits, 1);

esn.trained = 0 ;

esn.type = 'plain_esn' ;

esn.timeConstants = ones(esn.nInternalUnits,1);

esn.leakage = 0.5;

esn.learningMode = 'offline_singleTimeSeries' ;

esn.RLS_lambda = 1 ;

args = varargin;

nargs= length(args);

for i=1:2:nargs

switch args{i},

case 'inputScaling', esn.inputScaling = args{i+1} ;

case 'inputShift', esn.inputShift= args{i+1} ;

case 'teacherScaling', esn.teacherScaling = args{i+1} ;

case 'teacherShift', esn.teacherShift = args{i+1} ;

case 'noiseLevel', esn.noiseLevel = args{i+1} ;

case 'learningMode', esn.learningMode = args{i+1} ;

case 'reservoirActivationFunction',esn.reservoirActivationFunction=args{i+1};

case 'outputActivationFunction',esn.outputActivationFunction= ...

�

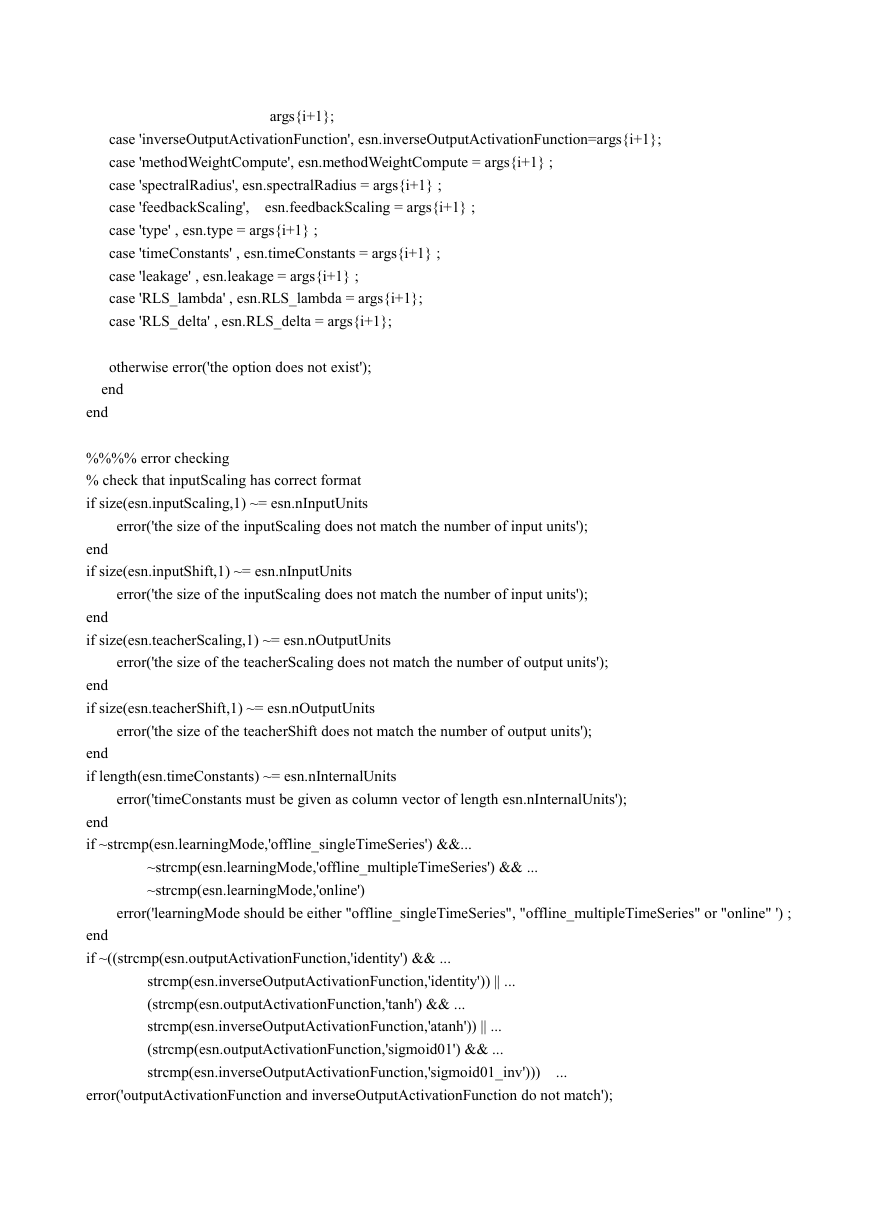

args{i+1};

case 'inverseOutputActivationFunction', esn.inverseOutputActivationFunction=args{i+1};

case 'methodWeightCompute', esn.methodWeightCompute = args{i+1} ;

case 'spectralRadius', esn.spectralRadius = args{i+1} ;

case 'feedbackScaling',

case 'type' , esn.type = args{i+1} ;

case 'timeConstants' , esn.timeConstants = args{i+1} ;

case 'leakage' , esn.leakage = args{i+1} ;

case 'RLS_lambda' , esn.RLS_lambda = args{i+1};

case 'RLS_delta' , esn.RLS_delta = args{i+1};

esn.feedbackScaling = args{i+1} ;

otherwise error('the option does not exist');

end

end

%%%% error checking

% check that inputScaling has correct format

if size(esn.inputScaling,1) ~= esn.nInputUnits

error('the size of the inputScaling does not match the number of input units');

end

if size(esn.inputShift,1) ~= esn.nInputUnits

error('the size of the inputScaling does not match the number of input units');

end

if size(esn.teacherScaling,1) ~= esn.nOutputUnits

error('the size of the teacherScaling does not match the number of output units');

end

if size(esn.teacherShift,1) ~= esn.nOutputUnits

error('the size of the teacherShift does not match the number of output units');

end

if length(esn.timeConstants) ~= esn.nInternalUnits

error('timeConstants must be given as column vector of length esn.nInternalUnits');

end

if ~strcmp(esn.learningMode,'offline_singleTimeSeries') &&...

~strcmp(esn.learningMode,'offline_multipleTimeSeries') && ...

~strcmp(esn.learningMode,'online')

error('learningMode should be either "offline_singleTimeSeries", "offline_multipleTimeSeries" or "online" ') ;

end

if ~((strcmp(esn.outputActivationFunction,'identity') && ...

strcmp(esn.inverseOutputActivationFunction,'identity')) || ...

(strcmp(esn.outputActivationFunction,'tanh') && ...

strcmp(esn.inverseOutputActivationFunction,'atanh')) || ...

(strcmp(esn.outputActivationFunction,'sigmoid01') && ...

strcmp(esn.inverseOutputActivationFunction,'sigmoid01_inv')))

...

error('outputActivationFunction and inverseOutputActivationFunction do not match');

�

end

function internalWeights = generate_internal_weights(nInternalUnits, ...

connectivity)

uccess = 0 ;

while success == 0

% following block might fail, thus we repeat until we obtain a valid

% internalWeights matrix

try,

internalWeights = sprand(nInternalUnits, nInternalUnits, connectivity);

internalWeights(internalWeights ~= 0) = internalWeights(internalWeights ~= 0)

maxVal = max(abs(myeigs(internalWeights,1)));%一个最大特征值取绝对值

internalWeights = internalWeights/maxVal;

success = 1 ;

- 0.5;

catch,

success = 0 ;

end

end

function [trained_esn, stateCollection] = ...

train_esn(trainInput, trainOutput , esn, nForgetPoints)

trained_esn = esn;

switch trained_esn.learningMode

case 'offline_singleTimeSeries'

% trainInput and trainOutput each represent a single time series in

% an array of size sequenceLength x sequenceDimension

if strcmp(trained_esn.type, 'twi_esn')

if size(trainInput,2) > 1

trained_esn.avDist = ...

mean(sqrt(sum(((trainInput(2:end,:) - trainInput(1:end - 1,:))').^2)));

trained_esn.avDist = mean(abs(trainInput(2:end,:) - trainInput(1:end - 1,:)));

else

end

end

stateCollection = compute_statematrix(trainInput, trainOutput, trained_esn, nForgetPoints) ;

teacherCollection = compute_teacher(trainOutput, trained_esn, nForgetPoints) ;

trained_esn.outputWeights

feval(trained_esn.methodWeightCompute,

=

stateCollection,

teacherCollection) ;

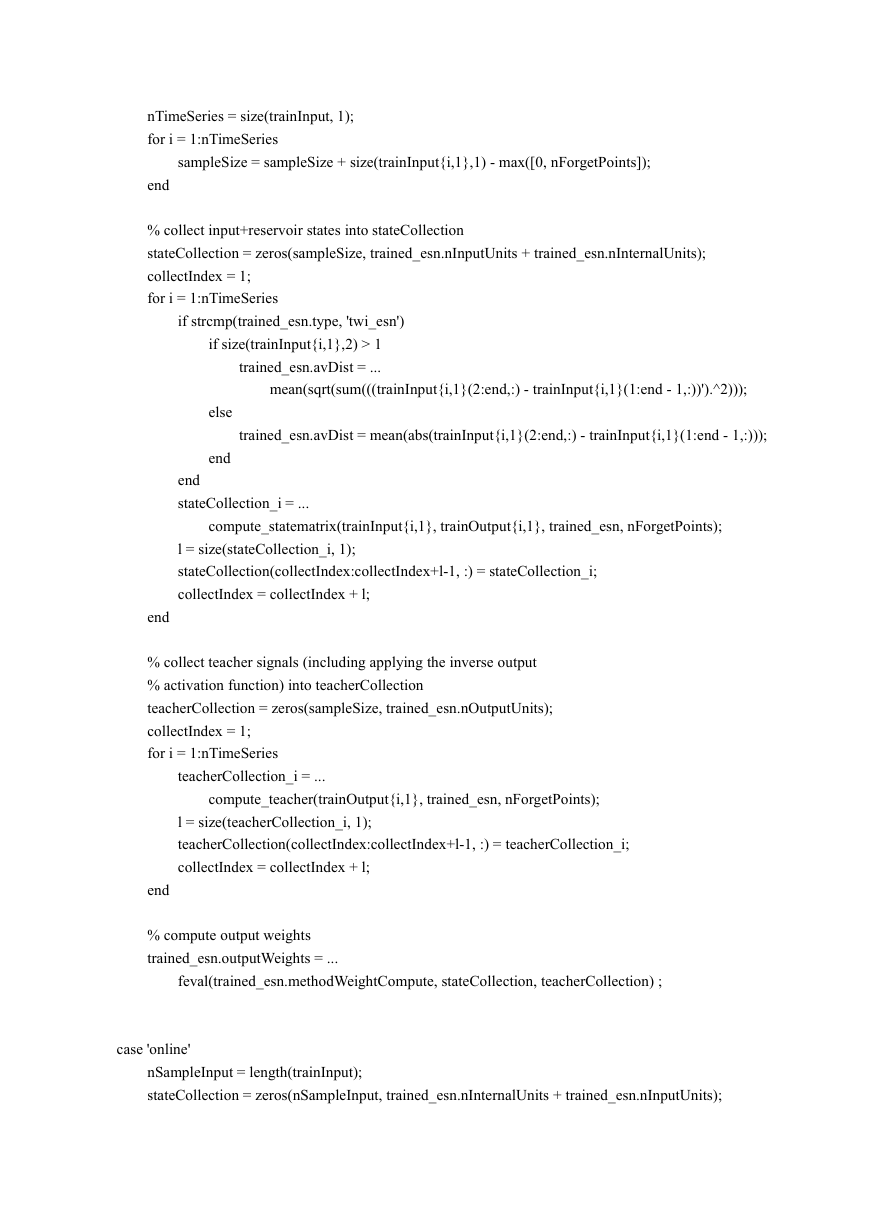

case

'offline_multipleTimeSeries'

% trainInput and trainOutput each represent a collection of K time

% series, given in cell arrays of size K x 1, where each cell is an

% array of size individualSequenceLength x sequenceDimension

% compute total size of sample points to be used

sampleSize = 0;

�

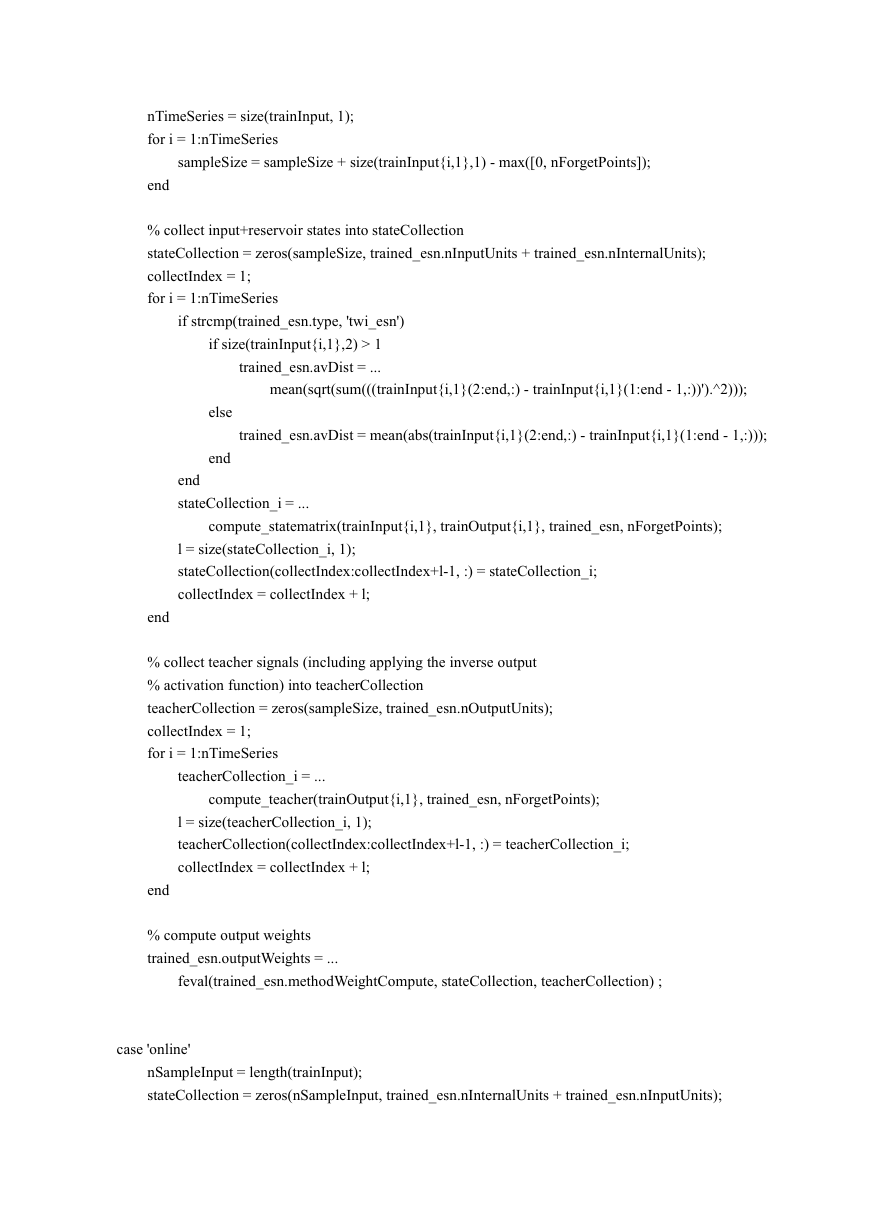

nTimeSeries = size(trainInput, 1);

for i = 1:nTimeSeries

sampleSize = sampleSize + size(trainInput{i,1},1) - max([0, nForgetPoints]);

end

% collect input+reservoir states into stateCollection

stateCollection = zeros(sampleSize, trained_esn.nInputUnits + trained_esn.nInternalUnits);

collectIndex = 1;

for i = 1:nTimeSeries

if strcmp(trained_esn.type, 'twi_esn')

if size(trainInput{i,1},2) > 1

trained_esn.avDist = ...

mean(sqrt(sum(((trainInput{i,1}(2:end,:) - trainInput{i,1}(1:end - 1,:))').^2)));

trained_esn.avDist = mean(abs(trainInput{i,1}(2:end,:) - trainInput{i,1}(1:end - 1,:)));

else

end

end

stateCollection_i = ...

compute_statematrix(trainInput{i,1}, trainOutput{i,1}, trained_esn, nForgetPoints);

l = size(stateCollection_i, 1);

stateCollection(collectIndex:collectIndex+l-1, :) = stateCollection_i;

collectIndex = collectIndex + l;

end

% collect teacher signals (including applying the inverse output

% activation function) into teacherCollection

teacherCollection = zeros(sampleSize, trained_esn.nOutputUnits);

collectIndex = 1;

for i = 1:nTimeSeries

teacherCollection_i = ...

compute_teacher(trainOutput{i,1}, trained_esn, nForgetPoints);

l = size(teacherCollection_i, 1);

teacherCollection(collectIndex:collectIndex+l-1, :) = teacherCollection_i;

collectIndex = collectIndex + l;

end

% compute output weights

trained_esn.outputWeights = ...

feval(trained_esn.methodWeightCompute, stateCollection, teacherCollection) ;

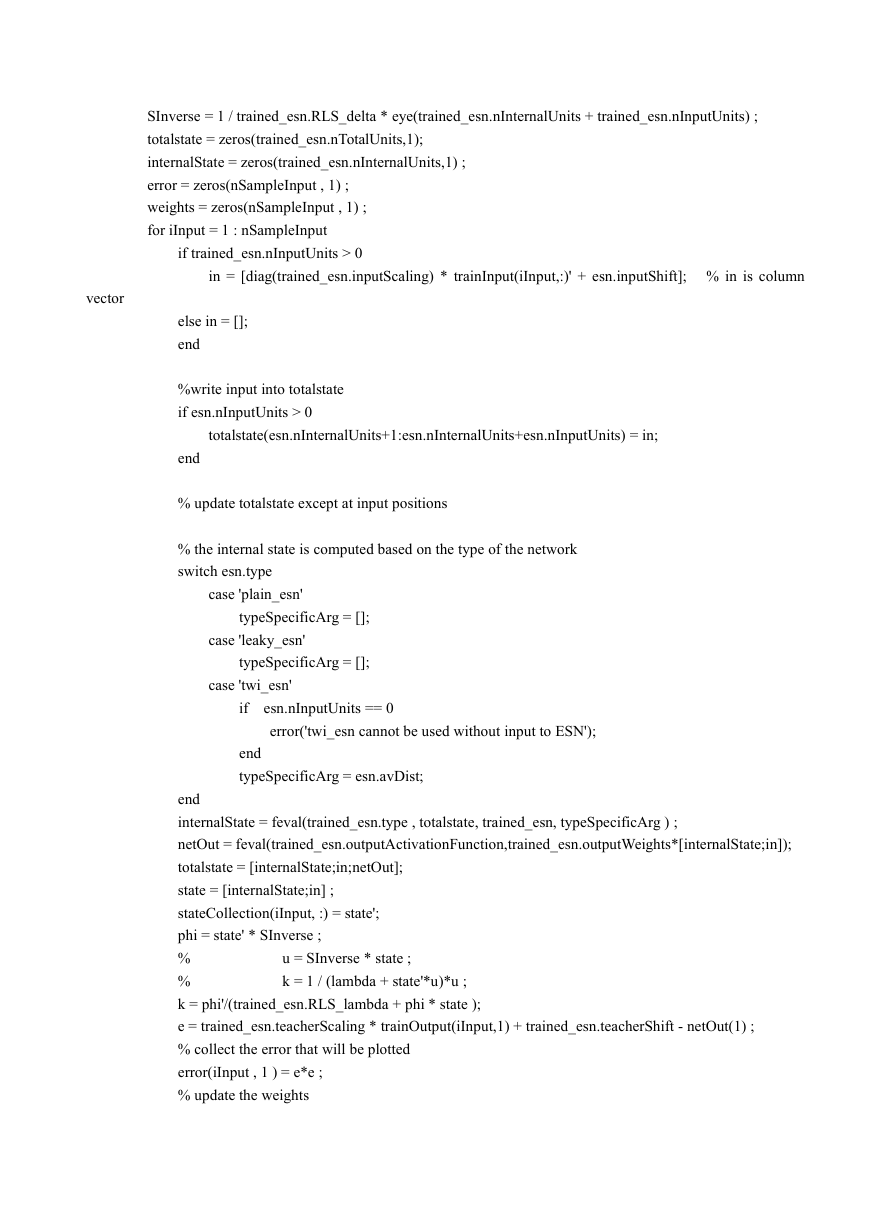

case 'online'

nSampleInput = length(trainInput);

stateCollection = zeros(nSampleInput, trained_esn.nInternalUnits + trained_esn.nInputUnits);

�

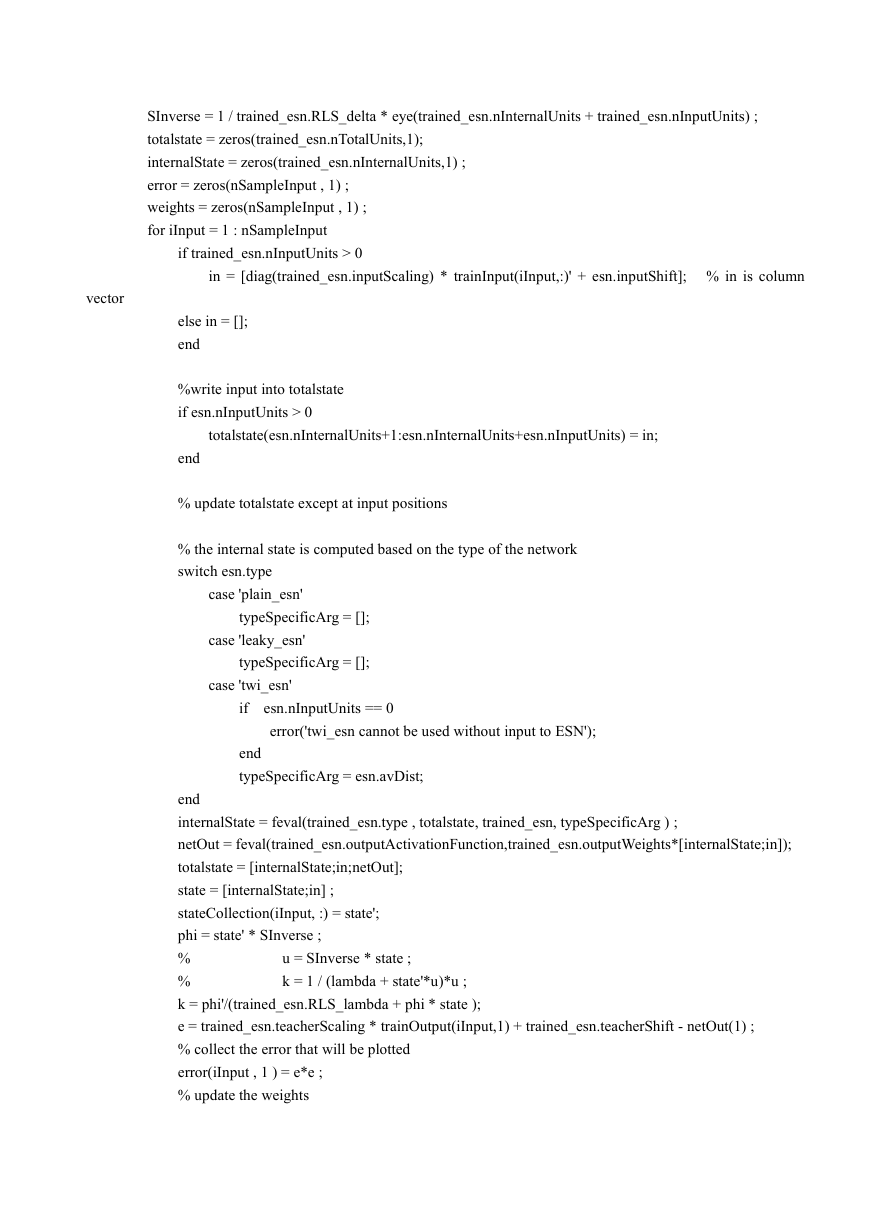

SInverse = 1 / trained_esn.RLS_delta * eye(trained_esn.nInternalUnits + trained_esn.nInputUnits) ;

totalstate = zeros(trained_esn.nTotalUnits,1);

internalState = zeros(trained_esn.nInternalUnits,1) ;

error = zeros(nSampleInput , 1) ;

weights = zeros(nSampleInput , 1) ;

for iInput = 1 : nSampleInput

if trained_esn.nInputUnits > 0

in = [diag(trained_esn.inputScaling) * trainInput(iInput,:)' + esn.inputShift]; % in is column

vector

else in = [];

end

%write input into totalstate

if esn.nInputUnits > 0

totalstate(esn.nInternalUnits+1:esn.nInternalUnits+esn.nInputUnits) = in;

end

% update totalstate except at input positions

% the internal state is computed based on the type of the network

switch esn.type

case 'plain_esn'

typeSpecificArg = [];

case 'leaky_esn'

typeSpecificArg = [];

case 'twi_esn'

if

esn.nInputUnits == 0

error('twi_esn cannot be used without input to ESN');

end

typeSpecificArg = esn.avDist;

end

internalState = feval(trained_esn.type , totalstate, trained_esn, typeSpecificArg ) ;

netOut = feval(trained_esn.outputActivationFunction,trained_esn.outputWeights*[internalState;in]);

totalstate = [internalState;in;netOut];

state = [internalState;in] ;

stateCollection(iInput, :) = state';

phi = state' * SInverse ;

%

%

k = phi'/(trained_esn.RLS_lambda + phi * state );

e = trained_esn.teacherScaling * trainOutput(iInput,1) + trained_esn.teacherShift - netOut(1) ;

% collect the error that will be plotted

error(iInput , 1 ) = e*e ;

% update the weights

u = SInverse * state ;

k = 1 / (lambda + state'*u)*u ;

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc