Medical Image Segmentation Using Deep Learning: A Survey

Tao Lei, Senior Member, IEEE, Risheng Wang, Yong Wan, Xiaogang Du, Hongying Meng, Senior Member, IEEE, and Asoke K. Nandi, Fellow, IEEE

arXiv:2009.13120v1 [eess.IV] 28 Sep 2020

Abstract—Deep learning has been widely used for medical image segmentation, and a large number of papers have been published documenting its success in the field. In this paper, we present a comprehensive thematic survey of medical image segmentation using deep learning techniques. This paper makes two original contributions. First, unlike traditional surveys that directly divide the deep learning literature on medical image segmentation into many groups and introduce the papers of each group in detail, we classify currently popular work according to a multi-level structure from coarse to fine. Second, this paper focuses on supervised and weakly supervised learning approaches and does not include unsupervised approaches, since these have been covered by many earlier surveys and are currently less popular. For supervised learning approaches, we analyze the literature from three aspects: the selection of backbone networks, the design of network blocks, and the improvement of loss functions. For weakly supervised learning approaches, we review the literature on data augmentation, transfer learning, and interactive segmentation separately. Compared to existing surveys, this survey classifies the literature very differently, which makes it easier for readers to understand the underlying rationale and should guide them toward appropriate improvements in deep learning-based medical image segmentation.

Index Terms—medical image segmentation, deep learning, supervised learning, weakly supervised learning.

I. INTRODUCTION

MEDICAL image segmentation aims to make changes in anatomical or pathological structures clearer in images; it often plays a key role in computer-aided diagnosis and smart medicine because it greatly improves diagnostic efficiency and accuracy. Popular medical image segmentation tasks include liver and liver-tumor segmentation [1, 2], brain and brain-tumor segmentation [3, 4], optic disc segmentation [5, 6], cell segmentation [7, 8], and lung and pulmonary nodule segmentation [9, 118]. With the development and popularization of medical imaging equipment, X-ray, Computed Tomography (CT), Magnetic Resonance Imaging (MRI), and ultrasound have become four important imaging modalities that help clinicians diagnose diseases, evaluate prognosis, and plan operations in medical institutions. In practical applications, although each of these modalities has its own advantages and disadvantages, they are all useful for examining different parts of the human body.

To help clinicians make accurate diagnoses, it is necessary to segment crucial objects in medical images and extract features from the segmented areas. Early approaches to medical image segmentation often relied on edge detection, template matching, statistical shape models, active contours, and machine learning. Zhao et al. [10] proposed a new mathematical morphology edge detection algorithm for lung CT images. Lalonde et al. [11] applied Hausdorff-based template matching to optic disc detection, and Chen et al. [12] also employed template matching to perform ventricular segmentation in brain CT images. Tsai et al. [145] proposed a shape-based approach using level sets for 2D segmentation of cardiac MRI images and 3D segmentation of prostate MRI images. Li et al. [146] used an active contour model to segment liver tumors from abdominal CT images, while Li et al. [147] proposed a framework for medical volume data segmentation that combines level sets and support vector machines (SVMs). Held et al. [148] applied Markov random fields (MRF) to brain MRI image segmentation. Although a large number of approaches have been reported and they are successful in certain circumstances, image segmentation is still one of the most challenging topics in computer vision because of the difficulty of feature representation. In particular, it is more difficult to extract discriminative features from medical images than from ordinary RGB images, since the former often suffer from blur, noise, and low contrast. With the rapid development of deep learning techniques [13], medical image segmentation no longer requires hand-crafted features: convolutional neural networks (CNNs) learn hierarchical feature representations of images directly from data, and they have thus become the hottest research topic in image processing and computer vision. Because the features learned by CNNs are relatively robust to image noise, blur, and low contrast, CNNs provide excellent segmentation results for medical images.

It is worth mentioning that there are currently two categories of image segmentation tasks: semantic segmentation and instance segmentation. Image semantic segmentation is a pixel-level classification that assigns a category to each pixel in an image. Compared to semantic segmentation, instance segmentation not only performs pixel-level classification but also distinguishes individual instances within each category. In fact, there are few reports on instance segmentation in medical imaging, since each organ or tissue is quite different. In this paper, we review the advances of deep learning techniques in medical image segmentation.

According to the amount of labeled data, machine learning is often categorized into supervised learning, weakly supervised learning, and unsupervised learning. The advantage of supervised learning is that models can be trained on carefully labeled data, but it is difficult to obtain a large amount of labeled data for medical images. In contrast,


dom fields, k-means clustering, and random forests, as well as the latest deep learning architectures such as artificial neural networks (ANNs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs). Tajbakhsh et al. [19] reviewed solutions for medical image segmentation with imperfect datasets, covering the two major dataset limitations: scarce annotations and weak annotations. All these surveys have played an important role in the development of medical image segmentation techniques. Hesamian et al. [119] reviewed approaches from three aspects: network structures, training techniques, and challenges. The network structures section describes the main popular network structures used for image segmentation. The training techniques section discusses the techniques used to train deep neural network models. The challenges section describes the various challenges associated with medical image segmentation using deep learning techniques. Meyer et al. [120] reviewed advances in the actual and potential application of deep learning to radiotherapy. Akkus et al. [149] provided an overview of current deep learning-based segmentation approaches for quantitative brain MRI. Eelbode et al. [150] focused on evaluating and summarizing the optimization methods used in medical image segmentation tasks when performance is evaluated primarily with Dice scores or Jaccard indices.
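Since several of the surveys above, and much of the work reviewed later, evaluate segmentation with the Dice score and the Jaccard index (IoU), a minimal sketch of both metrics for binary masks may help. It assumes masks are given as flat sequences of 0/1 values; the function name `dice_and_jaccard` and the convention of scoring two empty masks as 1.0 are our own choices, not taken from [150].

```python
def dice_and_jaccard(pred, target):
    """Dice and Jaccard (IoU) scores for two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    size_pred = sum(1 for p in pred if p)
    size_target = sum(1 for t in target if t)
    union = size_pred + size_target - intersection
    if union == 0:  # both masks empty: treated as perfect agreement (assumed convention)
        return 1.0, 1.0
    dice = 2 * intersection / (size_pred + size_target)
    jaccard = intersection / union
    return dice, jaccard


dice, jaccard = dice_and_jaccard([1, 1, 0, 0], [1, 0, 1, 0])
print(dice, jaccard)  # 0.5 0.3333333333333333
```

The two scores are monotonically related by J = D / (2 - D), so they rank predictions identically on a single image, but their dataset-level averages can rank methods differently.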

Through studying the aforementioned surveys, researchers can learn the latest techniques of medical image segmentation and then make more significant contributions to computer-aided diagnosis and smart healthcare. However, these surveys suffer from two problems. First, most of them summarize the development of medical image segmentation chronologically and thus ignore the technical branches of deep learning for medical image segmentation. Second, these surveys only introduce related technical developments and do not focus on the task characteristics of medical image segmentation, such as few-shot learning and class-imbalanced learning, which limits task-driven improvement of medical image segmentation. To address these two problems, we present a novel survey on medical image segmentation using deep learning. In this work, we make the following contributions:

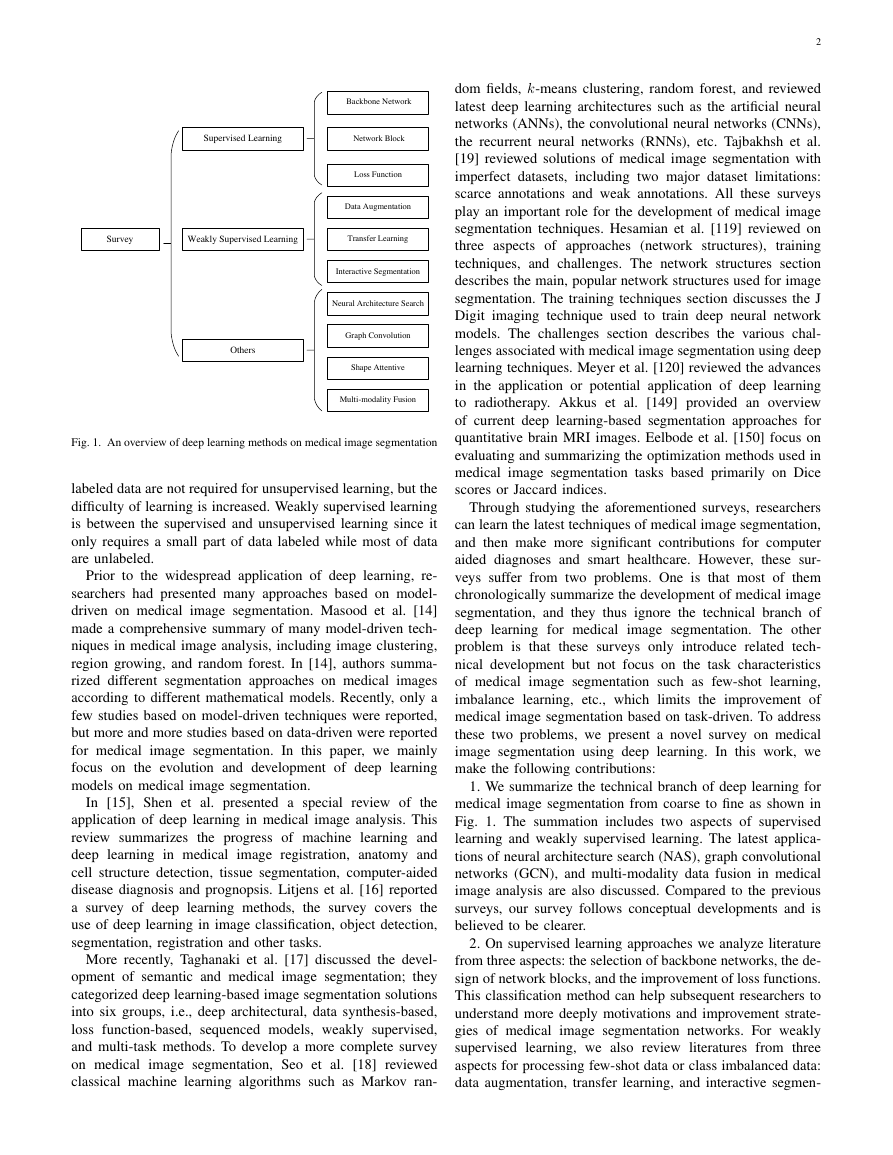

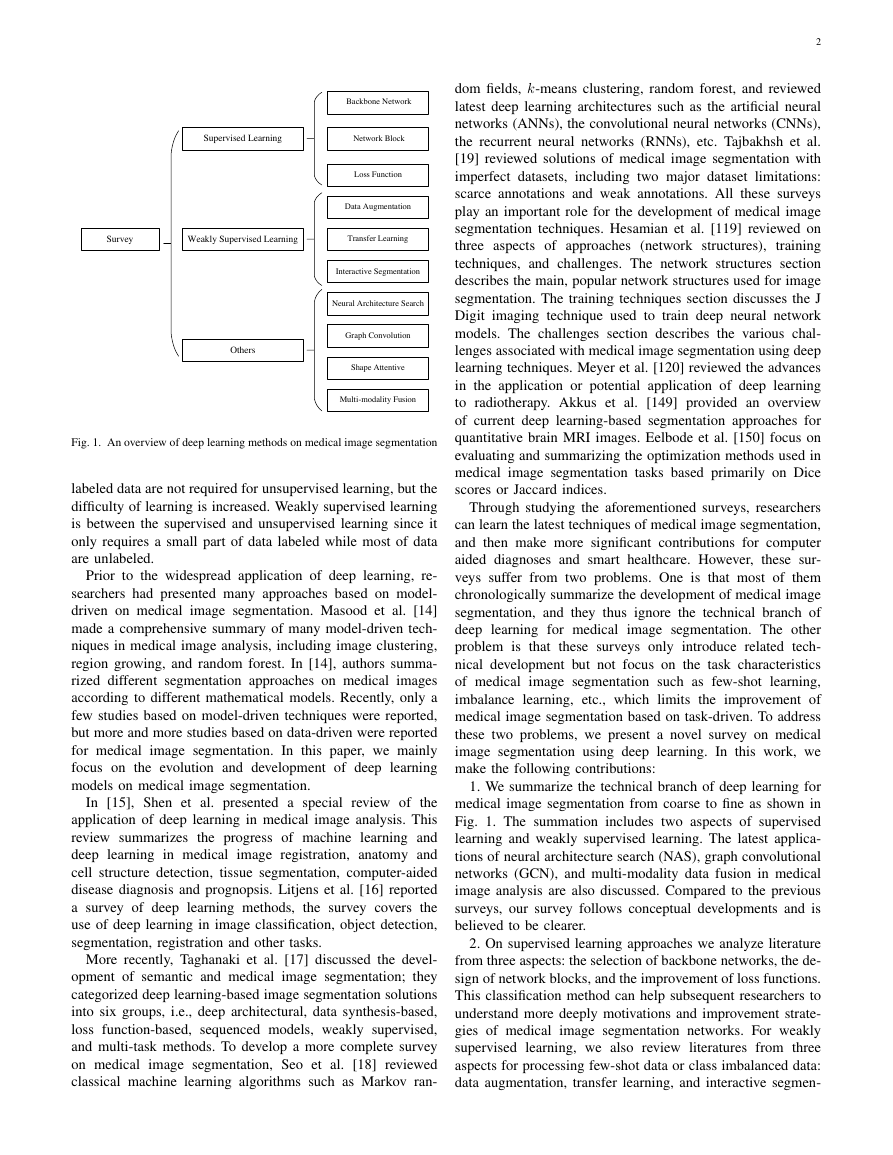

1. We summarize the technical branches of deep learning for medical image segmentation from coarse to fine, as shown in Fig. 1. The summary covers two aspects: supervised learning and weakly supervised learning. The latest applications of neural architecture search (NAS), graph convolutional networks (GCN), and multi-modality data fusion in medical image analysis are also discussed. Compared to previous surveys, our survey follows conceptual developments and is, we believe, clearer.

2. For supervised learning approaches, we analyze the literature from three aspects: the selection of backbone networks, the design of network blocks, and the improvement of loss functions. This classification can help subsequent researchers understand more deeply the motivations and improvement strategies of medical image segmentation networks. For weakly supervised learning, we also review the literature from three aspects for processing few-shot or class-imbalanced data:

data augmentation, transfer learning, and interactive segmen-

Fig. 1. An overview of deep learning methods on medical image segmentation

labeled data are not required for unsupervised learning, but learning becomes more difficult. Weakly supervised learning lies between supervised and unsupervised learning, since it requires only a small portion of the data to be labeled while most of the data remain unlabeled.

Prior to the widespread application of deep learning, researchers had presented many model-driven approaches to medical image segmentation. Masood et al. [14] made a comprehensive summary of many model-driven techniques in medical image analysis, including image clustering, region growing, and random forests. In [14], the authors summarized different segmentation approaches for medical images according to their underlying mathematical models. Recently, only a few studies based on model-driven techniques have been reported, while more and more data-driven studies of medical image segmentation have appeared. In this paper, we mainly focus on the evolution and development of deep learning models for medical image segmentation.

In [15], Shen et al. presented a dedicated review of the application of deep learning in medical image analysis. This review summarizes the progress of machine learning and deep learning in medical image registration, anatomy and cell structure detection, tissue segmentation, computer-aided disease diagnosis, and prognosis. Litjens et al. [16] reported a survey of deep learning methods that covers the use of deep learning in image classification, object detection, segmentation, registration, and other tasks.

More recently, Taghanaki et al. [17] discussed the devel-

opment of semantic and medical image segmentation; they

categorized deep learning-based image segmentation solutions

into six groups, i.e., deep architectural, data synthesis-based,

loss function-based, sequenced models, weakly supervised,

and multi-task methods. To develop a more complete survey

on medical

image segmentation, Seo et al. [18] reviewed

classical machine learning algorithms such as Markov ran-

[Fig. 1 diagram: Survey → Supervised Learning (Backbone Network, Network Block, Loss Function); Weakly Supervised Learning (Data Augmentation, Transfer Learning, Interactive Segmentation); Others (Neural Architecture Search, Graph Convolution, Shape Attentive, Multi-modality Fusion).]

end-to-end architectures, such as the fully convolutional network (FCN) [20], U-Net [7], and Deeplab [21]. In these structures, an encoder extracts image features, while a decoder restores the extracted features to the original image size and outputs the final segmentation result. Although the end-to-end structure is pragmatic for medical image segmentation, it reduces the interpretability of models.

The first high-impact encoder-decoder structure, the U-Net proposed by Ronneberger et al. [7], has been widely used for medical image segmentation. Fig. 3 shows the U-Net architecture.

U-Net: The U-Net addresses the problems that general CNNs face in medical image segmentation by adopting a symmetric encoder-decoder structure with skip connections. Unlike natural images, medical images usually contain noise and show blurred boundaries. Therefore, it is very difficult to detect or recognize objects in medical images by relying only on low-level image features. Meanwhile, it is also impossible to obtain accurate boundaries by relying only on high-level semantic features, because these lack image detail. The U-Net effectively fuses low-level and high-level image features by combining high-resolution encoder feature maps with up-sampled low-resolution decoder feature maps through skip connections, which makes it well suited to medical image segmentation tasks. Currently, the U-Net has become the benchmark for most medical image segmentation tasks and has inspired many meaningful improvements.
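The copy-and-crop skip connections in Fig. 3 only work if encoder and decoder feature maps are cropped to matching sizes. The sketch below traces the spatial size of a square input tile through a four-level U-Net, assuming the unpadded 3 × 3 convolutions, 2 × 2 max pooling, and 2 × 2 up-convolutions of the original design [7]; `unet_feature_sizes` is a hypothetical helper, not part of any library.

```python
def unet_feature_sizes(input_size, depth=4):
    """Trace a square tile through a 2D U-Net with unpadded 3x3 convolutions.

    Returns the output size and, for each decoder stage, the pair
    (encoder feature size, size it is center-cropped to) used by the skip connection.
    """
    size = input_size
    skips = []
    for _ in range(depth):
        size -= 4  # two unpadded 3x3 convolutions each trim 1 pixel per border
        if size <= 0 or size % 2:
            raise ValueError("tile size incompatible with 2x2 max pooling")
        skips.append(size)  # encoder feature map reused by the skip connection
        size //= 2  # 2x2 max pooling halves the spatial size
    size -= 4  # two convolutions at the bottleneck
    if size <= 0:
        raise ValueError("tile too small for this depth")
    crops = []
    for skip in reversed(skips):
        size *= 2  # 2x2 up-convolution doubles the spatial size
        crops.append((skip, size))  # encoder map is center-cropped to match
        size -= 4  # two unpadded 3x3 convolutions after concatenation
    return size, crops


out, crops = unet_feature_sizes(572)
print(out)    # 388
print(crops)  # [(64, 56), (136, 104), (280, 200), (568, 392)]
```

With the 572 × 572 input tile used in [7], the output map is 388 × 388. Many later implementations use padded convolutions instead, in which case the output size equals the input size (for inputs divisible by 16) and no cropping is needed.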

tation. This organization is expected to help researchers find innovations that improve the accuracy of medical image segmentation.

3. In addition to comprehensively reviewing the development and application of deep learning in medical image segmentation, we also collect the commonly used public medical image segmentation datasets. Finally, we discuss future research trends and directions in this field.

The rest of this paper is organized as follows. In Section II, we review the development and evolution of supervised learning applied to medical images, including the selection of backbone networks, the design of network blocks, and the improvement of loss functions. In Section III, we introduce the application of unsupervised or weakly supervised methods in medical image segmentation and analyze the commonly used unsupervised or weakly supervised strategies for processing few-shot or class-imbalanced data. In Section IV, we briefly introduce some of the most advanced methods of medical image segmentation, including NAS, applications of GCN, and multi-modality data fusion. In Section V, we collect the currently available public medical image segmentation datasets and summarize the limitations of current deep learning methods and future research directions.

II. SUPERVISED LEARNING

For medical image segmentation tasks, supervised learning is the most popular method, since these tasks usually require high accuracy. In this section, we focus on reviewing improvements to neural network architectures. These improvements mainly include network backbones, network blocks, and the design of loss functions. Fig. 2 shows an overview of the improvement of network architectures based on supervised learning.

Fig. 3. The U-Net architecture [7].

3D Net: In practice, as most medical data, such as CT and MRI images, exist in the form of 3D volumes, the use of 3D convolution kernels can better mine the high-dimensional spatial correlation of the data. Motivated by this idea, Çiçek et al. [22] extended the U-Net architecture to 3D data and proposed the 3D U-Net, which processes 3D medical data directly. Due to limited computational resources, the 3D U-Net includes only three down-sampling operations, so it cannot effectively extract deep-layer image features, which limits its segmentation accuracy for medical images. In addition,

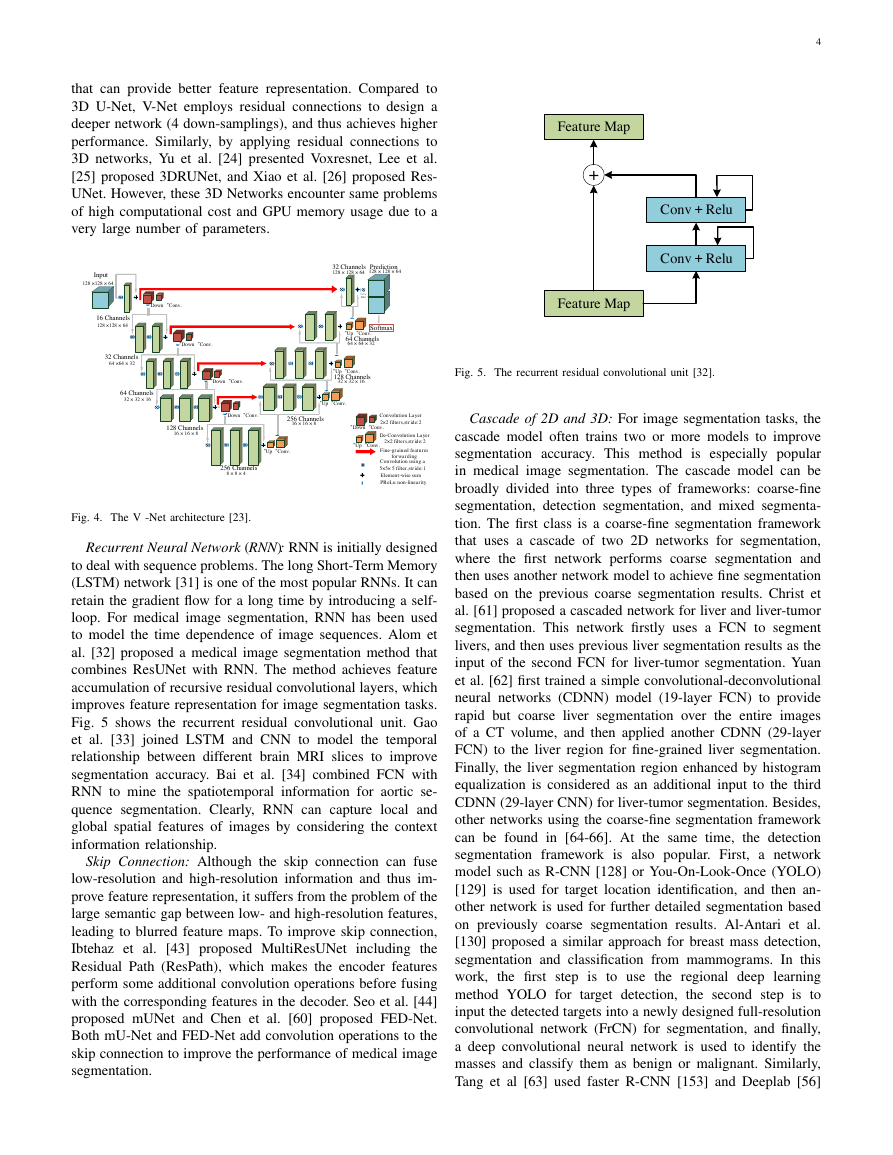

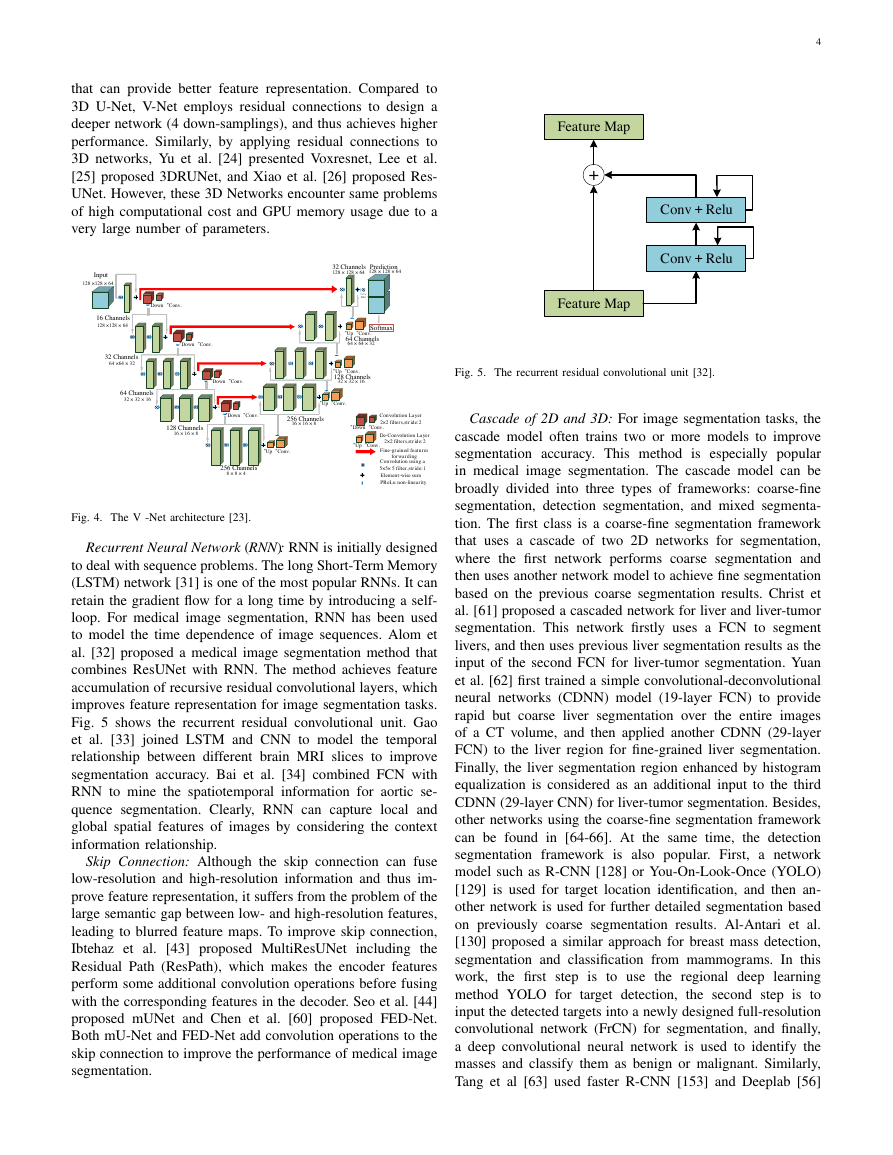

Milletari et al. [23] proposed a similar architecture, V-Net, as shown in Fig. 4. It is well known that residual connections alleviate the vanishing gradient problem and accelerate network convergence, which makes it easier to design deeper network structures

Fig. 2. An overview of network architectures based on supervised learning.

A. Backbone Networks

Image semantic segmentation aims to classify every pixel of an image. To this end, researchers proposed the encoder-decoder structure, which is one of the most popular

[Fig. 2 diagram: Supervised Learning → Backbone Network (U-Net, 3D Net, Recurrent Neural Network, Skip Connection, Cascade 2D and 3D); Network Block (Dense Connection, Inception, Depth Separate; Attention: Local Spatial, Channel, Mixture, and Non-local Attention; Multiscale: Pyramid Pooling, Atrous Spatial Pyramid Pooling, Non-Local and ASPP); Loss Function (Cross Entropy, Weighted Cross Entropy, Dice, Generalized Dice, Tversky, Boundary, and Exponential Logarithmic Loss).]
[Fig. 3 legend: conv 3×3, ReLU; copy and crop; max pool 2×2; up-conv 2×2; conv 1×1; input image tile → output segmentation map; channels 1 → 64 → 128 → 256 → 512 → 1024 and back to 64, with 2 output channels.]

that can provide better feature representation. Compared to the 3D U-Net, V-Net uses residual connections to build a deeper network (four down-sampling operations) and thus achieves higher performance. Similarly, by applying residual connections to 3D networks, Yu et al. [24] presented VoxResNet, Lee et al. [25] proposed 3DRUNet, and Xiao et al. [26] proposed Res-UNet. However, these 3D networks all suffer from high computational cost and GPU memory usage due to their very large number of parameters.
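To make the cost argument concrete, the arithmetic below compares a 2D and a 3D convolution layer and the memory of a single feature map. The sizes (64 channels, 3 × 3 kernels, and a 128 × 128 × 64 input with 16 channels, as in the first stage of the V-Net figure) are illustrative assumptions; real memory usage is much larger, because the activations of every layer and of every sample in a batch must be stored for back-propagation.

```python
def conv_params(kernel, c_in, c_out, dims):
    """Weights of one convolution layer (bias omitted): kernel^dims * c_in * c_out."""
    return kernel ** dims * c_in * c_out


def feature_map_mib(spatial, channels, bytes_per_value=4):
    """Memory of one float32 feature map for a single sample, in MiB."""
    count = channels
    for s in spatial:
        count *= s
    return count * bytes_per_value / 2 ** 20


print(conv_params(3, 64, 64, dims=2))       # 36864
print(conv_params(3, 64, 64, dims=3))       # 110592
print(feature_map_mib((128, 128), 16))      # 1.0
print(feature_map_mib((128, 128, 64), 16))  # 64.0
```

Moving from 3 × 3 to 3 × 3 × 3 kernels triples the weights per layer, while each feature map grows with the number of slices, which is why 3D networks quickly exhaust GPU memory.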


Fig. 5. The recurrent residual convolutional unit [32].

Cascade of 2D and 3D: For image segmentation tasks, cascade models train two or more networks to improve segmentation accuracy. This approach is especially popular in medical image segmentation. Cascade models can be broadly divided into three types of frameworks: coarse-fine segmentation, detection-segmentation, and mixed segmentation. The first type is the coarse-fine segmentation framework, which cascades two 2D networks: the first network performs coarse segmentation, and a second network then performs fine segmentation based on the coarse result. Christ et al. [61] proposed a cascaded network for liver and liver-tumor segmentation. This network first uses an FCN to segment the liver and then feeds the liver segmentation result into a second FCN for liver-tumor segmentation. Yuan et al. [62] first trained a simple convolutional-deconvolutional neural network (CDNN) model (a 19-layer FCN) to provide rapid but coarse liver segmentation over the entire images of a CT volume, and then applied another CDNN (a 29-layer FCN) to the liver region for fine-grained liver segmentation. Finally, the segmented liver region, enhanced by histogram equalization, is used as an additional input to a third CDNN (a 29-layer CNN) for liver-tumor segmentation. Other networks using the coarse-fine segmentation framework

can be found in [64-66]. The detection-segmentation framework is also popular. First, a network model such as R-CNN [128] or You Only Look Once (YOLO) [129] is used to locate the target, and then another network performs detailed segmentation within the detected region. Al-Antari et al. [130] proposed a similar approach for breast mass detection, segmentation, and classification from mammograms. In this work, the first step uses the regional deep learning method YOLO for target detection, the second step feeds the detected targets into a newly designed full-resolution convolutional network (FrCN) for segmentation, and finally a deep convolutional neural network identifies the masses and classifies them as benign or malignant. Similarly, Tang et al. [63] used Faster R-CNN [153] and Deeplab [56]

Fig. 4. The V-Net architecture [23].

Recurrent Neural Network (RNN): RNNs were initially designed to deal with sequence problems. The Long Short-Term Memory (LSTM) network [31] is one of the most popular RNNs; it can retain the gradient flow over long time spans by introducing a self-loop. For medical image segmentation, RNNs have been used to model the dependence between images in a sequence. Alom et al. [32] proposed a medical image segmentation method that combines ResUNet with an RNN. The method accumulates features through recurrent residual convolutional layers, which improves feature representation for image segmentation tasks. Fig. 5 shows the recurrent residual convolutional unit. Gao et al. [33] combined LSTM and CNN to model the temporal relationship between different brain MRI slices to improve segmentation accuracy. Bai et al. [34] combined FCN with RNN to mine spatiotemporal information for aortic sequence segmentation. By modeling contextual relationships in this way, RNNs can capture both local and global spatial features of images.
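The recurrent residual convolutional unit of Fig. 5 can be illustrated with a toy one-dimensional sketch. Following the general idea of recurrent convolutional layers, the same feed-forward input is combined with a convolution of the previous state for a fixed number of steps, and a residual connection adds the unit's input to its output. The kernels, the 1D setting, the single recurrent layer, and the absence of batch normalization are simplifying assumptions; [32] uses 2D convolutions inside a U-Net-like network.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding that preserves length (odd-sized kernel)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            k = i + j - half
            if 0 <= k < len(signal):
                acc += w * signal[k]
        out.append(acc)
    return out


def relu(values):
    return [max(0.0, v) for v in values]


def recurrent_residual_unit(x, w_forward, w_recurrent, steps=2):
    """Toy unit: h(t) = ReLU(w_f * x + w_r * h(t-1)) for `steps` updates; output = x + h(T)."""
    feed = conv1d_same(x, w_forward)  # feed-forward term, reused at every step
    h = relu(feed)  # h(0): no recurrent input yet
    for _ in range(steps):
        rec = conv1d_same(h, w_recurrent)
        h = relu([f + r for f, r in zip(feed, rec)])
    return [a + b for a, b in zip(x, h)]  # residual connection around the recurrent layer


print(recurrent_residual_unit([1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [0.0, 0.5, 0.0]))
# [2.75, 5.5, 8.25]
```

Each extra recurrent step reuses the same weights, so features are accumulated over a larger effective context without adding parameters.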

Skip Connection: Although the skip connection can fuse low-resolution and high-resolution information and thus improve feature representation, it suffers from the large semantic gap between low- and high-resolution features, which can lead to blurred feature maps. To improve the skip connection, Ibtehaz et al. [43] proposed MultiResUNet, which includes a Residual Path (ResPath) that passes the encoder features through additional convolution operations before fusing them with the corresponding decoder features. Seo et al. [44] proposed mU-Net and Chen et al. [60] proposed FED-Net. Both mU-Net and FED-Net add convolution operations to the skip connection to improve the performance of medical image segmentation.

[Fig. 4 legend: input 128×128×64; stages with 16, 32, 64, 128, and 256 channels at 128×128×64, 64×64×32, 32×32×16, 16×16×8, and 8×8×4, mirrored in the decoder; "down" convolution layers with 2×2 filters, stride 2; "up" de-convolution layers with 2×2 filters, stride 2; convolutions with 5×5×5 filters, stride 1; fine-grained feature forwarding; element-wise sum; PReLU non-linearity; 1×1×1 convolution and softmax giving a 128×128×64 prediction.]
[Fig. 5 legend: feature map; Conv+ReLU; Conv+ReLU; element-wise addition; feature map.]

cascades for liver localization and segmentation. In addition, Salehi et al. [131] and Yan et al. [132] proposed cascade networks for whole-brain MRI segmentation and high-resolution mammogram segmentation, respectively. By using the posterior probabilities generated by the first network as context, this kind of cascade network can extract richer multi-scale context information than ordinary cascade networks.
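The coarse-fine framework described above can be sketched as follows: a coarse model segments the whole image, a bounding box (optionally enlarged by a margin) is computed around its output, and a fine model segments only the cropped region, whose prediction is pasted back at full resolution. The two threshold "models" in the usage example are hypothetical stand-ins for trained networks, and images are plain nested lists to keep the sketch dependency-free.

```python
def bounding_box(mask, margin=0):
    """Inclusive (r0, r1, c0, c1) box around the nonzero pixels of a 2D mask, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None
    return (max(rows[0] - margin, 0), min(rows[-1] + margin, len(mask) - 1),
            max(cols[0] - margin, 0), min(cols[-1] + margin, len(mask[0]) - 1))


def coarse_to_fine(image, coarse_model, fine_model, margin=1):
    """Run a coarse model on the full image, then a fine model only inside the detected box."""
    height, width = len(image), len(image[0])
    result = [[0] * width for _ in range(height)]
    box = bounding_box(coarse_model(image), margin)
    if box is None:  # the coarse stage found nothing: return an empty mask
        return result
    r0, r1, c0, c1 = box
    roi = [row[c0:c1 + 1] for row in image[r0:r1 + 1]]  # crop the region of interest
    for i, row in enumerate(fine_model(roi)):  # paste the fine prediction back
        result[r0 + i][c0:c1 + 1] = row
    return result


# Hypothetical stand-ins for trained networks.
def threshold_coarse(img):
    return [[1 if v > 0 else 0 for v in row] for row in img]


def threshold_fine(img):
    return [[1 if v > 5 else 0 for v in row] for row in img]


image = [[0, 0, 0, 0], [0, 3, 7, 0], [0, 8, 2, 0], [0, 0, 0, 0]]
print(coarse_to_fine(image, threshold_coarse, threshold_fine, margin=0))
# [[0, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
```

The margin trades a little extra computation for robustness to coarse masks that cut off the object boundary.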

However, most medical images are 3D volumes, yet a 2D convolutional neural network cannot learn information along the third dimension, and a 3D convolutional neural network often requires high computational cost and severe GPU memory consumption. Therefore, some pseudo-3D segmentation methods have been proposed. Oda et al. [126] proposed a three-plane method that cascades three networks to segment the abdominal artery region effectively from medical CT volumes. Vu et al. [127] used a stack of adjacent slices as the input for predicting the central slice and then fed the resulting 2D feature maps into a standard 2D network for model training. Although these pseudo-3D approaches can segment objects from 3D volume data, they achieve only limited accuracy improvement because they exploit only local inter-slice information. Compared to pseudo-3D networks, hybrid cascades of 2D and 3D networks are more popular.
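A minimal sketch of the adjacent-slice input used by pseudo-3D methods such as [127]: each 2D training sample stacks the 2k + 1 slices centred on slice z as channels and is paired with the label of slice z only. Clamping indices at the volume boundary is an assumed padding choice, and the helper names are hypothetical.

```python
def stack_adjacent_slices(volume, index, k=1):
    """Return slices index-k .. index+k of a volume (list of 2D slices) as 2k+1 channels.

    Indices outside the volume are clamped to the first or last slice (assumed padding).
    """
    depth = len(volume)
    if not 0 <= index < depth:
        raise IndexError("slice index out of range")
    return [volume[min(max(i, 0), depth - 1)] for i in range(index - k, index + k + 1)]


def pseudo_3d_samples(volume, labels, k=1):
    """Pair each multi-slice input with the label of its central slice only."""
    return [(stack_adjacent_slices(volume, z, k), labels[z]) for z in range(len(volume))]


volume = [[[0]], [[1]], [[2]]]  # three 1x1 slices
print(stack_adjacent_slices(volume, 0))  # [[[0]], [[0]], [[1]]]
```

Because only 2k + 1 neighbouring slices enter each prediction, the network sees local inter-slice context, which is the limitation noted above.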

Li et al. [67] proposed a hybrid densely connected U-Net (H-DenseUNet) for liver and liver-tumor segmentation. This method first employs a simple ResNet to obtain a rough liver segmentation, then uses a 2D DenseUNet to extract 2D image features effectively and a 3D DenseUNet to extract 3D image features, and finally designs a hybrid feature fusion layer to jointly optimize the 2D and 3D features. Although H-DenseUNet is less complex than an entire 3D network, it remains complex and still has a large number of parameters from its 3D convolutions. To address this problem, Zhang et al. [37] proposed a lightweight hybrid convolutional network (LW-HCN) with a structure similar to H-DenseUNet, but LW-HCN requires fewer parameters and lower computational cost owing to the design of the depthwise and spatiotemporal separate (DSTS) block and the use of 3D depthwise separable convolution. Similarly, Dey et al. [68] designed a cascade of 2D and 3D networks for liver and liver-tumor segmentation.

Among the three types of cascade networks mentioned above, the hybrid 2D and 3D cascade network can effectively improve segmentation accuracy while reducing the learning burden.

In contrast to the above cascade networks, Valanarasu et al. [133] proposed an overcomplete cascade network, KiU-Net, for brain anatomy segmentation. The performance of the vanilla U-Net degrades greatly when detecting small anatomical structures with fuzzy, noisy boundaries. To overcome this problem, the authors designed a novel overcomplete architecture, Ki-Net, in which the spatial size of the intermediate layers is larger than that of the input data; this is achieved by using an up-sampling layer after each convolution layer in the encoder. Thus, Ki-Net captures edges better than U-Net, and it is finally cascaded with the vanilla U-Net to improve the overall segmentation accuracy. Since KiU-Net can exploit both the low-level fine edge feature maps from Ki-Net and the high-level shape feature maps from U-Net, it not only improves segmentation accuracy but also achieves fast convergence for small anatomical landmarks and blurred, noisy boundaries.

Others: Generative adversarial networks (GANs) [79] have been widely used in many areas of computer vision. In their early days, GANs were often used for data augmentation by generating new samples, which is reviewed in Section III, but researchers later discovered that the idea of adversarial training could be applied in almost any field, and it was therefore also used for image segmentation. Since medical images usually show low contrast and blurred boundaries between different tissues or between tissues and lesions, and since labeled medical image data are scarce, U-Net-based segmentation methods that use pixel-wise losses to learn local and global relationships between pixels are not sufficient for medical image segmentation, and the use of generative adversarial networks is becoming a popular way to improve image segmentation.

Luc et al. [121] first applied the generative adversarial network to image segmentation, where the generative network serves as the segmentation model and the adversarial network is trained as a classifier. Singh et al. [122] proposed a conditional generative adversarial network (cGAN) to segment breast tumors within a region of interest (ROI) in mammograms. The generative network learns to identify tumor regions and generates segmentation results, while the adversarial network learns to distinguish ground-truth masks from the segmentation results of the generative network, thereby forcing the generative network to produce label maps that are as realistic as possible. The cGAN works well when the number of training samples is limited. Conze et al. [123] used cascaded pretrained convolutional encoder-decoders as cGAN generators for abdominal multi-organ segmentation, with the adversarial network acting as a discriminator that forces the model to create realistic organ delineations.
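The adversarial segmentation objective can be sketched with scalar probabilities: the segmenter minimizes a pixel-wise loss plus a weighted term that rewards fooling the discriminator, while the discriminator learns to separate ground-truth masks from predicted ones. This is a generic, non-saturating formulation assumed for illustration; the weight `lam`, the binary cross-entropy choice, and the function names are our assumptions and need not match the exact objectives of [121]-[123].

```python
import math


def bce(p, target, eps=1e-7):
    """Binary cross-entropy for one probability, clipped away from 0 and 1 for stability."""
    p = min(max(p, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def segmentation_generator_loss(pred_probs, labels, disc_on_pred, lam=0.1):
    """Pixel-wise BCE of the segmenter plus an adversarial term.

    `disc_on_pred` is the discriminator's probability that the predicted mask is real;
    the adversarial term is small when the discriminator is fooled.
    """
    pixel = sum(bce(p, y) for p, y in zip(pred_probs, labels)) / len(labels)
    adversarial = bce(disc_on_pred, 1.0)
    return pixel + lam * adversarial


def discriminator_loss(disc_on_truth, disc_on_pred):
    """The discriminator learns to output 1 for ground-truth masks and 0 for predictions."""
    return bce(disc_on_truth, 1.0) + bce(disc_on_pred, 0.0)


print(round(discriminator_loss(0.5, 0.5), 4))  # 1.3863
```

In training, the two losses are minimized alternately: one step updates the discriminator, the next updates the segmentation network with the discriminator held fixed.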

In addition, incorporating prior knowledge about organ shape and position may be crucial for improving medical image segmentation, especially when images are corrupted and thus contain artefacts due to the limitations of imaging techniques. However, there are few works on how to incorporate prior knowledge into CNN models. As one of the earliest studies in this field, Oktay et al. [124] proposed a novel and general method that incorporates prior knowledge of shape and label structure into anatomically constrained neural networks (ACNN) for medical image analysis tasks. In this way, the training process of the neural network can be constrained and guided to make anatomically meaningful predictions, especially when the input image data are not sufficiently informative or consistent (e.g., missing object boundaries). Similarly, Boutillon et al. [125] incorporated anatomical priors into a conditional adversarial framework for scapula bone segmentation, combining shape priors with conditional neural networks to encourage models to follow global anatomical properties in terms of shape and position, and to make segmentation results as accurate as possible. These studies show that the improved models can provide higher segmentation accuracy and are more robust, since prior


2) Inception: For CNNs, deep networks often perform better than shallow ones, but they encounter new problems such as vanishing gradients, difficulty in network convergence, and large memory requirements. The inception structure alleviates these problems: it improves performance by merging convolution kernels in parallel without increasing the depth of the network. This structure can extract richer image features using multi-scale convolution kernels and fuse them to obtain a better feature representation. Inspired by GoogLeNet [29-30], Gu et al. [28] proposed CE-Net by introducing the inception structure into medical image segmentation. CE-Net adds atrous convolution to each parallel branch to extract features over a wide receptive field and adds 1 × 1 convolutions on the feature maps. Fig. 8 shows the inception architecture. However, the inception structure is complex, which makes the model difficult to modify.
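The benefit of atrous convolution in CE-Net can be quantified by its receptive field. A 3 × 3 kernel with dilation rate r spans 3 + 2(r - 1) pixels, and stacked stride-1 convolutions add their spans minus one. The branch layout below is our reading of the rates 1, 3, and 5 labelled in Fig. 8 and should be treated as an assumption.

```python
def effective_kernel(kernel, rate):
    """Span of a dilated (atrous) kernel: k + (k - 1)(r - 1)."""
    return kernel + (kernel - 1) * (rate - 1)


def receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions given as (kernel, rate) pairs."""
    rf = 1
    for kernel, rate in layers:
        rf += effective_kernel(kernel, rate) - 1
    return rf


# Assumed branch layout: 3x3 atrous convolutions, some followed by a 1x1 convolution.
branches = [
    [(3, 1)],
    [(3, 3), (1, 1)],
    [(3, 1), (3, 3), (1, 1)],
    [(3, 1), (3, 3), (3, 5), (1, 1)],
]
print([receptive_field(b) for b in branches])  # [3, 7, 9, 19]
```

Under this layout, the four parallel branches see 3, 7, 9, and 19 pixels, so the block mixes small and large contexts without any down-sampling.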

knowledge constraints are employed in the training process of

neural networks.
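A minimal sketch of a shape-prior regularizer in the spirit of ACNN [124]: both the predicted and the ground-truth masks are mapped into a low-dimensional shape code, and the squared distance between the codes is added to the pixel-wise loss. In ACNN this encoder is learned (as part of an autoencoder trained on label maps); here `toy_shape_encoder` is a hypothetical hand-crafted stand-in (row and column occupancy), and the weight `lam` is an arbitrary assumption.

```python
def toy_shape_encoder(mask):
    """Hypothetical stand-in for a learned shape encoder: row and column occupancy profiles."""
    rows = [sum(row) for row in mask]
    cols = [sum(col) for col in zip(*mask)]
    return rows + cols


def shape_code_distance(pred_mask, true_mask, encoder=toy_shape_encoder):
    """Squared Euclidean distance between the shape codes of two masks."""
    return sum((a - b) ** 2 for a, b in zip(encoder(pred_mask), encoder(true_mask)))


def anatomically_constrained_loss(pixel_loss, pred_mask, true_mask, lam=0.01):
    """Pixel-wise loss plus a weighted shape-code penalty (ACNN-style regularizer, sketch only)."""
    return pixel_loss + lam * shape_code_distance(pred_mask, true_mask)


truth = [[1, 1], [0, 0]]
pred = [[1, 0], [0, 0]]
print(shape_code_distance(pred, truth))  # 2
```

The penalty compares global shape descriptors rather than individual pixels, which is what lets a prior constrain predictions where local evidence such as a boundary is missing.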

B. Network Function Block

1) Dense Connection: Dense connection is often used to construct a special kind of convolutional neural network. In a densely connected network, the input of each layer comes from the outputs of all previous layers during forward propagation. Inspired by dense connection, Guan et al. [27] proposed an improved U-Net by replacing each sub-block of U-Net with densely connected layers, as shown in Fig. 6. Although dense connection helps obtain richer image features, it often reduces the robustness of feature representation to a certain extent and increases the number of parameters.
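The growth in parameters mentioned above follows from simple channel bookkeeping. Assuming DenseNet-style layers that each add a fixed number of channels (the growth rate), layer l of a block receives the block input plus the outputs of all l - 1 earlier layers. The channel counts, the 3 × 3 kernel, and the helper names below are illustrative assumptions.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Input channels seen by each layer of a dense block, and the block's output channels."""
    inputs = [in_channels + i * growth_rate for i in range(num_layers)]
    out_channels = in_channels + num_layers * growth_rate  # concatenation of everything
    return inputs, out_channels


def dense_block_params(in_channels, growth_rate, num_layers, kernel=3):
    """Convolution weights of the block (bias and normalization omitted)."""
    inputs, _ = dense_block_channels(in_channels, growth_rate, num_layers)
    return sum(kernel * kernel * c * growth_rate for c in inputs)


print(dense_block_channels(64, 32, 4))  # ([64, 96, 128, 160], 192)
print(dense_block_params(64, 32, 4))    # 129024
```

Because each layer's input width grows linearly with its position in the block, the total weight count grows roughly quadratically with block depth.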

Zhou et al. [41] connected U-Nets of depths one to four together, as shown in Fig. 7. The advantage of this structure is that it allows the network to automatically learn the importance of features at different layers. Besides, the skip connections are redesigned so that features with different semantic scales can be aggregated in the decoder, resulting in a highly flexible feature fusion scheme. The disadvantage is that the number of parameters increases due to the use of dense connections. Therefore, a pruning method is integrated into model optimization to reduce the number of parameters. Meanwhile, deep supervision [42] is also employed to compensate for the loss of segmentation accuracy caused by pruning.
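The nested skip pathways of Fig. 7 can be written down explicitly. Following the UNet++ formulation, node X^{i,j} with j = 0 receives the down-sampled output of X^{i-1,0}, while a node with j > 0 concatenates all earlier nodes X^{i,0}, ..., X^{i,j-1} on the same level with the up-sampled output of X^{i+1,j-1}. The sketch enumerates these inputs for depth 4, which yields the 15 nodes from X^{0,0} to X^{4,0} shown in the figure; the tuple encoding is our own.

```python
def unetpp_node_inputs(depth=4):
    """Map each UNet++ node (i, j) to the nodes whose outputs it consumes.

    i is the down-sampling level and j the position along the nested skip pathway;
    nodes exist for i + j <= depth.
    """
    inputs = {}
    for i in range(depth + 1):
        for j in range(depth + 1 - i):
            if j == 0:
                # backbone encoder node; X^{0,0} takes the input image
                inputs[(i, j)] = [] if i == 0 else [("down", (i - 1, 0))]
            else:
                # all earlier nodes on the same level, plus the up-sampled node one level below
                same_level = [("skip", (i, k)) for k in range(j)]
                inputs[(i, j)] = same_level + [("up", (i + 1, j - 1))]
    return inputs


nodes = unetpp_node_inputs(4)
deep_supervision_heads = [(0, j) for j in range(1, 5)]  # top-level outputs feeding the total loss
print(len(nodes))     # 15
print(nodes[(0, 2)])  # [('skip', (0, 0)), ('skip', (0, 1)), ('up', (1, 1))]
```

Because every top-level node X^{0,j} produces its own prediction, the network can be pruned at inference time to a shallower sub-network, which is how deep supervision and pruning work together.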

Fig. 6. Dense connection architecture [27].

Fig. 8. The inception architecture [28].

3) Depth Separability: To improve the generalization capability of network models and reduce memory requirements, many researchers study lightweight network models for complex 3D medical volume data. By extending depthwise separable convolution to the design of 3D networks, Lei et al. [35] proposed a lightweight V-Net (LV-Net) that requires fewer operations than V-Net for liver segmentation. Generally, depthwise separable convolution decomposes a standard convolution into a channel-wise (depthwise) convolution and a point-wise convolution [36]. The number of operations of a vanilla convolution (per output position) is usually $D_K \times D_K \times M \times N$, where $M$ is the number of input feature map channels, $N$ is the number of output feature map channels, and $D_K$ is the size of the convolution kernel. In contrast, the channel-wise convolution requires $D_K \times D_K \times 1 \times M$ operations and the point-wise convolution requires $1 \times 1 \times M \times N$. Compared to vanilla convolution, the computational cost of depthwise separable convolution is therefore

$$\frac{D_K \times D_K \times M + M \times N}{D_K \times D_K \times M \times N} = \frac{1}{N} + \frac{1}{D_K^2}$$

times that of the vanilla convolution. Besides, Zhang et al. [37] and Huang et al. [38] also applied depthwise separable convolutions to the segmentation of

Fig. 7. The U-Net++ architecture [41].

1×1 conv+BN+Relu3×3 conv+BN+ReluConcatenation connectionX0,0X0,1X0,2X0,3X0,4X1,0X1,1X1,2X1,3X2,0X2,1X2,2X3,0X3,1X4,0Total LossDown-samplingUp-samplingSkip connectionXi,jConvolutionFeature MapFeature Map3×3 ConvRate = 1Channel = 5121×1 ConvRate = 1Channel = 5123×3 ConvRate = 3Channel = 5121×1 ConvRate = 1Channel = 5123×3 ConvRate = 3Channel = 5123×3 ConvRate = 1Channel = 5121×1 ConvRate = 1Channel = 5123×3 ConvRate = 5Channel = 5123×3 ConvRate = 3Channel = 5123×3 ConvRate = 1Channel = 512�

3D medical volume data. Other related works for lightweight

deep networks can be found in [39-40].
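The cost comparison above can be checked numerically. The sketch below counts multiplications per output position, so the spatial size of the output feature map cancels in the ratio. The `dims` parameter is our own addition so that the 3D case relevant to LV-Net can be compared as well; for a 3D kernel the ratio becomes $1/N + 1/D_K^3$.

```python
def standard_conv_cost(dk: int, m: int, n: int, dims: int = 2) -> int:
    """Multiplications per output position of a vanilla convolution (dims = 2 or 3)."""
    return dk ** dims * m * n


def separable_conv_cost(dk: int, m: int, n: int, dims: int = 2) -> int:
    """Depthwise (one D_K^dims filter per input channel) plus pointwise 1 x 1 cost."""
    depthwise = dk ** dims * m   # D_K x D_K (x D_K) x 1 x M
    pointwise = m * n            # 1 x 1 x M x N
    return depthwise + pointwise


for dims in (2, 3):
    dk, m, n = 3, 64, 128
    ratio = separable_conv_cost(dk, m, n, dims) / standard_conv_cost(dk, m, n, dims)
    # The measured ratio matches 1/N + 1/D_K^dims.
    print(dims, round(ratio, 4), round(1 / n + 1 / dk ** dims, 4))
```

For a 3 × 3 kernel with 64 input and 128 output channels, the separable version needs roughly 12% of the multiplications of a vanilla convolution.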

4) Attention Mechanism: For neural networks, an attention block can selectively modify its input or assign different weights to input variables according to their importance. In recent years, most research combining deep learning and visual attention mechanisms has focused on using masks to form attention mechanisms. The principle of masks is to design a new layer that learns, through training, to identify key features in an image, so that the network focuses only on regions of interest.

Local Spatial Attention: The spatial attention block aims to calculate the importance of the features at each pixel in the spatial domain and to extract the key information of an image. Jaderberg et al. [45] proposed an early spatial transformer network (ST-Net) for image classification, which uses spatial attention to transform the spatial information of an original image into another space while retaining the key information. Ordinary pooling merges information and can easily lose key information. To address this problem, a block called the spatial transformer is designed to extract the key information of images by performing a spatial transformation. Inspired by this, Oktay et al. [46] proposed the attention U-Net. This improved U-Net uses an attention block to reweight the output of the encoder before fusing the features from the encoder and the corresponding decoder. The attention block uses a gating signal from the coarser scale to control the importance of features at different spatial positions. Fig. 9 shows the architecture. This block combines ReLU and sigmoid functions with 1 × 1 convolutions to generate a weight map, which corrects the encoder features through multiplication.

Fig. 9. The attention block in the attention U-Net [46].
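A NumPy sketch of an additive attention gate in the spirit of Fig. 9 is given below. As simplifying assumptions, the gating signal g is taken to be already resampled to the spatial size of the encoder features x (the original block handles this with its resampler), 1 × 1 convolutions are written as channel-mixing matrix products, and all names are hypothetical.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1 x 1 convolution of a (C_in, H, W) tensor with weights (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def sigmoid(a: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-a))


def attention_gate(x, g, w_x, w_g, b_g, w_psi, b_psi):
    """Additive attention gate applied to an encoder skip connection.

    x: encoder features, shape (C_x, H, W)
    g: gating signal from the coarser level, assumed already resampled to (C_g, H, W)
    Returns the gated features and the attention map of shape (1, H, W).
    """
    # Project x and g to a shared intermediate space, add them, apply ReLU.
    q = np.maximum(conv1x1(x, w_x, np.zeros(w_x.shape[0])) + conv1x1(g, w_g, b_g), 0.0)
    # A 1 x 1 convolution down to one channel plus a sigmoid gives per-pixel weights.
    alpha = sigmoid(conv1x1(q, w_psi, b_psi))
    # Broadcasting scales every channel of x by the same per-pixel weight.
    return alpha * x, alpha


rng = np.random.default_rng(0)
c_x, c_g, c_int, height, width = 8, 16, 4, 5, 5
x = rng.standard_normal((c_x, height, width))
g = rng.standard_normal((c_g, height, width))
gated, alpha = attention_gate(
    x, g,
    w_x=0.5 * rng.standard_normal((c_int, c_x)),
    w_g=0.5 * rng.standard_normal((c_int, c_g)), b_g=np.zeros(c_int),
    w_psi=0.5 * rng.standard_normal((1, c_int)), b_psi=np.zeros(1),
)
print(gated.shape, alpha.shape)  # (8, 5, 5) (1, 5, 5)
```

Because alpha has a single channel, the gate acts purely spatially: it decides where the encoder features pass through, not which channels do.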

Channel Attention: The channel attention block achieves feature recalibration: it uses learned global information to selectively emphasize useful features and suppress useless ones. Hu et al. [47] proposed SE-Net, which introduced channel attention to the field of image analysis and won the ImageNet Challenge in 2017. This method implements attention weighting on channels in three steps; Fig. 10 shows this architecture. The first is the squeeze operation, in which global average pooling is performed on the input features to obtain a 1 × 1 × C feature map, where C is the number of channels. The second is the excitation operation, in which the channel features interact: the number of channels is first reduced and then restored to the original number. Finally, a sigmoid function generates channel weights in [0, 1], which rescale the original input features by multiplication. Chen et al. [60] proposed FED-Net, which uses the SE block to achieve feature channel attention.
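The three SE steps (squeeze, excitation, scale) map directly onto a few NumPy operations. In this sketch the reduction ratio r is a free hyperparameter (SE-Net uses 16 by default), the two fully connected layers are plain weight matrices without biases, and the function name is our own.

```python
import numpy as np


def se_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation recalibration of a (C, H, W) feature map.

    w1: (C // r, C) weights of the channel-reducing fully connected layer
    w2: (C, C // r) weights of the channel-restoring fully connected layer
    Biases are omitted for brevity.
    """
    # Squeeze: global average pooling -> one descriptor per channel (1 x 1 x C).
    z = x.mean(axis=(1, 2))
    # Excitation: reduce channels, ReLU, restore channels, sigmoid -> weights in [0, 1].
    s = 1.0 / (1.0 + np.exp(-(w2 @ np.maximum(w1 @ z, 0.0))))
    # Scale: multiply every channel of the input by its own weight.
    return x * s[:, None, None]


rng = np.random.default_rng(0)
c, r = 8, 4
x = rng.standard_normal((c, 6, 6))
y = se_block(x, rng.standard_normal((c // r, c)), rng.standard_normal((c, c // r)))
print(y.shape)  # (8, 6, 6)
```

Every pixel of a given channel is multiplied by the same weight, which is exactly the "rough" global treatment that the mixture attention discussed next tries to complement with spatial information.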

Fig. 10. The channel attention in the SE-Net [47].

Mixture Attention: Spatial and channel attention mechanisms are two popular strategies for improving feature representation. However, spatial attention ignores the differences between channels and treats each channel equally. Conversely, channel attention pools global information directly while ignoring the local information in each channel, which is a relatively rough operation. Therefore, to combine the advantages of the two attention mechanisms, researchers have designed many models based on a mixed-domain attention block. Kaul et al. [48] proposed FocusNet, which uses a mixture of spatial attention and channel attention for medical image segmentation: an SE block provides channel attention, and a dedicated branch provides spatial attention. Other related works can be found in [39-40].

To improve the discriminative feature representation of networks, Wang et al. [53] embedded an attention block in the central bottleneck between the contraction path and the expansion path of the U-Net and proposed ScleraSegNet. Furthermore, they compared the performance of channel attention, spatial attention, and different combinations of the two for medical image segmentation. They concluded that channel-centric attention was the most effective in improving segmentation performance. Building on this conclusion, they won the sclera segmentation benchmarking competition (SSBC2019).

Although the attention mechanisms mentioned above improve the final segmentation performance, they rely only on local convolution operations. Such operations focus on the neighborhood covered by the convolution kernel and miss global information. In addition, down-sampling leads to the loss of spatial information, which is especially unfavorable for biomedical image segmentation. A basic solution is to extract long-distance information by stacking multiple layers, but this is inefficient because of the large number of parameters and the high computational cost. In the decoder, up-sampling, whether by deconvolution or interpolation, is also a local operation.

Non-local Attention: Recently, Wang et al. [54] proposed the Non-local U-Net to overcome the drawback of local convolution for medical image segmentation. The Non-local U-Net employs the self-attention mechanism and the global aggregation block to extract full-image information during both up-sampling and down-sampling, which improves the final segmentation accuracy. Fig. 11 shows the global aggregation block. The Non-local block is a general-purpose block that can be easily embedded in different convolutional neural networks to improve their performance.
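The non-local idea can be sketched in NumPy with the embedded Gaussian form of the non-local operation: every position computes a softmax-normalised affinity with every other position, aggregates their features accordingly, and the result is added back to the input. This is a generic illustration with hypothetical weight names, not the exact global aggregation block of the Non-local U-Net [54]; it omits normalization layers and any down-sampling of the key and value paths.

```python
import numpy as np


def softmax(a: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    e = np.exp(a - a.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def non_local_block(x, w_theta, w_phi, w_g, w_out):
    """Embedded-Gaussian non-local block on a (C, H, W) feature map.

    w_theta, w_phi, w_g: (C', C) 1 x 1 projections; w_out: (C, C') output projection.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)                                # (C, N), N = H * W positions
    theta, phi, g = w_theta @ flat, w_phi @ flat, w_g @ flat  # each (C', N)
    # Affinity between every pair of positions, normalised over the keys.
    attn = softmax(theta.T @ phi, axis=-1)                    # (N, N), rows sum to 1
    y = g @ attn.T                                            # (C', N): y_i = sum_j attn[i, j] * g_j
    # Project back to C channels and add the input (residual connection).
    return x + (w_out @ y).reshape(c, h, w)


rng = np.random.default_rng(0)
c, c_inner, height, width = 8, 4, 5, 5
x = rng.standard_normal((c, height, width))
weights = dict(
    w_theta=0.5 * rng.standard_normal((c_inner, c)),
    w_phi=0.5 * rng.standard_normal((c_inner, c)),
    w_g=0.5 * rng.standard_normal((c_inner, c)),
    w_out=0.5 * rng.standard_normal((c, c_inner)),
)
out = non_local_block(x, **weights)

# Perturb a single corner pixel: the output at the opposite corner changes too,
# because every position attends to every other position in one step.
x2 = x.copy()
x2[:, 0, 0] += 1.0
out2 = non_local_block(x2, **weights)
print(np.abs(out2[:, -1, -1] - out[:, -1, -1]).max() > 0)
```

A stack of 3 × 3 convolutions would need several layers before the two corners could influence each other; here a single block suffices, at the cost of an N × N affinity matrix.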

It can be seen that the attention mechanism is effective for improving image segmentation accuracy. In fact, spatial attention looks for interesting target regions, while channel attention looks for interesting features. The mixed attention mechanism can take advantage of both spatial and channel information. However, compared with non-local attention, conventional attention mechanisms lack the ability to exploit the associations between different targets and features, so CNNs based on non-local attention usually exhibit better performance than ordinary CNNs for image segmentation tasks.

5) Multi-scale Information Fusion: One of the challenges in medical image segmentation is the wide range of object scales. For example, a tumor in the middle or late stage can be much larger than one in the early stage. The size of the receptive field roughly determines how much context information can be used. A standard convolution or pooling layer employs only a single kernel size, for instance, a 3 × 3 kernel for convolution and a 2 × 2 kernel for pooling.

Pyramid Pooling: Parallel multi-scale pooling can effectively enrich the context information of networks and thus extract richer semantic information. He et al. [55] first proposed spatial pyramid pooling (SPP) to achieve multi-scale feature extraction. SPP divides the feature map into grids ranging from fine to coarse, pools the local features within each grid cell, and thereby extracts multi-scale features. Inspired by SPP, a multi-scale information extraction block named residual multi-kernel pooling (RMP) [28] uses four pooling kernels of different sizes to encode global context information. However, the up-sampling operation in RMP cannot recover the detail information lost through pooling, which enlarges the receptive field but reduces the image resolution.
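The following NumPy sketch illustrates the idea of multi-kernel pooling in RMP: the same feature map is max-pooled with several kernel sizes, each pooled map is reduced to one channel by a 1 × 1 convolution, up-sampled back to the input size, and concatenated with the input. The kernel sizes (2, 3, 5, 6), the single shared 1 × 1 weight, and the nearest-neighbour up-sampling are assumptions made for brevity and may differ from the configuration in [28].

```python
import numpy as np


def max_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Non-overlapping k x k max pooling on (C, H, W); leftover rows/cols are dropped."""
    c, h, w = x.shape
    hp, wp = h // k, w // k
    return x[:, :hp * k, :wp * k].reshape(c, hp, k, wp, k).max(axis=(2, 4))


def upsample_nearest(x: np.ndarray, k: int, h: int, w: int) -> np.ndarray:
    """Nearest-neighbour up-sampling of a map pooled with kernel k back to (H, W)."""
    rows = np.minimum(np.arange(h) // k, x.shape[1] - 1)
    cols = np.minimum(np.arange(w) // k, x.shape[2] - 1)
    return x[:, rows][:, :, cols]


def multi_kernel_pooling(x, w_reduce, kernels=(2, 3, 5, 6)):
    """RMP-style context block: pool at several kernel sizes, reduce, up-sample, concat.

    w_reduce: (1, C) weights of a 1 x 1 convolution shared by all branches (an assumption).
    """
    c, h, w = x.shape
    branches = []
    for k in kernels:
        pooled = max_pool(x, k)
        reduced = np.einsum("oc,chw->ohw", w_reduce, pooled)  # 1 x 1 conv -> 1 channel
        branches.append(upsample_nearest(reduced, k, h, w))
    # Concatenate the original features with one context map per kernel size.
    return np.concatenate([x] + branches, axis=0)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 30, 30))  # 30 is divisible by 2, 3, 5 and 6
out = multi_kernel_pooling(x, w_reduce=rng.standard_normal((1, 4)))
print(out.shape)  # (8, 30, 30)
```

Each context map is constant over k × k patches after up-sampling, which makes the loss of detail noted above directly visible.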

Atrous Spatial Pyramid Pooling: To reduce the loss of detail information caused by pooling, researchers proposed atrous convolution in place of the pooling operation. Compared with vanilla convolution, atrous convolution can effectively enlarge the receptive field without increasing the number of parameters. Combining the advantages of atrous convolution and the SPP block, Chen et al. [56] proposed the atrous spatial pyramid pooling module (ASPP) to improve image segmentation results. ASPP shows a strong capability to recognize the same objects at different scales. Similarly, Lopez et al. [57] applied stacked multi-scale atrous convolutions to brain tumor segmentation and achieved a clear accuracy improvement.
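A minimal 1D sketch of atrous convolution shows how the dilation rate enlarges the receptive field while the number of weights stays fixed. It is a plain-Python illustration with our own function names, computing a "valid" cross-correlation as CNN layers do; ASPP would run several such branches with different rates in parallel and fuse their outputs, which is not shown here.

```python
def effective_kernel_size(k: int, rate: int) -> int:
    """Number of input samples spanned by a k-tap kernel with the given dilation rate."""
    return k + (k - 1) * (rate - 1)


def atrous_conv1d(signal: list[float], kernel: list[float], rate: int) -> list[float]:
    """'Valid' 1D atrous (dilated) convolution, written as cross-correlation.

    Tap t of the kernel reads the input at offset t * rate, so the kernel keeps
    len(kernel) weights but covers effective_kernel_size(len(kernel), rate) samples.
    """
    k = len(kernel)
    span = effective_kernel_size(k, rate)
    return [
        sum(kernel[t] * signal[i + t * rate] for t in range(k))
        for i in range(len(signal) - span + 1)
    ]


ramp = list(range(10))
print(atrous_conv1d(ramp, [1, 0, -1], rate=1))  # [-2, -2, -2, -2, -2, -2, -2, -2]
print(atrous_conv1d(ramp, [1, 0, -1], rate=3))  # [-6, -6, -6, -6]
```

The same three weights compare samples two apart at rate 1 and six apart at rate 3, which is how atrous convolution gathers wider context without extra parameters.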

However, ASPP suffers from two serious problems in image segmentation. The first problem is the loss of local information, as shown in Fig. 12, where we assume that the convolutional kernel is 3×3 and the dilation rate is 2 for three successive layers. The second problem is that information sampled across large distances may be irrelevant. How to simultaneously handle the relationships between objects of different scales is important for designing a good atrous convolutional network. In response to these problems, Wang et al. [58] designed a hybrid dilated convolution (HDC) network. This structure uses a sawtooth wave-like heuristic to allocate the dilation rates so that information from a wider range of pixels can be accessed, thus suppressing the gridding effect. In [58], the authors gave several atrous convolution sequences with variable dilation rates, e.g., [1,2,3], [3,4,5], [1,2,5], [5,9,17], and [1,2,5,9].
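The gridding effect described for Fig. 12 can be reproduced by enumerating which input offsets contribute to a single output after several stacked 3 × 3 atrous layers. The sketch works in 1D, which suffices here because a 3 × 3 atrous kernel samples the two spatial axes independently; it only checks whether the receptive field is covered without holes and does not implement the full design rule of HDC [58].

```python
from itertools import product


def sampled_offsets(rates: list[int], k: int = 3) -> set[int]:
    """Input offsets (1D) that reach one output after stacking k-tap atrous layers."""
    # Tap offsets of one layer with dilation r, e.g. {-r, 0, r} for k = 3.
    taps = [[(t - k // 2) * r for t in range(k)] for r in rates]
    # An input offset is reached if it is the sum of one tap offset per layer.
    return {sum(combo) for combo in product(*taps)}


def has_gridding(rates: list[int], k: int = 3) -> bool:
    """True if some offset inside the receptive field is never sampled."""
    offsets = sampled_offsets(rates, k)
    return len(offsets) < max(offsets) - min(offsets) + 1


print(sorted(sampled_offsets([2, 2, 2])))  # [-6, -4, -2, 0, 2, 4, 6]
print(has_gridding([2, 2, 2]), has_gridding([1, 2, 5]))  # True False
```

With three layers of rate 2 only the even offsets from -6 to 6 are sampled, so half of the pixels inside the receptive field are ignored, whereas the sawtooth sequence [1,2,5] covers every offset from -8 to 8.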

Non-local and ASPP: Atrous convolution can efficiently enlarge the receptive field to collect richer semantic information, but it causes the loss of detail information due to the gridding effect. Therefore, it is necessary to add constraints or establish pixel associations to improve the performance of atrous convolution. Recently, Yang et al. [59] proposed a block combining ASPP and Non-local operations for the segmentation of human body parts, as shown in Fig. 13. ASPP uses multiple parallel atrous convolutions with different dilation rates to capture richer information, and the Non-local operation captures long-range dependencies. This combination possesses the advantages of both ASPP and Non-local operations, and it is promising for medical image segmentation.

C. Loss Function

In addition to the design of the network backbone and the function blocks, the choice of loss function is also an important factor in improving network performance.

1) Cross Entropy Loss: For image segmentation tasks, cross entropy is one of the most popular loss functions. It compares, pixel by pixel, the predicted class vector with the ground-truth segmentation vector. For binary segmentation, let $P(Y=1) = p$ and $P(Y=0) = 1-p$. The prediction is given by the sigmoid function, where $P(\hat{Y}=1) = 1/(1+e^{-x}) = \hat{p}$ and $P(\hat{Y}=0) = 1 - 1/(1+e^{-x}) = 1-\hat{p}$, and $x$ is the output of the neural network. The cross entropy loss is defined as

$$\mathrm{CE}(p,\hat{p}) = -\left(p\log(\hat{p}) + (1-p)\log(1-\hat{p})\right). \tag{1}$$
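Eq. (1) can be evaluated directly from the network output $x$ without first forming $\hat{p}$. The sketch below uses the algebraically equivalent form $\max(x,0) - xp + \log(1+e^{-|x|})$, a standard numerical-stability rewrite rather than something proposed in the surveyed works, and compares it with a naive implementation of Eq. (1). Function names are our own.

```python
import math


def cross_entropy_from_logit(p: float, x: float) -> float:
    """Eq. (1) evaluated from the logit x for a ground-truth probability p.

    max(x, 0) - x * p + log(1 + exp(-|x|)) equals
    -(p log(sigmoid(x)) + (1 - p) log(1 - sigmoid(x))) but never takes log(0)
    and never overflows exp for large |x|.
    """
    return max(x, 0.0) - x * p + math.log1p(math.exp(-abs(x)))


def cross_entropy_naive(p: float, x: float) -> float:
    """Direct transcription of Eq. (1) with p_hat = sigmoid(x)."""
    p_hat = 1.0 / (1.0 + math.exp(-x))
    return -(p * math.log(p_hat) + (1 - p) * math.log(1 - p_hat))


print(cross_entropy_from_logit(1.0, 0.0))     # log(2), about 0.6931
print(cross_entropy_from_logit(0.0, 1000.0))  # 1000.0
```

For a confident but wrong logit such as x = 1000 with p = 0, the naive version evaluates log(0) and raises a `ValueError`, whereas the stable form returns 1000.0.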

2) Weighted Cross Entropy Loss: The cross entropy loss treats every pixel of an image equally and outputs an average value. It therefore ignores class imbalance, so the loss is dominated by the class containing the largest number of pixels. As a result, the cross entropy loss often performs poorly for small target segmentation.

To address the problem of class imbalance, Long et al. [20] proposed the weighted cross entropy loss (WCE). For the case of binary segmentation, the weighted cross entropy loss is defined as

$$\mathrm{WCE}(p,\hat{p}) = -\left(\beta p\log(\hat{p}) + (1-p)\log(1-\hat{p})\right), \tag{2}$$

where β tunes the relative weight of positive and negative samples and is set empirically. If β > 1, the number of false negatives decreases; conversely, the number of false positives decreases when β < 1. In fact, the cross entropy is the special case of the weighted cross entropy with β = 1. To adjust the weights of positive and negative samples simultaneously, we can use the balanced cross entropy (BCE) loss function, defined as

$$\mathrm{BCE}(p,\hat{p}) = -\left(\beta p\log(\hat{p}) + (1-\beta)(1-p)\log(1-\hat{p})\right). \tag{3}$$
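Eqs. (2) and (3) can be written as mean losses over the pixels of an image. The sketch below clips predictions away from 0 and 1 (the value of `eps` is our assumption) so the logarithms stay finite, and treats β as an empirical hyperparameter, as in the text. Function names are hypothetical.

```python
import math


def _clip(p_hat: float, eps: float = 1e-7) -> float:
    # Keep predictions away from 0 and 1 so both logarithms stay finite.
    return min(max(p_hat, eps), 1.0 - eps)


def wce(p: list[float], p_hat: list[float], beta: float) -> float:
    """Mean weighted cross entropy, Eq. (2): beta weights only the positive term."""
    return -sum(beta * t * math.log(_clip(q)) + (1 - t) * math.log(1 - _clip(q))
                for t, q in zip(p, p_hat)) / len(p)


def balanced_ce(p: list[float], p_hat: list[float], beta: float) -> float:
    """Mean balanced cross entropy, Eq. (3): beta and 1 - beta weight the two terms."""
    return -sum(beta * t * math.log(_clip(q)) + (1 - beta) * (1 - t) * math.log(1 - _clip(q))
                for t, q in zip(p, p_hat)) / len(p)


labels = [1, 0, 0, 0]         # one small foreground pixel among background pixels
preds = [0.1, 0.2, 0.1, 0.3]  # the foreground pixel is badly under-predicted
print(wce(labels, preds, beta=1.0))  # plain cross entropy, Eq. (1)
print(wce(labels, preds, beta=3.0))  # the missed foreground pixel now costs three times more
print(balanced_ce(labels, preds, beta=0.75))
```

Setting `beta = 1` in `wce` recovers Eq. (1), and `balanced_ce` with `beta = 0.5` gives exactly half of that value, so β only changes the relative weighting of the foreground and background terms.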

In [7], Ronneberger et al. proposed U-Net, in which the cross entropy loss function is improved by adding a distance function. The improved loss function strengthens the model's ability to learn the boundaries that separate adjacent objects. The distance function is defined as

$$D(x) = \omega_0 \exp\left(-\frac{\left(d_1(x) + d_2(x)\right)^2}{2\sigma^2}\right), \tag{4}$$
