TITLE PAGE PROVIDED BY ISO

CD 11172-2

CODING OF MOVING PICTURES AND ASSOCIATED AUDIO FOR DIGITAL STORAGE MEDIA AT UP TO ABOUT 1.5 Mbit/s

Part 2: Video

CONTENTS

FOREWORD

INTRODUCTION - PART 2: VIDEO
I.1 Purpose
I.1.1 Coding Parameters
I.2 Overview of the Algorithm
I.2.1 Temporal Processing
I.2.2 Motion Representation - Macroblocks
I.2.3 Spatial Redundancy Reduction
I.3 Encoding
I.4 Decoding
I.5 Structure of the Coded bitstream
I.6 Features Supported by the Algorithm
I.6.1 Random Access
I.6.2 Fast Search
I.6.3 Reverse Playback
I.6.4 Error Robustness
I.6.5 Editing

1 GENERAL NORMATIVE ELEMENTS
1.1 Scope
1.2 References

2 TECHNICAL NORMATIVE ELEMENTS
2.1 Definitions
2.2 Symbols and Abbreviations
2.2.1 Arithmetic Operators
2.2.2 Logical Operators
2.2.3 Relational Operators
2.2.4 Bitwise Operators
2.2.5 Assignment
2.2.6 Mnemonics
2.2.7 Constants
2.3 Method of Describing Bit Stream Syntax
2.4 Requirements
2.4.1 Coding Structure and Parameters
2.4.2 Specification of the Coded Video Bitstream Syntax
2.4.3 Semantics for the Video Bitstream Syntax
2.4.4 The Video Decoding Process

2-ANNEX A (normative) 8 by 8 Inverse Discrete Cosine Transform

2-ANNEX B (normative) Variable Length Code Tables
2-B.1 Macroblock Addressing
2-B.2 Macroblock Type
2-B.3 Macroblock Pattern
2-B.4 Motion Vectors
2-B.5 DCT Coefficients

2-ANNEX C (normative) Video Buffering Verifier
2-C.1 Video Buffering Verifier

2-ANNEX D (informative) Guide to Encoding Video
2-D.1 INTRODUCTION
2-D.2 OVERVIEW
2-D.2.1 Video Concepts
2-D.2.2 MPEG Video Compression Techniques
2-D.2.3 Bitstream Hierarchy
2-D.2.4 Decoder Overview
2-D.3 PREPROCESSING
2-D.3.1 Conversion from CCIR 601 Video to MPEG SIF
2-D.3.2 Conversion from Film
2-D.4 MODEL DECODER
2-D.4.1 Need for a Decoder Model
2-D.4.2 Decoder Model
2-D.4.3 Buffer Size and Delay
2-D.5 MPEG VIDEO BITSTREAM SYNTAX
2-D.5.1 Sequence
2-D.5.2 Group of Pictures
2-D.5.3 Picture
2-D.5.4 Slice
2-D.5.5 Macroblock
2-D.5.6 Block
2-D.6 CODING MPEG VIDEO
2-D.6.1 Rate Control
2-D.6.2 Motion Estimation and Compensation
2-D.6.2.1 Motion Compensation
2-D.6.2.2 Motion Estimation
2-D.6.2.3 Coding of Motion Vectors
2-D.6.3 Coding I-Pictures
2-D.6.3.1 Slices in I-Pictures
2-D.6.3.2 Macroblocks in I Pictures
2-D.6.3.3 DCT Transform
2-D.6.3.4 Quantization
2-D.6.3.4 Coding of Quantized Coefficients
2-D.6.4 Coding P-Pictures
2-D.6.4.1 Slices in P-Pictures
2-D.6.4.2 Macroblocks in P Pictures
2-D.6.4.3 Selection of Macroblock Type
2-D.6.4.4 DCT Transform
2-D.6.4.5 Quantization of P Pictures
2-D.6.4.6 Coding of Quantized Coefficients
2-D.6.5 Coding B-Pictures
2-D.6.5.1 Slices in B-Pictures
2-D.6.5.2 Macroblocks in B Pictures
2-D.6.5.4 Selection of Macroblock Type
2-D.6.5.5 DCT Transform
2-D.6.5.6 Quantization
2-D.6.5.7 Coding
2-D.6.6 Coding D-Pictures
2-D.7 DECODING MPEG VIDEO
2-D.7.1 Decoding a Sequence
2-D.8 POST PROCESSING
2-D.8.1 Editing
2-D.8.2 Resampling
2-D.8.2.1 Conversion of MPEG SIF to CCIR 601 Format
2-D.8.2.2 Temporal Resampling

2-ANNEX E (informative) Bibliography

FOREWORD

This standard is a committee draft that was submitted for approval to ISO/IEC JTC1/SC29 on 23 November 1991. It was prepared by SC29/WG11, also known as MPEG (Moving Picture Experts Group). MPEG was formed in 1988 to establish a standard for the coded representation of moving pictures and associated audio stored on digital storage media.

This standard is published in four Parts. Part 1 (Systems) specifies the system coding layer of the standard. It defines a multiplexed structure for combining audio and video data and a means of representing the timing information needed to replay synchronized sequences in real time. Part 2 (Video) specifies the coded representation of video data and the decoding process required to reconstruct pictures. Part 3 (Audio) specifies the coded representation of audio data. Part 4 (Conformance Testing) is still in preparation. It will specify the procedures for determining the characteristics of coded bitstreams and for testing compliance with the requirements stated in Parts 1, 2 and 3.

In Part 1 of this standard, all annexes are informative and contain no normative requirements.

In Part 2 of this standard, 2-Annex A, 2-Annex B and 2-Annex C contain normative requirements and are an integral part of this standard. 2-Annex D and 2-Annex E are informative and contain no normative requirements.

In Part 3 of this standard, 3-Annex A and 3-Annex B contain normative requirements and are an integral part of this standard. All other annexes are informative and contain no normative requirements.

INTRODUCTION - PART 2: VIDEO

I.1 Purpose

This Part of this standard was developed in response to the growing need for a common format for representing compressed video on various digital storage media such as CDs, DATs, Winchester disks and optical drives. The standard specifies a coded representation that can be used for compressing video sequences to bitrates around 1.5 Mbit/s. The use of this standard means that motion video can be manipulated as a form of computer data and can be transmitted and received over existing and future networks. The coded representation can be used with both 625-line and 525-line television and provides flexibility for use with workstation and personal computer displays.

The standard was developed to operate principally from storage media offering a continuous transfer rate of about 1.5 Mbit/s. Nevertheless, it can be used more widely because the approach taken is generic.

I.1.1 Coding Parameters

The intention in developing this standard has been to define a source coding algorithm with a large degree of flexibility that can be used in many different applications. To achieve this goal, a number of the parameters defining the characteristics of coded bitstreams and decoders are contained in the bitstream itself. This allows, for example, the algorithm to be used for pictures with a variety of sizes and aspect ratios and on channels or devices operating at a wide range of bitrates.

Because of the wide range of bitstream characteristics that can be represented by this standard, a subset of these coding parameters known as the "Constrained Parameters bitstream" has been defined. The aim in defining the constrained parameters is to offer guidance about a widely useful range of parameters. Conforming to this set of constraints is not a requirement of this standard. A flag in the bitstream indicates whether or not it is a Constrained Parameters bitstream.
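The limits in the summary of the constrained parameters lend themselves to a mechanical check. The following Python sketch tests a set of sequence parameters against them, as an encoder might before setting the Constrained Parameters flag. The class and field names (`SequenceParams`, `max_vector_scale_code` and so on) are hypothetical, and rounding the picture size up to whole 16 by 16 macroblocks is an assumption about how the picture area is counted.

```python
from dataclasses import dataclass


@dataclass
class SequenceParams:
    """Hypothetical container for the sequence-level values being checked."""
    width: int                  # horizontal picture size, pels
    height: int                 # vertical picture size, lines
    picture_rate: float         # pictures per second (Hz)
    bit_rate: int               # constant bitrate, bits/second
    vbv_buffer_bits: int        # input buffer size in the VBV model, bits
    max_vector_scale_code: int  # largest vector scale code used (hypothetical name)


def macroblocks(width: int, height: int) -> int:
    """Number of 16x16 macroblocks covering the picture (partial ones count)."""
    return ((width + 15) // 16) * ((height + 15) // 16)


def is_constrained(p: SequenceParams) -> bool:
    """True if every limit in the constrained parameters summary is met."""
    area = macroblocks(p.width, p.height)
    return (
        p.width <= 768
        and p.height <= 576
        and area <= 396                          # picture area
        and area * p.picture_rate <= 396 * 25    # macroblocks per second
        and p.picture_rate <= 30
        and p.max_vector_scale_code <= 4         # motion vector range
        and p.vbv_buffer_bits <= 327_680
        and p.bit_rate <= 1_856_000
    )
```

For example, a 352 by 288 picture at 25 Hz uses exactly 396 macroblocks and 9 900 macroblocks per second, so it just meets both limits, whereas the same picture size at 30 Hz would exceed the macroblock rate.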

Summary of the Constrained Parameters:

Parameter                          Constraint
Horizontal picture size            Less than or equal to 768 pels
Vertical picture size              Less than or equal to 576 lines
Picture area                       Less than or equal to 396 macroblocks
Pixel rate                         Less than or equal to 396x25 macroblocks per second
Picture rate                       Less than or equal to 30 Hz
Motion vector range                Less than +/- 64 pels using half-pel vectors (vector scale code <= 4)
Input buffer size (in VBV model)   Less than or equal to 327 680 bits
Bitrate                            Less than or equal to 1 856 000 bits/second (constant bitrate)

I.2 Overview of the Algorithm

The coded representation defined in this standard achieves a high compression ratio while preserving good picture quality. The algorithm is not lossless, as the exact pel values are not preserved during coding. The choice of techniques is based on the need to balance high picture quality and a high compression ratio against the requirement for random access to the coded bitstream. Obtaining good picture quality at the bitrates of interest demands very high compression, which is not achievable with intraframe coding alone. The need for random access, however, is best satisfied with pure intraframe coding. This requires a careful balance between intra- and interframe coding and between recursive and non-recursive temporal redundancy reduction.

A number of techniques are used to achieve high compression. The first, which is largely independent of this standard, is to select an appropriate spatial resolution for the signal. The algorithm then uses block-based motion compensation to reduce the temporal redundancy. Motion compensation is used both for causal prediction of the current picture from a previous picture and for non-causal, interpolative prediction from past and future pictures. Motion vectors are defined for each 16-pel by 16-line region of the image. The difference signal, the prediction error, is further compressed using the discrete cosine transform (DCT) to remove spatial correlation before it is quantized in an irreversible process that discards the less important information. Finally, the motion vectors are combined with the residual DCT information and transmitted using variable length codes.

I.2.1 Temporal Processing

Because of the conflicting requirements of random access and highly efficient compression, three main picture types are defined. Intra pictures (I-Pictures) are coded without reference to other pictures. They provide access points to the coded sequence where decoding can begin, but are coded with only moderate compression. Predicted pictures (P-Pictures) are coded more efficiently using motion compensated prediction from a past intra or predicted picture and are generally used as a reference for further prediction. Bidirectionally-predicted pictures (B-Pictures) provide the highest degree of compression but require both past and future reference pictures for motion compensation. Bidirectionally-predicted pictures are never used as references for prediction. The organisation of the three picture types in a sequence is very flexible. The choice is left to the encoder and will depend on the requirements of the application. Figure I.1 illustrates the relationship between the three different picture types.


Figure I.1 Example of temporal picture structure, M=3

The fourth picture type defined in the standard, the DC-picture, is provided to allow a simple fast-forward mode of limited quality.

I.2.2 Motion Representation - Macroblocks

The choice of 16 by 16 macroblocks for the motion-compensation unit is a result of the trade-off between the coding gain provided by using motion information and the overhead needed to store it. Each macroblock can be one of a number of different types. For example, intra-coded, forward-prediction-coded, backward-prediction-coded and bidirectionally-prediction-coded macroblocks are permitted in bidirectionally-predicted pictures. Depending on the type of the macroblock, motion vector information and other side information is stored with the compressed prediction error signal in each macroblock. The motion vectors are encoded differentially with respect to the last transmitted motion vector, using variable length codes. The maximum length of the vectors that may be represented can be programmed on a picture-by-picture basis, so that the most demanding applications can be met without compromising the performance of the system in more normal situations.
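The differential coding of motion vectors can be sketched in Python as follows: each vector is replaced by its difference from the previously transmitted one, and the decoder accumulates the differences to recover the vectors. The zero initial predictor and the half-pel units are assumptions of the sketch, which also ignores any predictor-reset or range-limiting rules and the variable length coding of the differences.

```python
from typing import List, Tuple

Vector = Tuple[int, int]  # (horizontal, vertical), assumed to be in half-pel units


def encode_differential(vectors: List[Vector]) -> List[Vector]:
    """Replace each vector by its difference from the previous vector."""
    diffs: List[Vector] = []
    prev = (0, 0)  # assumed initial predictor
    for vx, vy in vectors:
        diffs.append((vx - prev[0], vy - prev[1]))
        prev = (vx, vy)
    return diffs


def decode_differential(diffs: List[Vector]) -> List[Vector]:
    """Rebuild the vectors by accumulating the transmitted differences."""
    vectors: List[Vector] = []
    prev = (0, 0)
    for dx, dy in diffs:
        prev = (prev[0] + dx, prev[1] + dy)
        vectors.append(prev)
    return vectors
```

Because neighbouring macroblocks tend to move together, the differences cluster around zero, which is what makes short variable length codes for small differences pay off.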

It is the responsibility of the encoder to calculate appropriate motion vectors. The standard does not specify how this should be done.
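Since motion estimation is left to the encoder, any search strategy is allowed. A common choice, not prescribed by this standard, is an exhaustive block-matching search that minimizes the sum of absolute differences (SAD). The Python sketch below assumes integer-pel displacements only (half-pel refinement is omitted), pictures stored as lists of rows of luminance samples, and candidate blocks restricted to lie entirely inside the reference picture.

```python
from typing import List, Tuple

Picture = List[List[int]]  # rows of luminance samples


def sad(cur: Picture, ref: Picture, bx: int, by: int,
        dx: int, dy: int, n: int) -> int:
    """Sum of absolute differences between the n x n block at (bx, by) in cur
    and the block displaced by (dx, dy) in ref."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def full_search(cur: Picture, ref: Picture, bx: int, by: int,
                search_range: int, n: int = 16) -> Tuple[Tuple[int, int], int]:
    """Return ((dx, dy), cost) for the integer-pel vector with the smallest SAD."""
    height, width = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(cur, ref, bx, by, 0, 0, n)
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            x0, y0 = bx + dx, by + dy
            # Skip candidates that would read outside the reference picture.
            if x0 < 0 or y0 < 0 or x0 + n > width or y0 + n > height:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost
```

The cost grows with the square of the search range, which is one reason practical encoders often use faster, non-exhaustive searches.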

I.2.3 Spatial Redundancy Reduction

Both original pictures and prediction error signals have high spatial redundancy. This standard uses a block-based DCT method with visually weighted quantization and run-length coding. After motion compensated prediction or interpolation, the residual picture is split into 8 by 8 blocks. These are transformed into the DCT domain, where they are weighted before being quantized. After quantization many of the coefficients are zero in value, so two-dimensional run-length and variable length coding is used to encode the remaining coefficients efficiently.
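The transform and weighted quantization steps can be illustrated with a direct (and slow) evaluation of the 8 by 8 two-dimensional DCT. This Python sketch uses the orthonormal DCT-II scaling. The quantizer, which divides each coefficient by `weights[v][u] * scale / 8` and rounds, is a simplified stand-in chosen for illustration; `weights` and `scale` are hypothetical parameters, not the quantization rule defined in this standard.

```python
import math
from typing import List

Block = List[List[float]]


def dct_8x8(block: Block) -> Block:
    """Forward 2-D DCT-II of an 8x8 block (orthonormal scaling, direct form)."""
    def c(k: int) -> float:
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * 8 for _ in range(8)]
    for v in range(8):          # vertical frequency
        for u in range(8):      # horizontal frequency
            s = 0.0
            for y in range(8):
                for x in range(8):
                    s += (block[y][x]
                          * math.cos((2 * x + 1) * u * math.pi / 16)
                          * math.cos((2 * y + 1) * v * math.pi / 16))
            out[v][u] = 0.25 * c(u) * c(v) * s
    return out


def quantize(coeffs: Block, weights: List[List[int]], scale: int) -> List[List[int]]:
    """Divide each coefficient by a weighted step size and round to an integer.
    The step size weights[v][u] * scale / 8 is an illustrative choice only."""
    return [[round(coeffs[v][u] / (weights[v][u] * scale / 8)) for u in range(8)]
            for v in range(8)]
```

For a flat block in which every sample equals 128, all the energy lands in the DC coefficient (1024 with this scaling); with a uniform weight of 16 and a scale of 8, the other 63 quantized coefficients are zero, which is the situation that run-length coding exploits.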

I.3

The standard does not specify an encoding process. It specifies the syntax and semantics of the bitstream and the signal

processing in the decoder. As a result, many options are left open to encoders to trade-off cost and speed against picture

quality and coding efficiency. This clause is a brief description of the functions that need to be performed by an encoder.

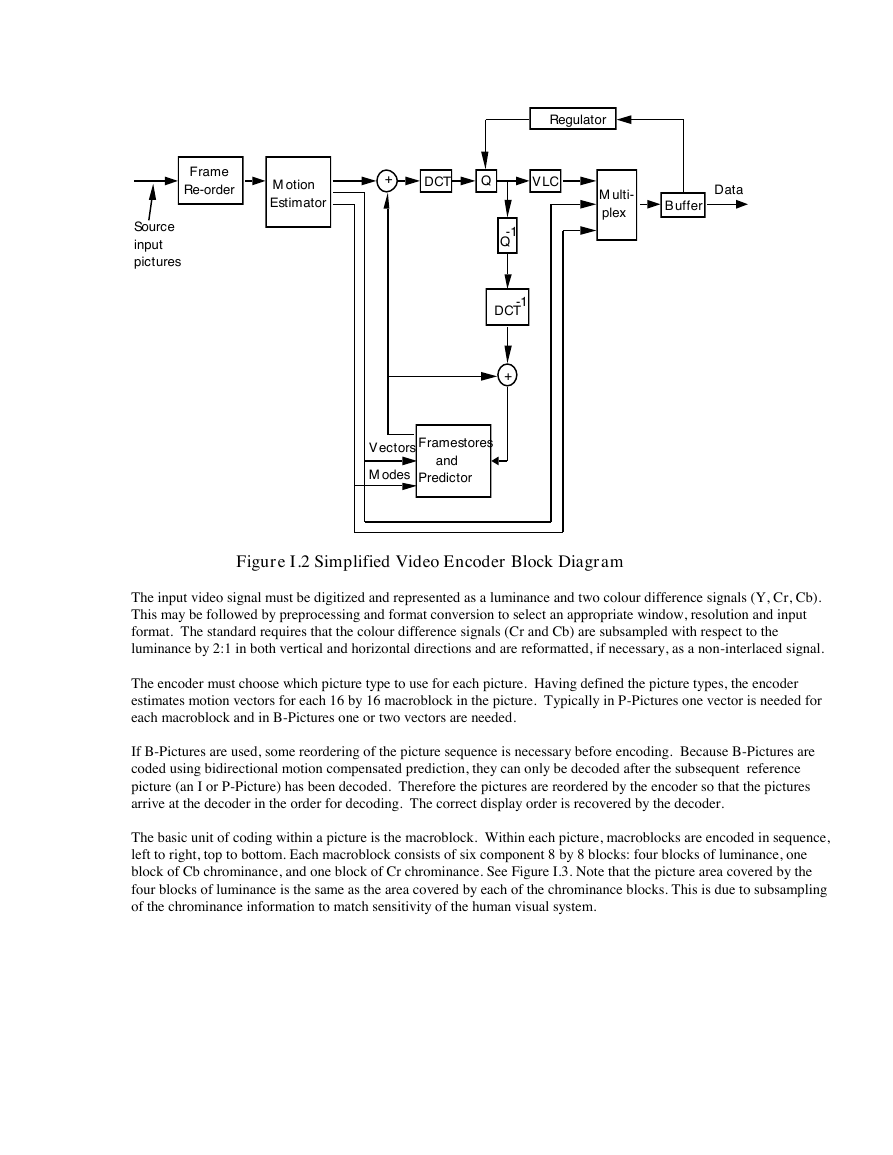

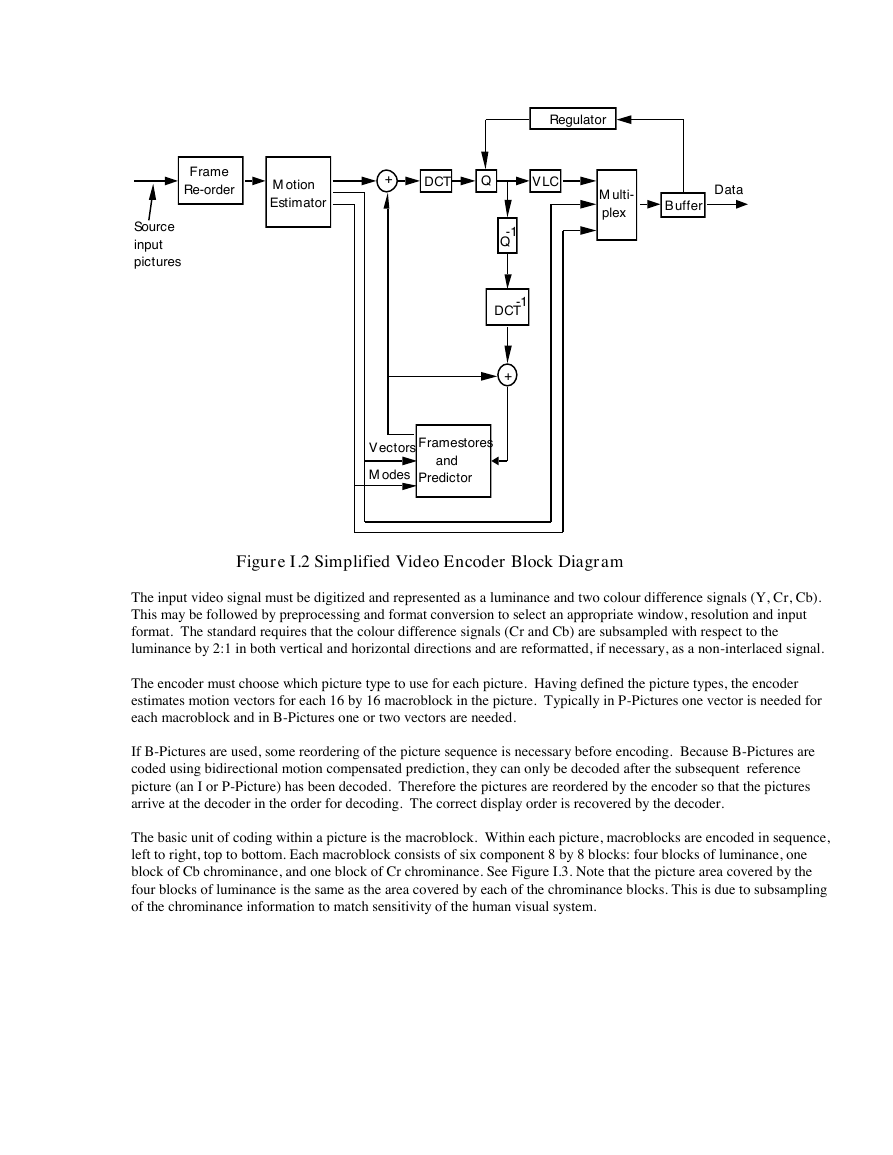

Figure I.2 shows the main functional blocks.

Encoding


Figure I.2 Simplified Video Encoder Block Diagram

The input video signal must be digitized and represented as a luminance signal and two colour difference signals (Y, Cr, Cb). This may be followed by preprocessing and format conversion to select an appropriate window, resolution and input format. The standard requires that the colour difference signals (Cr and Cb) are subsampled with respect to the luminance by 2:1 in both the vertical and horizontal directions and are reformatted, if necessary, as a non-interlaced signal.
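This clause does not say how the 2:1 subsampling filter is implemented. The Python sketch below assumes the simplest option, a rounded average of each 2 by 2 group of colour difference samples, applied to a plane with an even number of rows and columns.

```python
from typing import List

Plane = List[List[int]]  # rows of 8-bit samples


def subsample_2to1(plane: Plane) -> Plane:
    """Halve a colour difference plane horizontally and vertically by
    averaging each 2x2 group of samples (rounded, halves upward).
    Assumes the plane has an even number of rows and columns."""
    rows, cols = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1]
             + plane[y + 1][x] + plane[y + 1][x + 1] + 2) // 4
            for x in range(0, cols, 2)
        ]
        for y in range(0, rows, 2)
    ]
```

A longer low-pass filter would reduce aliasing better; the box average is used here only because it makes the 2:1 relationship easy to see.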

The encoder must choose which picture type to use for each picture. Having defined the picture types, the encoder estimates motion vectors for each 16 by 16 macroblock in the picture. Typically, in P-Pictures one vector is needed for each macroblock, and in B-Pictures one or two vectors are needed.

If B-Pictures are used, some reordering of the picture sequence is necessary before encoding. Because B-Pictures are coded using bidirectional motion compensated prediction, they can only be decoded after the subsequent reference picture (an I- or P-Picture) has been decoded. Therefore the encoder reorders the pictures so that they arrive at the decoder in decoding order. The decoder recovers the correct display order.
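The encoder-side reordering amounts to sending every I- or P-Picture ahead of the B-Pictures that precede it in display order. The Python sketch below takes a string of picture types in display order, such as the I B B B P B B B P pattern of Figure I.1, and returns (picture number, type) pairs in coding order. It assumes the sequence ends with an I- or P-Picture, so that every B-Picture has a following reference picture.

```python
from typing import List, Tuple


def display_to_coded(types: str) -> List[Tuple[int, str]]:
    """Reorder pictures from display order into coding order.
    Pictures are numbered 1, 2, ... in display order."""
    coded: List[Tuple[int, str]] = []
    waiting_b: List[Tuple[int, str]] = []
    for number, ptype in enumerate(types, start=1):
        if ptype == "B":
            # A B-Picture needs the next reference picture, so hold it back.
            waiting_b.append((number, ptype))
        else:
            # Reference picture (I or P): send it, then the B-Pictures waiting for it.
            coded.append((number, ptype))
            coded.extend(waiting_b)
            waiting_b.clear()
    if waiting_b:
        raise ValueError("sequence must end with an I- or P-Picture")
    return coded
```

For "IBBBPBBBP" the coding order is pictures 1, 5, 2, 3, 4, 9, 6, 7, 8.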

The basic unit of coding within a picture is the macroblock. Within each picture, macroblocks are encoded in sequence, left to right, top to bottom. Each macroblock consists of six component 8 by 8 blocks: four blocks of luminance, one block of Cb chrominance and one block of Cr chrominance. See Figure I.3. Note that the picture area covered by the four blocks of luminance is the same as the area covered by each of the chrominance blocks. This is due to subsampling of the chrominance information to match the lower sensitivity of the human visual system to colour detail.


Figure I.3 Macroblock Structure
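The six-block layout of a macroblock can be made concrete with a short Python sketch that cuts the blocks out of the three component planes. It assumes the luminance blocks are numbered left to right, top to bottom (0 and 1 on the upper row, 2 and 3 on the lower row), followed by Cb and then Cr, and that the planes are lists of rows whose chrominance dimensions are half those of the luminance.

```python
from typing import List

Plane = List[List[int]]
Block = List[List[int]]


def block_at(plane: Plane, x0: int, y0: int) -> Block:
    """Cut the 8x8 block whose top-left sample is at (x0, y0)."""
    return [row[x0:x0 + 8] for row in plane[y0:y0 + 8]]


def macroblock_blocks(y_plane: Plane, cb_plane: Plane, cr_plane: Plane,
                      mb_x: int, mb_y: int) -> List[Block]:
    """Return the six 8x8 blocks of macroblock (mb_x, mb_y):
    four luminance blocks, then Cb, then Cr."""
    lx, ly = 16 * mb_x, 16 * mb_y  # luminance origin of the macroblock
    cx, cy = 8 * mb_x, 8 * mb_y    # chrominance origin (2:1 subsampled)
    return [
        block_at(y_plane, lx, ly), block_at(y_plane, lx + 8, ly),
        block_at(y_plane, lx, ly + 8), block_at(y_plane, lx + 8, ly + 8),
        block_at(cb_plane, cx, cy),
        block_at(cr_plane, cx, cy),
    ]
```

Visiting `mb_y` in an outer loop and `mb_x` in an inner loop reproduces the left-to-right, top-to-bottom macroblock order described above.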

Firstly, for a given macroblock, the coding mode is chosen. It depends on the picture type, the effectiveness of motion compensated prediction in that local region, and the nature of the signal within the block. Secondly, depending on the coding mode, a motion compensated prediction of the contents of the block based on past and/or future reference pictures is formed. This prediction is subtracted from the actual data in the current macroblock to form an error signal. Thirdly, this error signal is separated into 8 by 8 blocks (4 luminance and 2 chrominance blocks in each macroblock) and a discrete cosine transform is performed on each block. The resulting 8 by 8 block of DCT coefficients is quantized, and the two-dimensional block is scanned in a zig-zag order to convert it into a one-dimensional string of quantized DCT coefficients. Fourthly, the side information for the macroblock (type, vectors, etc.) and the quantized coefficient data are encoded. For maximum efficiency, a number of variable length code tables are defined for the different data elements. Run-length coding is used for the quantized coefficient data.
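The zig-zag scan and the run-length step can be sketched in Python. `zigzag_order` walks the anti-diagonals of the block in alternating directions, which produces the conventional zig-zag pattern (the normative scan order is the one defined in the body of this standard), and `run_lengths` turns the scanned coefficients into (run, level) pairs, where run counts the zeros preceding each non-zero level. Special treatment of the DC coefficient in intra blocks and the variable length codes themselves are omitted.

```python
from typing import List, Tuple


def zigzag_order(n: int = 8) -> List[Tuple[int, int]]:
    """(row, column) positions of an n x n block in zig-zag scan order."""
    order: List[Tuple[int, int]] = []
    for s in range(2 * n - 1):  # s = row + column indexes one anti-diagonal
        rows = list(range(max(0, s - n + 1), min(s, n - 1) + 1))
        if s % 2 == 0:
            rows.reverse()      # even diagonals run from bottom-left to top-right
        order.extend((r, s - r) for r in rows)
    return order


def run_lengths(block: List[List[int]]) -> List[Tuple[int, int]]:
    """(run, level) pairs for the non-zero coefficients of a quantized block.
    Zeros after the last non-zero level are left implicit (end of block)."""
    pairs: List[Tuple[int, int]] = []
    run = 0
    for r, c in zigzag_order(len(block)):
        level = block[r][c]
        if level == 0:
            run += 1
        else:
            pairs.append((run, level))
            run = 0
    return pairs
```

Scanning from low to high frequency groups the zeros that quantization produces at high frequencies into long runs, often ending the block early.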

A consequence of using different picture types and variable length coding is that the overall data rate is variable. In applications that involve a fixed-rate channel, a FIFO buffer may be used to match the encoder output to the channel. The status of this buffer may be monitored to control the number of bits generated by the encoder. Controlling the quantization process is the most direct way of controlling the bitrate. The standard specifies an abstract model of the buffering system (the Video Buffering Verifier) in order to constrain the maximum variability in the number of bits that are used for a given picture. This ensures that a bitstream can be decoded with a buffer of known size.
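A minimal Python sketch of the feedback loop described above: the encoder output buffer gains the bits of each coded picture and is drained by the channel at a constant rate, and the quantizer scale is raised as the buffer fills. The function names, the linear mapping from fullness to quantizer scale, the assumed scale range of 1 to 31 and the handling of an empty buffer are all illustrative assumptions. This is not the Video Buffering Verifier, which models the decoder's input buffer.

```python
from typing import Iterable, List


def simulate_encoder_buffer(picture_bits: Iterable[int], bit_rate: float,
                            picture_rate: float, buffer_size: int) -> List[float]:
    """Buffer fullness (bits) after each picture period.
    Raises OverflowError if a coded picture does not fit in the buffer."""
    drain = bit_rate / picture_rate  # bits sent to the channel per picture period
    fullness = 0.0
    history: List[float] = []
    for bits in picture_bits:
        fullness += bits
        if fullness > buffer_size:
            raise OverflowError("encoder buffer overflow: quantize more coarsely")
        # An empty buffer is simply clamped at zero here; a real fixed-rate
        # encoder has to avoid this case, for example by generating more bits.
        fullness = max(0.0, fullness - drain)
        history.append(fullness)
    return history


def quantizer_scale(fullness: float, buffer_size: int,
                    q_min: int = 1, q_max: int = 31) -> int:
    """Hypothetical rule: scale the quantizer linearly with buffer fullness."""
    q = q_min + (q_max - q_min) * fullness / buffer_size
    return max(q_min, min(q_max, round(q)))
```

A large I-Picture raises the fullness sharply and the smaller P- and B-Pictures that follow let it fall again, so the quantizer scale chosen from the fullness tends to track that pattern.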

At this stage, the coded representation of the picture has been generated. The final step in the encoder is to regenerate I-Pictures and P-Pictures by decoding the data so that they can be used as reference pictures for subsequent encoding. The quantized coefficients are dequantized and an inverse 8 by 8 DCT is performed on each block. The error signal produced is then added back to the prediction signal and clipped to the required range to give a decoded reference picture.
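The local decoding loop can be sketched for a single 8 by 8 block in Python: inverse-transform the dequantized coefficients, add the prediction and clip. The sketch evaluates the inverse DCT directly in floating point with orthonormal scaling and has not been checked against the accuracy requirements of 2-Annex A; the 0 to 255 range assumes 8-bit samples, and dequantization is assumed to have been done already.

```python
import math
from typing import List

Block = List[List[float]]


def idct_8x8(coeffs: Block) -> Block:
    """Inverse 2-D DCT of an 8x8 block (orthonormal scaling, direct form)."""
    def c(k: int) -> float:
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * 8 for _ in range(8)]
    for y in range(8):
        for x in range(8):
            s = 0.0
            for v in range(8):
                for u in range(8):
                    s += (c(u) * c(v) * coeffs[v][u]
                          * math.cos((2 * x + 1) * u * math.pi / 16)
                          * math.cos((2 * y + 1) * v * math.pi / 16))
            out[y][x] = 0.25 * s
    return out


def reconstruct_block(prediction: List[List[int]], coeffs: Block) -> List[List[int]]:
    """Add the inverse-transformed error to the prediction and clip to 0..255."""
    error = idct_8x8(coeffs)
    return [[min(255, max(0, prediction[y][x] + round(error[y][x])))
             for x in range(8)]
            for y in range(8)]
```

Running the same reconstruction in the encoder and the decoder keeps their reference pictures identical, so prediction errors do not accumulate from picture to picture.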

I.4

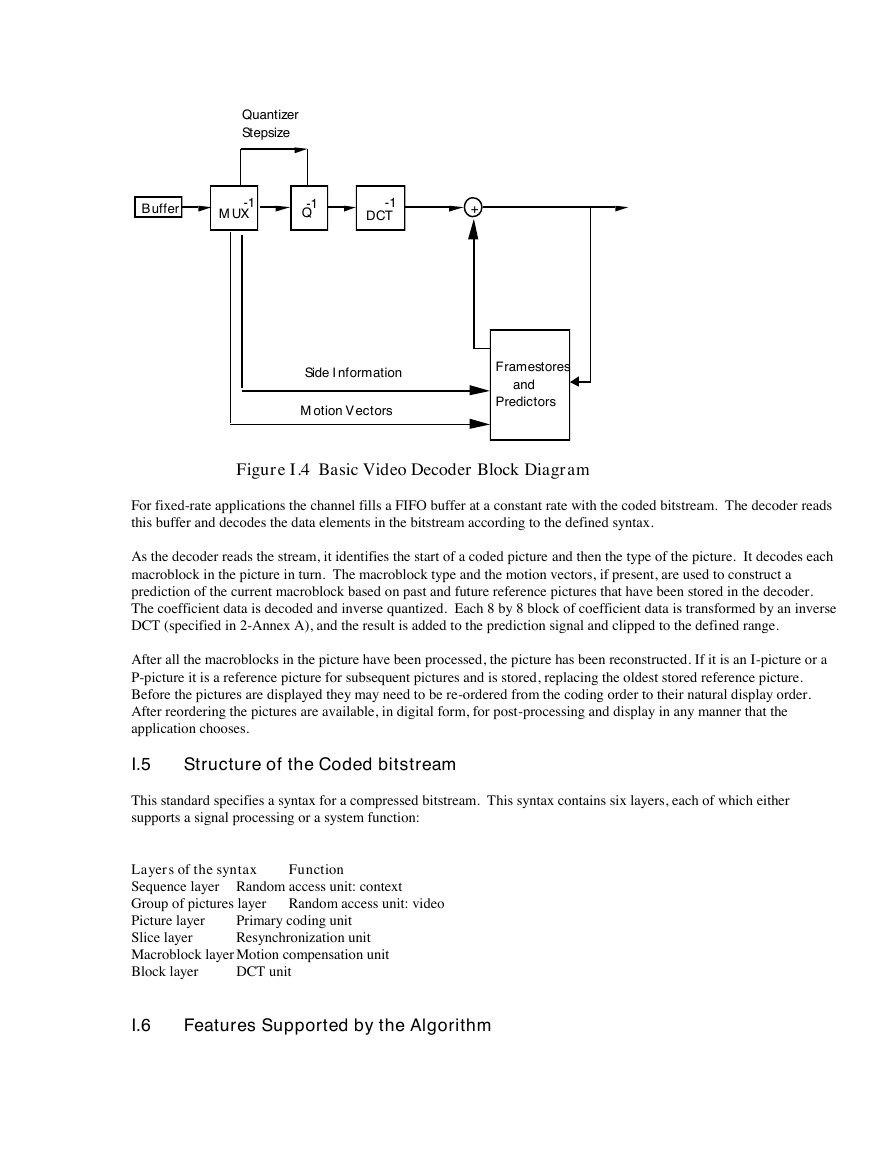

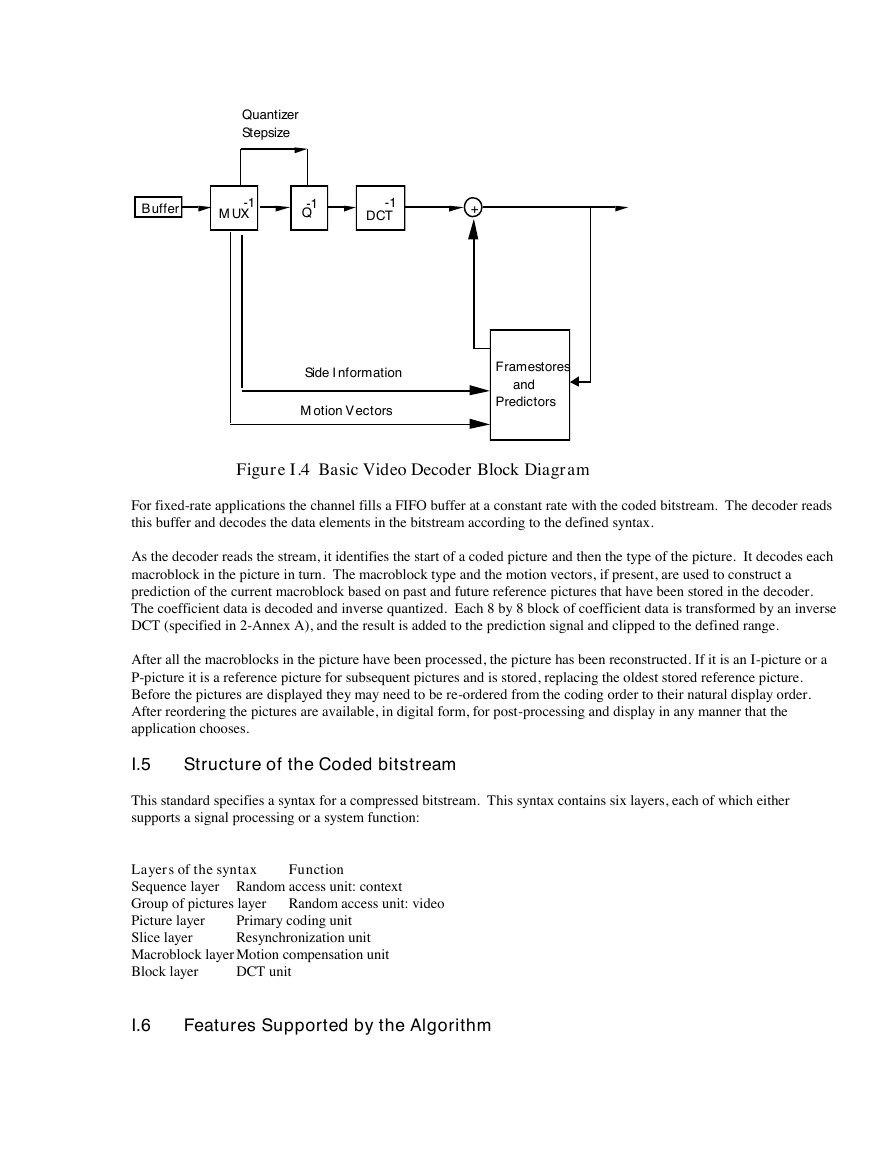

Decoding is the inverse of the encoding operation. It is considerably simpler than encoding as there is no need to

perform motion estimation and there are many fewer options. The decoding process is defined by this standard. The

description that follows is a very brief overview of one possible way of decoding a bitstream. Other decoders with

different architectures are possible. Figure I.4 shows the main functional blocks.

Decoding


Figure I.4 Basic Video Decoder Block Diagram

For fixed-rate applications, the channel fills a FIFO buffer at a constant rate with the coded bitstream. The decoder reads this buffer and decodes the data elements in the bitstream according to the defined syntax.

As the decoder reads the stream, it identifies the start of a coded picture and then the type of the picture. It decodes each macroblock in the picture in turn. The macroblock type and the motion vectors, if present, are used to construct a prediction of the current macroblock based on past and future reference pictures that have been stored in the decoder. The coefficient data is decoded and inverse quantized. Each 8 by 8 block of coefficient data is transformed by an inverse DCT (specified in 2-Annex A), and the result is added to the prediction signal and clipped to the defined range.

After all the macroblocks in the picture have been processed, the picture has been reconstructed. If it is an I-Picture or a P-Picture, it is a reference picture for subsequent pictures and is stored, replacing the oldest stored reference picture.

Before the pictures are displayed, they may need to be reordered from the coding order to their natural display order. After reordering, the pictures are available, in digital form, for post-processing and display in any manner that the application chooses.
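The decoder's reordering can be sketched in Python for pictures that arrive as (picture number, type) pairs in coding order. A B-Picture is displayed as soon as it is decoded, while each I- or P-Picture is held back until the next I- or P-Picture arrives or the sequence ends. Numbering the pictures by display position is an assumption made only so that the result is easy to check.

```python
from typing import Iterable, List, Optional, Tuple


def coded_to_display(coded: Iterable[Tuple[int, str]]) -> List[int]:
    """Return picture numbers in display order, given pictures in coding order."""
    display: List[int] = []
    held: Optional[int] = None  # most recent reference picture, not yet displayed
    for number, ptype in coded:
        if ptype == "B":
            # B-Pictures are never references, so they can be shown immediately.
            display.append(number)
        else:
            # A new I- or P-Picture releases the previously held reference picture.
            if held is not None:
                display.append(held)
            held = number
    if held is not None:
        display.append(held)  # flush the last reference picture at the end
    return display
```

Only one decoded reference picture has to wait for display at any time, so this reordering adds a delay of at most one reference picture.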

I.5 Structure of the Coded bitstream

This standard specifies a syntax for a compressed bitstream. This syntax contains six layers, each of which supports either a signal processing function or a system function:

Layers of the syntax         Function
Sequence layer               Random access unit: context
Group of pictures layer      Random access unit: video
Picture layer                Primary coding unit
Slice layer                  Resynchronization unit
Macroblock layer             Motion compensation unit
Block layer                  DCT unit

I.6 Features Supported by the Algorithm

