Advancements in Graph Neural Networks: PGNNs, Pretraining, and OGB

Jure Leskovec

Includes joint work with W. Hu, J. You, M. Fey, Y. Dong, B. Liu, M. Catasta, K. Xu, S. Jegelka, M. Zitnik, P. Liang, V. Pande


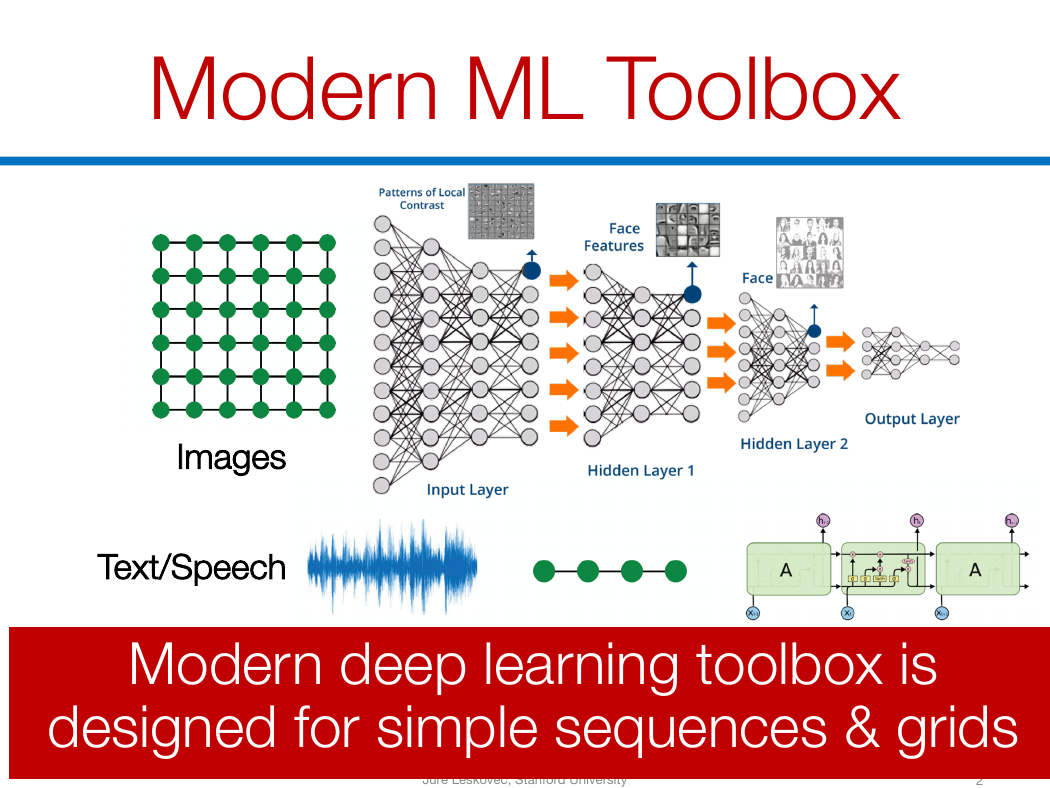

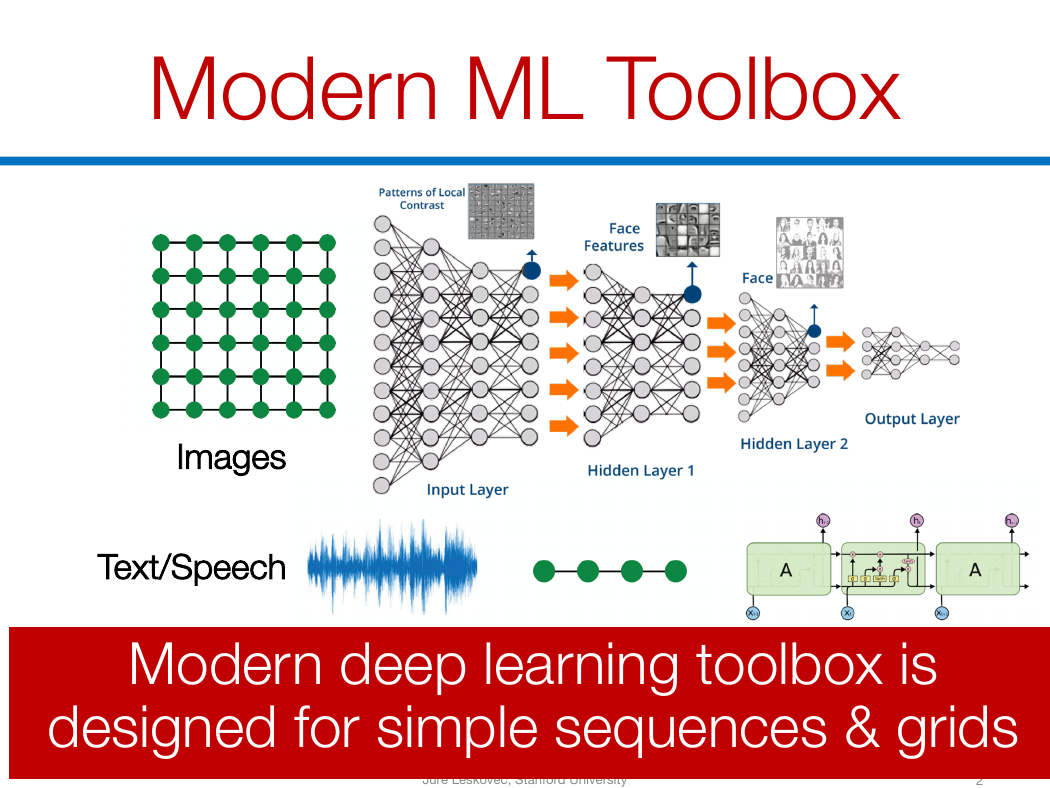

Modern ML Toolbox

Images

Text/Speech

The modern deep learning toolbox is designed for simple sequences and grids.

Jure Leskovec, Stanford University


But not everything can be represented as a sequence or a grid.

How can we develop neural networks that are much more broadly applicable?

New frontiers beyond classic neural networks that learn on images and sequences


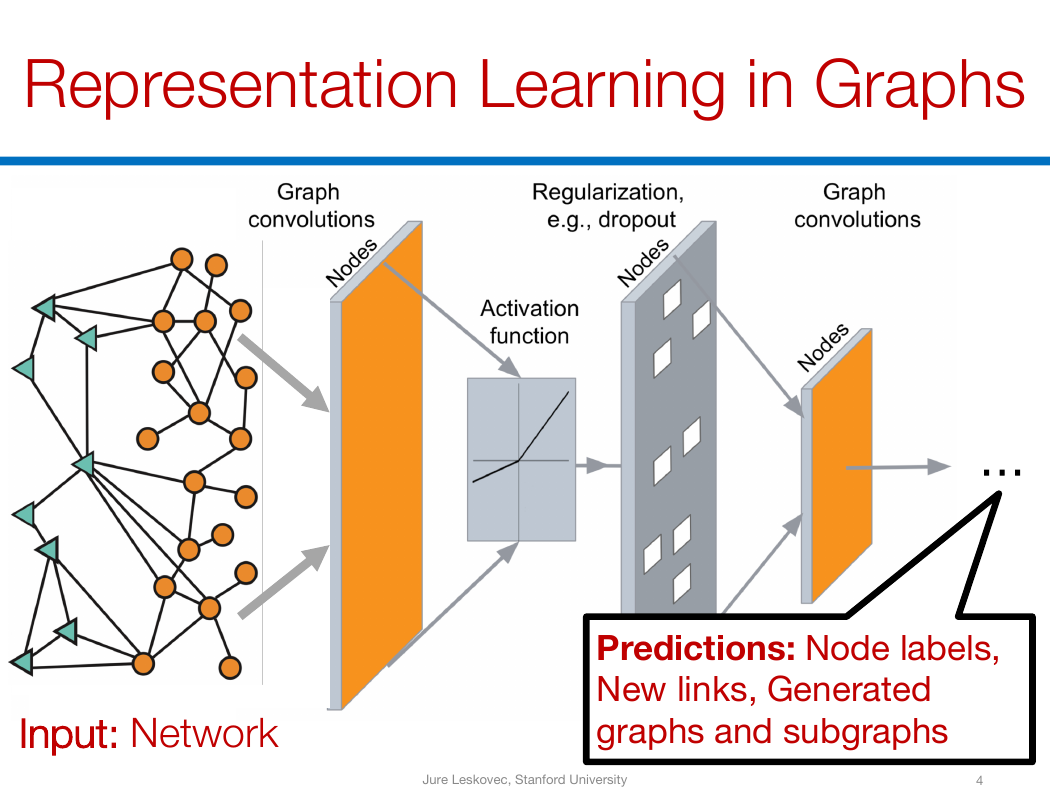

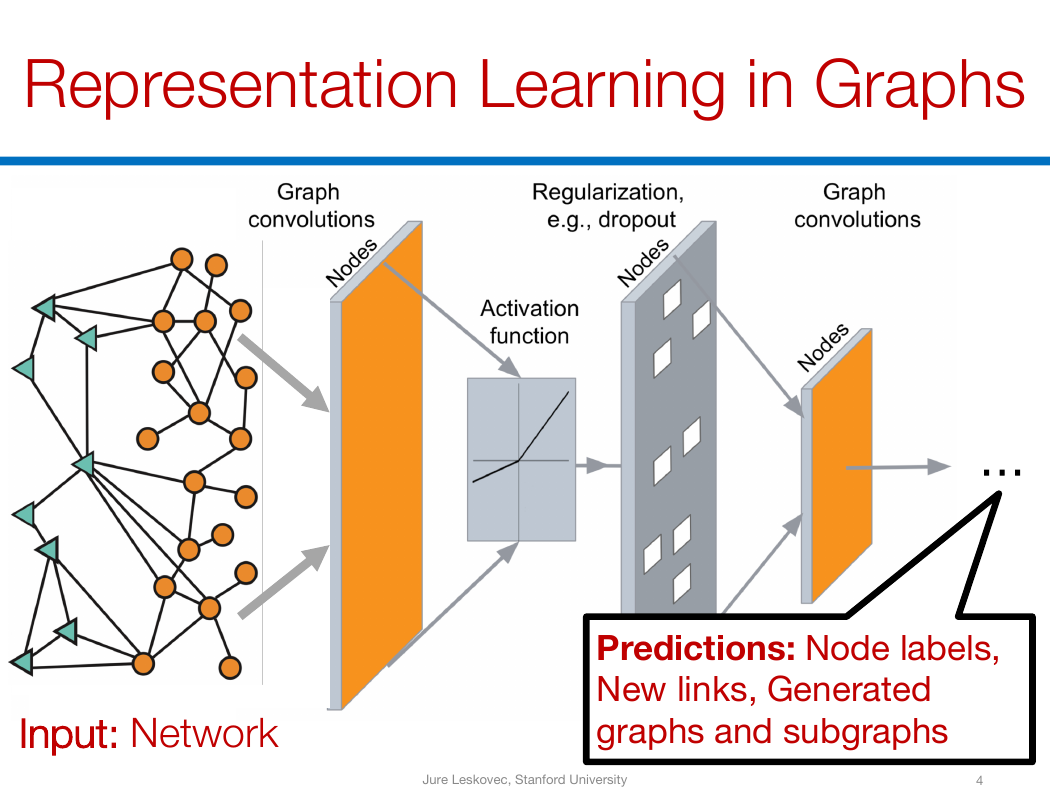

Representation Learning in Graphs

[Figure: an input network mapped to node embeddings z]

Input: Network

Predictions: Node labels, new links, generated graphs and subgraphs
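To make the input/prediction split concrete, here is a minimal sketch of how node embeddings z could be turned into link predictions. It assumes a dot-product decoder followed by a sigmoid, which is one common choice and not necessarily the method used in this talk; the 2-d embeddings in `Z` are hypothetical hand-picked values standing in for vectors a GNN would learn.

```python
import math

# Hypothetical 2-d node embeddings z_v (hand-picked; a GNN would learn these).
Z = {
    "A": [1.0, 0.2],
    "B": [0.9, 0.1],
    "C": [-0.8, 0.5],
}


def dot(x, y):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))


def link_score(u, v, z=Z):
    """Score a candidate edge (u, v) as sigmoid(z_u . z_v), a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-dot(z[u], z[v])))


def rank_candidates(u, candidates, z=Z):
    """Order candidate neighbors of u from most to least likely link."""
    return sorted(candidates, key=lambda v: link_score(u, v, z), reverse=True)


if __name__ == "__main__":
    for v in ("B", "C"):
        print(v, round(link_score("A", v), 3))
    print(rank_candidates("A", ["C", "B"]))
```

Here node B, whose embedding points in nearly the same direction as A's, ranks above C as a new link for A. Node-label prediction would work analogously, with a classifier applied to each z_v instead of a pairwise score.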


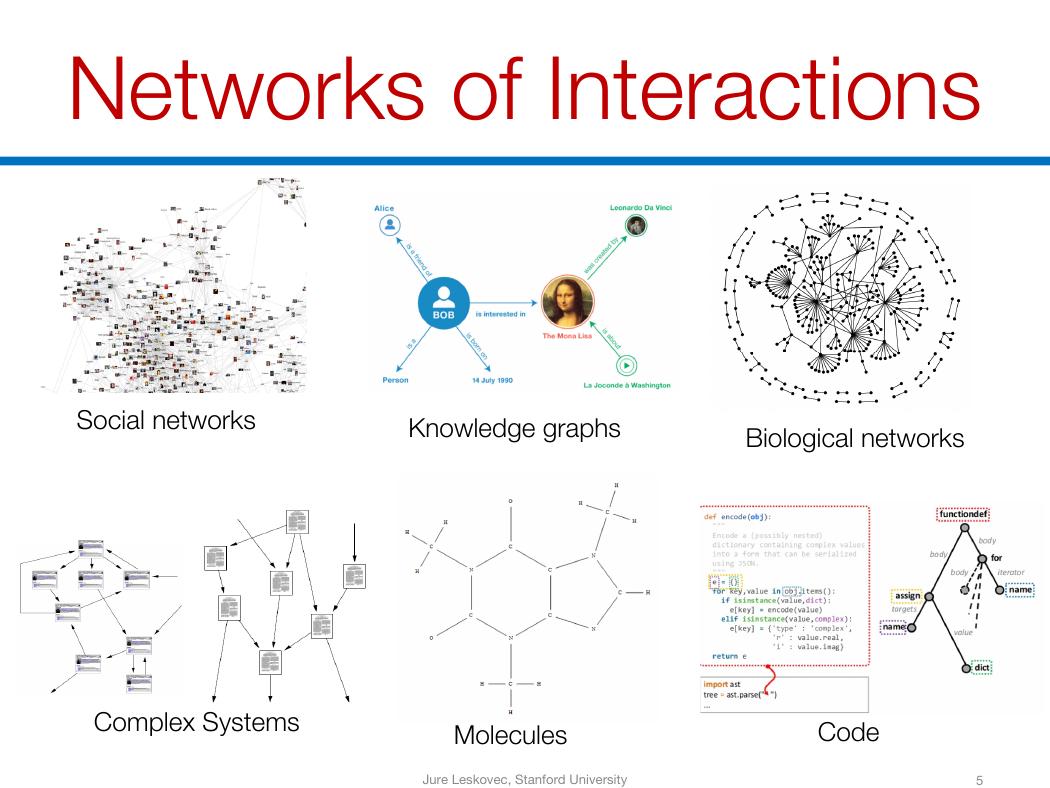

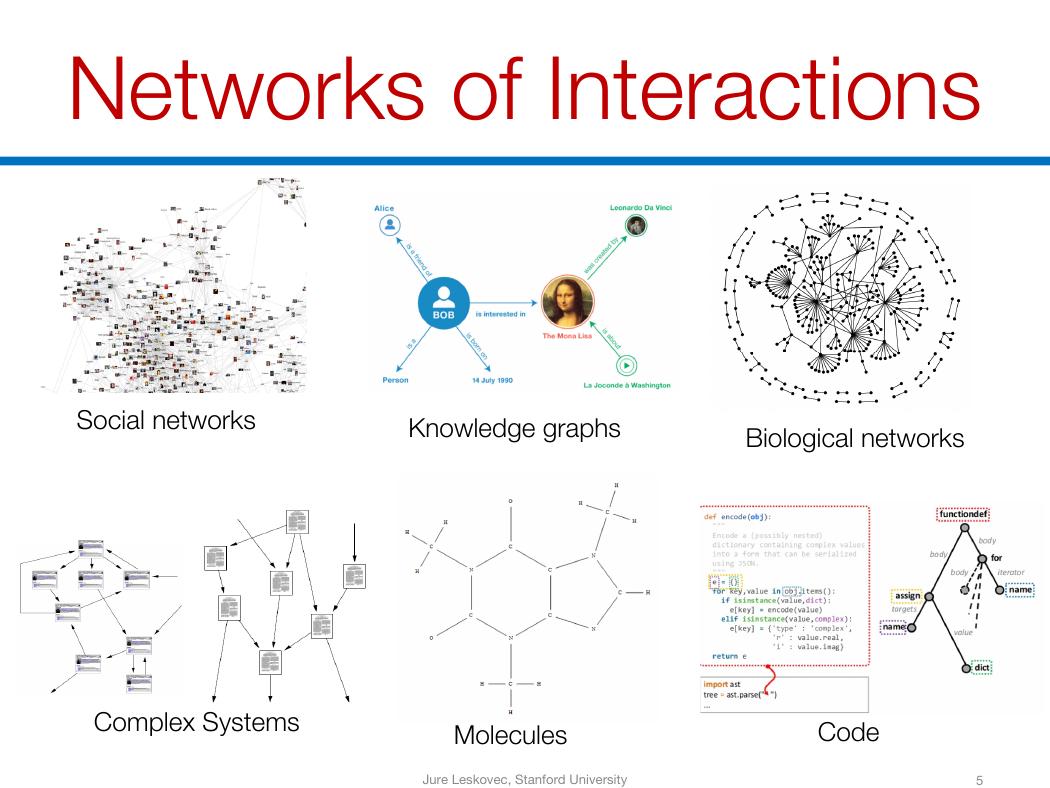

Networks of Interactions

Social networks

Knowledge graphs

Biological networks

Complex Systems

Molecules


Code


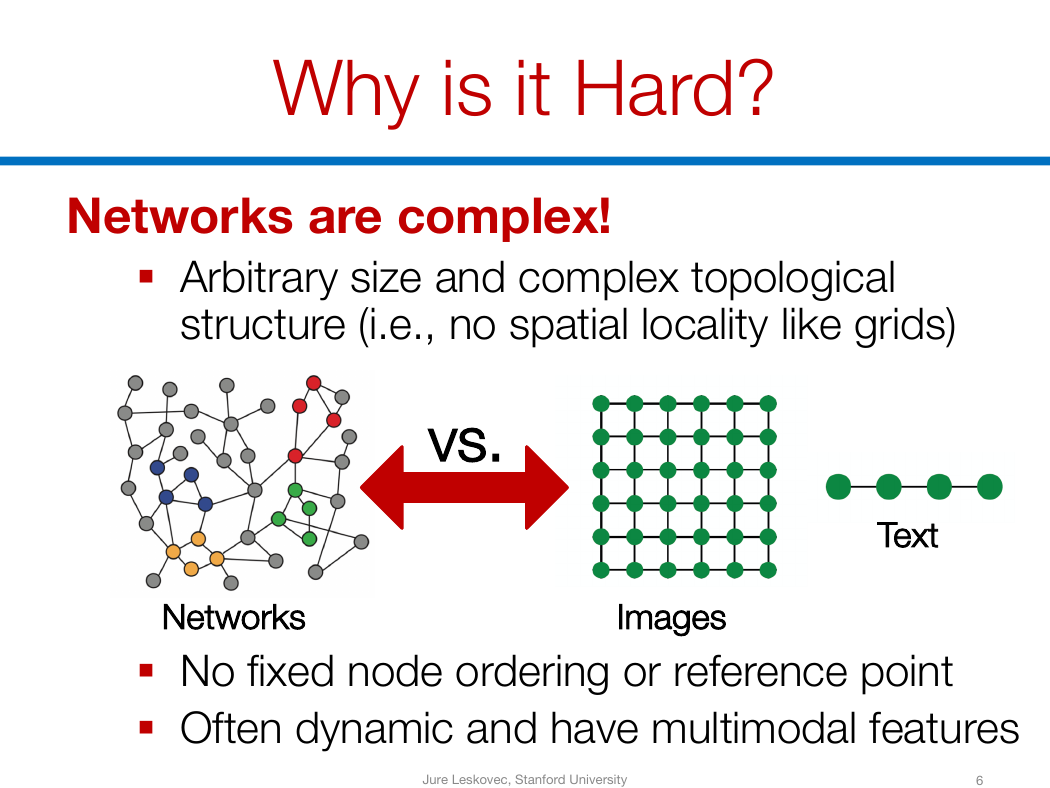

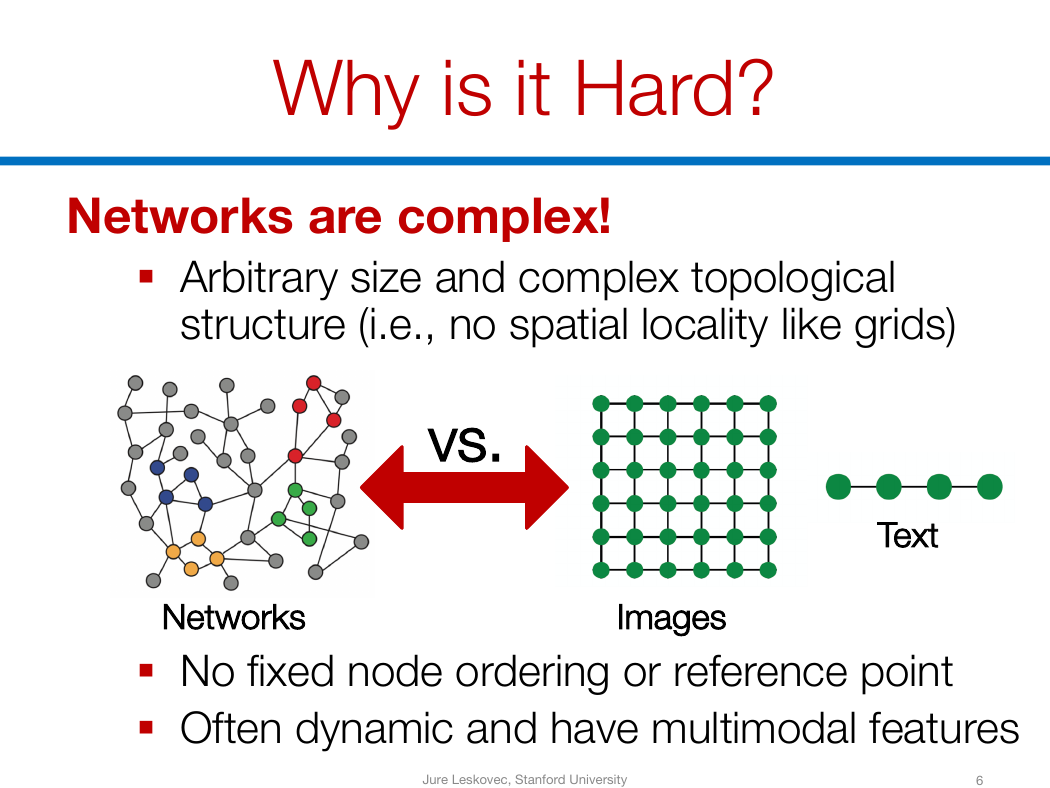

Why is it Hard?

Networks are complex!

- Arbitrary size and complex topological structure (i.e., no spatial locality like grids)
- No fixed node ordering or reference point
- Often dynamic, with multimodal features

[Figure: networks vs. images and text]
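The lack of a fixed node ordering is why a network cannot simply be flattened into a sequence. The sketch below uses a hypothetical four-node toy graph with made-up scalar features and compares two labelings of the same graph: the flattened adjacency matrix changes with the ordering, while a neighbor-sum aggregation, the kind of operation GNNs are built from, gives every node the same value under either labeling (permutation equivariance).

```python
# Hypothetical toy graph: 4 nodes, undirected edges, one scalar feature per node.
EDGES = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]
FEATURES = {"A": 1.0, "B": 2.0, "C": 3.0, "D": 4.0}


def adjacency_matrix(order, edges):
    """Dense adjacency matrix whose rows/columns follow the given node order."""
    index = {v: i for i, v in enumerate(order)}
    a = [[0] * len(order) for _ in order]
    for u, v in edges:
        a[index[u]][index[v]] = 1
        a[index[v]][index[u]] = 1
    return a


def flatten(matrix):
    """Row-major flattening, i.e. treating the graph as a fixed-length sequence."""
    return [x for row in matrix for x in row]


def neighbor_sum(order, features, edges):
    """One aggregation step: each node sums its neighbors' features.

    `features` is a list aligned with `order`; the output is aligned the same way.
    """
    index = {v: i for i, v in enumerate(order)}
    out = [0.0] * len(order)
    for u, v in edges:
        out[index[u]] += features[index[v]]
        out[index[v]] += features[index[u]]
    return out


if __name__ == "__main__":
    for order in (["A", "B", "C", "D"], ["D", "C", "B", "A"]):
        feats = [FEATURES[v] for v in order]
        h = neighbor_sum(order, feats, EDGES)
        print(order, flatten(adjacency_matrix(order, EDGES)), dict(zip(order, h)))
```

A model that consumed the flattened matrix would see two different inputs for one graph; the aggregation sees the same neighborhoods regardless of labeling.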


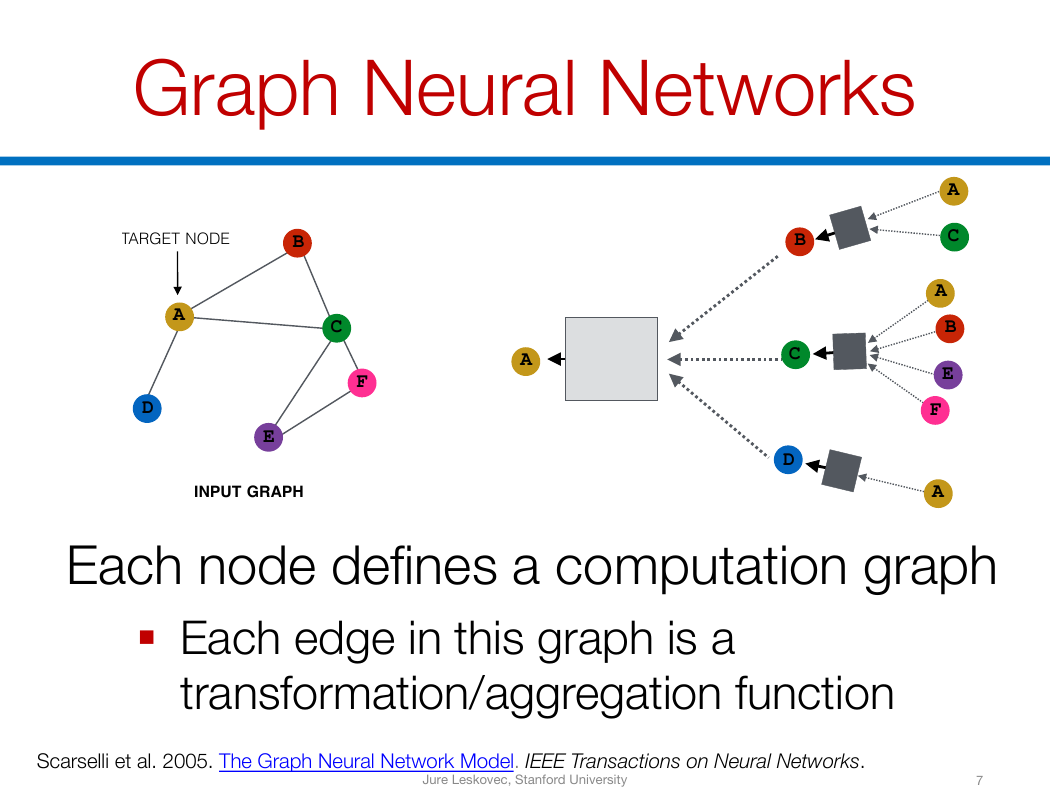

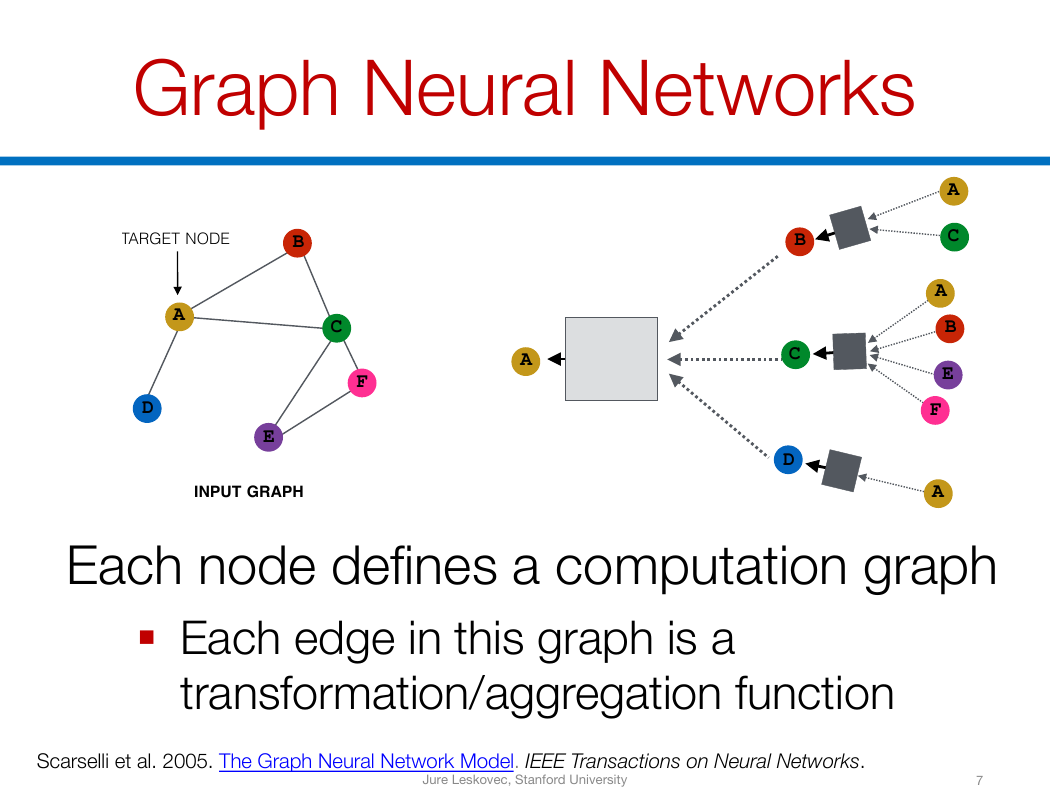

Graph Neural Networks

[Figure: input graph with nodes A through F, and the computation graph of target node A unrolled two levels through its neighbors]

Each node defines a computation graph

- Each edge in this graph is a transformation/aggregation function

Scarselli et al. 2009. The Graph Neural Network Model. IEEE Transactions on Neural Networks.
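The idea that each node defines its own computation graph can be sketched by unrolling a node's neighborhood into a tree. The edge list below is a hypothetical toy graph chosen to resemble the slide's figure; in the returned tree, each child-to-parent link is where a GNN would apply a transformation/aggregation function, and the depth corresponds to the number of layers.

```python
from collections import defaultdict

# Hypothetical toy graph (nodes A to F), loosely modeled on the slide's figure.
EDGES = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("C", "E"), ("C", "F")]


def build_adjacency(edges):
    """Undirected adjacency lists, preserving edge insertion order."""
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj


def computation_graph(node, depth, adj):
    """Unroll the neighborhood of `node` into a tree of the given depth.

    Leaves are bare node names; internal entries are (node, [children]).
    """
    if depth == 0:
        return node
    return (node, [computation_graph(nbr, depth - 1, adj) for nbr in adj[node]])


if __name__ == "__main__":
    adj = build_adjacency(EDGES)
    print(computation_graph("A", 2, adj))
```

Note that node A reappears as a leaf under its own neighbors: the computation graph is a tree over messages, not a subgraph, so the same node can contribute at several levels.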


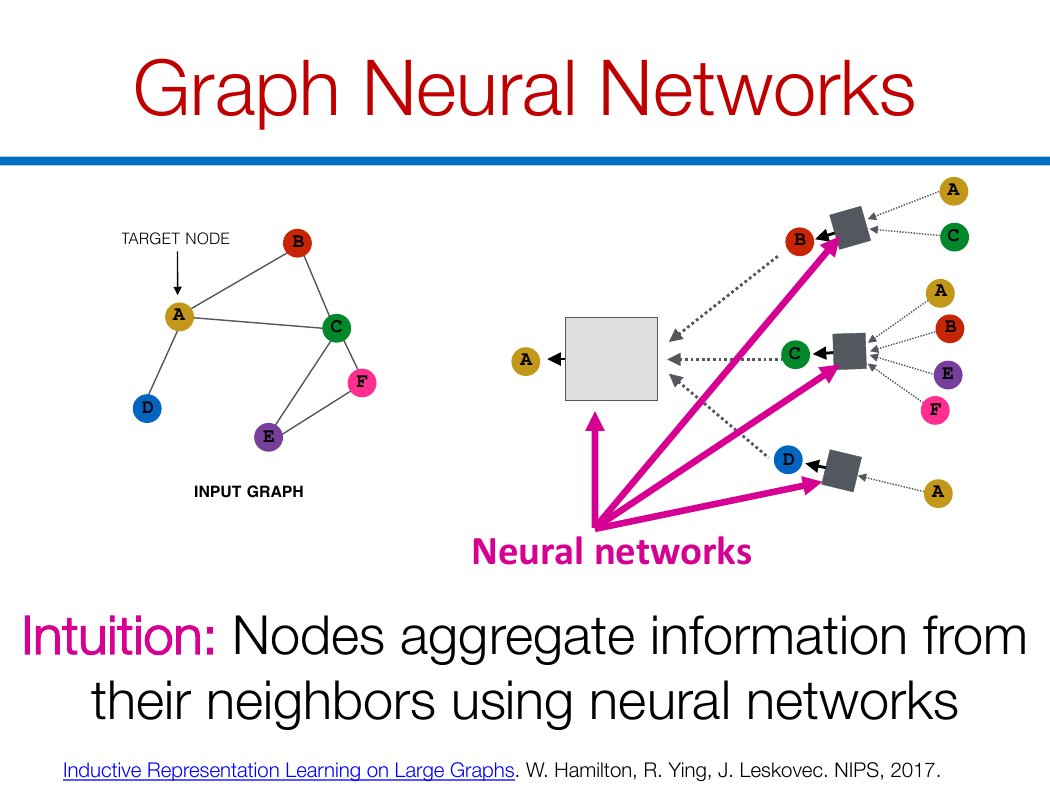

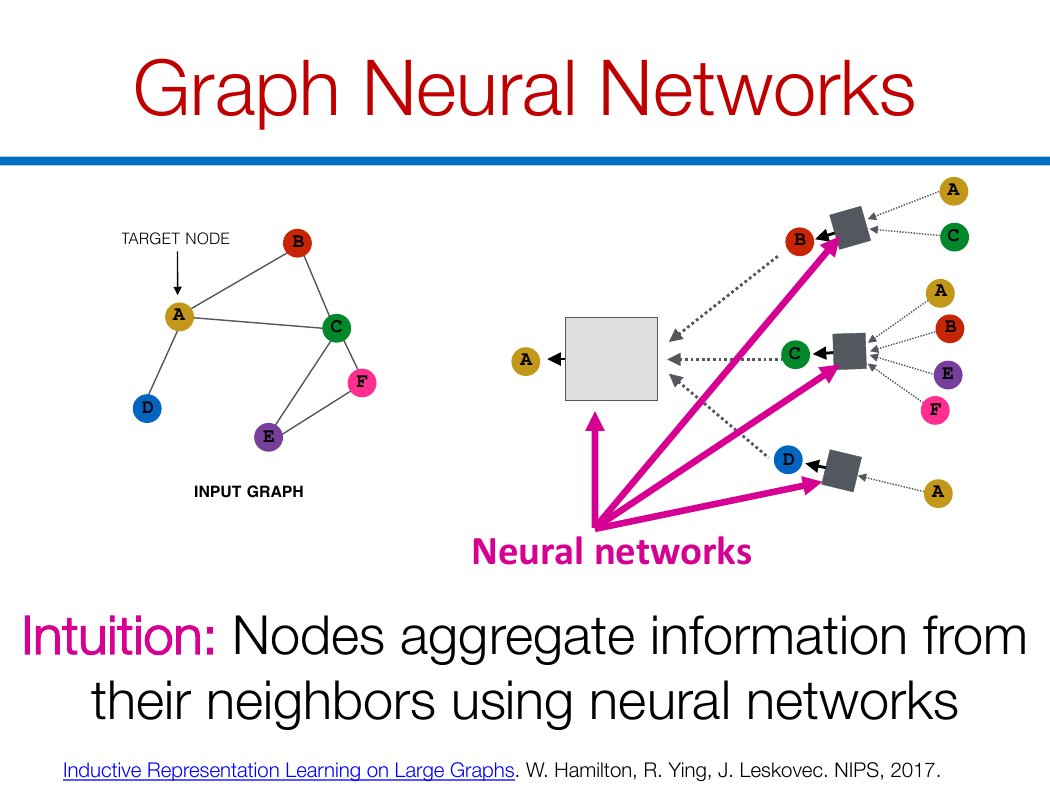

Graph Neural Networks

[Figure: the same input graph and computation graph of target node A, with each aggregation step implemented by a neural network]

Intuition: Nodes aggregate information from their neighbors using neural networks

Inductive Representation Learning on Large Graphs. W. Hamilton, R. Ying, J. Leskovec. NIPS, 2017.
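The aggregation intuition can be sketched as a single layer in the spirit of the general GraphSAGE update (Hamilton et al., 2017): each node concatenates its own vector with the mean of its neighbors' vectors, multiplies by a weight matrix, and applies a nonlinearity. This is a minimal sketch, not the paper's implementation: the graph, features, and weight matrix `W` are hypothetical and untrained, and the neighbor sampling and per-layer normalization described in the paper are omitted.

```python
# Hypothetical toy graph, 2-d input features, and a hand-picked (untrained) weight matrix.
ADJ = {"A": ["B", "C"], "B": ["A"], "C": ["A"]}
H0 = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [0.0, 3.0]}
W = [
    [1.0, 0.0, 1.0, 0.0],   # output dim 0 = self[0] + mean_neighbors[0]
    [0.0, -1.0, 0.0, 1.0],  # output dim 1 = mean_neighbors[1] - self[1]
]


def mean(vectors):
    """Elementwise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def matvec(matrix, x):
    """Matrix-vector product with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def relu(x):
    return [max(0.0, xi) for xi in x]


def gnn_layer(h, adj, weight):
    """One aggregation step: h_v' = ReLU(W . concat(h_v, mean_{u in N(v)} h_u))."""
    out = {}
    for v, nbrs in adj.items():
        # Nodes without neighbors get a zero aggregate.
        agg = mean([h[u] for u in nbrs]) if nbrs else [0.0] * len(h[v])
        out[v] = relu(matvec(weight, h[v] + agg))  # list + list = concatenation
    return out


if __name__ == "__main__":
    h1 = gnn_layer(H0, ADJ, W)
    print(h1)
    h2 = gnn_layer(h1, ADJ, W)  # stacking layers widens each node's receptive field
    print(h2)
```

Because the same `W` is shared by every node, the layer can be applied to nodes (or whole graphs) not seen during training, which is the inductive property the cited paper emphasizes.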
