Topic: Machine Learning and Data Mining

School: School of Information Engineering

Major: 19 Computer Science and Technology

Name: 胡俊峰

Student ID: 401030919008

Advisor: 邱桃荣

Date: June 11, 2020


3.1 Data Analysis

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import warnings

warnings.filterwarnings('ignore')

# Load the training set
train_data = pd.read_excel('E:/FOut/train.xlsx')
x_train = train_data.drop(['price', 'id'], axis=1)
y_train = train_data['price']

# Continuous features
numeric_features = ['feat1', 'feat3', 'feat5', 'feat7', 'feat8', 'feat9', 'feat11',
                    'feat12', 'feat13', 'feat14', 'feat15', 'feat16', 'feat17']

# Categorical (discrete) features
categorical_features = ['feat2', 'feat4', 'feat6', 'feat10', 'feat18', 'feat19', 'feat20']

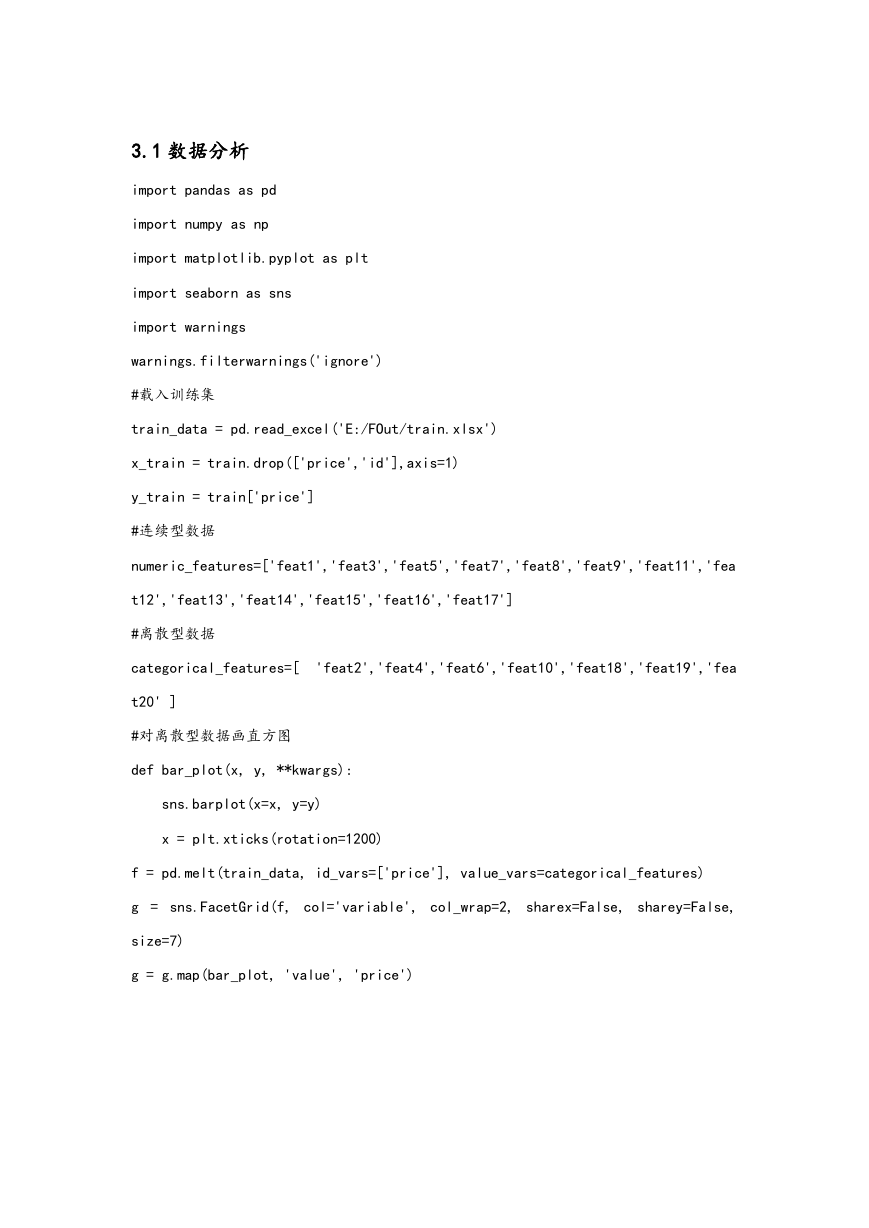

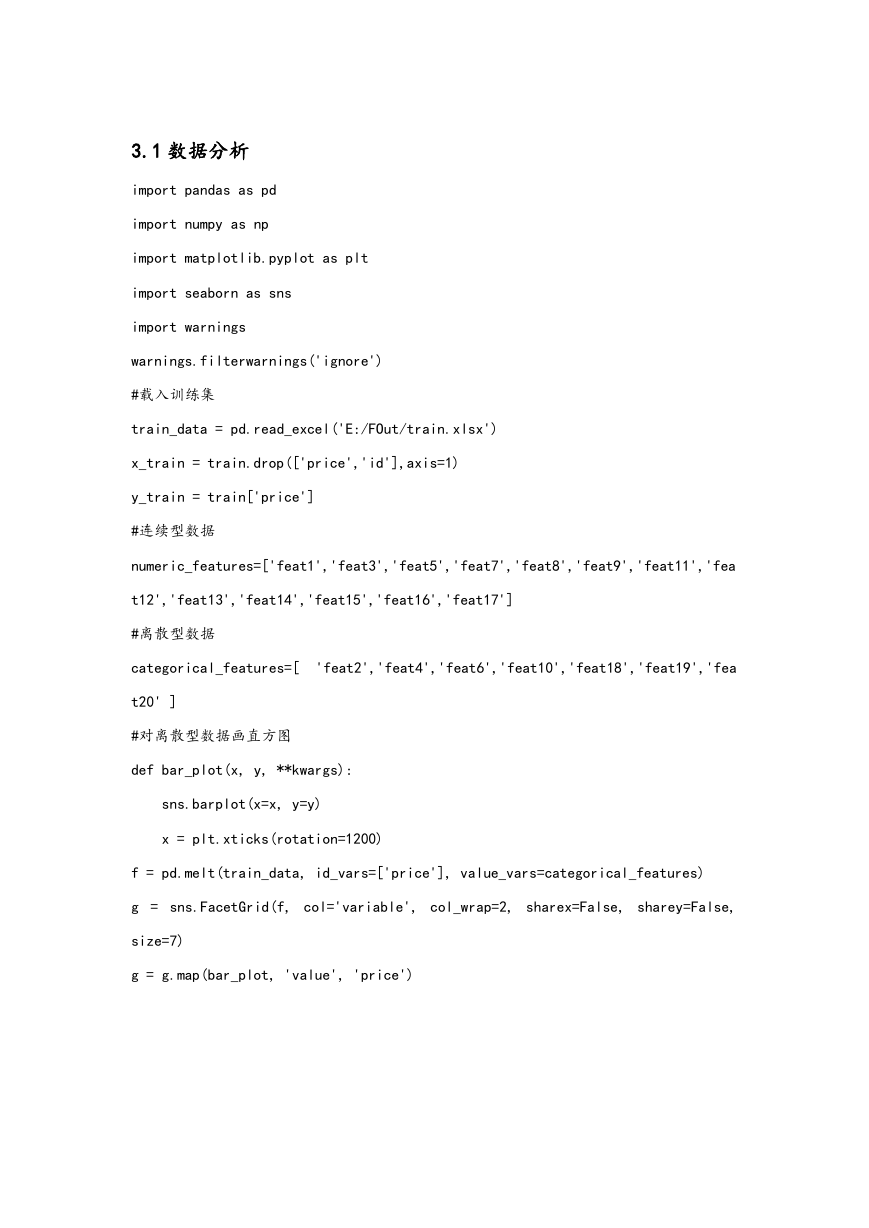

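# Bar plots of price against each categorical feature
def bar_plot(x, y, **kwargs):
    sns.barplot(x=x, y=y)
    plt.xticks(rotation=1200)  # 1200 degrees is equivalent to a 120-degree rotation

f = pd.melt(train_data, id_vars=['price'], value_vars=categorical_features)
# `height` is the size of each facet in inches (named `size` before seaborn 0.9)
g = sns.FacetGrid(f, col='variable', col_wrap=2, sharex=False, sharey=False, height=7)
g = g.map(bar_plot, 'value', 'price')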


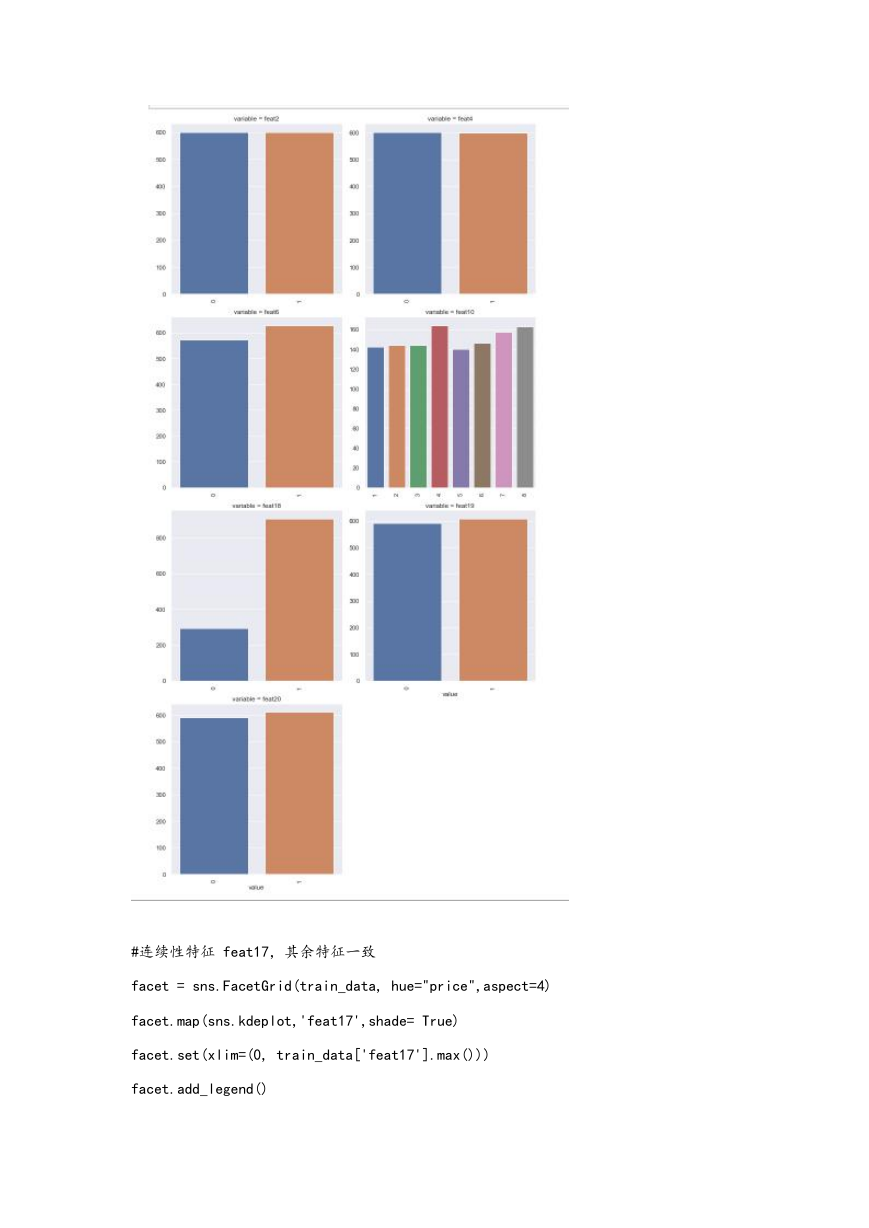

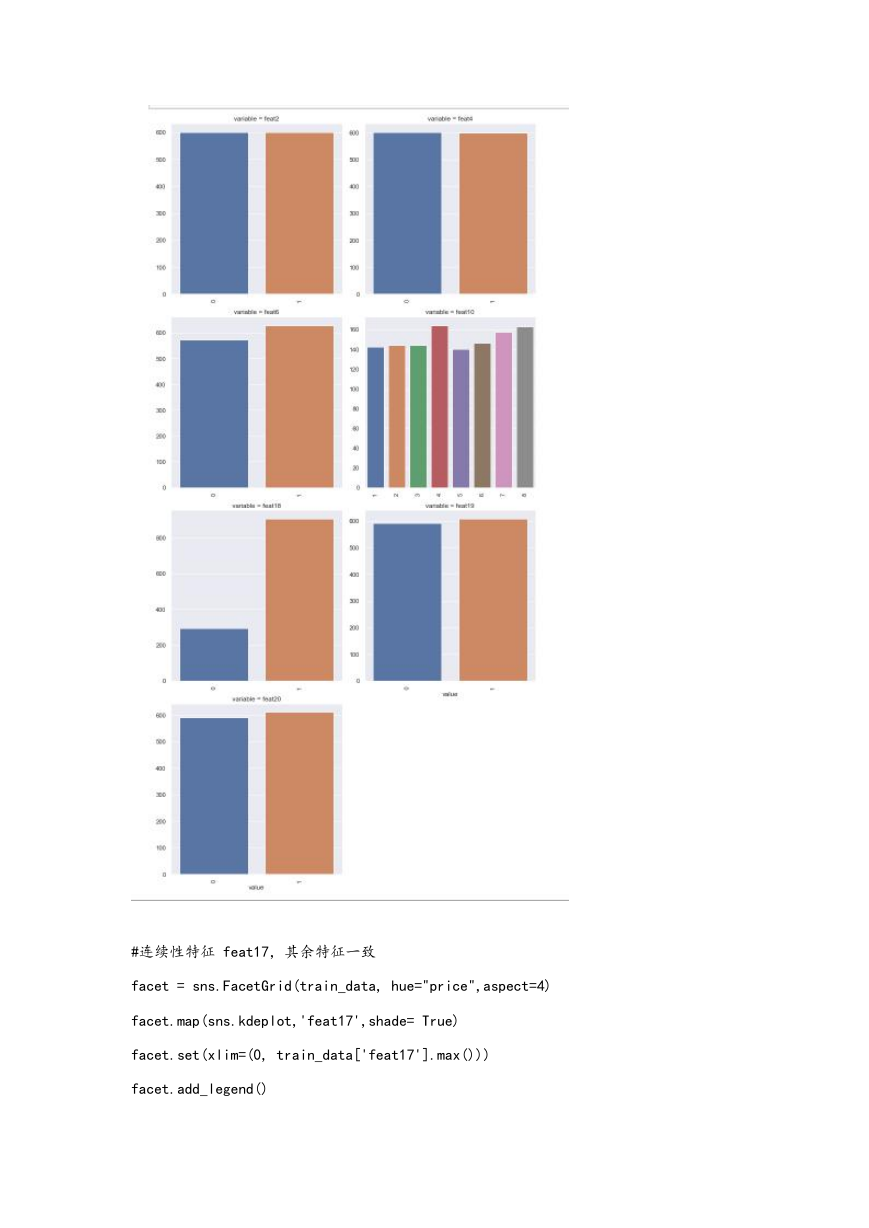

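# Density plot for the continuous feature feat17; the other continuous features are plotted the same way
facet = sns.FacetGrid(train_data, hue="price", aspect=4)
facet.map(sns.kdeplot, 'feat17', fill=True)  # `fill` is named `shade` before seaborn 0.11
facet.set(xlim=(0, train_data['feat17'].max()))
facet.add_legend()
plt.xlabel('feat17')
plt.ylabel('density')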

Features 17, 3, and 5 are shown; the plots for the remaining features are not listed individually.

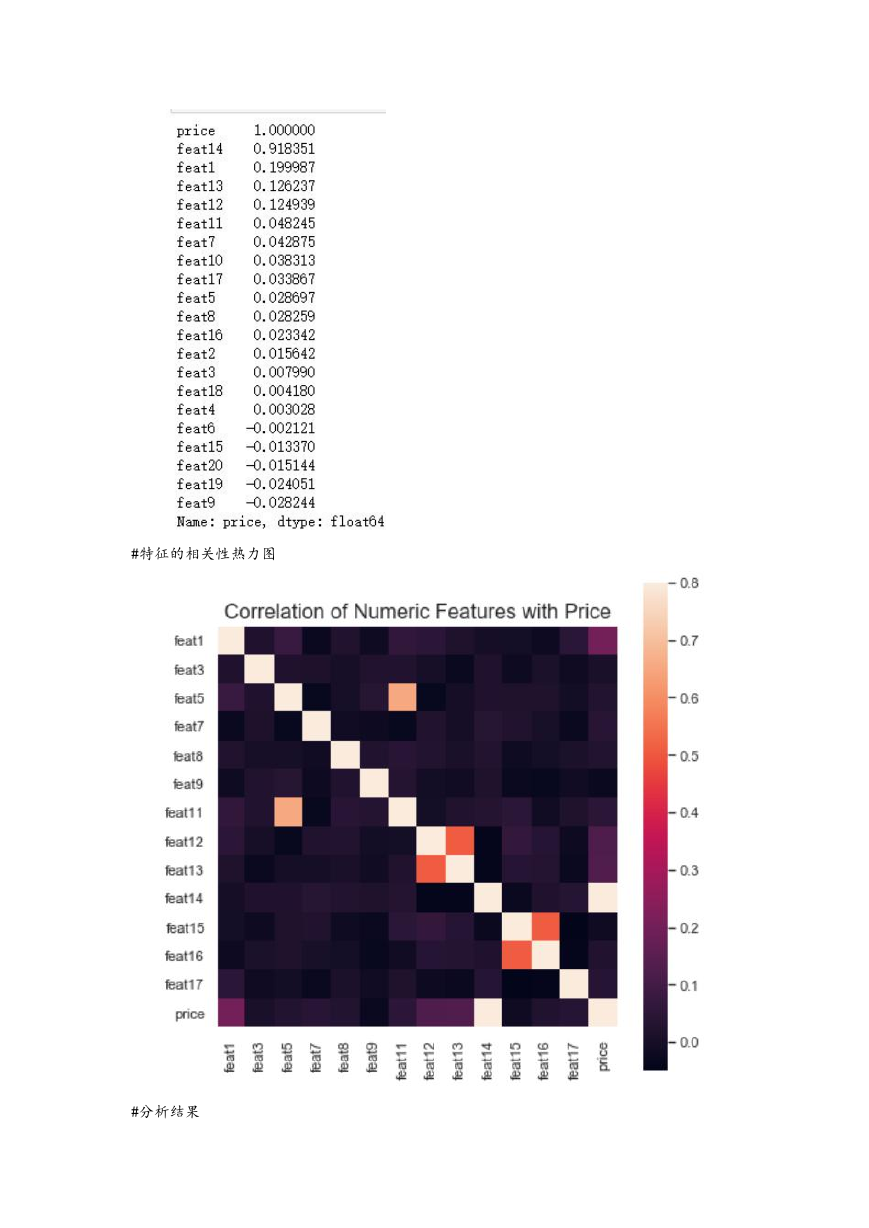

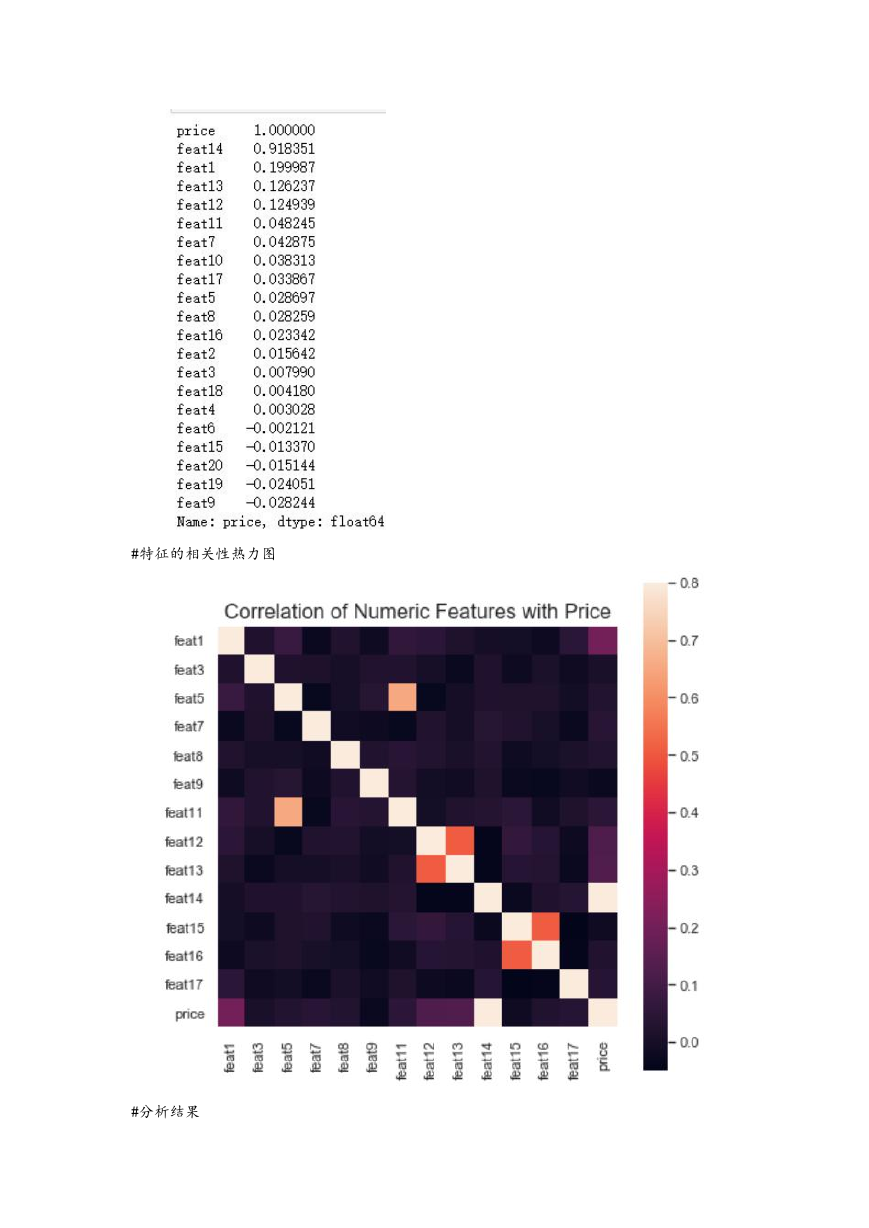

# Correlation between features
train_corr = train_data.drop(['id'], axis=1).corr()
print(train_corr['price'].sort_values(ascending=False), '\n')
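The report refers to a correlation heatmap but does not include the code that draws it. The following is a minimal sketch of one way to produce such a plot, assuming seaborn's `heatmap` is applied to the same kind of correlation matrix as `train_corr`. The helper name `plot_corr_heatmap`, the colour map, and the synthetic `demo` frame are illustrative assumptions, not the report's actual code or data.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns


def plot_corr_heatmap(df, drop_cols=("id",)):
    """Draw a heatmap of pairwise Pearson correlations and return the matrix."""
    present = [c for c in drop_cols if c in df.columns]
    corr = df.drop(columns=present).corr()
    fig, ax = plt.subplots(figsize=(10, 8))
    # Fix the colour scale to [-1, 1] so that zero correlation sits mid-scale
    sns.heatmap(corr, cmap="coolwarm", vmin=-1, vmax=1, square=True, ax=ax)
    ax.set_title("Feature correlation heatmap")
    return corr, ax


# Synthetic stand-in for train_data (hypothetical values, not the report's data)
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "id": np.arange(50),
    "feat1": rng.normal(size=50),
    "feat2": rng.normal(size=50),
})
demo["price"] = 2.0 * demo["feat1"] + rng.normal(scale=0.1, size=50)

corr, ax = plot_corr_heatmap(demo)
print(corr["price"].sort_values(ascending=False))
```

With the real data, calling `plot_corr_heatmap(train_data)` followed by `plt.show()` would display the figure; cells near the ends of the colour scale mark feature pairs with strong linear correlation.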


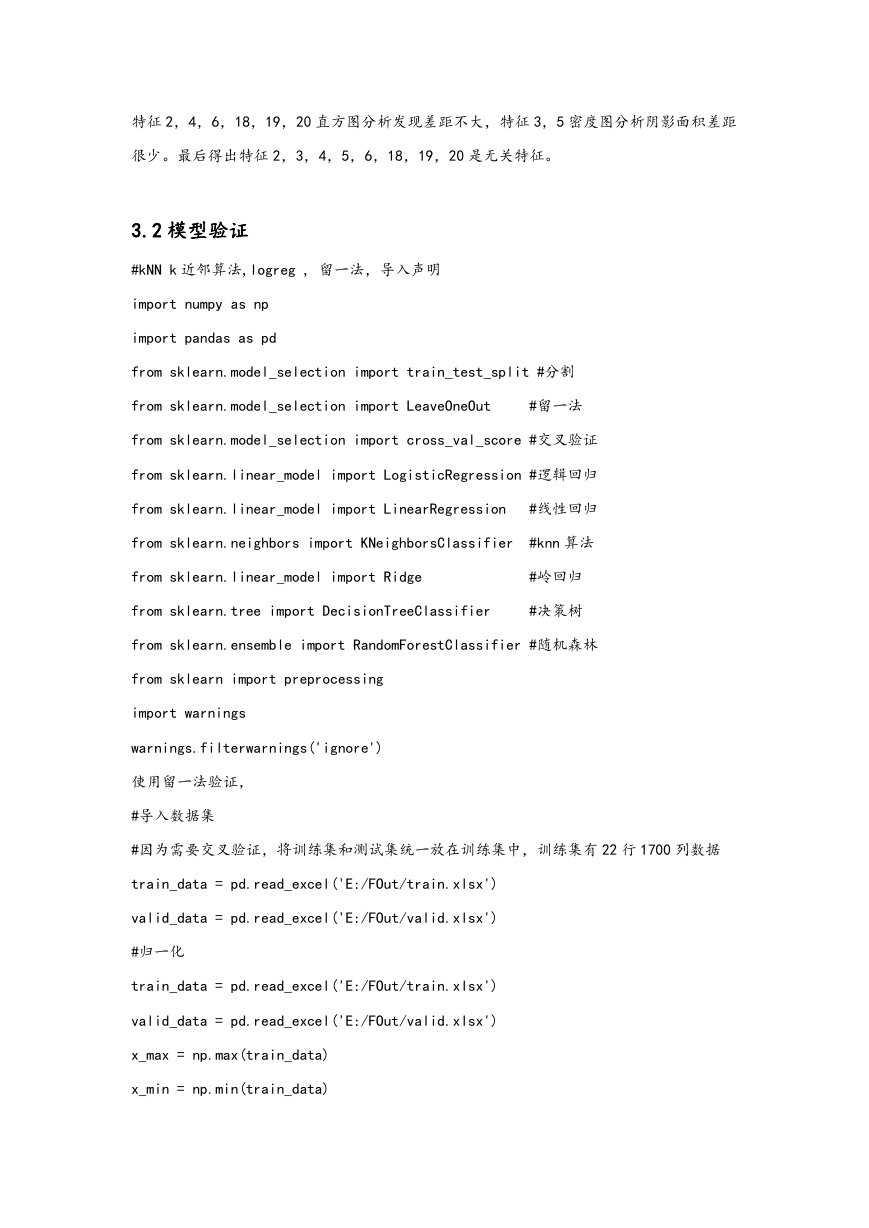

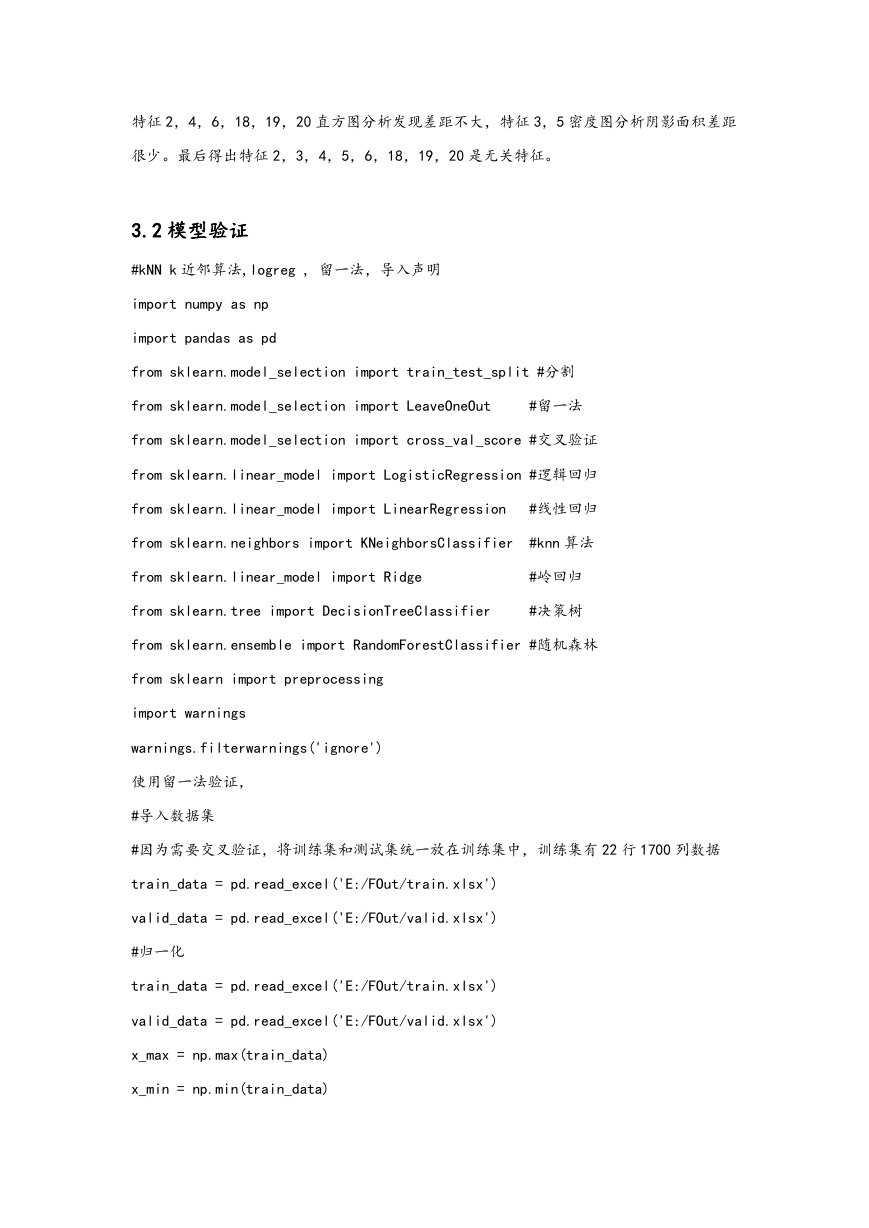

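# Correlation heatmap of the features

# Analysis results

The bar plots for features 2, 4, 6, 18, 19, and 20 show little difference in price across their values, and the density plots for features 3 and 5 show very little difference in shaded area. We therefore conclude that features 2, 3, 4, 5, 6, 18, 19, and 20 are irrelevant features.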

3.2 Model Validation

# Imports for k-nearest neighbors (kNN), logistic regression, and leave-one-out validation
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split  # train/test split
from sklearn.model_selection import LeaveOneOut  # leave-one-out
from sklearn.model_selection import cross_val_score  # cross-validation
from sklearn.linear_model import LogisticRegression  # logistic regression
from sklearn.linear_model import LinearRegression  # linear regression
from sklearn.neighbors import KNeighborsClassifier  # kNN
from sklearn.linear_model import Ridge  # ridge regression
from sklearn.tree import DecisionTreeClassifier  # decision tree
from sklearn.ensemble import RandomForestClassifier  # random forest
from sklearn import preprocessing
import warnings

warnings.filterwarnings('ignore')

Validation uses the leave-one-out method.

# Load the datasets
# Because cross-validation is needed, the training and test data are kept together in the training set,
# which has 1700 rows and 22 columns
train_data = pd.read_excel('E:/FOut/train.xlsx')
valid_data = pd.read_excel('E:/FOut/valid.xlsx')

# Min-max normalization: scale every column to [0, 1]
x_max = train_data.max()
x_min = train_data.min()
train_data1 = (train_data - x_min) / (x_max - x_min)

xv_max = valid_data.max()
xv_min = valid_data.min()
valid_data1 = (valid_data - xv_min) / (xv_max - xv_min)
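The normalization above scales the training and validation sets with their own minima and maxima, so the same raw value can map to different scaled values in the two sets. A common alternative, sketched below under stated assumptions, is to fit scikit-learn's `MinMaxScaler` on the training features only and reuse those statistics for the validation features. The helper `scale_with_train_stats` and the tiny demo frames are hypothetical and only illustrate the idea; this is not the procedure used in the report.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler


def scale_with_train_stats(train_df, valid_df, feature_cols):
    """Min-max scale feature_cols using minima and maxima learned from train_df only."""
    scaler = MinMaxScaler()
    train_scaled = train_df.copy()
    valid_scaled = valid_df.copy()
    # fit_transform learns the per-column min/max on the training data
    train_scaled[feature_cols] = scaler.fit_transform(train_df[feature_cols])
    # transform reuses the training min/max, so both sets share one scale
    valid_scaled[feature_cols] = scaler.transform(valid_df[feature_cols])
    return train_scaled, valid_scaled


# Hypothetical demo data (not the report's data); the target column is left unscaled
train_demo = pd.DataFrame({"feat1": [0.0, 5.0, 10.0], "price": [1, 2, 3]})
valid_demo = pd.DataFrame({"feat1": [5.0, 20.0], "price": [2, 3]})

train_scaled, valid_scaled = scale_with_train_stats(train_demo, valid_demo, ["feat1"])
print(train_scaled)
print(valid_scaled)
```

Validation values that lie outside the training range map outside [0, 1] under this scheme, which is expected: the scale is defined by the training data alone.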

# Drop the irrelevant features, then build the training and validation inputs
drop_cols = ['price', 'id', 'feat2', 'feat3', 'feat4', 'feat5', 'feat6',
             'feat10', 'feat18', 'feat19', 'feat20']
x_train = train_data1.drop(drop_cols, axis=1)
y_train = train_data1['price']
x_valid = valid_data1.drop(drop_cols, axis=1)
y_valid = valid_data1['price']

# Check that the data looks as expected
print(x_train)
print(y_train)
print(x_valid)
print(y_valid)

# Leave-one-out validation, repeated 10 times
loo = LeaveOneOut()
for i in range(1, 11):
    print("random_state is", i, ", and the scores are:")
    train_x, test_x, train_y, test_y = train_test_split(
        x_train, y_train, test_size=0.2, random_state=i)
    #knn = KNeighborsClassifier(n_neighbors=1).fit(train_x, train_y)
    #logreg = LogisticRegression().fit(train_x, train_y)
    #lr = LinearRegression().fit(train_x, train_y)
    Ri = Ridge().fit(train_x, train_y)
    #tree = DecisionTreeClassifier(max_depth=12).fit(train_x, train_y)
    #forest = RandomForestClassifier(n_estimators=12, random_state=2).fit(train_x, train_y)
    # R^2 is undefined on a single held-out sample, so each leave-one-out fold is scored by
    # squared error; drop `scoring` to get accuracy when one of the classifiers is used instead
    scores = cross_val_score(Ri, x_train, y_train, cv=loo, scoring='neg_mean_squared_error')
    # score() returns R^2 for Ridge and accuracy for the classifiers
    print("Test set score: {:.2f}".format(Ri.score(test_x, test_y)))
    print("Number of cv iterations: ", len(scores))
    print("Mean leave-one-out MSE: {:.2f}".format(-scores.mean()))
    print("Valid set score: {:.2f}".format(Ri.score(x_valid, y_valid)))  # evaluate on the validation set
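To make the mechanics of leave-one-out validation concrete for the classifier variants listed in the loop above, here is a small self-contained sketch on synthetic data. Each fold holds out exactly one sample, so with the default accuracy scoring every fold score is either 0 or 1 and their mean is the leave-one-out accuracy. The two-cluster data and the choice of `n_neighbors=3` are assumptions made for illustration only.

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic two-class data: two well-separated Gaussian clusters (hypothetical)
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=-3.0, size=(20, 2)),
    rng.normal(loc=3.0, size=(20, 2)),
])
y = np.array([0] * 20 + [1] * 20)

loo = LeaveOneOut()
# One fold per sample: train on the other 39 points, test on the held-out one
fold_scores = cross_val_score(KNeighborsClassifier(n_neighbors=3), X, y, cv=loo)

print("Number of folds:", len(fold_scores))
print("Leave-one-out accuracy: {:.2f}".format(fold_scores.mean()))
```

Because the number of folds equals the number of samples, the cost grows linearly with dataset size, which is worth keeping in mind before repeating the procedure many times on a larger training set.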

# 10-fold cross-validation
for i in range(1, 11):
    train_x, test_x, train_y, test_y = train_test_split(
        x_train, y_train, test_size=0.2, random_state=i)
    #tree = DecisionTreeClassifier(max_depth=12).fit(train_x, train_y)
    #knn = KNeighborsClassifier(n_neighbors=1).fit(train_x, train_y)
    #logreg = LogisticRegression().fit(train_x, train_y)
    #lr = LinearRegression().fit(train_x, train_y)
    #Ri = Ridge().fit(train_x, train_y)
    forest = RandomForestClassifier(n_estimators=12, random_state=2).fit(train_x, train_y)
    scores = cross_val_score(forest, x_train, y_train, cv=10)
    print(scores.mean())
    print("valid set accuracy: {:.2f}".format(forest.score(x_valid, y_valid)))  # evaluate on the validation set
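When `cv=10` is passed as an integer, `cross_val_score` uses unshuffled folds, so calling it again inside a loop reproduces the same split each time. If the intent is to repeat 10-fold cross-validation over different random partitions, one option is scikit-learn's `RepeatedStratifiedKFold`. The sketch below assumes a classification target and uses synthetic data from `make_classification`; the dataset sizes and seeds are illustrative assumptions, not values from the report.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Synthetic classification data standing in for x_train / y_train (hypothetical)
X, y = make_classification(n_samples=200, n_features=8, n_informative=4,
                           random_state=0)

# 10 folds, reshuffled and repeated 10 times: 100 train/test splits in total
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=10, random_state=0)
forest = RandomForestClassifier(n_estimators=12, random_state=2)
scores = cross_val_score(forest, X, y, cv=cv)

print("Number of scores:", len(scores))
print("Mean accuracy: {:.2f} (std {:.2f})".format(scores.mean(), scores.std()))
```

Reporting the standard deviation alongside the mean gives a rough sense of how much the estimate depends on the particular partition.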

3.3 Test Results
