Learning the parts of objects by nonnegative matrix factorization

D.D. Lee from Bell Labs

H.S. Seung from MIT

Presenter: Zhipeng Zhao


Introduction

• NMF (Nonnegative Matrix Factorization):

Theory: perception of the whole is based on perception of its parts.

• Comparison with two other matrix factorization methods:

PCA (Principal Component Analysis)

VQ (Vector Quantization)


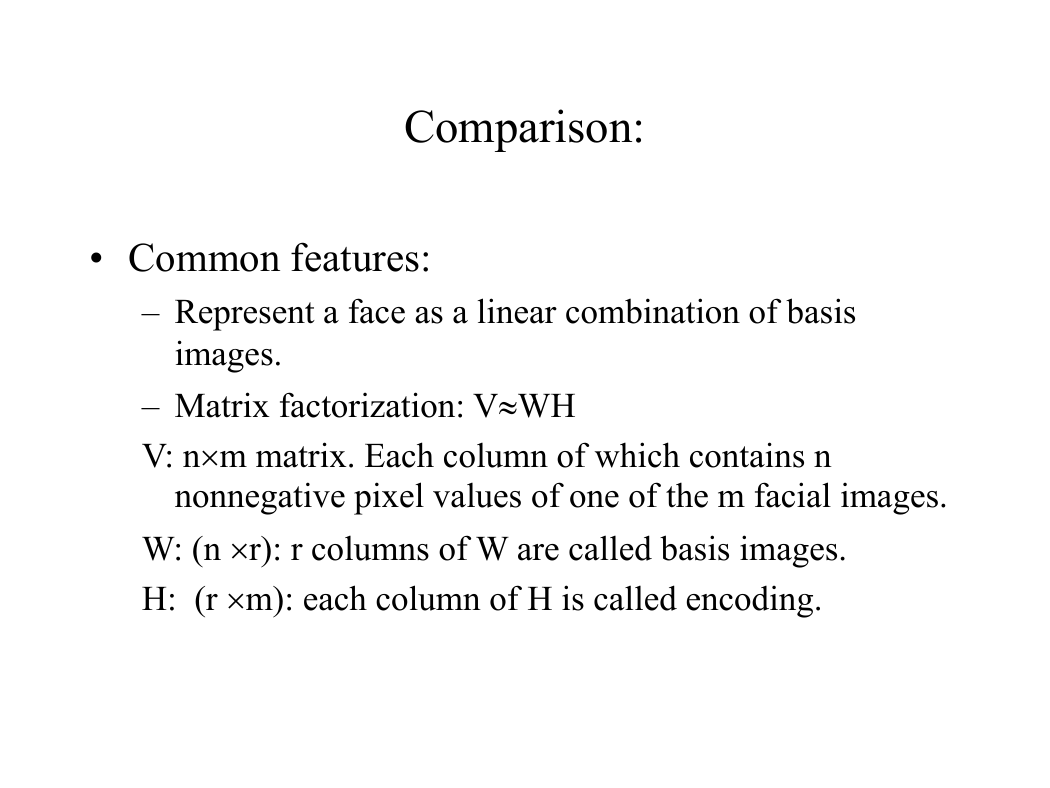

Comparison:

• Common features:

– Represent a face as a linear combination of basis images.

– Matrix factorization: $V \approx WH$

$V$: $n \times m$ matrix, each column of which contains the $n$ nonnegative pixel values of one of the $m$ facial images.

$W$: $n \times r$ matrix; its $r$ columns are called basis images.

$H$: $r \times m$ matrix; each column of $H$ is called an encoding.
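To make the shapes concrete, here is a minimal plain-Python sketch with made-up numbers ($n = 4$ pixels, $r = 2$ basis images, $m = 3$ faces); the helper names `matmul` and `column` are hypothetical. It checks that one column of $WH$, i.e. one approximated face, is exactly the linear combination of the basis images weighted by that face's encoding.

```python
def matmul(A, B):
    """Multiply an (a x b) matrix by a (b x c) matrix, both stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def column(M, j):
    """Return column j of a list-of-rows matrix."""
    return [row[j] for row in M]

# W: n x r, each of its r = 2 columns is a basis image of n = 4 pixels (made-up values).
W = [[1.0, 0.0],
     [0.5, 0.0],
     [0.0, 2.0],
     [0.0, 1.0]]
# H: r x m, each of its m = 3 columns is the encoding of one face (made-up values).
H = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.5]]

WH = matmul(W, H)  # n x m approximation of V
print(len(WH), len(WH[0]))  # 4 3

# Face 2 is approximated by 2.0 * (basis image 0) + 0.5 * (basis image 1).
face = column(WH, 2)
combo = [H[0][2] * w0 + H[1][2] * w1 for w0, w1 in zip(column(W, 0), column(W, 1))]
print(face == combo, face)  # True [2.0, 1.0, 1.0, 0.5]
```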


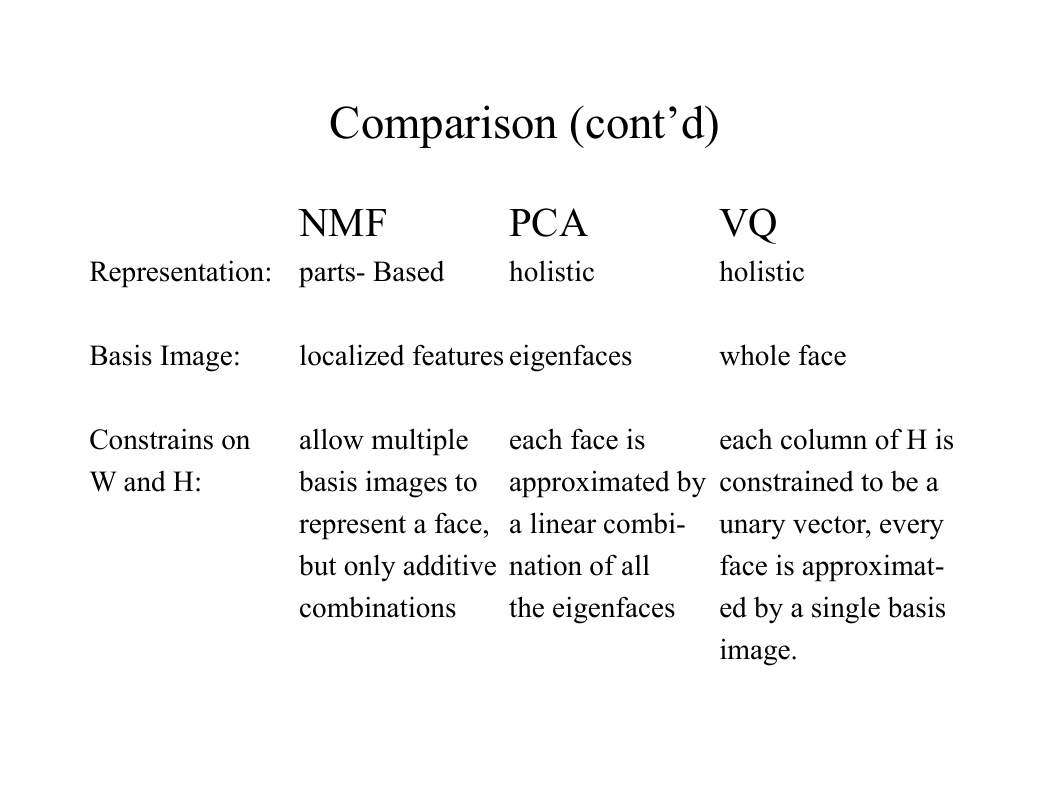

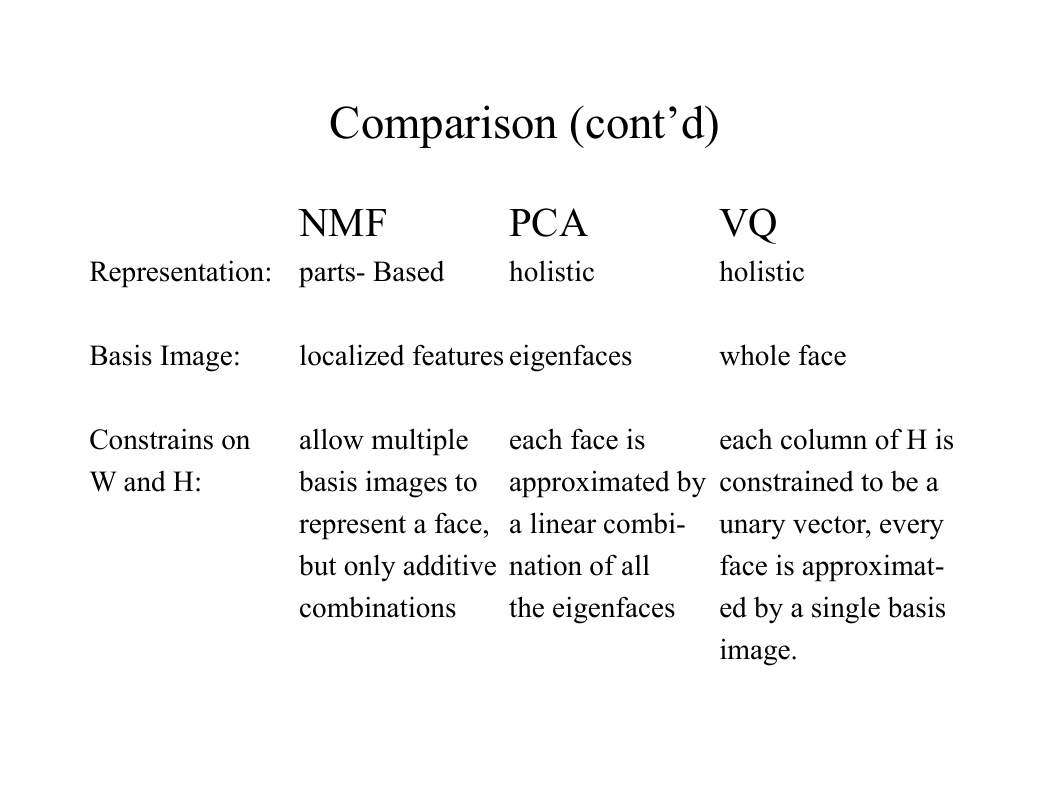

Comparison (cont’d)

| | NMF | PCA | VQ |
|---|---|---|---|
| Representation | parts-based | holistic | holistic |
| Basis images | localized features | eigenfaces | whole faces |
| Constraints on W and H | allow multiple basis images to represent a face, but only additive combinations | each face is approximated by a linear combination of all the eigenfaces | each column of H is constrained to be a unary vector; every face is approximated by a single basis image |
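The constraint row of the table comes down to what an encoding column $h$ may look like. The sketch below uses made-up nonnegative basis vectors and encodings (all names hypothetical), reconstructs a face as $\sum_a h_a w_a$, and labels each encoding by the constraint it satisfies. It illustrates only the encoding constraints; it does not compute eigenfaces (which, unlike these toy vectors, can have negative pixels) or run any VQ clustering.

```python
# Hypothetical basis images (4 pixels each); think of them as "parts".
basis = [
    [1.0, 1.0, 0.0, 0.0],  # basis image 0
    [0.0, 0.0, 1.0, 0.0],  # basis image 1
    [0.0, 0.0, 0.0, 1.0],  # basis image 2
]

def reconstruct(basis, h):
    """Face approximation sum_a h[a] * basis[a], i.e. one column of WH."""
    return [sum(h[a] * basis[a][p] for a in range(len(h))) for p in range(len(basis[0]))]

def is_unary(h):
    """VQ constraint: exactly one entry is 1 and all others are 0."""
    return sorted(h) == [0] * (len(h) - 1) + [1]

def is_nonnegative(h):
    """NMF constraint: no negative coefficients, so basis images can only be added."""
    return all(x >= 0 for x in h)

def describe(h):
    # A unary vector is also nonnegative, so test the stricter VQ constraint first.
    if is_unary(h):
        return "VQ: a single basis image"
    if is_nonnegative(h):
        return "NMF: additive combination of several basis images"
    return "PCA-like: signed combination, parts can cancel"

h_vq = [0.0, 1.0, 0.0]
h_nmf = [0.5, 1.0, 2.0]
h_signed = [1.0, -0.5, 0.3]

for h in (h_vq, h_nmf, h_signed):
    print(describe(h), reconstruct(basis, h))
```

The VQ encoding reproduces basis image 1 unchanged, while the NMF encoding adds several parts together; only the signed encoding lets one part subtract from another.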


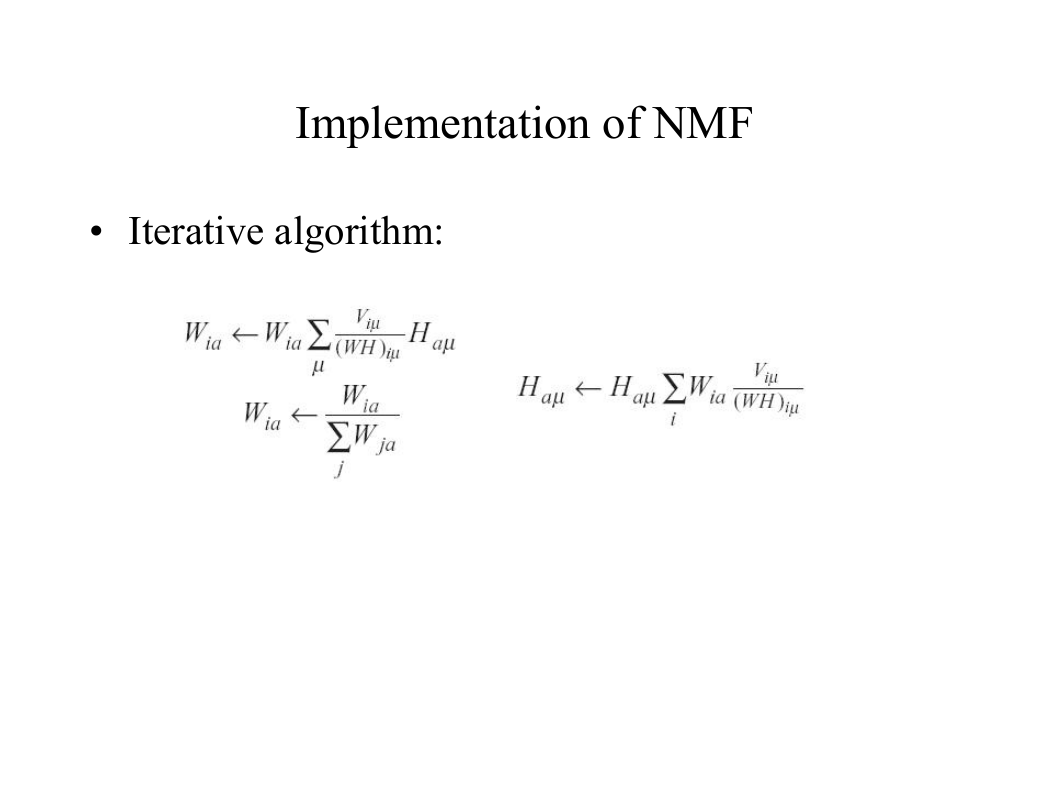

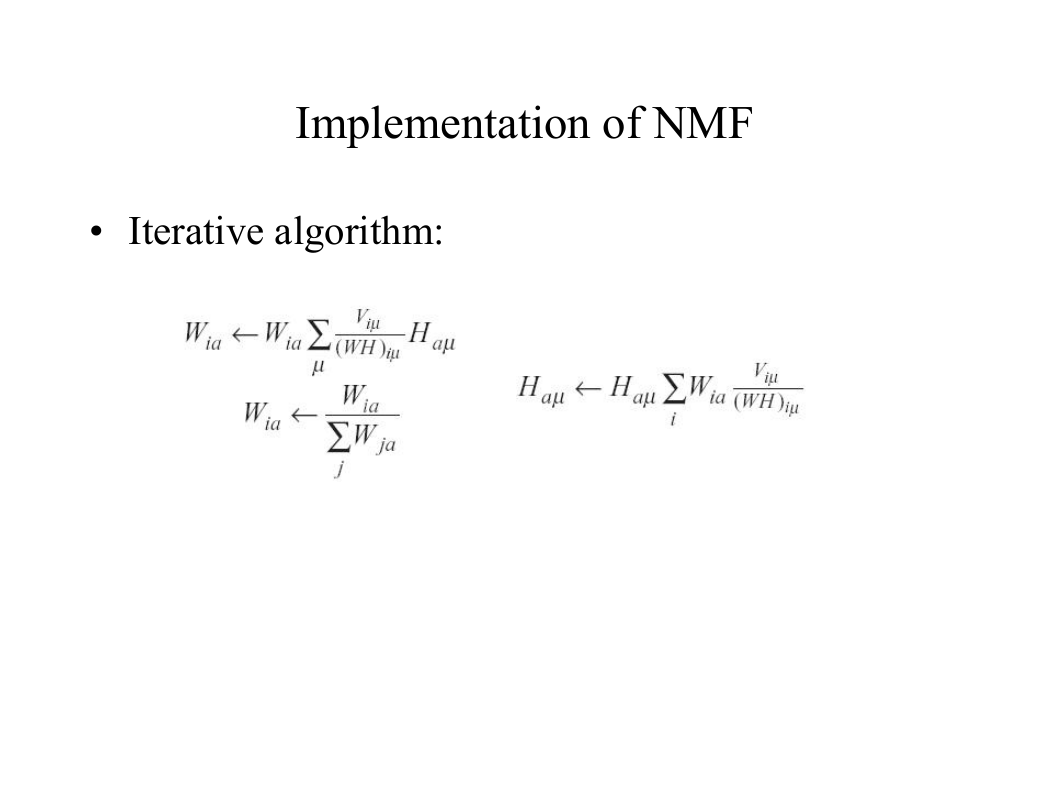

Implementation of NMF

• Iterative algorithm:
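The update rules themselves appear only as an image on the slide. As we understand the original paper, one iteration applies three multiplicative updates: $W_{ia} \leftarrow W_{ia}\sum_\mu \frac{V_{i\mu}}{(WH)_{i\mu}} H_{a\mu}$, then $W_{ia} \leftarrow W_{ia} / \sum_j W_{ja}$ (each basis column normalized to sum to 1), then $H_{a\mu} \leftarrow H_{a\mu}\sum_i W_{ia}\frac{V_{i\mu}}{(WH)_{i\mu}}$. The plain-Python sketch below implements them under stated assumptions: recomputing $WH$ between the W and H updates, the random initialization, the iteration count, and the toy matrix are our choices, not details from the slides. It prints the objective $F$ (written out in the `objective` docstring) before and after iterating.

```python
import math
import random

def matmul(A, B):
    """Multiply an (a x b) matrix by a (b x c) matrix, both stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def objective(V, W, H):
    """F = sum over i, mu of V[i][mu] * log((WH)[i][mu]) - (WH)[i][mu]."""
    WH = matmul(W, H)
    return sum(V[i][mu] * math.log(WH[i][mu]) - WH[i][mu]
               for i in range(len(V)) for mu in range(len(V[0])))

def nmf_step(V, W, H):
    """One pass of the three multiplicative updates; returns the new (W, H)."""
    n, m, r = len(V), len(V[0]), len(H)
    WH = matmul(W, H)
    R = [[V[i][mu] / WH[i][mu] for mu in range(m)] for i in range(n)]  # V / (WH)
    # (1) W[i][a] <- W[i][a] * sum_mu R[i][mu] * H[a][mu]
    W = [[W[i][a] * sum(R[i][mu] * H[a][mu] for mu in range(m)) for a in range(r)]
         for i in range(n)]
    # (2) W[i][a] <- W[i][a] / sum_j W[j][a], so every basis column sums to 1
    col = [sum(W[j][a] for j in range(n)) for a in range(r)]
    W = [[W[i][a] / col[a] for a in range(r)] for i in range(n)]
    # (3) H[a][mu] <- H[a][mu] * sum_i W[i][a] * R[i][mu]; R is recomputed from the
    #     normalized W here, which is an assumption of this sketch
    WH = matmul(W, H)
    R = [[V[i][mu] / WH[i][mu] for mu in range(m)] for i in range(n)]
    H = [[H[a][mu] * sum(W[i][a] * R[i][mu] for i in range(n)) for mu in range(m)]
         for a in range(r)]
    return W, H

# Toy 4 x 3 matrix with strictly positive entries (made-up numbers), rank r = 2.
V = [[1.0, 2.0, 3.0],
     [2.0, 4.0, 6.5],
     [0.5, 1.0, 1.0],
     [3.0, 1.0, 2.0]]
rng = random.Random(0)
W = [[rng.uniform(0.1, 1.0) for _ in range(2)] for _ in range(4)]
H = [[rng.uniform(0.1, 1.0) for _ in range(3)] for _ in range(2)]

F_start = objective(V, W, H)
for _ in range(300):
    W, H = nmf_step(V, W, H)
F_end = objective(V, W, H)
print(f"F: {F_start:.3f} -> {F_end:.3f}")
```

Because every update only multiplies by positive ratios, starting from positive values with a strictly positive V keeps all entries positive, so the nonnegativity constraint never has to be enforced explicitly.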


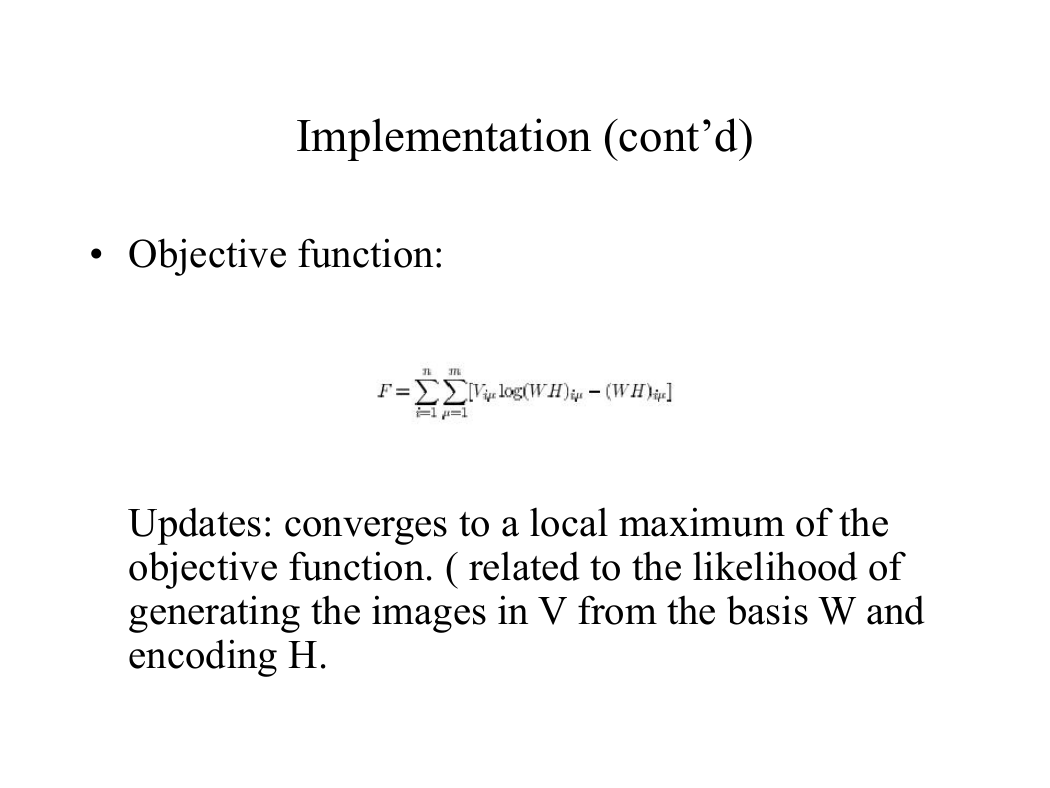

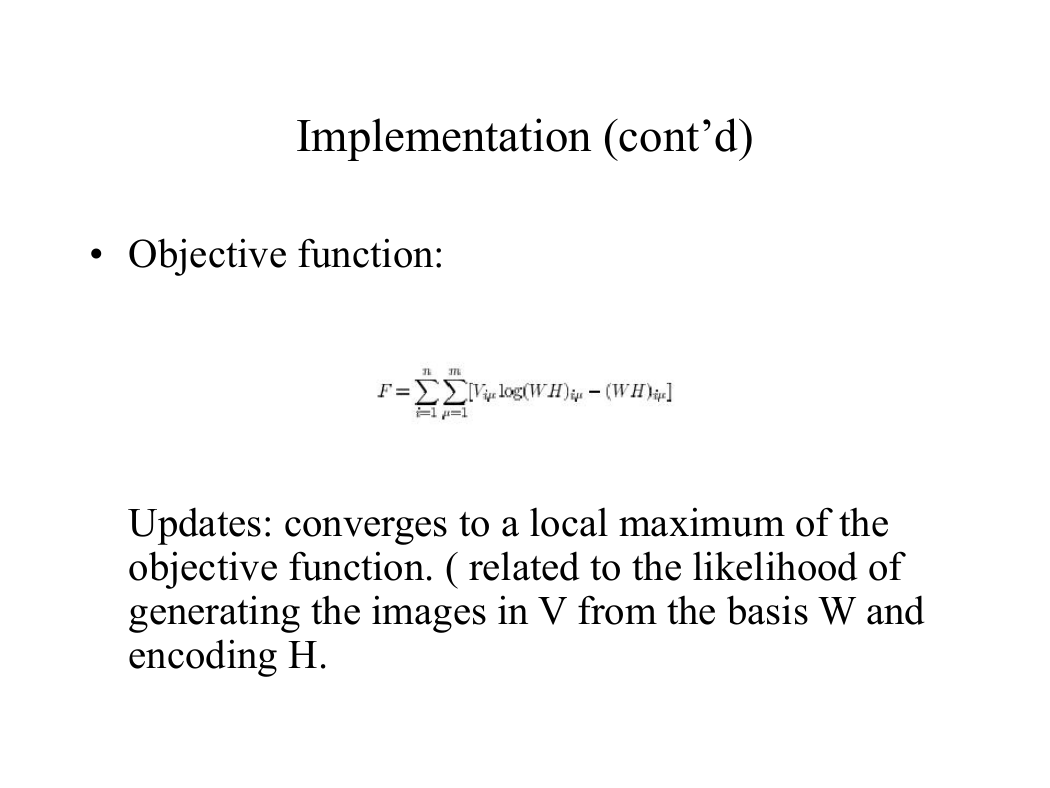

Implementation (cont’d)

• Objective function:

Updates: the update rules converge to a local maximum of the objective function, which is related to the likelihood of generating the images in V from the basis W and encodings H.
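In the original paper the objective is $F = \sum_i \sum_\mu \left[V_{i\mu}\log(WH)_{i\mu} - (WH)_{i\mu}\right]$, and the link to likelihood comes from modelling each pixel $V_{i\mu}$ as $(WH)_{i\mu}$ plus Poisson noise. The sketch below (made-up integer counts and reconstruction, hypothetical function names) checks two consequences: $F$ differs from the Poisson log-likelihood only by $\sum \log V_{i\mu}!$, which does not depend on W or H, and $F$ is larger when $WH$ matches $V$ exactly, since each term $v\log x - x$ peaks at $x = v$.

```python
import math

def objective(V, WH):
    """F = sum over entries of V * log(WH) - WH, for a given reconstruction WH."""
    return sum(v * math.log(x) - x
               for v_row, x_row in zip(V, WH) for v, x in zip(v_row, x_row))

def poisson_log_likelihood(V, WH):
    """log P(V | WH) when each V entry is Poisson with mean equal to the WH entry."""
    return sum(v * math.log(x) - x - math.lgamma(v + 1)
               for v_row, x_row in zip(V, WH) for v, x in zip(v_row, x_row))

V = [[2, 1, 5], [1, 3, 4]]                # made-up pixel counts
WH = [[1.5, 0.5, 4.0], [1.0, 2.5, 4.5]]   # some positive reconstruction

# The gap is sum log(V!) = sum lgamma(V + 1), a constant independent of W and H,
# so maximizing F and maximizing the Poisson likelihood pick the same W and H.
const = sum(math.lgamma(v + 1) for row in V for v in row)
print(abs(objective(V, WH) - poisson_log_likelihood(V, WH) - const) < 1e-9)  # True

# A perfect reconstruction (WH equal to V) scores higher than the imperfect one.
V_float = [[float(v) for v in row] for row in V]
print(objective(V, V_float) > objective(V, WH))  # True
```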


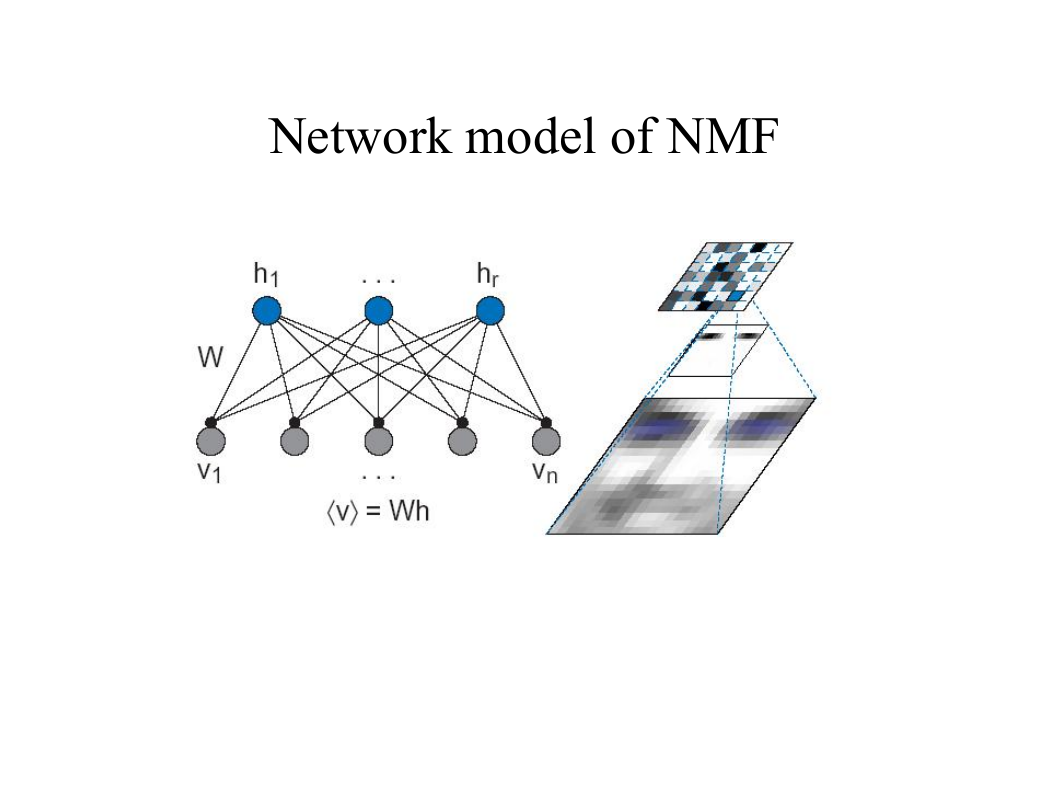

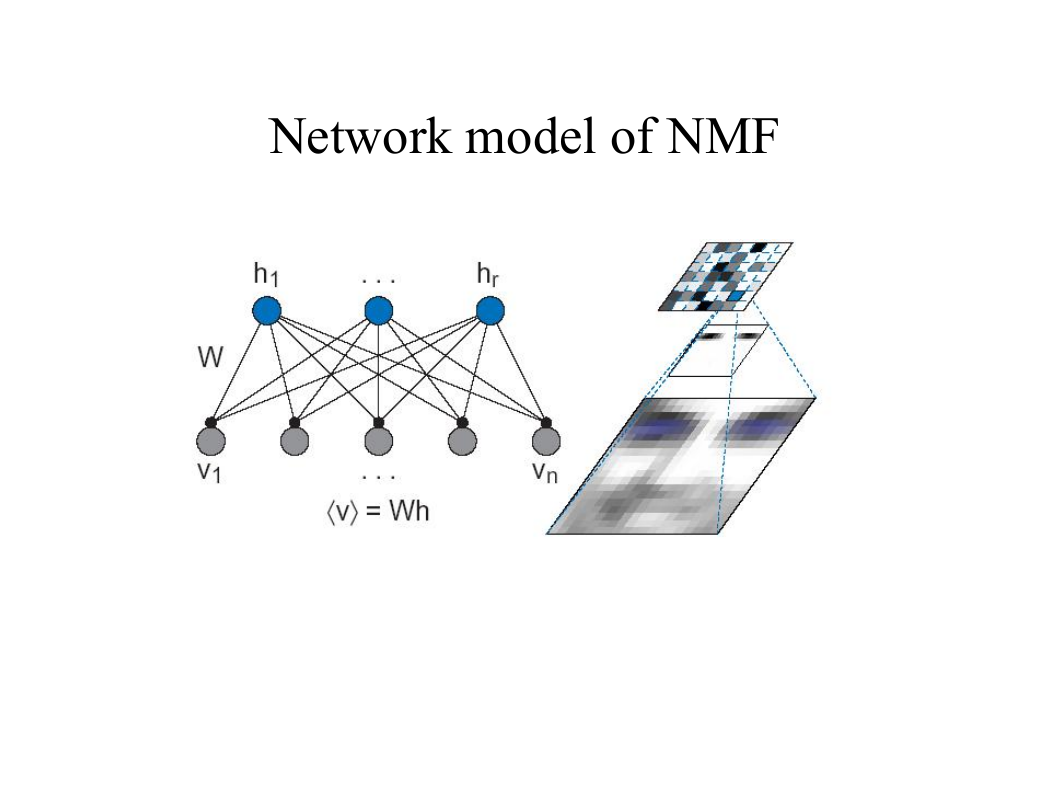

Network model of NMF


Semantic analysis of text documents using NMF

• A corpus of documents is summarized by a matrix $V$, where $V_{i\mu}$ is the number of times the $i$th word in the vocabulary appears in the $\mu$th document.

• The NMF algorithm finds an approximate factorization of this matrix, $V \approx WH$, into a feature set $W$ and hidden variables $H$, in the same way as was done for faces.
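A minimal sketch of the text case, assuming a tiny made-up four-document corpus: it builds the word-by-document count matrix V and applies multiplicative updates of the form used for faces (W update, column normalization of W, then H update, as restated in the comments). The small `eps` in the denominators is our addition because V contains zeros; the corpus, the rank r = 2, and the iteration count are arbitrary choices.

```python
import random
from collections import Counter

# Tiny made-up corpus; each string is one document.
docs = [
    "stock market prices fall",
    "market prices rise stock trading",
    "team wins football match",
    "football team match score",
]
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

# V[i][mu] = number of times the i-th vocabulary word appears in document mu.
V = [[0] * len(docs) for _ in vocab]
for mu, d in enumerate(docs):
    for w, c in Counter(d.split()).items():
        V[index[w]][mu] = c

def nmf_step(V, W, H, eps=1e-12):
    """W[i][a] *= sum_mu R[i][mu] * H[a][mu]; normalize W columns to sum 1;
    then H[a][mu] *= sum_i W[i][a] * R[i][mu], where R = V / (WH + eps)."""
    n, m, r = len(V), len(V[0]), len(H)

    def ratio(W, H):
        # R[i][mu] = V[i][mu] / ((WH)[i][mu] + eps)
        WH = [[sum(W[i][a] * H[a][mu] for a in range(r)) for mu in range(m)]
              for i in range(n)]
        return [[V[i][mu] / (WH[i][mu] + eps) for mu in range(m)] for i in range(n)]

    R = ratio(W, H)
    W = [[W[i][a] * sum(R[i][mu] * H[a][mu] for mu in range(m)) for a in range(r)]
         for i in range(n)]
    col = [sum(W[i][a] for i in range(n)) for a in range(r)]
    W = [[W[i][a] / col[a] for a in range(r)] for i in range(n)]
    R = ratio(W, H)
    H = [[H[a][mu] * sum(W[i][a] * R[i][mu] for i in range(n)) for mu in range(m)]
         for a in range(r)]
    return W, H

rng = random.Random(1)
r = 2  # number of features; an arbitrary choice for this corpus
W = [[rng.uniform(0.1, 1.0) for _ in range(r)] for _ in vocab]
H = [[rng.uniform(0.1, 1.0) for _ in docs] for _ in range(r)]
for _ in range(500):
    W, H = nmf_step(V, W, H)

# Read each column of W as a feature by listing its highest-weighted words.
for a in range(r):
    top = sorted(range(len(vocab)), key=lambda i: W[i][a], reverse=True)[:3]
    print(f"feature {a}:", [vocab[i] for i in top])
```

On a corpus this small the features often separate the finance words from the sports words, but that is not guaranteed, since the updates only reach a local maximum.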
