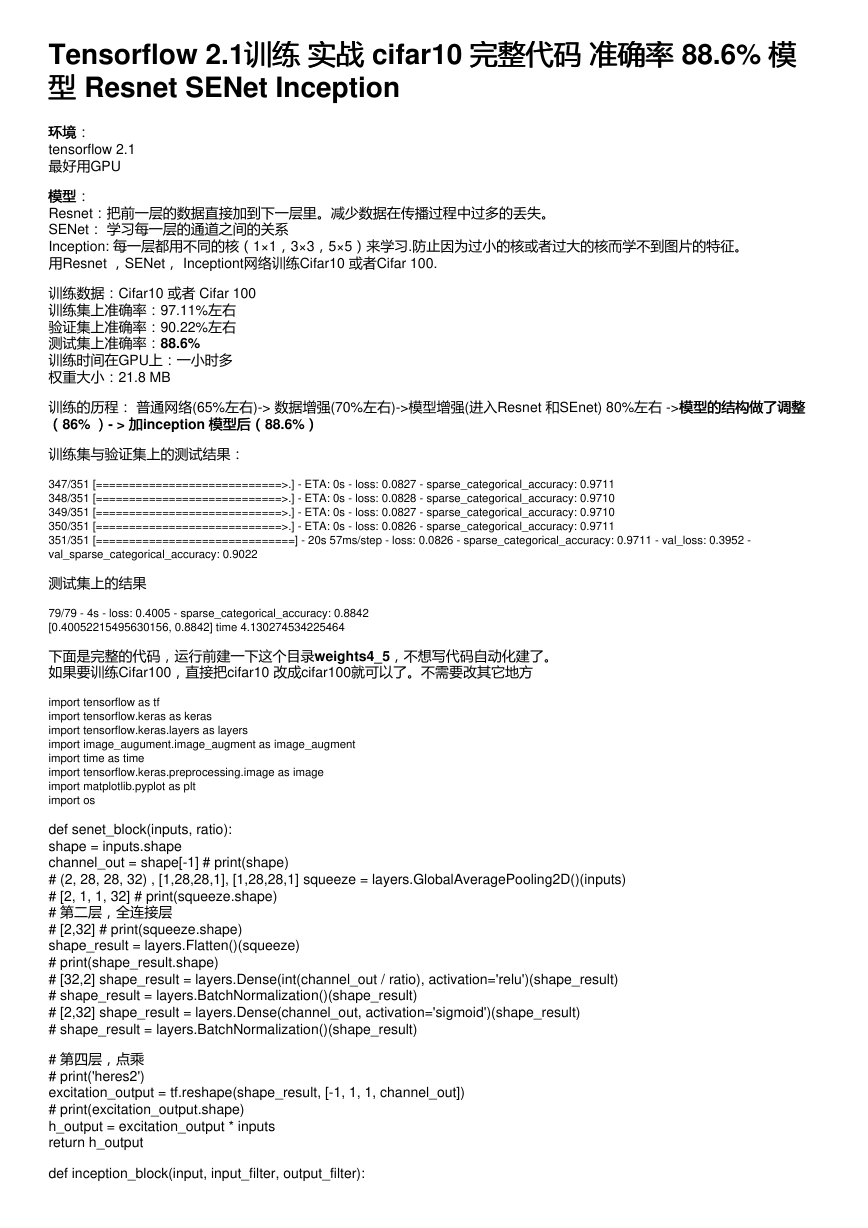

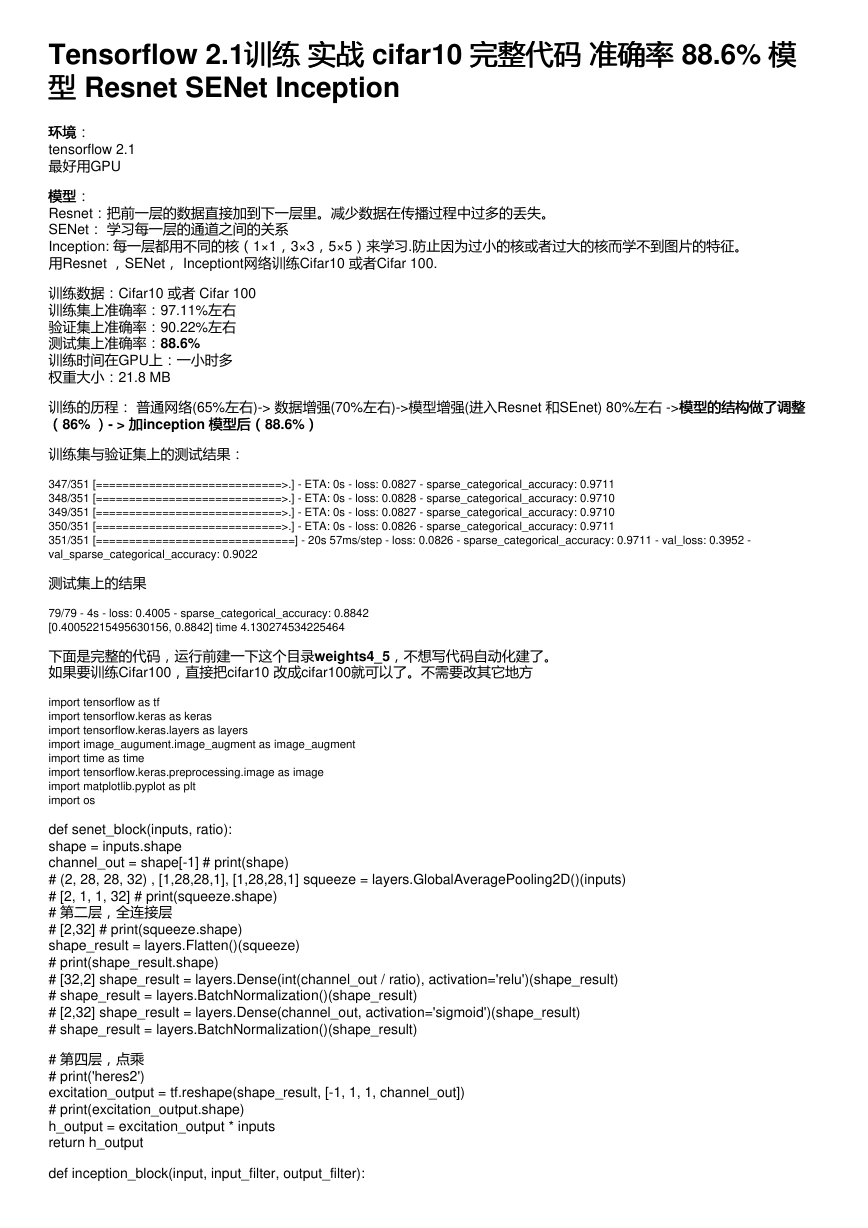

TensorFlow 2.1 in practice: training on CIFAR-10 with a ResNet + SENet + Inception model (complete code, 88.6% accuracy)

Environment:

tensorflow 2.1

Preferably with a GPU

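Model:

ResNet: adds the output of an earlier layer directly to a later layer (a shortcut connection), which reduces how much information is lost as data propagates through the network.

SENet: learns the relationships between the channels of each layer.

Inception: each block applies kernels of several sizes (1×1, 3×3, 5×5) in parallel, so that image features are not missed because a kernel is too small or too large.

The network combines ResNet, SENet, and Inception blocks and is trained on CIFAR-10 or CIFAR-100.

Training data: CIFAR-10 or CIFAR-100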

Training accuracy: about 97.11%

Validation accuracy: about 90.22%

Test accuracy: 88.6%

Training time on a GPU: a little over an hour

Weight file size: 21.8 MB

How the accuracy evolved: plain network (about 65%) -> data augmentation (about 70%) -> stronger model with ResNet and SENet blocks (about 80%) -> adjusted model structure (86%) -> added the Inception module (88.6%)

Results on the training and validation sets:

347/351 [============================>.] - ETA: 0s - loss: 0.0827 - sparse_categorical_accuracy: 0.9711

348/351 [============================>.] - ETA: 0s - loss: 0.0828 - sparse_categorical_accuracy: 0.9710

349/351 [============================>.] - ETA: 0s - loss: 0.0827 - sparse_categorical_accuracy: 0.9710

350/351 [============================>.] - ETA: 0s - loss: 0.0826 - sparse_categorical_accuracy: 0.9711

351/351 [==============================] - 20s 57ms/step - loss: 0.0826 - sparse_categorical_accuracy: 0.9711 - val_loss: 0.3952 - val_sparse_categorical_accuracy: 0.9022

Results on the test set:

79/79 - 4s - loss: 0.4005 - sparse_categorical_accuracy: 0.8842

[0.40052215495630156, 0.8842] time 4.130274534225464

The complete code follows. Before running it, create the directory weights4_5 by hand; I did not bother to automate that in the script.
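If creating the directory by hand is inconvenient, it could be created from Python before training starts. The following is only a sketch: `ensure_weight_dir` is a hypothetical helper that is not part of the original script, and it relies on the standard-library call `os.makedirs(..., exist_ok=True)`.

```python
import os


def ensure_weight_dir(weight_file):
    """Create the parent directory of weight_file if it does not exist yet.

    Hypothetical helper (not in the original script). Returns the directory path.
    """
    weight_dir = os.path.dirname(weight_file)
    if weight_dir:  # an empty string means "current directory", nothing to create
        os.makedirs(weight_dir, exist_ok=True)
    return weight_dir
```

Calling `ensure_weight_dir('./weights4_5/model.h5')` once before training would make the manual step unnecessary; calling it again is harmless because of `exist_ok=True`.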

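To train on CIFAR-100, replace cifar10 with cifar100 in the load_data calls. Note that the final Dense layer in my_model is hard-coded to 10 units, so it also has to be changed to 100 for the 100 classes; nothing else needs to change.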
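One way to keep the dataset choice and the size of the output layer in sync is a small lookup table. This is a sketch under assumptions: `NUM_CLASSES` and `load_dataset` are hypothetical names that do not appear in the original script, and TensorFlow is imported lazily so the table can be used on its own.

```python
# Hypothetical lookup table: dataset name -> number of classes.
NUM_CLASSES = {'cifar10': 10, 'cifar100': 100}


def load_dataset(name):
    """Return (train, test, num_classes) for 'cifar10' or 'cifar100'."""
    num_classes = NUM_CLASSES[name]  # raises KeyError for unsupported names
    import tensorflow as tf  # lazy import: only needed when data is actually loaded
    module = getattr(tf.keras.datasets, name)  # tf.keras.datasets.cifar10 / .cifar100
    train, test = module.load_data()
    return train, test, num_classes
```

The returned `num_classes` could then be passed to the output layer, for example `layers.Dense(num_classes, activation='softmax')`, instead of the literal 10.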

import os
import time

import matplotlib.pyplot as plt
import tensorflow as tf
import tensorflow.keras as keras
import tensorflow.keras.layers as layers
import tensorflow.keras.preprocessing.image as image

# The author's own helper module (not shown here); only predict_module() uses it.
import image_augument.image_augment as image_augment

def senet_block(inputs, ratio):
    shape = inputs.shape
    channel_out = shape[-1]
    # print(shape)

    # Squeeze: global average pooling, (batch, H, W, C) -> (batch, C)
    squeeze = layers.GlobalAveragePooling2D()(inputs)
    # print(squeeze.shape)

    # Excitation: two fully connected layers (bottleneck of C / ratio units)
    shape_result = layers.Flatten()(squeeze)
    # print(shape_result.shape)
    shape_result = layers.Dense(int(channel_out / ratio), activation='relu')(shape_result)
    # shape_result = layers.BatchNormalization()(shape_result)
    shape_result = layers.Dense(channel_out, activation='sigmoid')(shape_result)
    # shape_result = layers.BatchNormalization()(shape_result)

    # Scale: multiply every channel of the input by its learned weight
    excitation_output = tf.reshape(shape_result, [-1, 1, 1, channel_out])
    # print(excitation_output.shape)
    h_output = excitation_output * inputs
    return h_output
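To make the three steps of senet_block (squeeze, excitation, scale) concrete, here is a tiny pure-Python walk-through on nested lists. It is only an illustration under simplifying assumptions: the weights are fixed, made-up numbers rather than learned ones, the layers have no bias (Keras `Dense` has one by default), and the names `squeeze`, `dense`, and `se_scale` are hypothetical.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def squeeze(feature_map):
    """Global average pooling: H x W x C nested lists -> list of C channel means."""
    height, width = len(feature_map), len(feature_map[0])
    channels = len(feature_map[0][0])
    return [
        sum(feature_map[i][j][c] for i in range(height) for j in range(width))
        / (height * width)
        for c in range(channels)
    ]


def dense(vector, weights, activation):
    """Bias-free fully connected layer; weights[i][j] links input i to output j."""
    n_out = len(weights[0])
    sums = [sum(v * row[j] for v, row in zip(vector, weights)) for j in range(n_out)]
    return [activation(s) for s in sums]


def se_scale(feature_map, w_reduce, w_expand):
    """Return (rescaled feature map, per-channel gates)."""
    hidden = dense(squeeze(feature_map), w_reduce, relu)  # bottleneck
    gates = dense(hidden, w_expand, sigmoid)              # one gate in (0, 1) per channel
    scaled = [[[v * g for v, g in zip(pixel, gates)] for pixel in row]
              for row in feature_map]
    return scaled, gates


# A 2 x 2 feature map with 2 channels and made-up weights.
fmap = [[[1.0, 0.0], [3.0, 0.0]],
        [[1.0, 4.0], [3.0, 4.0]]]
scaled, gates = se_scale(fmap, w_reduce=[[0.5], [0.5]], w_expand=[[0.0, -1.0]])
```

With these made-up weights the second channel receives a smaller gate than the first, so its values are suppressed more strongly; in the real block the gates depend on the learned Dense weights.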

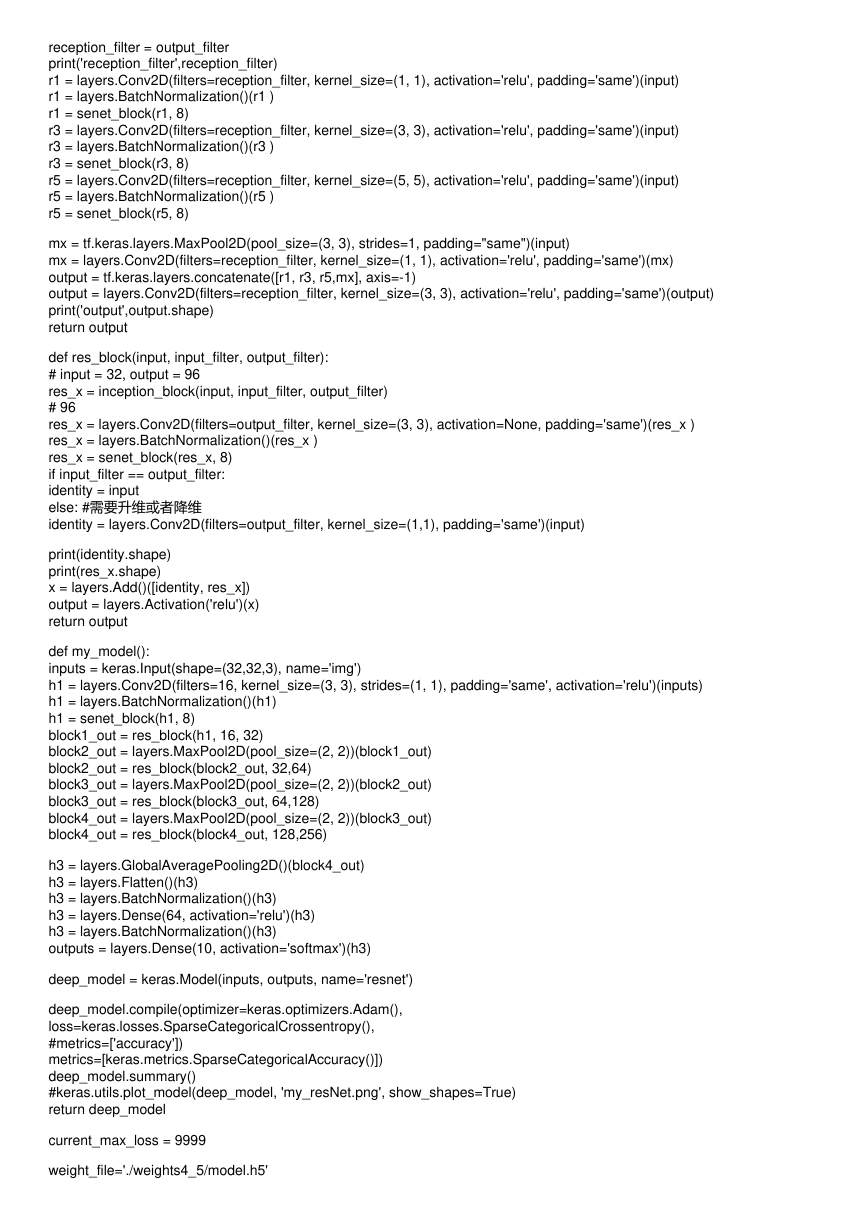

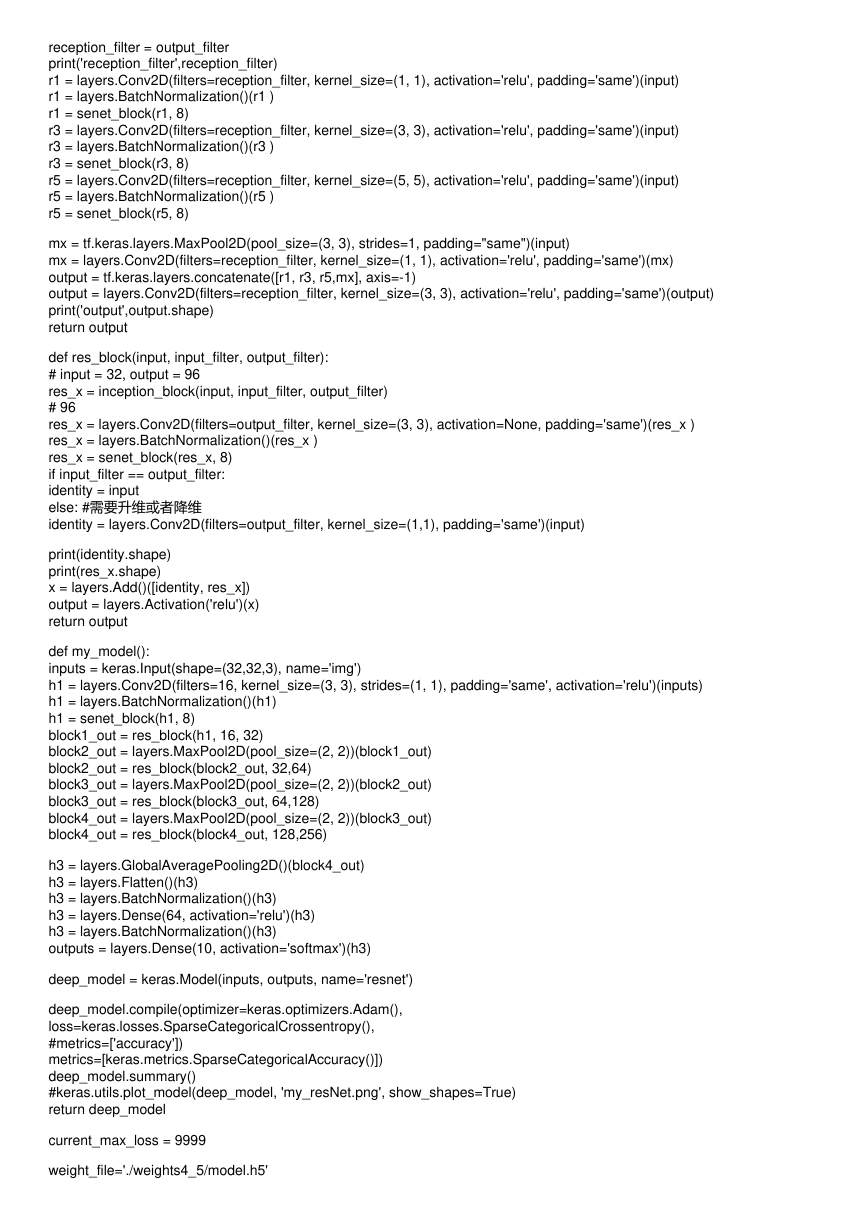

def inception_block(input, input_filter, output_filter):
    # Every branch produces output_filter channels.
    reception_filter = output_filter
    print('reception_filter', reception_filter)

    # 1x1 branch
    r1 = layers.Conv2D(filters=reception_filter, kernel_size=(1, 1), activation='relu', padding='same')(input)
    r1 = layers.BatchNormalization()(r1)
    r1 = senet_block(r1, 8)

    # 3x3 branch
    r3 = layers.Conv2D(filters=reception_filter, kernel_size=(3, 3), activation='relu', padding='same')(input)
    r3 = layers.BatchNormalization()(r3)
    r3 = senet_block(r3, 8)

    # 5x5 branch
    r5 = layers.Conv2D(filters=reception_filter, kernel_size=(5, 5), activation='relu', padding='same')(input)
    r5 = layers.BatchNormalization()(r5)
    r5 = senet_block(r5, 8)

    # Max-pooling branch followed by a 1x1 convolution
    mx = tf.keras.layers.MaxPool2D(pool_size=(3, 3), strides=1, padding="same")(input)
    mx = layers.Conv2D(filters=reception_filter, kernel_size=(1, 1), activation='relu', padding='same')(mx)

    # Concatenate the four branches along the channel axis, then merge with a 3x3 convolution
    output = tf.keras.layers.concatenate([r1, r3, r5, mx], axis=-1)
    output = layers.Conv2D(filters=reception_filter, kernel_size=(3, 3), activation='relu', padding='same')(output)
    print('output', output.shape)
    return output
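The cost of the different kernel sizes in inception_block can be estimated with the standard parameter count of a 2-D convolution, kernel_height × kernel_width × input_channels × filters, plus one bias per filter. The sketch below applies that formula to the five Conv2D layers of the block; `conv2d_params` and `inception_conv_params` are hypothetical helper names, and the BatchNormalization and SE layers are deliberately left out.

```python
def conv2d_params(kernel_size, in_channels, filters, use_bias=True):
    """Trainable parameters of a standard 2-D convolution with a square kernel."""
    weights = kernel_size * kernel_size * in_channels * filters
    return weights + (filters if use_bias else 0)


def inception_conv_params(in_channels, filters):
    """Conv2D parameter counts per branch of inception_block (BN and SE excluded)."""
    return {
        'branch_1x1': conv2d_params(1, in_channels, filters),
        'branch_3x3': conv2d_params(3, in_channels, filters),
        'branch_5x5': conv2d_params(5, in_channels, filters),
        'pool_1x1': conv2d_params(1, in_channels, filters),
        # The merge convolution sees the four concatenated branches: 4 * filters channels.
        'merge_3x3': conv2d_params(3, 4 * filters, filters),
    }


# First residual block of my_model: 16 input channels, 32 filters.
first_block = inception_conv_params(16, 32)
```

For the first block this shows that the 3×3 convolution merging the concatenated branches accounts for most of the convolution parameters, more than the 5×5 branch.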

def res_block(input, input_filter, output_filter):
    # Residual branch: Inception block, then 3x3 convolution + BatchNorm + SE
    res_x = inception_block(input, input_filter, output_filter)
    res_x = layers.Conv2D(filters=output_filter, kernel_size=(3, 3), activation=None, padding='same')(res_x)
    res_x = layers.BatchNormalization()(res_x)
    res_x = senet_block(res_x, 8)

    if input_filter == output_filter:
        identity = input
    else:
        # The channel count changes, so project the shortcut with a 1x1 convolution
        identity = layers.Conv2D(filters=output_filter, kernel_size=(1, 1), padding='same')(input)

    print(identity.shape)
    print(res_x.shape)

    x = layers.Add()([identity, res_x])
    output = layers.Activation('relu')(x)
    return output

def my_model():
    inputs = keras.Input(shape=(32, 32, 3), name='img')

    h1 = layers.Conv2D(filters=16, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu')(inputs)
    h1 = layers.BatchNormalization()(h1)
    h1 = senet_block(h1, 8)

    # Four residual stages; max pooling halves the resolution between stages
    block1_out = res_block(h1, 16, 32)

    block2_out = layers.MaxPool2D(pool_size=(2, 2))(block1_out)
    block2_out = res_block(block2_out, 32, 64)

    block3_out = layers.MaxPool2D(pool_size=(2, 2))(block2_out)
    block3_out = res_block(block3_out, 64, 128)

    block4_out = layers.MaxPool2D(pool_size=(2, 2))(block3_out)
    block4_out = res_block(block4_out, 128, 256)

    h3 = layers.GlobalAveragePooling2D()(block4_out)
    h3 = layers.Flatten()(h3)
    h3 = layers.BatchNormalization()(h3)
    h3 = layers.Dense(64, activation='relu')(h3)
    h3 = layers.BatchNormalization()(h3)
    outputs = layers.Dense(10, activation='softmax')(h3)  # 10 classes for CIFAR-10

    deep_model = keras.Model(inputs, outputs, name='resnet')
    deep_model.compile(optimizer=keras.optimizers.Adam(),
                       loss=keras.losses.SparseCategoricalCrossentropy(),
                       # metrics=['accuracy'])
                       metrics=[keras.metrics.SparseCategoricalAccuracy()])
    deep_model.summary()
    # keras.utils.plot_model(deep_model, 'my_resNet.png', show_shapes=True)
    return deep_model

current_max_loss = 9999

weight_file='./weights4_5/model.h5'

�

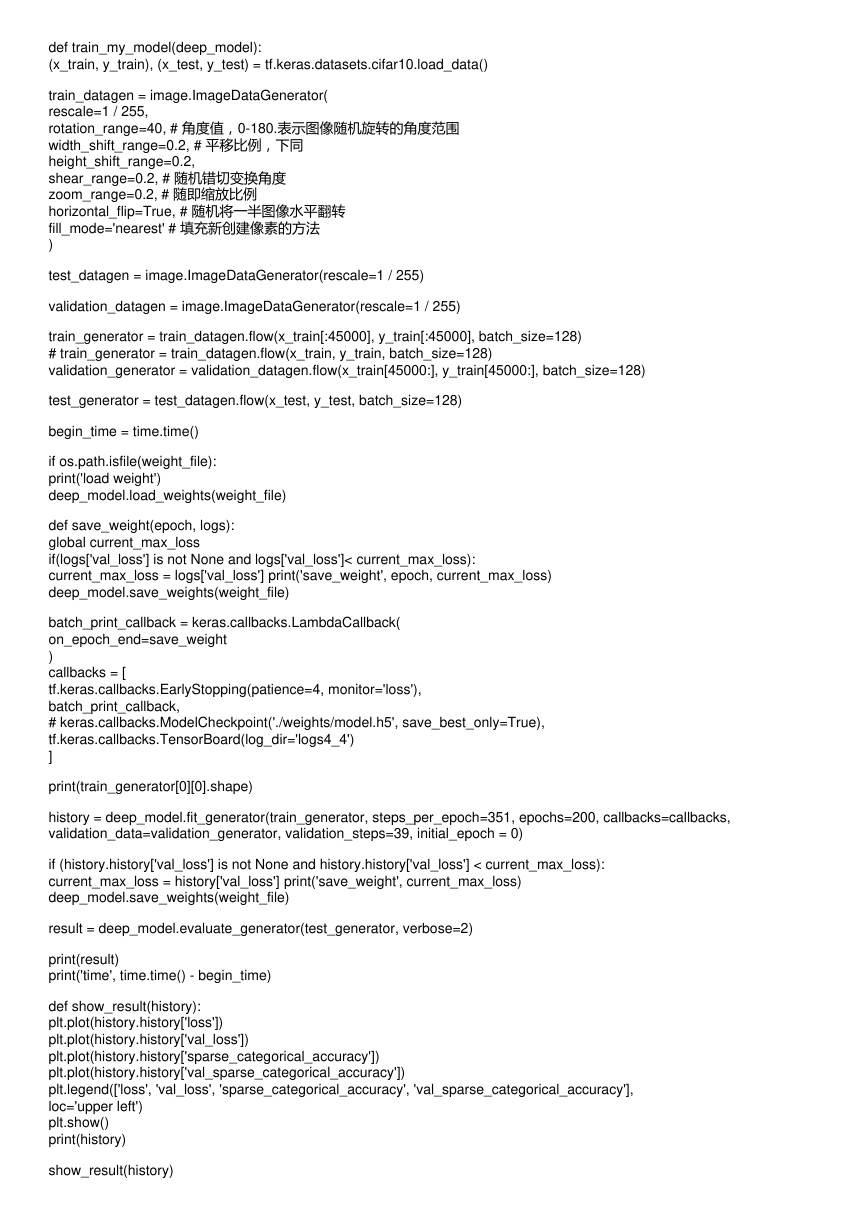

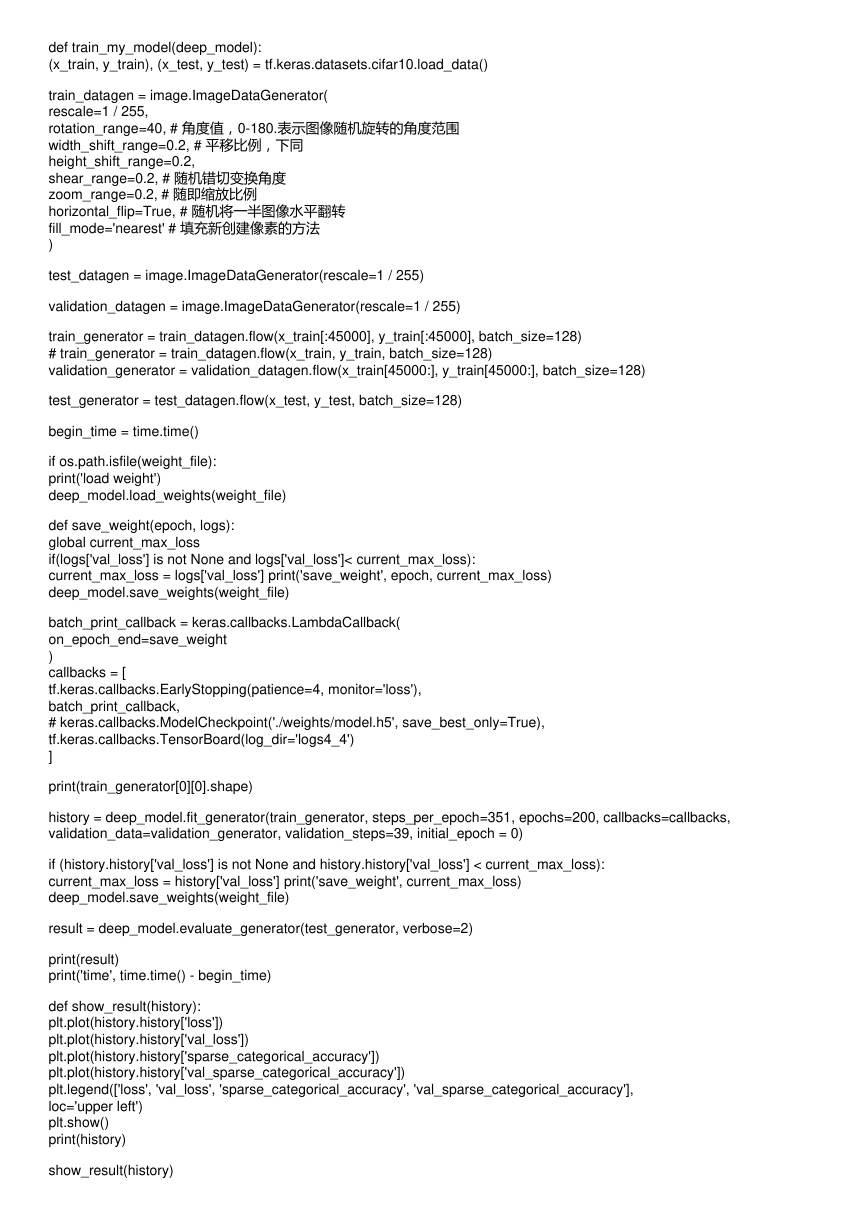

def train_my_model(deep_model):
    global current_max_loss

    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

    train_datagen = image.ImageDataGenerator(
        rescale=1 / 255,
        rotation_range=40,       # degrees (0-180): range for random rotations
        width_shift_range=0.2,   # fraction of the width for random shifts
        height_shift_range=0.2,  # fraction of the height for random shifts
        shear_range=0.2,         # random shear angle
        zoom_range=0.2,          # random zoom range
        horizontal_flip=True,    # randomly flip half of the images horizontally
        fill_mode='nearest'      # how newly created pixels are filled
    )
    test_datagen = image.ImageDataGenerator(rescale=1 / 255)
    validation_datagen = image.ImageDataGenerator(rescale=1 / 255)

    # First 45,000 training images for training, the remaining 5,000 for validation
    train_generator = train_datagen.flow(x_train[:45000], y_train[:45000], batch_size=128)
    # train_generator = train_datagen.flow(x_train, y_train, batch_size=128)
    validation_generator = validation_datagen.flow(x_train[45000:], y_train[45000:], batch_size=128)
    test_generator = test_datagen.flow(x_test, y_test, batch_size=128)

    begin_time = time.time()

    if os.path.isfile(weight_file):
        print('load weight')
        deep_model.load_weights(weight_file)

    def save_weight(epoch, logs):
        # Save the weights whenever the validation loss improves
        global current_max_loss
        if logs['val_loss'] is not None and logs['val_loss'] < current_max_loss:
            current_max_loss = logs['val_loss']
            print('save_weight', epoch, current_max_loss)
            deep_model.save_weights(weight_file)

    batch_print_callback = keras.callbacks.LambdaCallback(
        on_epoch_end=save_weight
    )

    callbacks = [
        tf.keras.callbacks.EarlyStopping(patience=4, monitor='loss'),
        batch_print_callback,
        # keras.callbacks.ModelCheckpoint('./weights/model.h5', save_best_only=True),
        tf.keras.callbacks.TensorBoard(log_dir='logs4_4')
    ]

    print(train_generator[0][0].shape)

    # fit_generator still works in TF 2.1 but is deprecated; Model.fit also accepts generators
    history = deep_model.fit_generator(train_generator, steps_per_epoch=351, epochs=200, callbacks=callbacks,
                                       validation_data=validation_generator, validation_steps=39, initial_epoch=0)

    # history.history['val_loss'] is a list with one value per epoch, so compare the last entry.
    # The callback above normally has saved the best weights already.
    val_losses = history.history.get('val_loss')
    if val_losses and val_losses[-1] < current_max_loss:
        current_max_loss = val_losses[-1]
        print('save_weight', current_max_loss)
        deep_model.save_weights(weight_file)

    result = deep_model.evaluate_generator(test_generator, verbose=2)
    print(result)
    print('time', time.time() - begin_time)

    def show_result(history):
        plt.plot(history.history['loss'])
        plt.plot(history.history['val_loss'])
        plt.plot(history.history['sparse_categorical_accuracy'])
        plt.plot(history.history['val_sparse_categorical_accuracy'])
        plt.legend(['loss', 'val_loss', 'sparse_categorical_accuracy', 'val_sparse_categorical_accuracy'],
                   loc='upper left')
        plt.show()
        print(history)

    show_result(history)
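The values steps_per_epoch=351 and validation_steps=39 used in train_my_model follow from the split sizes and the batch size of 128: they are the numbers of full batches. The sketch below reproduces that arithmetic; `batch_counts` is a hypothetical helper, not part of the original script.

```python
import math


def batch_counts(num_samples, batch_size):
    """Return (full batches, batches including a final partial one)."""
    full = num_samples // batch_size
    including_partial = math.ceil(num_samples / batch_size)
    return full, including_partial


train_steps = batch_counts(45000, 128)  # training split
val_steps = batch_counts(5000, 128)     # validation split
test_steps = batch_counts(10000, 128)   # CIFAR-10 test set
```

The script uses the first value of each pair for training and validation. The test evaluation is run without an explicit step count, and the log above shows 79 steps, which matches the second value for the 10,000 test images.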

�

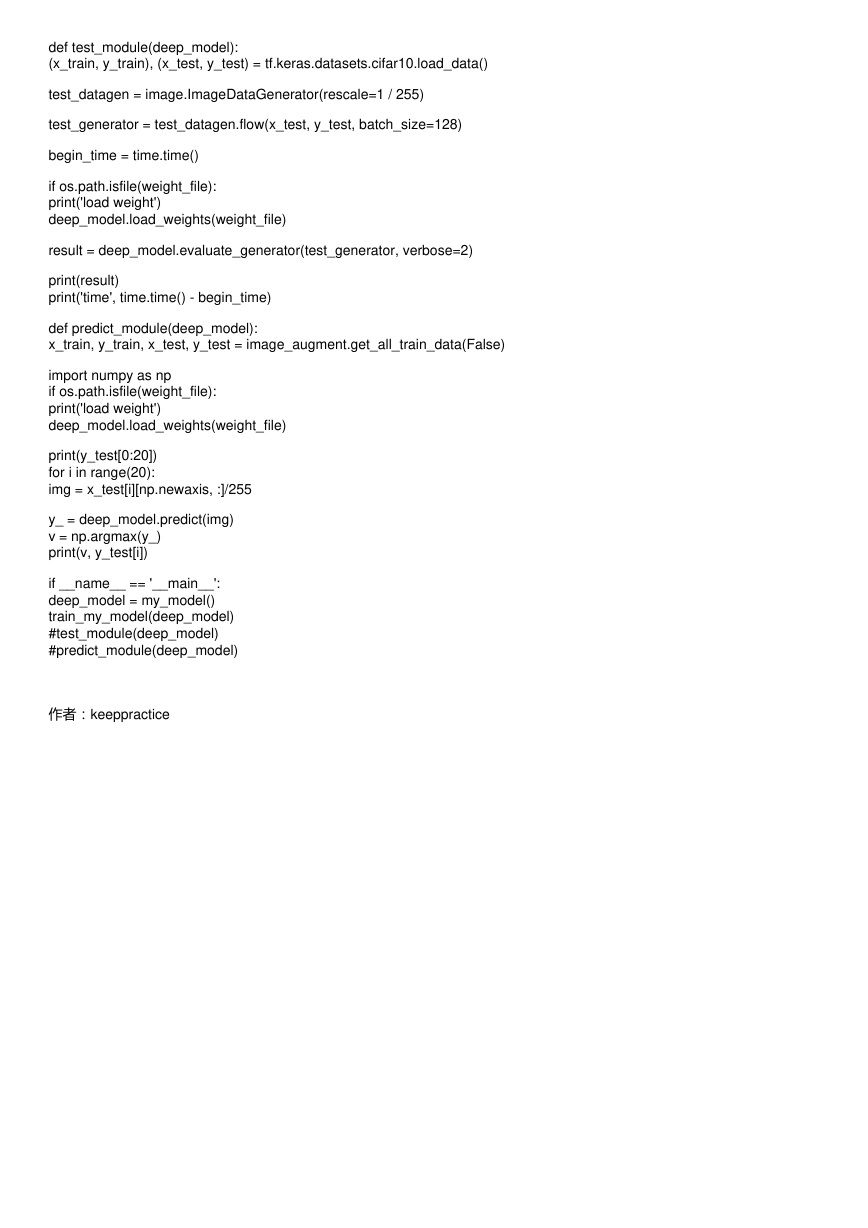

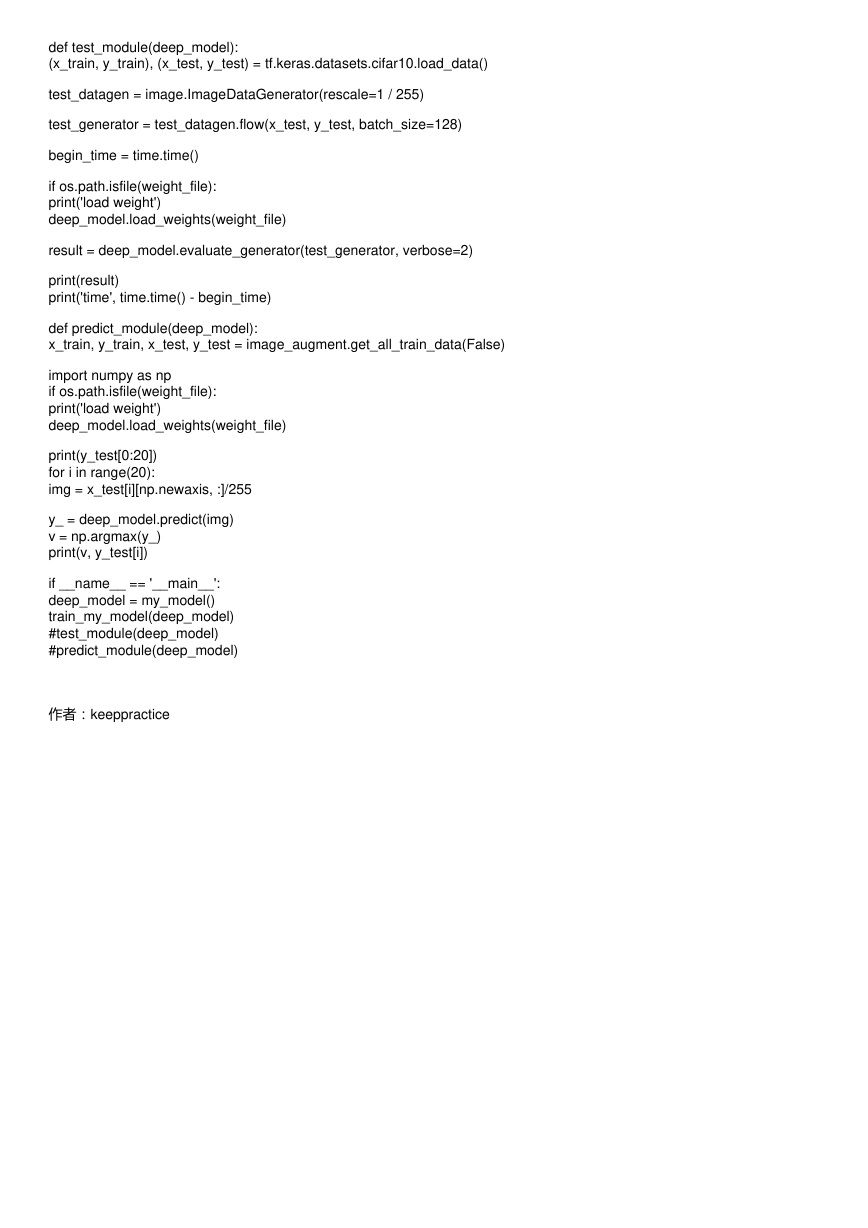

def test_module(deep_model):
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

    test_datagen = image.ImageDataGenerator(rescale=1 / 255)
    test_generator = test_datagen.flow(x_test, y_test, batch_size=128)

    begin_time = time.time()

    if os.path.isfile(weight_file):
        print('load weight')
        deep_model.load_weights(weight_file)

    result = deep_model.evaluate_generator(test_generator, verbose=2)
    print(result)
    print('time', time.time() - begin_time)

def predict_module(deep_model):
    # get_all_train_data comes from the author's own image_augment module (not shown here)
    x_train, y_train, x_test, y_test = image_augment.get_all_train_data(False)

    import numpy as np

    if os.path.isfile(weight_file):
        print('load weight')
        deep_model.load_weights(weight_file)

    print(y_test[0:20])

    for i in range(20):
        # Add a batch dimension and scale the pixels to [0, 1], as during training
        img = x_test[i][np.newaxis, :] / 255
        y_ = deep_model.predict(img)
        v = np.argmax(y_)
        print(v, y_test[i])
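predict_module prints only the predicted class index. For readable output, the index can be mapped to the standard CIFAR-10 class names, which are indexed 0 to 9 in the order below. This is a small sketch; `decode_prediction` is a hypothetical helper that is not part of the original script.

```python
# Standard CIFAR-10 class names, in label order 0..9.
CIFAR10_CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
                   'dog', 'frog', 'horse', 'ship', 'truck']


def decode_prediction(probabilities):
    """Return (class_index, class_name, probability) for one softmax output row."""
    index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return index, CIFAR10_CLASSES[index], probabilities[index]


# Made-up probabilities for illustration only.
example = [0.01] * 10
example[3] = 0.91
decoded = decode_prediction(example)
```

Inside predict_module this could be called as `decode_prediction(y_[0])`, because `deep_model.predict(img)` returns one row of class probabilities per input image.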

if __name__ == '__main__':
    deep_model = my_model()
    train_my_model(deep_model)
    # test_module(deep_model)
    # predict_module(deep_model)

Author: keeppractice
