Contents

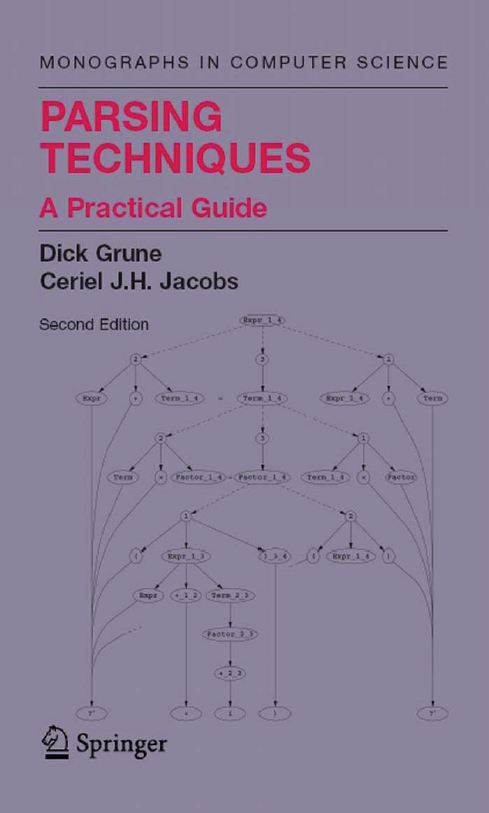

Preface to the Second Edition

Preface to the First Edition

1. Introduction

1.1 Parsing as a Craft

1.2 The Approach Used

1.3 Outline of the Contents

1.4 The Annotated Bibliography

2. Grammars as a Generating Device

2.1 Languages as Infinite Sets

2.1.1 Language

2.1.2 Grammars

2.1.3 Problems with Infinite Sets

2.1.4 Describing a Language through a Finite Recipe

2.2 Formal Grammars

2.2.1 The Formalism of Formal Grammars

2.2.2 Generating Sentences from a Formal Grammar

2.2.3 The Expressive Power of Formal Grammars

2.3 The Chomsky Hierarchy of Grammars and Languages

2.3.1 Type 1 Grammars

2.3.2 Type 2 Grammars

2.3.3 Type 3 Grammars

2.3.4 Type 4 Grammars

2.3.5 Conclusion

2.4 Actually Generating Sentences froma Grammar

2.4.1 The Phrase-Structure Case

2.4.2 The CS Case

2.4.3 The CF Case

2.5 To Shrink or Not To Shrink

2.6 Grammars that Produce the Empty Language

2.7 The Limitations of CF and FS Grammars

2.7.1 The uvwxy Theorem

2.7.2 The uvw Theorem

2.8 CF and FS Grammars as Transition Graphs

2.9 Hygiene in Context-Free Grammars

2.9.1 Undefined Non-Terminals

2.9.2 Unreachable Non-Terminals

2.9.3 Non-Productive Rules and Non-Terminals

2.9.4 Loops

2.9.5 Cleaning up a Context-Free Grammar

2.10 Set Properties of Context-Free and Regular Languages

2.11 The Semantic Connection

2.11.1 Attribute Grammars

2.11.2 Transduction Grammars

2.11.3 Augmented Transition Networks

2.12 A Metaphorical Comparison of Grammar Types

2.13 Conclusion

3. Introduction to Parsing

3.1 The Parse Tree

3.1.1 The Size of a Parse Tree

3.1.2 Various Kinds of Ambiguity

3.1.3 Linearization of the Parse Tree

3.2 Two Ways to Parse a Sentence

3.2.1 Top-Down Parsing

3.2.2 Bottom-Up Parsing

3.2.3 Applicability

3.3 Non-Deterministic Automata

3.3.1 Constructing the NDA

3.3.2 Constructing the Control Mechanism

3.4 Recognition and Parsing for Type 0 to Type 4 Grammars

3.4.1 Time Requirements

3.4.2 Type 0 and Type 1 Grammars

3.4.3 Type 2 Grammars

3.4.4 Type 3 Grammars

3.4.5 Type 4 Grammars

3.5 An Overview of Context-Free Parsing Methods

3.5.1 Directionality

3.5.2 Search Techniques

3.5.3 General Directional Methods

3.5.4 Linear Methods

3.5.5 Deterministic Top-Down and Bottom-Up Methods

3.5.6 Non-Canonical Methods

3.5.7 Generalized Linear Methods

3.5.8 Conclusion

3.6 The "Strength" of a Parsing Technique

3.7 Representations of Parse Trees

3.7.1 Parse Trees in the Producer-Consumer Model

3.7.2 Parse Trees in the Data Structure Model

3.7.3 Parse Forests

3.7.4 Parse-Forest Grammars

3.8 When are we done Parsing?

3.9 Transitive Closure

3.10 The Relation between Parsing and Boolean Matrix Multiplication

3.11 Conclusion

4. General Non-Directional Parsing

4.1 Unger's Parsing Method

4.1.1 Unger's Method without ε-Rules or Loops

4.1.2 Unger's Method with ε-Rules

4.1.3 Getting Parse-Forest Grammars from Unger Parsing

4.2 The CYK Parsing Method

4.2.1 CYK Recognition with General CF Grammars

4.2.2 CYK Recognition with a Grammar in Chomsky Normal Form

4.2.3 Transforming a CF Grammar into Chomsky Normal Form

4.2.4 The Example Revisited

4.2.5 CYK Parsing with Chomsky Normal Form

4.2.6 Undoing the Effect of the CNF Transformation

4.2.7 A Short Retrospective of CYK

4.2.8 Getting Parse-Forest Grammars from CYK Parsing

4.3 Tabular Parsing

4.3.1 Top-Down Tabular Parsing

4.3.2 Bottom-Up Tabular Parsing

4.4 Conclusion

5. Regular Grammars and Finite-State Automata

5.1 Applications of Regular Grammars

5.1.1 Regular Languages in CF Parsing

5.1.2 Systems with Finite Memory

5.1.3 Pattern Searching

5.1.4 SGML and XML Validation

5.2 Producing from a Regular Grammar

5.3 Parsing with a Regular Grammar

5.3.1 Replacing Sets by States

5.3.2 ε-Transitions and Non-Standard Notation

5.4 Manipulating Regular Grammars and Regular Expressions

5.4.1 Regular Grammars from Regular Expressions

5.4.2 Regular Expressions from Regular Grammars

5.5 Manipulating Regular Languages

5.6 Left-Regular Grammars

5.7 Minimizing Finite-State Automata

5.8 Top-Down Regular Expression Recognition

5.8.1 The Recognizer

5.8.2 Evaluation

5.9 Semantics in FS Systems

5.10 Fast Text Search Using Finite-State Automata

5.11 Conclusion

6. General Directional Top-Down Parsing

6.1 Imitating Leftmost Derivations

6.2 The Pushdown Automaton

6.3 Breadth-First Top-Down Parsing

6.3.1 An Example

6.3.2 A Counterexample: Left Recursion

6.4 Eliminating Left Recursion

6.5 Depth-First (Backtracking) Parsers

6.6 Recursive Descent

6.6.1 A Naive Approach

6.6.2 Exhaustive Backtracking Recursive Descent

6.6.3 Breadth-First Recursive Descent

6.7 Definite Clause Grammars

6.7.1 Prolog

6.7.2 The DCG Format

6.7.3 Getting Parse Tree Information

6.7.4 Running Definite Clause Grammar Programs

6.8 Cancellation Parsing

6.8.1 Cancellation Sets

6.8.2 The Transformation Scheme

6.8.3 Cancellation Parsing with ε-Rules

6.9 Conclusion

7. General Directional Bottom-Up Parsing

7.1 Parsing by Searching

7.1.1 Depth-First (Backtracking) Parsing

7.1.2 Breadth-First (On-Line) Parsing

7.1.3 A Combined Representation

7.1.4 A Slightly More Realistic Example

7.2 The Earley Parser

7.2.1 The Basic Earley Parser

7.2.2 The Relation between the Earley and CYK Algorithms

7.2.3 Handling ε-Rules

7.2.4 Exploiting Look-Ahead

7.2.5 Left and Right Recursion

7.3 Chart Parsing

7.3.1 Inference Rules

7.3.2 A Transitive Closure Algorithm

7.3.3 Completion

7.3.4 Bottom-Up (Actually Left-Corner)

7.3.5 The Agenda

7.3.6 Top-Down

7.3.7 Conclusion

7.4 Conclusion

8. Deterministic Top-Down Parsing

8.1 Replacing Search by Table Look-Up

8.2 LL(1) Parsing

8.2.1 LL(1) Parsing without ε-Rules

8.2.2 LL(1) Parsing with ε-Rules

8.2.3 LL(1) versus Strong-LL(1)

8.2.4 Full LL(1) Parsing

8.2.5 Solving LL(1) Conflicts

8.2.6 LL(1) and Recursive Descent

8.3 Increasing the Power of Deterministic LL Parsing

8.3.1 LL(k) Grammars

8.3.2 Linear-Approximate LL(k)

8.3.3 LL-Regular

8.4 Getting a Parse Tree Grammar from LL(1) Parsing

8.5 Extended LL(1) Grammars

8.6 Conclusion

9. Deterministic Bottom-Up Parsing

9.1 Simple Handle-Finding Techniques

9.2 Precedence Parsing

9.2.1 Parenthesis Generators

9.2.2 Constructing the Operator-Precedence Table

9.2.3 Precedence Functions

9.2.4 Further Precedence Methods

9.3 Bounded-Right-Context Parsing

9.3.1 Bounded-Context Techniques

9.3.2 Floyd Productions

9.4 LR Methods

9.5 LR(0)

9.5.1 The LR(0) Automaton

9.5.2 Using the LR(0) Automaton

9.5.3 LR(0) Conflicts

9.5.4 ε-LR(0) Parsing

9.5.5 Practical LR Parse Table Construction

9.6 LR(1)

9.6.1 LR(1) with ε-Rules

9.6.2 LR(k > 1) Parsing

9.6.3 Some Properties of LR(k) Parsing

9.7 LALR(1)

9.7.1 Constructing the LALR(1) Parsing Tables

9.7.2 Identifying LALR(1) Conflicts

9.8 SLR(1)

9.9 Conflict Resolvers

9.10 Further Developments of LR Methods

9.10.1 Elimination of Unit Rules

9.10.2 Reducing the Stack Activity

9.10.3 Regular Right Part Grammars

9.10.4 Incremental Parsing

9.10.5 Incremental Parser Generation

9.10.6 Recursive Ascent

9.10.7 Regular Expressions of LR Languages

9.11 Getting a Parse Tree Grammar from LR Parsing

9.12 Left and Right Contexts of Parsing Decisions

9.12.1 The Left Context of a State

9.12.2 The Right Context of an Item

9.13 Exploiting the Left and Right Contexts

9.13.1 Discriminating-Reverse (DR) Parsing

9.13.2 LR-Regular

9.13.3 LAR(m) Parsing

9.14 LR(k) as an Ambiguity Test

9.15 Conclusion

10. Non-Canonical Parsers

10.1 Top-Down Non-Canonical Parsing

10.1.1 Left-Corner Parsing

10.1.2 Deterministic Cancellation Parsing

10.1.3 Partitioned LL

10.1.4 Discussion

10.2 Bottom-Up Non-Canonical Parsing

10.2.1 Total Precedence

10.2.2 NSLR(1)

10.2.3 LR(k,∞)

10.2.4 Partitioned LR

10.3 General Non-Canonical Parsing

10.4 Conclusion

11. Generalized Deterministic Parsers

11.1 Generalized LR Parsing

11.1.1 The Basic GLR Parsing Algorithm

11.1.2 Necessary Optimizations

11.1.3 Hidden Left Recursion and Loops

11.1.4 Extensions and Improvements

11.2 Generalized LL Parsing

11.2.1 Simple Generalized LL Parsing

11.2.2 Generalized LL Parsing with Left-Recursion

11.2.3 Generalized LL Parsing with ε-Rules

11.2.4 Generalized Cancellation and LC Parsing

11.3 Conclusion

12. Substring Parsing

12.1 The Suffix Grammar

12.2 General (Non-Linear) Methods

12.2.1 A Non-Directional Method

12.2.2 A Directional Method

12.3 Linear-Time Methods for LL and LR Grammars

12.3.1 Linear-Time Suffix Parsing for LL(1) Grammars

12.3.2 Linear-Time Suffix Parsing for LR(1) Grammars

12.3.3 Tabular Methods

12.3.4 Discussion

12.4 Conclusion

13. Parsing as Intersection

13.1 The Intersection Algorithm

13.1.1 The Rule Sets I[sub(rules)], I[sub(rough)], and I

13.1.2 The Languages of [sub(rules)], I[sub(rough)], and I

13.1.3 An Example: Parsing Arithmetic Expressions

13.2 The Parsing of FSAs

13.2.1 Unknown Tokens

13.2.2 Substring Parsing by Intersection

13.2.3 Filtering

13.3 Time and Space Requirements

13.4 Reducing the Intermediate Size: Earley's Algorithm on FSAs

13.5 Error Handling Using Intersection Parsing

13.6 Conclusion

14. Parallel Parsing

14.1 The Reasons for Parallel Parsing

14.2 Multiple Serial Parsers

14.3 Process-Configuration Parsers

14.3.1 A Parallel Bottom-up GLR Parser

14.3.2 Some Other Process-Configuration Parsers

14.4 Connectionist Parsers

14.4.1 Boolean Circuits

14.4.2 A CYK Recognizer on a Boolean Circuit

14.4.3 Rytter's Algorithm

14.5 Conclusion

15. Non-Chomsky Grammars and Their Parsers

15.1 The Unsuitability of Context-Sensitive Grammars

15.1.1 Understanding Context-Sensitive Grammars

15.1.2 Parsing with Context-Sensitive Grammars

15.1.3 Expressing Semantics in Context-Sensitive Grammars

15.1.4 Error Handling in Context-Sensitive Grammars

15.1.5 Alternatives

15.2 Two-Level Grammars

15.2.1 VW Grammars

15.2.2 Expressing Semantics in a VW Grammar

15.2.3 Parsing with VW Grammars

15.2.4 Error Handling in VW Grammars

15.2.5 Infinite Symbol Sets

15.3 Attribute and Affix Grammars

15.3.1 Attribute Grammars

15.3.2 Affix Grammars

15.4 Tree-Adjoining Grammars

15.4.1 Cross-Dependencies

15.4.2 Parsing with TAGs

15.5 Coupled Grammars

15.5.1 Parsing with Coupled Grammars

15.6 Ordered Grammars

15.6.1 Rule Ordering by Control Grammar

15.6.2 Parsing with Rule-Ordered Grammars

15.6.3 Marked Ordered Grammars

15.6.4 Parsing with Marked Ordered Grammars

15.7 Recognition Systems

15.7.1 Properties of a Recognition System

15.7.2 Implementing a Recognition System

15.7.3 Parsing with Recognition Systems

15.7.4 Expressing Semantics in Recognition Systems

15.7.5 Error Handling in Recognition Systems

15.8 Boolean Grammars

15.8.1 Expressing Context Checks in Boolean Grammars

15.8.2 Parsing with Boolean Grammars

15.8.3 §-Calculus

15.9 Conclusion

16. Error Handling

16.1 Detection versus Recovery versus Correction

16.2 Parsing Techniques and Error Detection

16.2.1 Error Detection in Non-Directional Parsing Methods

16.2.2 Error Detection in Finite-State Automata

16.2.3 Error Detection in General Directional Top-Down Parsers

16.2.4 Error Detection in General Directional Bottom-Up Parsers

16.2.5 Error Detection in Deterministic Top-Down Parsers

16.2.6 Error Detection in Deterministic Bottom-Up Parsers

16.3 Recovering from Errors

16.4 Global Error Handling

16.5 Regional Error Handling

16.5.1 Backward/Forward Move Error Recovery

16.5.2 Error Recovery with Bounded-Context Grammars

16.6 Local Error Handling

16.6.1 Panic Mode

16.6.2 FOLLOW-Set Error Recovery

16.6.3 Acceptable-Sets Derived from Continuations

16.6.4 Insertion-Only Error Correction

16.6.5 Locally Least-Cost Error Recovery

16.7 Non-Correcting Error Recovery

16.7.1 Detection and Recovery

16.7.2 Locating the Error

16.8 Ad Hoc Methods

16.8.1 Error Productions

16.8.2 Empty Table Slots

16.8.3 Error Tokens

16.9 Conclusion

17. Practical Parser Writing and Usage

17.1 A Comparative Survey

17.1.1 Considerations

17.1.2 General Parsers

17.1.3 General Substring Parsers

17.1.4 Linear-Time Parsers

17.1.5 Linear-Time Substring Parsers

17.1.6 Obtaining and Using a Parser Generator

17.2 Parser Construction

17.2.1 Interpretive, Table-Based, and Compiled Parsers

17.2.2 Parsing Methods and Implementations

17.3 A Simple General Context-Free Parser

17.3.1 Principles of the Parser

17.3.2 The Program

17.3.3 Handling Left Recursion

17.3.4 Parsing in Polynomial Time

17.4 Programming Language Paradigms

17.4.1 Imperative and Object-Oriented Programming

17.4.2 Functional Programming

17.4.3 Logic Programming

17.5 Alternative Uses of Parsing

17.5.1 Data Compression

17.5.2 Machine Code Generation

17.5.3 Support of Logic Languages

17.6 Conclusion

18. Annotated Bibliography

18.1 Major Parsing Subjects

18.1.1 Unrestricted PS and CS Grammars

18.1.2 General Context-Free Parsing

18.1.3 LL Parsing

18.1.4 LR Parsing

18.1.5 Left-Corner Parsing

18.1.6 Precedence and Bounded-Right-Context Parsing

18.1.7 Finite-State Automata

18.1.8 General Books and Papers on Parsing

18.2 Advanced Parsing Subjects

18.2.1 Generalized Deterministic Parsing

18.2.2 Non-Canonical Parsing

18.2.3 Substring Parsing

18.2.4 Parsing as Intersection

18.2.5 Parallel Parsing Techniques

18.2.6 Non-Chomsky Systems

18.2.7 Error Handling

18.2.8 Incremental Parsing

18.3 Parsers and Applications

18.3.1 Parser Writing

18.3.2 Parser-Generating Systems

18.3.3 Applications

18.3.4 Parsing and Deduction

18.3.5 Parsing Issues in Natural Language Handling

18.4 Support Material

18.4.1 Formal Languages

18.4.2 Approximation Techniques

18.4.3 Transformations on Grammars

18.4.4 Miscellaneous Literature

A. Hints and Solutions to Selected Problems

Author Index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

Y

Z

Subject Index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

X

Y

Z

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc