Half-title

Title

Copyright

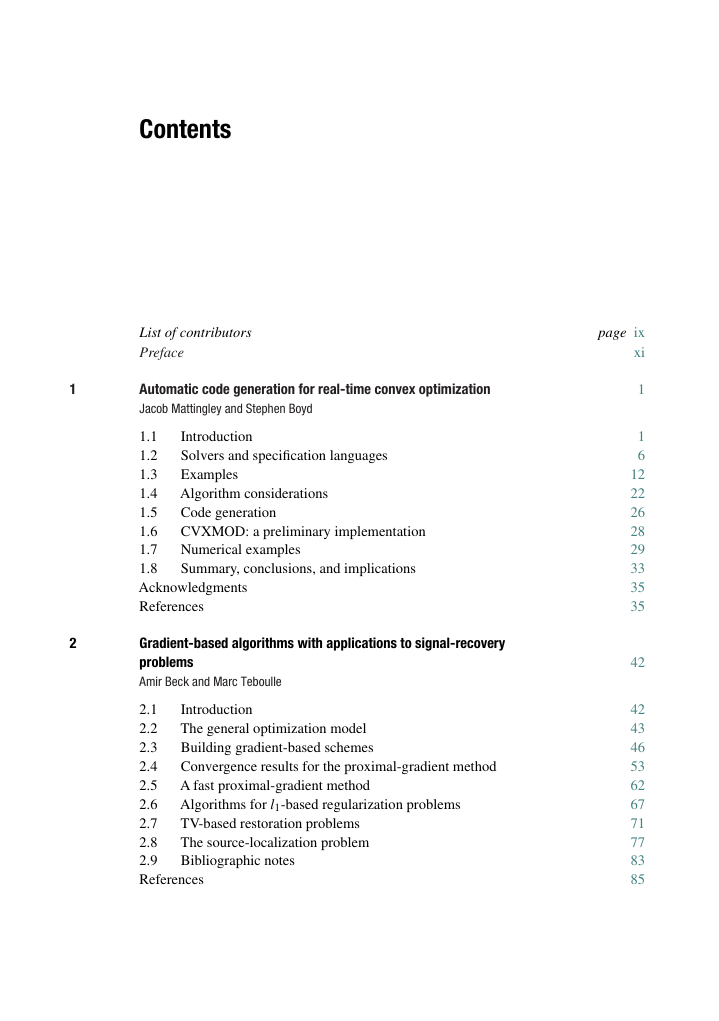

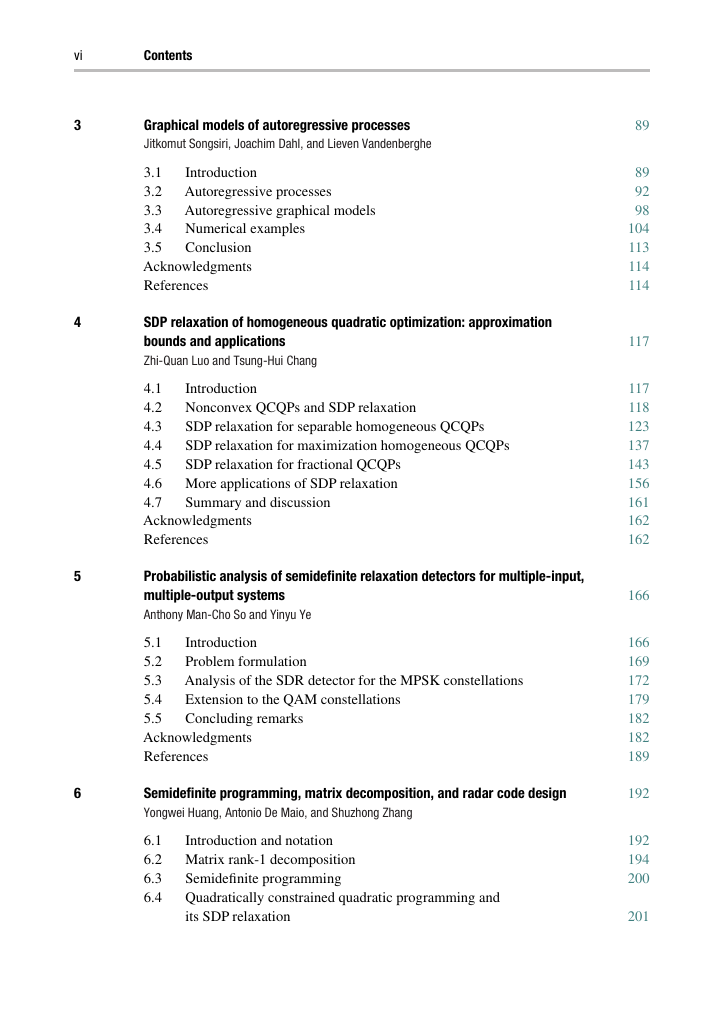

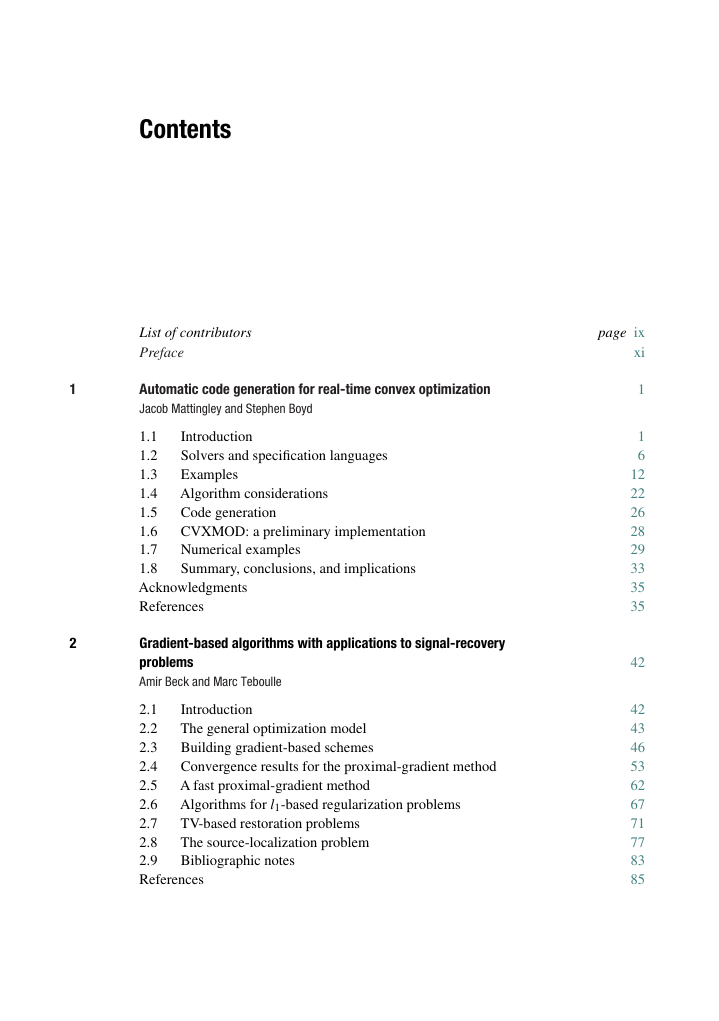

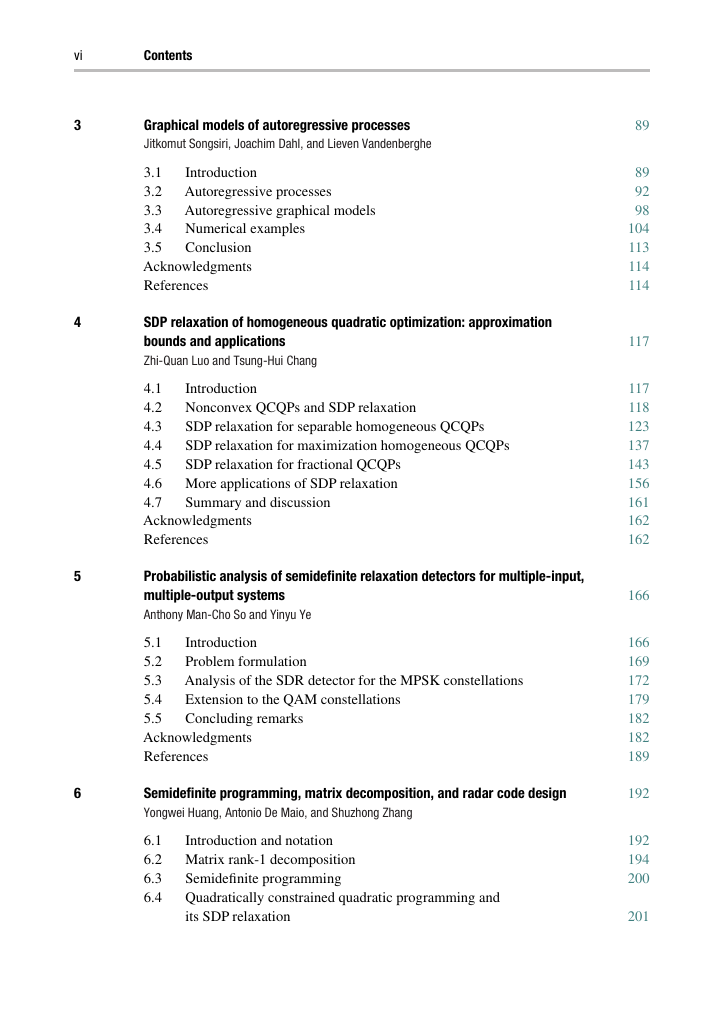

Contents

List of contributors

Preface

1 Automatic code generation for real-time convex optimization

1.1 Introduction

1.1.1 Advisory optimization

1.1.2 Embedded optimization

1.1.3 Convex optimization

1.1.4 Outline

1.1.5 Previous and related work

Control

Signal processing, communications, and networking

Code generation

1.2 Solvers and specification languages

1.2.1 Problem families and instances

1.2.2 Solvers

1.2.3 Specification languages

1.2.4 Parser-solvers

1.2.5 Code generators

1.2.6 Example from CVXMOD

1.3 Examples

Real-time adaptation

Real-time trajectory planning

Feedback control

Real-time sensing, estimation, or detection

Real-time system identification

1.3.1 Adaptive filtering and equalization

1.3.2 Optimal order execution

1.3.3 Sliding-window smoothing

1.3.4 Sliding-window estimation

1.3.5 Real-time input design

1.3.6 Model predictive control

1.3.7 Optimal network flow rates

1.3.8 Optimal power generation and distribution

1.3.9 Processor-speed scheduling

1.4 Algorithm considerations

1.4.1 Requirements

Stability and reliability

Graceful handling of infeasibility

Guaranteed-run time bounds

1.4.2 Exploitable features

Known (and often modest) accuracy requirements

Good initializations are often available

Variable ranges can be determined

1.4.3 Interior-point methods

1.4.4 Solving systems with KKT-like structure

1.5 Code generation

1.5.1 Custom KKT solver

1.5.2 Additional optimizations

1.6 CVXMOD: a preliminary implementation

1.6.1 Algorithm

1.7 Numerical examples

1.7.1 Model predictive control

1.7.2 Optimal order execution

1.7.3 Optimal network flow rates

1.7.4 Real-time actuator optimization

1.8 Summary, conclusions, and implications

Acknowledgments

References

2 Gradient-based algorithms with applications to signal-recovery problems

2.1 Introduction

2.2 The general optimization model

2.2.1 Generic problem formulation

2.2.2 Signal recovery via nonsmooth regularization

2.3 Building gradient-based schemes

2.3.1 The quadratic-approximation model for (M)

2.3.2 The fixed-point approach

2.3.3 Majorization–minimization technique

The idea

The MM method for the RLS problem

2.3.4 Fermat–Weber location problem

2.4 Convergence results for the proximal-gradient method

2.4.1 The prox-grad map

2.4.2 Fundamental inequalities

2.4.3 Convergence of the proximal-gradient method: the convex case

Proximal-gradient method with constant stepsize

Proximal-gradient method with backtracking

2.4.4 The nonconvex case

2.5 A fast proximal-gradient method

2.5.1 Idea of the method

2.5.2 A fast proximal-gradient method using two past iterations

Fast proximal gradient method with constant stepsize

Fast proximal-gradient method with backtracking

2.5.3 Monotone versus nonmonotone

Monotone fast proximal-gradient method

2.6 Algorithms for l1-based regularization problems

2.6.1 Problem formulation

2.6.2 ISTA: iterative shrinkage/thresholding algorithm

2.6.3 FISTA: fast ISTA

2.6.4 Numerical examples

2.7 TV-based restoration problems

2.7.1 Problem formulation

2.7.2 TV-based denoising

2.7.3 TV-based deblurring

2.7.4 Numerical example

2.8 The source-localization problem

2.8.1 Problem formulation

2.8.2 The simple fixed-point algorithm: definition and analysis

2.8.3 The SWLS algorithm

2.9 Bibliographic notes

References

3 Graphical models of autoregressive processes

3.1 Introduction

Notation

3.2 Autoregressive processes

3.2.1 Least-squares linear prediction

3.2.2 Maximum-likelihood estimation

3.2.3 Maximum-entropy estimation

3.3 Autoregressive graphical models

3.3.1 Conditional independence in time series

3.3.2 Maximum-likelihood and maximum-entropy estimation

3.3.3 Properties of block-Toeplitz sample covariances

Exactness of the relaxation

Stability of estimated models

3.3.4 Summary

3.4 Numerical examples

3.4.1 Randomly generated data

3.4.2 Model selection

3.4.3 Air pollution data

3.4.4 stock marketsInternational

3.4.5 European stock markets

3.5 Conclusion

Acknowledgments

References

4 SDP relaxation of homogeneous quadratic optimization: approximation bounds and applications

4.1 Introduction

4.2 Nonconvex QCQPs and SDP relaxation

4.2.1 SDP relaxation

4.2.2 Extracting a rank-1 solution: Gaussian sampling

4.2.3 Approximation ratio

4.3 SDP relaxation for separable homogeneous QCQPs

4.3.1 Separable homogeneous QCQPs

4.3.2 Downlink transmit beamforming

4.3.3 SDP relaxation for unicast transmit beamforming

4.3.4 SDP relaxation for far-field, multicast transmit beamforming

4.3.5 SDP relaxation for single-group, multicast transmit beamforming

Worst-case approximation bounds

Numerical results

4.4 SDP relaxation for maximization homogeneous QCQPs

4.4.1 Worst-case approximation bounds

4.4.2 Numerical results

4.5 SDP relaxation for fractional QCQPs

4.5.1 SDP relaxation for fractional QCQPs

Worst-case approximation bounds

4.5.2 SDP relaxation for generalized fractional QCQPs

Worst-case approximation bounds

Numerical results

4.6 More applications of SDP relaxation

4.6.1 SDP relaxation for the magnitude least-squares (MLS) problem

4.6.2 SDP relaxation for the magnitude-squared least-squares (MSLS) problem

4.7 Summary and discussion

Acknowledgments

References

5 Probabilistic analysis of semidefinite relaxation detectors for multiple-input, multiple-output systems

5.1 Introduction

5.2 Problem formulation

5.3 Analysis of the SDR detector for the MPSK constellations

5.3.1 The…

5.3.2 The M = 2 case

5.4 Extension to the QAM constellations

5.5 Concluding remarks

Acknowledgments

A5 Appendix to Chapter 5. Some probabilistic estimates

A5.1 Bounding the largest singular value of an arbitrary matrix

A5.2 The largest singular value of a complex Gaussian random matrix

A5.3 The largest singular value of a real Gaussian random matrix

A5.4 The squared-norm of a complex Gaussian random vector

References

6 Semidefinite programming, matrix decomposition, and radar code design

6.1 Introduction and notation

6.2 Matrix rank-1 decomposition

6.2.1 Rank-1 matrix decomposition schemes

6.2.2 Numerical performance

6.3 Semidefinite programming

6.4 Quadratically constrained quadratic programming and its SDP relaxation

6.5 Polynomially solvable QCQP problems

6.5.1 QCQP problem with two constraints

6.5.2 QCQP problem with three constraints

6.5.3 QCQP problem with homogeneous functions

6.6 The radar code-design problem

6.6.1 Background

6.6.2 System model

6.7 Performance measures for code design

6.7.1 Detection probability

6.7.2 Doppler frequency estimation accuracy

6.7.3 The similarity constraint

6.8 Optimal code design

6.9 Performance analysis

6.10 Conclusions

A6 Appendix to Chapter 6

A6.1 Proof of Proposition 6.1

A6.2 Feasibility of (QP), (EQPR), and (EQPRD)

A6.2.1 Feasibility of (QP)

A6.2.2 Feasibility of (EQPR)

A6.2.3 Feasibility of (EQPRD)

References

7 Convex analysis for non-negative blind source separation with application in imaging

7.1 Introduction

7.2 Problem statement

7.2.1 Assumptions

7.3 Review of some concepts in convex analysis

7.3.1 Affine hull

7.3.2 Convex hull

7.4 Non-negative blind source-separation criterion via CAMNS

7.4.1 Convex analysis of the problem, and the CAMNS criterion

7.4.2 An alternative form of the CAMNS criterion

7.5 Systematic linear-programming method for CAMNS

7.6 Alternating volume-maximization heuristics for CAMNS

7.7 Numerical results

7.7.1 Example of 3-source case: cell separation

7.7.2 Example of 4-source case: ghosting effect

7.7.3 Example of 5-source case: human face separation

7.7.4 Monte Carlo simulation: noisy environment

7.8 Summary and discussion

A7 Appendix to Chapter 7

A7.1 Proof of Lemma 7.2

A7.2 Proof of Proposition 7.1

A7.3 Proof of Lemma 7.5

A7.4 Proofof Lemma 7.6

A7.5 Proof of Lemma 7.7

A7.6 Proof of Theorem 7.3

Acknowledgments

References

8 Optimization techniques in modern sampling theory

8.1 Introduction

8.2 Notation and mathematical preliminaries

8.2.1 Notation

8.2.2 Projections

8.2.3 Frames

8.3 Sampling and reconstruction setup

8.3.1 Signal priors

Subspace priors

Smoothness priors

8.3.2 Sampling process

8.3.3 Reconstruction method

Unconstrained reconstruction

Constrained reconstruction

8.4 Optimization methods

8.5 Subspace priors

8.5.1 Unconstrained reconstruction

Least-squares recovery

Minimax recovery

8.5.2 Constrained reconstruction

Least-squares recovery

Minimax recovery

8.6 Smoothness priors

8.6.1 Unconstrained reconstruction

Least-squares approximation

Minimax recovery

8.6.2 Constrained reconstruction

Least-squares approximation

Minimax-regret recovery

8.7 Comparison of the various scenarios

8.8 Sampling with noise

8.8.1 Quadratic-optimization problems

8.8.2 Minimax recovery using SDP relaxation

8.9 Conclusions

Acknowledgments

References

9 Robust broadband adaptive beamforming using convex optimization

9.1 Introduction

9.2 Background

9.2.1 Linearly constrained minimum variance beamformer

9.2.2 Diagonal loading

9.3 Robust broadband beamformers

9.3.1 Robust beamformer with separate magnitude and phase constraints

9.3.2 Robust beamformer with combined magnitude and phase constraints

9.3.3 Robust beamformer with Chebychev constraints

9.3.4 Robust beamformer without frequency discretization

9.3.5 Summary of the proposed techniques

9.4 Simulations

9.5 Conclusions

Acknowledgments

References

10 Cooperative distributed multi-agent optimization

10.1 Introduction and motivation

10.2 Distributed-optimization methods using dual decomposition

10.2.1 Basic notation and terminology

10.2.2 Primal and dual problem

Duality gap and dual solutions

Dual-subgradient method

10.2.3 Distributed methods for utility-based network-resource allocation

10.2.4 Approximate primal solutions and rate analysis

Boundedness of dual iterates

Convergence-rate estimates

10.2.5 Numerical example

10.3 Distributed-optimization methods using consensus algorithms

10.3.1 Problem and algorithm

Problem

Algorithm

Representation using transition matrices

10.3.2 Information-exchange model

10.3.3 Convergence of transition matrices

10.3.4 Convergence analysis of the subgradient method

Disagreement estimate

Estimate for the auxiliary sequence

Performance bound for the algorithm

10.4 Extensions

10.4.1 Quantization effects on optimization

Performance bound for the quantized method

10.4.2 Consensus with local constraints

Convergence and rate of convergence results

10.5 Future work

10.5.1 Optimization with delays

10.5.2 Optimization with constraints

10.5.3 Nonconvex local-objective functions

10.6 Conclusions

10.7 Problems

References

11 Competitive optimization of cognitive radio MIMO systems via game theory

11.1 Introduction and motivation

11.1.1 Interference constraints: individual and conservative versus global and flexible

11.1.2 System design: a game-theoretical approach

11.1.3 Outline

11.1.4 Notation

11.2 Strategic non-cooperative games: basic solution concepts and algorithms

11.2.1 Existence and uniqueness of the NE

Existence of a Nash solution

Uniqueness of a Nash solution

11.2.2 Convergence to a fixed point

11.3 Opportunistic communications over unlicensed bands

11.3.1 Properties of the multiuser waterfilling mapping

11.3.2 MIMO waterfilling as a projector

11.3.3 Contraction properties of the multiuser MIMO waterfilling mapping

Intermediate definitions

Case of full row-rank (fat/square) channel matrices

Case of full column-rank (tall) channel matrices

Case of full-rank channel matrices

11.3.4 Existence and uniqueness of the Nash equilibrium

11.3.5 Distributed algorithms

11.4 Opportunistic communications under individual interference constraints

11.4.1 Game with null constraints

Nash equilibria: existence and uniqueness

Distributed algorithms

11.4.2 Game with null constraints via virtual noise shaping

Nash equilibria: existence and uniqueness

Distributed algorithms

11.4.3 Game with null and soft constraints

Nash equilibria: existence and uniqueness

Distributed algorithms

11.5 Opportunistic communications under global interference constraints

11.5.1 Equilibrium solutions: existence and uniqueness

11.5.2 Distributed algorithms

11.6 Conclusions

Acknowledgments

References

12 Nash equilibria: the variational approach

12.1 Introduction

12.2 The Nash-equilibrium problem

12.2.1 Review of multifunctions

12.2.2 Connection to variational inequalities

12.2.3 The Karush–Kuhn–Tucker conditions

12.2.4 Regularization and single-valued formulations

12.3 Existence theory

12.3.1 Special cases

12.3.2 A game with prices

12.3.3 Jointly convex constraints

12.3.4 The NCP approach

12.4 Uniqueness theory

12.4.1 A matrix-theoretic criterion

12.5 Sensitivity analysis

12.5.1 Local uniqueness

12.5.2 Stability of an equilibrium

12.6 Iterative algorithms

12.6.1 Non-expansiveness of the proximal responses

12.6.2 Descent methods for variational equilibria

12.7 A communication game

12.7.1 A model with QoS constraints

12.7.2 Exogenous prices

12.7.3 Endogenous prices

Acknowledgments

References

Afterword

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc