See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/13853244

Long Short-term Memory

Article in Neural Computation · December 1997

DOI: 10.1162/neco.1997.9.8.1735 · Source: PubMed

CITATIONS

3,842

2 authors, including:

READS

12,549

Sepp Hochreiter

Johannes Kepler University Linz

132 PUBLICATIONS 5,945 CITATIONS

SEE PROFILE

Some of the authors of this publication are also working on these related projects:

IBD Sharing between Africans, Neandertals, and Denisovans View project

Self-Normalizing Neural Networks View project

All content following this page was uploaded by Sepp Hochreiter on 03 April 2016.

The user has requested enhancement of the downloaded file.

�

LONGSHORT-TERMMEMORY

NeuralComputation (�):����{����,� �

JurgenSchmidhuber

SeppHochreiter

IDSIA

FakultatfurInformatik

CorsoElvezia��

TechnischeUniversitatMunchen

� ��Lugano,Switzerland

��� �Munchen,Germany

juergen@idsia.ch

hochreit@informatik.tu-muenchen.de

http://www.idsia.ch/~juergen

http://www�.informatik.tu-muenchen.de/~hochreit

Abstract

Learningtostoreinformationoverextendedtimeintervalsviarecurrentbackpropagation

takesaverylongtime,mostlyduetoinsucient,decayingerrorbackow.Webrieyreview

Hochreiter's� �analysisofthisproblem,thenaddressitbyintroducinganovel,ecient,

gradient-basedmethodcalled\LongShort-TermMemory"(LSTM).Truncatingthegradient

wherethisdoesnotdoharm,LSTMcanlearntobridgeminimaltimelagsinexcessof����

discretetimestepsbyenforcingconstanterrorowthrough\constanterrorcarrousels"within

specialunits.Multiplicativegateunitslearntoopenandcloseaccesstotheconstanterror

ow.LSTMislocalinspaceandtime;itscomputationalcomplexitypertimestepandweight

isO(�).Ourexperimentswitharticialdatainvolvelocal,distributed,real-valued,andnoisy

patternrepresentations.IncomparisonswithRTRL,BPTT,RecurrentCascade-Correlation,

Elmannets,andNeuralSequenceChunking,LSTMleadstomanymoresuccessfulruns,and

learnsmuchfaster.LSTMalsosolvescomplex,articiallongtimelagtasksthathavenever

beensolvedbypreviousrecurrentnetworkalgorithms.

INTRODUCTION

�

Recurrentnetworkscaninprincipleusetheirfeedbackconnectionstostorerepresentationsof

recentinputeventsinformofactivations(\short-termmemory",asopposedto\long-termmem-

ory"embodiedbyslowlychangingweights).Thisispotentiallysignicantformanyapplications,

includingspeechprocessing,non-Markoviancontrol,andmusiccomposition(e.g.,Mozer� �).

Themostwidelyusedalgorithmsforlearningwhattoputinshort-termmemory,however,take

toomuchtimeordonotworkwellatall,especiallywhenminimaltimelagsbetweeninputsand

correspondingteachersignalsarelong.Althoughtheoreticallyfascinating,existingmethodsdo

notprovideclearpracticaladvantagesover,say,backpropinfeedforwardnetswithlimitedtime

windows.Thispaperwillreviewananalysisoftheproblemandsuggestaremedy.

Theproblem.Withconventional\Back-PropagationThroughTime"(BPTT,e.g.,Williams

andZipser� �,Werbos� ��)or\Real-TimeRecurrentLearning"(RTRL,e.g.,Robinsonand

Fallside� ��),errorsignals\owingbackwardsintime"tendtoeither(�)blowupor(�)vanish:

thetemporalevolutionofthebackpropagatederrorexponentiallydependsonthesizeofthe

weights(Hochreiter� �).Case(�)mayleadtooscillatingweights,whileincase(�)learningto

bridgelongtimelagstakesaprohibitiveamountoftime,ordoesnotworkatall(seesection�).

Theremedy.Thispaperpresents\LongShort-TermMemory"(LSTM),anovelrecurrent

networkarchitectureinconjunctionwithanappropriategradient-basedlearningalgorithm.LSTM

isdesignedtoovercometheseerrorback-owproblems.Itcanlearntobridgetimeintervalsin

excessof����stepsevenincaseofnoisy,incompressibleinputsequences,withoutlossofshort

timelagcapabilities.Thisisachievedbyanecient,gradient-basedalgorithmforanarchitecture

�

�

enforcingconstant(thusneitherexplodingnorvanishing)errorowthroughinternalstatesof

specialunits(providedthegradientcomputationistruncatedatcertainarchitecture-specicpoints

|thisdoesnotaectlong-termerrorowthough).

Outlineofpaper.Section�willbrieyreviewpreviouswork.Section�beginswithanoutline

ofthedetailedanalysisofvanishingerrorsduetoHochreiter(� �).Itwillthenintroduceanaive

approachtoconstanterrorbackpropfordidacticpurposes,andhighlightitsproblemsconcerning

informationstorageandretrieval.TheseproblemswillleadtotheLSTMarchitectureasdescribed

inSection�.Section�willpresentnumerousexperimentsandcomparisonswithcompeting

methods.LSTMoutperformsthem,andalsolearnstosolvecomplex,articialtasksnoother

recurrentnetalgorithmhassolved.Section�willdiscussLSTM'slimitationsandadvantages.The

appendixcontainsadetaileddescriptionofthealgorithm(A.�),andexpliciterrorowformulae

(A.�).� PREVIOUSWORK

Thissectionwillfocusonrecurrentnetswithtime-varyinginputs(asopposedtonetswithsta-

tionaryinputsandxpoint-basedgradientcalculations,e.g.,Almeida� ��,Pineda� ��).

Gradient-descentvariants.TheapproachesofElman(� ��),Fahlman(� �),Williams

(� � ),Schmidhuber(� �a),Pearlmutter(� � ),andmanyoftherelatedalgorithmsinPearl-

mutter'scomprehensiveoverview(� �)suerfromthesameproblemsasBPTTandRTRL(see

Sections�and�).

Time-delays.OthermethodsthatseempracticalforshorttimelagsonlyareTime-Delay

NeuralNetworks(Langetal.� �)andPlate'smethod(Plate� �),whichupdatesunitactiva-

tionsbasedonaweightedsumofoldactivations(seealsodeVriesandPrincipe� �).Linetal.

(� �)proposevariantsoftime-delaynetworkscalledNARXnetworks.

Timeconstants.Todealwithlongtimelags,Mozer(� �)usestimeconstantsinuencing

changesofunitactivations(deVriesandPrincipe'sabove-mentionedapproach(� �)mayinfact

beviewedasamixtureofTDNNandtimeconstants).Forlongtimelags,however,thetime

constantsneedexternalnetuning(Mozer� �).Sunetal.'salternativeapproach(� �)updates

theactivationofarecurrentunitbyaddingtheoldactivationandthe(scaled)currentnetinput.

Thenetinput,however,tendstoperturbthestoredinformation,whichmakeslong-termstorage

impractical.

Ring'sapproach.Ring(� �)alsoproposedamethodforbridginglongtimelags.Whenever

aunitinhisnetworkreceivesconictingerrorsignals,headdsahigherorderunitinuencing

appropriateconnections.Althoughhisapproachcansometimesbeextremelyfast,tobridgea

timelaginvolving���stepsmayrequiretheadditionof���units.Also,Ring'snetdoesnot

generalizetounseenlagdurations.

Bengioetal.'sapproaches.Bengioetal.(� �)investigatemethodssuchassimulated

annealing,multi-gridrandomsearch,time-weightedpseudo-Newtonoptimization,anddiscrete

errorpropagation.Their\latch"and\�-sequence"problemsareverysimilartoproblem�awith

minimaltimelag���(seeExperiment�).BengioandFrasconi(� �)alsoproposeanEMapproach

forpropagatingtargets.Withnso-called\statenetworks",atagiventime,theirsystemcanbe

inoneofonlyndierentstates.SeealsobeginningofSection�.Buttosolvecontinuousproblems

suchasthe\addingproblem"(Section�.�),theirsystemwouldrequireanunacceptablenumber

ofstates(i.e.,statenetworks).

Kalmanlters.PuskoriusandFeldkamp(� �)useKalmanltertechniquestoimprove

recurrentnetperformance.Sincetheyuse\aderivativediscountfactorimposedtodecayexpo-

nentiallytheeectsofpastdynamicderivatives,"thereisnoreasontobelievethattheirKalman

FilterTrainedRecurrentNetworkswillbeusefulforverylongminimaltimelags.

Secondordernets.WewillseethatLSTMusesmultiplicativeunits(MUs)toprotecterror

owfromunwantedperturbations.ItisnottherstrecurrentnetmethodusingMUsthough.

Forinstance,WatrousandKuhn(� �)useMUsinsecondordernets.SomedierencestoLSTM

are:(�)WatrousandKuhn'sarchitecturedoesnotenforceconstanterrorowandisnotdesigned

�

�

tosolvelongtimelagproblems.(�)Ithasfullyconnectedsecond-ordersigma-piunits,whilethe

LSTMarchitecture'sMUsareusedonlytogateaccesstoconstanterrorow.(�)Watrousand

Kuhn'salgorithmcostsO(W�)operationspertimestep,oursonlyO(W),whereWisthenumber

ofweights.SeealsoMillerandGiles(� �)foradditionalworkonMUs.

Simpleweightguessing.Toavoidlongtimelagproblemsofgradient-basedapproacheswe

maysimplyrandomlyinitializeallnetworkweightsuntiltheresultingnethappenstoclassifyall

trainingsequencescorrectly.Infact,recentlywediscovered(SchmidhuberandHochreiter� �,

HochreiterandSchmidhuber� �,� �)thatsimpleweightguessingsolvesmanyoftheproblems

in(Bengio� �,BengioandFrasconi� �,MillerandGiles� �,Linetal.� �)fasterthan

thealgorithmsproposedtherein.Thisdoesnotmeanthatweightguessingisagoodalgorithm.

Itjustmeansthattheproblemsareverysimple.Morerealistictasksrequireeithermanyfree

parameters(e.g.,inputweights)orhighweightprecision(e.g.,forcontinuous-valuedparameters),

suchthatguessingbecomescompletelyinfeasible.

Adaptivesequencechunkers.Schmidhuber'shierarchicalchunkersystems(� �b,� �)

dohaveacapabilitytobridgearbitrarytimelags,butonlyifthereislocalpredictabilityacrossthe

subsequencescausingthetimelags(seealsoMozer� �).Forinstance,inhispostdoctoralthesis

(� �),Schmidhuberuseshierarchicalrecurrentnetstorapidlysolvecertaingrammarlearning

tasksinvolvingminimaltimelagsinexcessof����steps.Theperformanceofchunkersystems,

however,deterioratesasthenoiselevelincreasesandtheinputsequencesbecomelesscompressible.

LSTMdoesnotsuerfromthisproblem.

� CONSTANTERRORBACKPROP

�.� EXPONENTIALLYDECAYINGERROR

ConventionalBPTT(e.g.WilliamsandZipser� �).Outputunitk'stargetattimetis

denotedbydk(t).Usingmeansquarederror,k'serrorsignalis

#k(t)=f�k(netk(t))(dk(t)yk(t));

where

yi(t)=fi(neti(t))

istheactivationofanon-inputunitiwithdierentiableactivationfunctionfi,

neti(t)=Xjwijyj(t�)

isuniti'scurrentnetinput,andwijistheweightontheconnectionfromunitjtoi.Some

non-outputunitj'sbackpropagatederrorsignalis

#j(t)=f�j(netj(t))Xiwij#i(t+�):

Thecorrespondingcontributiontowjl'stotalweightupdateis#j(t)yl(t�),whereisthe

learningrate,andlstandsforanarbitraryunitconnectedtounitj.

OutlineofHochreiter'sanalysis(� �,page� -��).Supposewehaveafullyconnected

netwhosenon-inputunitindicesrangefrom�ton.Letusfocusonlocalerrorowfromunitu

tounitv(laterwewillseethattheanalysisimmediatelyextendstoglobalerrorow).Theerror

occurringatanarbitraryunituattimesteptispropagated\backintotime"forqtimesteps,to

anarbitraryunitv.Thiswillscaletheerrorbythefollowingfactor:

@#u(t) =(

f�v(netv(t�))wuv

@#v(tq)

f�v(netv(tq))Pnl=�@#l(tq+�)

(�)

@#u(t)wlv

�

q=�

q>�:

�

Withlq=vandl�=u,weobtain:

qYm=�f�lm(netlm(tm))wlmlm�

@#u(t) =nXl�=�:::

nXlq�=�

@#v(tq)

(�)

(proofbyinduction).Thesumofthenq�termsQqm=�f�lm(netlm(tm))wlmlm�determinesthe

totalerrorbackow(notethatsincethesummationtermsmayhavedierentsigns,increasing

thenumberofunitsndoesnotnecessarilyincreaseerrorow).

Intuitiveexplanationofequation(�).If

jf�lm(netlm(tm))wlmlm�j>�:�

forallm(ascanhappen,e.g.,withlinearflm)thenthelargestproductincreasesexponentially

withq.Thatis,theerrorblowsup,andconictingerrorsignalsarrivingatunitvcanleadto

oscillatingweightsandunstablelearning(forerrorblow-upsorbifurcationsseealsoPineda� ��,

BaldiandPineda� �,Doya� �).Ontheotherhand,if

jf�lm(netlm(tm))wlmlm�j<�:�

forallm,thenthelargestproductdecreasesexponentiallywithq.Thatis,theerrorvanishes,and

nothingcanbelearnedinacceptabletime.

Ifflmisthelogisticsigmoidfunction,thenthemaximalvalueoff�lmis�.��.Ifylm�isconstant

andnotequaltozero,thenjf�lm(netlm)wlmlm�jtakesonmaximalvalueswhere

wlmlm�= �ylm�coth(��netlm);

goestozeroforjwlmlm�j!�,andislessthan�:�forjwlmlm�j<�:�(e.g.,iftheabsolutemax-

imalweightvaluewmaxissmallerthan�.�).Hencewithconventionallogisticsigmoidactivation

functions,theerrorowtendstovanishaslongastheweightshaveabsolutevaluesbelow�.�,

especiallyinthebeginningofthetrainingphase.Ingeneraltheuseoflargerinitialweightswill

nothelpthough|asseenabove,forjwlmlm�j!�therelevantderivativegoestozero\faster"

thantheabsoluteweightcangrow(also,someweightswillhavetochangetheirsignsbycrossing

zero).Likewise,increasingthelearningratedoesnothelpeither|itwillnotchangetheratioof

long-rangeerrorowandshort-rangeerrorow.BPTTistoosensitivetorecentdistractions.(A

verysimilar,morerecentanalysiswaspresentedbyBengioetal.� �).

Globalerrorow.Thelocalerrorowanalysisaboveimmediatelyshowsthatglobalerror

owvanishes,too.Toseethis,computeXu:uoutputunit@#v(tq)

:

@#u(t)

Weakupperboundforscalingfactor.Thefollowing,slightlyextendedvanishingerror

analysisalsotakesn,thenumberofunits,intoaccount.Forq>�,formula(�)canberewritten

as

(WuT)TF�(t�)q�Ym=�(WF�(tm))Wvf�v(netv(tq));

wheretheweightmatrixWisdenedby[W]ij:=wij,v'soutgoingweightvectorWvisdenedby

[Wv]i:=[W]iv=wiv,u'sincomingweightvectorWuTisdenedby[WuT]i:=[W]ui=wui,andfor

m=�;:::;q,F�(tm)isthediagonalmatrixofrstorderderivativesdenedas:[F�(tm)]ij:=�

ifi�=j,and[F�(tm)]ij:=f�i(neti(tm))otherwise.HereTisthetranspositionoperator,

[A]ijistheelementinthei-thcolumnandj-throwofmatrixA,and[x]iisthei-thcomponent

ofvectorx.

�

�

Usingamatrixnormk:kAcompatiblewithvectornormk:kx,wedene

f�max:=maxm=�;:::;qfkF�(tm)kAg:

Formaxi=�;:::;nfjxijgkxkxwegetjxTyjnkxkxkykx:Since

jf�v(netv(tq))jkF�(tq)kAf�max;

weobtainthefollowinginequality:

j@#v(tq)

jn(f�max)qkWvkxkWuTkxkWkq�A n(f�maxkWkA)q:

@#u(t)

ThisinequalityresultsfromkWvkx=kWevkxkWkAkevkxkWkA

and

kWuTkx=keuWkxkWkAkeukxkWkA;

whereekistheunitvectorwhosecomponentsare�exceptforthek-thcomponent,whichis�.

Notethatthisisaweak,extremecaseupperbound|itwillbereachedonlyifallkF�(tm)kA

takeonmaximalvalues,andifthecontributionsofallpathsacrosswhicherrorowsbackfrom

unitutounitvhavethesamesign.LargekWkA,however,typicallyresultinsmallvaluesof

kF�(tm)kA,asconrmedbyexperiments(see,e.g.,Hochreiter� �).

Forexample,withnorms

kWkA:=maxrXsjwrsj

and

kxkx:=maxrjxrj;

wehavef�max=�:��forthelogisticsigmoid.Weobservethatif

jwijjwmax<�:�n�i;j;

thenkWkAnwmax<�:�willresultinexponentialdecay|bysetting:=nwmax

�:�<�:�,

weobtain

j@#v(tq)

jn()q:

@#u(t)

WerefertoHochreiter's� �thesisforadditionalresults.

�.� CONSTANTERRORFLOW:NAIVEAPPROACH

Asingleunit.Toavoidvanishingerrorsignals,howcanweachieveconstanterrorowthrough

asingleunitjwithasingleconnectiontoitself?Accordingtotherulesabove,attimet,j'slocal

errorbackowis#j(t)=f�j(netj(t))#j(t+�)wjj.Toenforceconstanterrorowthroughj,we

require

f�j(netj(t))wjj=�:�:

NotethesimilaritytoMozer'sxedtimeconstantsystem(� �)|atimeconstantof�:�is

appropriateforpotentiallyinnitetimelags�.

Theconstanterrorcarrousel.

Integratingthedierentialequationabove,weobtain

fj(netj(t))=netj(t)wjj

forarbitrarynetj(t).Thismeans:fjhastobelinear,andunitj'sacti-

vationhastoremainconstant:

yj(t+�)=fj(netj(t+�))=fj(wjjyj(t))=yj(t):

�Wedonotusetheexpression\timeconstant"inthedierentialsense,as,e.g.,Pearlmutter(� �).

�

�

Intheexperiments,thiswillbeensuredbyusingtheidentityfunctionfj:fj(x)=x;�x,andby

settingwjj=�:�.Werefertothisastheconstanterrorcarrousel(CEC).CECwillbeLSTM's

centralfeature(seeSection�).

Ofcourseunitjwillnotonlybeconnectedtoitselfbutalsotootherunits.Thisinvokestwo

obvious,relatedproblems(alsoinherentinallothergradient-basedapproaches):

�.Inputweightconict:forsimplicity,letusfocusonasingleadditionalinputweightwji.

Assumethatthetotalerrorcanbereducedbyswitchingonunitjinresponsetoacertaininput,

andkeepingitactiveforalongtime(untilithelpstocomputeadesiredoutput).Providediisnon-

zero,sincethesameincomingweighthastobeusedforbothstoringcertaininputsandignoring

others,wjiwilloftenreceiveconictingweightupdatesignalsduringthistime(recallthatjis

linear):thesesignalswillattempttomakewjiparticipatein(�)storingtheinput(byswitching

onj)and(�)protectingtheinput(bypreventingjfrombeingswitchedobyirrelevantlater

inputs).Thisconictmakeslearningdicult,andcallsforamorecontext-sensitivemechanism

forcontrolling\writeoperations"throughinputweights.

�.Outputweightconict:assumejisswitchedonandcurrentlystoressomeprevious

input.Forsimplicity,letusfocusonasingleadditionaloutgoingweightwkj.Thesamewkjhas

tobeusedforbothretrievingj'scontentatcertaintimesandpreventingjfromdisturbingk

atothertimes.Aslongasunitjisnon-zero,wkjwillattractconictingweightupdatesignals

generatedduringsequenceprocessing:thesesignalswillattempttomakewkjparticipatein(�)

accessingtheinformationstoredinjand|atdierenttimes|(�)protectingunitkfrombeing

perturbedbyj.Forinstance,withmanytaskstherearecertain\shorttimelagerrors"thatcanbe

reducedinearlytrainingstages.However,atlatertrainingstagesjmaysuddenlystarttocause

avoidableerrorsinsituationsthatalreadyseemedundercontrolbyattemptingtoparticipatein

reducingmoredicult\longtimelagerrors".Again,thisconictmakeslearningdicult,and

callsforamorecontext-sensitivemechanismforcontrolling\readoperations"throughoutput

weights.Ofcourse,inputandoutputweightconictsarenotspecicforlongtimelags,butoccurfor

shorttimelagsaswell.Theireects,however,becomeparticularlypronouncedinthelongtime

lagcase:asthetimelagincreases,(�)storedinformationmustbeprotectedagainstperturbation

forlongerandlongerperiods,and|especiallyinadvancedstagesoflearning|(�)moreand

morealreadycorrectoutputsalsorequireprotectionagainstperturbation.

Duetotheproblemsabovethenaiveapproachdoesnotworkwellexceptincaseofcertain

simpleproblemsinvolvinglocalinput/outputrepresentationsandnon-repeatinginputpatterns

(seeHochreiter� �andSilvaetal.� �).Thenextsectionshowshowtodoitright.

� LONGSHORT-TERMMEMORY

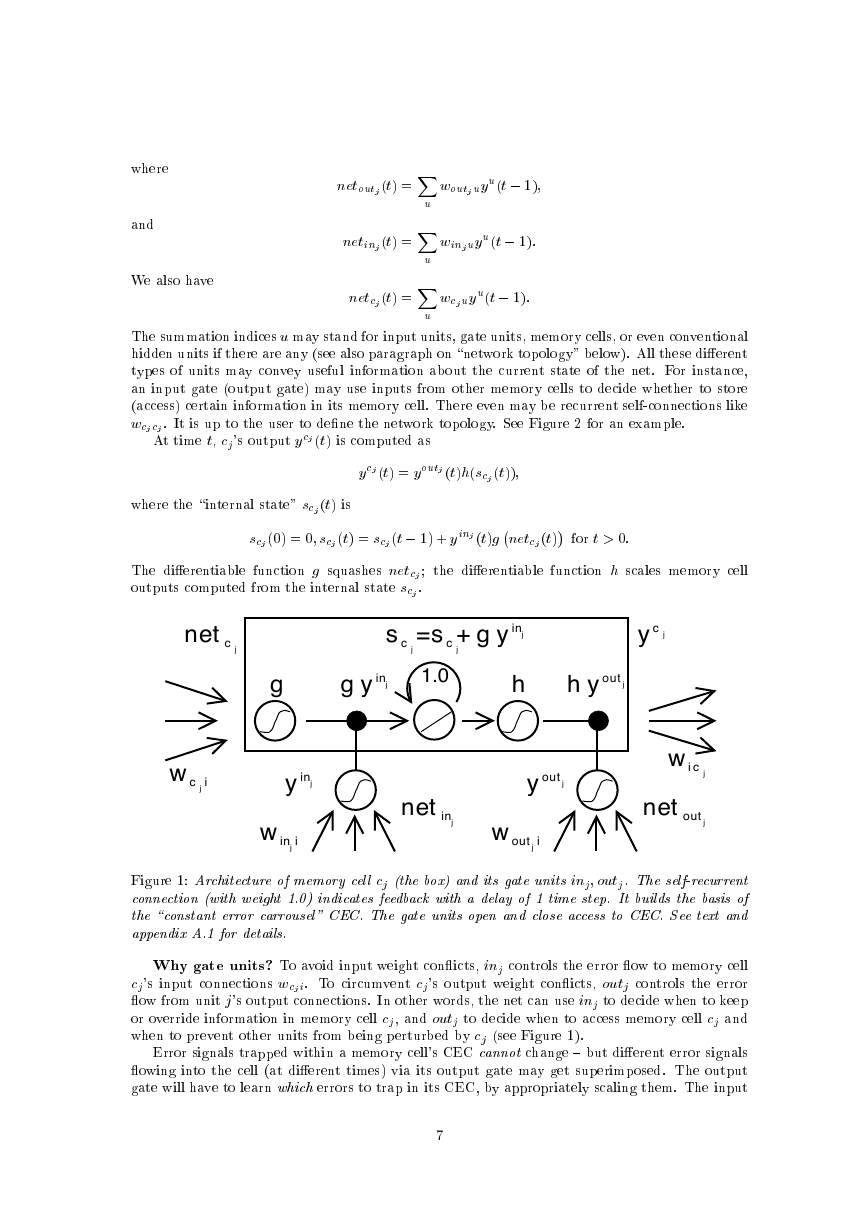

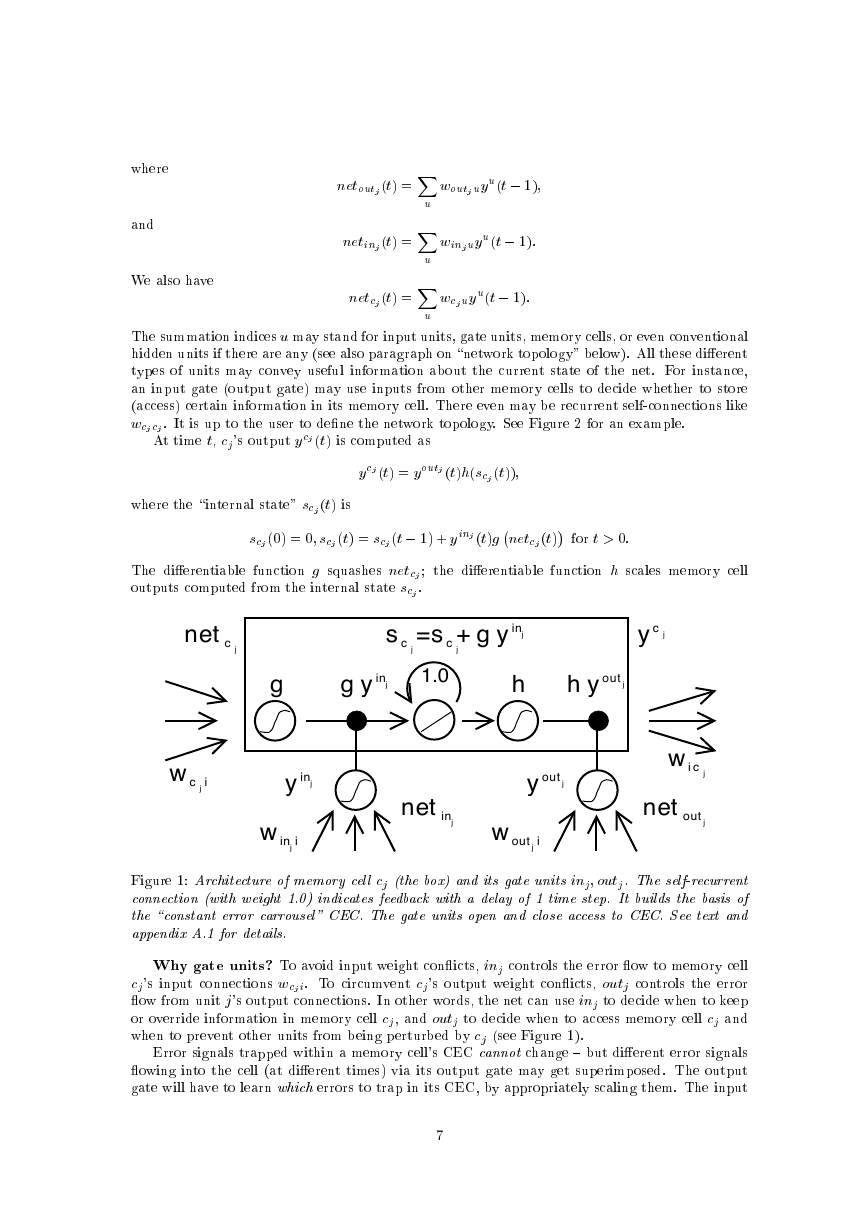

Memorycellsandgateunits.Toconstructanarchitecturethatallowsforconstanterrorow

throughspecial,self-connectedunitswithoutthedisadvantagesofthenaiveapproach,weextend

theconstanterrorcarrouselCECembodiedbytheself-connected,linearunitjfromSection�.�

byintroducingadditionalfeatures.Amultiplicativeinputgateunitisintroducedtoprotectthe

memorycontentsstoredinjfromperturbationbyirrelevantinputs.Likewise,amultiplicative

outputgateunitisintroducedwhichprotectsotherunitsfromperturbationbycurrentlyirrelevant

memorycontentsstoredinj.

Theresulting,morecomplexunitiscalledamemorycell(seeFigure�).Thej-thmemorycell

isdenotedcj.Eachmemorycellisbuiltaroundacentrallinearunitwithaxedself-connection

(theCEC).Inadditiontonetcj,cjgetsinputfromamultiplicativeunitoutj(the\outputgate"),

andfromanothermultiplicativeunitinj(the\inputgate").inj'sactivationattimetisdenoted

byyinj(t),outj'sbyyoutj(t).Wehave

youtj(t)=foutj(netoutj(t));yinj(t)=finj(netinj(t));

�

�

where

netoutj(t)=Xuwoutjuyu(t�);

and

netinj(t)=Xuwinjuyu(t�):

Wealsohave

netcj(t)=Xuwcjuyu(t�):

Thesummationindicesumaystandforinputunits,gateunits,memorycells,orevenconventional

hiddenunitsifthereareany(seealsoparagraphon\networktopology"below).Allthesedierent

typesofunitsmayconveyusefulinformationaboutthecurrentstateofthenet.Forinstance,

aninputgate(outputgate)mayuseinputsfromothermemorycellstodecidewhethertostore

(access)certaininformationinitsmemorycell.Thereevenmayberecurrentself-connectionslike

wcjcj.Itisuptotheusertodenethenetworktopology.SeeFigure�foranexample.

Attimet,cj'soutputycj(t)iscomputedas

ycj(t)=youtj(t)h(scj(t));

wherethe\internalstate"scj(t)is

scj(�)=�;scj(t)=scj(t�)+yinj(t)gnetcj(t)fort>�:

Thedierentiablefunctiongsquashesnetcj;thedierentiablefunctionhscalesmemorycell

outputscomputedfromtheinternalstatescj.

net c j

s c j

g

g y inj

g+

=

s c j

1.0

y inj

h

y c j

h y out j

y out j

net

inj

w ic j

w i

inj

w i

out j

Figure�:Architectureofmemorycellcj(thebox)anditsgateunitsinj;outj.Theself-recurrent

connection(withweight�.�)indicatesfeedbackwithadelayof�timestep.Itbuildsthebasisof

the\constanterrorcarrousel"CEC.ThegateunitsopenandcloseaccesstoCEC.Seetextand

appendixA.�fordetails.

Whygateunits?Toavoidinputweightconicts,injcontrolstheerrorowtomemorycell

cj'sinputconnectionswcji.Tocircumventcj'soutputweightconicts,outjcontrolstheerror

owfromunitj'soutputconnections.Inotherwords,thenetcanuseinjtodecidewhentokeep

oroverrideinformationinmemorycellcj,andoutjtodecidewhentoaccessmemorycellcjand

whentopreventotherunitsfrombeingperturbedbycj(seeFigure�).

Errorsignalstrappedwithinamemorycell'sCECcannotchange{butdierenterrorsignals

owingintothecell(atdierenttimes)viaitsoutputgatemaygetsuperimposed.Theoutput

gatewillhavetolearnwhicherrorstotrapinitsCEC,byappropriatelyscalingthem.Theinput

y inj

wi c j

net

out j

�

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc