Tensor Decompositions and Applications

Author(s): Tamara G. Kolda and Brett W. Bader

Source: SIAM Review, Vol. 51, No. 3 (September 2009), pp. 455-500

Published by: Society for Industrial and Applied Mathematics

Stable URL: http://www.jstor.org/stable/25662308 .

Accessed: 08/02/2015 21:16

Your use of the JSTOR archive indicates your acceptance of the Terms & Conditions of Use, available at .

http://www.jstor.org/page/info/about/policies/terms.jsp

.

JSTOR is a not-for-profit service that helps scholars, researchers, and students discover, use, and build upon a wide range of

content in a trusted digital archive. We use information technology and tools to increase productivity and facilitate new forms

of scholarship. For more information about JSTOR, please contact support@jstor.org.

.

Society for Industrial and Applied Mathematics is collaborating with JSTOR to digitize, preserve and extend

access to SIAM Review.

http://www.jstor.org

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

Vol. 51, No. 3, pp. 455-500

SIAM review (c) 2009 Society for Industrial and Applied Mathematics

Tensor Decompositions

Applications*

and

Tamara G. Kolda*

Brett W. Bader*

Abstract.

tensor decompositions,

tensors

survey provides

software. A tensor

an overview of higher-order

is a multidimensional

(i.e., AT-way arrays with N

numerical

chemometrics,

signal processing,

analysis, data mining, neuroscience,

This

and available

of higher-order

metrics,

merical

tensor decompositions

gular value decomposition:

sum of rank-one

component

PARAFAC2,

ants of all of the above. The N-way Toolbox,

examples

tensors, and the Tucker decomposition

of software packages

are many

DEDICOM,

CANDECOMP/PARAFAC

can be considered

CANDELINC,

analysis.

There

for working with tensors.

and PARATUCK2

graph analysis,

to be higher-order

Tensor Toolbox,

or N-w&y

3) have

>

linear algebra,

their applications,

array. Decompositions

applications

computer

in psycho

vision, nu

and elsewhere. Two particular

sin

a tensor as a

of the matrix

extensions

(CP) decomposes

is a higher-order

form of principal

INDSCAL,

including

as well as nonnegative

and Multilinear

Engine

vari

are

other tensor decompositions,

Key words,

tensor decompositions, multiway

canonical

decomposition

arrays, multilinear

algebra, parallel factors (PARAFAC),

(CANDECOMP),

higher-order

principal

components

analysis

(Tucker), higher-order

singular value decomposition

(HOSVD)

AMS

subject classifications.

15A69, 65F99

DOI.

10.1137/07070111X

I. Introduction. A tensor is a multidimensional

array. More formally, an N-way

or TVth-order tensor is an element of the tensor product of N vector spaces, each of

which has its own coordinate system. This notion of tensors is not to be confused with

tensors in physics and engineering (such as stress tensors) [175], which are generally

referred to as tensor fields in mathematics

[69]. A third-order tensor has three indices,

as shown in Figure 1.1. A first-order tensor is a vector, a second-order tensor is a

matrix, and tensors of order three or higher are called higher-order tensors.

The goal of this survey is to provide an overview of higher-order tensors and their

there has been active research on tensor decompositions

decompositions.

and models

applied to data arrays for extracting and explaining

their properties) for the past four decades, very little of this work has been published

(i.e., decompositions

Though

*

Received

published

multiprogram

United

DE-AC04-94AL85000.

or reproduce

purposes.

by the editors August

24, 2007; accepted

for publication

electronically August

5, 2009. This work was funded by Sandia National

laboratory

States Department

operated

by Sandia Corporation,

of Energy's National Nuclear

The U.S. Government

retains a nonexclusive,

the published

form of this contribution,

Copyright

is owned by SIAM

to the extent not

.html

/ sirev/51-3/70111

(in revised form) June 3, 2008;

a

for the

Laboratories,

a Lockheed Martin

Security Administration

royalty-free

under Contract

license to publish

or allow others to do so, for U.S. Government

Company,

limited by these rights.

http: / / www.siam.org/journals

Informatics,

'''Mathematics,

Livermore, CA 94551-9159

* Computer

NM 87185-1318

and Decisions

Sciences Department,

Sandia National

Laboratories,

Science

and

(tgkolda@sandia.gov).

Informatics Department,

Sandia National

Laboratories,

Albuquerque,

(bwbader@sandia.gov).

455

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

456 TAMARA G. KOLDA AND BRETT W BADER

.

II

:

.J

/__/v

=

j

f

i,...,j

^

Fig.

I.I A third-order

tensor: X eRIXJxK.

in applied mathematics

attention of SIAM readers.

journals. Therefore, we wish to bring this research to the

is attributed to Cattell

[38] and Harshman

[90] in 1970, all of which appeared

Tensor decompositions originated with Hitchcock

in 1927 [105, 106], and the idea

of a multiway model

in 1944 [40, 41]. These concepts received

in the 1960s

[224, 225, 226] and Carroll

scant attention until the work of Tucker

in psychometrics

and Chang

[13] are generally credited as being the first to

literature. Appellof and Davidson

use tensor decompositions

(in 1981) in chemometrics, and tensors have since become

in that field [103, 201, 27, 28, 31, 152, 241, 121, 12, 9, 29], even

extremely popular

spawning a book

in psychometrics

in 2004

and chemometrics, there was a great deal of interest in decompositions

of bilinear

[130, sec. 4.6.4]. The most

forms in the field of algebraic complexity; see, e.g., Knuth

interesting example of this is Strassen matrix multiplication, which is an application

of a 4 x 4 x 4 tensor to describe 2x2 matrix multiplication

of a decomposition

[208, 141, 147, 24].

[200]. In parallel to the developments

In the last ten years, interest in tensor decompositions has expanded

to other

[62, 196, 47, 43, 68, 80, 173, 60, 61], numer

include signal processing

fields. Examples

[87, 63, 64, 132, 244, 133, 149], computer vision [229, 230, 231, 190,

ical linear algebra

[22, 108, 23, 89, 114, 88, 115], data min

236, 237, 193, 102, 235], numerical analysis

[136, 135, 15], neuroscience

ing [190, 4, 157, 211, 5, 209, 210, 44, 14], graph analysis

[20, 163, 165, 167, 170, 168, 169, 2, 3, 70, 71], and more. Several surveys have been

written in other fields [138, 52, 104, 27, 28, 47, 129, 78, 48, 200, 69, 29, 6, 184], and

a book has appeared very recently on multiway data analysis

there

are several software packages available for working with tensors [179, 11, 146, 85, 16,

17, 18, 239, 243].

Wherever possible, the titles in the references section of this review are hyper

linked to either the publisher web page for the paper or the author's version. Many

format. We also direct the reader to

older papers are now available online in PDF

three-mode bibliography,1 which includes several out-of-print books

P. Kroonenberg's

and theses (including his own [138]). Likewise, R. Harshman's web site2 has many

[90] and Kruskal's

hard-to-find papers,

1989 paper

including his original 1970 PARAFAC

[143], which is now out of print.

The remainder of this review is organized as follows. Section 2 describes the no

tation and common operations used throughout the review; additionally, we provide

pointers to other papers that discuss notation. Both the CANDECOMP/PARAFAC

[139]. Moreover,

paper

1

http://three-mode.leidenuniv.nl/bibliogr/bibliogr.htm

2

http: / /www. psychology, uwo.ca/facuity/harshman

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

TENSOR DECOMPOSITIONS AND APPLICATIONS 457

[38, 90] and Tucker

[226] tensor decompositions

(PCA). fn section 3, we discuss the CP decomposition,

can be considered to be higher

(CP)

(SVD) and principal

order generalizations of the matrix singular value decomposition

its con

component analysis

nection to tensor rank and tensor border rank, conditions for uniqueness, algorithms

is covered

and computational

in section 4, where we discuss

its relationship to compression, the notion of n-rank,

Section 5 covers other decom

algorithms and computational

issues, and applications.

positions, including fNDSCAL [38], PARAFAC2 [92], CANDELfNC [39], DEDfCOM

[93], and PARATUCK2

Section 6 provides information

about software for tensor computations. We summarize our findings in section 7.

[100], and their applications.

The Tucker decomposition

issues, and applications.

2. Notation

and Preliminaries.

In this review, we have tried to remain as con

sistent as possible with terminology that would be familiar to applied mathematicians

in the area of tensor decomposi

and with the terminology of previous publications

tions. The notation used here is very similar to that proposed by Kiers

[122]. Other

standards have been proposed as well; see Harshman

and Hong

[96].

[94] and Harshman

The order of a tensor is the number of dimensions, also known as ways or modes.3

(tensors of order one) are denoted by boldface lowercase letters, e.g., a. Matri

Vectors

ces (tensors of order two) are denoted by boldface capital letters, e.g., A. Higher-order

tensors (order three or higher) are denoted by boldface Euler script letters, e.g., X.

Scalars

are denoted

lowercase

a.

letters,

e.g.,

by

The

ith entry of a vector a is denoted by c^, element (i,j) of a matrix A

denoted by a^-, and element (i,j,k) of a third-order tensor X

Indices typically range from 1 to their capital version, e.g., i = 1,...,/.

element in a sequence

is denoted by a superscript in parentheses, e.g., A^

the nth matrix

in a sequence.

is

is denoted by Xijk

The nth

denotes

Subarrays are formed when a subset of the indices is fixed. For matrices, these

are the rows and columns. A colon is used to indicate all elements of a mode. Thus,

the jth column of A

is denoted by

a;;. Alternatively, the jth column of a matrix, a..j, may be denoted more compactly

as dij.

is denoted by a;j, and the ith row of a matrix A

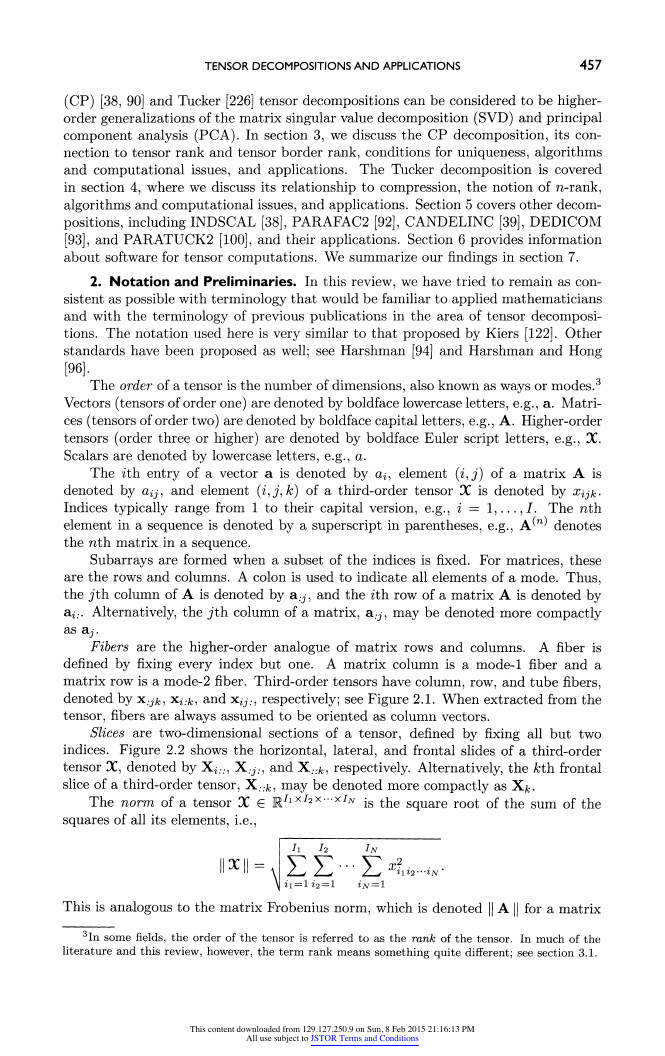

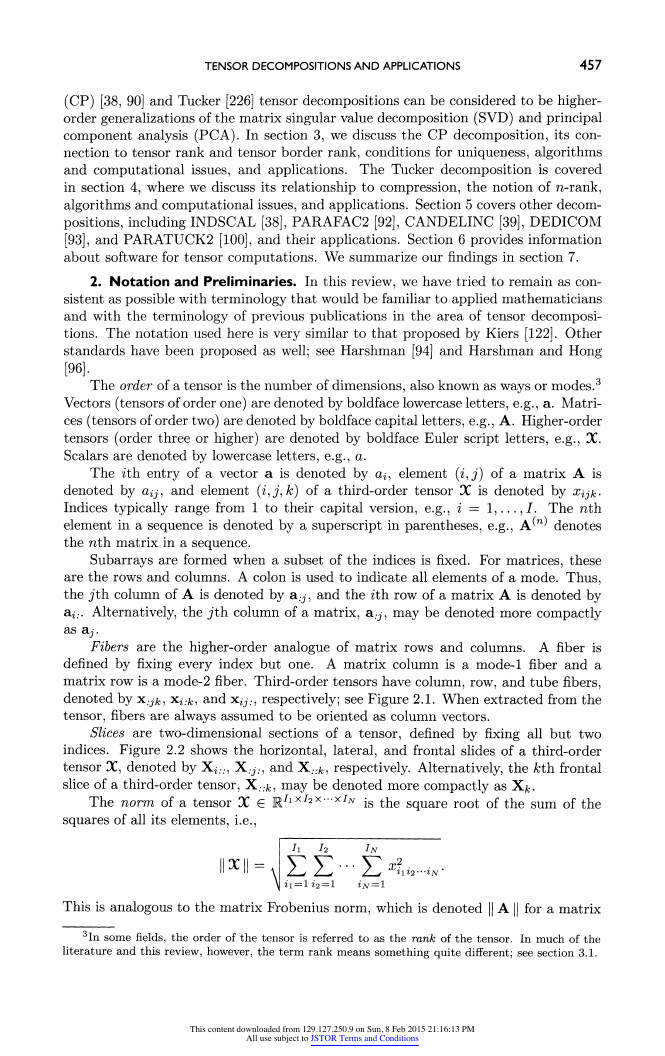

Fibers are the higher-order analogue of matrix rows and columns. A fiber is

defined by fixing every index but one. A matrix column is a mode-1 fiber and a

matrix row is a mode-2 fiber. Third-order tensors have column, row, and tube fibers,

denoted by x;jfc, x^,

extracted from the

tensor, fibers are always assumed to be oriented as column vectors.

and x^;, respectively; see Figure 2.1. When

Slices are two-dimensional

sections of a tensor, defined by fixing all but two

indices. Figure 2.2 shows the horizontal,

lateral, and frontal slides of a third-order

tensor X, denoted by X^...., X^.., and X;;fc, respectively. Alternatively, the kth frontal

slice of a third-order tensor, X;;fc, may be denoted more compactly as X/~.

square root of the sum of the

- x/at ig

The norm of a tensor X G R^x^x

squares of all its elements, i.e.,

wxw =

\ EE-EC

\ 2i=l 22 = 1

*JV = 1

This is analogous to the matrix Frobenius norm, which is denoted

|| A

|| for a matrix

3In some fields, the order of the tensor

is referred to as the rank of the tensor.

literature and this review, however,

the term rank means

something

In much of the

quite different; see section 3.1.

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

458 TAMARA G. KOLDA AND BRETT W. BADER

(a) Mode-1

(column)

fibers: x:j-fc

(b) Mode-2

(row) fibers: x^./-

(c) Mode-3

(tube) fibers:

Fig. 2.1

Fibers

of a Srd-order

tensor.

(a) Horizontal

slices: Xj;;

(b) Lateral

slices: X.j;

(c) Frontal

slices: X;;fc

(or Xfc)

Fig. 2.2

Slices

of a Srd-order

tensor.

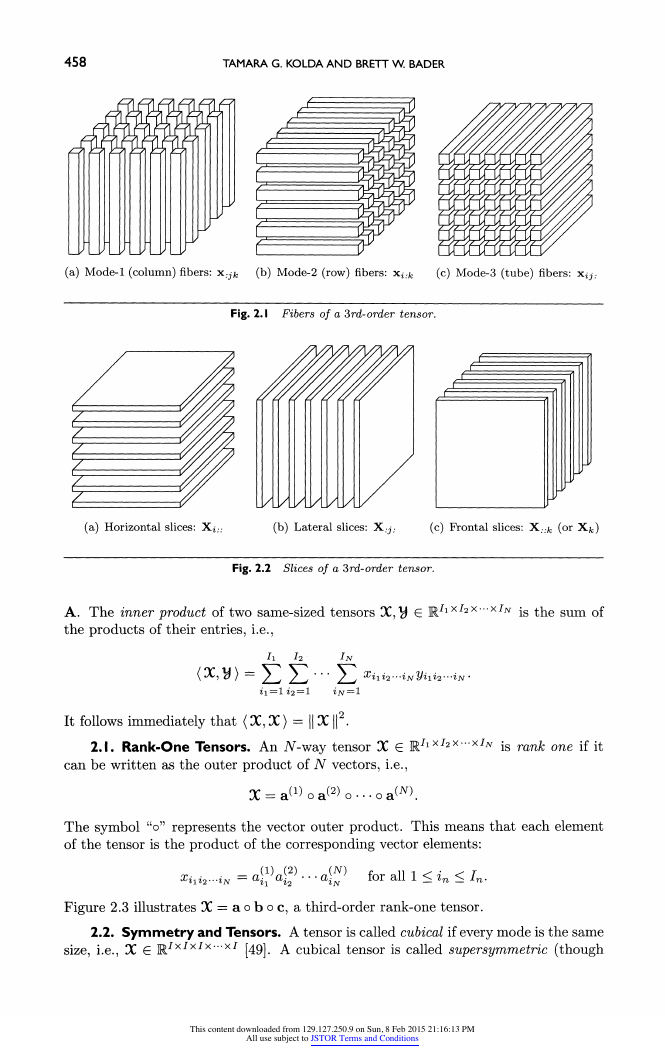

inner product of two same-sized tensors X,y G ^ix/2x

- x/jv ^s ^ne sum Qf

A. The

the products of their entries, i.e.,

h

In

=

(X,y)

^2

i7V = l

It follows immediately that (X, X) = || X ||2.

ii =1 22 = 1

Ii

Xi1i2...iNyi1i2...iN.

2.1. Rank-One Tensors. An Af-way tensor X e ^^^x-x/iv

can be written as the outer product of N vectors, i.e.,

js mn? one jf ^

^aWoaP'o.-oaC)

The symbol "o" represents the vector outer product. This means that each element

of the tensor is the product of the corresponding vector elements:

for all 1 < in < In.

Figure 2.3 illustrates X = a o b o c, a third-order rank-one tensor.

xili2...iN =

a^a^f

<4^?

2.2. Symmetry and Tensors. A tensor is called cubical if every mode

is the same

[49]. A cubical tensor is called super symmetric (though

size, i.e., X G ^Ixlxlx--'xl

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

TENSOR DECOMPOSITIONS AND APPLICATIONS 459

Z-/

=

0

/

a

Fig. 2.3 Rank-one

third-order

tensor, X = aoboc.

The

(i,j,k)

element of X

is given by x^

=

dibjck.

this term is challenged by Comon et al. [49], who instead prefer just "symmetric") if

its elements remain constant under any permutation of the indices. For instance, a

three-way tensor X G R/x/x/

is supersymmetric if

=

%ijk

%ikj

~

%jik

~~

~

~

%jki

%kij

%kji

for all

i, J1, fc =

1, . . . , -T.

Tensors can be (partially) symmetric in two or more modes as well. For example,

is symmetric in modes one and two if all its frontal

a three-way tensor X G RIxIxK

slices

are

symmetric,

i.e.,

Xk=XTk

forallife = l,...,A\

Analysis of supersymmetric tensors, which can be shown to be bijectively related

[106, 105], which

to homogeneous polynomials, predates even the work of Hitchcock

was mentioned

in the introduction; see [50, 49] for details.

2.3. Diagonal Tensors. A tensor X G R7lX/2X"'x/Ar is diagonal

if xili2...iN ^ 0

= in- Figure 2.4 illustrates a cubical tensor with ones along the

only if ii = i^ ?

superdiagonal.

Fig. 2.4

Three-way

tensor of size I x I x I with ones along

the superdiagonal.

a Tensor

Transforming

2.4. Matricization:

also

known as unfolding or flattening, is the process of reordering the elements of an N-w&y

array into a matrix. For instance, a 2 x 3 x 4 tensor can be arranged as a 6 x 4 matrix or

a 3 x 8 matrix, and so on. In this review, we consider only the special case of mode-n

matricization because

it is the only form relevant to our discussion. A more general

treatment of matricization

[134]. The mode-n matricization of

a tensor X G Rjix/2x-x/jv

and arranges the mode-n fibers to be

can be found in Kolda

js denoted by X(n)

into a Matrix. Matricization,

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

460 TAMARA G. KOLDA AND BRETT W BADER

the columns of the resulting matrix. Though conceptually simple, the formal notation

is clunky. Tensor element (n, Z2,..., z'at) maps to matrix element (zn, j), where

j=1+Yi(ik

fc = l m=l

~ i^jk with Jk =

N k-1

nim'

The concept is easier to understand using an example. Let the frontal slices of X G

R3x4x2 be

(2.1)

Xi = 2 5 8 11 , X2 =

7

"14

10]

3 6 9 12J

16 19 22"

[13

14 17 20 23 .

L15 18 21 24_

Then the three mode-n unfoldings are

X(1)

'1 4 7 10 13 16 19 22"

= 2 5 8 11 14 17 20 23 ,

(_3 6 9 12 15 18 21 24

_

(2)

13 14 15"

16 17 18

2

'1

4

5

7 8

10 11 12 22 23 24

3

6

9 19 20 21 '

^

_ [1

~

2 3 4

5

|_13 14 15 16 17

9 10 11 12'

21 22 23 24

*

Different papers sometimes use different orderings of the columns for the mode-n

unfolding; compare the above with [63] and [122]. In general, the specific permutation

see

of columns is not important so long as it is consistent across related calculations;

[134] for further details.

Last, we note that it is also possible to vectorize a tensor. Once again the ordering

of the elements is not important so long as it is consistent. In the example above, the

vectorized version is

=

vec(X)

"1"

2

_24_

2.5. Tensor Multiplication:

The n-Mode Product. Tensors can be multiplied

together, though obviously the notation and symbols for this are much more complex

see, e.g., Bader and

than for matrices. For a full treatment of tensor multiplication

i.e., multiplying a

Kolda

tensor by a matrix

The n-mode (matrix) product of a tensor X G Ri"ix/2x-- x/JV witn a matrix U G

x IN.

[16]. Here we consider only the tensor n-mode product,

(or a vector) in mode n.

jTn_i x J x In+1

is of size h

is denoted

RJxIn

xn U

and

x

x

x

by X

Elementwise,

we have

(X Xn

U)-i...-ri_ij

=

in+1...iN

Xii*2-iN

^2

in = l

Ujin'

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

TENSOR DECOMPOSITIONS AND APPLICATIONS 461

Each mode-n fiber is multiplied by the matrix U. The

terms of unfolded tensors:

idea can also be expressed in

y = xxnu

&

Y(n)

=

ux(n).

The n-mode product of a tensor with a matrix

case when a tensor defines a multilinear operator.

is related to a change of basis in the

As an example,

let X be the tensor defined above in (2.1) and let U =

[2 4 i]

Then the product y = X xx U G R2x4x2

is

_ [22 49 76

1 ~~

103]

'

[28 64 100 136J

_ [130

2 ~

157 184 211"

[172 208 244 280

*

A few facts regarding n-mode matrix products are in order. For distinct modes

in a series of multiplications,

the order of the multiplication

is irrelevant, i.e.,

lxmAxnB

= lxnB

xrn A

(m^n).

If the modes are the same, then

X x n A x n B =

Xxn(BA).

The n-mode (vector) product of a tensor X G M7* X/2X"'xIn with a vector v G R7n

x In-\ x

is denoted by X xn v. The result is of order N ?

l, i.e., the size is ii x

Jn+i

x

x 1^.

Elementwise,

(X Xn

v)i1...in_1in+1...iN

?

In

in=l

%i1i2--'iN Vin

The idea is to compute the inner product of each mode-n fiber with the vector v.

For example, let X be as given in (2.1) and define v =

[l 2 3 4]

. Then

X x2 v =

'70

190"

80 200

90 210_

.

When

it comes to mode-n vector multiplication, precedence matters because the

order of the intermediate results changes. In other words,

X xm a xn b =

(X xm a) xn_i b =

(X xn b) xm a

for m < n.

See [16] for further discussion of concepts in this subsection.

2.6. Matrix Kronecker, Khatri-Rao,

and Hadamard

Products.

Several matrix

products are important in the sections that follow, so we briefly define them here.

The Kronecker product of matrices A G E/Xj

(g) B. The result is a matrix of size (IK) x (JL) and defined by

and B G RKxL

A

is denoted by

anB

a2iB

....

ai2B

a22B

auB"

a2jB

A<8>B=

=

_a/iB

[ai0bi

a/2B

ai0b2

a/jB

ai 0 b3

aj^b^-i

aj^bz,].

This content downloaded from 129.127.250.9 on Sun, 8 Feb 2015 21:16:13 PM

All use subject to JSTOR Terms and Conditions

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc