Raymond W. Yeung

Information Theory and

Network Coding

SPIN Springer’s internal project number, if known

May 31, 2008

Springer

�

�

To my parents and my family

�

�

Preface

This book is an evolution from my book A First Course in Information Theory

published in 2002 when network coding was still at its infancy. The last few

years have witnessed the rapid development of network coding into a research

field of its own in information science. With its root in information theory,

network coding not only has brought about a paradigm shift in network com-

munications at large, but also has had significant influence on such specific

research fields as coding theory, networking, switching, wireless communica-

tions, distributed data storage, cryptography, and optimization theory. While

new applications of network coding keep emerging, the fundamental results

that lay the foundation of the subject are more or less mature. One of the

main goals of this book therefore is to present these results in a unifying and

coherent manner.

While the previous book focused only on information theory for discrete

random variables, the current book contains two new chapters on information

theory for continuous random variables, namely the chapter on differential

entropy and the chapter on continuous-valued channels. With these topics

included, the book becomes more comprehensive and is more suitable to be

used as a textbook for a course in an electrical engineering department.

What is in this book

Out of the twenty-one chapters in this book, the first sixteen chapters

belong to Part I, Components of Information Theory, and the last five chapters

belong to Part II, Fundamentals of Network Coding. Part I covers the basic

topics in information theory and prepare the reader for the discussions in

Part II. A brief rundown of the chapters will give a better idea of what is in

this book.

Chapter 1 contains a high level introduction to the contents of this book.

First, there is a discussion on the nature of information theory and the main

results in Shannon’s original paper in 1948 which founded the field. There are

also pointers to Shannon’s biographies and his works.

�

VIII

Preface

Chapter 2 introduces Shannon’s information measures for discrete random

variables and their basic properties. Useful identities and inequalities in in-

formation theory are derived and explained. Extra care is taken in handling

joint distributions with zero probability masses. There is a section devoted

to the discussion of maximum entropy distributions. The chapter ends with a

section on the entropy rate of a stationary information source.

Chapter 3 is an introduction to the theory of I-Measure which establishes

a one-to-one correspondence between Shannon’s information measures and

set theory. A number of examples are given to show how the use of informa-

tion diagrams can simplify the proofs of many results in information theory.

Such diagrams are becoming standard tools for solving information theory

problems.

Chapter 4 is a discussion of zero-error data compression by uniquely de-

codable codes, with prefix codes as a special case. A proof of the entropy

bound for prefix codes which involves neither the Kraft inequality nor the

fundamental inequality is given. This proof facilitates the discussion of the

redundancy of prefix codes.

Chapter 5 is a thorough treatment of weak typicality. The weak asymp-

totic equipartition property and the source coding theorem are discussed. An

explanation of the fact that a good data compression scheme produces almost

i.i.d. bits is given. There is also an introductory discussion of the Shannon-

McMillan-Breiman theorem. The concept of weak typicality will be further

developed in Chapter 10 for continuous random variables.

Chapter 6 contains a detailed discussion of strong typicality which applies

to random variables with finite alphabets. The results developed in this chap-

ter will be used for proving the channel coding theorem and the rate-distortion

theorem in the next two chapters.

The discussion in Chapter 7 of the discrete memoryless channel is an en-

hancement of the discussion in the previous book. In particular, the new def-

inition of the discrete memoryless channel enables rigorous formulation and

analysis of coding schemes for such channels with or without feedback. The

proof of the channel coding theorem uses a graphical model approach that

helps explain the conditional independence of the random variables.

Chapter 8 is an introduction to rate-distortion theory. The version of the

rate-distortion theorem here, proved by using strong typicality, is a stronger

version of the original theorem obtained by Shannon.

In Chapter 9, the Blahut-Arimoto algorithms for computing the channel

capacity and the rate-distortion function are discussed, and a simplified proof

for convergence is given. Great care is taken in handling distributions with

zero probability masses.

Chapter 10 and Chapter 11 are the two chapters devoted to the discus-

sion of information theory for continuous random variables. Chapter 10 intro-

duces differential entropy and related information measures, and their basic

properties are discussed. The asymptotic equipartion property for continuous

random variables is proved. The last section on maximum differential entropy

�

Preface

IX

distributions echos the section in Chapter 2 on maximum entropy distribu-

tions.

Chapter 11 discusses a variety of continuous-valued channels, with the

continuous memoryless channel being the basic building block. In proving the

capacity of the memoryless Gaussian channel, a careful justification is given

for the existence of the differential entropy of the output random variable.

Based on this result, the capacity of a system of parallel/correlated Gaus-

sian channels is obtained. Heuristic arguments leading to the formula for the

capacity of the bandlimited white/colored Gaussian channel are given. The

chapter ends with a proof of the fact that zero-mean Gaussian noise is the

worst additive noise.

Chapter 12 explores the structure of the I-Measure for Markov structures.

Set-theoretic characterizations of full conditional independence and Markov

random field are discussed. The treatment of Markov random field here maybe

too specialized for the average reader, but the structure of the I-Measure and

the simplicity of the information diagram for a Markov chain is best explained

as a special case of a Markov random field.

Information inequalities are sometimes called the laws of information the-

ory because they govern the impossibilities in information theory. In Chap-

ter 13, the geometrical meaning of information inequalities and the relation

between information inequalities and conditional independence are explained

in depth. The framework for information inequalities discussed here is the

basis of the next two chapters.

Chapter 14 explains how the problem of proving information inequalities

can be formulated as a linear programming problem. This leads to a complete

characterization of all information inequalities provable by conventional tech-

niques. These inequalities, called Shannon-type inequalities, can be proved by

the World Wide Web available software package ITIP. It is also shown how

Shannon-type inequalities can be used to tackle the implication problem of

conditional independence in probability theory.

Shannon-type inequalities are all the information inequalities known dur-

ing the first half century of information theory. In the late 1990’s, a few new

inequalities, called non-Shannon-type inequalities, were discovered. These in-

equalities imply the existence of laws in information theory beyond those laid

down by Shannon. In Chapter 15, we discuss these inequalities and their ap-

plications.

Chapter 16 explains an intriguing relation between information theory

and group theory. Specifically, for every information inequality satisfied by

any joint probability distribution, there is a corresponding group inequality

satisfied by any finite group and its subgroups, and vice versa. Inequalities

of the latter type govern the orders of any finite group and their subgroups.

Group-theoretic proofs of Shannon-type information inequalities are given. At

the end of the chapter, a group inequality is obtained from a non-Shannon-

type inequality discussed in Chapter 15. The meaning and the implication of

this inequality are yet to be understood.

�

X

Preface

Chapter 17 starts Part II of the book with a discussion of the butterfly

network, the primary example in network coding. Variations of the butterfly

network are analyzed in detail. The advantage of network coding over store-

and-forward in wireless and satellite communications is explained through a

simple example. We also explain why network coding with multiple infor-

mation sources is substantially different from network coding with a single

information source.

In Chapter 18, the fundamental bound for single-source network coding,

called the max-flow bound, is explained in detail. The bound is established

for a general class of network codes.

In Chapter 19, we discuss various classes of linear network codes on acyclic

networks that achieve the max-flow bound to different extents. Static net-

work codes, a special class of linear network codes that achieves the max-flow

bound in the presence of channel failure, is also discussed. Polynomial-time

algorithms for constructing these codes are presented.

In Chapter 20, we formulate and analyze convolutional network codes on

cyclic networks. The existence of such codes that achieve the max-flow bound

is proved.

Network coding theory is further developed in Chapter 21. The scenario

when more than one information source are multicast in a point-to-point

acyclic network is discussed. An implicit characterization of the achievable

information rate region which involves the framework for information inequal-

ities developed in Part I is proved.

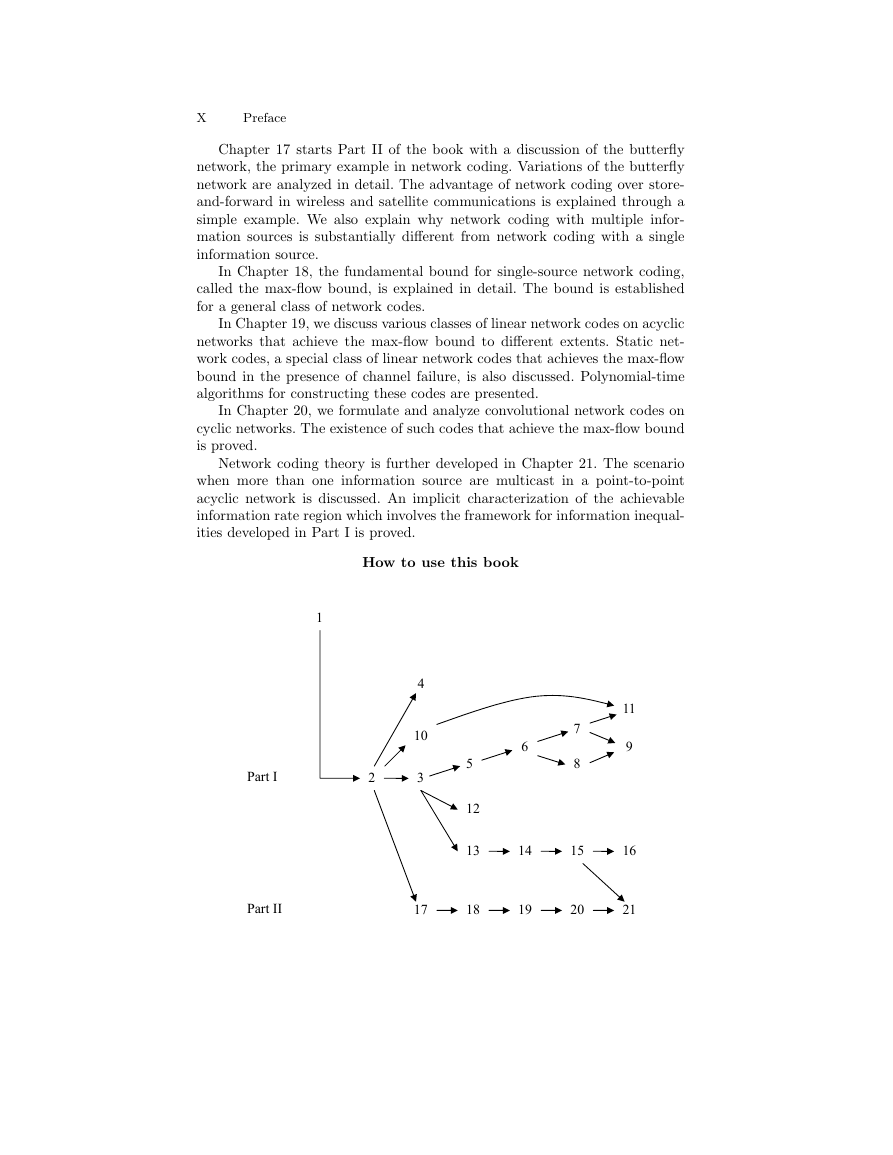

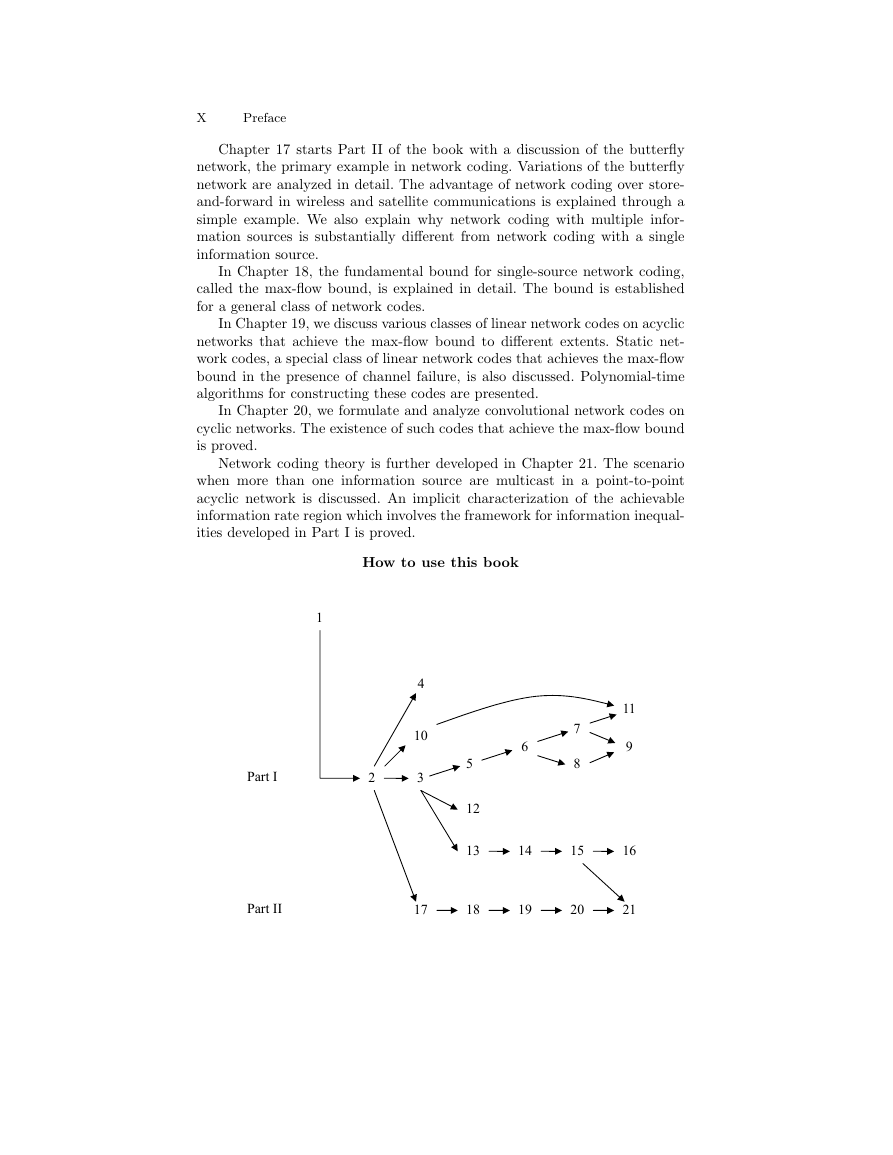

How to use this book

1 2 341017Part II Part I 1819202156712131415168911�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc