Preface

Acknowledgments

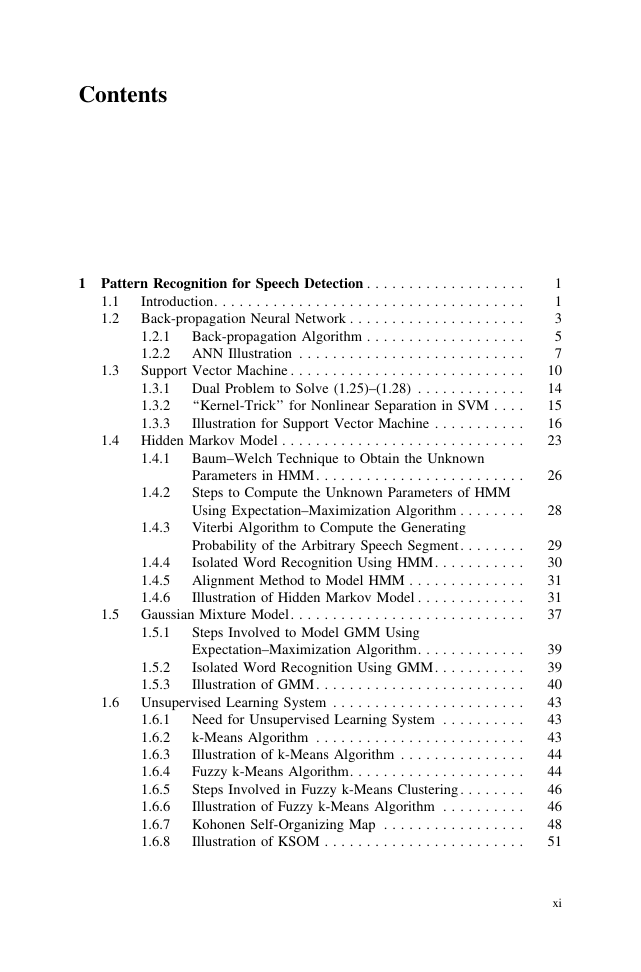

Contents

m-Files

1 Pattern Recognition for Speech Detection

1.1 Introduction

1.2 Back-Propagation Neural Network

1.2.1 Back-Propagation Algorithm

1.2.2 ANN Illustration

1.3 Support Vector Machine

1.3.1 Dual Problem to Solve (1.25)-(1.28)

1.3.2 "Kernel-Trick" for Nonlinear Separation in SVM

1.3.3 Illustration for Support Vector Machine

1.4 Hidden Markov Model

1.4.1 Baum-Welch Technique to Obtain the Unknown Parameters in HMM

1.4.2 Steps to Compute the Unknown Parameters of HMM Using the Expectation-Maximization Algorithm

1.4.3 Viterbi Algorithm to Compute the Generating Probability of an Arbitrary Speech Segment WORDX

1.4.4 Isolated Word Recognition Using HMM

1.4.5 Alignment Method to Model HMM

1.4.6 Illustration of Hidden Markov Model

1.5 Gaussian Mixture Model

1.5.1 Steps Involved in Modelling GMM Using the Expectation-Maximization Algorithm

1.5.2 Isolated Word Recognition Using GMM

1.5.3 Illustration of GMM

1.6 Unsupervised Learning System

1.6.1 Need for Unsupervised Learning System

1.6.2 k-Means Algorithm

1.6.3 Illustration of k-Means Algorithm

1.6.4 Fuzzy k-Means Algorithm

1.6.5 Steps Involved in Fuzzy k-Means Clustering

1.6.6 Illustration of Fuzzy k-Means Algorithm

1.6.7 Kohonen Self-Organizing Map

1.6.8 Illustration of KSOM

1.7 Dimensionality Reduction Techniques

1.7.1 Principal Component Analysis

1.7.2 Illustration of PCA Using 2D to 1D Conversion

1.7.3 Illustration of PCA

1.7.4 Linear Discriminant Analysis

1.7.5 Small Sample Size Problem in LDA

1.7.6 Null-Space LDA

1.7.7 Kernel LDA

1.7.8 Kernel-Trick to Execute LDA in the Higher-Dimensional Space

1.7.9 Illustration of Dimensionality Reduction Using LDA

1.8 Independent Component Analysis

1.8.1 Solving ICA Bases Using Kurtosis Measurement

1.8.2 Steps to Obtain the ICA Bases

1.8.3 Illustration of Dimensionality Reduction Using ICA

2 Speech Production Model

2.1 Introduction

2.2 1-D Sound Waves

2.2.1 Physics of Sound Waves Travelling Through a Tube with Uniform Cross-Sectional Area A

2.2.2 Solution to (2.9) and (2.18)

2.3 Vocal Tract Model as the Cascade Connections of Identical Length Tubes with Different Cross-Sections

2.4 Modelling the Vocal Tract from the Speech Signal

2.4.1 Autocorrelation Method

2.4.2 Auto Covariance Method

2.5 Lattice Structure to Obtain Excitation Source for the Typical Speech Signal

2.5.1 Computation of Lattice Co-efficients from LPC Co-efficients

3 Feature Extraction of the Speech Signal

3.1 Endpoint Detection

3.2 Dynamic Time Warping

3.3 Linear Predictive Co-efficients

3.4 Poles of the Vocal Tract

3.5 Reflection Co-efficients

3.6 Log Area Ratio

3.7 Cepstrum

3.8 Line Spectral Frequencies

3.9 Mel-Frequency Cepstral Co-efficients

3.9.1 Gibbs Phenomenon

3.9.2 Discrete Cosine Transformation

3.10 Spectrogram

3.10.1 Time Resolution Versus Frequency Resolution in Spectrogram

3.11 Discrete Wavelet Transformation

3.12 Pitch Frequency Estimation

3.12.1 Autocorrelation Approach

3.12.2 Homomorphic Filtering Approach

3.13 Formant Frequency Estimation

3.13.1 Formant Extraction Using Vocal Tract Model

3.13.2 Formant Extraction Using Homomorphic Filtering

4 Speech Compression

4.1 Uniform Quantization

4.2 Nonuniform Quantization

4.3 Adaptive Quantization

4.4 Differential Pulse Code Modulation

4.4.1 Illustrations of the Prediction of Speech Signal Using lpc

4.5 Code-Excited Linear Prediction

4.5.1 Estimation of the Delay Constant D

4.5.2 Estimation of the Gain Constants G1 and G2

4.6 Assessment of the Quality of the Compressed Speech Signal

A Constrained Optimization Using Lagrangian Techniques

A.1 Constrained Optimization with Equality Constraints

A.2 Constrained Optimization with Inequality Constraints

A.3 Kuhn-Tucker Conditions

B Expectation-Maximization Algorithm

C Diagonalization of the Matrix

C.1 Positive Definite Matrix

D Condition Number

D.1 Preemphasis

E Spectral Flatness

E.1 Demonstration on Spectral Flatness

F Functional Blocks of the Vocal Tract and the Ear

F.1 Vocal Tract Model

F.2 Ear Model

About the Author

About the Book

Index
