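The 6th "Certificate Authority Cup" (认证杯) MathChina

International Mathematical Contest in Modeling

Commitment Letter

We have carefully read the rules of the 6th "Certificate Authority Cup" (认证杯) MathChina International Mathematical Contest in Modeling.

We fully understand that, once the competition has begun, team members may not research or discuss any question related to the competition problems with anyone outside the team (including the advisor) by any means (including telephone, e-mail and online consultation).

We understand that plagiarizing the work of others violates the competition rules. If we cite the work of others or other public materials (including materials found online), we must list them clearly, in the prescribed reference format, both where they are cited in the text and in the references.

We solemnly promise to strictly abide by the competition rules to ensure the fairness and impartiality of the competition. If we violate the competition rules, we will be subject to serious sanctions.

We allow the MathChina website (www.madio.net) to publish our paper for study and exchange among its users; the MathChina website does not need our prior consent to share the paper for non-commercial purposes.

Our team number is: 3806

Our chosen problem is: B

Team members (signatures):

Member 1: 郁振东

Member 2: 李开颜

Member 3: 温正峤

Team advisor (signature): None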


Team # 3806

Page 2 of 17


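Numbering Page

Team number (to be filled in by each team in advance):

3806

Unified competition number (assigned by the organizing committee before the papers are sent to the judging panel):

Review number (assigned by the judging panel before review):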


Authorship Attribution System Based on SVM

Abstract:

With advances in investigative methods, and with more and more linguistic evidence appearing in E-mails, handwriting analysis can be used to identify the author of an E-mail from its linguistic features. Studying the relationship between authorship and features such as word frequency and word formation makes authorship identification more efficient and faster. Many successful techniques, such as stylometric features and grammatical-rule extraction, have been developed for the authorship attribution of literary works. Because E-mails are informal and short, however, these techniques do not perform well for E-mail authorship attribution.

In view of this, we take a machine-learning approach: we use the parts of speech of the words as the features of each E-mail and learn the relationship between part-of-speech usage and authorship, in order to decide whether or not an E-mail comes from a particular person. Parts of speech are divided into 36 kinds, each denoted by a specific symbol listed in the table in the Appendix. Since the tag LS did not occur in any of the 1600 E-mails, we adopted a 35-dimensional model as the final machine-learning model. In testing, this model reached an accuracy of 97.2561%.

We randomly divided all 1600 downloaded E-mails (about 1000 from Steven Kean and 600 from other people) into two parts. The first part, 80% of all E-mails, is used for feature extraction and as the training set; the remaining 20% form the test set. To extract the features, we use the TextBlob toolkit to split and tag the E-mails, count the words with each part of speech, and normalize the counts. With these statistics, an SVM classifier learns the differences between Steven Kean's E-mails and those of other people. Tested after training on the full 80% of the E-mails, the classifier reached an accuracy of 97.2561%. To summarize the relationship between accuracy and training-set size, we designed a contrast test: we trained on 90%, 80%, 70%, 60%, 50% and 40% of the samples, recorded the accuracy over 10 test runs, and plotted the results as the line chart in Figure 2. From Figure 2 we conclude that the accuracy increases as the number of training samples increases; however, the more training samples were used, the higher the variance of the accuracy.

Key words: Handwriting analysis, Machine Learning, part of speech


Contents

I. Introduction

1.1 Problem Description

1.2 Terminology and Definition

1.3 Our Work

II. The Description of the Problem

2.1 Feature Extraction

2.1.1 Splitting and Tagging

2.1.2 Statistic and Normalization

2.2 Machine Learning

III. Models

3.1 Flow Chart

3.2 Feature Extraction Model

3.2.1 Definitions and Symbols

3.2.2 Assumptions

3.2.3 The Foundation of Model

3.2.4 Solution and Result Analysis

3.2.5 Strength and Weakness

3.3 Machine Learning Model

3.3.1 Definitions and Symbols

3.3.2 The Foundation of Model

3.3.3 Solution and Result Analysis

3.3.4 Strength and Weakness

IV. Conclusions

4.1 Conclusions of the Problem

4.2 Methods Used in Our Models

4.3 Applications of Our Models

V. Future Work

Model Promotion

VI. References

VII. Appendix

7.1 Tag Description

7.2 Codes


I. Introduction

1.1 Problem Description

Handwriting analysis has long been used in criminal investigation to link people to written evidence. In legal cases, it can help identify a criminal suspect accurately and quickly. However, with the spread of the World Wide Web, E-mail has changed the way we communicate in writing. As E-mail traffic grows, so does the use of E-mail for illegitimate purposes such as spamming, threats and illegal transactions. Handwriting analysis is therefore now also widely applied in legal cases to match E-mails to their authors.

Research in recent years has greatly advanced the maturity of handwriting analysis. Its main difficulty is extracting the features of an E-mail appropriately and precisely when E-mails differ in format, length and other respects. Word frequency and lexical, syntactic, structural and grammatical features, among others, have been studied in many papers on literary works. In E-mails, however, the values of these stylometric and grammatical features cannot be calculated reliably; the resulting large feature sets remain redundant and add noise, which lowers the accuracy of an authorship attribution system.

1.2 Terminology and Definition

(1) SVM (support vector machine): a supervised learning model used in machine learning for pattern recognition, classification and regression analysis.

(2) TextBlob: a toolkit, supporting Python 2 and Python 3, for processing textual data.
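To make the SVM definition concrete, here is a minimal linear SVM sketch. It is a hypothetical stand-in, not the classifier used in this paper: it trains with the Pegasos stochastic sub-gradient method on the hinge loss, assumes labels +1 (Steven Kean) and -1 (other authors), and folds the bias into the weight vector by appending a constant feature. The names `train_linear_svm` and `predict` and the parameters `lam` and `epochs` are illustrative.

```python
import random
from typing import List, Sequence


def _augment(x: Sequence[float]) -> List[float]:
    # Append a constant 1.0 so the last weight acts as the bias term.
    return list(x) + [1.0]


def train_linear_svm(
    xs: Sequence[Sequence[float]],
    ys: Sequence[int],
    lam: float = 0.01,
    epochs: int = 200,
    seed: int = 0,
) -> List[float]:
    """Pegasos: stochastic sub-gradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * w.x)).

    xs: feature vectors (e.g. normalized part-of-speech counts)
    ys: labels, +1 or -1
    Returns the weight vector; its last entry is the (regularized) bias.
    """
    rng = random.Random(seed)
    data = [(_augment(x), y) for x, y in zip(xs, ys)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size 1/(lambda * t)
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            # Gradient step on the regularizer shrinks every weight.
            w = [(1.0 - eta * lam) * wj for wj in w]
            # Hinge loss is active: move w toward classifying (x, y) correctly.
            if margin < 1.0:
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Return +1 or -1 according to the sign of the decision function."""
    score = sum(wj * xj for wj, xj in zip(w, _augment(x)))
    return 1 if score >= 0 else -1
```

For example, `train_linear_svm([[2, 2], [-2, -2]], [1, -1])` returns a weight vector whose `predict` separates the two points. A library SVM (with kernels and tuned regularization) would normally replace this sketch in practice.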

1.3 Our Work

(1) Use the TextBlob toolkit to extract the feature (part of speech), count the occurrences of each part of speech, and normalize the statistics.

(2) Use an SVM classifier to learn from the extracted statistics and make the judgement.

(3) Design a contrast test that measures the accuracy obtained with different numbers of training samples, in order to evaluate the model and optimize the algorithm to improve accuracy.
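The contrast test in item (3) can be sketched as follows. This is a hypothetical outline, not the authors' code: `train_and_score` stands for any routine that trains a classifier (for example an SVM) on the training part and returns its accuracy on the held-out part. The training fractions and the 10 repetitions follow the abstract; the function name and the seed are illustrative.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def accuracy_vs_training_size(
    samples: Sequence[T],
    fractions: Sequence[float],
    train_and_score: Callable[[List[T], List[T]], float],
    repeats: int = 10,
    seed: int = 0,
) -> Dict[float, Tuple[float, float]]:
    """For each training fraction, repeat a fresh random split `repeats` times
    and return (mean accuracy, population variance of accuracy)."""
    rng = random.Random(seed)
    results: Dict[float, Tuple[float, float]] = {}
    for frac in fractions:
        scores = []
        for _ in range(repeats):
            shuffled = list(samples)
            rng.shuffle(shuffled)
            cut = int(len(shuffled) * frac)
            train, test = shuffled[:cut], shuffled[cut:]
            scores.append(train_and_score(train, test))
        results[frac] = (statistics.mean(scores), statistics.pvariance(scores))
    return results
```

Called with `fractions=[0.9, 0.8, 0.7, 0.6, 0.5, 0.4]`, the returned means and variances are the two quantities the abstract reads off Figure 2. Population variance is used here because the 10 runs are treated as the whole set of observations; `statistics.variance` would give the sample variance instead.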

II. The Description of the Problem

2.1 Feature Extraction

2.1.1 Splitting and Tagging

Because each E-mail is stored as a plain text file, its content must first be split into individual words. Each word can then be identified on its own and passed to the tagger, which assigns its part of speech.
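A minimal sketch of the splitting-and-tagging step, assuming TextBlob is installed together with its corpora (`python -m textblob.download_corpora`). `TextBlob(text).tags` splits the text into words and returns (word, Penn Treebank tag) pairs. The function names and the optional `tagger` parameter are hypothetical; the parameter only lets another tagger be plugged in, for instance a stub for testing.

```python
from typing import Callable, List, Optional, Tuple

Tagger = Callable[[str], List[Tuple[str, str]]]


def textblob_tagger(text: str) -> List[Tuple[str, str]]:
    """Split text into words and tag each with its part of speech via TextBlob."""
    from textblob import TextBlob  # imported lazily so other taggers work without it

    return list(TextBlob(text).tags)


def tag_email(text: str, tagger: Optional[Tagger] = None) -> List[str]:
    """Return the sequence of part-of-speech tags for one E-mail body."""
    tagger = tagger or textblob_tagger
    return [tag for _word, tag in tagger(text)]
```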

2.1.2 Statistic and Normalization


After every word in every E-mail has been tagged, we count the occurrences of each part of speech; these counts are the statistics from which the features are obtained. Normalization is the final step in processing these data.
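The statistic and normalization steps can be sketched as below, assuming an E-mail is already represented by its list of tags. As described in Section 3.2.3, each count is divided by the count of the most frequent part of speech in the same E-mail, so that part of speech becomes 1. The short tag list `TAGS` and the function name are illustrative; the paper uses the full tag set in its Appendix.

```python
from collections import Counter
from typing import List, Sequence

# Illustrative subset of Penn Treebank tags; the paper's full set has 36 tags.
TAGS = ["NN", "JJ", "CD", "IN", "VB"]


def pos_vector(tags: Sequence[str], tagset: Sequence[str] = TAGS) -> List[float]:
    """Count each tag in `tagset` and divide by the largest count (max -> 1.0)."""
    counts = Counter(tags)
    raw = [counts[t] for t in tagset]
    top = max(raw, default=0)
    if top == 0:
        return [0.0] * len(tagset)  # no known tags: avoid division by zero
    return [c / top for c in raw]
```

For instance, `pos_vector(["NN", "NN", "NN", "JJ", "IN"])` gives NN the value 1.0 and both JJ and IN the value 1/3, the same kind of values that appear in Table 1.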

2.2 Machine Learning

About 1600 E-mails were downloaded from the specified website; about 1000 of them were written by Steven Kean and the rest by other people. These 1600 E-mails are divided randomly into two parts, one for training and one for testing. By drawing an E-mail at random from the test set, we can check whether the model is able to determine if that E-mail was written by Steven Kean.
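A minimal sketch of the random division into a training set and a test set. The 80/20 ratio comes from the abstract; the function name and the fixed seed (used only to make the split reproducible) are assumptions. The items may be anything, for example (feature vector, label) pairs.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def random_split(
    items: Sequence[T], train_fraction: float = 0.8, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and cut it into (training set, test set)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

With 1600 E-mails this yields 1280 for training and 320 for testing.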

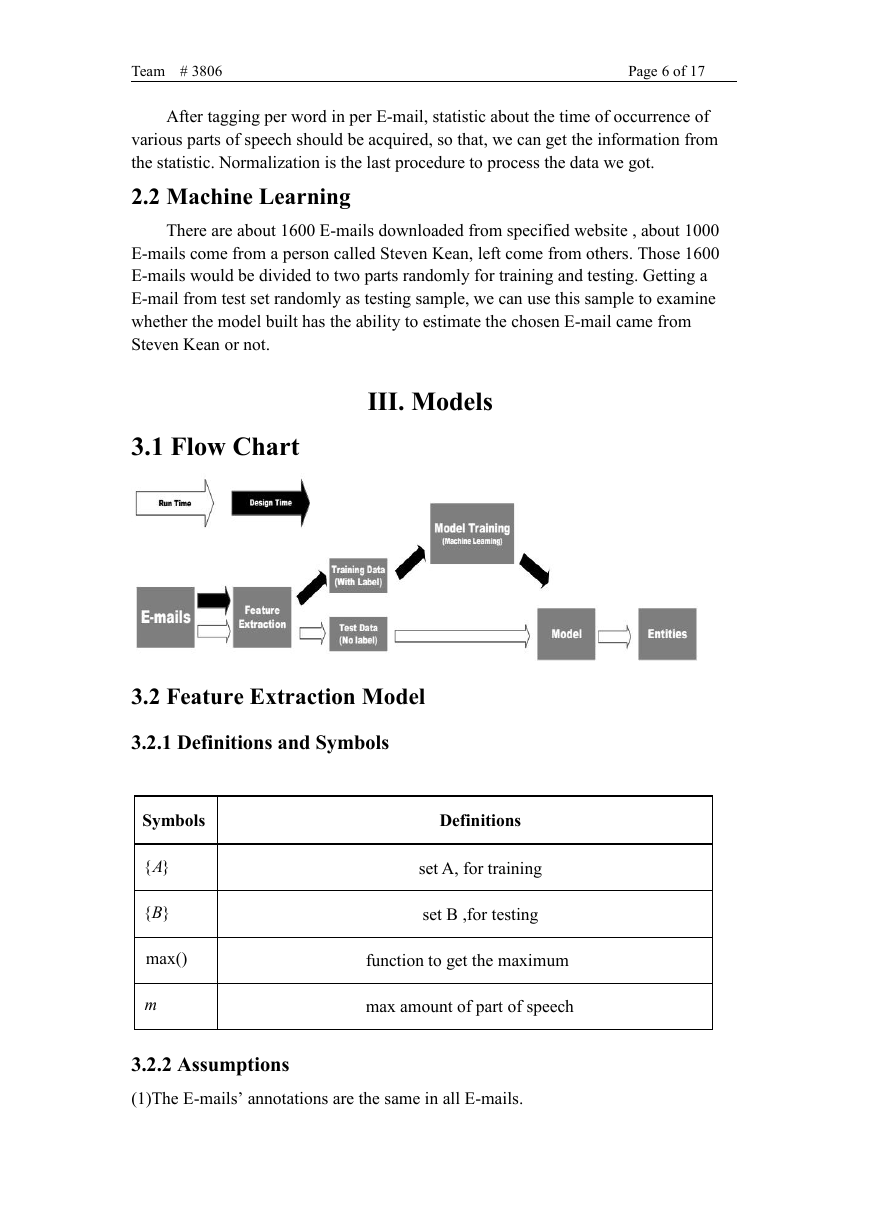

III. Models

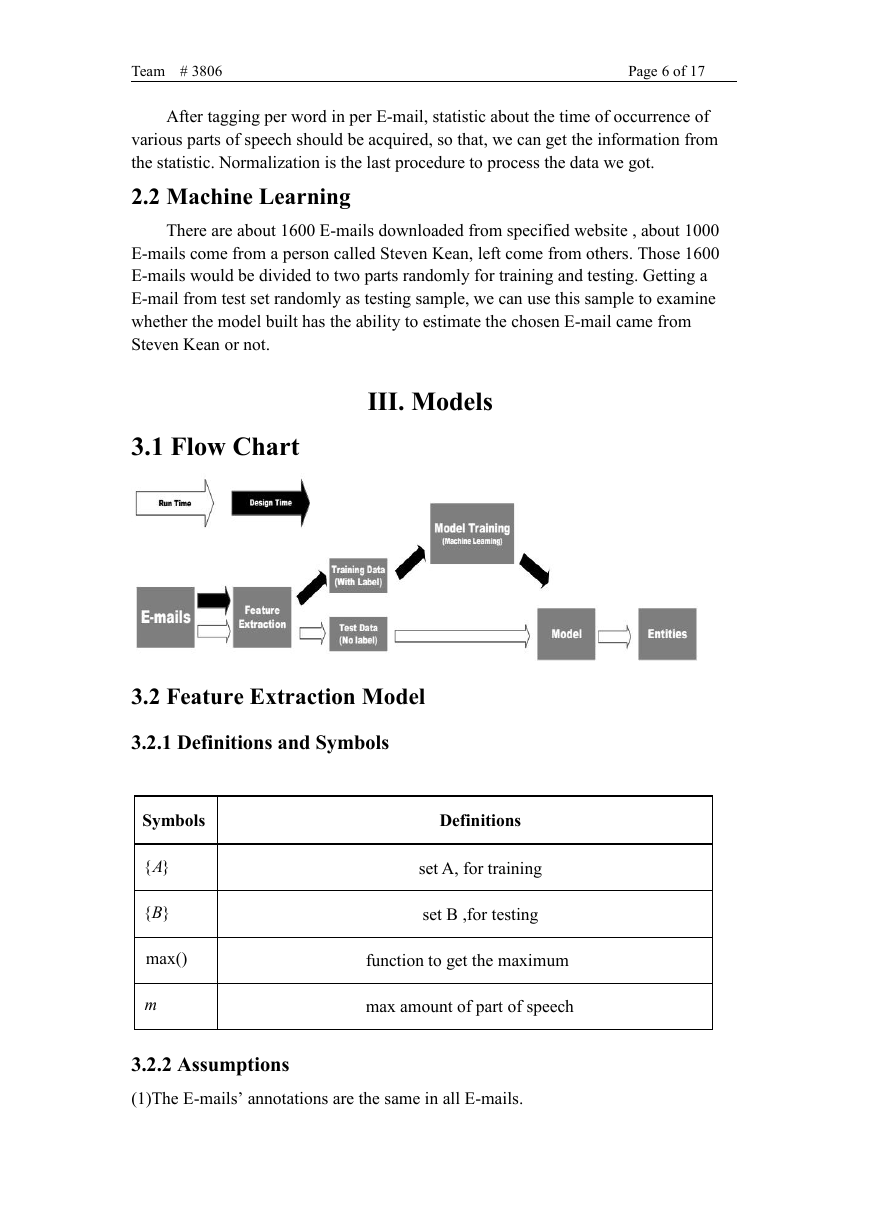

3.1 Flow Chart

3.2 Feature Extraction Model

3.2.1 Definitions and Symbols

| Symbol | Definition |
|---|---|
| {A} | Set A, used for training |
| {B} | Set B, used for testing |
| max() | Function returning the maximum |
| m | Maximum count of a part of speech |

3.2.2 Assumptions

(1) All E-mails use the same annotation conventions.


(2) The E-mails contain no garbled text or meaningless characters.

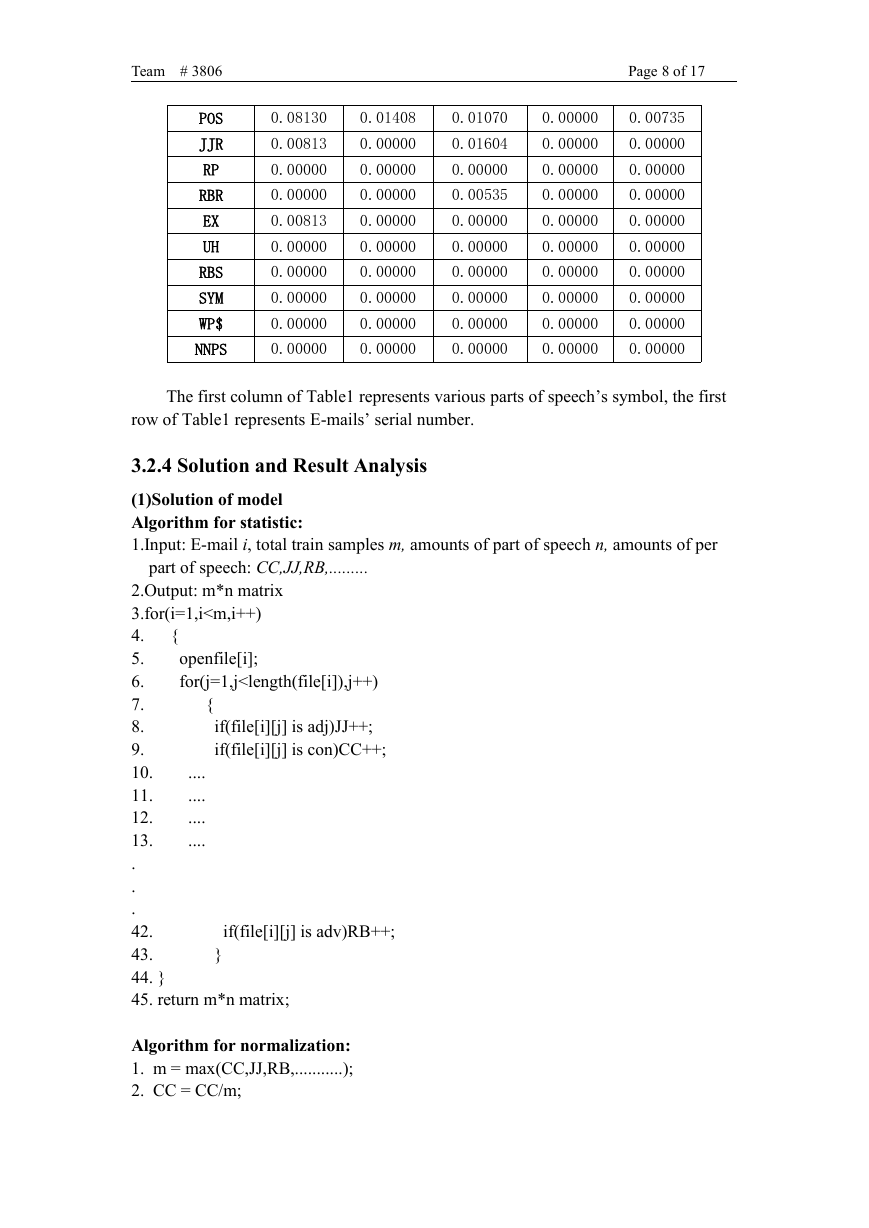

3.2.3 The Foundation of Model

First, we divided the downloaded E-mail dataset into two sets, called set A and set B. Set A is the training set for the machine learning model, and set B is its test set.

Second, we used the TextBlob toolkit to split the content of each E-mail in set A into individual words, so that part-of-speech tagging could be carried out easily. After all words in set A were tagged, we counted the occurrences of each part of speech in each E-mail and tabulated the counts.
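The abstract reports that the tag LS never occurred in the 1600 E-mails, which reduced the 36 part-of-speech dimensions to 35. One way to apply that rule automatically while building the count table for set A is sketched below; the function name and the toy data in the example are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def build_count_matrix(
    tagged_emails: Sequence[Sequence[str]], tagset: Sequence[str]
) -> Tuple[List[str], List[List[int]]]:
    """Count each tag per E-mail, then drop tags that never occur in any E-mail.

    Returns (kept tags, one row of counts per E-mail, in the order of kept tags).
    """
    counters = [Counter(tags) for tags in tagged_emails]
    # A tag is kept only if at least one E-mail uses it (e.g. LS is dropped).
    kept = [t for t in tagset if any(c[t] > 0 for c in counters)]
    rows = [[c[t] for t in kept] for c in counters]
    return kept, rows
```

For example, with the tag set `["NN", "JJ", "LS"]` and two E-mails tagged `["NN", "JJ", "NN"]` and `["NN"]`, LS is dropped and the rows are `[2, 1]` and `[1, 0]`.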

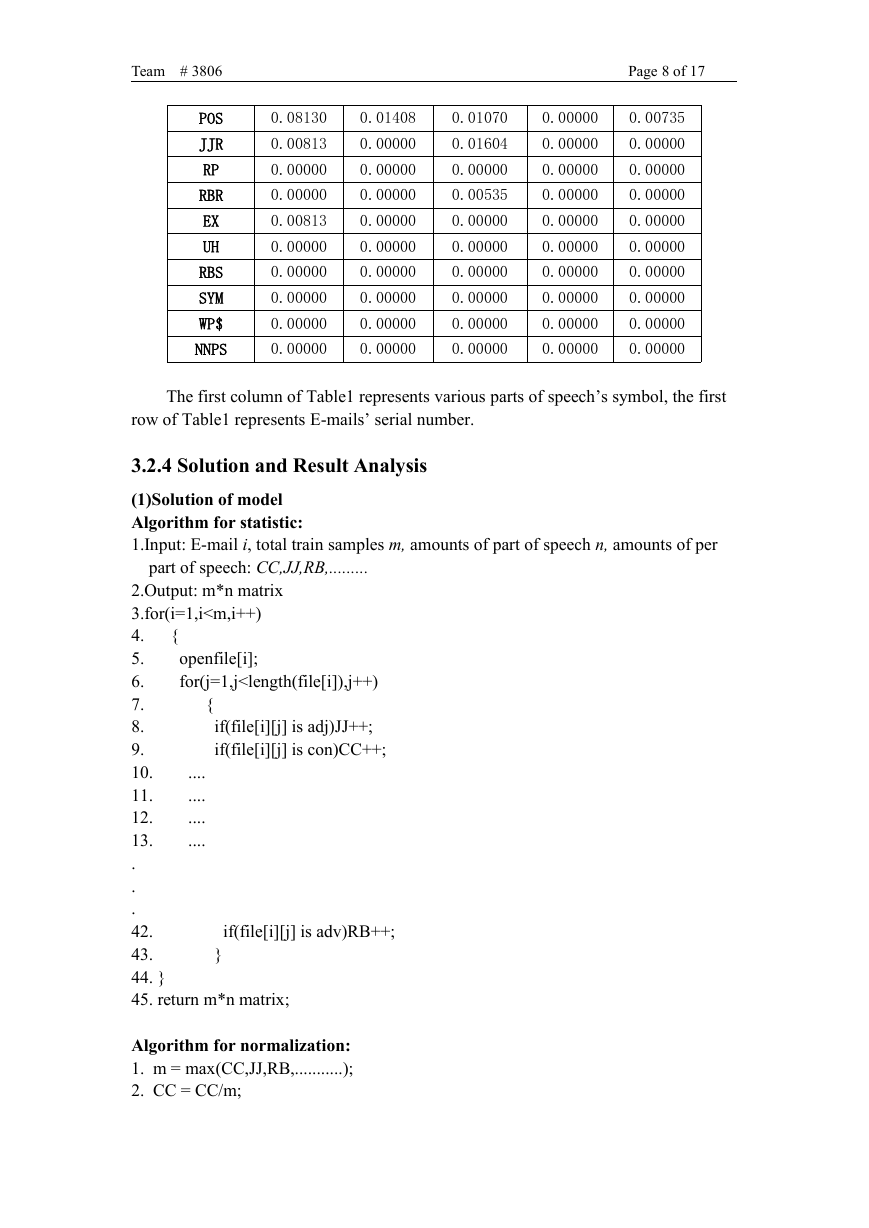

Third, taking the count of the most frequent part of speech in each E-mail as one unit, we normalized the counts of the remaining parts of speech in turn and tabulated them as in Table 1.
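Written as a formula, with notation introduced here only for illustration ($c_{i,k}$ is the number of words in E-mail $i$ tagged with part of speech $k$, and $n$ is the number of parts of speech), the normalization above is

$$
x_{i,k} = \frac{c_{i,k}}{\max_{1 \le j \le n} c_{i,j}}, \qquad k = 1, \dots, n,
$$

so the most frequent part of speech in every E-mail receives the value 1. For example, with hypothetical counts of 9 for NN (the largest) and 3 for JJ, JJ is mapped to $3/9 \approx 0.33333$.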

Table 1. Fragment of the statistics after normalization

| Tag | 52759 | 53555 | 54536 | 54539 | 54542 |
|---|---|---|---|---|---|
| NN | 1.00000 | 1.00000 | 1.00000 | 1.00000 | 1.00000 |
| JJ | 0.33333 | 0.42254 | 0.33155 | 0.54545 | 0.48529 |
| CD | 0.14634 | 0.15493 | 0.10160 | 0.20455 | 0.10294 |
| IN | 0.18699 | 0.16901 | 0.35829 | 0.11364 | 0.27206 |
| VBZ | 0.09756 | 0.02817 | 0.06417 | 0.11364 | 0.04412 |
| TO | 0.12195 | 0.05634 | 0.12834 | 0.02273 | 0.08088 |
| NNP | 0.21951 | 0.01408 | 0.08021 | 0.02273 | 0.07353 |
| VBD | 0.02439 | 0.01408 | 0.11230 | 0.00000 | 0.02206 |
| FW | 0.00000 | 0.01408 | 0.02139 | 0.04545 | 0.02206 |
| VBP | 0.11382 | 0.02817 | 0.08021 | 0.15909 | 0.11029 |
| VBG | 0.04878 | 0.00000 | 0.03209 | 0.02273 | 0.05147 |
| PRP | 0.14634 | 0.04225 | 0.16043 | 0.02273 | 0.08088 |
| DT | 0.13821 | 0.05634 | 0.31551 | 0.04545 | 0.11029 |
| MD | 0.08130 | 0.05634 | 0.08021 | 0.09091 | 0.04412 |
| VB | 0.21138 | 0.11268 | 0.17647 | 0.06818 | 0.13971 |
| RB | 0.07317 | 0.07042 | 0.12299 | 0.02273 | 0.03676 |
| VBN | 0.01626 | 0.00000 | 0.08021 | 0.04545 | 0.01471 |
| CC | 0.04878 | 0.02817 | 0.04813 | 0.02273 | 0.14706 |
| PRP$ | 0.01626 | 0.02817 | 0.07487 | 0.02273 | 0.10294 |
| NNS | 0.04878 | 0.07042 | 0.13369 | 0.20455 | 0.26471 |
| JJS | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.01471 |
| WDT | 0.00813 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| WP | 0.00813 | 0.00000 | 0.01070 | 0.00000 | 0.00000 |
| PDT | 0.00000 | 0.00000 | 0.00535 | 0.00000 | 0.00000 |
| WRB | 0.00813 | 0.00000 | 0.00535 | 0.00000 | 0.00000 |
| POS | 0.08130 | 0.01408 | 0.01070 | 0.00000 | 0.00735 |
| JJR | 0.00813 | 0.00000 | 0.01604 | 0.00000 | 0.00000 |
| RP | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| RBR | 0.00000 | 0.00000 | 0.00535 | 0.00000 | 0.00000 |
| EX | 0.00813 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| UH | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| RBS | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| SYM | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| WP$ | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| NNPS | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |

The first column of Table 1 lists the part-of-speech symbols, and the first row lists the serial numbers of the E-mails.

3.2.4 Solution and Result Analysis

(1) Solution of the model

Algorithm for the statistics:

1. Input: E-mail i, total number of training samples m, number of parts of speech n, and the count of each part of speech: CC, JJ, RB, ...

{

{

openfile[i];

for(j=1,j
