
Optimal Control with Engineering Applications

Hans P. Geering

With 12 Figures


Hans P. Geering, Ph.D.

Professor of Automatic Control and Mechatronics

Measurement and Control Laboratory

Department of Mechanical and Process Engineering

ETH Zurich

Sonneggstrasse 3

CH-8092 Zurich, Switzerland

Library of Congress Control Number: 2007920933

ISBN 978-3-540-69437-3 Springer Berlin Heidelberg New York

This work is subject to copyright. All rights are reserved, whether the whole or part of the material

is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation,

broadcasting, reproduction on microfilm or in any other way, and storage in data banks. Duplication of

this publication or parts thereof is permitted only under the provisions of the German Copyright Law

of September 9, 1965, in its current version, and permission for use must always be obtained from

Springer. Violations are liable for prosecution under the German Copyright Law.

Springer is a part of Springer Science+Business Media

springer.com

© Springer-Verlag Berlin Heidelberg 2007

The use of general descriptive names, registered names, trademarks, etc. in this publication does not

imply, even in the absence of a specific statement, that such names are exempt from the relevant

protective laws and regulations and therefore free for general use.

Typesetting: Camera ready by author

Production: LE-TEX Jelonek, Schmidt & Vöckler GbR, Leipzig

Cover design: eStudio Calamar S.L., F. Steinen-Broo, Girona, Spain

SPIN 11880127

7/3100/YL - 5 4 3 2 1 0

Printed on acid-free paper


Foreword

This book is based on the lecture material for a one-semester senior-year

undergraduate or first-year graduate course in optimal control which I have

taught at the Swiss Federal Institute of Technology (ETH Zurich) for more

than twenty years. Most of the students taking this course are majoring in

control within mechanical engineering or electrical engineering.

There are also students in computer science and mathematics who take this

course for credit.

The only prerequisites for this book are the following: the reader should be familiar with

dynamics in general and with the state space description of dynamic systems

in particular. Furthermore, the reader should have a fairly sound understanding

of differential calculus.

The text mainly covers the design of open-loop optimal controls with the help

of Pontryagin’s Minimum Principle, the conversion of optimal open-loop to

optimal closed-loop controls, and the direct design of optimal closed-loop

controls using the Hamilton-Jacobi-Bellman theory.

In these areas, the text also covers two special topics which are not usually

found in textbooks: the extension of optimal control theory to matrix-valued

performance criteria and Lukes’ method for the iterative design of

approximately optimal controllers.

Furthermore, an introduction to the fantastic, but incredibly intricate field

of differential games is given. The only reason for doing this lies in the

fact that differential game theory has (exactly) one simple application,

namely the LQ differential game. It can be solved completely and it has a

very attractive connection to the H∞ method for the design of robust linear

time-invariant controllers for linear time-invariant plants. This route is

the easiest entry into H∞ theory. And I believe that every student majoring

in control should become an expert in H∞ control design, too.

The book contains a rather large variety of optimal control problems. Many

of these problems are solved completely and in detail in the body of the text.

Additional problems are given as exercises at the end of the chapters. The

solutions to all of these exercises are sketched in the Solution section at the

end of the book.


Acknowledgements

First, my thanks go to Michael Athans for enlightening me on the background

of optimal control in the first semester of my graduate studies at M.I.T. and

for allowing me to teach his course in my third year while he was on sabbatical

leave.

I am very grateful that Stephan A. R. Hepner pushed me from teaching the

geometric version of Pontryagin’s Minimum Principle along the lines of [2],

[20], and [14] (which almost no student understood because it is so easy, but

requires 3D vision) to teaching the variational approach as presented in this

text (which almost every student understands because it is so easy and does

not require any 3D vision).

I am indebted to Lorenz M. Schumann for his contributions to the material

on the Hamilton-Jacobi-Bellman theory and to Roberto Cirillo for explaining

Lukes’ method to me.

Furthermore, a large number of persons have supported me over the years. I

cannot mention all of them here. But certainly, I appreciate the continuous

support by Gabriel A. Dondi, Florian Herzog, Simon T. Keel, Christoph

M. Schär, Esfandiar Shafai, and Oliver Tanner over many years in all aspects

of my course on optimal control. Last but not least, I would like to mention my

secretary Brigitte Rohrbach who has always eagle-eyed my texts for errors

and silly faults.

Finally, I thank my wife Rosmarie for not killing me or doing any other

harm to me during the very intensive phase of turning this manuscript into

a printable form.

Hans P. Geering

Fall 2006

�

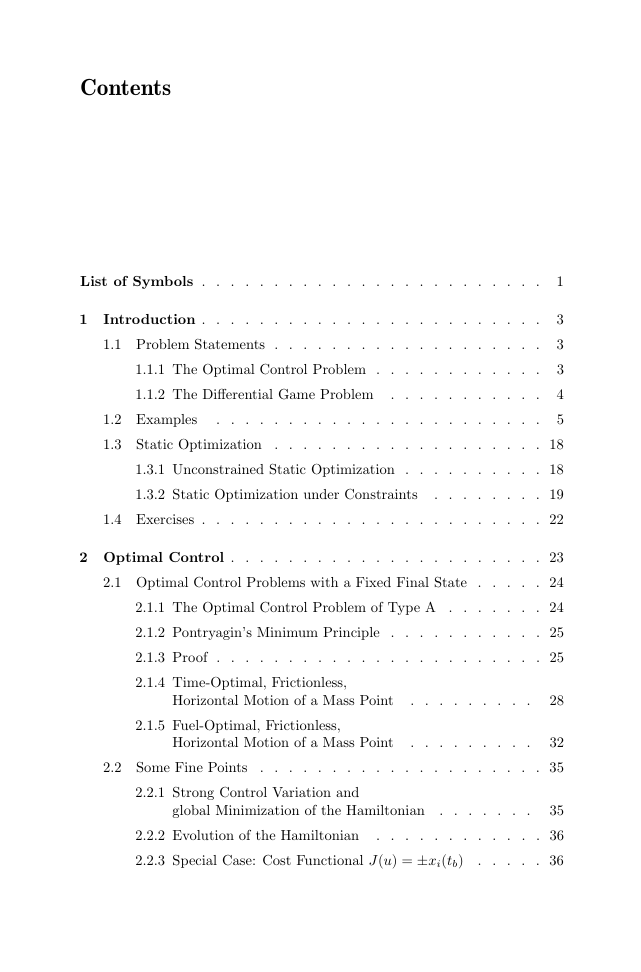

Contents

List of Symbols

1 Introduction
  1.1 Problem Statements
    1.1.1 The Optimal Control Problem
    1.1.2 The Differential Game Problem
  1.2 Examples
  1.3 Static Optimization
    1.3.1 Unconstrained Static Optimization
    1.3.2 Static Optimization under Constraints
  1.4 Exercises

2 Optimal Control
  2.1 Optimal Control Problems with a Fixed Final State
    2.1.1 The Optimal Control Problem of Type A
    2.1.2 Pontryagin’s Minimum Principle
    2.1.3 Proof
    2.1.4 Time-Optimal, Frictionless, Horizontal Motion of a Mass Point
    2.1.5 Fuel-Optimal, Frictionless, Horizontal Motion of a Mass Point
  2.2 Some Fine Points
    2.2.1 Strong Control Variation and Global Minimization of the Hamiltonian
    2.2.2 Evolution of the Hamiltonian
    2.2.3 Special Case: Cost Functional J(u) = ±x_i(t_b)

�

viii

Contents

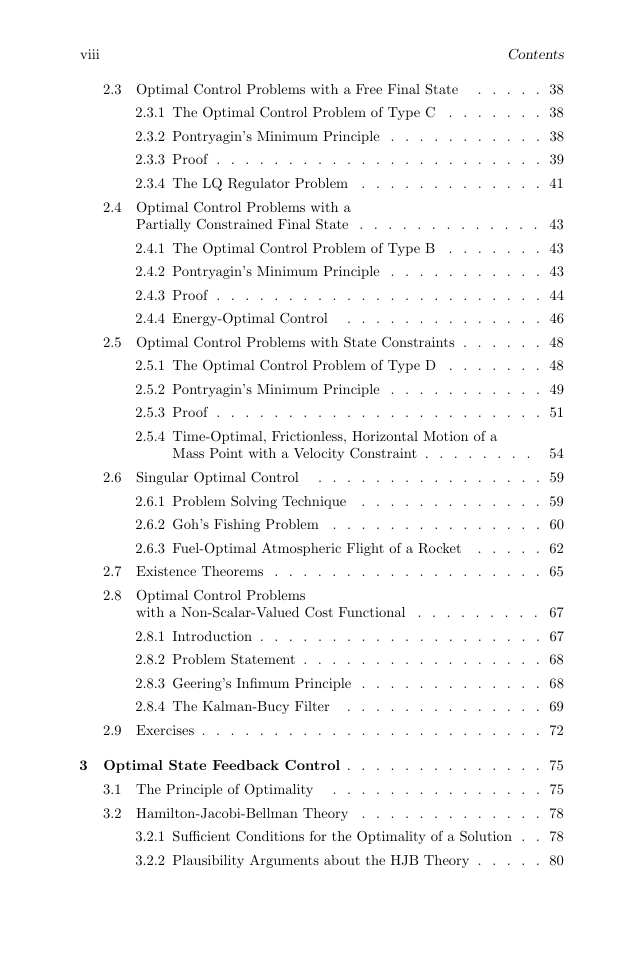

  2.3 Optimal Control Problems with a Free Final State
    2.3.1 The Optimal Control Problem of Type C
    2.3.2 Pontryagin’s Minimum Principle
    2.3.3 Proof
    2.3.4 The LQ Regulator Problem
  2.4 Optimal Control Problems with a Partially Constrained Final State
    2.4.1 The Optimal Control Problem of Type B
    2.4.2 Pontryagin’s Minimum Principle
    2.4.3 Proof
    2.4.4 Energy-Optimal Control
  2.5 Optimal Control Problems with State Constraints
    2.5.1 The Optimal Control Problem of Type D
    2.5.2 Pontryagin’s Minimum Principle
    2.5.3 Proof
    2.5.4 Time-Optimal, Frictionless, Horizontal Motion of a Mass Point with a Velocity Constraint
  2.6 Singular Optimal Control
    2.6.1 Problem Solving Technique
    2.6.2 Goh’s Fishing Problem
    2.6.3 Fuel-Optimal Atmospheric Flight of a Rocket
  2.7 Existence Theorems
  2.8 Optimal Control Problems with a Non-Scalar-Valued Cost Functional
    2.8.1 Introduction
    2.8.2 Problem Statement
    2.8.3 Geering’s Infimum Principle
    2.8.4 The Kalman-Bucy Filter
  2.9 Exercises

3 Optimal State Feedback Control
  3.1 The Principle of Optimality
  3.2 Hamilton-Jacobi-Bellman Theory
    3.2.1 Sufficient Conditions for the Optimality of a Solution
    3.2.2 Plausibility Arguments about the HJB Theory
