Hands-On Exercises

Cloudera Custom Training:

General Notes

Hands-On Exercise: Query Hadoop Data with Apache Impala

Hands-On Exercise: Access HDFS with the Command Line and Hue

Hands-On Exercise: Run a YARN Job

Hands-On Exercise: Explore RDDs Using the Spark Shell

Hands-On Exercise: Process Data Files with Apache Spark

Hands-On Exercise: Use Pair RDDs to Join Two Datasets

Hands-On Exercise: Write and Run an Apache Spark Application

Hands-On Exercise: Configure an Apache Spark Application

Hands-On Exercise: View Jobs and Stages in the Spark Application UI

Hands-On Exercise: Persist an RDD

Hands-On Exercise: Implement an Iterative Algorithm with Apache Spark

Hands-On Exercise: Use Apache Spark SQL for ETL

Hands-On Exercise: Write an Apache Spark Streaming Application

Hands-On Exercise: Process Multiple Batches with Apache Spark Streaming

Hands-On Exercise: Process Apache Kafka Messages with Apache Spark Streaming

Appendix A: Enabling Jupyter Notebook for PySpark

Appendix B: Managing Services on the Course Virtual Machine

Copyright © 2010-2016 Cloudera, Inc. All rights reserved.

Not to be reproduced or shared without prior written consent from Cloudera.

General Notes

Points to Note while Working in the Virtual Machine

Cloudera’s training courses use a Virtual Machine running the CentOS Linux distribution. This VM has CDH installed in pseudo-distributed mode. Pseudo-distributed mode is a way of running Hadoop in which all Hadoop daemons run on the same machine. It is, essentially, a cluster consisting of a single machine. It works just like a larger Hadoop cluster; the main difference is that the HDFS block replication factor is set to 1, since there is only a single DataNode available.
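To check the replication setting on the VM yourself, you can ask the HDFS client for its configured value. The sketch below wraps two standard hdfs subcommands in a hypothetical helper named show_replication (it is not part of the course scripts). The expectation that it prints 1 on the course VM follows from the paragraph above, not from a captured run.

```shell
# show_replication is a hypothetical helper, not part of the course scripts.
# It prints the default block replication factor (dfs.replication) from the
# HDFS client configuration and, if a path is given, the replication factor
# recorded for that HDFS file. On the pseudo-distributed VM both should be 1.
show_replication() {
  hdfs getconf -confKey dfs.replication
  if [ -n "${1:-}" ]; then
    # %r is the replication-factor placeholder for `hdfs dfs -stat`.
    hdfs dfs -stat %r "$1"
  fi
}
```

Run show_replication on its own to see only the configured default, or pass the path of any existing HDFS file to see the factor actually recorded for that file.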

• The Virtual Machine (VM) logs in automatically as the user training. If you log out, you can log back in as the user training with the password training.

• If you need it, the root password is training. You may be prompted for this if, for example, you want to change the keyboard layout. In general, you should not need this password, since the training user has unlimited sudo privileges.

• In some command-line steps in the exercises, you will see lines like this:

$ hdfs dfs -put united_states_census_data_2010 \
/user/training/example

The dollar sign ($) at the beginning of the command indicates the Linux shell prompt. The actual prompt includes additional information (for example, [training@localhost training_materials]$), but this is omitted from these instructions for brevity.

The backslash (\) at the end of the first line signifies that the command is not complete and continues on the next line. You can enter the code exactly as shown (on two lines), or you can enter it on a single line. If you do the latter, do not type the backslash.

• Although most students are comfortable using UNIX text editors like vi or emacs, some might prefer a graphical text editor. To invoke the graphical editor from the command line, type gedit followed by the path of the file you wish to edit.


Appending & to the command allows you to type additional commands while the editor is still open. Here is an example of how to edit a file named myfile.txt:

$ gedit myfile.txt &
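You can try these command-line conventions safely in any terminal. This sketch uses echo in place of the real hdfs command, so nothing is copied to HDFS. It shows that the two-line and one-line forms of the example command give the shell exactly the same words, and that a trailing & returns the prompt immediately while the job keeps running in the background (sleep stands in for gedit here). When typing these lines yourself, leave out the leading $ prompt.

```shell
# A backslash immediately before the line break continues the command on the
# next line; the shell sees the same words as in the one-line form.
two_lines=$(echo hdfs dfs -put united_states_census_data_2010 \
  /user/training/example)
one_line=$(echo hdfs dfs -put united_states_census_data_2010 /user/training/example)
echo "$two_lines"
echo "$one_line"

# A trailing & starts the command as a background job, so the shell accepts
# the next command right away (sleep stands in for a long-running gedit).
sleep 2 &
bg_pid=$!
echo "Background job $bg_pid started; the prompt is free for other commands."
wait "$bg_pid"   # wait here only so this example finishes cleanly
```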

Points to Note during the Exercises

Directories

• The main directory for the exercises is ~/training_materials/devsh/exercises. Each directory under that one corresponds to an exercise or set of exercises; the instructions refer to it as “the exercise directory.” Any scripts or files required for the exercise (other than data) are in the exercise directory.

• Within each exercise directory you may find the following subdirectories:

  • solution: contains solution code for each exercise.

  • stubs: a few of the exercises depend on provided starter files containing skeleton code.

  • Maven project directories: for exercises in which you must write Scala classes, you have been provided with preconfigured Maven project directories. Within these projects are two packages: stubs, where you will do your work using starter skeleton classes; and solution, containing the solution class.

• Data files used in the exercises are in ~/training_materials/data. Usually you will upload the files to HDFS before working with them.

• The VM defines a few environment variables that are used in place of longer paths in the instructions. When you run a command in the terminal, the shell replaces each variable with its value, so you can enter commands faster and with less typing.

• The two environment variables for this course are $DEVSH and $DEVDATA. Under $DEVSH you can find exercises, examples, and scripts.

• You can always use the echo command if you would like to see the value of an environment variable:


$ echo $DEVSH
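The sketch below shows how the variables stand in for longer paths. On the course VM, DEVSH and DEVDATA are already defined. The fallback values are an assumption inferred from the directory notes above (exercises under ~/training_materials/devsh/exercises, data under ~/training_materials/data) and only take effect if a variable is unset, for example outside the VM.

```shell
# ASSUMPTION: fallback values inferred from the directory notes; on the course
# VM both variables are already set, so these defaults are not used there.
DEVSH="${DEVSH:-$HOME/training_materials/devsh}"
DEVDATA="${DEVDATA:-$HOME/training_materials/data}"

# Print both values, as `echo $DEVSH` does.
echo "DEVSH=$DEVSH"
echo "DEVDATA=$DEVDATA"

# The shell expands the variable before running the command, so this is
# shorthand for the full path of the main exercises directory.
exercises_dir="$DEVSH/exercises"
echo "Exercises: $exercises_dir"

# List the exercise directories if they exist on this machine.
if [ -d "$exercises_dir" ]; then
  ls "$exercises_dir"
fi
```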

Step-by-Step Instructions

As the exercises progress and you gain more familiarity with the tools and environment, we provide fewer step-by-step instructions; as in the real world, we merely give you a requirement and it’s up to you to solve the problem! Feel free to refer to the hints or solutions provided, ask your instructor for assistance, or consult with your fellow students.

Bonus Exercises

There are additional challenges for some of the hands-on exercises. If you finish the main exercise, please attempt the additional steps.

Catch-Up Script

If you are unable to complete an exercise, we have provided a script to catch you up automatically. Each exercise has instructions for running the catch-up script, where applicable.

$ $DEVSH/scripts/catchup.sh

The script will prompt for which exercise you are starting; it will set up all the required data as if you had completed all the previous exercises.

Warning: If you run the catch-up script, you may lose your work. (For example, all data will be deleted from HDFS.)
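Because the catch-up script may delete all of your data from HDFS, you might copy anything you want to keep to the local file system first. This is a minimal sketch, assuming your work lives under your HDFS home directory (/user/training on the VM); the helper name backup_hdfs_home and the local directory ~/hdfs_backup are hypothetical. It relies only on the standard hdfs dfs -get copy-to-local command, and writes each backup to a new timestamped directory so that an earlier copy is never overwritten.

```shell
# backup_hdfs_home is a hypothetical helper, not part of the course scripts.
# It copies your HDFS home directory into a new, timestamped local directory.
backup_hdfs_home() {
  local src="/user/$(whoami)"                          # /user/training on the VM
  local dest="$HOME/hdfs_backup/$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$dest"
  hdfs dfs -get "$src" "$dest/" && echo "Copied $src to $dest"
}
```

For example, backup_hdfs_home && $DEVSH/scripts/catchup.sh runs the catch-up script only if the copy succeeded.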


Hands-On Exercise: Query Hadoop Data with Apache Impala

Files and Data Used in This Exercise:

Impala/Hive table: device

In this exercise, you will use the Hue Impala Query Editor to explore data in a Hadoop cluster.

This exercise is intended to help you become familiar with the course Virtual Machine and with Hue, including a brief look at the Impala Query Editor.

Using the Hue Impala Query Editor

1. Start Firefox on the VM using the shortcut provided on the main menu panel at the top of the screen.

2. Click the Hue bookmark, or visit http://localhost:8888/.

3. Because this is the first time anyone has logged in to Hue on this server, you will be prompted to create a new user account. Enter username training and password training, and then click Create account. (If prompted, you may click Remember.)

• Note: When you first log in to Hue, you may see a misconfiguration warning. This is because, to save space, not all the services Hue depends on are installed on the course VM. You can disregard the message.

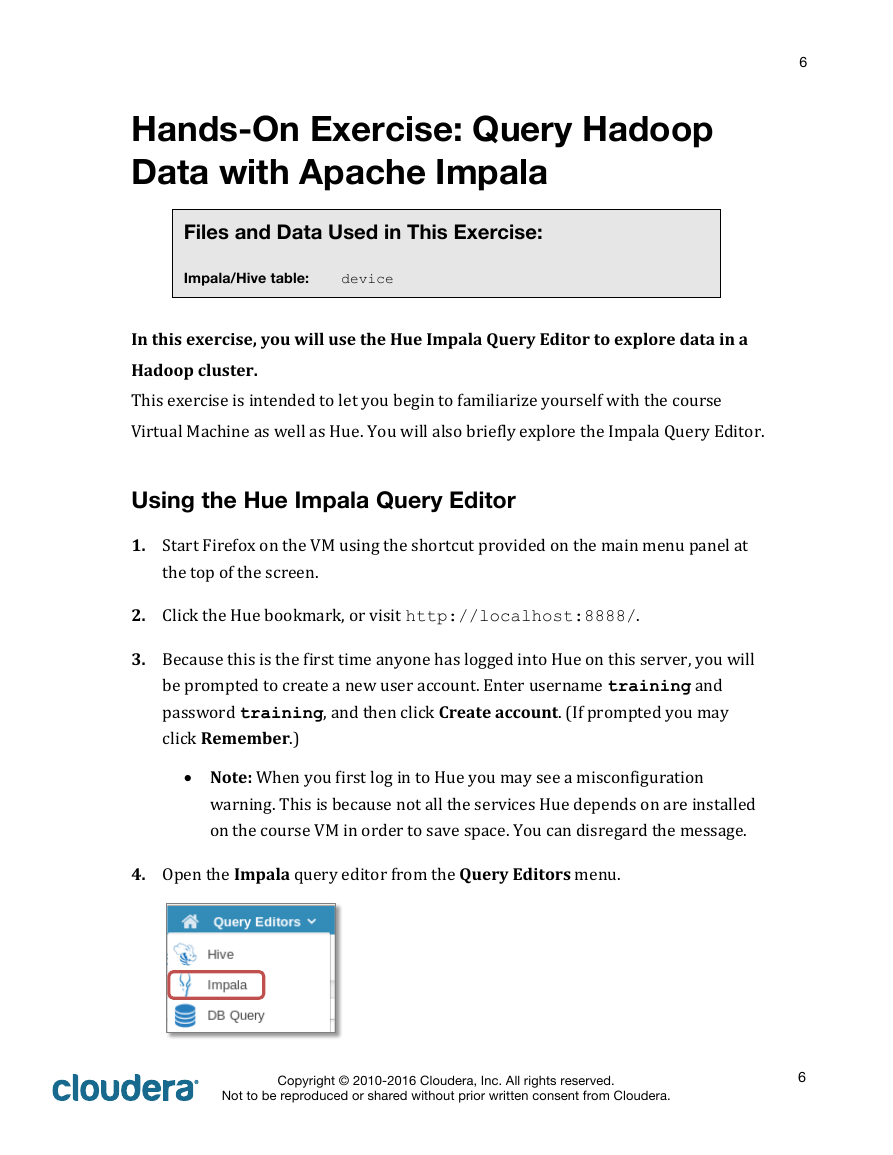

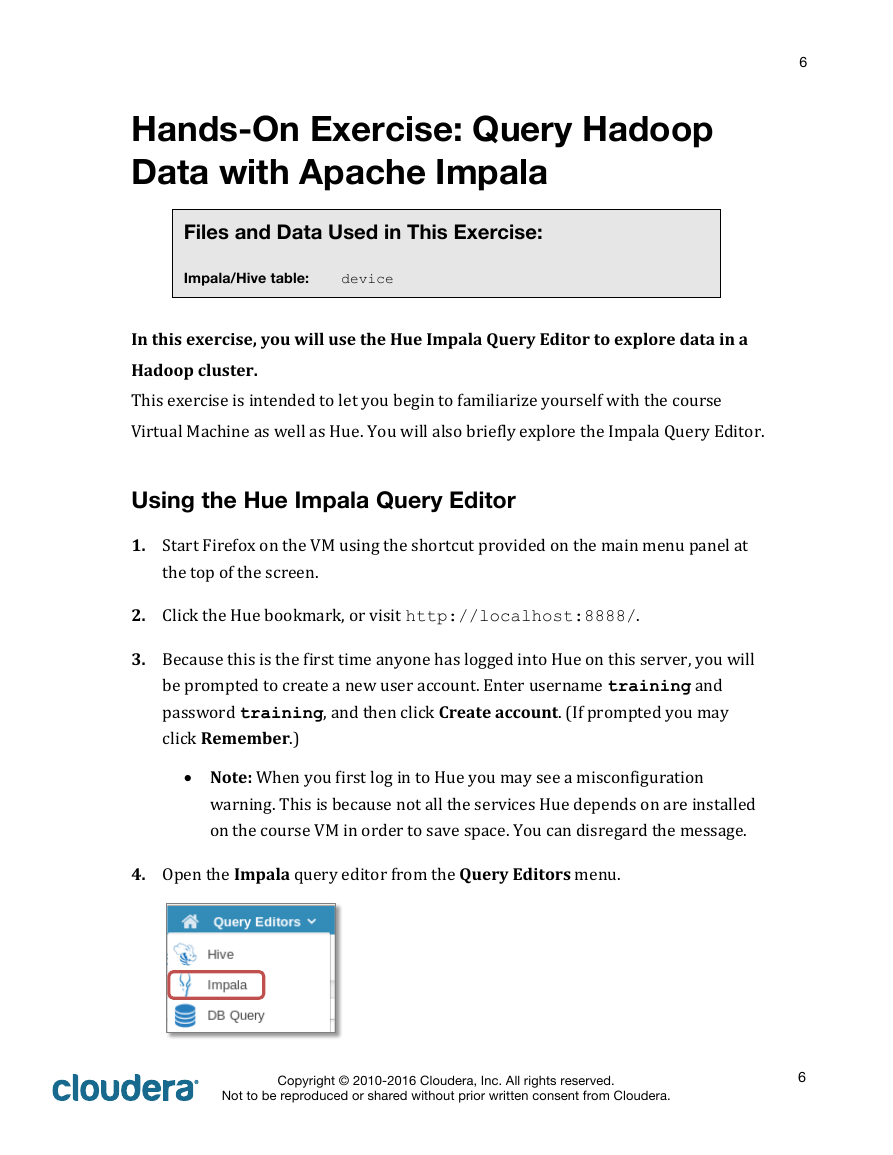

4. Open the Impala query editor from the Query Editors menu.


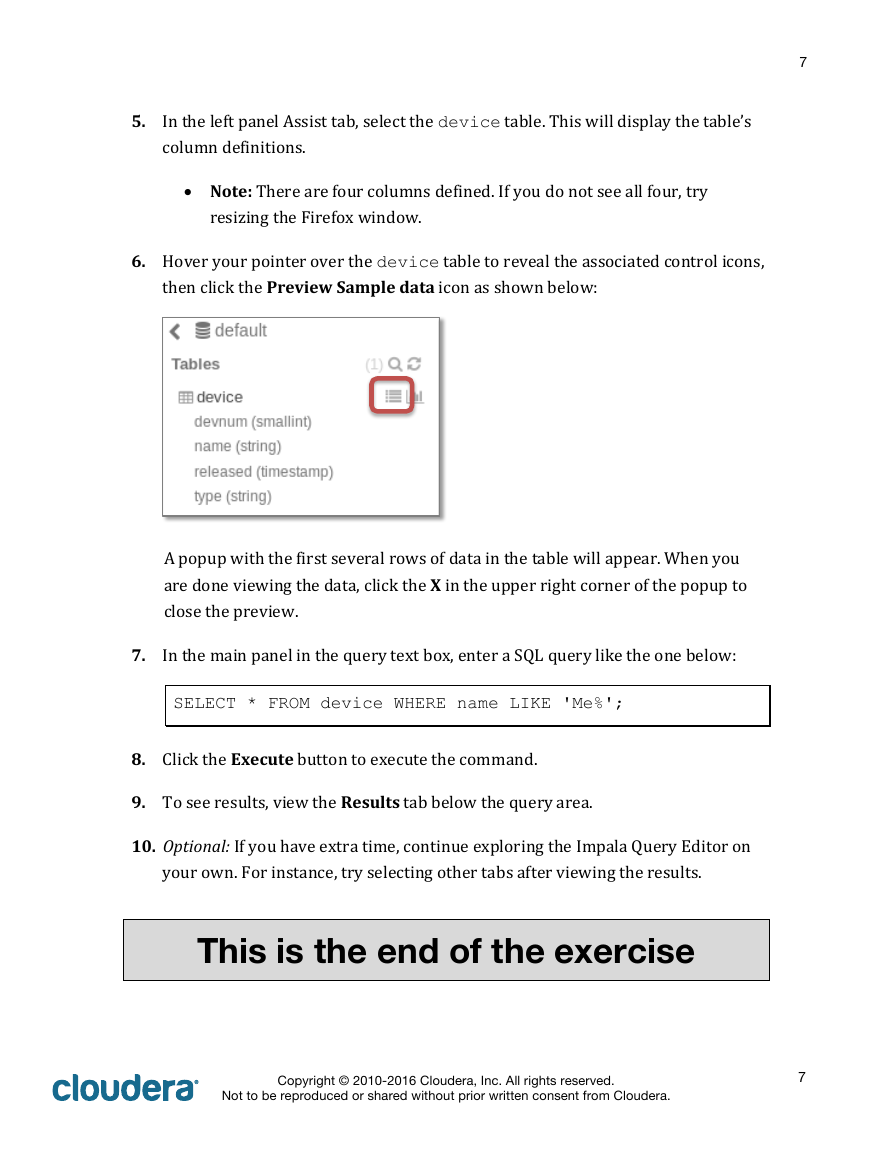

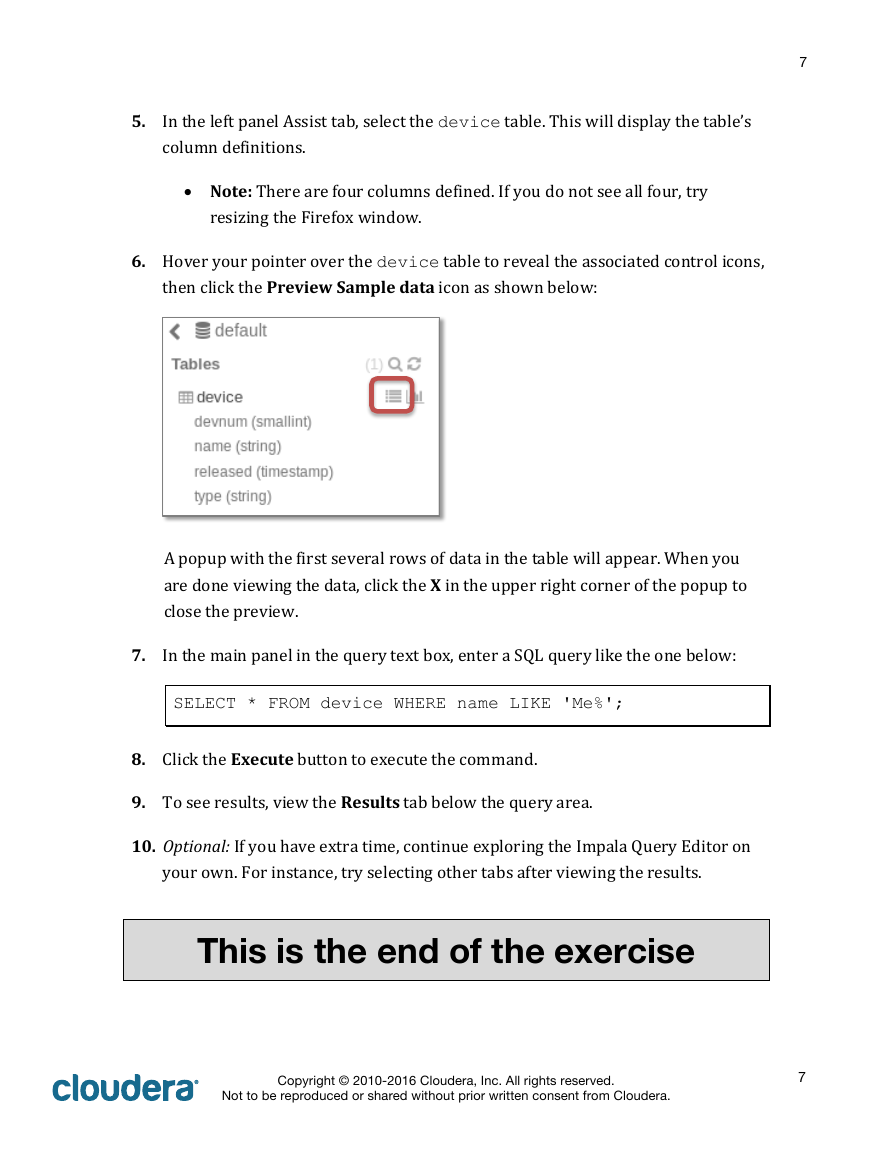

5. In the left panel Assist tab, select the device table. This will display the table’s column definitions.

• Note: There are four columns defined. If you do not see all four, try resizing the Firefox window.

6. Hover your pointer over the device table to reveal the associated control icons, then click the Preview Sample data icon as shown below:

A popup with the first several rows of data in the table will appear. When you are done viewing the data, click the X in the upper right corner of the popup to close the preview.

7. In the main panel in the query text box, enter a SQL query like the one below:

SELECT * FROM device WHERE name LIKE 'Me%';

8. Click the Execute button to run the query.

9. To see the results, view the Results tab below the query area.

10. Optional: If you have extra time, continue exploring the Impala Query Editor on your own. For instance, try selecting other tabs after viewing the results.
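The same query can also be run from a terminal with impala-shell, Impala’s command-line client, instead of Hue. In the LIKE pattern, % matches any sequence of characters, so 'Me%' selects every device whose name begins with “Me”. The helper name query_devices and its prefix argument are hypothetical, and the sketch assumes impala-shell can reach the Impala daemon on the VM with its default connection settings.

```shell
# query_devices is a hypothetical helper, not part of the course scripts.
# It runs the exercise query with impala-shell; -q runs a single query and
# exits. The optional first argument replaces the "Me" name prefix and is
# inserted into the SQL verbatim, so pass plain text without quotes.
query_devices() {
  local prefix="${1:-Me}"
  impala-shell -q "SELECT * FROM device WHERE name LIKE '${prefix}%';"
}
```

Calling query_devices with no argument runs exactly the query from step 7.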

This is the end of the exercise


Hands-On Exercise: Access HDFS with the Command Line and Hue

Files and Data Used in This Exercise:

Data files (local):

$DEVDATA/kb/*

$DEVDATA/base_stations.tsv

In this exercise, you will practice working with HDFS, the Hadoop Distributed File System.

You will use the HDFS command line tool and the Hue File Browser web-based interface to manipulate files in HDFS.

Exploring the HDFS Command Line Interface

The simplest way to interact with HDFS is by using the hdfs command. To execute filesystem commands within HDFS, use the hdfs dfs command.

1. Open a terminal window by double-clicking the Terminal icon on the desktop.

2. Enter:

$ hdfs dfs -ls /

This shows you the contents of the root directory in HDFS. There will be multiple entries, one of which is /user. Each user has a “home” directory under this directory, named after their username. Your username in this course is training, so your home directory is /user/training.

3. Try viewing the contents of the /user directory by running:

$ hdfs dfs -ls /user

You will see your home directory in the directory listing.
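The listing steps can be collected into one small function. Because every home directory is /user/<username>, the sketch builds the path from whoami instead of hard-coding /user/training. It also relies on standard HDFS shell behavior: hdfs dfs -ls with no path lists your home directory. The function name hdfs_tour is hypothetical.

```shell
# hdfs_tour is a hypothetical helper, not part of the course scripts.
# It repeats the two listing steps above and then lists your home directory.
hdfs_tour() {
  local home_dir="/user/$(whoami)"   # /user/training on the course VM
  echo "== / =="
  hdfs dfs -ls /
  echo "== /user =="
  hdfs dfs -ls /user
  echo "== $home_dir =="
  # With no path argument, -ls lists the home directory, so this is
  # equivalent to: hdfs dfs -ls "$home_dir"
  hdfs dfs -ls
}
```

On a fresh VM the home directory listing may be empty, since you have not uploaded any files yet.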
