OPENNI MIGRATION GUIDE

OpenNI/NiTE 2 Migration Guide

Transitioning from OpenNI/NiTE 1.5 to OpenNI/NiTE 2

Document Version 1.0, March 2013

Disclaimer and Proprietary Information Notice

The information contained in this document is subject to change without notice and does not represent a commitment by PrimeSense Ltd. PrimeSense Ltd. and its subsidiaries make no warranty of any kind with regard to this material, including, but not limited to implied warranties of merchantability and fitness for a particular purpose whether arising out of law, custom, conduct or otherwise.

While the information contained herein is assumed to be accurate, PrimeSense Ltd. assumes no responsibility for any errors or omissions contained herein, and assumes no liability for special, direct, indirect or consequential damage, losses, costs, charges, claims, demands, fees or expenses, of any nature or kind, which are incurred in connection with the furnishing, performance or use of this material.

This document contains proprietary information, which is protected by U.S. and international copyright laws.

All rights reserved. No part of this document may be reproduced, photocopied or translated into another language without the prior written consent of PrimeSense Ltd.

License Notice

OpenNI is written and distributed under the Apache License, which means that its source code is freely distributed and available to the general public.

You may obtain a copy of the License at: http://www.apache.org/licenses/LICENSE-2.0

NiTE is a proprietary software item of PrimeSense and is written and licensed under the NiTE License terms, which might be amended from time to time. You should have received a copy of the NiTE License along with NiTE.

The latest version of the NiTE License can be accessed at: http://www.primesense.com/solutions/nite-middleware/nite-licensing-terms/

Trademarks

PrimeSense, the PrimeSense logo, Natural Interaction, OpenNI, and NiTE are trademarks and

registered trademarks of PrimeSense Ltd. Other brands and their products are trademarks or

registered trademarks of their respective holders and should be noted as such.

1 Introduction

1.1 Scope

This guide is targeted at developers using OpenNI versions that came before OpenNI 2, with particular emphasis on those developers using OpenNI 1.5.2 who are interested in transitioning to OpenNI 2. This guide is designed both as an aid to porting existing applications from the old API to the new API and as a way for experienced OpenNI developers to learn and understand the concepts that have changed in this new API.

This guide provides a general overview of all programming features that have changed. Also included is a lookup table of all classes and structures in OpenNI 1.5, showing the equivalent functionality in OpenNI 2.

1.2 Audience

The intended audience of this document is the OpenNI 1.5.x developer community interested in

moving to OpenNI 2.

1.3 Related Documentation

[1] NiTE 2 Programmer's Guide, PrimeSense.

1.4 Support

The first line of support for OpenNI/NiTE developers is the OpenNI.org web site. There you will

find a wealth of development resources, including ready-made sample solutions and a large and

lively community of OpenNI/NiTE developers. See:

http://www.OpenNI.org

If you have any questions or require assistance that you have not been able to obtain at

OpenNI.org, please contact PrimeSense customer support at:

Support@primesense.com

2 Overview

2.1 New OpenNI Design Philosophy

OpenNI 2 represents a major change in the underlying design philosophy of OpenNI. A careful analysis of the previous OpenNI API, version 1.5, revealed that it had many features that were rarely or never used by the developer community. When designing OpenNI 2, PrimeSense endeavored to greatly reduce the complexity of the API. Features have been redesigned around the basic functionality that was of most interest to the developer community. Of particular importance, OpenNI 2 vastly simplifies the interface for communicating with depth sensors.

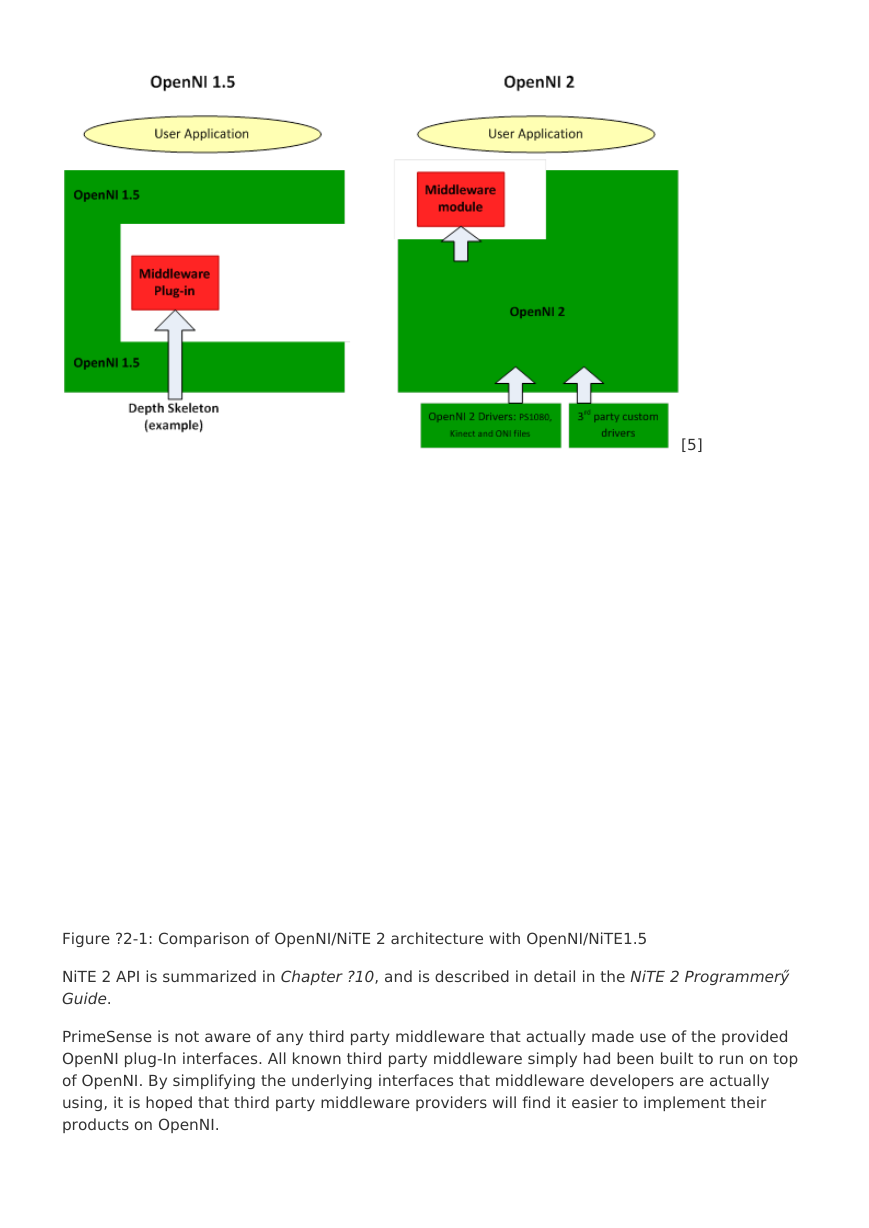

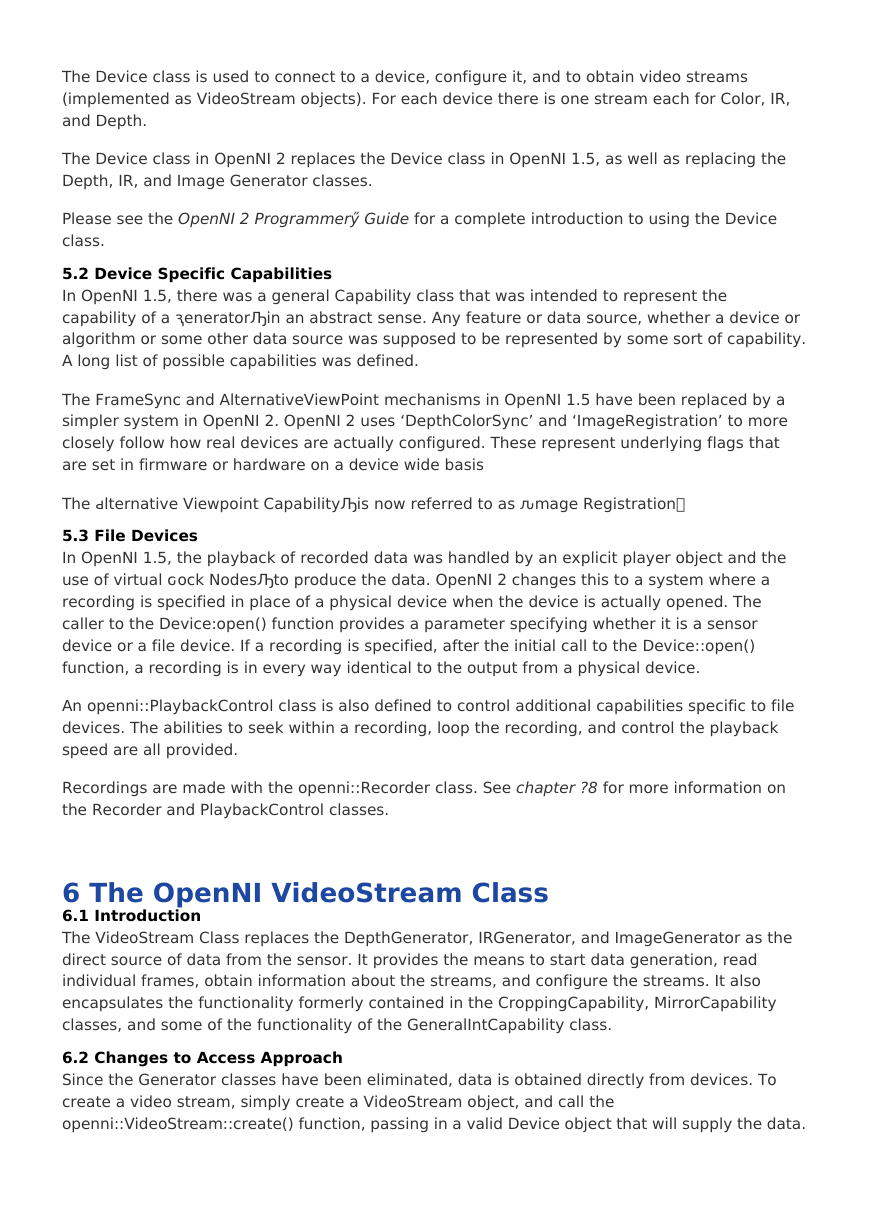

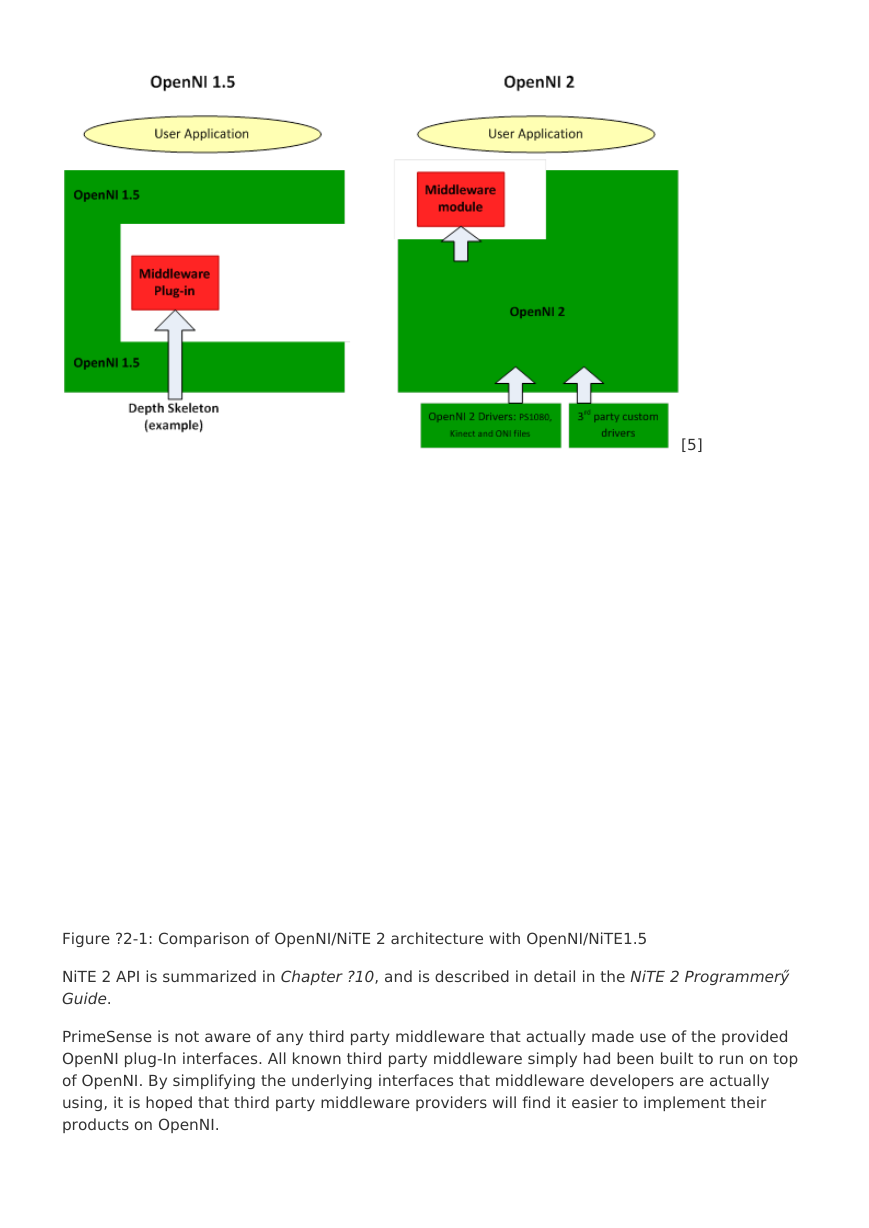

2.2 Simplified Middleware Interface

The plug-in architecture of the original OpenNI has been removed completely. OpenNI 2 is now

strictly an API for communicating with sources of depth and image information via underlying

drivers. PrimeSense NiTE middleware algorithms for interpreting depth information are now

available as a standalone middleware package on top of OpenNI, complete with their own API.

Previously, the API was through OpenNI only and you included NiTE functionality via plug-ins.

The following high-level diagram compares the OpenNI/NiTE 2 architecture with the OpenNI/NiTE 1.5 architecture.

Figure 2-1: Comparison of OpenNI/NiTE 2 architecture with OpenNI/NiTE 1.5

The NiTE 2 API is summarized in Chapter 10 and is described in detail in the NiTE 2 Programmer's Guide.

PrimeSense is not aware of any third-party middleware that actually made use of the provided OpenNI plug-in interfaces. All known third-party middleware had simply been built to run on top of OpenNI. By simplifying the underlying interfaces that middleware developers are actually using, it is hoped that third-party middleware providers will find it easier to implement their products on OpenNI.

2.3 Simplified Data Types

OpenNI 1.5 had a wide variety of complex data types. For example, depth maps were wrapped

in metadata. This made it more complicated to work with many types of data that, in their raw

form, are simply arrays. OpenNI 2 achieves the following:

Unifies the data representations for IR, RGB, and depth data

Provides access to the underlying array

Eliminates unused or irrelevant metadata

Allows for the possibility of not needing to double buffer incoming data

OpenNI 2 solves the double buffering problem by performing implicit double buffering. Due to the way that frames were handled in OpenNI 1.5, the underlying SDK was always forced to double buffer all data coming in from the sensor. This caused implementation, complexity, and performance problems. The data type simplification in OpenNI 2 allows for the possibility of not needing to double buffer incoming data. However, it is still possible to double buffer if desired. OpenNI 2 allocates one or more user buffers and a work buffer.
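One way to picture the arrangement of a work buffer and a user buffer described above is the following minimal sketch. The class and all of its member names are hypothetical stand-ins and are not part of the OpenNI 2 API. The sketch only illustrates the general idea of filling a work buffer and publishing the finished frame by swapping it with the user buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical stand-in for a stream that owns one work buffer and one user buffer.
class DoubleBufferedStream {
public:
    explicit DoubleBufferedStream(std::size_t pixelCount)
        : work_(pixelCount, 0), user_(pixelCount, 0) {}

    // Plays the role of the driver: fills the work buffer, then publishes it.
    void deliverFrame(const std::vector<std::uint16_t>& sensorData) {
        assert(sensorData.size() == work_.size());
        work_ = sensorData;       // incoming data touches only the work buffer
        std::swap(work_, user_);  // the finished frame becomes the user buffer
        ++frameIndex_;
    }

    // Plays the role of the application: reads the most recently published frame.
    const std::vector<std::uint16_t>& latestFrame() const { return user_; }
    int frameIndex() const { return frameIndex_; }

private:
    std::vector<std::uint16_t> work_;
    std::vector<std::uint16_t> user_;
    int frameIndex_ = 0;
};
```

Because the application only ever sees the user buffer, a partially written frame is never exposed. A real implementation would also need synchronization between the driver thread and the reader, which this single-threaded sketch leaves out.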

2.4 Transition from Data Centric to Device Centric

The overall design of the API can now be described as device centric, rather than data centric.

The concepts of Production Nodes, Production Graphs, Generators, Capabilities, and other such

data centric concepts, have been eliminated in favor of a much simpler model that provides

simple and direct access to the underlying devices and the data they produce. The functionality

provided by OpenNI 2 is generally the same as that provided by OpenNI 1.5.2, but the

complicated metaphors for accessing that functionality are gone.

2.5 Easier to Learn and Understand

It is expected that the new API will be much easier for programmers to learn and begin using.

OpenNI 1.5.2 had nearly one hundred classes, as well as over one hundred supporting data

structures and enumerations. In OpenNI 2, this number has been reduced to roughly a dozen,

with another dozen or so supporting data types. The core functionality of the API can be

understood by learning about just four central classes:

openni::OpenNI

openni::Device

openni::VideoStream

openni::VideoFrameRef

Unfortunately, this redesign has required us to break backward compatibility with OpenNI 1.5.2.

The decision to do this was not made lightly; however, it was deemed necessary in order to

achieve the design goals of the new API.

2.6 Event Driven Depth Access

In OpenNI 1.5 and earlier, depth was accessed by placing a loop around a blocking function that provided new frames as they arrived. Until a new frame arrived, the thread was blocked. OpenNI 2 still has this functionality, but it also provides callback functions for event-driven depth reading. These callbacks are invoked when sensors become available (further sensors can be connected to the host system while OpenNI is running) and when depth data becomes available.

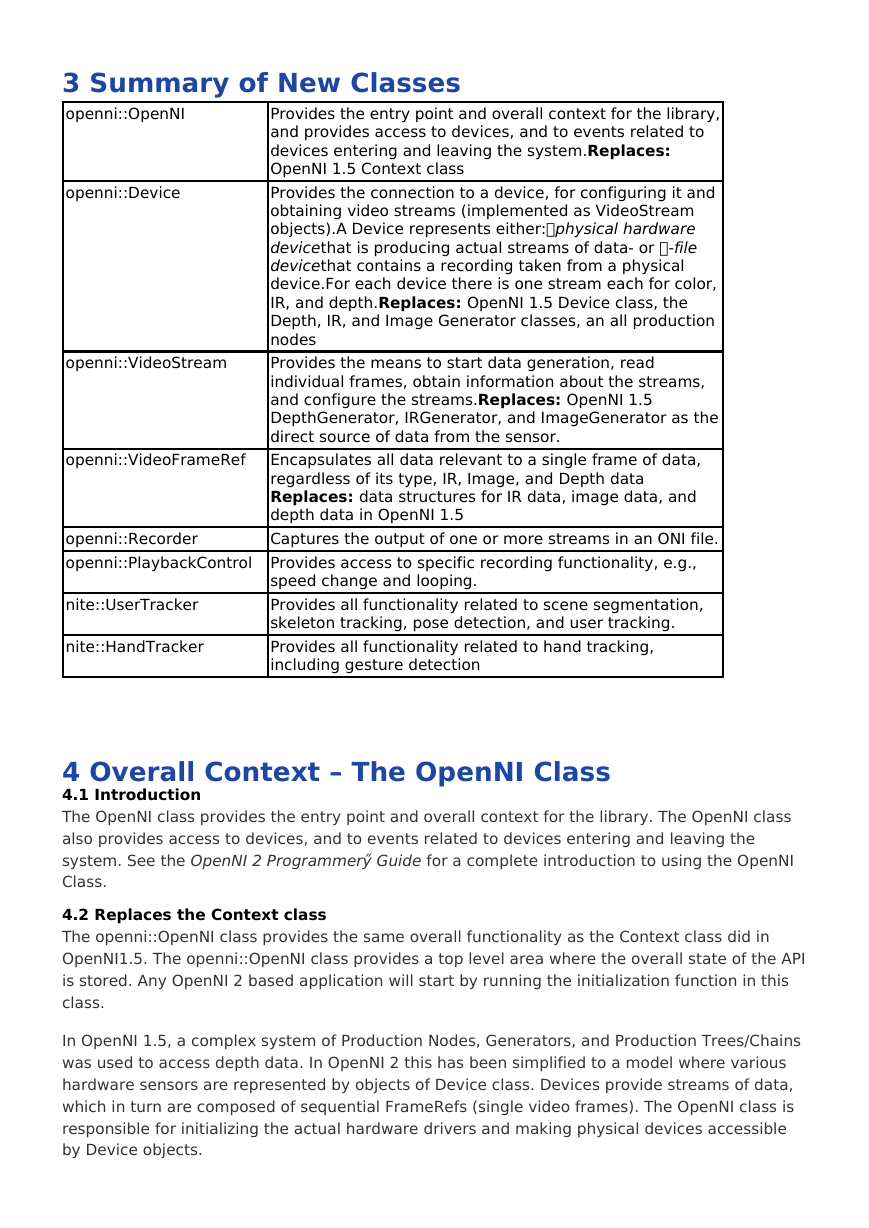

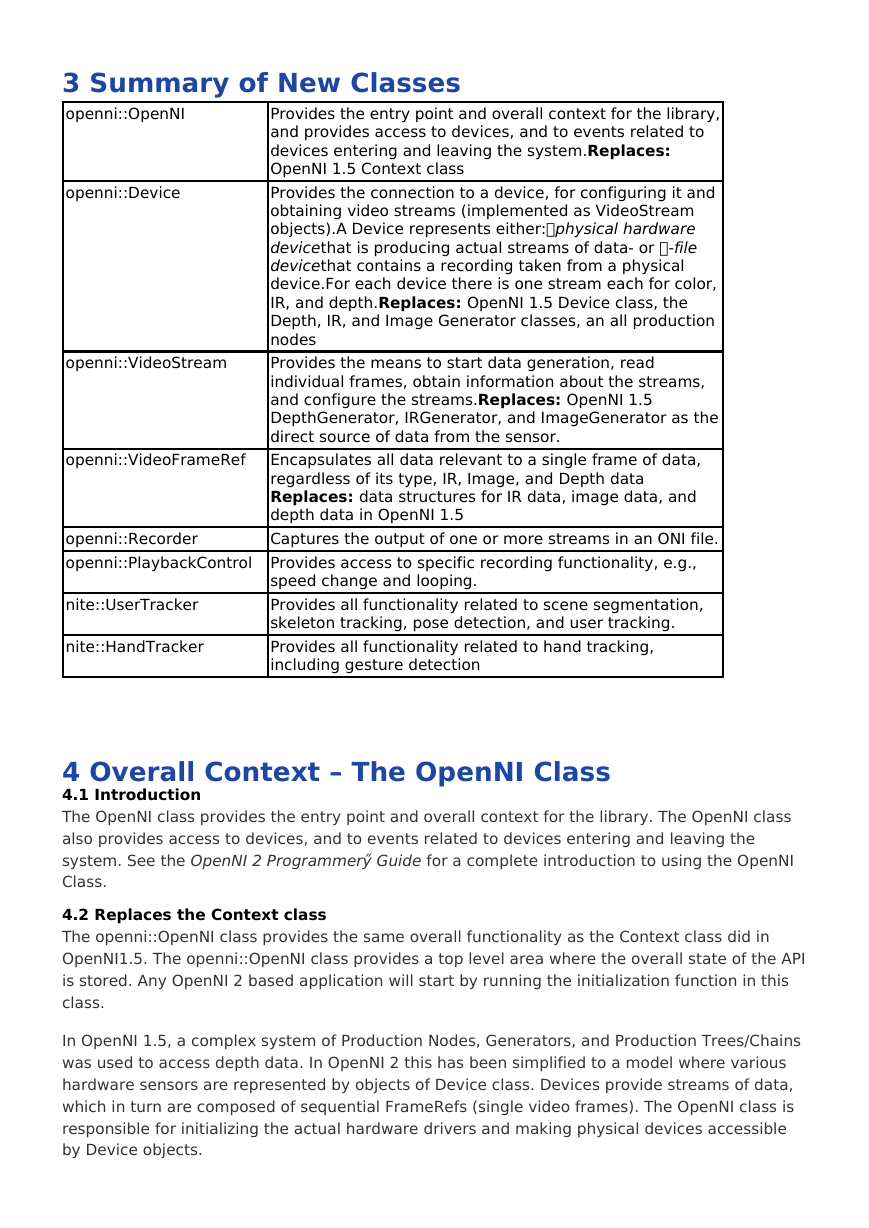

3 Summary of New Classes

openni::OpenNI

Provides the entry point and overall context for the library, and provides access to devices and to events related to devices entering and leaving the system. Replaces: OpenNI 1.5 Context class.

openni::Device

Provides the connection to a device, for configuring it and obtaining video streams (implemented as VideoStream objects). A Device represents either a physical hardware device that is producing actual streams of data, or a file device that contains a recording taken from a physical device. For each device there is one stream each for color, IR, and depth. Replaces: OpenNI 1.5 Device class, the Depth, IR, and Image Generator classes, and all production nodes.

openni::VideoStream

Provides the means to start data generation, read individual frames, obtain information about the streams, and configure the streams. Replaces: OpenNI 1.5 DepthGenerator, IRGenerator, and ImageGenerator as the direct source of data from the sensor.

openni::VideoFrameRef

Encapsulates all data relevant to a single frame, regardless of its type (IR, image, or depth). Replaces: data structures for IR data, image data, and depth data in OpenNI 1.5.

openni::Recorder

Captures the output of one or more streams in an ONI file.

openni::PlaybackControl

Provides access to specific recording functionality, e.g., speed change and looping.

nite::UserTracker

Provides all functionality related to scene segmentation, skeleton tracking, pose detection, and user tracking.

nite::HandTracker

Provides all functionality related to hand tracking, including gesture detection.

4 Overall Context – The OpenNI Class

4.1 Introduction

The OpenNI class provides the entry point and overall context for the library. The OpenNI class also provides access to devices, and to events related to devices entering and leaving the system. See the OpenNI 2 Programmer's Guide for a complete introduction to using the OpenNI class.

4.2 Replaces the Context class

The openni::OpenNI class provides the same overall functionality as the Context class did in OpenNI 1.5. The openni::OpenNI class provides a top-level area where the overall state of the API is stored. Any OpenNI 2 based application will start by running the initialization function in this class.

In OpenNI 1.5, a complex system of Production Nodes, Generators, and Production Trees/Chains was used to access depth data. In OpenNI 2 this has been simplified to a model where various hardware sensors are represented by objects of the Device class. Devices provide streams of data, which in turn are composed of sequential VideoFrameRef objects (single video frames). The OpenNI class is responsible for initializing the actual hardware drivers and making physical devices accessible through Device objects.

Note that openni::OpenNI is implemented as a collection of static functions. Unlike the Context

class in OpenNI 1.5, it does not need to be instantiated. Simply call the initialize() function to

make the API ready to use.

4.3 Device Events (New Functionality)

OpenNI 2 now provides the following device events:

Device added to the system

Device deleted from the system

Device reconfigured

These events are implemented using an OpenNI::Listener class and various callback registration functions. See the OpenNI 2 Programmer's Guide for details. The EventBasedRead sample provided with OpenNI 2 provides example code showing how to use this functionality.

4.4 Miscellaneous Functionality

The various error types implemented in OpenNI 1.5 via the xn::EnumerationErrors class have been replaced with simple return codes. Translation of these codes into human-readable strings is handled by openni::OpenNI.
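The combination of a static-only entry point and simple return codes can be sketched as follows. This is a minimal illustration under stated assumptions, not the OpenNI 2 headers: the namespace, the Library class, the Status values, and the deviceCount parameter are all hypothetical names chosen for the example.

```cpp
#include <string>

namespace sketch {

// Hypothetical return codes standing in for the library's status values.
enum class Status { Ok, NoDevice };

// All members are static, so the class is used without creating an instance.
class Library {
public:
    // deviceCount is a hypothetical input standing in for driver enumeration.
    static Status initialize(int deviceCount) {
        if (deviceCount <= 0) {
            lastError() = "initialize: no device found";
            return Status::NoDevice;
        }
        lastError().clear();
        return Status::Ok;
    }

    // Human-readable text for the most recent failure; empty after a success.
    static const std::string& errorString() { return lastError(); }

private:
    // Function-local static keeps the shared state inside the class.
    static std::string& lastError() {
        static std::string text;
        return text;
    }
};

}  // namespace sketch
```

The caller checks the returned code first and asks for the descriptive string only when something went wrong, which is the pattern that replaces the dedicated error-collection object of the older API.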

OpenNI 1.5 provided a specific xn::Version class and XnVersion structure to deal with API version information. In OpenNI 2, these are replaced by the OniVersion structure, which stores the API version. This information is accessed via a simple call to the openni::OpenNI::getVersion() function.

5 The OpenNI Device Class

5.1 Introduction

In OpenNI 1.5, data was produced by “Production Nodes”. There was a mechanism whereby individual Production Nodes could specify dependency on each other, but in principle there was (for example) no difference between a Depth Generator node that was providing data from a camera, and a Hands Generator node creating hand points from that same data. The end application was not supposed to care whether the hand points it was using were generated from a depth map, or some other data source.

The old approach had a certain symmetry in how data was accessed, but it ignored the underlying reality that in all real cases the raw data produced by a hardware device is either an RGB video stream, an IR stream, or a Depth stream. All other data is produced directly from these streams by middleware.

OpenNI 2 has switched to a metaphor that much more closely mimics the real life situation. In

doing so, we were able to reduce the complexity of interacting with hardware, the data it

produces, and the middleware that acts on that hardware.

The base of the new hierarchy is the Device class. A Device represents either:

A physical hardware device that is producing actual streams of data

- or -

A file device that contains a recording taken from a physical device.

The Device class is used to connect to a device, configure it, and to obtain video streams

(implemented as VideoStream objects). For each device there is one stream each for Color, IR,

and Depth.

The Device class in OpenNI 2 replaces the Device class in OpenNI 1.5, as well as replacing the

Depth, IR, and Image Generator classes.

Please see the OpenNI 2 Programmer's Guide for a complete introduction to using the Device class.

5.2 Device Specific Capabilities

In OpenNI 1.5, there was a general Capability class that was intended to represent the capability of a “Generator” in an abstract sense. Any feature or data source, whether a device, an algorithm, or some other data source, was supposed to be represented by some sort of capability. A long list of possible capabilities was defined.

The FrameSync and AlternativeViewPoint mechanisms in OpenNI 1.5 have been replaced by a simpler system in OpenNI 2. OpenNI 2 uses ‘DepthColorSync’ and ‘ImageRegistration’ to more closely follow how real devices are actually configured. These represent underlying flags that are set in firmware or hardware on a device-wide basis.

The “Alternative Viewpoint Capability” is now referred to as “Image Registration”.

5.3 File Devices

In OpenNI 1.5, the playback of recorded data was handled by an explicit player object and the use of virtual “Mock Nodes” to produce the data. OpenNI 2 changes this to a system where a recording is specified in place of a physical device when the device is actually opened. The caller of the Device::open() function provides a parameter specifying whether it is a sensor device or a file device. If a recording is specified, then after the initial call to the Device::open() function, the recording is in every way identical to the output from a physical device.

An openni::PlaybackControl class is also defined to control additional capabilities specific to file

devices. The abilities to seek within a recording, loop the recording, and control the playback

speed are all provided.

Recordings are made with the openni::Recorder class. See Chapter 8 for more information on the Recorder and PlaybackControl classes.

6 The OpenNI VideoStream Class

6.1 Introduction

The VideoStream class replaces the DepthGenerator, IRGenerator, and ImageGenerator as the direct source of data from the sensor. It provides the means to start data generation, read individual frames, obtain information about the streams, and configure the streams. It also encapsulates the functionality formerly contained in the CroppingCapability and MirrorCapability classes, and some of the functionality of the GeneralIntCapability class.

6.2 Changes to Access Approach

Since the Generator classes have been eliminated, data is obtained directly from devices. To create a video stream, simply create a VideoStream object and call the openni::VideoStream::create() function, passing in a valid Device object that will supply the data, together with the type of sensor (color, IR, or depth) the stream should read from.

Once initialized, there are two options for obtaining data: a polling loop, and event driven

access.

Polling is most similar to the functionality of the WaitXUpdateX() family of functions in OpenNI 1.5. To perform polling, call the openni::VideoStream::start() function on a properly initialized VideoStream object (that is, one for which a device has been opened and a stream has been created on that device). Then call the openni::VideoStream::readFrame() function. This function blocks until a new frame of data is read.

To access data in an event-driven manner, you need to implement a class that extends the openni::VideoStream::Listener class, and write a callback function to handle each new data frame. See the OpenNI 2 Programmer's Guide for more information.
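The difference between the two access styles can be sketched with self-contained stand-in types. Everything in the sketch namespace below is hypothetical and only mirrors the shape of the pattern described above (a listener base class with a per-frame callback, and a read function on the stream); it is not the OpenNI 2 API, and frame production is simulated synchronously rather than by a driver thread.

```cpp
#include <vector>

namespace sketch {

struct Frame { int index; };

class Stream;

// Application code derives from this class and overrides onNewFrame().
class NewFrameListener {
public:
    virtual ~NewFrameListener() = default;
    virtual void onNewFrame(Stream& stream) = 0;
};

class Stream {
public:
    void addNewFrameListener(NewFrameListener* listener) {
        listeners_.push_back(listener);
    }

    // Simulates the driver producing one frame and notifying every listener.
    void produceFrame() {
        ++latest_.index;
        for (NewFrameListener* listener : listeners_) {
            listener->onNewFrame(*this);
        }
    }

    // Polling-style read. A real SDK call would block until a frame arrives;
    // this stand-in simply returns the most recent frame.
    Frame readFrame() const { return latest_; }

private:
    Frame latest_{0};
    std::vector<NewFrameListener*> listeners_;
};

// Example listener: records how often it was called and the last frame seen.
class CountingListener : public NewFrameListener {
public:
    void onNewFrame(Stream& stream) override {
        lastIndex = stream.readFrame().index;
        ++calls;
    }
    int calls = 0;
    int lastIndex = -1;
};

}  // namespace sketch
```

In the polling style the application calls readFrame() in its own loop. In the event-driven style it registers a listener once and does its work inside the callback, so no application thread needs to sit blocked waiting for data.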

6.3 Getting Stream Information

The openni::VideoStream::getSensorInfo() function is provided to obtain a SensorInfo object for

an initialized video stream. This object allows enumeration of available video modes supported

by the sensor.

The openni::VideoMode object encapsulates the resolution, frame rate, and pixel format of a given video mode. openni::VideoStream::getVideoMode() will provide the current VideoMode setting for a given stream.

Functions are also provided by the VideoStream class that enable you to check resolution, and

obtain minimum and maximum values for a depth stream.

6.4 Configuring Streams

To change the configuration of a stream, use the openni::VideoStream::setVideoMode() function. A valid VideoMode should first be obtained from the list contained in a SensorInfo object associated with a VideoStream, and then passed in using setVideoMode().
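The "pick a mode from the supported list, then apply it" step can be sketched as below. The VideoMode struct, the PixelFormat values, and the findMode() helper are hypothetical stand-ins written for this example; they are not the OpenNI 2 types, and the list of modes would in practice come from the sensor rather than being written by hand.

```cpp
#include <vector>

namespace sketch {

// Hypothetical pixel formats, for illustration only.
enum class PixelFormat { Depth1mm, Rgb888 };

// Stand-in for a video mode: resolution, frame rate, and pixel format.
struct VideoMode {
    int width;
    int height;
    int fps;
    PixelFormat format;
};

// Returns a pointer to the first supported mode that matches the request,
// or nullptr if the sensor does not offer such a mode.
inline const VideoMode* findMode(const std::vector<VideoMode>& supported,
                                 int width, int height, int fps) {
    for (const VideoMode& mode : supported) {
        if (mode.width == width && mode.height == height && mode.fps == fps) {
            return &mode;
        }
    }
    return nullptr;
}

}  // namespace sketch
```

Choosing only from the supported list, instead of constructing an arbitrary mode, means an unsupported request is detected before anything is sent to the device.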

6.5 Mirroring Data

Instead of a “Mirroring Capability”, mirroring is now simply a flag set at the VideoStream level. Use the VideoStream::setMirroringEnabled() function to turn it on and off.

6.6 Cropping

Instead of a “Cropping Capability”, cropping is now set at the VideoStream level. This is done directly with the VideoStream::setCropping() function. The parameters to be passed are no longer encapsulated in a “Cropping” object as they were in OpenNI 1.5; they are now simply a collection of four integers.
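To make the "four integers" concrete, the following sketch applies a crop window to a frame stored as a plain row-major array. The cropFrame() function is hypothetical, and the choice of parameters (origin X, origin Y, width, height) and their order are assumptions made for the example. In OpenNI 2 the cropping is configured on the stream itself; this code only shows what such a window selects.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

namespace sketch {

// Copies the crop window out of a row-major frame.
// Precondition: the window lies entirely inside the frame.
inline std::vector<std::uint16_t> cropFrame(
        const std::vector<std::uint16_t>& frame,
        int frameWidth, int frameHeight,
        int originX, int originY, int width, int height) {
    assert(static_cast<int>(frame.size()) == frameWidth * frameHeight);
    assert(originX >= 0 && originY >= 0 && width > 0 && height > 0);
    assert(originX + width <= frameWidth && originY + height <= frameHeight);

    std::vector<std::uint16_t> out;
    out.reserve(static_cast<std::size_t>(width) * height);
    for (int y = originY; y < originY + height; ++y) {
        for (int x = originX; x < originX + width; ++x) {
            // Row-major indexing: row offset plus column.
            out.push_back(frame[static_cast<std::size_t>(y) * frameWidth + x]);
        }
    }
    return out;
}

}  // namespace sketch
```

For a 4x3 frame holding the values 0 to 11, a window with origin (1, 1), width 2, and height 2 selects the elements 5, 6, 9, and 10.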

6.7 Other Properties

The “General Int” capabilities have been replaced with simple get and set functions for integer-based sensor settings. Use of these should be rare, however, since all of the commonly used properties (e.g., mirroring, cropping, and resolution) are encapsulated by other functions rather than manipulated directly.
