QUESTION 1

You've been brought in as solutions architect to assist an enterprise customer with their migration of

an e-commerce platform to Amazon Virtual Private Cloud (VPC).

The previous architect has already deployed a 3-tier VPC. The

configuration is as follows:

VPC: vpc-2f8bc447

IGW: igw-2d8bc445

NACL: ad-208bc448

Subnets and Route Tables:

Web servers: subnet-258bc44d

Application servers: subnet-248bc44c

Database servers: subnet-9189c6f9

Route Tables:

rtb-218bc449

rtb-238bc44b

Associations:

subnet-258bc44d : rtb-218bc449

subnet-248bc44c : rtb-238bc44b

subnet-9189c6f9 : rtb-238bc44b

You are now ready to begin deploying EC2 instances into the VPC. Web servers must have direct

access to the Internet. Application and database servers cannot have direct access to the Internet.

Which configuration below will allow you the ability to remotely administer your application and

database servers, as well as allow these servers to retrieve updates from the Internet?

A. Create a bastion and NAT instance in subnet-258bc44d, and add a route from rtb-238bc44b to

the NAT instance. (Study note: this is the only option in the whole question bank that mentions NAT twice.)

B. Add a route from rtb-238bc44b to igw-2d8bc445 and add a bastion and NAT instance within

subnet-248bc44c.

C. Create a bastion and NAT instance in subnet-248bc44c, and add a route from rtb-238bc44b to

subnet-258bc44d.

D. Create a bastion and NAT instance in subnet-258bc44d, add a route from rtb-238bc44b to

igw-2d8bc445, and a new NACL that allows access between subnet-258bc44d and subnet-248bc44c.

Answer: A
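
The routing in option A can be checked by modelling the route tables as plain data. This is only a sketch: it assumes rtb-218bc449 already has a default route to igw-2d8bc445 (the question implies this but does not state it), and the NAT instance ID i-nat-example is a made-up placeholder.

```python
# Route tables from the question, modelled as {destination CIDR: target}.
# Assumption: rtb-218bc449 already sends 0.0.0.0/0 to the Internet gateway.
route_tables = {
    "rtb-218bc449": {"0.0.0.0/0": "igw-2d8bc445"},
    "rtb-238bc44b": {},
}
associations = {
    "subnet-258bc44d": "rtb-218bc449",  # web servers
    "subnet-248bc44c": "rtb-238bc44b",  # application servers
    "subnet-9189c6f9": "rtb-238bc44b",  # database servers
}

NAT_INSTANCE = "i-nat-example"  # hypothetical ID, lives in subnet-258bc44d


def default_target(subnet):
    """Return the target of the subnet's default route, or None."""
    return route_tables[associations[subnet]].get("0.0.0.0/0")


def is_public(subnet):
    """A subnet has direct Internet access if its default route is an IGW."""
    target = default_target(subnet)
    return target is not None and target.startswith("igw-")


# Option A: add a default route from rtb-238bc44b to the NAT instance.
route_tables["rtb-238bc44b"]["0.0.0.0/0"] = NAT_INSTANCE

for subnet in associations:
    print(subnet, "public" if is_public(subnet) else "private", default_target(subnet))
```

Under these assumptions only the web subnet is public, while the application and database subnets reach the Internet through the NAT instance and are administered through the bastion.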

QUESTION 2

You are designing the network infrastructure for an application server in Amazon VPC. Users will

access all the application instances from the Internet, as well as from an on-premises network.

The on-premises network is connected to your VPC over an AWS Direct Connect link. How

would you design routing to meet the above requirements?

A. Configure a single routing table with a default route via the Internet gateway. Propagate a

default route via BGP on the AWS Direct Connect customer router. Associate the routing

table with all VPC subnets.

B. Configure a single routing table with a default route via the Internet gateway.

Propagate specific routes for the on-premises networks via BGP on the AWS Direct Connect

customer router. Associate the routing table with all VPC subnets. (Study note: choose this whenever "specific routes for the on-premises networks" appears.)

C. Configure two routing tables: one that has a default route via the Internet gateway, and another that has a

default route via the VPN gateway.

Associate both routing tables with each VPC subnet.

D. Configure a single routing table with two default routes: one to the Internet via an Internet gateway, the

other to the on-premises network via the VPN gateway.

Use this routing table across all subnets in your VPC.

Answer: B
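
Option B works because a route table picks the most specific matching route, so a propagated on-premises prefix wins over the default route. A minimal sketch of that lookup follows; the CIDR 10.50.0.0/16 and the target names igw-example and vgw-example are invented for illustration.

```python
import ipaddress

# Hypothetical route table: a static default route to the Internet gateway
# plus one specific on-premises prefix propagated via BGP.
# The CIDR and both target names are made up for this example.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "igw-example"),
    (ipaddress.ip_network("10.50.0.0/16"), "vgw-example"),
]


def next_hop(destination):
    """Return the target of the most specific route matching the address."""
    address = ipaddress.ip_address(destination)
    matches = [(net, target) for net, target in ROUTES if address in net]
    best_net, best_target = max(matches, key=lambda match: match[0].prefixlen)
    return best_target


print(next_hop("10.50.3.7"))     # on-premises address
print(next_hop("203.0.113.10"))  # Internet address
```

With two default routes (option D) or a propagated default route (option A) there would be no more specific prefix to separate on-premises traffic from Internet traffic.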

QUESTION 3

Within the IAM service a GROUP is regarded as a:

A. A collection of AWS accounts

B. It's the group of EC2 machines that gain the permissions specified in the GROUP.

C. There's no GROUP in IAM, but only USERS and RESOURCES.

D. A collection of users.

Answer: D

QUESTION 4

Amazon EC2 provides a repository of public data sets that can be seamlessly integrated into AWS

cloud-based applications.

What is the monthly charge for using the public data sets?

A. A one-time charge of $10 for all the datasets.

B. $1 per dataset per month

C. $10 per month for all the datasets

D. There is no charge for using the public data sets. (Study note: this is the only question in the bank that mentions $10; choose the option that costs nothing.)

Answer: D

QUESTION 5

In the Amazon RDS Oracle DB engine, the Database Diagnostic Pack and the Database Tuning

Pack are only available with Oracle Enterprise Edition.

QUESTION 6

You have deployed a web application, targeting a global audience across multiple AWS Regions

under the domain name example.com.

You decide to use Route53 Latency-Based Routing to serve web requests to users from the

region closest to the user. To provide business continuity in the event of server downtime you

configure weighted record sets associated with two web servers in separate Availability Zones

per region. During a DR test you notice that when you disable all web servers in one of the

regions Route53 does not automatically direct all users to the other region.

What could be happening? Choose 2 answers

A. You did not set "Evaluate Target Health" to "Yes" on the latency alias resource

record set associated with example.com in the region where you disabled the servers (Study note: choose this whenever "Evaluate Target Health" appears.)

B. The value of the weight associated with the latency alias resource record set in the region with

the disabled servers is higher than the weight for the other region

C. One of the two working web servers in the other region did not pass its HTTP health check

D. Latency resource record sets cannot be used in combination with weighted resource record sets

E. You did not set up an HTTP health check for one or more of the weighted resource

record sets associated with the disabled web servers (Study note: keyword option.)

Answer: AE

QUESTION 7

An international company has deployed a multi-tier web application that relies on DynamoDB in a

single region. For regulatory reasons they need disaster recovery capability in a separate region

with a Recovery Time Objective of 2 hours and a Recovery Point Objective of 24 hours. They

should synchronize their data on a regular basis and be able to provision the web application

rapidly using CloudFormation.

The objective is to minimize changes to the existing web application, control the throughput of

DynamoDB used for the synchronization of data, and synchronize only the modified elements.

Which design would you choose to meet these requirements?

A. Use AWS Data Pipeline to schedule a DynamoDB cross region copy once a day, create a

"LastUpdated" attribute in your DynamoDB table that would represent the timestamp of

the last update and use it as a filter (Study note: choose this whenever "LastUpdated" appears.)

B. Use AWS Data Pipeline to schedule an export of the DynamoDB table to S3 in the current region

once a day, then schedule another task immediately after it that will import data from S3 to

DynamoDB in the other region

C. Use EMR and write a custom script to retrieve data from DynamoDB in the current region using a

SCAN operation and push it to DynamoDB in the second region

D. Send also each write into an SQS queue in the second region, use an auto-scaling group behind

the SQS queue to replay the write in the second region

Answer: A

QUESTION 8

Your company currently has a 2-tier web application running in an on-premises data center.

You have experienced several infrastructure failures in the past few months resulting in significant

financial losses. Your CIO is strongly considering moving the application to AWS. While working

on achieving buy-in from the other company executives, he asks you to develop a disaster

recovery plan to help improve business continuity in the short term. He specifies a target

Recovery Time Objective (RTO) of 4 hours and a Recovery Point Objective (RPO) of 1 hour or

less. He also asks you to implement the solution within 2 weeks.

Your database is 200GB in size and you have a 20Mbps Internet connection.

How would you do this while minimizing costs?

A. Create an EBS backed private AMI which includes a fresh install of your application.

Develop a CloudFormation template which includes your AMI and the required EC2, AutoScaling,

and ELB resources to support deploying the application across Multiple-Availability-Zones.

Asynchronously replicate transactions from your on-premises database to a database instance

in AWS across a secure VPN connection. (Study note: choose this whenever "Multiple-Availability-Zones" appears.)

B. Deploy your application on EC2 instances within an Auto Scaling group across multiple

availability zones. Asynchronously replicate transactions from your on-premises database to a

database instance in AWS across a secure VPN connection.

C. Create an EBS backed private AMI which includes a fresh install of your application.

Setup a script in your data center to backup the local database every 1 hour and to encrypt and

copy the resulting file to an S3 bucket using multi-part upload.

D. Install your application on a compute-optimized EC2 instance capable of supporting the

application's average load.

Synchronously replicate transactions from your on-premises database to a database instance in

AWS across a secure Direct Connect connection.

Answer: A
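
A quick calculation shows why shipping full database copies over this link (as in option C) cannot meet the 1-hour RPO, while replicating only the changes can. The sketch assumes decimal units (1 GB = 8,000 megabits) and a link that is fully available for the transfer.

```python
DATABASE_GB = 200  # from the question
LINK_MBPS = 20     # from the question
RPO_HOURS = 1      # from the question

# Assumption: decimal units and no protocol overhead or competing traffic.
database_megabits = DATABASE_GB * 8 * 1000
full_copy_hours = database_megabits / LINK_MBPS / 3600
max_gb_per_hour = LINK_MBPS * 3600 / 8 / 1000

print(f"Full copy over the link: {full_copy_hours:.1f} hours")
print(f"Most data the link can move per hour: {max_gb_per_hour:.1f} GB")
print("Full copy fits inside the RPO:", full_copy_hours <= RPO_HOURS)
```

A full copy takes roughly 22 hours, so only an approach that sends incremental changes, such as asynchronous replication, fits within an hourly window.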

QUESTION 9

You would like to create a mirror image of your production environment in another region for

disaster recovery purposes.

Which of the following AWS resources do not need to be recreated in the second region? Choose

2 answers

A. Route 53 Record Sets (Study note: choose this whenever it appears.)

B. Launch Configurations

C. EC2 Key Pairs

D. Security Groups

E. IAM Roles (Study note: choose this when the option appears on its own.)

F. Elastic IP Addresses (EIP)

Answer: AE

QUESTION 10

Your startup wants to implement an order fulfillment process for selling a personalized gadget

that needs an average of 3-4 days to produce with some orders taking up to 6 months.

You expect 10 orders per day on your first day, 1000 orders per day after 6 months and 10,000

orders after 12 months. Orders coming in are checked for consistency, then dispatched to your

manufacturing plant for production, quality control, packaging, shipment and payment processing.

If the product does not meet the quality standards at any stage of the process, employees may

force the process to repeat a step. Customers are notified via email about order status and any

critical issues with their orders such as payment failure.

Your base architecture includes AWS Elastic Beanstalk for your website with an RDS MySQL

instance for customer data and orders.

How can you implement the order fulfillment process while making sure that the emails are

delivered reliably?

A. Add a business process management application to your Elastic Beanstalk app servers and re-

use the RDS database for tracking order status.

Use one of the Elastic Beanstalk instances to send emails to customers.

B. Use SWF with an Auto Scaling group of activity workers and a decider instance in another Auto

Scaling group with min/max=1.

Use SES to send emails to customers. (Study note: choose the option where SWF and SES appear together.)

C. Use an SQS queue to manage all process tasks. Use an Auto Scaling group of EC2 instances

that poll the tasks and execute them.

Use SES to send emails to customers.

D. Use SWF with an Auto Scaling group of activity workers and a decider instance in another Auto

Scaling group with min/max=1.

Use the decider instance to send emails to customers.

Answer: B

QUESTION 11

Your company runs a customer facing event registration site. This site is built with a 3-tier

architecture with web and application tier servers and a MySQL database. The application

requires 6 web tier servers and 6 application tier servers for normal operation, but can run on a

minimum of 65% server capacity and a single MySQL database.

When deploying this application in a region with three availability zones (AZs), which

architecture provides high availability?

A. A web tier deployed across 2 AZs with 3 EC2 (Elastic Compute Cloud) instances in each AZ

inside an Auto Scaling Group behind an ELB (elastic load balancer), and an application tier

deployed across 2 AZs with 3 EC2 instances in each AZ inside an Auto Scaling Group behind an

ELB, and one RDS (Relational Database Service) instance deployed with read replicas in the

other AZ.

B. A web tier deployed across 3 AZs with 2 EC2 (Elastic Compute Cloud) instances in each AZ

inside an Auto Scaling Group behind an ELB (elastic load balancer), and an application tier

deployed across 3 AZs with 2 EC2 instances in each AZ inside an Auto Scaling Group behind an

ELB, and a Multi-AZ RDS (Relational Database Service) deployment. (Study note: choose the option where "3 AZs with 2 EC2" and Multi-AZ RDS appear together.)

C. A web tier deployed across 2 AZs with 3 EC2 (Elastic Compute Cloud) instances in each AZ

inside an Auto Scaling Group behind an ELB (elastic load balancer), and an application tier

deployed across 2 AZs with 3 EC2 instances in each AZ inside an Auto Scaling Group behind an

ELB, and a Multi-AZ RDS (Relational Database Service) deployment

D. A web tier deployed across 3 AZs with 2 EC2 (Elastic Compute Cloud) instances in each AZ inside

an Auto Scaling Group behind an ELB (elastic load balancer), and an application tier deployed

across 3 AZs with 2 EC2 instances in each AZ inside an Auto Scaling Group behind an ELB, and

one RDS (Relational Database Service) instance deployed with read replicas in the two other AZs.

Answer: B
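
The capacity requirement can be checked with simple arithmetic. The sketch below assumes the failure being planned for is the loss of one whole AZ and that Auto Scaling has not yet replaced the lost instances.

```python
NORMAL_SERVERS = 6   # per tier, from the question
MIN_CAPACITY = 0.65  # minimum fraction of normal capacity, from the question


def survives_az_loss(az_count, servers_per_az):
    """True if a tier keeps enough servers after losing one whole AZ."""
    remaining = (az_count - 1) * servers_per_az
    return remaining / NORMAL_SERVERS >= MIN_CAPACITY


for az_count, per_az in [(2, 3), (3, 2)]:
    print(f"{az_count} AZs x {per_az} servers:", survives_az_loss(az_count, per_az))
```

With 2 AZs of 3 servers, one AZ failure leaves 50% capacity; with 3 AZs of 2 servers it leaves about 67%, which clears the 65% minimum.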

QUESTION 12

Your application is using an ELB in front of an Auto Scaling group of web/application servers

deployed across two AZs and a Multi-AZ RDS Instance for data persistence. The database CPU

is often above 80% usage and 90% of I/O operations on the database are reads. To improve

performance you recently added a single-node Memcached ElastiCache Cluster to cache

frequent DB query results. In the next weeks the overall workload is expected to grow by 30%.

Do you need to change anything in the architecture to maintain the high availability of the

application with the anticipated additional load? Why?

A. Yes, you should deploy two Memcached ElastiCache Clusters in different AZs because the RDS

instance will not be able to handle the load if the cache node fails. (Study note: CPU is already above 80-90%, so choose the option that avoids a failure by deploying across different AZs.)

B. No, if the cache node fails you can always get the same data from the DB without having any

availability impact.

C. No, if the cache node fails the automated ElastiCache node recovery feature will prevent any

availability impact.

D. Yes, you should deploy the Memcached ElastiCache Cluster with two nodes in the same AZ as the

RDS DB master instance to handle the load if one cache node fails.

Answer: A

QUESTION 13

An ERP application is deployed across multiple AZs in a single region. In the event of failure, the

Recovery Time Objective (RTO) must be less than 3 hours, and the Recovery Point Objective

(RPO) must be 15 minutes. The customer realizes that data corruption occurred roughly 1.5

hours ago. What DR strategy could be used to achieve this RTO and RPO in the event of this kind

of failure?

A. Take 15 minute DB backups stored in Glacier with transaction logs stored in S3 every 5 minutes.

B. Use synchronous database master-slave replication between two availability zones.

C. Take hourly DB backups to EC2 instance store volumes with transaction logs stored in S3 every 5

minutes.

D. Take hourly DB backups to S3, with transaction logs stored in S3 every 5 minutes. (Study note: about 1.5 hours of data has to be recovered, so take hourly backups plus a log backup every 5 minutes.)

Answer: D
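
The restore path in option D can be sketched as: pick the last full backup taken before the corruption, then replay transaction logs up to that point. The timeline below is hypothetical (minutes on an arbitrary clock, backups assumed to land on exact hour boundaries).

```python
BACKUP_INTERVAL_MIN = 60  # hourly full backups (option D)
LOG_INTERVAL_MIN = 5      # transaction logs stored every 5 minutes
RPO_MIN = 15              # from the question


def restore_plan(corruption_minute):
    """Return (minute of last full backup, number of logs to replay).

    Assumes backups land on exact multiples of BACKUP_INTERVAL_MIN.
    """
    backup_minute = (corruption_minute // BACKUP_INTERVAL_MIN) * BACKUP_INTERVAL_MIN
    logs_to_replay = (corruption_minute - backup_minute) // LOG_INTERVAL_MIN
    return backup_minute, logs_to_replay


# Hypothetical timeline: it is now minute 720; corruption happened 90 minutes ago.
backup_minute, logs_to_replay = restore_plan(720 - 90)
worst_case_loss_min = LOG_INTERVAL_MIN  # at most one log interval is lost

print("Restore from backup at minute", backup_minute)
print("Transaction logs to replay:", logs_to_replay)
print("Worst-case data loss within RPO:", worst_case_loss_min <= RPO_MIN)
```

Replication alone (option B) would copy the corruption to the standby, which is why a point-in-time restore from backups and logs is needed here.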

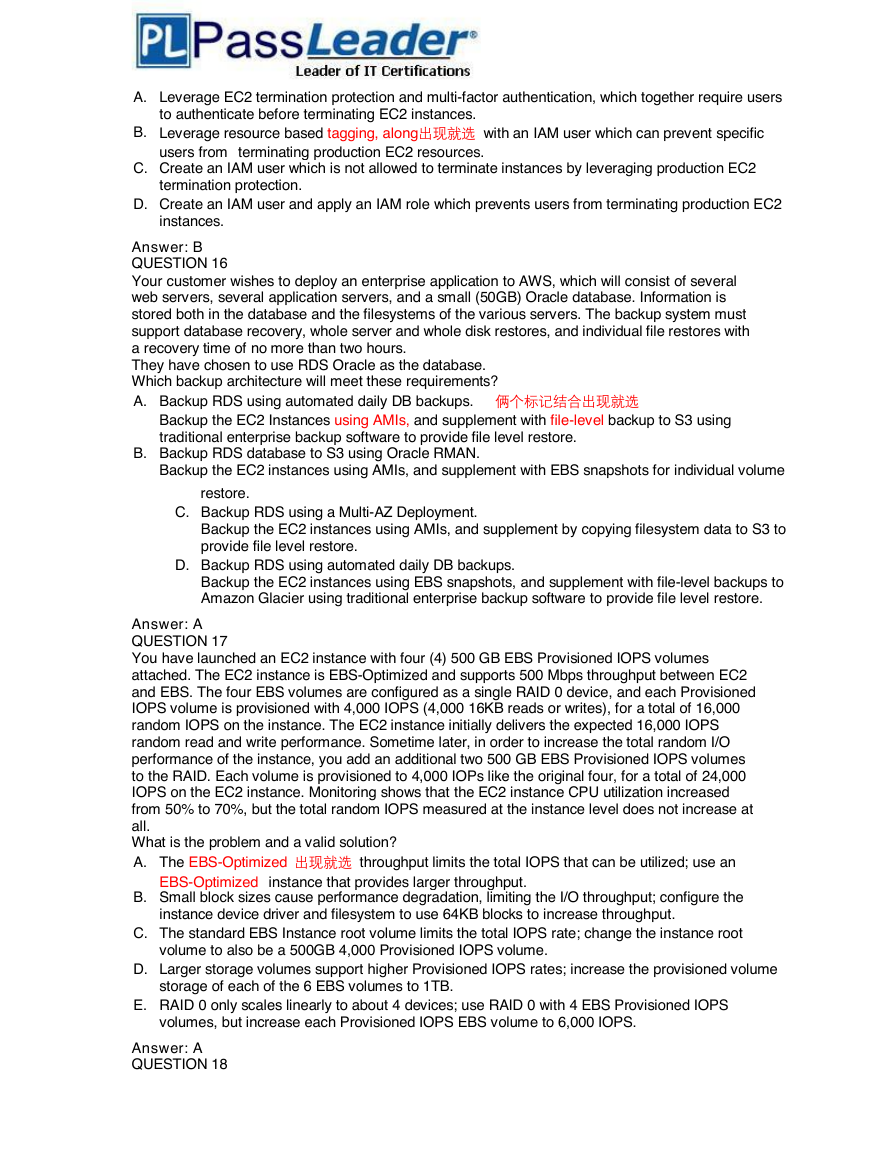

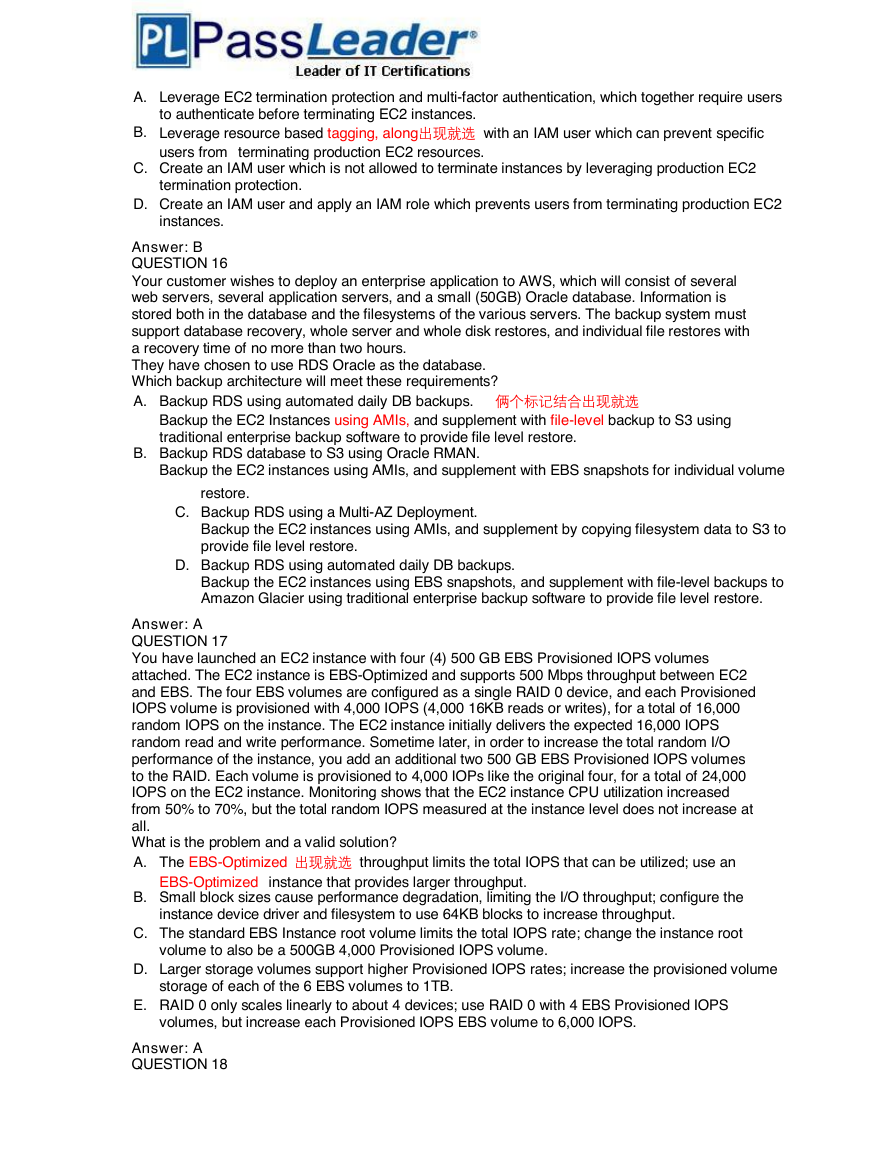

QUESTION 14

Refer to the architecture diagram above of a batch processing solution using Simple Queue

Service (SQS) to set up a message queue between EC2 instances which are used as batch

processors. CloudWatch monitors the number of job requests (queued messages) and an Auto

Scaling group adds or deletes batch servers automatically based on parameters set in

CloudWatch alarms.

You can use this architecture to implement which of the following features in a cost effective and

efficient manner?

A. Coordinate number of EC2 instances with number of job requests automatically, thus improving cost effectiveness. (Study note: choose this whenever "Coordinate" appears.)

B. Reduce the overall time for executing jobs through parallel processing by allowing a busy EC2 instance that receives a message to pass it to the next instance in a daisy-chain setup.

C. Implement fault tolerance against EC2 instance failure since messages would remain in SQS and work can continue with recovery of EC2 instances. Implement fault tolerance against SQS failure by backing up messages to S3.

D. Handle high priority jobs before lower priority jobs by assigning a priority metadata field to SQS messages.

E. Implement message passing between EC2 instances within a batch by exchanging messages through SQS.

Answer: A

QUESTION 15

Your system recently experienced down time. During the troubleshooting process you found that

a new administrator mistakenly terminated several production EC2 instances.

Which of the following strategies will help prevent a similar situation in the future?

The administrator still must be able to:

- launch, start, stop, and terminate development resources,

- launch and start production instances.

A. Leverage EC2 termination protection and multi-factor authentication, which together require users

to authenticate before terminating EC2 instances.

B. Leverage resource based tagging, along with an IAM user which can prevent specific

users from terminating production EC2 resources. (Study note: choose this whenever it appears.)

C. Create an IAM user which is not allowed to terminate instances by leveraging production EC2

termination protection.

D. Create an IAM user and apply an IAM role which prevents users from terminating production EC2

instances.

Answer: B
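
The tag-based approach in option B could look roughly like the IAM policy below, built here as a Python dictionary. It is a sketch, not a complete policy: the tag key Environment and value production are assumptions about how the instances are tagged, and a separate statement would still have to allow the launch, start, and stop actions.

```python
import json

# Assumption: production instances carry the tag Environment=production.
deny_production_terminate = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Deny",
            "Action": "ec2:TerminateInstances",
            "Resource": "arn:aws:ec2:*:*:instance/*",
            "Condition": {
                "StringEquals": {"ec2:ResourceTag/Environment": "production"}
            },
        }
    ],
}

print(json.dumps(deny_production_terminate, indent=2))
```

Because an explicit Deny overrides any Allow, the administrator can keep broad permissions on development resources while production instances stay protected from termination.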

QUESTION 16

Your customer wishes to deploy an enterprise application to AWS, which will consist of several

web servers, several application servers, and a small (50GB) Oracle database. Information is

stored both in the database and the filesystems of the various servers. The backup system must

support database recovery, whole server and whole disk restores, and individual file restores with

a recovery time of no more than two hours.

They have chosen to use RDS Oracle as the database.

Which backup architecture will meet these requirements?

A. Backup RDS using automated daily DB backups. (Study note: choose the option where the two highlighted phrases appear together.)

Backup the EC2 Instances using AMIs, and supplement with file-level backup to S3 using

traditional enterprise backup software to provide file level restore.

B. Backup RDS database to S3 using Oracle RMAN.

Backup the EC2 instances using AMIs, and supplement with EBS snapshots for individual volume

restore.

C. Backup RDS using a Multi-AZ Deployment.

Backup the EC2 instances using AMIs, and supplement by copying filesystem data to S3 to

provide file level restore.

D. Backup RDS using automated daily DB backups.

Backup the EC2 instances using EBS snapshots, and supplement with file-level backups to

Amazon Glacier using traditional enterprise backup software to provide file level restore.

Answer: A

QUESTION 17

You have launched an EC2 instance with four (4) 500 GB EBS Provisioned IOPS volumes

attached. The EC2 instance is EBS-Optimized and supports 500 Mbps throughput between EC2

and EBS. The four EBS volumes are configured as a single RAID 0 device, and each Provisioned

IOPS volume is provisioned with 4,000 IOPS (4,000 16KB reads or writes), for a total of 16,000

random IOPS on the instance. The EC2 instance initially delivers the expected 16,000 IOPS

random read and write performance. Sometime later, in order to increase the total random I/O

performance of the instance, you add an additional two 500 GB EBS Provisioned IOPS volumes

to the RAID. Each volume is provisioned to 4,000 IOPS like the original four, for a total of 24,000

IOPS on the EC2 instance. Monitoring shows that the EC2 instance CPU utilization increased

from 50% to 70%, but the total random IOPS measured at the instance level does not increase at

all.

What is the problem and a valid solution?

A. The EBS-Optimized throughput limits the total IOPS that can be utilized; use an

EBS-Optimized instance that provides larger throughput. (Study note: choose this whenever "EBS-Optimized throughput" appears.)

B. Small block sizes cause performance degradation, limiting the I/O throughput; configure the

instance device driver and filesystem to use 64KB blocks to increase throughput.

C. The standard EBS Instance root volume limits the total IOPS rate; change the instance root

volume to also be a 500GB 4,000 Provisioned IOPS volume.

D. Larger storage volumes support higher Provisioned IOPS rates; increase the provisioned volume

storage of each of the 6 EBS volumes to 1TB.

E. RAID 0 only scales linearly to about 4 devices; use RAID 0 with 4 EBS Provisioned IOPS

volumes, but increase each Provisioned IOPS EBS volume to 6,000 IOPS.

Answer: A

QUESTION 18

A customer has a 10 GB AWS Direct Connect connection to an AWS region where they have a

web application hosted on Amazon Elastic Compute Cloud (EC2). The application has

dependencies on an on-premises mainframe database that uses a BASE (Basically Available, Soft

state, Eventual consistency) rather than an ACID (Atomicity, Consistency, Isolation, Durability)

consistency model. The application is exhibiting undesirable behavior because the database is

not able to handle the volume of writes. How can you reduce the load on your on-premises

database resources in the most cost-effective way?

A. Provision an RDS read-replica database on AWS to handle the writes and synchronize the two databases using Data

Pipeline.

B. Modify the application to use DynamoDB to feed an EMR cluster which uses a map function to write to the on-premises

database.

C. Modify the application to write to an Amazon SQS queue and develop a worker process to flush the queue to the

on-premises database. (Study note: choose this whenever "worker" appears.)

D. Use an Amazon Elastic MapReduce (EMR) S3DistCp as a synchronization mechanism between the on-premises

database and a Hadoop cluster on AWS.

Answer: C

QUESTION 19

Your company plans to host a large donation website on Amazon Web Services (AWS).

You anticipate a large and undetermined amount of traffic that will create many database writes.

To be certain that you do not drop any writes to a database hosted on AWS, which service should

you use?

A. Amazon Simple Queue Service (SQS) for capturing the writes and draining the queue to write to

the database. (Study note: choose the option where the two highlighted phrases appear together.)

B. Amazon DynamoDB with provisioned write throughput up to the anticipated peak write

throughput.

C. Amazon ElastiCache to store the writes until the writes are committed to the database.

D. Amazon RDS with provisioned IOPS up to the anticipated peak write throughput.

Answer: A

QUESTION 20

You have been asked to design the storage layer for an application. The application requires disk

performance of at least 100,000 IOPS. In addition, the storage layer must be able to survive the

loss of an individual disk, EC2 instance, or Availability Zone without any data loss.

The volume you provide must have a capacity of at least 3 TB.

Which of the following designs will meet these objectives?

A. Instantiate a c3.8xlarge instance in us-east-1.

Provision 4x1TB EBS volumes, attach them to the instance, and configure them as a single RAID

5 volume.

Ensure that EBS snapshots are performed every 15 minutes.

B. Instantiate a c3.8xlarge instance in us-east-1.

Provision 3x1TB EBS volumes, attach them to the instance, and configure them as a single RAID

0 volume.

Ensure that EBS snapshots are performed every 15 minutes.

C. Instantiate an i2.8xlarge instance in us-east-1a.

Create a RAID 0 volume using the four 800GB SSD ephemeral disks provided with the instance.

Provision 3x1TB EBS volumes, attach them to the instance, and configure them as a second

RAID 0 volume.

Configure synchronous, block-level replication from the ephemeral-backed volume to the EBS-

backed volume.

D. Instantiate a c3.8xlarge instance in us-east-1.

Provision an AWS Storage Gateway and configure it for 3 TB of storage and 100,000 IOPS.

Attach the volume to the instance.

E. Instantiate an i2.8xlarge instance in us-east-1a. (Study note: choose the option where the highlighted phrases appear together.)

Create a RAID 0 volume using the four 800GB SSD ephemeral disks provided with the instance.

Configure synchronous, block-level replication to an identically configured instance in us-east-1b.

Answer: E

QUESTION 21

Your company has HQ in Tokyo and branch offices all over the world and is using logistics

software with a multi-regional deployment on AWS in Japan, Europe and US.

The logistics software has a 3-tier architecture and currently uses MySQL 5.6 for data persistence.

Each region has deployed its own database.

In the HQ region you run an hourly batch process reading data from every region to compute

cross-regional reports that are sent by email to all offices.

This batch process must be completed as fast as possible to quickly optimize logistics.

How do you build the database architecture in order to meet the requirements?

A. For each regional deployment, use MySQL on EC2 with a master in the region and use S3 to

copy data files hourly to the HQ region.

B. For each regional deployment, use RDS MySQL with a master in the region and send hourly RDS

snapshots to the HQ region.

C. Use Direct Connect to connect all regional MySQL deployments to the HQ region and reduce

network latency for the batch process.

D. For each regional deployment, use RDS MySQL with a master in the region and a read replica in

the HQ region.

E. For each regional deployment, use MySQL on EC2 with a master in the region and send hourly

EBS snapshots to the HQ region.

Answer: D

QUESTION 22

Company B is launching a new game app for mobile devices. Users will log into the game using

their existing social media account. To streamline data capture, Company B would like to directly

save player data and scoring information from the mobile app to a DynamoDB table named

ScoreData. When a user saves their game, the progress data will be stored to the GameState S3

bucket. What is the best approach for storing data to DynamoDB and S3?

A. Use Login with Amazon allowing users to sign in with an Amazon account providing the mobile app with access to the

ScoreData DynamoDB table and the GameState S3 bucket.

B. Use temporary security credentials that assume a role providing access to the ScoreData DynamoDB table

and the GameState S3 bucket using web identity federation (Study note: choose this whenever "temporary security credentials" appears.)

C. Use an IAM user with access credentials assigned a role providing access to the ScoreData DynamoDB table and the

GameState S3 bucket for distribution with the mobile app

D. Use an EC2 instance that is launched with an EC2 role providing access to the ScoreData DynamoDB table and the

GameState S3 bucket that communicates with the mobile app via web services

Answer: B

QUESTION 23

You have recently joined a startup company building sensors to measure street noise and air quality

in urban areas.

The company has been running a pilot deployment of around 100 sensors for 3 months. Each sensor

uploads 1KB of sensor data every minute to a backend hosted on AWS. During the pilot, you

measured a peak of 10 IOPS on the database, and you stored an average of 3GB of sensor data per

month in the database.

The current deployment consists of a load-balanced, auto-scaled ingestion layer using EC2

instances, and a PostgreSQL RDS database with 500GB standard storage.

The pilot is considered a success and your CEO has managed to get the attention of some potential

investors. The business plan requires a deployment of at least 100k sensors which needs to be

supported by the backend.

You also need to store sensor data for at least two years to be able to compare year over year

improvements.

To secure funding, you have to make sure that the platform meets these requirements and leaves

room for further scaling.

Which setup will meet the requirements?

A. Replace the RDS instance with a 6 node Redshift cluster with 96TB of storage

B. Keep the current architecture, but upgrade RDS storage to 3TB and 10k provisioned IOPS

C. Ingest data into a DynamoDB table and move old data to a Redshift cluster (Study note: choose this whenever it appears.)

D. Add an SQS queue to the ingestion layer to buffer writes to the RDS Instance

Answer: C
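
Scaling the pilot figures to the target deployment shows the size of the problem. The sketch assumes load grows linearly with the number of sensors and uses decimal units (1 TB = 1,000 GB).

```python
PILOT_SENSORS = 100        # from the question
TARGET_SENSORS = 100_000   # from the question
PILOT_PEAK_IOPS = 10       # from the question
PILOT_GB_PER_MONTH = 3     # from the question
RETENTION_MONTHS = 24      # "at least two years"

# Assumption: load scales linearly with the sensor count.
scale = TARGET_SENSORS // PILOT_SENSORS
peak_iops = PILOT_PEAK_IOPS * scale
gb_per_month = PILOT_GB_PER_MONTH * scale
retained_tb = gb_per_month * RETENTION_MONTHS / 1000
uploads_per_second = TARGET_SENSORS / 60  # one upload per sensor per minute

print("Scale factor:", scale)
print("Estimated peak IOPS:", peak_iops)
print("New data per month (GB):", gb_per_month)
print("Data retained over two years (TB):", retained_tb)
print(f"Average uploads per second: {uploads_per_second:.0f}")
```

Under these assumptions the 3 TB of storage in option B would fill in about one month, and two years of data comes to roughly 72 TB before any further growth.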

QUESTION 24

A web design company currently runs several FTP servers that their 250 customers use to upload
