# see issue #152

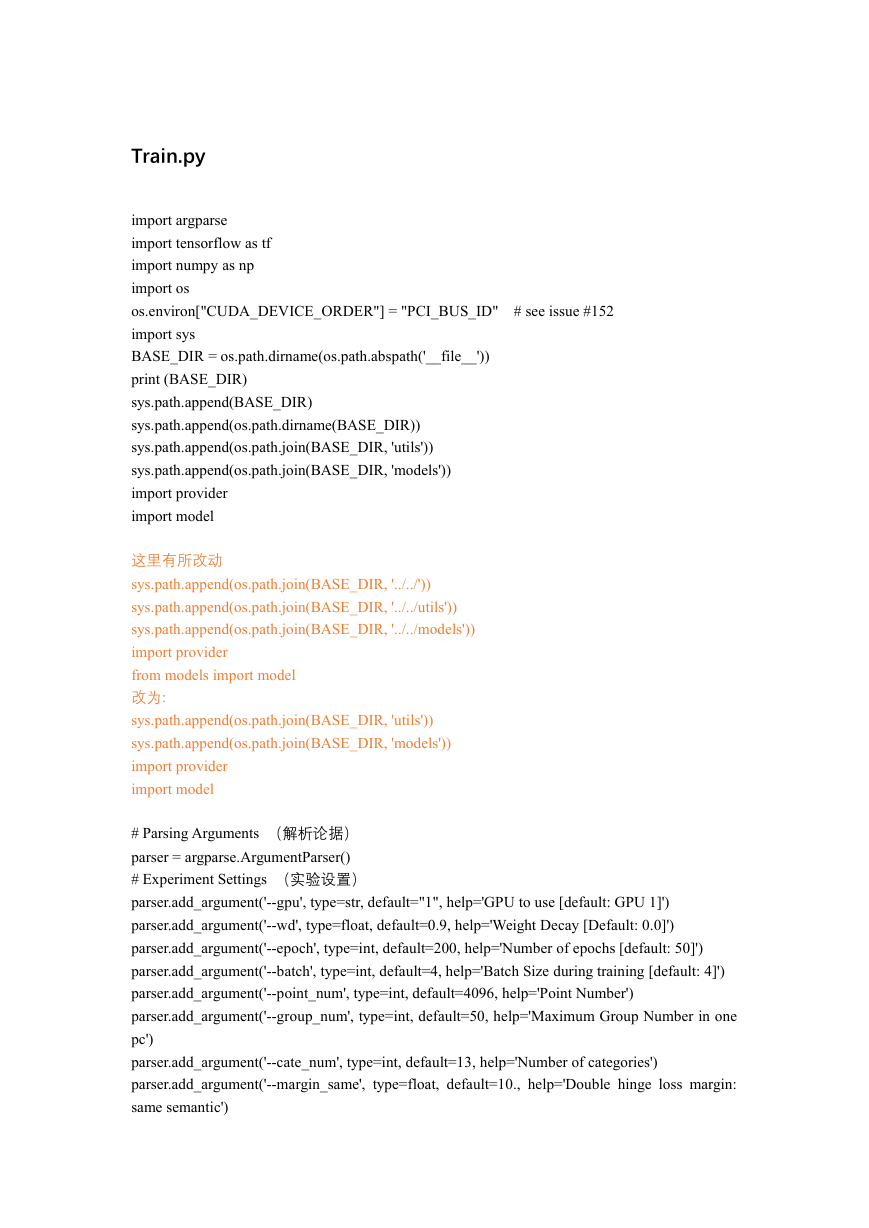

train.py

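import argparse
import tensorflow as tf
import numpy as np
import os
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
import sys

# '__file__' is quoted, so abspath() resolves it relative to the current working directory and
# BASE_DIR becomes that directory. This also works in a Jupyter notebook, where __file__ is undefined;
# in a plain script, os.path.abspath(__file__) would give the script's own directory instead.
BASE_DIR = os.path.dirname(os.path.abspath('__file__'))
print(BASE_DIR)
sys.path.append(BASE_DIR)
sys.path.append(os.path.dirname(BASE_DIR))
sys.path.append(os.path.join(BASE_DIR, 'utils'))
sys.path.append(os.path.join(BASE_DIR, 'models'))
import provider
import model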

The imports were changed here. The original code was:

sys.path.append(os.path.join(BASE_DIR, '../../'))
sys.path.append(os.path.join(BASE_DIR, '../../utils'))
sys.path.append(os.path.join(BASE_DIR, '../../models'))
import provider
from models import model

Changed to:

sys.path.append(os.path.join(BASE_DIR, 'utils'))
sys.path.append(os.path.join(BASE_DIR, 'models'))
import provider
import model

# Parsing Arguments
parser = argparse.ArgumentParser()

# Experiment Settings
parser.add_argument('--gpu', type=str, default="1", help='GPU to use [default: GPU 1]')
parser.add_argument('--wd', type=float, default=0.9, help='Weight Decay [default: 0.9]')
parser.add_argument('--epoch', type=int, default=200, help='Number of epochs [default: 200]')
parser.add_argument('--batch', type=int, default=4, help='Batch Size during training [default: 4]')
parser.add_argument('--point_num', type=int, default=4096, help='Point Number')
parser.add_argument('--group_num', type=int, default=50, help='Maximum Group Number in one pc')
parser.add_argument('--cate_num', type=int, default=13, help='Number of categories')
parser.add_argument('--margin_same', type=float, default=10., help='Double hinge loss margin: same semantic')
parser.add_argument('--margin_diff', type=float, default=80., help='Double hinge loss margin: different semantic')

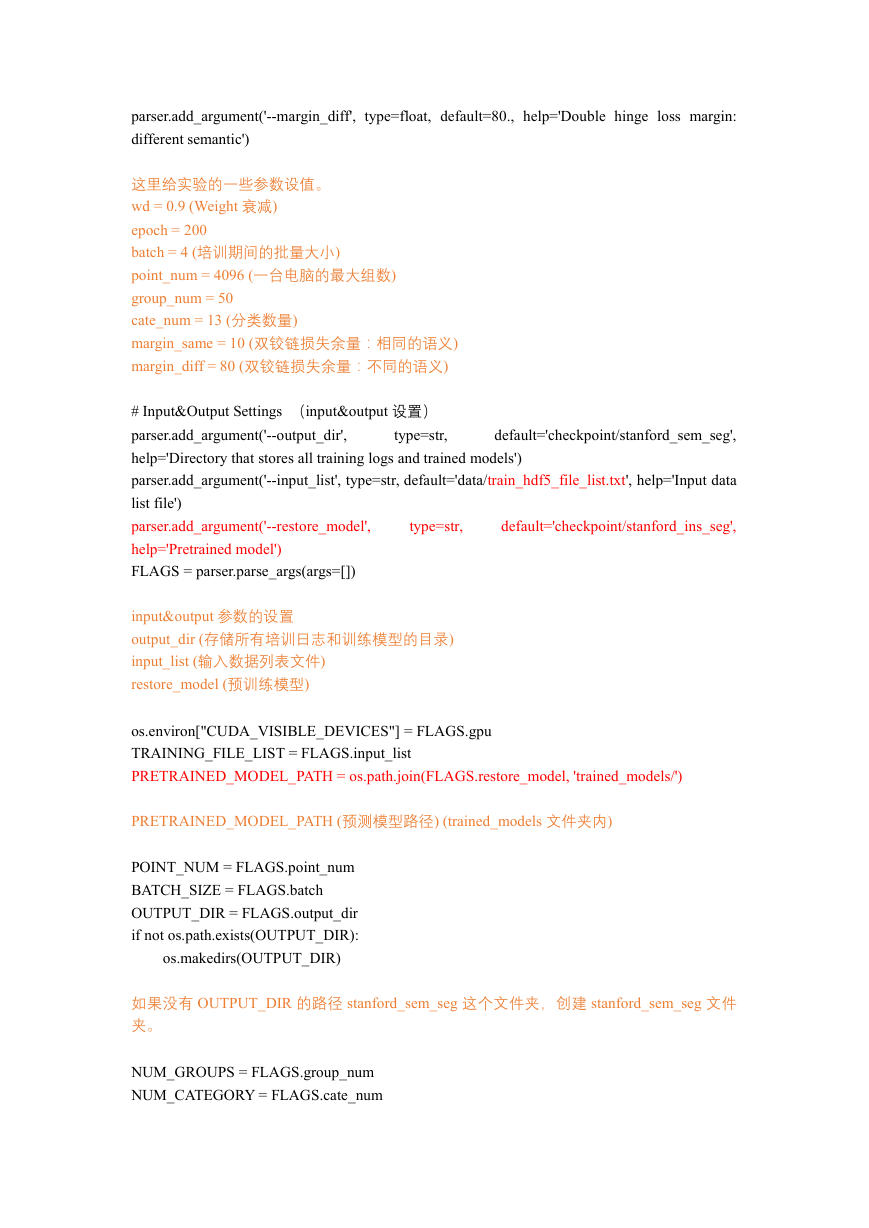

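These lines set the experiment parameters:

wd = 0.9 (weight decay)
epoch = 200 (number of training epochs)
batch = 4 (batch size during training)
point_num = 4096 (number of points per point cloud)
group_num = 50 (maximum number of groups, i.e. instances, in one point cloud)
cate_num = 13 (number of semantic categories)
margin_same = 10 (double hinge loss margin: same semantic category)
margin_diff = 80 (double hinge loss margin: different semantic category)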

# Input&Output Settings
parser.add_argument('--output_dir', type=str, default='checkpoint/stanford_sem_seg',
                    help='Directory that stores all training logs and trained models')
parser.add_argument('--input_list', type=str, default='data/train_hdf5_file_list.txt',
                    help='Input data list file')
parser.add_argument('--restore_model', type=str, default='checkpoint/stanford_ins_seg',
                    help='Pretrained model')

# args=[] makes argparse ignore sys.argv and keep the defaults (handy inside a Jupyter notebook);
# in a plain script, parser.parse_args() would read the command-line flags instead.
FLAGS = parser.parse_args(args=[])

Input/output parameter settings:

output_dir (directory that stores all training logs and trained models)
input_list (input data list file)
restore_model (pretrained model)
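A minimal sketch of how the defaults and `parse_args` interact. It uses a reduced parser with only three of the arguments above; this trimmed parser is for illustration and is not the full train.py parser. Passing an explicit list, as train.py does with `args=[]`, bypasses `sys.argv`, so the same code runs unchanged in a notebook.

```python
import argparse

# Reduced, illustrative copy of a few train.py arguments.
parser = argparse.ArgumentParser()
parser.add_argument('--batch', type=int, default=4, help='Batch Size during training [default: 4]')
parser.add_argument('--margin_same', type=float, default=10., help='Double hinge loss margin: same semantic')
parser.add_argument('--input_list', type=str, default='data/train_hdf5_file_list.txt', help='Input data list file')

# args=[]: ignore sys.argv entirely and keep every default.
defaults = parser.parse_args(args=[])

# An explicit list overrides selected flags exactly as the command line would;
# unspecified flags keep their defaults, and type= converts the strings.
custom = parser.parse_args(['--batch', '8', '--margin_same', '5'])
print(custom.batch, custom.margin_same, custom.input_list)
```

On the command line the equivalent would be `python train.py --batch 8 --margin_same 5`, but only if the script calls `parser.parse_args()` without `args=[]`.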

os.environ["CUDA_VISIBLE_DEVICES"] = FLAGS.gpu
TRAINING_FILE_LIST = FLAGS.input_list
PRETRAINED_MODEL_PATH = os.path.join(FLAGS.restore_model, 'trained_models/')

PRETRAINED_MODEL_PATH: path of the pretrained model (the trained_models folder inside restore_model).

POINT_NUM = FLAGS.point_num
BATCH_SIZE = FLAGS.batch
OUTPUT_DIR = FLAGS.output_dir
if not os.path.exists(OUTPUT_DIR):
    os.makedirs(OUTPUT_DIR)

If the OUTPUT_DIR folder (stanford_sem_seg) does not exist, create it. os.makedirs also creates any missing parent folders, such as checkpoint.

NUM_GROUPS = FLAGS.group_num
NUM_CATEGORY = FLAGS.cate_num

print('#### Batch Size: {0}'.format(BATCH_SIZE))
print('#### Point Number: {0}'.format(POINT_NUM))
print('#### Training using GPU: {0}'.format(FLAGS.gpu))

DECAY_STEP = 800000.
DECAY_RATE = 0.5
LEARNING_RATE_CLIP = 1e-6
BASE_LEARNING_RATE = 1e-4
MOMENTUM = 0.9

Values of the gradient-descent parameters (the learning-rate decay schedule and the momentum).

TRAINING_EPOCHES = FLAGS.epoch
MARGINS = [FLAGS.margin_same, FLAGS.margin_diff]
print('### Training epoch: {0}'.format(TRAINING_EPOCHES))

MODEL_STORAGE_PATH = os.path.join(OUTPUT_DIR, 'trained_models')
if not os.path.exists(MODEL_STORAGE_PATH):
    os.mkdir(MODEL_STORAGE_PATH)

LOG_STORAGE_PATH = os.path.join(OUTPUT_DIR, 'logs')
if not os.path.exists(LOG_STORAGE_PATH):
    os.mkdir(LOG_STORAGE_PATH)

SUMMARIES_FOLDER = os.path.join(OUTPUT_DIR, 'summaries')
if not os.path.exists(SUMMARIES_FOLDER):
    os.mkdir(SUMMARIES_FOLDER)

Check whether the trained_models, logs and summaries folders exist inside the stanford_sem_seg folder, and create any that are missing.
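Each exists-then-create check above can be collapsed into a single `os.makedirs(path, exist_ok=True)` call (Python 3.2+). That call also creates missing parent folders. A minimal sketch follows. The helper name `make_output_dirs` is hypothetical; the subfolder names mirror those used in train.py.

```python
import os

def make_output_dirs(output_dir):
    """Hypothetical helper: create output_dir and its three subfolders if missing."""
    paths = {}
    for name in ('trained_models', 'logs', 'summaries'):
        path = os.path.join(output_dir, name)
        # exist_ok=True: no error if the folder already exists;
        # makedirs also creates output_dir itself and any missing parents.
        os.makedirs(path, exist_ok=True)
        paths[name] = path
    return paths
```

Calling it twice is safe because existing folders are left untouched. That is the same behaviour the explicit `os.path.exists` checks provide.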

LOG_DIR = FLAGS.output_dir
if not os.path.exists(LOG_DIR):
    os.mkdir(LOG_DIR)

os.system('cp %s %s' % (os.path.join(BASE_DIR, 'models/model.py'), LOG_DIR))  # bkp of model def
os.system('cp %s %s' % (os.path.join(BASE_DIR, 'train.py'), LOG_DIR))  # bkp of train procedure

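These two lines back up (copy) models/model.py, which defines the model, and train.py, the training procedure, into LOG_DIR. Each run therefore keeps a copy of the code that produced it.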
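`os.system('cp ...')` depends on a Unix shell and silently ignores failures. Below is a portable sketch of the same backup using `shutil.copy` from the standard library. The function name `backup_sources` is hypothetical, and its default file list mirrors the two files copied above.

```python
import os
import shutil

def backup_sources(base_dir, log_dir, rel_paths=('models/model.py', 'train.py')):
    """Hypothetical helper: copy each source file into log_dir, keeping its base name."""
    os.makedirs(log_dir, exist_ok=True)
    copied = []
    for rel in rel_paths:
        # shutil.copy raises an exception if the source file is missing,
        # whereas a failed os.system('cp ...') only shows up in its return code.
        copied.append(shutil.copy(os.path.join(base_dir, rel), log_dir))
    return copied
```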

def printout(flog, data):
    print(data)
    flog.write(data + '\n')

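This defines the printout function. It prints data to the console and also writes it, followed by a newline, to the already-open log file flog, so every message is both shown and logged.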
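A small usage sketch. printout only needs an object with a `write` method, so an in-memory `io.StringIO` stands in for the real log file here. During training, flog would be a file opened for writing. The two messages simply reuse the format of the earlier `print` calls.

```python
import io

def printout(flog, data):
    print(data)               # show the message on the console
    flog.write(data + '\n')   # and append it, one message per line, to the log

log = io.StringIO()
printout(log, '#### Batch Size: 4')
printout(log, '#### Point Number: 4096')
```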

def train():
    with tf.Graph().as_default():
        # Once CUDA_VISIBLE_DEVICES is set above, the selected GPU is renumbered as /gpu:0,
        # so '/gpu:' + FLAGS.gpu (e.g. '/gpu:1') relies on soft device placement being enabled.
        with tf.device('/gpu:' + str(FLAGS.gpu)):
            batch = tf.Variable(0, trainable=False, name='batch')
            learning_rate = tf.train.exponential_decay(
                BASE_LEARNING_RATE,  # base learning rate
                batch * BATCH_SIZE,  # global_var indicating the number of steps
                DECAY_STEP,          # step size
                DECAY_RATE,          # decay rate
                staircase=True       # Stair-case or continuous decreasing
            )
            learning_rate = tf.maximum(learning_rate, LEARNING_RATE_CLIP)

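This computes an exponentially decaying learning rate. During training it is usually better to let the learning rate decrease as training proceeds. tf.train.exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None) is TensorFlow's exponential decay function.

Here BASE_LEARNING_RATE = 1e-4, BATCH_SIZE = 4, DECAY_STEP = 800000 and DECAY_RATE = 0.5.

The formula is:

decayed_learning_rate = learning_rate * decay_rate ^ (global_step / decay_steps)

Here global_step = batch * BATCH_SIZE. If batch is incremented once per training step, this is the number of training samples seen so far. Because staircase=True, global_step / decay_steps is rounded down to an integer. The learning rate therefore halves once every 800000 samples (200000 batches of 4) instead of decreasing continuously. The following tf.maximum keeps it from falling below LEARNING_RATE_CLIP = 1e-6.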
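A pure-Python sketch (no TensorFlow) that reproduces this schedule numerically with the constants from train.py. It assumes, as above, that `batch` counts training steps. The helper name `learning_rate_at` is hypothetical.

```python
import math

BASE_LEARNING_RATE = 1e-4
BATCH_SIZE = 4
DECAY_STEP = 800000.
DECAY_RATE = 0.5
LEARNING_RATE_CLIP = 1e-6

def learning_rate_at(batch):
    """Hypothetical helper: staircase exponential decay as configured in train.py, clipped from below."""
    global_step = batch * BATCH_SIZE
    exponent = math.floor(global_step / DECAY_STEP)  # staircase=True: integer exponent
    lr = BASE_LEARNING_RATE * DECAY_RATE ** exponent
    return max(lr, LEARNING_RATE_CLIP)               # same role as tf.maximum(...)

for b in (0, 199999, 200000, 400000, 2000000):
    print(b, learning_rate_at(b))
```

After 10 halvings (batch 2000000) the decayed value, about 9.8e-8, is below the clip, so the floor of 1e-6 takes over.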

            lr_op = tf.summary.scalar('learning_rate', learning_rate)

This records learning_rate as a scalar summary so that it can be visualized in TensorBoard.

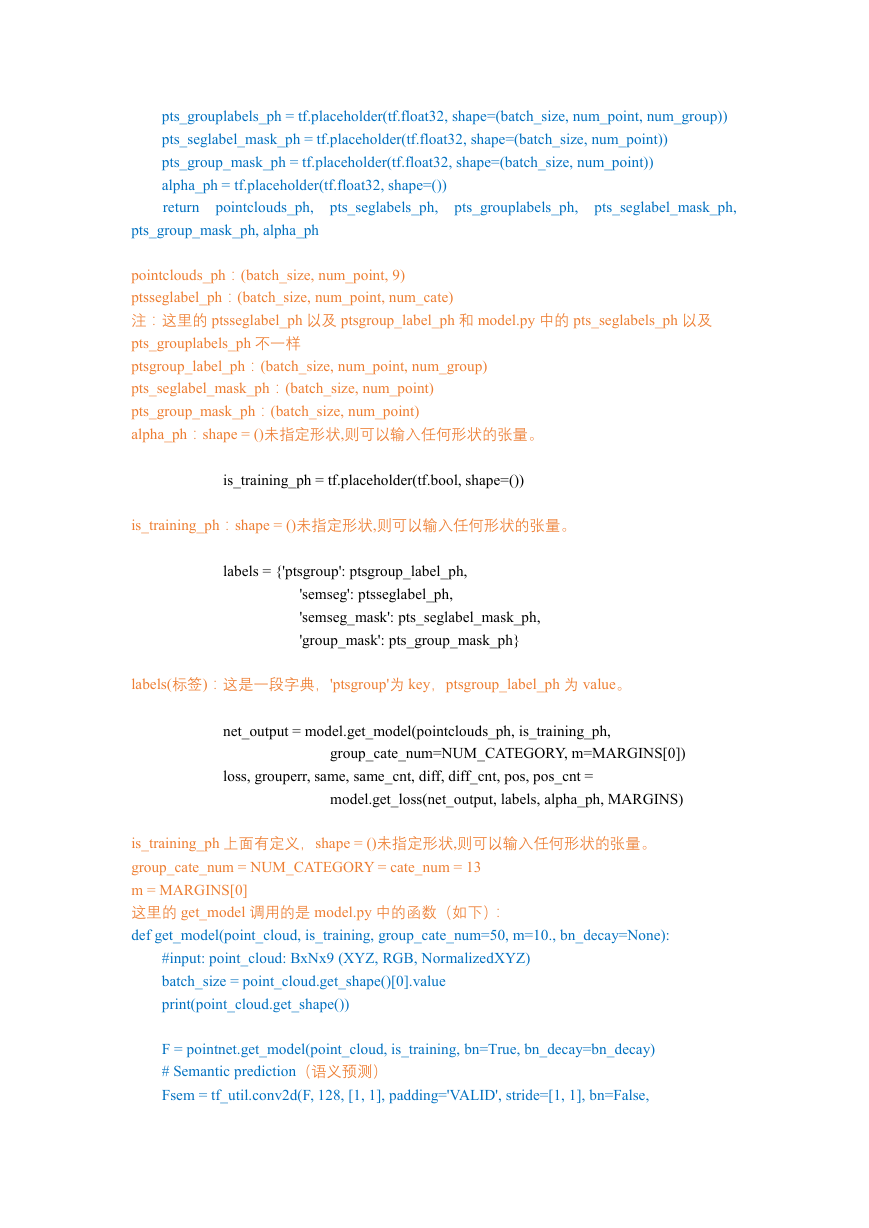

            pointclouds_ph, ptsseglabel_ph, ptsgroup_label_ph, pts_seglabel_mask_ph, pts_group_mask_ph, alpha_ph = \
                model.placeholder_inputs(BATCH_SIZE, POINT_NUM, NUM_GROUPS, NUM_CATEGORY)

The placeholder_inputs called here is the function defined in model.py (below):

def placeholder_inputs(batch_size, num_point, num_group, num_cate):
    # num_point == 0 leaves the number of points of the point cloud input unspecified (None)
    if num_point == 0:
        pointclouds_ph = tf.placeholder(tf.float32, shape=(batch_size, None, 9))
    else:
        pointclouds_ph = tf.placeholder(tf.float32, shape=(batch_size, num_point, 9))
    pts_seglabels_ph = tf.placeholder(tf.int32, shape=(batch_size, num_point, num_cate))
    pts_grouplabels_ph = tf.placeholder(tf.float32, shape=(batch_size, num_point, num_group))
    pts_seglabel_mask_ph = tf.placeholder(tf.float32, shape=(batch_size, num_point))
    pts_group_mask_ph = tf.placeholder(tf.float32, shape=(batch_size, num_point))
    alpha_ph = tf.placeholder(tf.float32, shape=())
    return pointclouds_ph, pts_seglabels_ph, pts_grouplabels_ph, \
        pts_seglabel_mask_ph, pts_group_mask_ph, alpha_ph

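The placeholders returned to train.py have these shapes:

pointclouds_ph: (batch_size, num_point, 9), float32
ptsseglabel_ph: (batch_size, num_point, num_cate), int32
ptsgroup_label_ph: (batch_size, num_point, num_group), float32
pts_seglabel_mask_ph: (batch_size, num_point)
pts_group_mask_ph: (batch_size, num_point)
alpha_ph: shape = (), i.e. a scalar (rank-0) placeholder. An empty shape does not mean "unspecified": it only accepts a single scalar value. A placeholder that accepts tensors of any shape would be declared with shape=None.

Note: ptsseglabel_ph and ptsgroup_label_ph in train.py are named differently from pts_seglabels_ph and pts_grouplabels_ph in model.py. They are nevertheless the same tensors, because tuple unpacking matches them by position.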
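ptsseglabel_ph and ptsgroup_label_ph expect one-hot encodings along their last axis. The NumPy sketch below turns integer per-point labels into arrays with those shapes and dtypes. The toy sizes, random labels and alpha value are made up for illustration. How provider actually loads the data is not covered in this section.

```python
import numpy as np

batch_size, num_point, num_cate, num_group = 2, 5, 13, 50   # toy sizes (illustrative)

rng = np.random.default_rng(0)
sem_ids = rng.integers(0, num_cate, size=(batch_size, num_point))     # semantic class per point
group_ids = rng.integers(0, num_group, size=(batch_size, num_point))  # instance (group) id per point

# Indexing an identity matrix with integer labels yields one-hot rows.
ptsseglabel = np.eye(num_cate, dtype=np.int32)[sem_ids]           # int32, like ptsseglabel_ph
ptsgroup_label = np.eye(num_group, dtype=np.float32)[group_ids]   # float32, like ptsgroup_label_ph
alpha = np.float32(10.)    # a single scalar matches a shape=() placeholder

print(ptsseglabel.shape, ptsgroup_label.shape, np.shape(alpha))
```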

            is_training_ph = tf.placeholder(tf.bool, shape=())

is_training_ph: shape = (), a scalar boolean that tells the network whether it is in training mode (used, for example, by batch normalization).

            labels = {'ptsgroup': ptsgroup_label_ph,
                      'semseg': ptsseglabel_ph,
                      'semseg_mask': pts_seglabel_mask_ph,
                      'group_mask': pts_group_mask_ph}

labels: a dictionary that maps each label name to its placeholder; for example, the key 'ptsgroup' maps to the value ptsgroup_label_ph.

            net_output = model.get_model(pointclouds_ph, is_training_ph,
                                         group_cate_num=NUM_CATEGORY, m=MARGINS[0])
            loss, grouperr, same, same_cnt, diff, diff_cnt, pos, pos_cnt = \
                model.get_loss(net_output, labels, alpha_ph, MARGINS)

is_training_ph is defined above as a scalar boolean placeholder (shape = ()).

group_cate_num = NUM_CATEGORY = cate_num = 13

m = MARGINS[0] = margin_same = 10

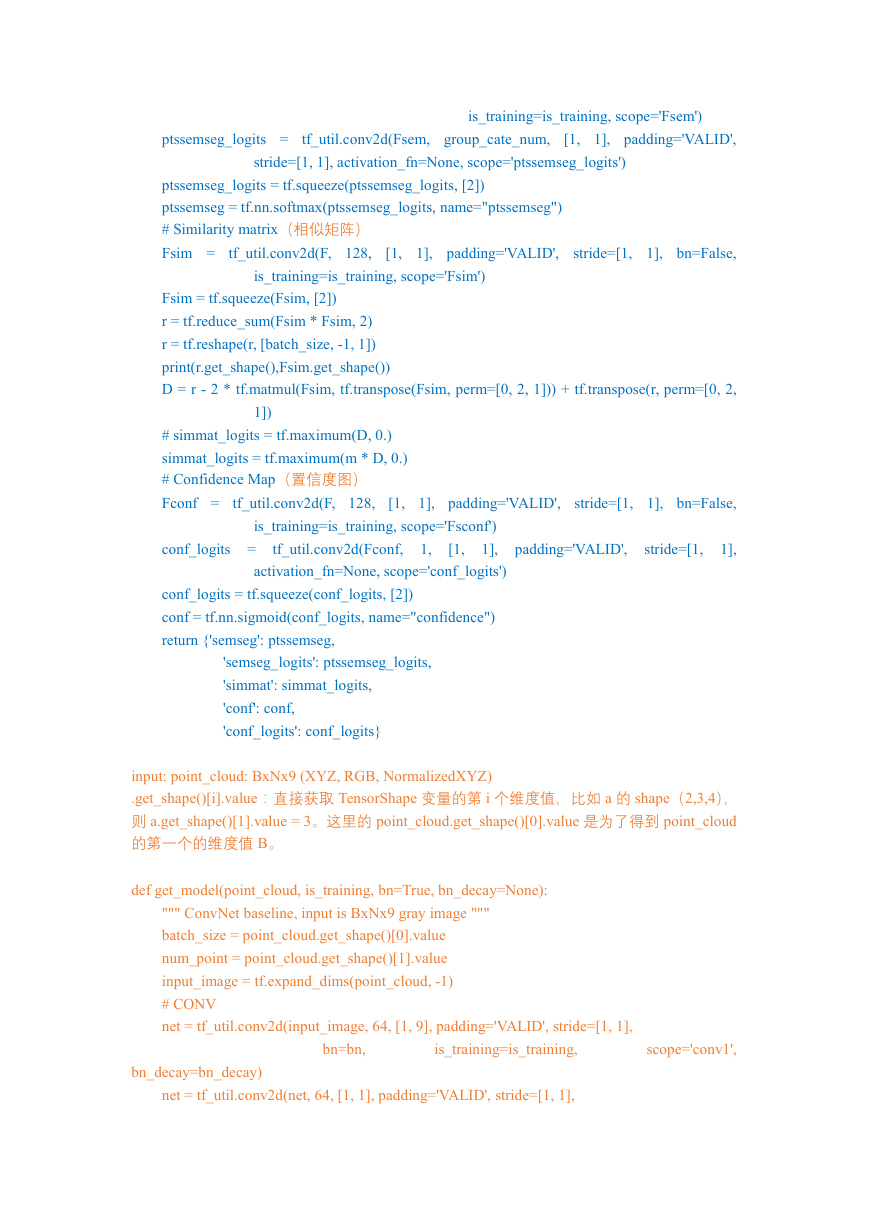

The get_model called here is the function defined in model.py (below):

def get_model(point_cloud, is_training, group_cate_num=50, m=10., bn_decay=None):
    # input: point_cloud: BxNx9 (XYZ, RGB, NormalizedXYZ)
    batch_size = point_cloud.get_shape()[0].value
    print(point_cloud.get_shape())

    F = pointnet.get_model(point_cloud, is_training, bn=True, bn_decay=bn_decay)  # B x N x 1 x 256

    # Semantic prediction
    Fsem = tf_util.conv2d(F, 128, [1, 1], padding='VALID', stride=[1, 1], bn=False,
                          is_training=is_training, scope='Fsem')
    ptssemseg_logits = tf_util.conv2d(Fsem, group_cate_num, [1, 1], padding='VALID',
                                      stride=[1, 1], activation_fn=None, scope='ptssemseg_logits')
    ptssemseg_logits = tf.squeeze(ptssemseg_logits, [2])          # B x N x group_cate_num
    ptssemseg = tf.nn.softmax(ptssemseg_logits, name="ptssemseg")

    # Similarity matrix
    Fsim = tf_util.conv2d(F, 128, [1, 1], padding='VALID', stride=[1, 1], bn=False,
                          is_training=is_training, scope='Fsim')
    Fsim = tf.squeeze(Fsim, [2])                                   # B x N x 128

    r = tf.reduce_sum(Fsim * Fsim, 2)
    r = tf.reshape(r, [batch_size, -1, 1])                         # B x N x 1
    print(r.get_shape(), Fsim.get_shape())
    D = r - 2 * tf.matmul(Fsim, tf.transpose(Fsim, perm=[0, 2, 1])) + tf.transpose(r, perm=[0, 2, 1])  # B x N x N

    # simmat_logits = tf.maximum(D, 0.)
    simmat_logits = tf.maximum(m * D, 0.)

    # Confidence Map
    Fconf = tf_util.conv2d(F, 128, [1, 1], padding='VALID', stride=[1, 1], bn=False,
                           is_training=is_training, scope='Fsconf')
    conf_logits = tf_util.conv2d(Fconf, 1, [1, 1], padding='VALID', stride=[1, 1],
                                 activation_fn=None, scope='conf_logits')
    conf_logits = tf.squeeze(conf_logits, [2])                     # B x N x 1
    conf = tf.nn.sigmoid(conf_logits, name="confidence")

    return {'semseg': ptssemseg,
            'semseg_logits': ptssemseg_logits,
            'simmat': simmat_logits,
            'conf': conf,
            'conf_logits': conf_logits}

input: point_cloud is B x N x 9 (XYZ, RGB, normalized XYZ).

.get_shape()[i].value directly returns the size of dimension i of a TensorShape. For example, if a has shape (2, 3, 4), then a.get_shape()[1].value = 3. Here point_cloud.get_shape()[0].value returns the first dimension of point_cloud, the batch size B.
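The `D` line in get_model uses the expansion ||f_i - f_j||^2 = ||f_i||^2 - 2 f_i·f_j + ||f_j||^2. Here `r` holds each point's squared feature norm, so `D[b, i, j]` is the squared Euclidean distance between the Fsim features of points i and j. The NumPy sketch below repeats the same steps on random toy features and checks them against a brute-force computation. Sizes and data are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
B, N, C = 2, 6, 128
Fsim = rng.standard_normal((B, N, C))

# Same steps as get_model: r holds each point's squared feature norm.
r = np.sum(Fsim * Fsim, axis=2).reshape(B, -1, 1)                            # B x N x 1
D = r - 2 * np.matmul(Fsim, Fsim.transpose(0, 2, 1)) + r.transpose(0, 2, 1)  # B x N x N

# Brute-force pairwise squared distances for comparison.
D_ref = ((Fsim[:, :, None, :] - Fsim[:, None, :, :]) ** 2).sum(axis=-1)

m = 10.
simmat = np.maximum(m * D, 0.)   # scales by m and clamps tiny negative round-off (e.g. on the diagonal)
```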

# The PointNet backbone, called above as pointnet.get_model
def get_model(point_cloud, is_training, bn=True, bn_decay=None):
    """ ConvNet baseline, input is BxNx9 gray image """
    batch_size = point_cloud.get_shape()[0].value
    num_point = point_cloud.get_shape()[1].value
    input_image = tf.expand_dims(point_cloud, -1)                  # B x N x 9 x 1

    # CONV
    net = tf_util.conv2d(input_image, 64, [1, 9], padding='VALID', stride=[1, 1],
                         bn=bn, is_training=is_training, scope='conv1', bn_decay=bn_decay)  # B x N x 1 x 64
    net = tf_util.conv2d(net, 64, [1, 1], padding='VALID', stride=[1, 1],
                         bn=bn, is_training=is_training, scope='conv2', bn_decay=bn_decay)
    net = tf_util.conv2d(net, 64, [1, 1], padding='VALID', stride=[1, 1],
                         bn=bn, is_training=is_training, scope='conv3', bn_decay=bn_decay)
    net = tf_util.conv2d(net, 128, [1, 1], padding='VALID', stride=[1, 1],
                         bn=bn, is_training=is_training, scope='conv4', bn_decay=bn_decay)
    points_feat1 = tf_util.conv2d(net, 1024, [1, 1], padding='VALID', stride=[1, 1],
                                  bn=bn, is_training=is_training, scope='conv5', bn_decay=bn_decay)  # B x N x 1 x 1024

    # MAX
    pc_feat1 = tf_util.max_pool2d(points_feat1, [num_point, 1], padding='VALID', scope='maxpool1')  # B x 1 x 1 x 1024

    # FC
    pc_feat1 = tf.reshape(pc_feat1, [batch_size, -1])
    pc_feat1 = tf_util.fully_connected(pc_feat1, 256, bn=bn, is_training=is_training, scope='fc1',
                                       bn_decay=bn_decay)
    pc_feat1 = tf_util.fully_connected(pc_feat1, 128, bn=bn, is_training=is_training, scope='fc2',
                                       bn_decay=bn_decay)                                          # B x 128
    print(pc_feat1)

    # CONCAT
    pc_feat1_expand = tf.tile(tf.reshape(pc_feat1, [batch_size, 1, 1, -1]), [1, num_point, 1, 1])
    points_feat1_concat = tf.concat(axis=3, values=[points_feat1, pc_feat1_expand])  # B x N x 1 x 1152

    # CONV
    net = tf_util.conv2d(points_feat1_concat, 512, [1, 1], padding='VALID', stride=[1, 1], bn=bn,
                         is_training=is_training, scope='conv6')
    net = tf_util.conv2d(net, 256, [1, 1], padding='VALID', stride=[1, 1], bn=bn,
                         is_training=is_training, scope='conv7')                             # B x N x 1 x 256
    # net = tf_util.dropout(net, keep_prob=0.7, is_training=is_training, scope='dp1')
    # net = tf_util.conv2d(net, 13, [1, 1], padding='VALID', stride=[1, 1],
    #                      activation_fn=None, scope='conv8')
    # net = tf.squeeze(net, [2])

    return net
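The `# MAX` and `# CONCAT` steps are the core PointNet idea. A max over the point axis yields a global feature that does not depend on point order, and that feature is then tiled back onto every point. The NumPy sketch below shows only these tensor operations, with small made-up channel sizes. The real code uses 1024 per-point channels and a 128-d global feature. A fixed random projection stands in for fc1/fc2 purely for illustration, without their batch norm or activation.

```python
import numpy as np

rng = np.random.default_rng(0)
B, N, C_point, C_global = 2, 8, 16, 4

points_feat = rng.standard_normal((B, N, 1, C_point))   # like points_feat1: B x N x 1 x C

# MAX: pooling over all N points (kernel [num_point, 1]) -> B x 1 x 1 x C
pc_feat = points_feat.max(axis=1, keepdims=True)

# Illustrative stand-in for fc1/fc2: a fixed linear projection to C_global channels.
W = rng.standard_normal((C_point, C_global))
pc_feat = pc_feat.reshape(B, -1) @ W                     # B x C_global

# CONCAT: tile the global feature to every point and append it to the per-point features.
pc_feat_expand = np.tile(pc_feat.reshape(B, 1, 1, -1), (1, N, 1, 1))
concat = np.concatenate([points_feat, pc_feat_expand], axis=3)   # B x N x 1 x (C_point + C_global)

# Permuting the points leaves the max-pooled global feature unchanged.
perm = rng.permutation(N)
assert np.allclose(points_feat[:, perm].max(axis=1), points_feat.max(axis=1))
```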

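tf_util.conv2d is defined as

def conv2d(inputs, num_output_channels, kernel_size, scope, stride=[1, 1], padding='SAME', use_xavier=True, stddev=1e-3, weight_decay=0.0, activation_fn=tf.nn.relu, bn=False, bn_decay=None, is_training=None):

Its parameters correspond one-to-one to the call tf_util.conv2d(F, 128, [1, 1], padding='VALID', stride=[1, 1], bn=False, is_training=is_training, scope='Fsem'). Positionally, inputs=F, num_output_channels=128 and kernel_size=[1, 1]; the remaining arguments are passed by keyword. Because activation_fn defaults to tf.nn.relu, Fsem, Fsim and Fconf are ReLU outputs. ptssemseg_logits and conf_logits instead pass activation_fn=None to obtain raw logits.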
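With kernel_size=[1, 1], stride [1, 1] and padding='VALID' on a B x N x 1 x C tensor, conv2d applies the same weight matrix to every point independently. In other words, it is a fully connected layer shared across points. The NumPy sketch below demonstrates that equivalence. W, b and the loop are illustrative, and tf_util's batch norm and Xavier initialization are left out. The ReLU mirrors the default activation_fn.

```python
import numpy as np

rng = np.random.default_rng(0)
B, N, C_in, C_out = 2, 5, 9, 4
x = rng.standard_normal((B, N, 1, C_in))
W = rng.standard_normal((1, 1, C_in, C_out))   # 1x1 kernel in TF layout [kh, kw, in, out]
b = rng.standard_normal(C_out)

def relu(z):
    return np.maximum(z, 0.)

# Explicit convolution: apply the 1x1 kernel at every (point, width) position.
y_loop = np.zeros((B, N, 1, C_out))
for bi in range(B):
    for n in range(N):
        for w in range(1):
            y_loop[bi, n, w] = relu(x[bi, n, w] @ W[0, 0] + b)

# The same result as one shared matrix multiply applied to all points at once.
y_dense = relu(x @ W[0, 0] + b)
```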

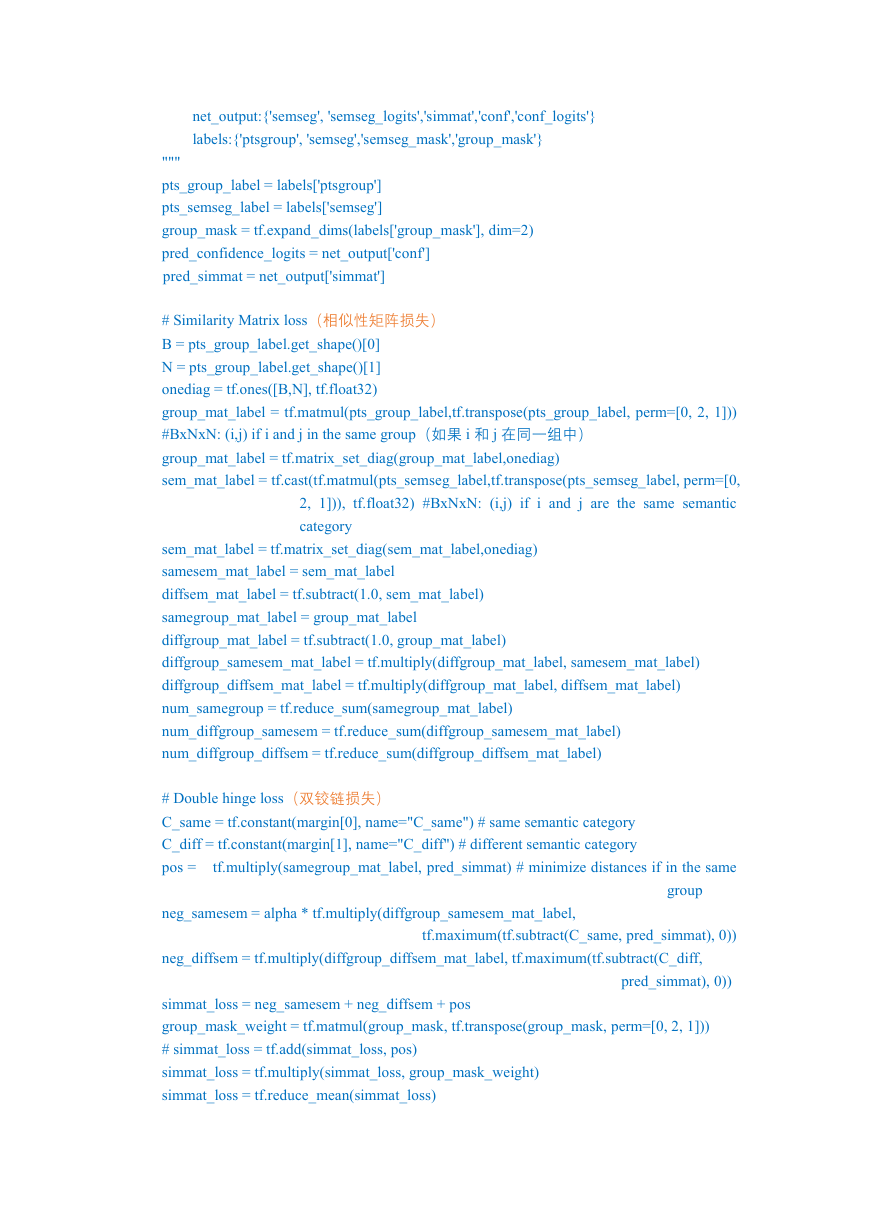

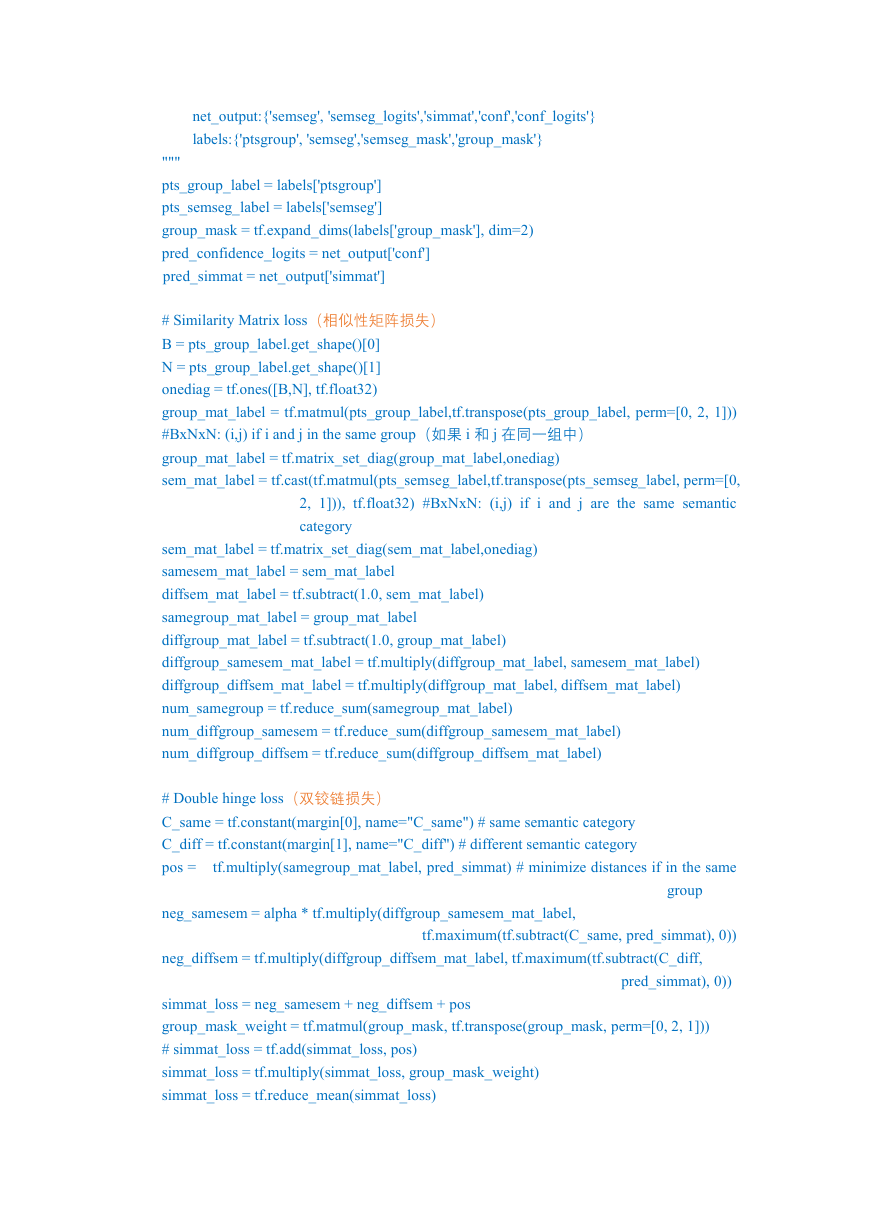

The get_loss called here is the function defined in model.py (below):

def get_loss(net_output, labels, alpha=10., margin=[1., 2.]):
    """
    input:
        net_output:{'semseg', 'semseg_logits','simmat','conf','conf_logits'}
        labels:{'ptsgroup', 'semseg','semseg_mask','group_mask'}
    """
    pts_group_label = labels['ptsgroup']
    pts_semseg_label = labels['semseg']
    group_mask = tf.expand_dims(labels['group_mask'], axis=2)  # B x N x 1 (axis= replaces the deprecated dim=)

    pred_confidence_logits = net_output['conf']
    pred_simmat = net_output['simmat']

    # Similarity Matrix loss
    B = pts_group_label.get_shape()[0]
    N = pts_group_label.get_shape()[1]

    onediag = tf.ones([B, N], tf.float32)

    # BxNxN: (i,j) if i and j in the same group
    group_mat_label = tf.matmul(pts_group_label, tf.transpose(pts_group_label, perm=[0, 2, 1]))
    group_mat_label = tf.matrix_set_diag(group_mat_label, onediag)

    # BxNxN: (i,j) if i and j are the same semantic category
    sem_mat_label = tf.cast(tf.matmul(pts_semseg_label, tf.transpose(pts_semseg_label, perm=[0, 2, 1])),
                            tf.float32)
    sem_mat_label = tf.matrix_set_diag(sem_mat_label, onediag)

    samesem_mat_label = sem_mat_label
    diffsem_mat_label = tf.subtract(1.0, sem_mat_label)

    samegroup_mat_label = group_mat_label
    diffgroup_mat_label = tf.subtract(1.0, group_mat_label)
    diffgroup_samesem_mat_label = tf.multiply(diffgroup_mat_label, samesem_mat_label)
    diffgroup_diffsem_mat_label = tf.multiply(diffgroup_mat_label, diffsem_mat_label)

    num_samegroup = tf.reduce_sum(samegroup_mat_label)
    num_diffgroup_samesem = tf.reduce_sum(diffgroup_samesem_mat_label)
    num_diffgroup_diffsem = tf.reduce_sum(diffgroup_diffsem_mat_label)

    # Double hinge loss
    C_same = tf.constant(margin[0], name="C_same")  # same semantic category
    C_diff = tf.constant(margin[1], name="C_diff")  # different semantic category

    pos = tf.multiply(samegroup_mat_label, pred_simmat)  # minimize distances if in the same group
    neg_samesem = alpha * tf.multiply(diffgroup_samesem_mat_label,
                                      tf.maximum(tf.subtract(C_same, pred_simmat), 0))
    neg_diffsem = tf.multiply(diffgroup_diffsem_mat_label,
                              tf.maximum(tf.subtract(C_diff, pred_simmat), 0))

    simmat_loss = neg_samesem + neg_diffsem + pos
    group_mask_weight = tf.matmul(group_mask, tf.transpose(group_mask, perm=[0, 2, 1]))
    # simmat_loss = tf.add(simmat_loss, pos)
    simmat_loss = tf.multiply(simmat_loss, group_mask_weight)
    simmat_loss = tf.reduce_mean(simmat_loss)
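A NumPy re-expression of the similarity-matrix lines of get_loss, for illustration only; it is not the training code. It rebuilds the same-group and same-semantic matrices from one-hot labels and applies the three hinge terms. The function name `simmat_loss_np`, the four-point toy labels, the distances, alpha = 2 and the margins are all made up.

```python
import numpy as np

def simmat_loss_np(group_onehot, sem_onehot, pred_simmat, alpha, margin, group_mask):
    """Hypothetical NumPy version of the double hinge similarity loss (inputs shaped B x N x ...)."""
    N = group_onehot.shape[1]
    eye = np.eye(N, dtype=bool)
    group_mat = group_onehot @ group_onehot.transpose(0, 2, 1)   # 1 where i, j share a group
    group_mat[:, eye] = 1.0                                       # like tf.matrix_set_diag(..., ones)
    sem_mat = (sem_onehot @ sem_onehot.transpose(0, 2, 1)).astype(np.float64)
    sem_mat[:, eye] = 1.0

    diffgroup = 1.0 - group_mat
    diffgroup_samesem = diffgroup * sem_mat
    diffgroup_diffsem = diffgroup * (1.0 - sem_mat)

    C_same, C_diff = margin
    pos = group_mat * pred_simmat                                  # pull same-group points together
    neg_samesem = alpha * diffgroup_samesem * np.maximum(C_same - pred_simmat, 0)
    neg_diffsem = diffgroup_diffsem * np.maximum(C_diff - pred_simmat, 0)

    mask = group_mask[:, :, None]                                  # B x N x 1
    weight = mask @ mask.transpose(0, 2, 1)                        # B x N x N
    return np.mean((pos + neg_samesem + neg_diffsem) * weight)

group_onehot = np.eye(3)[[0, 0, 1, 2]][None]                 # points 0 and 1 share a group
sem_onehot = np.eye(2, dtype=np.int32)[[0, 0, 0, 1]][None]   # points 0-2 share a class
pred_simmat = np.array([[0., 1., 4., 90.],
                        [1., 0., 12., 50.],
                        [4., 12., 0., 70.],
                        [90., 50., 70., 0.]])[None]
loss = simmat_loss_np(group_onehot, sem_onehot, pred_simmat, alpha=2., margin=[10., 80.],
                      group_mask=np.ones((1, 4)))
print(loss)  # 6.625
```

The pos term contributes 2 (pair 0,1 at distance 1, counted both ways). The same-class, different-group term contributes 2 × 2 × (10 - 4) = 24 (pair 0,2; pair 1,2 is already beyond the margin). The different-class term contributes 2 × ((80 - 50) + (80 - 70)) = 80. The total is 106, and the mean over the 16 entries is 6.625. As with tf.reduce_mean in get_loss, entries zeroed by group_mask still count in the denominator.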
