Red-Black Tree

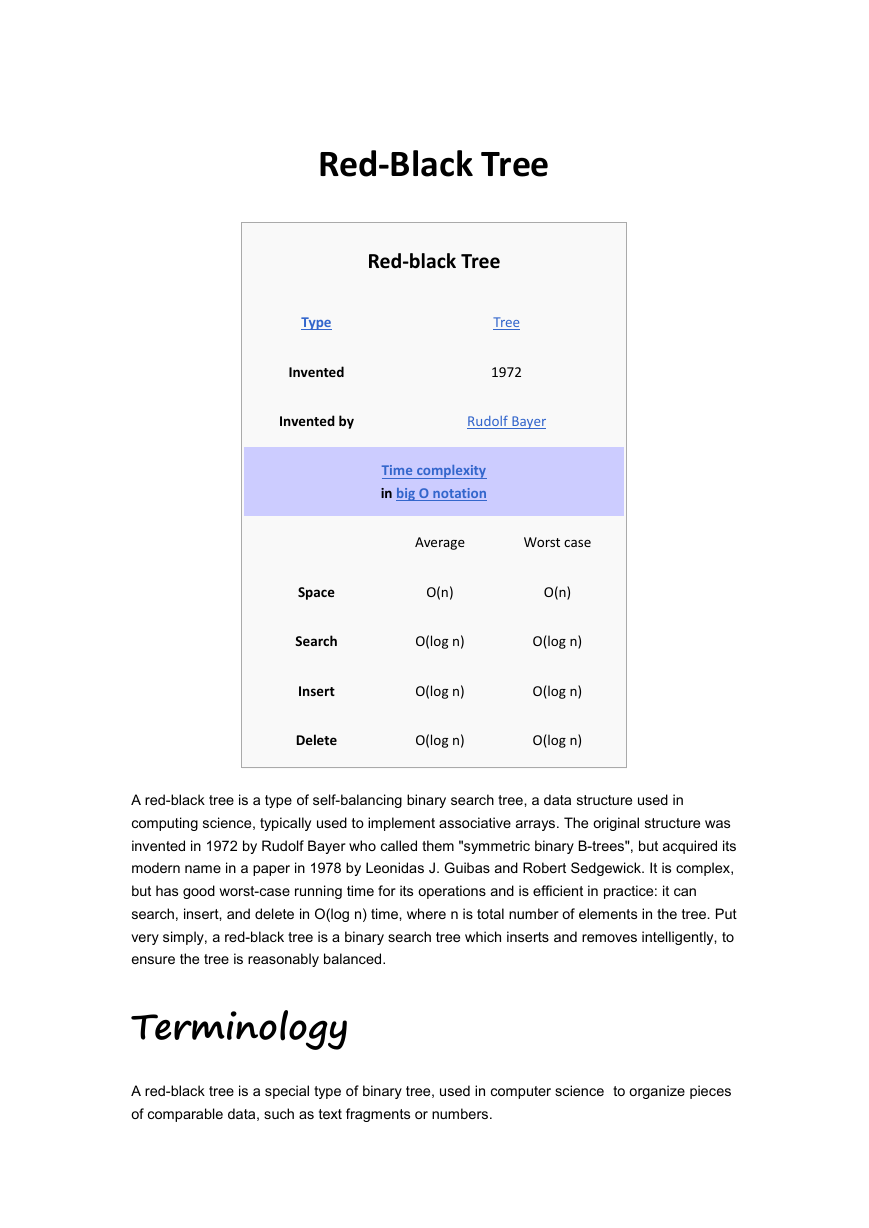

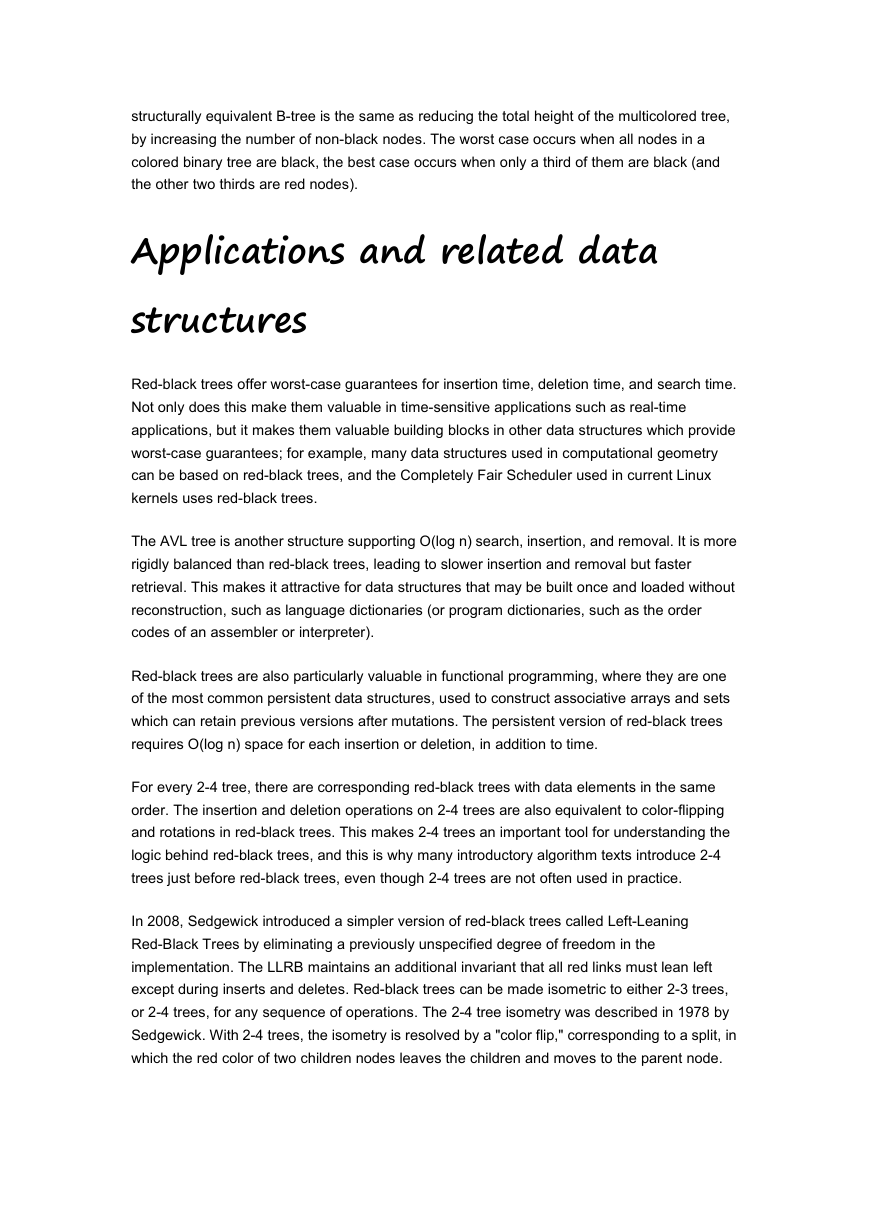

Red-black Tree

Type

Invented

Tree

1972

Invented by

Rudolf Bayer

Time complexity

in big O notation

Average

Worst case

O(n)

O(n)

O(log n)

O(log n)

O(log n)

O(log n)

O(log n)

O(log n)

Space

Search

Insert

Delete

A red-black tree is a type of self-balancing binary search tree, a data structure used in

computing science, typically used to implement associative arrays. The original structure was

invented in 1972 by Rudolf Bayer who called them "symmetric binary B-trees", but acquired its

modern name in a paper in 1978 by Leonidas J. Guibas and Robert Sedgewick. It is complex,

but has good worst-case running time for its operations and is efficient in practice: it can

search, insert, and delete in O(log n) time, where n is total number of elements in the tree. Put

very simply, a red-black tree is a binary search tree which inserts and removes intelligently, to

ensure the tree is reasonably balanced.

Terminology

A red-black tree is a special type of binary tree, used in computer science to organize pieces

of comparable data, such as text fragments or numbers.

�

The leaf nodes of red-black trees do not contain data. These leaves need not be explicit in

computer memory — a null child pointer can encode the fact that this child is a leaf — but it

simplifies some algorithms for operating on red-black trees if the leaves really are explicit

nodes. To save memory, sometimes a single sentinel node performs the role of all leaf nodes;

all references from internal nodes to leaf nodes then point to the sentinel node.

Red-black trees, like all binary search trees, allow efficient in-order traversal in the fashion,

Left-Root-Right, of their elements. The search-time results from the traversal from root to leaf,

and therefore a balanced tree, having the least possible tree height, results in O(log n) search

time.

Properties

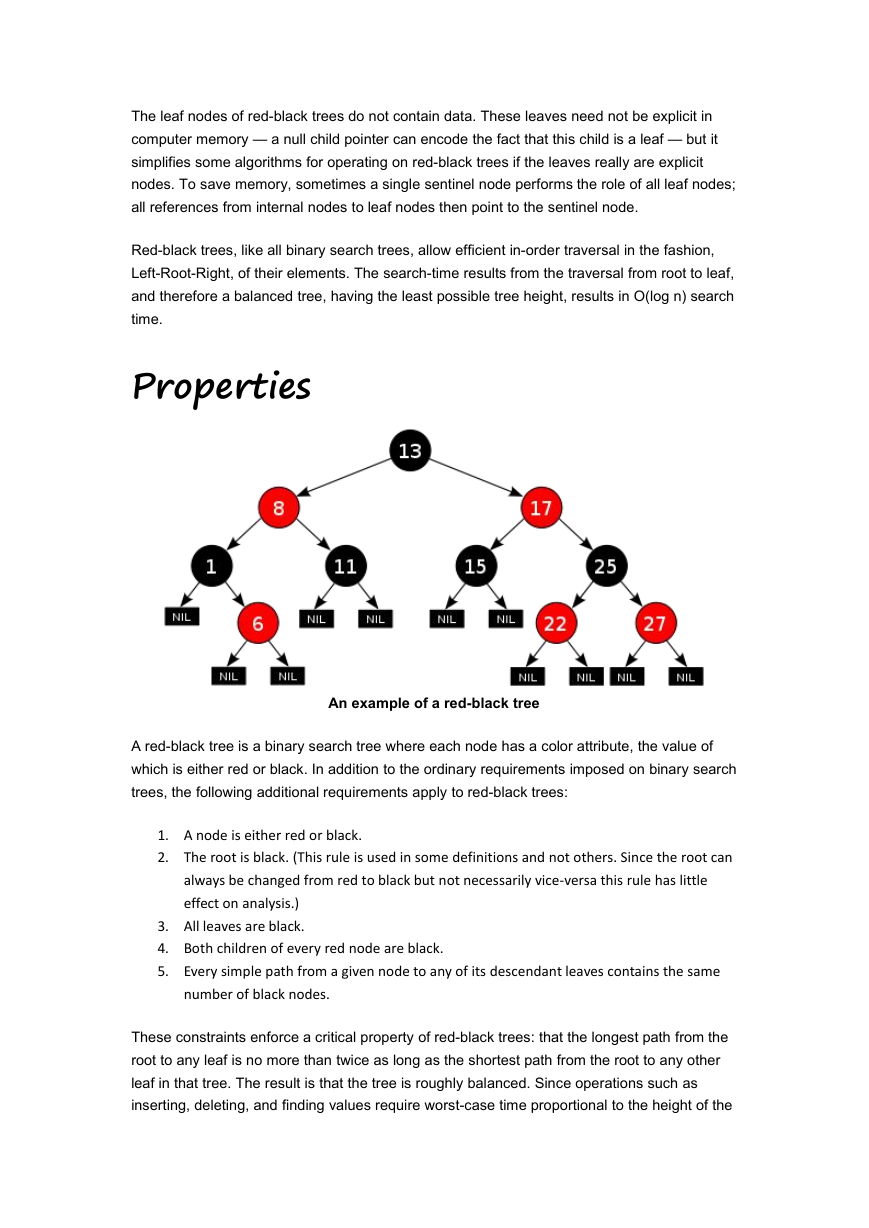

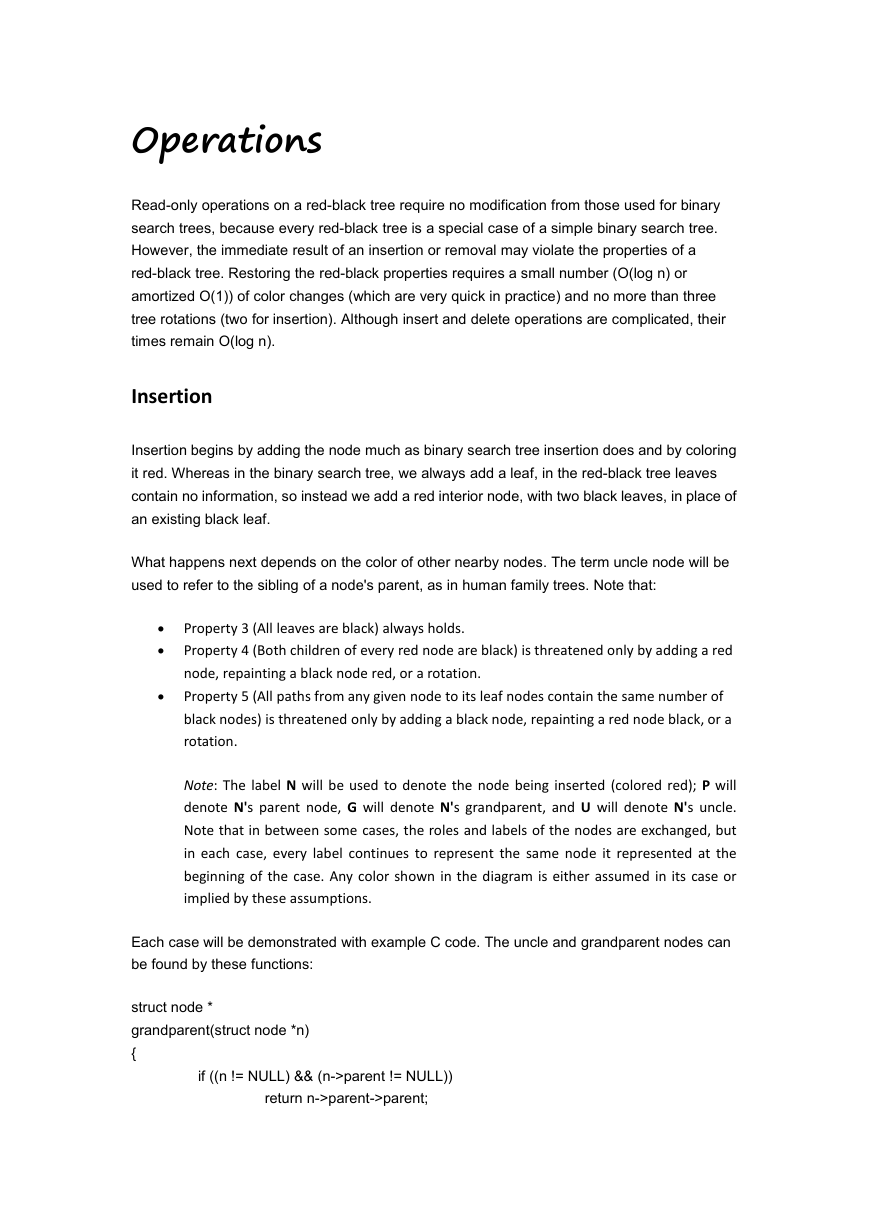

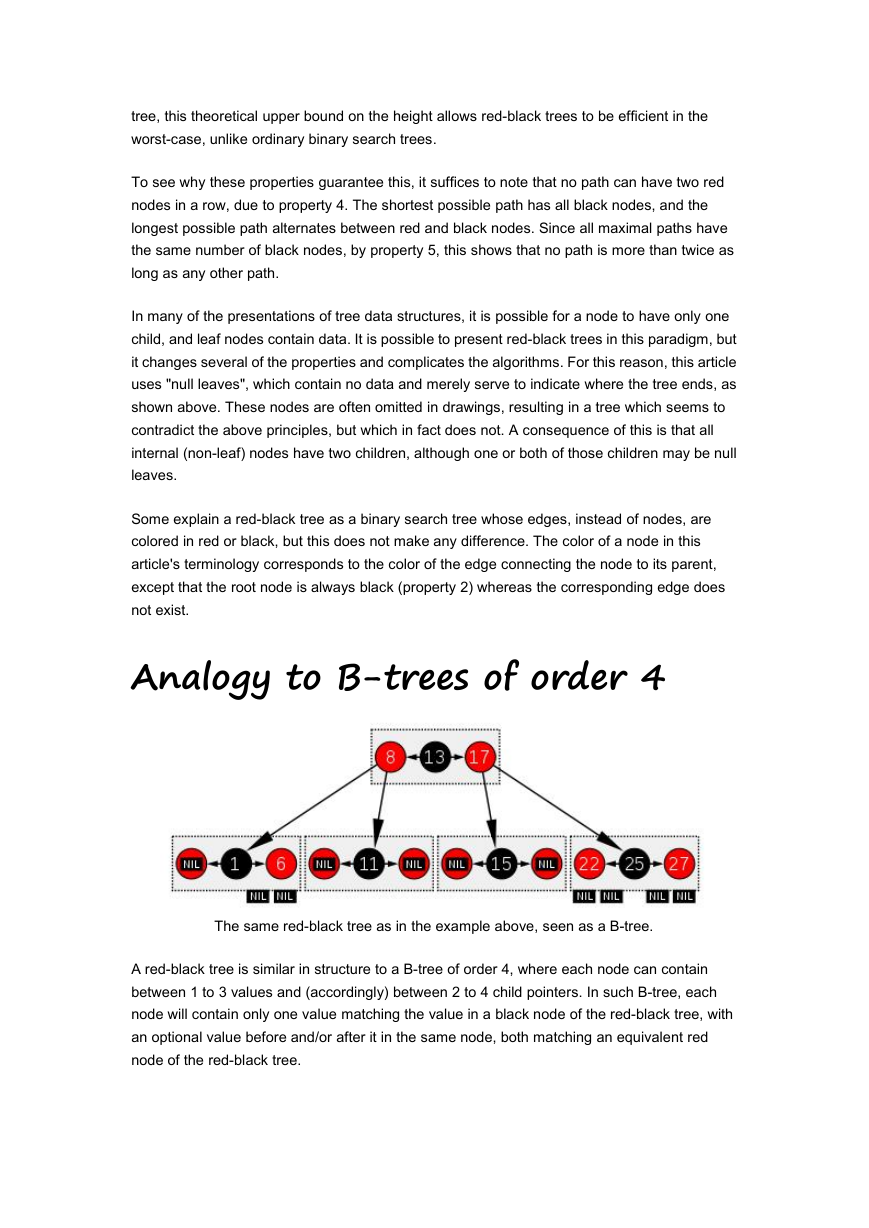

An example of a red-black tree

A red-black tree is a binary search tree where each node has a color attribute, the value of

which is either red or black. In addition to the ordinary requirements imposed on binary search

trees, the following additional requirements apply to red-black trees:

1. A node is either red or black.

2. The root is black. (This rule is used in some definitions and not others. Since the root can

always be changed from red to black but not necessarily vice-versa this rule has little

effect on analysis.)

3. All leaves are black.

4. Both children of every red node are black.

5. Every simple path from a given node to any of its descendant leaves contains the same

number of black nodes.

These constraints enforce a critical property of red-black trees: that the longest path from the

root to any leaf is no more than twice as long as the shortest path from the root to any other

leaf in that tree. The result is that the tree is roughly balanced. Since operations such as

inserting, deleting, and finding values require worst-case time proportional to the height of the

�

tree, this theoretical upper bound on the height allows red-black trees to be efficient in the

worst-case, unlike ordinary binary search trees.

To see why these properties guarantee this, it suffices to note that no path can have two red

nodes in a row, due to property 4. The shortest possible path has all black nodes, and the

longest possible path alternates between red and black nodes. Since all maximal paths have

the same number of black nodes, by property 5, this shows that no path is more than twice as

long as any other path.

In many of the presentations of tree data structures, it is possible for a node to have only one

child, and leaf nodes contain data. It is possible to present red-black trees in this paradigm, but

it changes several of the properties and complicates the algorithms. For this reason, this article

uses "null leaves", which contain no data and merely serve to indicate where the tree ends, as

shown above. These nodes are often omitted in drawings, resulting in a tree which seems to

contradict the above principles, but which in fact does not. A consequence of this is that all

internal (non-leaf) nodes have two children, although one or both of those children may be null

leaves.

Some explain a red-black tree as a binary search tree whose edges, instead of nodes, are

colored in red or black, but this does not make any difference. The color of a node in this

article's terminology corresponds to the color of the edge connecting the node to its parent,

except that the root node is always black (property 2) whereas the corresponding edge does

not exist.

Analogy to B-trees of order 4

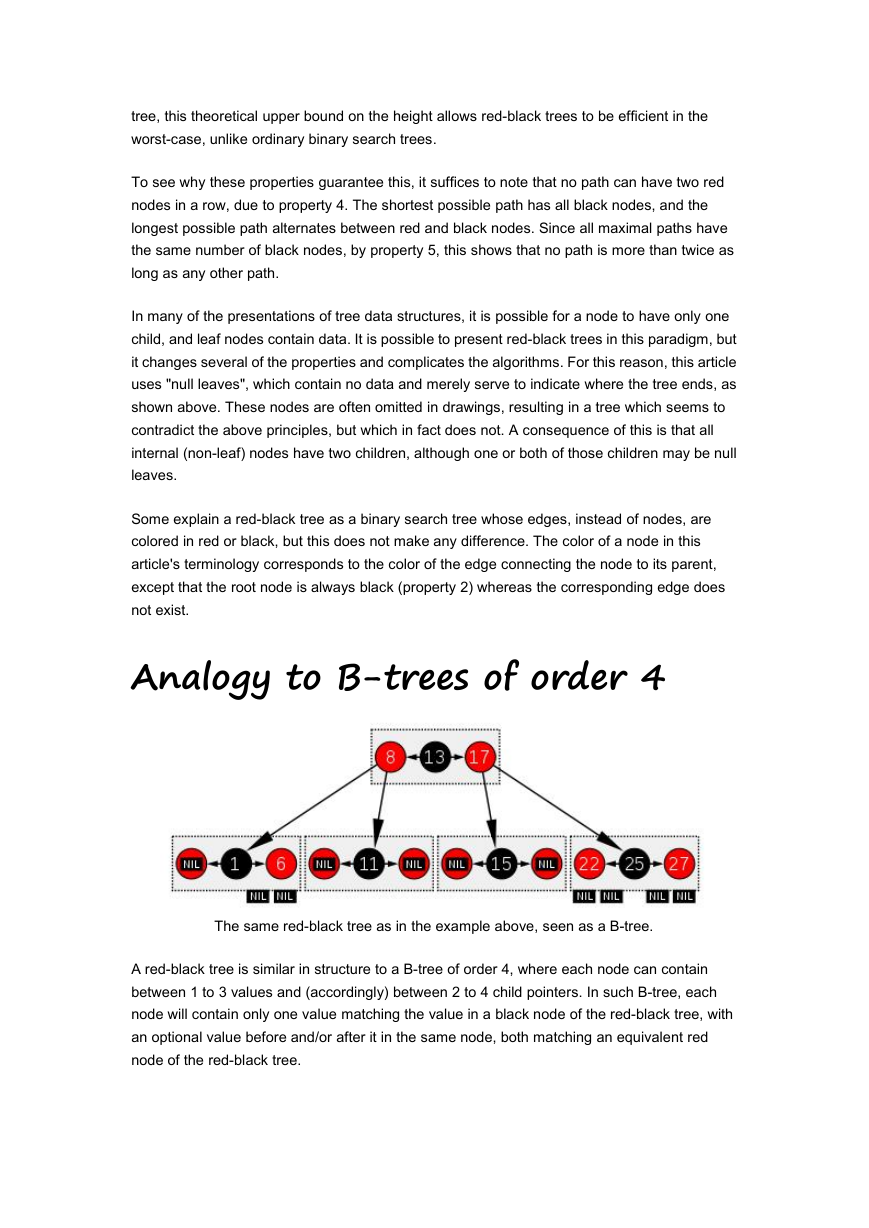

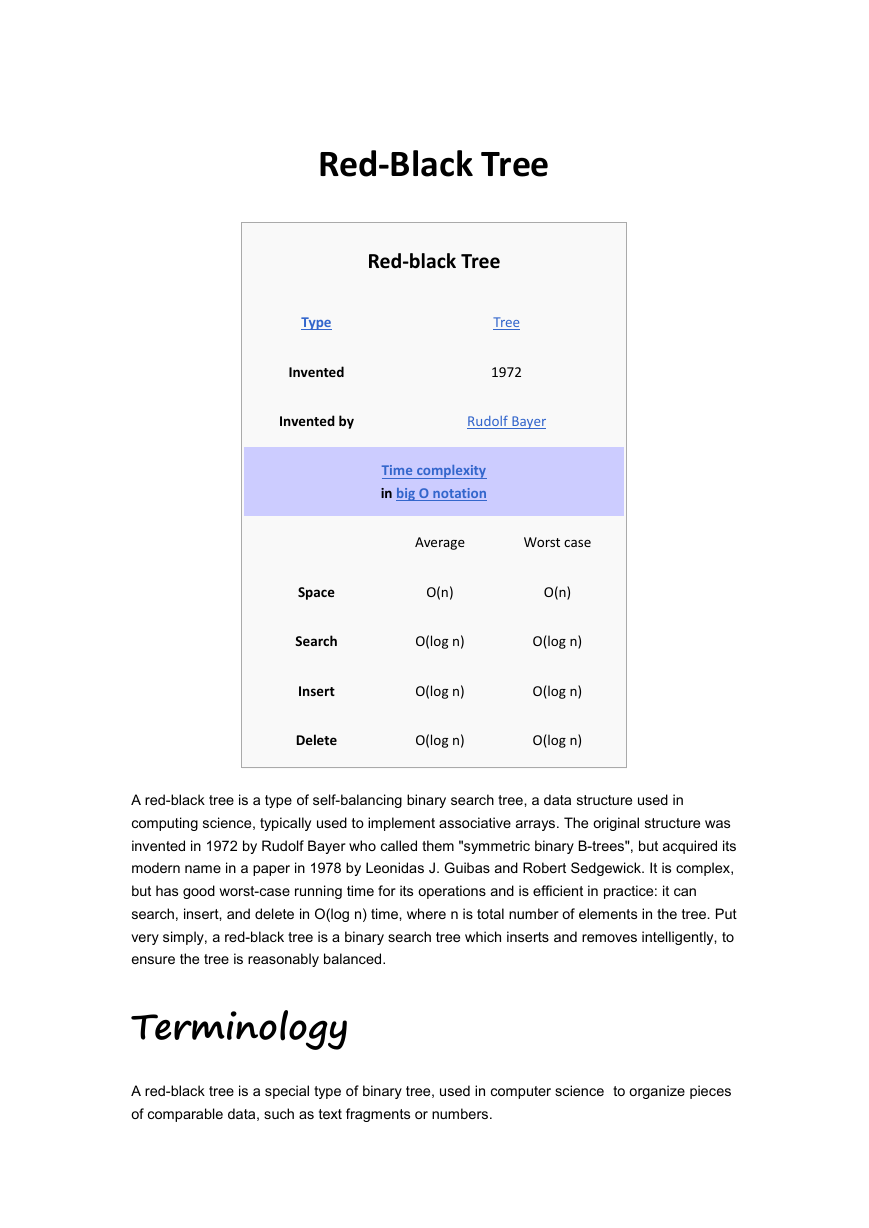

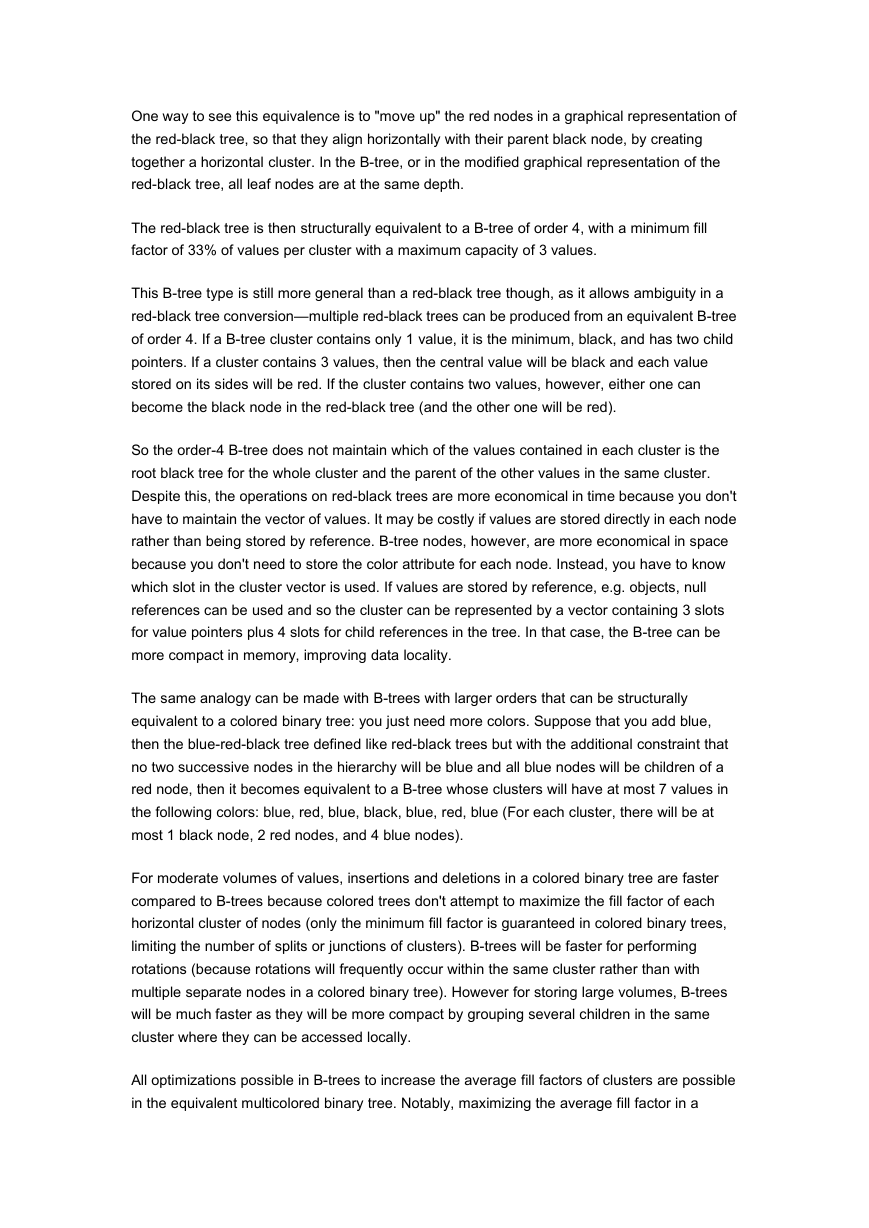

The same red-black tree as in the example above, seen as a B-tree.

A red-black tree is similar in structure to a B-tree of order 4, where each node can contain

between 1 to 3 values and (accordingly) between 2 to 4 child pointers. In such B-tree, each

node will contain only one value matching the value in a black node of the red-black tree, with

an optional value before and/or after it in the same node, both matching an equivalent red

node of the red-black tree.

�

One way to see this equivalence is to "move up" the red nodes in a graphical representation of

the red-black tree, so that they align horizontally with their parent black node, by creating

together a horizontal cluster. In the B-tree, or in the modified graphical representation of the

red-black tree, all leaf nodes are at the same depth.

The red-black tree is then structurally equivalent to a B-tree of order 4, with a minimum fill

factor of 33% of values per cluster with a maximum capacity of 3 values.

This B-tree type is still more general than a red-black tree though, as it allows ambiguity in a

red-black tree conversion—multiple red-black trees can be produced from an equivalent B-tree

of order 4. If a B-tree cluster contains only 1 value, it is the minimum, black, and has two child

pointers. If a cluster contains 3 values, then the central value will be black and each value

stored on its sides will be red. If the cluster contains two values, however, either one can

become the black node in the red-black tree (and the other one will be red).

So the order-4 B-tree does not maintain which of the values contained in each cluster is the

root black tree for the whole cluster and the parent of the other values in the same cluster.

Despite this, the operations on red-black trees are more economical in time because you don't

have to maintain the vector of values. It may be costly if values are stored directly in each node

rather than being stored by reference. B-tree nodes, however, are more economical in space

because you don't need to store the color attribute for each node. Instead, you have to know

which slot in the cluster vector is used. If values are stored by reference, e.g. objects, null

references can be used and so the cluster can be represented by a vector containing 3 slots

for value pointers plus 4 slots for child references in the tree. In that case, the B-tree can be

more compact in memory, improving data locality.

The same analogy can be made with B-trees with larger orders that can be structurally

equivalent to a colored binary tree: you just need more colors. Suppose that you add blue,

then the blue-red-black tree defined like red-black trees but with the additional constraint that

no two successive nodes in the hierarchy will be blue and all blue nodes will be children of a

red node, then it becomes equivalent to a B-tree whose clusters will have at most 7 values in

the following colors: blue, red, blue, black, blue, red, blue (For each cluster, there will be at

most 1 black node, 2 red nodes, and 4 blue nodes).

For moderate volumes of values, insertions and deletions in a colored binary tree are faster

compared to B-trees because colored trees don't attempt to maximize the fill factor of each

horizontal cluster of nodes (only the minimum fill factor is guaranteed in colored binary trees,

limiting the number of splits or junctions of clusters). B-trees will be faster for performing

rotations (because rotations will frequently occur within the same cluster rather than with

multiple separate nodes in a colored binary tree). However for storing large volumes, B-trees

will be much faster as they will be more compact by grouping several children in the same

cluster where they can be accessed locally.

All optimizations possible in B-trees to increase the average fill factors of clusters are possible

in the equivalent multicolored binary tree. Notably, maximizing the average fill factor in a

�

structurally equivalent B-tree is the same as reducing the total height of the multicolored tree,

by increasing the number of non-black nodes. The worst case occurs when all nodes in a

colored binary tree are black, the best case occurs when only a third of them are black (and

the other two thirds are red nodes).

Applications and related data

structures

Red-black trees offer worst-case guarantees for insertion time, deletion time, and search time.

Not only does this make them valuable in time-sensitive applications such as real-time

applications, but it makes them valuable building blocks in other data structures which provide

worst-case guarantees; for example, many data structures used in computational geometry

can be based on red-black trees, and the Completely Fair Scheduler used in current Linux

kernels uses red-black trees.

The AVL tree is another structure supporting O(log n) search, insertion, and removal. It is more

rigidly balanced than red-black trees, leading to slower insertion and removal but faster

retrieval. This makes it attractive for data structures that may be built once and loaded without

reconstruction, such as language dictionaries (or program dictionaries, such as the order

codes of an assembler or interpreter).

Red-black trees are also particularly valuable in functional programming, where they are one

of the most common persistent data structures, used to construct associative arrays and sets

which can retain previous versions after mutations. The persistent version of red-black trees

requires O(log n) space for each insertion or deletion, in addition to time.

For every 2-4 tree, there are corresponding red-black trees with data elements in the same

order. The insertion and deletion operations on 2-4 trees are also equivalent to color-flipping

and rotations in red-black trees. This makes 2-4 trees an important tool for understanding the

logic behind red-black trees, and this is why many introductory algorithm texts introduce 2-4

trees just before red-black trees, even though 2-4 trees are not often used in practice.

In 2008, Sedgewick introduced a simpler version of red-black trees called Left-Leaning

Red-Black Trees by eliminating a previously unspecified degree of freedom in the

implementation. The LLRB maintains an additional invariant that all red links must lean left

except during inserts and deletes. Red-black trees can be made isometric to either 2-3 trees,

or 2-4 trees, for any sequence of operations. The 2-4 tree isometry was described in 1978 by

Sedgewick. With 2-4 trees, the isometry is resolved by a "color flip," corresponding to a split, in

which the red color of two children nodes leaves the children and moves to the parent node.

�

Operations

Read-only operations on a red-black tree require no modification from those used for binary

search trees, because every red-black tree is a special case of a simple binary search tree.

However, the immediate result of an insertion or removal may violate the properties of a

red-black tree. Restoring the red-black properties requires a small number (O(log n) or

amortized O(1)) of color changes (which are very quick in practice) and no more than three

tree rotations (two for insertion). Although insert and delete operations are complicated, their

times remain O(log n).

Insertion

Insertion begins by adding the node much as binary search tree insertion does and by coloring

it red. Whereas in the binary search tree, we always add a leaf, in the red-black tree leaves

contain no information, so instead we add a red interior node, with two black leaves, in place of

an existing black leaf.

What happens next depends on the color of other nearby nodes. The term uncle node will be

used to refer to the sibling of a node's parent, as in human family trees. Note that:

Property 3 (All leaves are black) always holds.

Property 4 (Both children of every red node are black) is threatened only by adding a red

node, repainting a black node red, or a rotation.

Property 5 (All paths from any given node to its leaf nodes contain the same number of

black nodes) is threatened only by adding a black node, repainting a red node black, or a

rotation.

Note: The label N will be used to denote the node being inserted (colored red); P will

denote N's parent node, G will denote N's grandparent, and U will denote N's uncle.

Note that in between some cases, the roles and labels of the nodes are exchanged, but

in each case, every label continues to represent the same node it represented at the

beginning of the case. Any color shown in the diagram is either assumed in its case or

implied by these assumptions.

Each case will be demonstrated with example C code. The uncle and grandparent nodes can

be found by these functions:

struct node *

grandparent(struct node *n)

{

if ((n != NULL) && (n->parent != NULL))

return n->parent->parent;

�

else

return NULL;

}

struct node *

uncle(struct node *n)

{

struct node *g = grandparent(n);

if (g == NULL)

return NULL; // No grandparent means no uncle

if (n->parent == g->left)

return g->right;

else

return g->left;

}

Case 1: The new node N is at the root of the tree. In this case, it is repainted black to satisfy

Property 2 (The root is black). Since this adds one black node to every path at once, Property

5 (All paths from any given node to its leaf nodes contain the same number of black nodes) is

not violated.

void

insert_case1(struct node *n)

{

if (n->parent == NULL)

n->color = BLACK;

else

insert_case2(n);

}

Case 2: The new node's parent P is black, so Property 4 (Both children of every red node are

black) is not invalidated. In this case, the tree is still valid. Property 5 (All paths from any given

node to its leaf nodes contain the same number of black nodes) is not threatened, because the

new node N has two black leaf children, but because N is red, the paths through each of its

children have the same number of black nodes as the path through the leaf it replaced, which

was black, and so this property remains satisfied.

void

insert_case2(struct node *n)

{

if (n->parent->color == BLACK)

return; /* Tree is still valid */

else

insert_case3(n);

}

�

Note: In the following cases it can be assumed that N has a grandparent node G, because

its parent P is red, and if it were the root, it would be black. Thus, N also has an uncle

node U, although it may be a leaf in cases 4 and 5.

Case 3: If both the parent P and the uncle U are red, then both nodes can be repainted

black and the grandparent G becomes red (to maintain Property 5 (All paths from any

given node to its leaf nodes contain the same number of black nodes)). Now, the new

red node N has a black parent. Since any path through the parent or uncle must pass

through the grandparent, the number of black nodes on these paths has not changed.

However, the grandparent G may now violate properties 2 (The root is black) or 4

(Both children of every red node are black) (property 4 possibly being violated since G

may have a red parent). To fix this, this entire procedure is recursively performed on G

from case 1. Note that this is a tail-recursive call, so it could be rewritten as a loop;

since this is the only loop, and any rotations occur after this loop, this proves that a

constant number of rotations occur.

void

insert_case3(struct node *n)

{

struct node *u = uncle(n), *g;

if ((u != NULL) && (u->color == RED)) {

n->parent->color = BLACK;

u->color = BLACK;

g = grandparent(n);

g->color = RED;

insert_case1(g);

} else {

}

insert_case4(n);

}

Note: In the remaining cases, it is assumed that the parent node P is the left child of its

parent. If it is the right child, left and right should be reversed throughout cases 4 and 5.

The code samples take care of this.

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc