Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network

Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham,

Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, Wenzhe Shi

Twitter

{cledig,ltheis,fhuszar,jcaballero,aacostadiaz,aaitken,atejani,jtotz,zehanw,wshi}@twitter.com

Abstract

Despite the breakthroughs in accuracy and speed of single image super-resolution using faster and deeper convolutional neural networks, one central problem remains largely unsolved: how do we recover the finer texture details when we super-resolve at large upscaling factors? The behavior of optimization-based super-resolution methods is principally driven by the choice of the objective function. Recent work has largely focused on minimizing the mean squared reconstruction error. The resulting estimates have high peak signal-to-noise ratios, but they are often lacking high-frequency details and are perceptually unsatisfying in the sense that they fail to match the fidelity expected at the higher resolution. In this paper, we present SRGAN, a generative adversarial network (GAN) for image super-resolution (SR). To our knowledge, it is the first framework capable of inferring photo-realistic natural images for 4× upscaling factors. To achieve this, we propose a perceptual loss function which consists of an adversarial loss and a content loss. The adversarial loss pushes our solution to the natural image manifold using a discriminator network that is trained to differentiate between the super-resolved images and original photo-realistic images. In addition, we use a content loss motivated by perceptual similarity instead of similarity in pixel space. Our deep residual network is able to recover photo-realistic textures from heavily downsampled images on public benchmarks. An extensive mean-opinion-score (MOS) test shows hugely significant gains in perceptual quality using SRGAN. The MOS scores obtained with SRGAN are closer to those of the original high-resolution images than to those obtained with any state-of-the-art method.

1. Introduction

The highly challenging task of estimating a high-resolution (HR) image from its low-resolution (LR) counterpart is referred to as super-resolution (SR). SR received substantial attention from within the computer vision research community and has a wide range of applications [62, 70, 42].

Figure 1: Super-resolved image (left: 4× SRGAN, proposed) is almost indistinguishable from original (right). [4× upscaling]

The ill-posed nature of the underdetermined SR problem

is particularly pronounced for high upscaling factors, for

which texture detail in the reconstructed SR images is

typically absent. The optimization target of supervised

SR algorithms is commonly the minimization of the mean

squared error (MSE) between the recovered HR image

and the ground truth. This is convenient as minimizing

MSE also maximizes the peak signal-to-noise ratio (PSNR),

which is a common measure used to evaluate and compare

SR algorithms [60]. However, the ability of MSE (and

PSNR) to capture perceptually relevant differences, such

as high texture detail, is very limited as they are defined

based on pixel-wise image differences [59, 57, 25]. This

is illustrated in Figure 2, where highest PSNR does not necessarily reflect the perceptually better SR result. The perceptual difference between the super-resolved and original image means that the recovered image is not photo-realistic as defined by Ferwerda [15].

Figure 2: From left to right: bicubic interpolation (21.59dB/0.6423), deep residual network optimized for MSE (SRResNet, 23.53dB/0.7832), deep residual generative adversarial network optimized for a loss more sensitive to human perception (SRGAN, 21.15dB/0.6868), original HR image. Corresponding PSNR and SSIM are shown in brackets. [4× upscaling]
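The link between MSE and PSNR described in the introduction can be made concrete with a small sketch. It assumes 8-bit images with a peak value of 255; the function name `psnr_from_mse` is illustrative and does not come from the paper.

```python
import math

def psnr_from_mse(mse, peak=255.0):
    """PSNR in dB for a given mean squared error (assumes peak signal value `peak`)."""
    if mse <= 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

# PSNR is a strictly decreasing function of MSE, so lowering MSE always raises PSNR.
for mse in (400.0, 100.0, 25.0):
    print(f"MSE = {mse:6.1f} -> PSNR = {psnr_from_mse(mse):.2f} dB")
```

Because the mapping is monotonic, a network trained to minimize MSE is implicitly trained to maximize PSNR, which says nothing about whether the result looks realistic.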

In this work we propose a super-resolution generative

adversarial network (SRGAN) for which we employ a

deep residual network (ResNet) with skip-connection and

diverge from MSE as the sole optimization target. Different

from previous works, we define a novel perceptual loss us-

ing high-level feature maps of the VGG network [48, 32, 4]

combined with a discriminator that encourages solutions

perceptually hard to distinguish from the HR reference

images. An example photo-realistic image that was super-

resolved with a 4× upscaling factor is shown in Figure 1.

1.1. Related work

1.1.1 Image super-resolution

Recent overview articles on image SR include Nasrollahi

and Moeslund [42] or Yang et al. [60]. Here we will focus

on single image super-resolution (SISR) and will not further

discuss approaches that recover HR images from multiple

images [3, 14].

Prediction-based methods were among the first methods

to tackle SISR. While these filtering approaches, e.g. linear,

bicubic or Lanczos [13] filtering, can be very fast, they

oversimplify the SISR problem and usually yield solutions

with overly smooth textures. Methods that put particular

focus on edge-preservation have been proposed [1, 38].

More powerful approaches aim to establish a complex

mapping between low- and high-resolution image informa-

tion and usually rely on training data. Many methods that

are based on example-pairs rely on LR training patches for

which the corresponding HR counterparts are known. Early

work was presented by Freeman et al. [17, 16]. Related ap-

proaches to the SR problem originate in compressed sensing

[61, 11, 68]. In Glasner et al. [20] the authors exploit patch

redundancies across scales within the image to drive the SR.

This paradigm of self-similarity is also employed in Huang

et al. [30], where self dictionaries are extended by further

allowing for small transformations and shape variations. Gu

et al. [24] proposed a convolutional sparse coding approach

that improves consistency by processing the whole image

rather than overlapping patches.

To reconstruct realistic texture detail while avoiding

edge artifacts, Tai et al. [51] combine an edge-directed SR

algorithm based on a gradient profile prior [49] with the

benefits of learning-based detail synthesis. Zhang et al. [69]

propose a multi-scale dictionary to capture redundancies of

similar image patches at different scales. To super-resolve

landmark images, Yue et al. [66] retrieve correlating HR

images with similar content from the web and propose a

structure-aware matching criterion for alignment.

Neighborhood embedding approaches upsample a LR

image patch by finding similar LR training patches in a low

dimensional manifold and combining their corresponding

HR patches for reconstruction [53, 54]. In Kim and Kwon

[34] the authors emphasize the tendency of neighborhood

approaches to overfit and formulate a more general map of

example pairs using kernel ridge regression. The regression

problem can also be solved with Gaussian process regres-

sion [26], trees [45] or Random Forests [46]. In Dai et al.

[5] a multitude of patch-specific regressors is learned and

the most appropriate regressors selected during testing.

Recently convolutional neural network (CNN) based SR algorithms have shown excellent performance. In Wang et al. [58] the authors encode a sparse representation prior into their feed-forward network architecture based on

the learned iterative shrinkage and thresholding algorithm

(LISTA) [22]. Dong et al. [8, 9] used bicubic interpolation

to upscale an input image and trained a three layer deep

fully convolutional network end-to-end to achieve state-

of-the-art SR performance. Subsequently, it was shown

that enabling the network to learn the upscaling filters

directly can further increase performance both in terms of

accuracy and speed [10, 47, 56]. With their deeply-recursive

convolutional network (DRCN), Kim et al. [33] presented

a highly performant architecture that allows for long-range

pixel dependencies while keeping the number of model

parameters small. Of particular relevance for our paper are the works by Johnson et al. [32] and Bruna et al. [4], who rely on a loss function closer to perceptual similarity to recover visually more convincing HR images.

1.1.2 Design of convolutional neural networks

The state of the art for many computer vision problems is

meanwhile set by specifically designed CNN architectures

following the success of the work by Krizhevsky et al. [36].

It was shown that deeper network architectures can be

difficult to train but have the potential to substantially

increase the network’s accuracy as they allow modeling

mappings of very high complexity [48, 50].

To effi-

ciently train these deeper network architectures, batch-

normalization [31] is often used to counteract the internal

covariate shift. Deeper network architectures have also

been shown to increase performance for SISR, e.g. Kim et

al. [33] formulate a recursive CNN and present state-of-the-

art results. Another powerful design choice that eases the

training of deep CNNs is the recently introduced concept of

residual blocks [28] and skip-connections [29, 33]. Skip-

connections relieve the network architecture of modeling

the identity mapping that is trivial in nature, however, po-

tentially non-trivial to represent with convolutional kernels.

In the context of SISR it was also shown that learning

upscaling filters is beneficial in terms of accuracy and speed

[10, 47, 56]. This is an improvement over Dong et al. [9]

where bicubic interpolation is employed to upscale the LR

observation before feeding the image to the CNN.

1.1.3 Loss functions

Pixel-wise loss functions such as MSE struggle to handle

the uncertainty inherent in recovering lost high-frequency

details such as texture: minimizing MSE encourages find-

ing pixel-wise averages of plausible solutions which are

typically overly-smooth and thus have poor perceptual qual-

ity [41, 32, 12, 4]. Reconstructions of varying perceptual

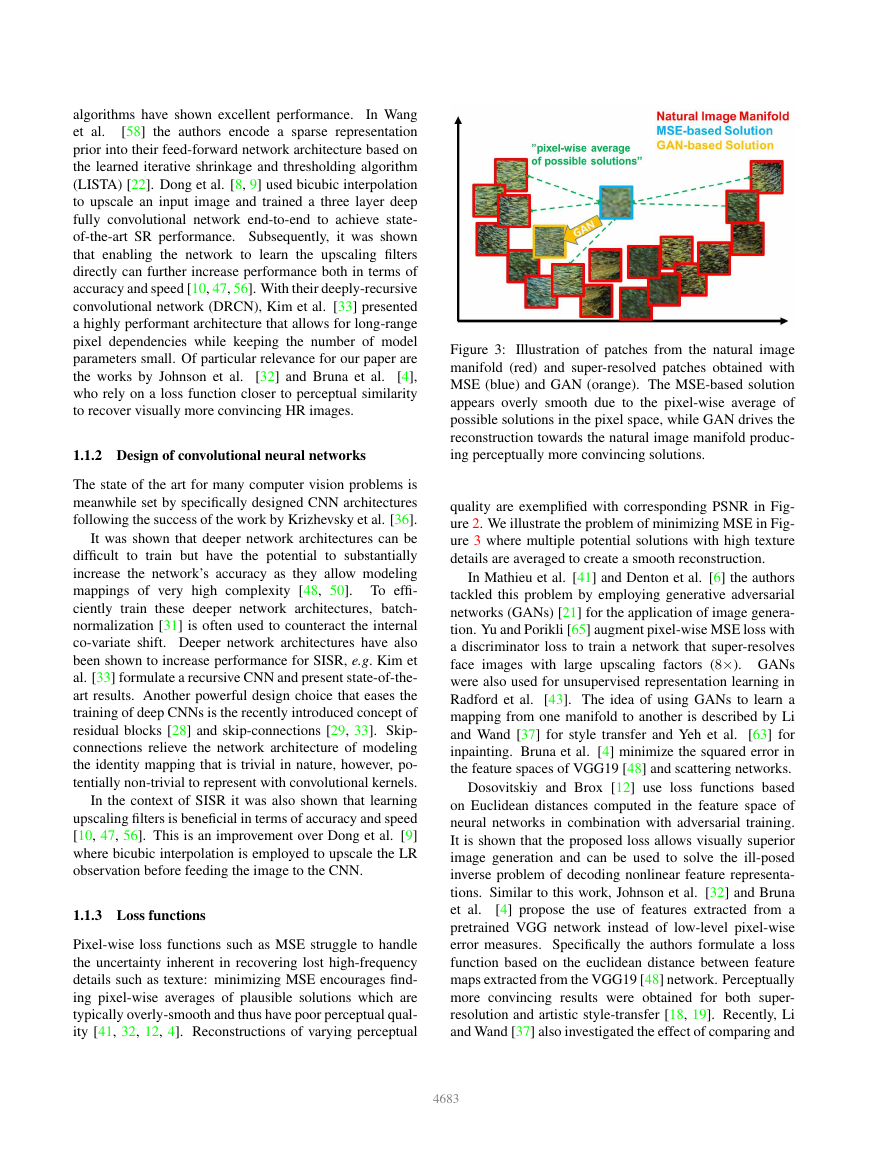

Figure 3:

Illustration of patches from the natural image

manifold (red) and super-resolved patches obtained with

MSE (blue) and GAN (orange). The MSE-based solution

appears overly smooth due to the pixel-wise average of

possible solutions in the pixel space, while GAN drives the

reconstruction towards the natural image manifold produc-

ing perceptually more convincing solutions.

quality are exemplified with corresponding PSNR in Fig-

ure 2. We illustrate the problem of minimizing MSE in Fig-

ure 3 where multiple potential solutions with high texture

details are averaged to create a smooth reconstruction.
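The averaging effect of MSE can be reproduced with a toy example. The sketch below assumes three equally likely 1-D patches that contain the same sharp edge at different positions; the patches and the helper `expected_mse` are hypothetical and chosen only for illustration.

```python
def expected_mse(estimate, candidates):
    """Average squared error of `estimate` against equally likely candidate patches."""
    total = 0.0
    for cand in candidates:
        total += sum((e - c) ** 2 for e, c in zip(estimate, cand)) / len(cand)
    return total / len(candidates)

# Three equally plausible 1-D patches: the same sharp edge at different positions.
candidates = [
    [0.0, 0.0, 1.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 0.0, 1.0, 1.0],
]

# The pixel-wise mean is a smooth ramp instead of a sharp edge.
mean_patch = [sum(col) / len(col) for col in zip(*candidates)]

print("mean patch:", [round(v, 2) for v in mean_patch])
print("expected MSE of mean patch:", round(expected_mse(mean_patch, candidates), 4))
for cand in candidates:
    print("expected MSE of sharp patch:", round(expected_mse(cand, candidates), 4))
```

The blurred mean patch attains a lower expected MSE than any of the sharp candidates, even though it is the only one that does not look like a plausible edge.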

In Mathieu et al. [41] and Denton et al. [6] the authors

tackled this problem by employing generative adversarial

networks (GANs) [21] for the application of image genera-

tion. Yu and Porikli [65] augment pixel-wise MSE loss with

a discriminator loss to train a network that super-resolves

face images with large upscaling factors (8×). GANs

were also used for unsupervised representation learning in

Radford et al.

[43]. The idea of using GANs to learn a

mapping from one manifold to another is described by Li

and Wand [37] for style transfer and Yeh et al.

[63] for

inpainting. Bruna et al. [4] minimize the squared error in

the feature spaces of VGG19 [48] and scattering networks.

Dosovitskiy and Brox [12] use loss functions based

on Euclidean distances computed in the feature space of

neural networks in combination with adversarial training.

It is shown that the proposed loss allows visually superior

image generation and can be used to solve the ill-posed

inverse problem of decoding nonlinear feature representa-

tions. Similar to this work, Johnson et al. [32] and Bruna

et al.

[4] propose the use of features extracted from a

pretrained VGG network instead of low-level pixel-wise

error measures. Specifically the authors formulate a loss

function based on the euclidean distance between feature

maps extracted from the VGG19 [48] network. Perceptually

more convincing results were obtained for both super-

resolution and artistic style-transfer [18, 19]. Recently, Li

and Wand [37] also investigated the effect of comparing and


blending patches in pixel or VGG feature space.

1.2. Contribution

GANs provide a powerful framework for generating

plausible-looking natural images with high perceptual qual-

ity. The GAN procedure encourages the reconstructions

to move towards regions of the search space with high

probability of containing photo-realistic images and thus

closer to the natural image manifold as shown in Figure 3.

In this paper we describe the first very deep ResNet

[28, 29] architecture using the concept of GANs to form a

perceptual loss function for photo-realistic SISR. Our main

contributions are:

• We set a new state of the art for image SR with

high upscaling factors (4×) as measured by PSNR and

structural similarity (SSIM) with our 16 blocks deep

ResNet (SRResNet) optimized for MSE.

• We propose SRGAN which is a GAN-based network

optimized for a new perceptual loss. Here we replace

the MSE-based content loss with a loss calculated on

feature maps of the VGG network [48], which are

more invariant to changes in pixel space [37].

• We confirm with an extensive mean opinion score

(MOS) test on images from three public benchmark

datasets that SRGAN is the new state of the art, by a

large margin, for the estimation of photo-realistic SR

images with high upscaling factors (4×).

We describe the network architecture and the perceptual

loss in Section 2. A quantitative evaluation on public bench-

mark datasets as well as visual illustrations are provided in

Section 3. The paper concludes with a discussion in Section

4 and concluding remarks in Section 5.

2. Method

In SISR the aim is to estimate a high-resolution, super-resolved image $I^{SR}$ from a low-resolution input image $I^{LR}$. Here $I^{LR}$ is the low-resolution version of its high-resolution counterpart $I^{HR}$. The high-resolution images are only available during training. In training, $I^{LR}$ is obtained by applying a Gaussian filter to $I^{HR}$ followed by a downsampling operation with downsampling factor $r$. For an image with $C$ color channels, we describe $I^{LR}$ by a real-valued tensor of size $W \times H \times C$ and $I^{HR}$, $I^{SR}$ by $rW \times rH \times C$ respectively.
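The relation between the HR and LR sizes can be sketched for a single channel as follows. The filter width (`sigma`, `radius`), the border handling and all function names are assumptions made for this example; the text only states that a Gaussian filter is followed by downsampling with factor r.

```python
import math

def gaussian_kernel_1d(sigma, radius):
    """Normalized 1-D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve every row with `kernel`, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [
        [
            sum(kernel[k + radius] * row[min(max(x + k, 0), width - 1)]
                for k in range(-radius, radius + 1))
            for x in range(width)
        ]
        for row in image
    ]

def transpose(image):
    return [list(col) for col in zip(*image)]

def make_lr(hr, r, sigma=1.0, radius=2):
    """Gaussian-blur a single-channel HR image, then keep every r-th pixel."""
    kernel = gaussian_kernel_1d(sigma, radius)
    # Separable blur: filter the rows, then the columns (via transposition).
    blurred = transpose(blur_rows(transpose(blur_rows(hr, kernel)), kernel))
    return [row[::r] for row in blurred[::r]]

r = 4
hr = [[float((x + y) % 7) for x in range(8 * r)] for y in range(6 * r)]  # rH = 24, rW = 32
lr = make_lr(hr, r)
print("HR size (H, W):", (len(hr), len(hr[0])))
print("LR size (H, W):", (len(lr), len(lr[0])))
```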

Our ultimate goal is to train a generating function $G$ that estimates for a given LR input image its corresponding HR counterpart. To achieve this, we train a generator network as a feed-forward CNN $G_{\theta_G}$ parametrized by $\theta_G$. Here $\theta_G = \{W_{1:L}; b_{1:L}\}$ denotes the weights and biases of an $L$-layer deep network and is obtained by optimizing a SR-specific loss function $l^{SR}$. For training images $I^{HR}_n$, $n = 1, \ldots, N$ with corresponding $I^{LR}_n$, $n = 1, \ldots, N$, we solve:

$$\hat{\theta}_G = \arg\min_{\theta_G} \frac{1}{N} \sum_{n=1}^{N} l^{SR}(G_{\theta_G}(I^{LR}_n), I^{HR}_n) \tag{1}$$

In this work we will specifically design a perceptual loss $l^{SR}$ as a weighted combination of several loss components that model distinct desirable characteristics of the recovered SR image. The individual loss functions are described in more detail in Section 2.2.

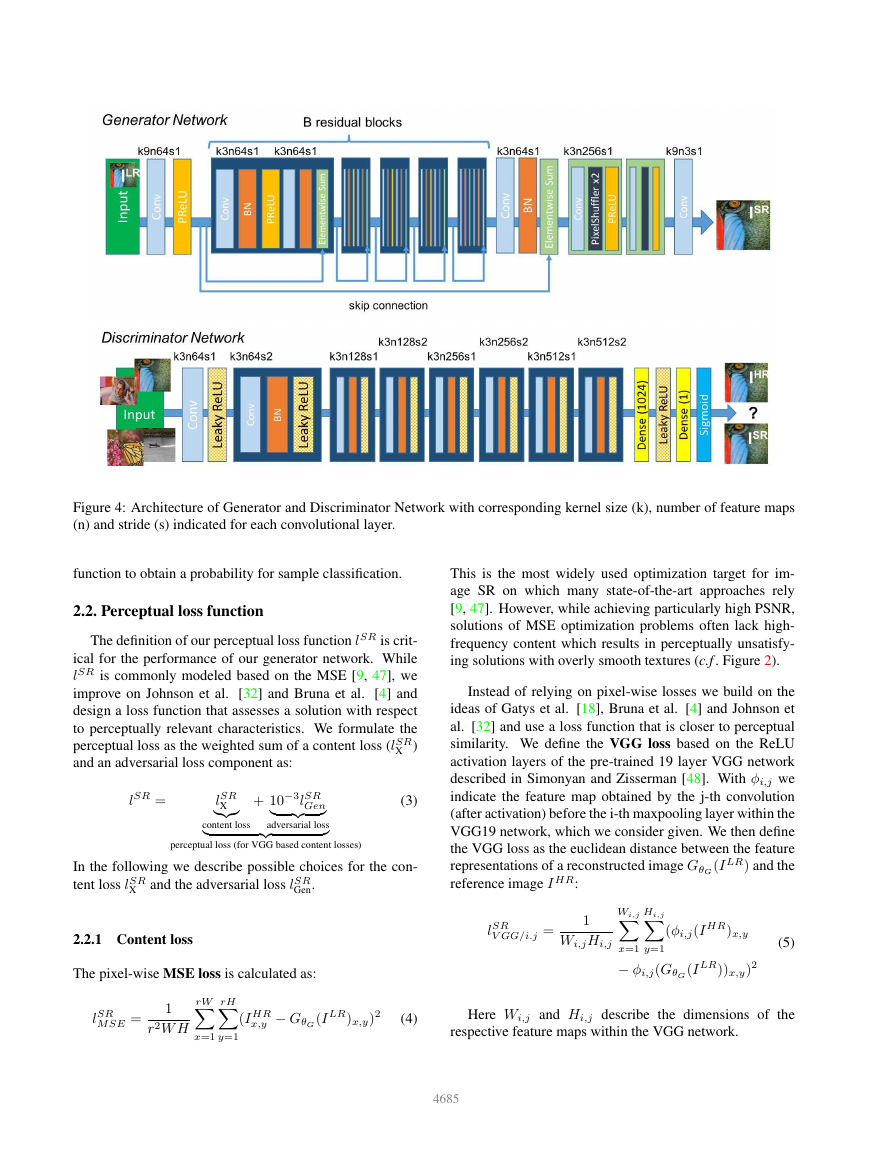

2.1. Adversarial network architecture

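Following Goodfellow et al. [21] we further define a discriminator network $D_{\theta_D}$ which we optimize in an alternating manner along with $G_{\theta_G}$ to solve the adversarial min-max problem:

$$\min_{\theta_G} \max_{\theta_D} \; \mathbb{E}_{I^{HR} \sim p_{\text{train}}(I^{HR})}[\log D_{\theta_D}(I^{HR})] + \mathbb{E}_{I^{LR} \sim p_G(I^{LR})}[\log(1 - D_{\theta_D}(G_{\theta_G}(I^{LR})))] \tag{2}$$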

The general idea behind this formulation is that it allows

one to train a generative model G with the goal of fooling a

differentiable discriminator D that is trained to distinguish

super-resolved images from real images. With this approach

our generator can learn to create solutions that are highly

similar to real images and thus difficult to classify by D.

This encourages perceptually superior solutions residing in

the subspace, the manifold, of natural images. This is in

contrast to SR solutions obtained by minimizing pixel-wise

error measurements, such as the MSE.
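As a rough illustration of the min-max objective in Equation 2, the sketch below estimates its value from a handful of discriminator outputs. The probabilities are made-up numbers and `gan_value` is a hypothetical helper, not part of the paper's implementation.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of the objective in Equation 2.

    d_real: discriminator outputs D(I_HR) for real HR images, each in (0, 1).
    d_fake: discriminator outputs D(G(I_LR)) for super-resolved images, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

confident_d = gan_value(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
fooled_d = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print("discriminator separates the two sets:", round(confident_d, 4))
print("discriminator is fooled (outputs 0.5):", round(fooled_d, 4))
```

The discriminator tries to push this value up by separating the two sets, while the generator tries to push it down by producing images the discriminator cannot tell apart from real ones.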

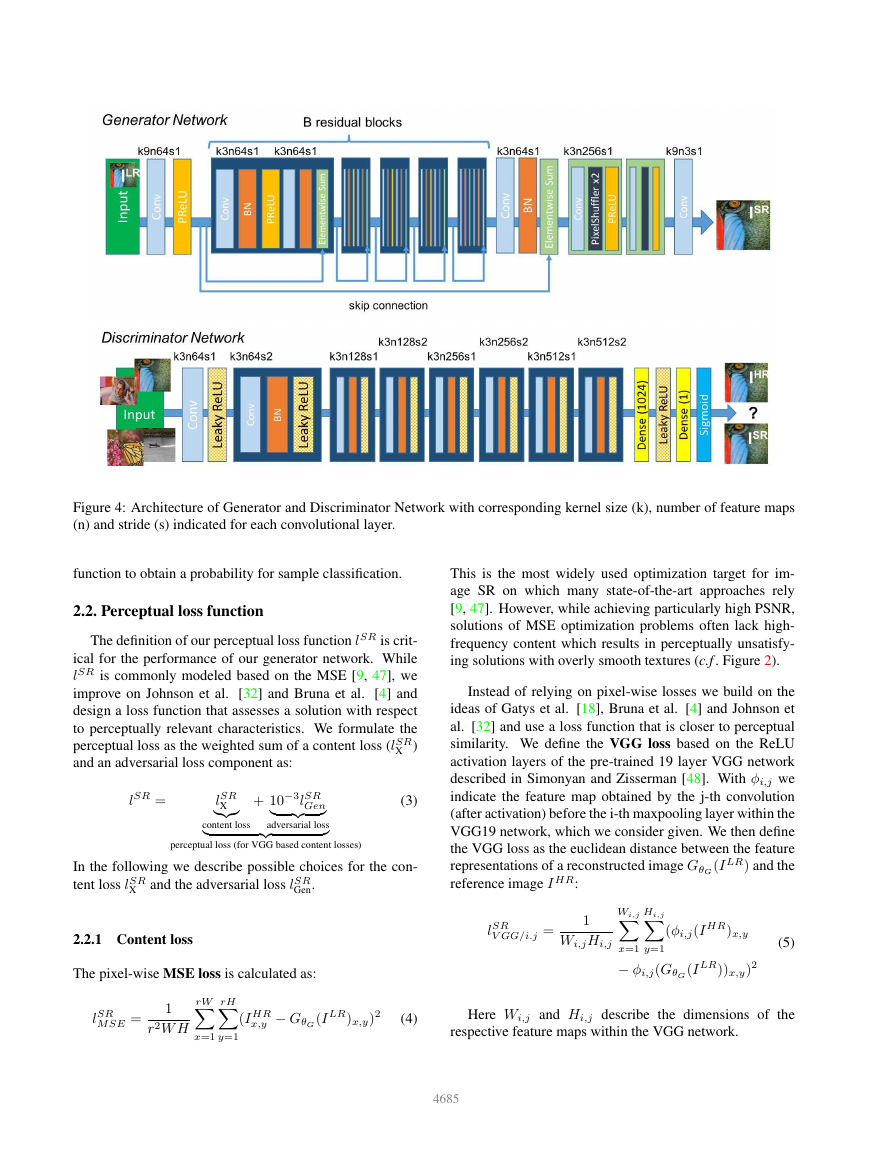

At the core of our very deep generator network G, which

is illustrated in Figure 4, are B residual blocks with identical

layout. Inspired by Johnson et al. [32] we employ the block

layout proposed by Gross and Wilber [23]. Specifically, we

use two convolutional layers with small 3×3 kernels and 64

feature maps followed by batch-normalization layers [31]

and ParametricReLU [27] as the activation function. We

increase the resolution of the input image with two trained

sub-pixel convolution layers as proposed by Shi et al. [47].
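The rearrangement step of a sub-pixel convolution layer can be sketched in plain Python. The channel ordering below follows one common convention and is an assumption, as is the split of the 4× factor into two layers with r = 2 each; the convolution that produces the input feature maps is omitted.

```python
def pixel_shuffle(channels, r):
    """Rearrange C * r^2 feature maps of size H x W into C maps of size rH x rW.

    channels: list of C * r * r feature maps, each a list of H rows of W values.
    Channel c * r * r + dy * r + dx supplies output pixel (y * r + dy, x * r + dx) of map c.
    """
    h, w = len(channels[0]), len(channels[0][0])
    c_out = len(channels) // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for dy in range(r):
            for dx in range(r):
                src = channels[c * r * r + dy * r + dx]
                for y in range(h):
                    for x in range(w):
                        out[c][y * r + dy][x * r + dx] = src[y][x]
    return out

# Four 2x2 feature maps filled with their channel index become one 4x4 map.
features = [[[float(k)] * 2 for _ in range(2)] for k in range(4)]
upscaled = pixel_shuffle(features, r=2)
for row in upscaled[0]:
    print(row)
```

Since the upscaling happens at the end of the network, all preceding convolutions operate at LR resolution, which is what makes learned upscaling filters attractive in terms of speed.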

To discriminate real HR images from generated SR samples we train a discriminator network. The architecture is shown in Figure 4. We follow the architectural guidelines summarized by Radford et al. [43] and use LeakyReLU activation (α = 0.2) and avoid max-pooling throughout the network. The discriminator network is trained to solve the maximization problem in Equation 2. It contains eight convolutional layers with an increasing number of 3 × 3 filter kernels, increasing by a factor of 2 from 64 to 512 kernels as in the VGG network [48]. Strided convolutions are used to reduce the image resolution each time the number of features is doubled. The resulting 512 feature maps are followed by two dense layers and a final sigmoid activation function to obtain a probability for sample classification.

Figure 4: Architecture of Generator and Discriminator Network with corresponding kernel size (k), number of feature maps (n) and stride (s) indicated for each convolutional layer.
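To get a feel for how the strided convolutions shrink the input, the following sketch traces the spatial size through eight 3 × 3 layers. The exact placement of the stride-2 layers and the padding of 1 are assumptions made for this example; the text only states the number of layers, the kernel counts and that strided convolutions are used.

```python
def conv_out_size(size, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

# Assumed layer plan: (number of kernels, stride). The text states eight 3x3 layers with
# 64 to 512 kernels and strided convolutions; the exact stride placement is an assumption.
layer_plan = [(64, 1), (64, 2), (128, 1), (128, 2), (256, 1), (256, 2), (512, 1), (512, 2)]

size = 96  # HR training crops are 96 x 96
for kernels, stride in layer_plan:
    size = conv_out_size(size, stride=stride)
    print(f"3x3 conv, {kernels:3d} kernels, stride {stride} -> {size} x {size}")
```

Under these assumptions a 96 × 96 crop is reduced to 512 feature maps of size 6 × 6 before the dense layers.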

2.2. Perceptual loss function

The definition of our perceptual loss function $l^{SR}$ is critical for the performance of our generator network. While $l^{SR}$ is commonly modeled based on the MSE [9, 47], we improve on Johnson et al. [32] and Bruna et al. [4] and design a loss function that assesses a solution with respect to perceptually relevant characteristics. We formulate the perceptual loss as the weighted sum of a content loss ($l^{SR}_X$) and an adversarial loss component as:

$$l^{SR} = \underbrace{\underbrace{l^{SR}_X}_{\text{content loss}} + \underbrace{10^{-3} l^{SR}_{Gen}}_{\text{adversarial loss}}}_{\text{perceptual loss (for VGG based content losses)}} \tag{3}$$

In the following we describe possible choices for the content loss $l^{SR}_X$ and the adversarial loss $l^{SR}_{Gen}$.
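The weighting in Equation 3 amounts to a one-line combination of the two components. In the sketch below the loss values are hypothetical numbers for a single mini-batch, and the function name is illustrative.

```python
def perceptual_loss(content_loss, adversarial_loss, adversarial_weight=1e-3):
    """Weighted sum from Equation 3: content loss plus 10^-3 times adversarial loss."""
    return content_loss + adversarial_weight * adversarial_loss

# Hypothetical loss values for one mini-batch, chosen only for illustration.
print(perceptual_loss(content_loss=0.25, adversarial_loss=2.0))
```

With this weighting the content loss dominates the magnitude of the objective, while the adversarial term acts as a comparatively small correction.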

2.2.1 Content loss

The pixel-wise MSE loss is calculated as:

$$l^{SR}_{MSE} = \frac{1}{r^2 W H} \sum_{x=1}^{rW} \sum_{y=1}^{rH} (I^{HR}_{x,y} - G_{\theta_G}(I^{LR})_{x,y})^2 \tag{4}$$

This is the most widely used optimization target for image SR on which many state-of-the-art approaches rely [9, 47]. However, while achieving particularly high PSNR, solutions of MSE optimization problems often lack high-frequency content which results in perceptually unsatisfying solutions with overly smooth textures (cf. Figure 2).

Instead of relying on pixel-wise losses we build on the ideas of Gatys et al. [18], Bruna et al. [4] and Johnson et al. [32] and use a loss function that is closer to perceptual similarity. We define the VGG loss based on the ReLU activation layers of the pre-trained 19 layer VGG network described in Simonyan and Zisserman [48]. With $\phi_{i,j}$ we indicate the feature map obtained by the j-th convolution (after activation) before the i-th maxpooling layer within the VGG19 network, which we consider given. We then define the VGG loss as the euclidean distance between the feature representations of a reconstructed image $G_{\theta_G}(I^{LR})$ and the reference image $I^{HR}$:

$$l^{SR}_{VGG/i.j} = \frac{1}{W_{i,j} H_{i,j}} \sum_{x=1}^{W_{i,j}} \sum_{y=1}^{H_{i,j}} (\phi_{i,j}(I^{HR})_{x,y} - \phi_{i,j}(G_{\theta_G}(I^{LR}))_{x,y})^2 \tag{5}$$

Here $W_{i,j}$ and $H_{i,j}$ describe the dimensions of the respective feature maps within the VGG network.
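The difference between a pixel-space loss and a feature-space loss can be sketched without the VGG network. The example below uses a deliberately simple stand-in feature extractor (horizontal pixel differences); it is an assumption for illustration only and is not the VGG19 feature map used in the paper.

```python
def mse_loss(hr, sr):
    """Pixel-wise MSE between two single-channel images of equal size (Equation 4)."""
    height, width = len(hr), len(hr[0])
    total = sum((hr[y][x] - sr[y][x]) ** 2 for y in range(height) for x in range(width))
    return total / (height * width)

def toy_features(image):
    """Stand-in for a VGG feature map: horizontal differences of neighboring pixels.

    This is NOT the VGG network; it only illustrates comparing images in a feature space.
    """
    return [[row[x + 1] - row[x] for x in range(len(row) - 1)] for row in image]

def feature_loss(hr, sr, features=toy_features):
    """Mean squared distance between feature maps of the two images (form of Equation 5)."""
    return mse_loss(features(hr), features(sr))

hr = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
brighter = [[v + 0.5 for v in row] for row in hr]   # same texture, uniform offset
flat = [[0.5] * 4 for _ in range(2)]                 # texture averaged away

print("pixel MSE, brighter:", mse_loss(hr, brighter), " flat:", mse_loss(hr, flat))
print("feature loss, brighter:", feature_loss(hr, brighter), " flat:", feature_loss(hr, flat))
```

Both candidates have the same pixel-wise MSE, yet only the feature-space loss penalizes the flat image that has lost its texture.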


2.2.2 Adversarial loss

In addition to the content losses described so far, we also add the generative component of our GAN to the perceptual loss. This encourages our network to favor solutions that reside on the manifold of natural images, by trying to fool the discriminator network. The generative loss $l^{SR}_{Gen}$ is defined based on the probabilities of the discriminator $D_{\theta_D}(G_{\theta_G}(I^{LR}))$ over all training samples as:

$$l^{SR}_{Gen} = \sum_{n=1}^{N} -\log D_{\theta_D}(G_{\theta_G}(I^{LR})) \tag{6}$$

Here, $D_{\theta_D}(G_{\theta_G}(I^{LR}))$ is the probability that the reconstructed image $G_{\theta_G}(I^{LR})$ is a natural HR image. For better gradient behavior we minimize $-\log D_{\theta_D}(G_{\theta_G}(I^{LR}))$ instead of $\log[1 - D_{\theta_D}(G_{\theta_G}(I^{LR}))]$ [21].
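The remark about gradient behavior can be checked numerically. The sketch below compares the derivatives of the two candidate generator losses with respect to the discriminator output d; treating d as a free scalar is a simplification made for this example, and the function names are illustrative.

```python
import math

def saturating_loss(d):
    """Generator loss log(1 - D(G(I_LR))) from the min-max objective."""
    return math.log(1.0 - d)

def non_saturating_loss(d):
    """Generator loss -log D(G(I_LR)) used in Equation 6."""
    return -math.log(d)

def grad_saturating(d):
    """Derivative of log(1 - d) with respect to d."""
    return -1.0 / (1.0 - d)

def grad_non_saturating(d):
    """Derivative of -log(d) with respect to d."""
    return -1.0 / d

# Early in training the discriminator easily rejects generated images, so d is small.
for d in (0.01, 0.1, 0.5):
    print(f"d = {d:4.2f}: d/dd log(1-d) = {grad_saturating(d):8.3f}, "
          f"d/dd -log(d) = {grad_non_saturating(d):8.3f}")
```

Both losses decrease as d grows, but for small d the gradient of $-\log d$ is far larger in magnitude, so the generator receives a stronger learning signal exactly when it is doing poorly.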

3. Experiments

3.1. Data and similarity measures

We perform experiments on three widely used benchmark datasets Set5 [2], Set14 [68] and BSD100, the testing set of BSD300 [40]. All experiments are performed with a scale factor of 4× between low- and high-resolution images. This corresponds to a 16× reduction in image pixels. For fair comparison, all reported PSNR [dB] and SSIM [57] measures were calculated on the y-channel of center-cropped images (a 4-pixel wide strip removed from each border) using the daala package¹. Super-resolved images for the reference methods, including nearest neighbor, bicubic, SRCNN [8] and SelfExSR [30], were obtained from online material supplementary to Huang et al.² [30] and for DRCN from Kim et al.³ [33]. Results obtained with SRResNet (for losses: $l^{SR}_{MSE}$ and $l^{SR}_{VGG/2.2}$) and the SRGAN variants are available online⁴. Statistical tests were performed as paired two-sided Wilcoxon signed-rank tests and significance determined at p < 0.05.

The reader may also be interested in an independently developed GAN-based solution on GitHub⁵. However it only provides experimental results on a limited set of faces, which is a more constrained and easier task.
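The evaluation protocol (y-channel, 4-pixel border removed) can be sketched as follows. This is not the daala implementation: the BT.601 luma weights, the peak value of 255 and all function names are assumptions made for illustration.

```python
import math

def luma(pixel):
    """Y value of an (R, G, B) pixel using BT.601 weights (an assumed conversion)."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b

def crop_border(image, border=4):
    """Remove a `border`-pixel wide strip from each side of a 2-D image."""
    return [row[border:-border] for row in image[border:-border]]

def psnr_y(reference, estimate, border=4, peak=255.0):
    """PSNR in dB on the y-channel of two RGB images after cropping the border."""
    ref = crop_border([[luma(p) for p in row] for row in reference], border)
    est = crop_border([[luma(p) for p in row] for row in estimate], border)
    n = len(ref) * len(ref[0])
    mse = sum((a - b) ** 2 for ra, rb in zip(ref, est) for a, b in zip(ra, rb)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)

# Two synthetic 16 x 16 RGB images that differ by 5 gray levels everywhere.
reference = [[(100, 120, 140)] * 16 for _ in range(16)]
estimate = [[(105, 125, 145)] * 16 for _ in range(16)]
print(f"PSNR (y-channel): {psnr_y(reference, estimate):.2f} dB")
```

Cropping the border avoids penalizing methods for boundary effects, and fixing one evaluation pipeline for all methods keeps the reported numbers comparable.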

¹ https://github.com/xiph/daala (commit: 8d03668)

² https://github.com/jbhuang0604/SelfExSR

³ http://cv.snu.ac.kr/research/DRCN/

⁴ https://twitter.box.com/s/lcue6vlrd01ljkdtdkhmfvk7vtjhetog

⁵ https://github.com/david-gpu/srez

3.2. Training details and parameters

We trained all networks on an NVIDIA Tesla M40 GPU using a random sample of 350 thousand images from the ImageNet database [44]. These images are distinct from the testing images. We obtained the LR images by downsampling the HR images (BGR, C = 3) using a bicubic kernel with downsampling factor r = 4. For each mini-batch we crop 16 random 96 × 96 HR sub images of distinct training images. Note that we can apply the generator model to images of arbitrary size as it is fully convolutional. For optimization we use Adam [35] with $\beta_1 = 0.9$. The SRResNet networks were trained with a learning rate of $10^{-4}$ and $10^6$ update iterations. We employed the trained MSE-based SRResNet network as initialization for the generator when training the actual GAN to avoid undesired local optima. All SRGAN variants were trained with $10^5$ update iterations at a learning rate of $10^{-4}$ and another $10^5$ iterations at a lower rate of $10^{-5}$. We alternate updates to the generator and discriminator network, which is equivalent to k = 1 as used in Goodfellow et al. [21]. Our generator network has 16 identical (B = 16) residual blocks. During test time we turn batch-normalization update off to obtain an output that deterministically depends only on the input [31]. Our implementation is based on Theano [52] and Lasagne [7].
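The training setup described in this section can be collected in a small configuration object. The sketch below only restates values from the text; the class, field and function names are illustrative and do not come from the original Theano/Lasagne implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters stated in Section 3.2 (field names are illustrative)."""
    batch_size: int = 16
    hr_crop: int = 96
    scale: int = 4
    adam_beta1: float = 0.9
    residual_blocks: int = 16
    srresnet_lr: float = 1e-4
    srresnet_iterations: int = 10 ** 6
    srgan_iterations_per_stage: int = 10 ** 5

def srgan_learning_rate(iteration, config=TrainingConfig()):
    """Learning rate for SRGAN training: 1e-4 for the first stage, 1e-5 for the second."""
    return 1e-4 if iteration < config.srgan_iterations_per_stage else 1e-5

config = TrainingConfig()
print("LR crop size:", config.hr_crop // config.scale)
print("rate at iteration 0:", srgan_learning_rate(0))
print("rate at iteration 150000:", srgan_learning_rate(150_000))
```

With a 96 × 96 HR crop and a scale factor of 4, the generator sees 24 × 24 LR patches during training.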

3.3. Mean opinion score (MOS) testing

We have performed a MOS test to quantify the ability of

different approaches to reconstruct perceptually convincing

images. Specifically, we asked 26 raters to assign an integer

score from 1 (bad quality) to 5 (excellent quality) to the

super-resolved images. The raters rated 12 versions of each

image on Set5, Set14 and BSD100: nearest neighbor (NN),

bicubic, SRCNN [8], SelfExSR [30], DRCN [33], ESPCN

[47], SRResNet-MSE, SRResNet-VGG22∗ (∗not rated on

BSD100), SRGAN-MSE∗, SRGAN-VGG22∗, SRGAN-

VGG54 and the original HR image. Each rater thus rated

1128 instances (12 versions of 19 images plus 9 versions of

100 images) that were presented in a randomized fashion.

The raters were calibrated on the NN (score 1) and HR (5)

versions of 20 images from the BSD300 training set. In a

pilot study we assessed the calibration procedure and the

test-retest reliability of 26 raters on a subset of 10 images

from BSD100 by adding a method’s images twice to a

larger test set. We found good reliability and no significant

differences between the ratings of the identical images.

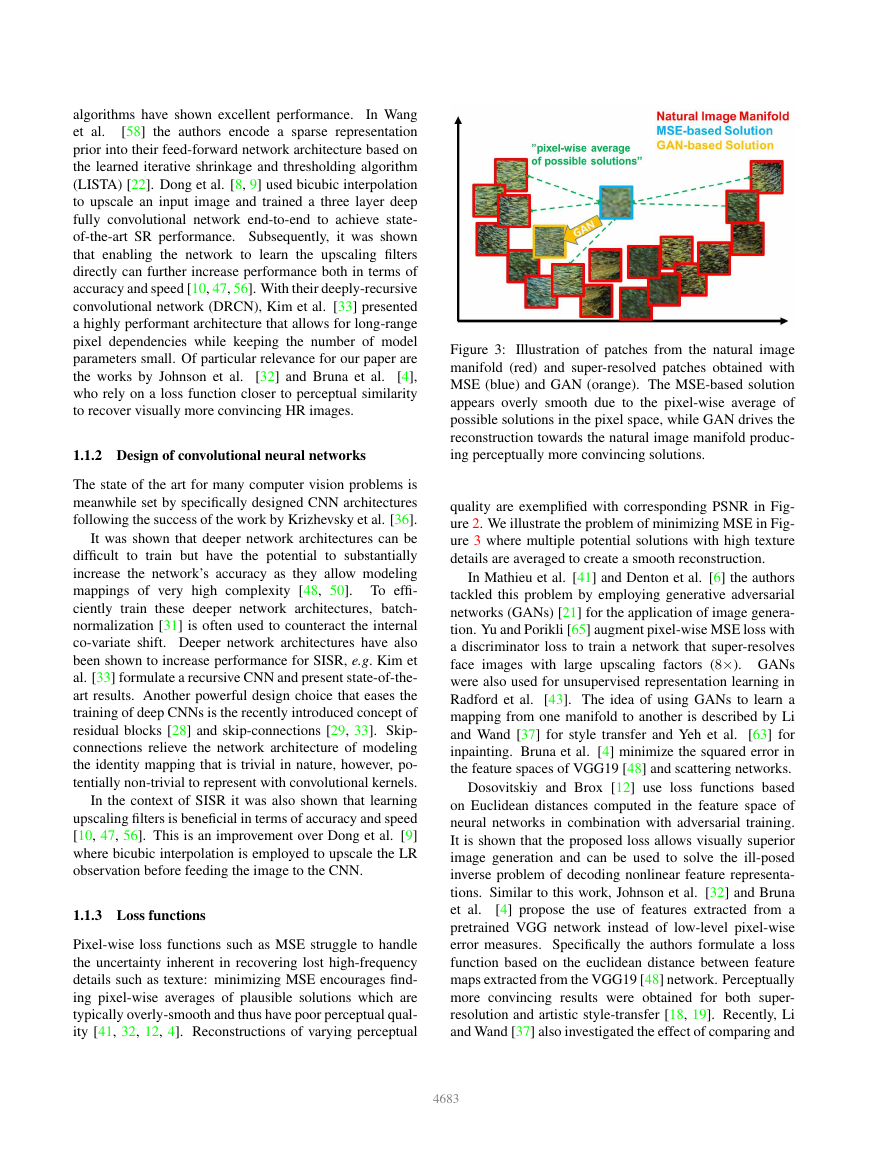

Raters very consistently rated NN interpolated test images

as 1 and the original HR images as 5 (cf. Figure 5).

The experimental results of the conducted MOS tests are

summarized in Table 1, Table 2 and Figure 5.
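The number of rated instances and the MOS itself follow from simple arithmetic. The sketch below reproduces the count of 1128 instances per rater and computes a mean opinion score; the ratings in the example are made-up values, and the function names are illustrative.

```python
def instances_per_rater(full_versions=12, small_set_images=5 + 14,
                        bsd100_versions=9, bsd100_images=100):
    """Number of images each rater scores; three versions were not rated on BSD100."""
    return full_versions * small_set_images + bsd100_versions * bsd100_images

def mean_opinion_score(ratings):
    """Arithmetic mean of integer ratings on the 1 (bad) to 5 (excellent) scale."""
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return sum(ratings) / len(ratings)

print("instances per rater:", instances_per_rater())
# Hypothetical ratings from 26 raters for a single image, for illustration only.
ratings = [4] * 12 + [3] * 8 + [5] * 6
print("MOS:", round(mean_opinion_score(ratings), 2))
```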

3.4. Investigation of content loss

We investigated the effect of different content

loss

choices in the perceptual loss for the GAN-based networks.

Specifically we investigate lSR = lSR

Gen for the

following content losses lSR

X :

X + 10−3lSR

• SRGAN-MSE: lSR

M SE, to investigate the adversarial

network with the standard MSE as content loss.

4686

�

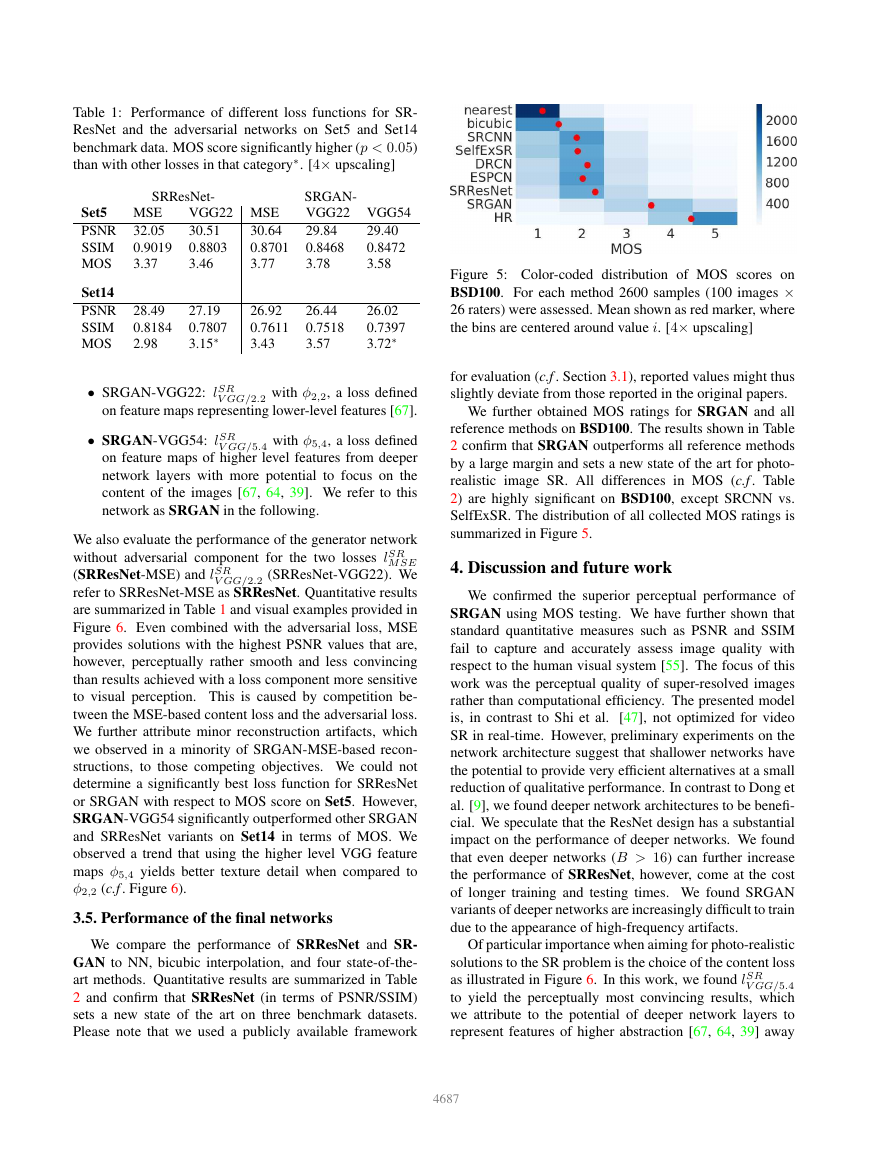

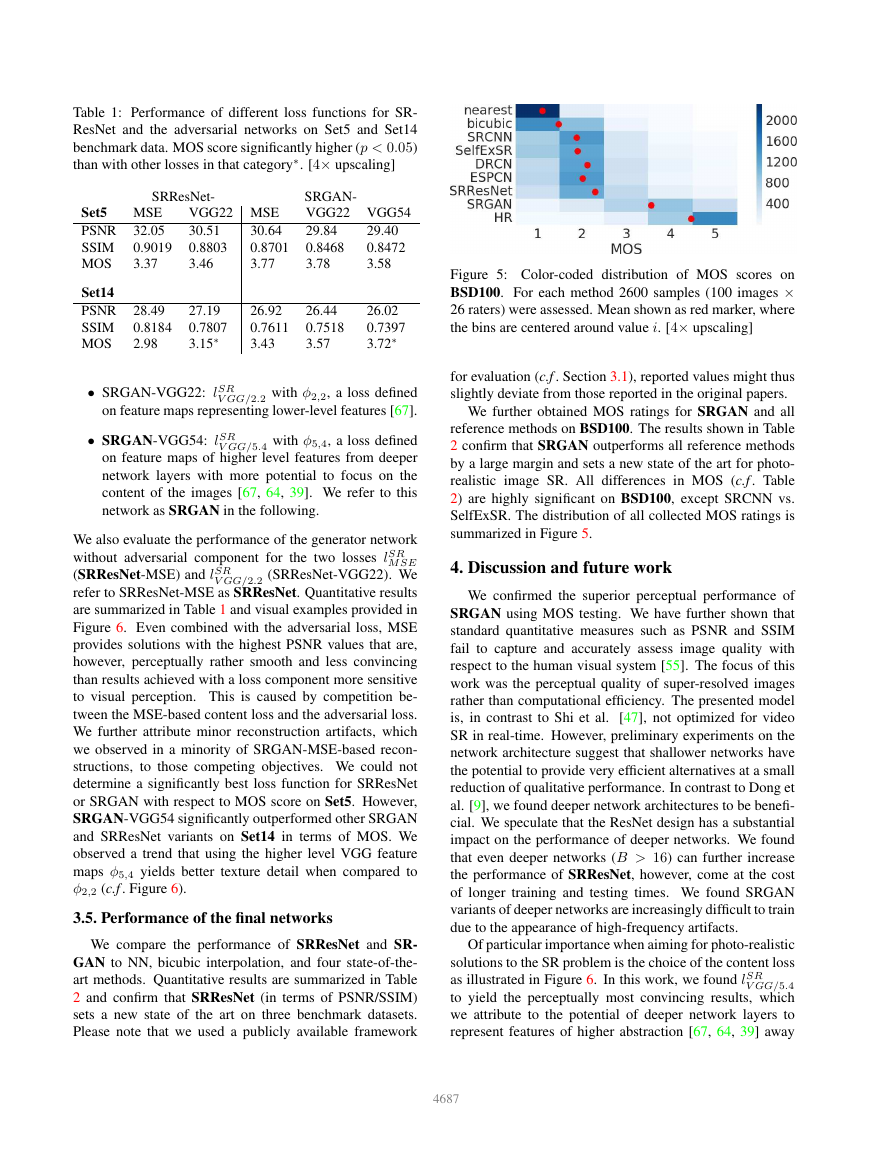

Table 1: Performance of different loss functions for SR-

ResNet and the adversarial networks on Set5 and Set14

benchmark data. MOS score significantly higher (p < 0.05)

than with other losses in that category∗. [4× upscaling]

SRResNet-

Set5

MSE

PSNR 32.05

SSIM 0.9019

MOS

3.37

VGG22 MSE

30.64

30.51

0.8701

0.8803

3.46

3.77

SRGAN-

VGG22 VGG54

29.84

0.8468

3.78

29.40

0.8472

3.58

Set14

PSNR 28.49

SSIM 0.8184

MOS

2.98

27.19

0.7807

3.15∗

26.92

0.7611

3.43

26.44

0.7518

3.57

26.02

0.7397

3.72∗

• SRGAN-VGG22: lSR

V GG/2.2 with φ2,2, a loss defined

on feature maps representing lower-level features [67].

• SRGAN-VGG54: lSR

V GG/5.4 with φ5,4, a loss defined

on feature maps of higher level features from deeper

network layers with more potential to focus on the

content of the images [67, 64, 39]. We refer to this

network as SRGAN in the following.

We also evaluate the performance of the generator network

without adversarial component for the two losses lSR

M SE

(SRResNet-MSE) and lSR

V GG/2.2 (SRResNet-VGG22). We

refer to SRResNet-MSE as SRResNet. Quantitative results

are summarized in Table 1 and visual examples provided in

Figure 6. Even combined with the adversarial loss, MSE

provides solutions with the highest PSNR values that are,

however, perceptually rather smooth and less convincing

than results achieved with a loss component more sensitive

to visual perception. This is caused by competition be-

tween the MSE-based content loss and the adversarial loss.

We further attribute minor reconstruction artifacts, which

we observed in a minority of SRGAN-MSE-based recon-

structions, to those competing objectives. We could not

determine a significantly best loss function for SRResNet

or SRGAN with respect to MOS score on Set5. However,

SRGAN-VGG54 significantly outperformed other SRGAN

and SRResNet variants on Set14 in terms of MOS. We

observed a trend that using the higher level VGG feature

maps φ5,4 yields better texture detail when compared to

φ2,2 (c.f . Figure 6).

3.5. Performance of the final networks

We compare the performance of SRResNet and SRGAN to NN, bicubic interpolation, and four state-of-the-art methods. Quantitative results are summarized in Table 2 and confirm that SRResNet (in terms of PSNR/SSIM) sets a new state of the art on three benchmark datasets. Please note that we used a publicly available framework for evaluation (cf. Section 3.1); reported values might thus slightly deviate from those reported in the original papers.

We further obtained MOS ratings for SRGAN and all reference methods on BSD100. The results shown in Table 2 confirm that SRGAN outperforms all reference methods by a large margin and sets a new state of the art for photo-realistic image SR. All differences in MOS (cf. Table 2) are highly significant on BSD100, except SRCNN vs. SelfExSR. The distribution of all collected MOS ratings is summarized in Figure 5.

Figure 5: Color-coded distribution of MOS scores on BSD100. For each method 2600 samples (100 images × 26 raters) were assessed. Mean shown as red marker, where the bins are centered around value i. [4× upscaling]

4. Discussion and future work

We confirmed the superior perceptual performance of

SRGAN using MOS testing. We have further shown that

standard quantitative measures such as PSNR and SSIM

fail to capture and accurately assess image quality with

respect to the human visual system [55]. The focus of this

work was the perceptual quality of super-resolved images

rather than computational efficiency. The presented model is, in contrast to Shi et al. [47], not optimized for video SR in real-time. However, preliminary experiments on the

network architecture suggest that shallower networks have

the potential to provide very efficient alternatives at a small

reduction of qualitative performance. In contrast to Dong et

al. [9], we found deeper network architectures to be benefi-

cial. We speculate that the ResNet design has a substantial

impact on the performance of deeper networks. We found

that even deeper networks (B > 16) can further increase

the performance of SRResNet, however, come at the cost

of longer training and testing times. We found SRGAN

variants of deeper networks are increasingly difficult to train

due to the appearance of high-frequency artifacts.

Of particular importance when aiming for photo-realistic solutions to the SR problem is the choice of the content loss as illustrated in Figure 6. In this work, we found $l^{SR}_{VGG/5.4}$ to yield the perceptually most convincing results, which we attribute to the potential of deeper network layers to represent features of higher abstraction [67, 64, 39] away from pixel space. We speculate that feature maps of these deeper layers focus purely on the content while leaving the adversarial loss focusing on texture details which are the main difference between the super-resolved images without the adversarial loss and photo-realistic images. We also note that the ideal loss function depends on the application. For example, approaches that hallucinate finer detail might be less suited for medical applications or surveillance. The perceptually convincing reconstruction of text or structured scenes [30] is challenging and part of future work. The development of content loss functions that describe image spatial content, but are more invariant to changes in pixel space, will further improve photo-realistic image SR results.

Figure 6: SRResNet (left: a,b), SRGAN-MSE (middle left: c,d), SRGAN-VGG22 (middle: e,f) and SRGAN-VGG54 (middle right: g,h) reconstruction results and corresponding reference HR image (right: i,j). [4× upscaling]

Table 2: Comparison of NN, bicubic, SRCNN [8], SelfExSR [30], DRCN [33], ESPCN [47], SRResNet, SRGAN-VGG54 and the original HR on benchmark data. Highest measures (PSNR [dB], SSIM, MOS) in bold. [4× upscaling]

| | nearest | bicubic | SRCNN | SelfExSR | DRCN | ESPCN | SRResNet | SRGAN | HR |
|---|---|---|---|---|---|---|---|---|---|
| Set5 PSNR | 26.26 | 28.43 | 30.07 | 30.33 | 31.52 | 30.76 | **32.05** | 29.40 | ∞ |
| Set5 SSIM | 0.7552 | 0.8211 | 0.8627 | 0.872 | 0.8938 | 0.8784 | **0.9019** | 0.8472 | 1 |
| Set5 MOS | 1.28 | 1.97 | 2.57 | 2.65 | 3.26 | 2.89 | 3.37 | **3.58** | 4.32 |
| Set14 PSNR | 24.64 | 25.99 | 27.18 | 27.45 | 28.02 | 27.66 | **28.49** | 26.02 | ∞ |
| Set14 SSIM | 0.7100 | 0.7486 | 0.7861 | 0.7972 | 0.8074 | 0.8004 | **0.8184** | 0.7397 | 1 |
| Set14 MOS | 1.20 | 1.80 | 2.26 | 2.34 | 2.84 | 2.52 | 2.98 | **3.72** | 4.32 |
| BSD100 PSNR | 25.02 | 25.94 | 26.68 | 26.83 | 27.21 | 27.02 | **27.58** | 25.16 | ∞ |
| BSD100 SSIM | 0.6606 | 0.6935 | 0.7291 | 0.7387 | 0.7493 | 0.7442 | **0.7620** | 0.6688 | 1 |
| BSD100 MOS | 1.11 | 1.47 | 1.87 | 1.89 | 2.12 | 2.01 | 2.29 | **3.56** | 4.46 |

5. Conclusion

We have described a deep residual network SRRes-

Net that sets a new state of the art on public benchmark

datasets when evaluated with the widely used PSNR mea-

sure. We have highlighted some limitations of this PSNR-

focused image super-resolution and introduced SRGAN,

which augments the content loss function with an adversar-

ial loss by training a GAN. Using extensive MOS testing,

we have confirmed that SRGAN reconstructions for large

upscaling factors (4×) are, by a considerable margin, more

photo-realistic than reconstructions obtained with state-of-

the-art reference methods.
