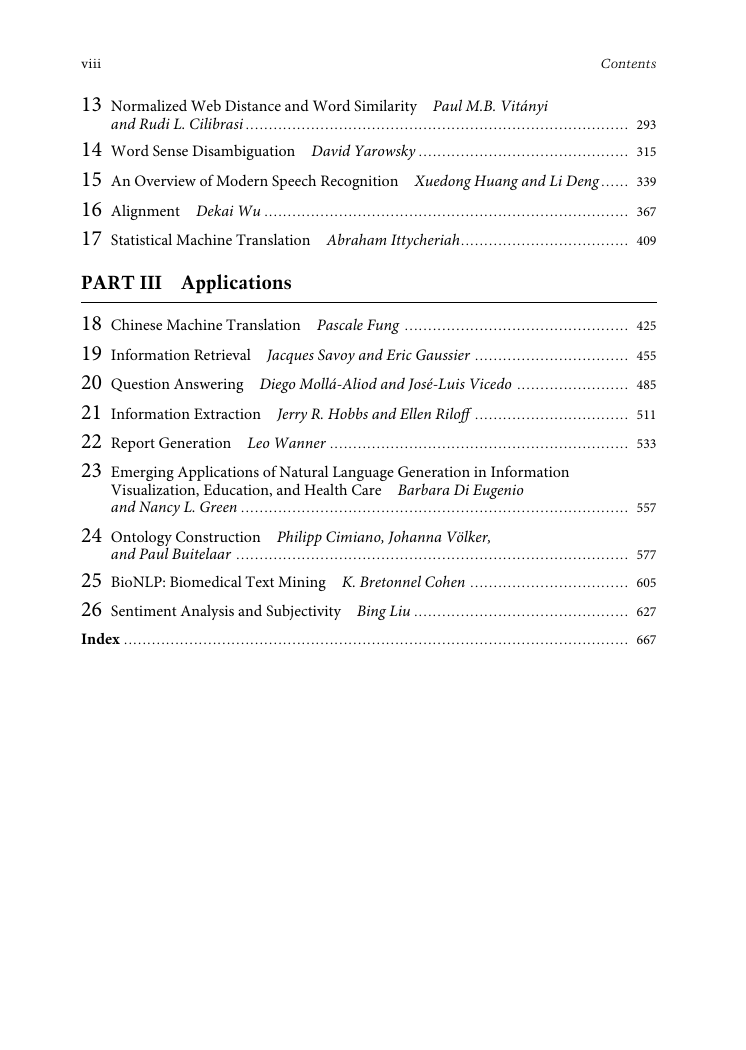

Contents

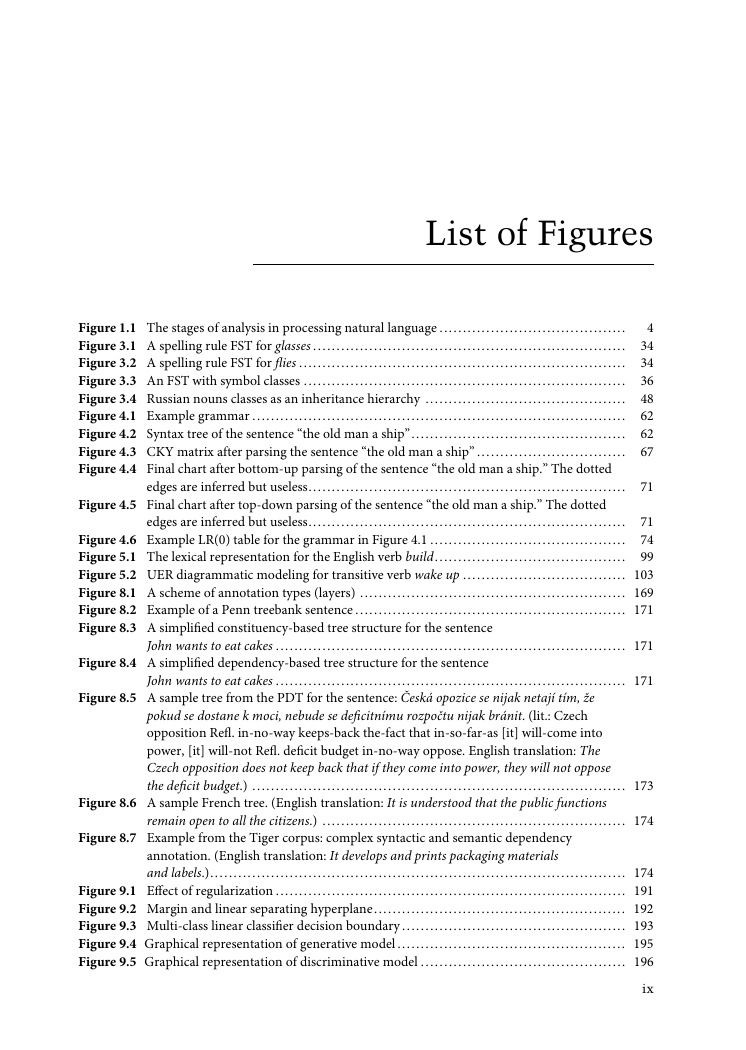

List of Figures

List of Tables

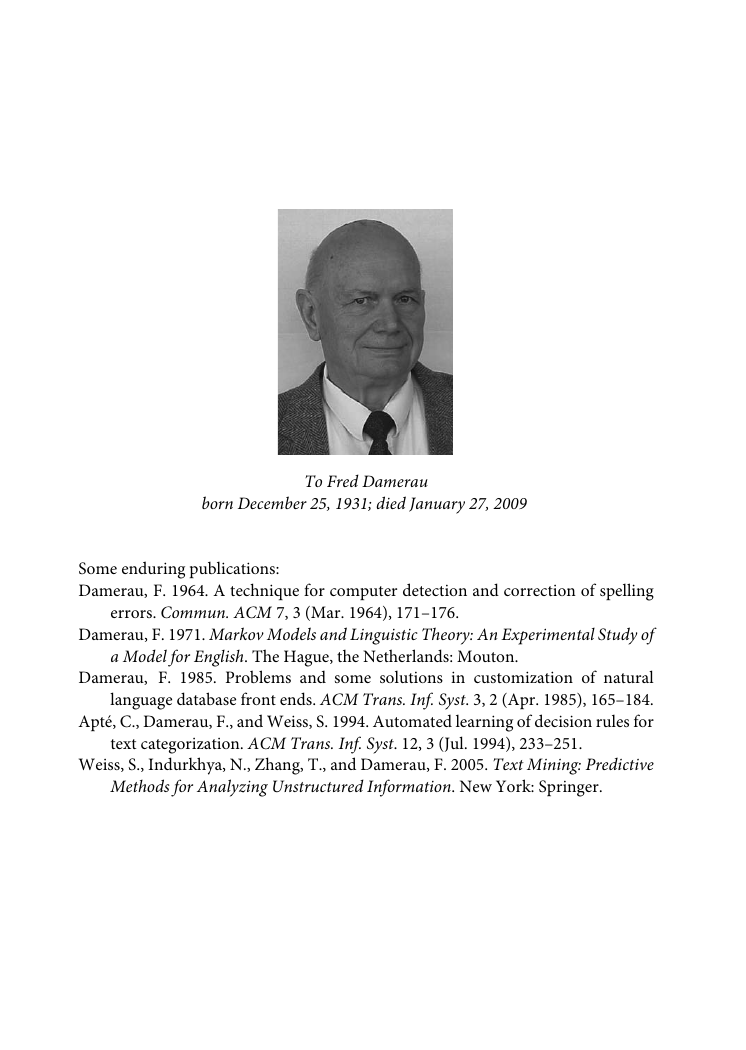

Editors

Board of Reviewers

Contributors

Preface

Part I: Classical Approaches

1. Classical Approaches to Natural Language Processing

1.1 Context

1.2 The Classical Toolkit

Text Preprocessing

Lexical Analysis

Syntactic Parsing

Semantic Analysis

Natural Language Generation

1.3 Conclusions

Reference

2. Text Preprocessing

2.1 Introduction

2.2 Challenges of Text Preprocessing

Character-Set Dependence

Language Dependence

Corpus Dependence

Application Dependence

2.3 Tokenization

Tokenization in Space-Delimited Languages

Tokenization in Unsegmented Languages

2.4 Sentence Segmentation

Sentence Boundary Punctuation

The Importance of Context

Traditional Rule-Based Approaches

Robustness and Trainability

Trainable Algorithms

2.5 Conclusion

References

3. Lexical Analysis

3.1 Introduction

3.2 Finite State Morphonology

Closing Remarks on Finite State Morphonology

3.3 Finite State Morphology

Disjunctive Affixes, Inflectional Classes, and Exceptionality

Further Remarks on Finite State Lexical Analysis

3.4 "Difficult" Morphology and Lexical Analysis

Isomorphism Problems

Contiguity Problems

3.5 Paradigm-Based Lexical Analysis

Paradigmatic Relations and Generalization

The Role of Defaults

Paradigm-Based Accounts of Difficult Morphology

Further Remarks on Paradigm-Based Approaches

3.6 Concluding Remarks

Acknowledgments

References

4. Syntactic Parsing

4.1 Introduction

4.2 Background

Context-Free Grammars

Example Grammar

Syntax Trees

Other Grammar Formalisms

Basic Concepts in Parsing

4.3 The Cocke–Kasami–Younger Algorithm

Handling Unary Rules

Example Session

Handling Long Right-Hand Sides

4.4 Parsing as Deduction

Deduction Systems

The CKY Algorithm

Chart Parsing

Bottom-Up Left-Corner Parsing

Top-Down Earley-Style Parsing

Example Session

Dynamic Filtering

4.5 Implementing Deductive Parsing

Agenda-Driven Chart Parsing

Storing and Retrieving Parse Results

4.6 LR Parsing

The LR(0) Table

Deterministic LR Parsing

Generalized LR Parsing

Optimized GLR Parsing

4.7 Constraint-Based Grammars

Overview

Unification

Tabular Parsing with Unification

4.8 Issues in Parsing

Robustness

Disambiguation

Efficiency

4.9 Historical Notes and Outlook

Acknowledgments

References

5. Semantic Analysis

5.1 Basic Concepts and Issues in Natural Language Semantics

5.2 Theories and Approaches to Semantic Representation

Logical Approaches

Discourse Representation Theory

Pustejovsky's Generative Lexicon

Natural Semantic Metalanguage

Object-Oriented Semantics

5.3 Relational Issues in Lexical Semantics

Sense Relations and Ontologies

Roles

5.4 Fine-Grained Lexical-Semantic Analysis: Three Case Studies

Emotional Meanings: "Sadness" and "Worry" in English and Chinese

Ethnogeographical Categories: "Rivers" and "Creeks"

Functional Macro-Categories

5.5 Prospectus and "Hard Problems"

Acknowledgments

References

6. Natural Language Generation

6.1 Introduction

6.2 Examples of Generated Texts: From Complex to Simple and Back Again

Complex

Simple

Today

6.3 The Components of a Generator

Components and Levels of Representation

6.4 Approaches to Text Planning

The Function of the Speaker

Desiderata for Text Planning

Pushing vs. Pulling

Planning by Progressive Refinement of the Speaker's Message

Planning Using Rhetorical Operators

Text Schemas

6.5 The Linguistic Component

Surface Realization Components

Relationship to Linguistic Theory

Chunk Size

Assembling vs. Navigating

Systemic Grammars

Functional Unification Grammars

6.6 The Cutting Edge

Story Generation

Personality-Sensitive Generation

6.7 Conclusions

References

Part II: Empirical and Statistical Approaches

7. Corpus Creation

7.1 Introduction

7.2 Corpus Size

7.3 Balance, Representativeness, and Sampling

7.4 Data Capture and Copyright

7.5 Corpus Markup and Annotation

7.6 Multilingual Corpora

7.7 Multimodal Corpora

7.8 Conclusions

References

8. Treebank Annotation

8.1 Introduction

8.2 Corpus Annotation Types

8.3 Morphosyntactic Annotation

8.4 Treebanks: Syntactic, Semantic, and Discourse Annotation

Motivation and Definition

An Example: The Penn Treebank

Annotation and Linguistic Theory

Going Beyond the Surface Shape of the Sentence

8.5 The Process of Building Treebanks

8.6 Applications of Treebanks

8.7 Searching Treebanks

8.8 Conclusions

Acknowledgments

References

9. Fundamental Statistical Techniques

9.1 Binary Linear Classification

9.2 One-versus-All Method for Multi-Category Classification

9.3 Maximum Likelihood Estimation

9.4 Generative and Discriminative Models

Naive Bayes

Logistic Regression

9.5 Mixture Model and EM

9.6 Sequence Prediction Models

Hidden Markov Model

Local Discriminative Model for Sequence Prediction

Global Discriminative Model for Sequence Prediction

References

10. Part-of-Speech Tagging

10.1 Introduction

Parts of Speech

Part-of-Speech Problem

10.2 The General Framework

10.3 Part-of-Speech Tagging Approaches

Rule-Based Approaches

Markov Model Approaches

Maximum Entropy Approaches

10.4 Other Statistical and Machine Learning Approaches

Methods and Relevant Work

Combining Taggers

10.5 POS Tagging in Languages Other Than English

Chinese

Korean

Other Languages

10.6 Conclusion

References

11. Statistical Parsing

11.1 Introduction

11.2 Basic Concepts and Terminology

Syntactic Representations

Statistical Parsing Models

Parser Evaluation

11.3 Probabilistic Context-Free Grammars

Basic Definitions

PCFGs as Statistical Parsing Models

Learning and Inference

11.4 Generative Models

History-Based Models

PCFG Transformations

Data-Oriented Parsing

11.5 Discriminative Models

Local Discriminative Models

Global Discriminative Models

11.6 Beyond Supervised Parsing

Weakly Supervised Parsing

Unsupervised Parsing

11.7 Summary and Conclusions

Acknowledgments

References

12. Multiword Expressions

12.1 Introduction

12.2 Linguistic Properties of MWEs

Idiomaticity

Other Properties of MWEs

Testing an Expression for MWEhood

Collocations and MWEs

A Word on Terminology and Related Fields

12.3 Types of MWEs

Nominal MWEs

Verbal MWEs

Prepositional MWEs

12.4 MWE Classification

12.5 Research Issues

Identification

Extraction

Internal Syntactic Disambiguation

MWE Interpretation

12.6 Summary

Acknowledgments

References

13. Normalized Web Distance and Word Similarity

13.1 Introduction

13.2 Some Methods for Word Similarity

Association Measures

Attributes

Relational Word Similarity

Latent Semantic Analysis

13.3 Background of the NWD Method

13.4 Brief Introduction to Kolmogorov Complexity

13.5 Information Distance

Normalized Information Distance

Normalized Compression Distance

13.6 Word Similarity: Normalized Web Distance

13.7 Applications and Experiments

Hierarchical Clustering

Classification

Matching the Meaning

Systematic Comparison with WordNet Semantics

13.8 Conclusion

References

14. Word Sense Disambiguation

14.1 Introduction

14.2 Word Sense Inventories and Problem Characteristics

Treatment of Part of Speech

Sources of Sense Inventories

Granularity of Sense Partitions

Hierarchical vs. Flat Sense Partitions

Idioms and Specialized Collocational Meanings

Regular Polysemy

Related Problems

14.3 Applications of Word Sense Disambiguation

Applications in Information Retrieval

Applications in Machine Translation

Other Applications

14.4 Early Approaches to Sense Disambiguation

Bar-Hillel: An Early Perspective on WSD

Early AI Systems: Word Experts

Dictionary-Based Methods

Kelly and Stone: An Early Corpus-Based Approach

14.5 Supervised Approaches to Sense Disambiguation

Training Data for Supervised WSD Algorithms

Features for WSD Algorithms

Supervised WSD Algorithms

14.6 Lightly Supervised Approaches to WSD

WSD via Word-Class Disambiguation

WSD via Monosemous Relatives

Hierarchical Class Models Using Selectional Restriction

Graph-Based Algorithms for WSD

Iterative Bootstrapping Algorithms

14.7 Unsupervised WSD and Sense Discovery

14.8 Conclusion

References

15. An Overview of Modern Speech Recognition

15.1 Introduction

15.2 Major Architectural Components

Acoustic Models

Language Models

Decoding

15.3 Major Historical Developments in Speech Recognition

15.4 Speech-Recognition Applications

IVR Applications

Appliance—"Response Point"

Mobile Applications

15.5 Technical Challenges and Future Research Directions

Robustness against Acoustic Environments and a Multitude of Other Factors

Capitalizing on Data Deluge for Speech Recognition

Self-Learning and Adaptation for Speech Recognition

Developing Speech Recognizers beyond the Language Barrier

Detection of Unknown Events in Speech Recognition

Learning from Human Speech Perception and Production

Capitalizing on New Trends in Computational Architectures for Speech Recognition

Embedding Knowledge and Parallelism into Speech-Recognition Decoding

15.6 Summary

References

16. Alignment

16.1 Introduction

16.2 Definitions and Concepts

Alignment

Constraints and Correlations

Classes of Algorithms

16.3 Sentence Alignment

Length-Based Sentence Alignment

Lexical Sentence Alignment

Cognate-Based Sentence Alignment

Multifeature Sentence Alignment

Comments on Sentence Alignment

16.4 Character, Word, and Phrase Alignment

Monotonic Alignment for Words

Non-Monotonic Alignment for Single-Token Words

Non-Monotonic Alignment for Multitoken Words and Phrases

16.5 Structure and Tree Alignment

Cost Functions

Algorithms

Strengths and Weaknesses of Structure and Tree Alignment Techniques

16.6 Biparsing and ITG Tree Alignment

Syntax-Directed Transduction Grammars (or Synchronous CFGs)

Inversion Transduction Grammars

Cost Functions

Algorithms

Grammars for Biparsing

Strengths and Weaknesses of Biparsing and ITG Tree Alignment Techniques

16.7 Conclusion

Acknowledgments

References

17. Statistical Machine Translation

17.1 Introduction

17.2 Approaches

17.3 Language Models

17.4 Parallel Corpora

17.5 Word Alignment

17.6 Phrase Library

17.7 Translation Models

IBM Models

Phrase-Based Systems

Syntax-Based Systems for Machine Translation

Direct Translation Models

17.8 Search Strategies

17.9 Research Areas

Acknowledgment

References

Part III: Applications

18. Chinese Machine Translation

18.1 Introduction

18.2 Preprocessing—What Is a Chinese Word?

The Maximum Entropy Framework for Word Segmentation

Translation-Driven Word Segmentation

18.3 Phrase-Based SMT—From Words to Phrases

18.4 Example-Based MT—Translation by Analogy

18.5 Syntax-Based MT—Structural Transfer

18.6 Semantics-Based SMT and Interlingua

Word Sense Translation

Semantic Role Labels

18.7 Applications

Chinese Term and Named Entity Translation

Chinese Spoken Language Translation

Crosslingual Information Retrieval Using Machine Translation

18.8 Conclusion and Discussion

Acknowledgments

References

19. Information Retrieval

19.1 Introduction

19.2 Indexing

Indexing Dimensions

Indexing Process

19.3 IR Models

Classical Boolean Model

Vector-Space Models

Probabilistic Models

Query Expansion and Relevance Feedback

Advanced Models

19.4 Evaluation and Failure Analysis

Evaluation Campaigns

Evaluation Measures

Failure Analysis

19.5 Natural Language Processing and Information Retrieval

Morphology

Orthographic Variation and Spelling Errors

Syntax

Semantics

Related Applications

19.6 Conclusion

Acknowledgments

References

20. Question Answering

20.1 Introduction

20.2 Historical Context

20.3 A Generic Question-Answering System

Question Analysis

Document or Passage Selection

Answer Extraction

Variations of the General Architecture

20.4 Evaluation of QA Systems

Evolution of the TREC QA Track

Evaluation Metrics

20.5 Multilinguality in Question Answering

20.6 Question Answering on Restricted Domains

20.7 Recent Trends and Related Work

Acknowledgment

References

21. Information Extraction

21.1 Introduction

21.2 Diversity of IE Tasks

Unstructured vs. Semi-Structured Text

Single-Document vs. Multi-Document IE

Assumptions about Incoming Documents

21.3 IE with Cascaded Finite-State Transducers

Complex Words

Basic Phrases

Complex Phrases

Domain Events

Template Generation: Merging Structures

21.4 Learning-Based Approaches to IE

Supervised Learning of Extraction Patterns and Rules

Supervised Learning of Sequential Classifier Models

Weakly Supervised and Unsupervised Approaches

Discourse-Oriented Approaches to IE

21.5 How Good Is Information Extraction?

Acknowledgments

References

22. Report Generation

22.1 Introduction

22.2 What Makes Report Generation a Distinct Task?

What Makes a Text a Report?

Report as Text Genre

Characteristic Features of Report Generation

22.3 What Does Report Generation Start From?

Data and Knowledge Sources

Data Assessment and Interpretation

22.4 Text Planning for Report Generation

Content Selection

Discourse Planning

22.5 Linguistic Realization for Report Generation

Input and Levels of Linguistic Representation

Tasks of Linguistic Realization

22.6 Sample Report Generators

22.7 Evaluation in Report Generation

22.8 Conclusions: The Present and the Future of RG

Acknowledgments

References

23. Emerging Applications of Natural Language Generation in Information Visualization, Education, and Health Care

23.1 Introduction

23.2 Multimedia Presentation Generation

23.3 Language Interfaces for Intelligent Tutoring Systems

CIRCSIM-Tutor

AUTOTUTOR

ATLAS-ANDES, WHY2-ATLAS, and WHY2-AUTOTUTOR

Briefly Noted

23.4 Argumentation for Health-Care Consumers

Acknowledgments

References

24. Ontology Construction

24.1 Introduction

24.2 Ontology and Ontologies

Anatomy of an Ontology

Types of Ontologies

Ontology Languages

Ontologies and Natural Language

24.3 Ontology Engineering

Principles

Methodologies

24.4 Ontology Learning

State of the Art

24.5 Summary

Acknowledgments

References

25. BioNLP: Biomedical Text Mining

25.1 Introduction

25.2 The Two Basic Domains of BioNLP: Medical and Biological

Medical/Clinical NLP

Biological/Genomic NLP

Model Organism Databases, and the Database Curator as a Canonical User

BioNLP in the Context of Natural Language Processing and Computational Linguistics

25.3 Just Enough Biology

25.4 Biomedical Text Mining

Named Entity Recognition

Named Entity Normalization

Abbreviation Disambiguation

25.5 Getting Up to Speed: Ten Papers and Resources That Will Let You Read Most Other Work in BioNLP

Named Entity Recognition 1: KeX

Named Entity Recognition 2: Collier, Nobata, and GENIA

Information Extraction 1: Blaschke et al. (1999)

Information Extraction 2: Craven and Kumlein (1999)

Information Extraction 3: MedLEE, BioMedLEE, and GENIES

Corpora 1: PubMed/MEDLINE

Corpora 2: GENIA

Lexical Resources 1: The Gene Ontology

Lexical Resources 2: Entrez Gene

Lexical Resources 3: Unified Medical Language System

25.6 Tools

Tokenization

Named Entity Recognition (Genes/Proteins)

Named Entity Recognition (Medical Concepts)

Full Parsers

25.7 Special Considerations in BioNLP

Code Coverage, Testing, and Corpora

User Interface Design

Portability

Proposition Banks and Semantic Role Labeling in BioNLP

The Difference between an Application That Will Relieve Pain and Suffering and an Application That Will Get You Published at ACL

Acknowledgments

References

26. Sentiment Analysis and Subjectivity

26.1 The Problem of Sentiment Analysis

26.2 Sentiment and Subjectivity Classification

Document-Level Sentiment Classification

Sentence-Level Subjectivity and Sentiment Classification

Opinion Lexicon Generation

26.3 Feature-Based Sentiment Analysis

Feature Extraction

Opinion Orientation Identification

Basic Rules of Opinions

26.4 Sentiment Analysis of Comparative Sentences

Problem Definition

Comparative Sentence Identification

Object and Feature Extraction

Preferred Object Identification

26.5 Opinion Search and Retrieval

26.6 Opinion Spam and Utility of Opinions

Opinion Spam

Utility of Reviews

26.7 Conclusions

Acknowledgments

References