Title

Preface

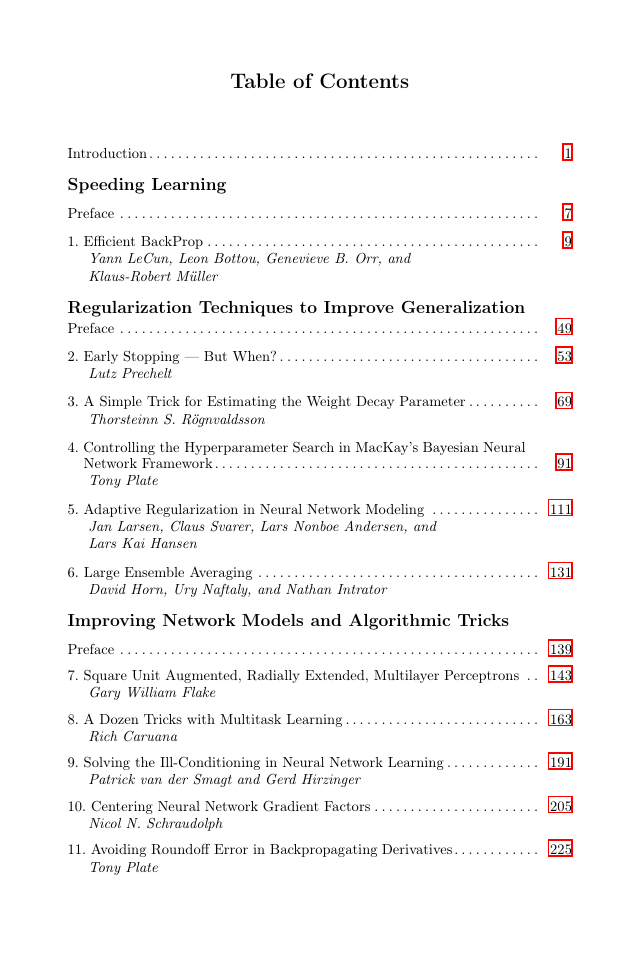

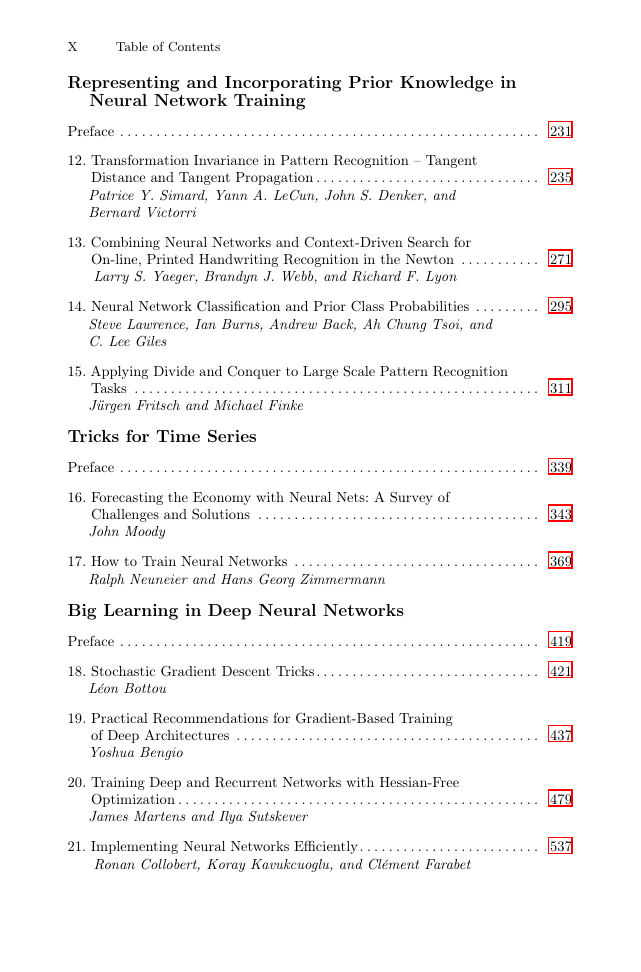

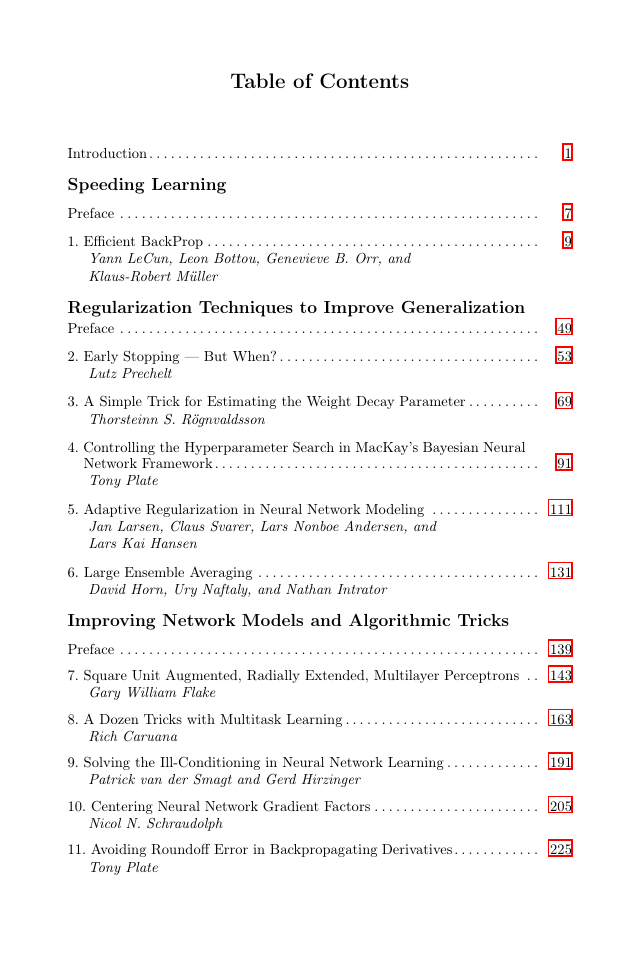

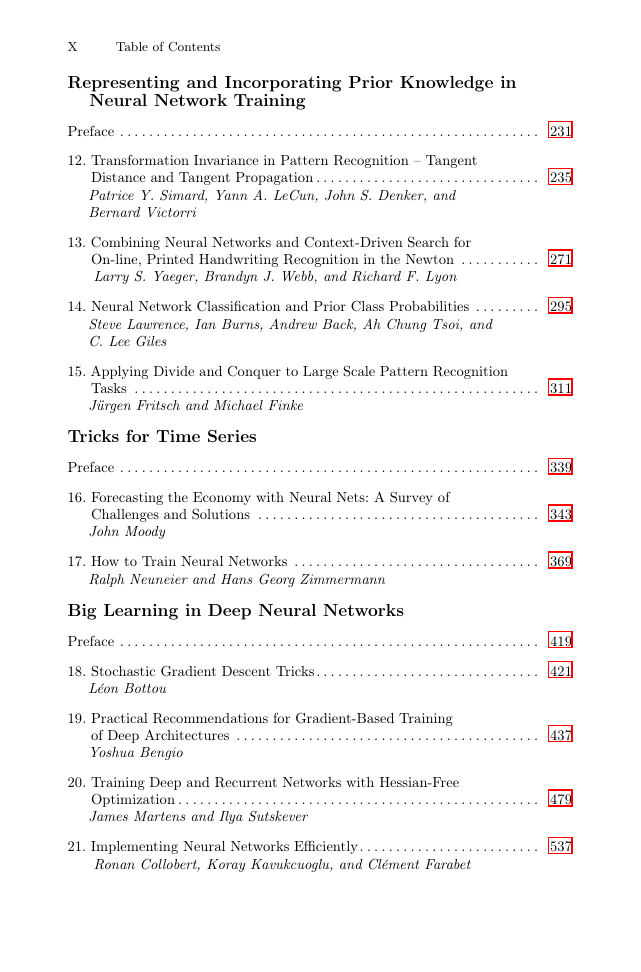

Table of Contents

Introduction

Speeding Learning

Efficient BackProp

Introduction

Learning and Generalization

Standard Backpropagation

A Few Practical Tricks

Stochastic versus Batch Learning

Shuffling the Examples

Normalizing the Inputs

The Sigmoid

Choosing Target Values

Initializing the Weights

Choosing Learning Rates

Radial Basis Functions vs Sigmoid Units

Convergence of Gradient Descent

A Little Theory

Examples

Input Transformations and Error Surface Transformations Revisited

Classical Second Order Optimization Methods

Newton Algorithm

Conjugate Gradient

Quasi-Newton (BFGS)

Gauss-Newton and Levenberg-Marquardt

Tricks to Compute the Hessian Information in Multilayer Networks

Finite Difference

Square Jacobian Approximation for the Gauss-Newton and Levenberg-Marquardt Algorithms

Backpropagating Second Derivatives

Backpropagating the Diagonal Hessian in Neural Nets

Computing the Product of the Hessian and a Vector

Analysis of the Hessian in Multi-layer Networks

Applying Second Order Methods to Multilayer Networks

A Stochastic Diagonal Levenberg-Marquardt Method

Computing the Principal Eigenvalue/Vector of the Hessian

Discussion and Conclusion

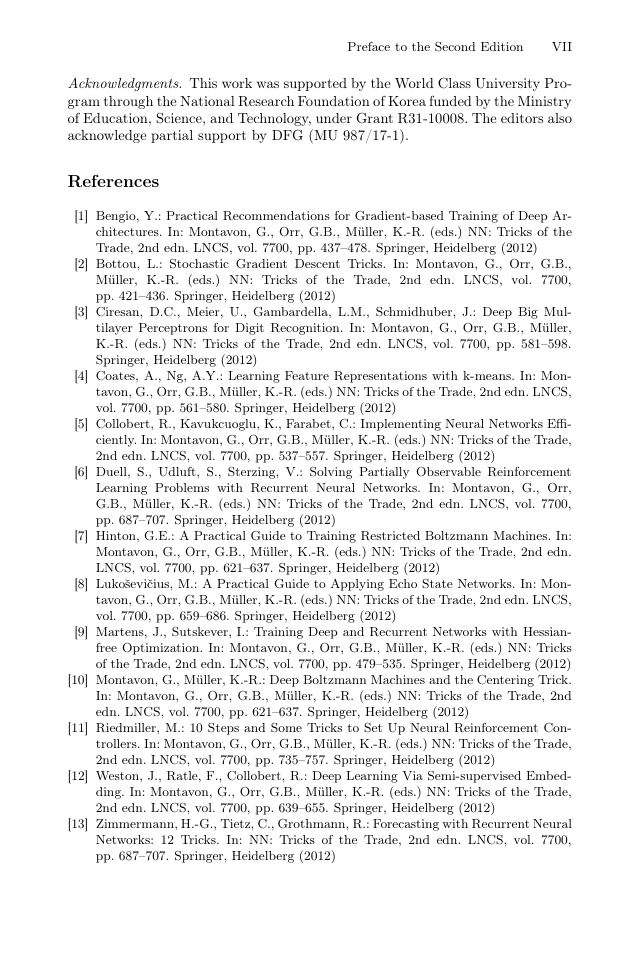

References

Regularization Techniques to Improve Generalization

Early Stopping — But When?

Early Stopping Is Not Quite as Simple

Why Early Stopping?

The Basic Early Stopping Technique

The Ugliness of Reality

How to Do Early Stopping Best

Some Classes of Stopping Criteria

The Trick: Criterion Selection Rules

Where and How Well Does This Trick Work?

Concrete Questions

Experimental Setup

Experiment Results

Discussion: Answers to the Questions

Generalization of These Results

Why This Works

References

A Simple Trick for Estimating the Weight Decay Parameter

Introduction

Ill-Posed Problems, Regularization, and Such Things...

Ill-Posed Problems

Regularization

Bias and Variance

Bayesian Framework

Weight Decay

Early Stopping

Estimating λ

Search Estimates

Two Early Stopping Estimates

Experiments

Data Sets

Experimental Procedure

Quality of the Estimates

Weight Decay versus Early Stopping Committees

Conclusions

References

Controlling the Hyperparameter Search in MacKay’s Bayesian Neural Network Framework

Introduction

Hyperparameter Updates

Difficulties with Using the Update Formulas

Control Strategies

Choosing When to Update Hyperparameters

Dealing with Out-of-Bounds Estimates of Numbers of Well-Determined Parameters

Further Generally Applicable Strategies

Experimental Setup

Targets for “Good” Performance

Network Architecture and Training

Effectiveness of Control Strategies

Relationship of Test Set Error to Evidence

Conclusion

References

Adaptive Regularization in Neural Network Modeling

Introduction

Training and Generalization

Adapting Regularization Parameters

Numerical Experiments

Potentials and Limitations in the Approach

Classification

Time Series Prediction

Conclusions

References

Large Ensemble Averaging

Introduction

Extrapolation to Large-Ensemble Averages

Application to the Sunspots Problem

Best Result

Theoretical Analysis

References

Improving Network Models and Algorithmic Tricks

Square Unit Augmented, Radially Extended, Multilayer Perceptrons

Introduction and Motivation

The Trick: A SQUARE-MLP

Example Applications

Hill-Plateau Function Approximation

Two-Spirals Classification

Vowel Classification

Theoretical Justification

Intuitive and Topological Justification

Conclusions

References

A Dozen Tricks with Multitask Learning

Introduction to Multitask Learning in Backprop Nets

Single and Multitask Learning of Task 1

Results

Discussion

Tricks for Using Multitask Learning in the Real World

Using the Future to Predict the Present

Multiple Metrics

Multiple Output Representations

Time Series Prediction

Using Non-operational Features

Using Extra Tasks to Focus Attention

Hints: Tasks Hand-Crafted by a Domain Expert

Handling other Categories in Classification

Sequential Transfer

Similar Tasks With Different Data Distributions

Learning with Hierarchical Data

Some Inputs Work Better as Outputs

Getting the Most Out of MTL

Use Large Hidden Layers

Do Early Stopping for Each Task Separately

Use Different Learning Rates for Different Tasks

Use a Private Hidden Layer for the Main Task

Chapter Summary

References

Solving the Ill-Conditioning in Neural Network Learning

Introduction

The Learning Process

Learning Methodology

Condition of the Learning Problem

What Causes the Singularities

Definition of Minimum

Local Minima are Caused by BackPropagation

A New Neural Network Structure

Influence on the Approximation Error E

M and the Universal Approximation Theorems

Example

Applications

Conclusion

References

Centering Neural Network Gradient Factors

Introduction

Centered Backpropagation

Activity Propagation

Weight Modification

Error Backpropagation

Implementation Techniques

A Priori Methods

Adaptive Methods

Empirical Results

Setup of Experiments

Symmetry Detection Problem

Vowel Recognition Problem

Discussion

References

Avoiding Roundoff Error in Backpropagating Derivatives

Introduction

Roundoff Error in Sigmoid Units

Sum-Squared Error Computations

Single Logistic-Output Cross-Entropy Computations

Other Approaches to Avoiding Zero-Derivatives with the Logistic Function

Softmax and Cross-Entropy Computations

Roundoff Error in Tanh Units

Why Bother?

References

Representing and Incorporating Prior Knowledge in Neural Network Training

Transformation Invariance in Pattern Recognition – Tangent Distance and Tangent Propagation

Introduction

Memory Based Algorithms

Learned-Function Algorithms

Tangent Distance

Implementation

Some Illustrative Results

How to Make Tangent Distance Work

Tangent Propagation

Local Rule

Results

How to Make Tangent Prop Work

Tangent Vectors

Lie Groups and Lie Algebras

Tangent Vectors

Important Transformations in Image Processing

Conclusion

References

Combining Neural Networks and Context-Driven Search for On-line, Printed Handwriting Recognition in the Newton

Introduction

System Overview

Tentative Segmentation

Character Classification

Representation

Architecture

Normalizing Output Error

Negative Training

Stroke Warping

Frequency Balancing

Error Emphasis

Annealing

Quantized Weights

Context-Driven Search

Lexical Context

Geometric Context

Integration with Word Segmentation

Discussion

Future Extensions

References

Neural Network Classification and Prior Class Probabilities

Introduction

The Trick

Prior Scaling

Probabilistic Sampling

Post Scaling

Equalizing Class Membership

Experimental Results

Performance Measures

ECG Classification Problem

Explanation

Convergence and Representation Issues

Overlapping Distributions

Limitations

A Posteriori Proofs

Conclusions

References

Applying Divide and Conquer to Large Scale Pattern Recognition Tasks

Introduction

Hierarchical Classification

Decomposition of Posterior Probabilities

Hierarchical Interpretation

Estimation of Conditional Node Posteriors

Classifier Tree Design

Optimality

Prior Knowledge

Confusion Matrices

Agglomerative Clustering

Application to Speech Recognition

Statistical Speech Recognition

Emission and Transition Modeling

Phonetic Context Modeling

Connectionist Acoustic Modeling

ACID Clustering

Training Hierarchies of Neural Networks on Large Datasets

Conclusions

References

Tricks for Time Series

Forecasting the Economy with Neural Nets: A Survey of Challenges and Solutions

Challenges of Macroeconomic Forecasting

A Survey of Neural Network Solutions

Smoothing Regularizers for Better Generalization

Model Selection and Interpretation

Improving Forecasts via Architecture and Input Selection

Architecture Selection via the Prediction Risk

Estimation of Prediction Risk

Algebraic Estimates of Prediction Risk

NCV: Cross-Validation for Nonlinear Models

Pruning Inputs via Directed Search and Sensitivity Analysis

Empirical Example

Gaining Economic Understanding through Model Visualization

Discussion

References

How to Train Neural Networks

Introduction

Preprocessing

Architectures

Net Internal Preprocessing by a Diagonal Connector

Net Internal Preprocessing by a Bottleneck Network

Squared Inputs

Interaction Layer

Averaging

Regularization by Random Targets

An Integrated Network Architecture for Forecasting Problems

Cost Functions

Robust Estimation with LnCosh

Robust Estimation with CDEN

Error Bar Estimation with CDEN

Data Meets Structure

The Observer-Observation Dilemma

Learning Reviewed

Parameter Noise as an Implicit Penalty Function

Cleaning Reviewed

Data Noise Reviewed

Cleaning with Noise

A Unifying Approach: The Separation of Structure and Noise

Architectural Optimization

Node-Pruning

Weight-Pruning

The Training Procedure

Training Paradigms: Early vs. Late Stopping

Setup Steps

Learning: Generation of Structural Hypothesis

Pruning: Falsification of the generated Structure

Final Stopping Criteria of the Training

Diagram of the Training Procedure

Experiments

Conclusion

References

Big Learning in Deep Neural Networks

Big Learning and Deep Neural Networks

Stochastic Gradient Descent Tricks

Introduction

What Is Stochastic Gradient Descent?

Gradient Descent

Stochastic Gradient Descent

The Convergence of Stochastic Gradient Descent

When to Use Stochastic Gradient Descent?

The Trade-Offs of Large Scale Learning

Asymptotic Analysis of the Large-Scale Case

General Recommendations

Preparing the Data

Monitoring and Debugging

Linear Models with L2 Regularization

Sparsity

Learning Rates

Averaged Stochastic Gradient Descent

Experiments

Conclusion

References

Practical Recommendations for Gradient-Based Training of Deep Architectures

Introduction

Deep Learning and Greedy Layer-Wise Pretraining

Denoising and Contractive Auto-encoders

Online Learning and Optimization of Generalization Error

Gradients

Gradient Descent and Learning Rate

Gradient Computation and Automatic Differentiation

Hyper-parameters

Neural Network Hyper-parameters

Hyper-parameters of the Model and Training Criterion

Manual Search and Grid Search

Random Sampling of Hyper-parameters

Debugging and Analysis

Gradient Checking and Controlled Overfitting

Visualizations and Statistics

Other Recommendations

Multi-core Machines, BLAS and GPUs

Sparse High-Dimensional Inputs

Symbolic Variables, Embeddings, Multi-task Learning and Multi-relational Learning

Open Questions

On the Added Difficulty of Training Deeper Architectures

Adaptive Learning Rates and Second-Order Methods

Conclusion

References

Training Deep and Recurrent Networks with Hessian-Free Optimization

Introduction

Feedforward Neural Networks

Recurrent Neural Networks

Hessian-Free Optimization Basics

Exact Multiplication by the Hessian

The Generalized Gauss-Newton Matrix

Multiplying by the Gauss-Newton Matrix

Typical Losses

Dealing with Non-convex Losses

Implementation Details

Efficiency via Parallelism

Verifying the Correctness of G Products

Damping

Tikhonov Damping

Problems with Tikhonov Damping

Scale-Sensitive Damping

Structural Damping

The Levenberg-Marquardt Heuristic

Trust-Region Methods

CG Truncation as Damping

Line Searching

Convergence of CG

Initializing CG

Preconditioning

The Effects of Preconditioning

Designing a Good Preconditioner

The Empirical Fisher Diagonal

An Unbiased Estimator for the Diagonal of G

Minibatching

Higher Quality Gradient Estimates

Minibatch Overfitting and Methods to Combat It

Tricks and Recipes

Summary

References

Implementing Neural Networks Efficiently

Efficient Environment

Scripting Language

Multi-purpose Efficient N-Dimensional Tensor Object

Modular Neural Networks

Additional Torch7 Packages

Efficient Runtime Execution

Float or Double Representations

Memory Allocation Control

BLAS/LAPACK Interfaces

SIMD Instructions

Ordering Memory Accesses

OpenMP Support

CUDA Support

Benchmarks

Efficient Optimization Heuristics

Conclusion

References

Better Representations: Invariant, Disentangled and Reusable

Learning Feature Representations with K-Means

Introduction

Data, Pre-processing and Initialization

Pre-processing

Initialization

Comparison to Sparse Feature Learning

Application to Image Recognition

Parameters

Encoders

Local Receptive Fields and Multiple Layers

Deep Networks

Conclusion

References

Deep Big Multilayer Perceptrons for Digit Recognition

Introduction

Data

Architectures

Deforming Images to Get More Training Instances

Forming a Committee

Using the GPU to Train Deep MLPs

Single MLP

Committee of MLP

Discussion

References

A Practical Guide to Training Restricted Boltzmann Machines

Introduction

An Overview of Restricted Boltzmann Machines and Contrastive Divergence

How to Collect Statistics When Using Contrastive Divergence

Updating the Hidden States

Updating the Visible States

Collecting the Statistics Needed for Learning

A Recipe for Getting the Learning Signal for CD1

The Size of a Mini-batch

A Recipe for Dividing the Training Set into Mini-batches

Monitoring the Progress of Learning

A Recipe for Using the Reconstruction Error

Monitoring the Overfitting

A Recipe for Monitoring the Overfitting

The Learning Rate

A Recipe for Setting the Learning Rates for Weights and Biases

The Initial Values of the Weights and Biases

A Recipe for Setting the Initial Values of the Weights and Biases

Momentum

A Recipe for Using Momentum

Weight-Decay

A Recipe for Using Weight-Decay

Encouraging Sparse Hidden Activities

A Recipe for Sparsity

The Number of Hidden Units

A Recipe for Choosing the Number of Hidden Units

Different Types of Unit

Softmax and Multinomial Units

Gaussian Visible Units

Gaussian Visible and Hidden Units

Binomial Units

Rectified Linear Units

Varieties of Contrastive Divergence

Displaying What Is Happening during Learning

Using RBMs for Discrimination

Computing the Free Energy of a Visible Vector

Dealing with Missing Values

References

Deep Boltzmann Machines and the Centering Trick

Introduction

Boltzmann Machines

Deep Boltzmann Machines

Training Boltzmann Machines

The Centering Trick

Understanding the Centering Trick

Evaluating Boltzmann Machines

Discriminative Analysis

Generative Analysis

Experiments

Conclusion

References

Deep Learning via Semi-supervised Embedding

Introduction

Semi-supervised Embedding

Embedding Algorithms

Semi-supervised Algorithms

Semi-supervised Embedding for Deep Learning

Labeling Unlabeled Data as Neighbors (Building the Graph)

When Do We Expect This Approach to Work?

Why Is This Approach Good?

Experimental Evaluation

Small-Scale Experiments

MNIST Experiments

Deeper MNIST Experiments

Semantic Role Labeling

Object Recognition Using Unlabeled Video

Conclusion

References

Identifying Dynamical Systems for Forecasting and Control

A Practical Guide to Applying Echo State Networks

Introduction

The Basic Model

Producing a Reservoir

Function of the Reservoir

Global Parameters of the Reservoir

Practical Approach to Reservoir Production

Pointers to Reservoir Extensions

Training Readouts

Ridge Regression

Regularization

Large Datasets

Direct Pseudoinverse Solution

Initial Transient

Regression Weighting

Readouts for Classification

Online Learning

Pointers to Readouts Extensions

Dealing with Output Feedbacks

Output Feedbacks

Teacher Forcing

Online Learning with Real Feedbacks

Summary and Implementations

References

Forecasting with Recurrent Neural Networks: 12 Tricks

Introduction

Tricks for Recurrent Neural Networks

Conclusion and Outlook

References

Solving Partially Observable Reinforcement Learning Problems with Recurrent Neural Networks

Introduction

Background

The Trick of Modeling a Markovian State Space Using a Recurrent Neural Network

Improving the Generalization Capabilities with Respect to Actions

A Recipe to Improve the Modeling of State Transitions

Scaling of Inputs and Targets

Block Validation

Removal of Invalid Data Patterns

Learning Settings

Double Rest Learning

A Recipe to Generate an Efficient State Estimation Function

Application of a Neural State Estimator

The Markov Decision Process Extraction Network

Reward Function Design Influences the Performance of a State Estimator

Choosing the Forecast Horizon of a State Estimator

The Trick of Addressing Long Term Dependencies

A Recipe to Find a Good Shortcut Length

Experiments on Long Term Dependencies Problems

Conclusion

References

10 Steps and Some Tricks to Set up Neural Reinforcement Controllers

Overview

The Reinforcement Learning Framework

Learning in Markovian Decision Processes

Q-Learning with Function Approximation

Characteristics of the Control Task

Modeling the Learning Task

State Information

Actions

Choice of Control Interval Δt

The Terminal Goal State and The Non-terminal Goal State Setting

Choice of X+

Choice of X-

Choice of Immediate and Final Costs

Discounting

Choice of X0

Choice of the Maximal Episode Length N

Tricks

Scaling the Input Values

The X++-Trick

Artificial Training Transitions

Growing Batch

Training the Neural Q-Function

Exploration

Delays

Experiments

The Control Task

Modeling as a Learning Task

Applied Tricks

Measuring Quality

Results on the Simulated Cart Pole

Results on the Real Cart Pole

Conclusion

References

Author Index

Subject Index
