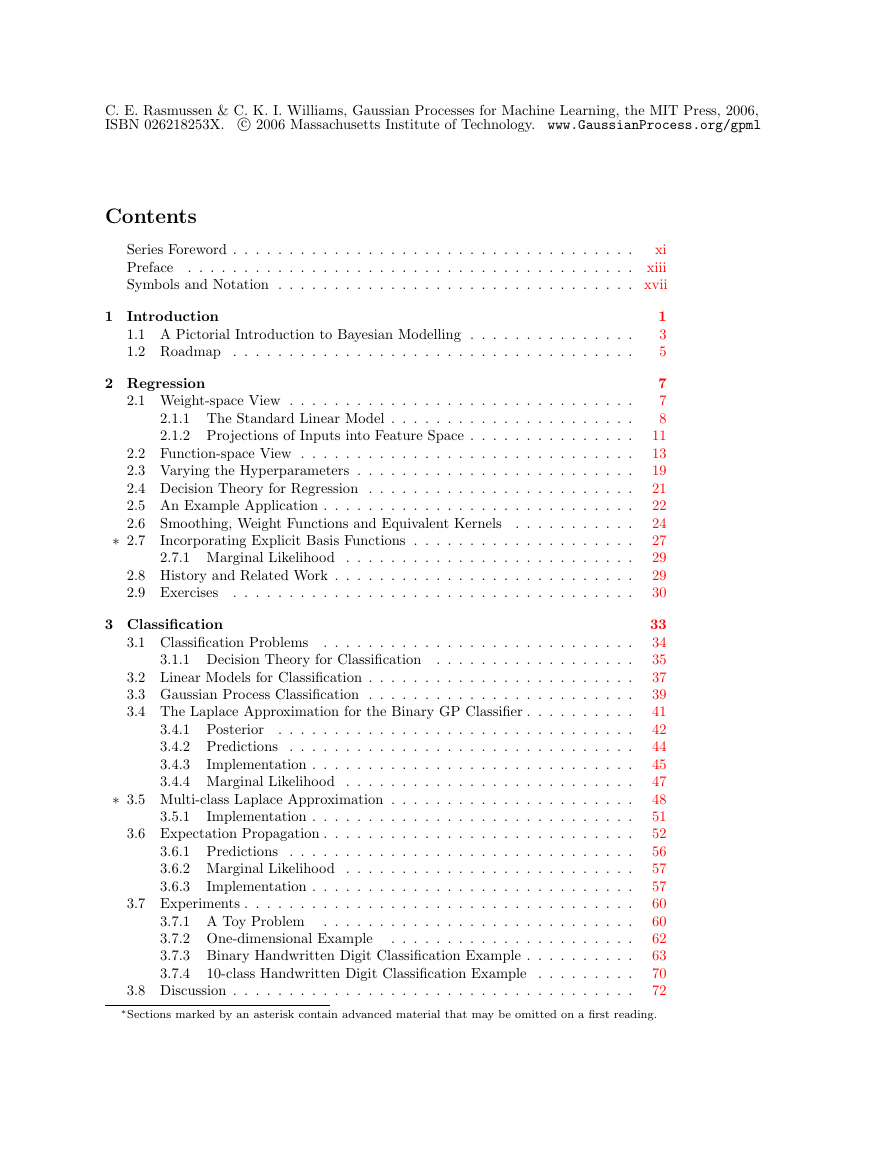

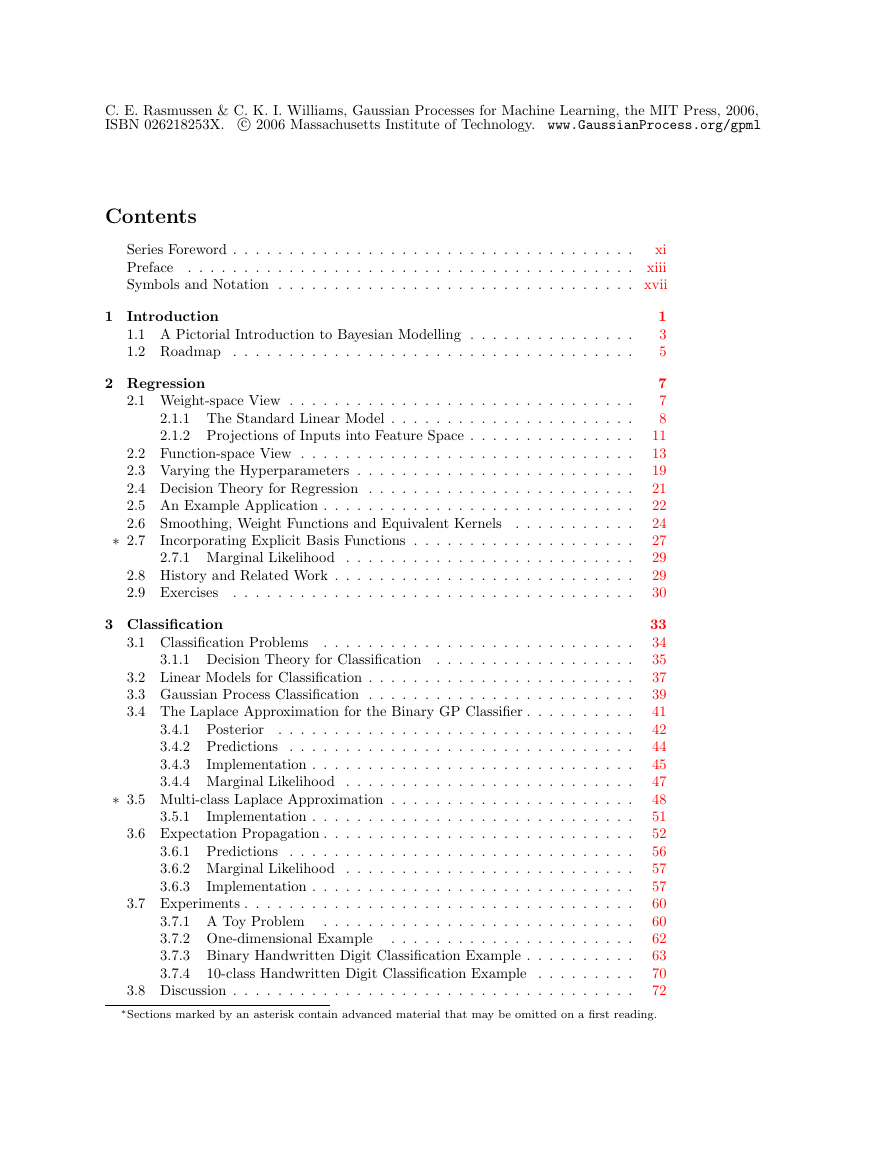

Series Foreword

Preface

Symbols and Notation

Introduction

A Pictorial Introduction to Bayesian Modelling

Roadmap

Regression

Weight-space View

The Standard Linear Model

Projections of Inputs into Feature Space

Function-space View

Varying the Hyperparameters

Decision Theory for Regression

An Example Application

Smoothing, Weight Functions and Equivalent Kernels

* Incorporating Explicit Basis Functions

Marginal Likelihood

History and Related Work

Exercises

Classification

Classification Problems

Decision Theory for Classification

Linear Models for Classification

Gaussian Process Classification

The Laplace Approximation for the Binary GP Classifier

Posterior

Predictions

Implementation

Marginal Likelihood

* Multi-class Laplace Approximation

Implementation

Expectation Propagation

Predictions

Marginal Likelihood

Implementation

Experiments

A Toy Problem

One-dimensional Example

Binary Handwritten Digit Classification Example

10-class Handwritten Digit Classification Example

Discussion

* Appendix: Moment Derivations

Exercises

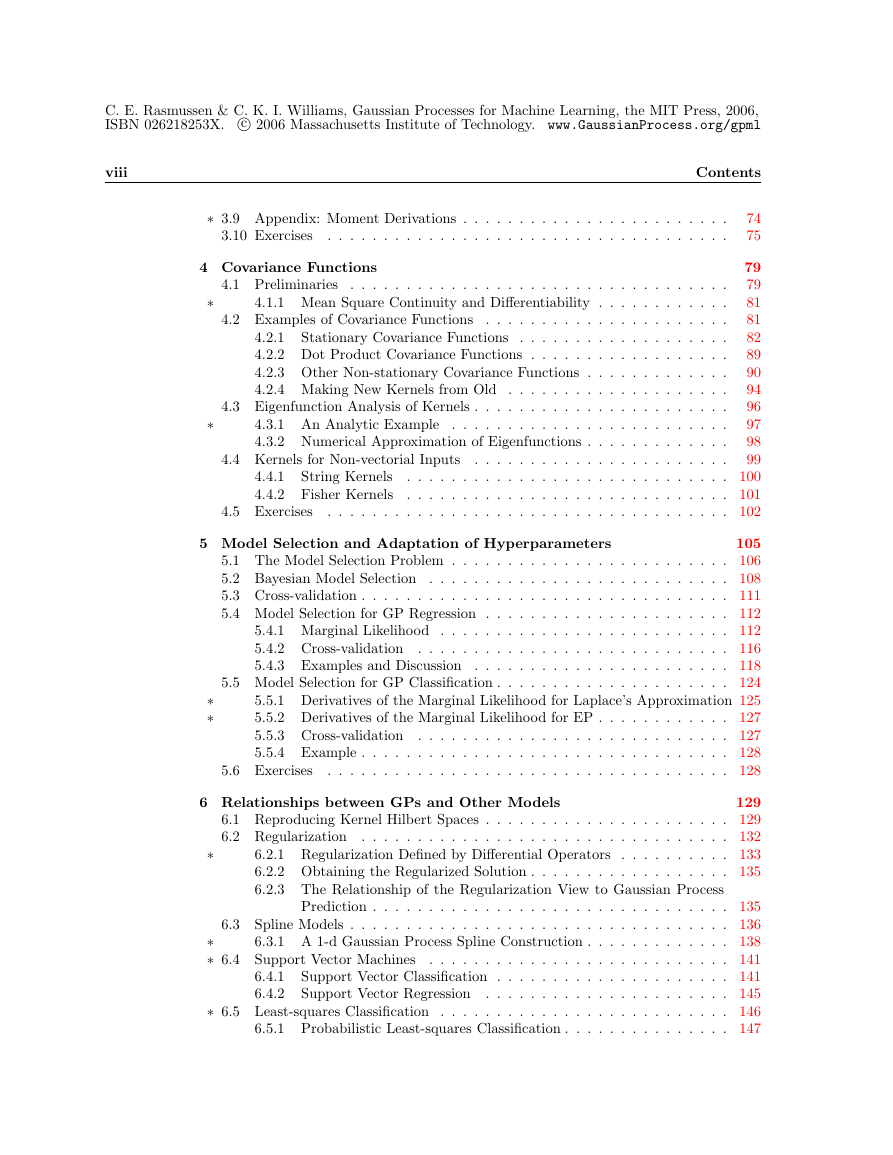

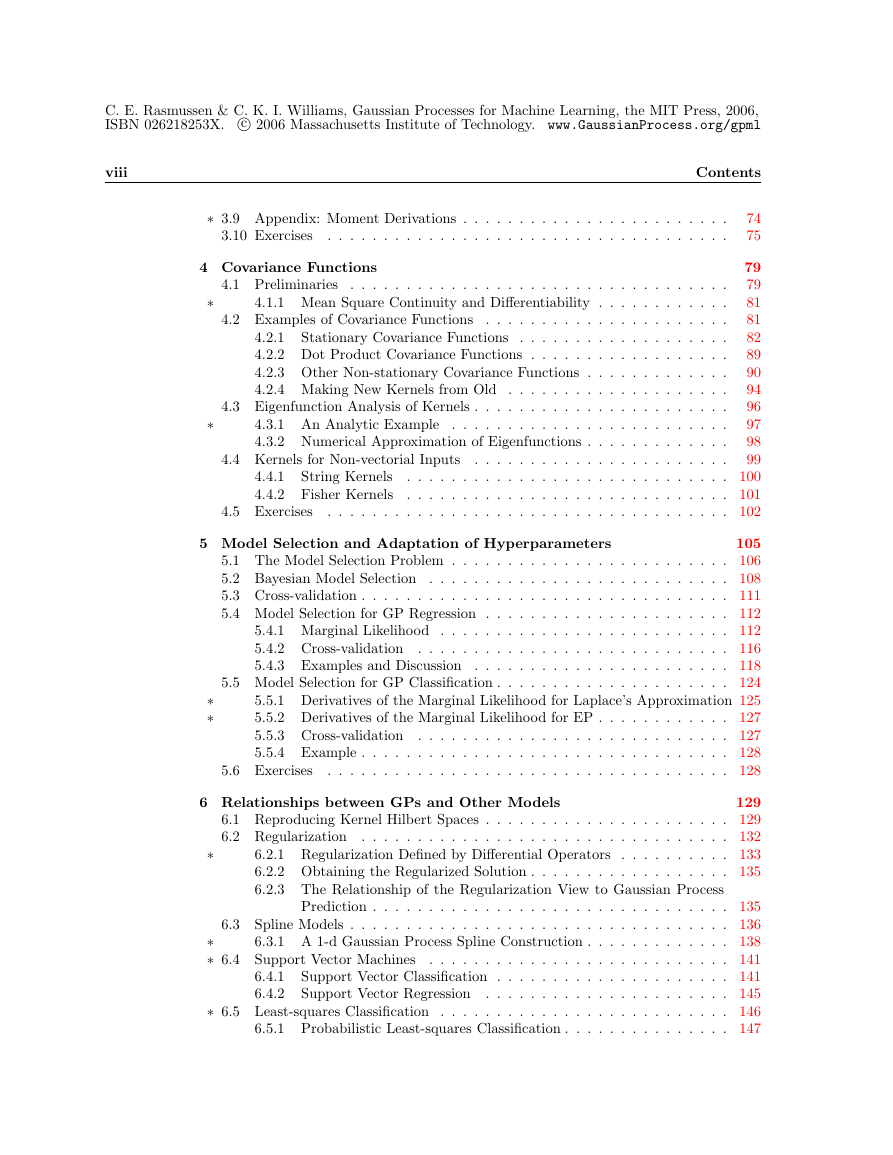

Covariance Functions

Preliminaries

* Mean Square Continuity and Differentiability

Examples of Covariance Functions

Stationary Covariance Functions

Dot Product Covariance Functions

Other Non-stationary Covariance Functions

Making New Kernels from Old

Eigenfunction Analysis of Kernels

* An Analytic Example

Numerical Approximation of Eigenfunctions

Kernels for Non-vectorial Inputs

String Kernels

Fisher Kernels

Exercises

Model Selection and Adaptation of Hyperparameters

The Model Selection Problem

Bayesian Model Selection

Cross-validation

Model Selection for GP Regression

Marginal Likelihood

Cross-validation

Examples and Discussion

Model Selection for GP Classification

* Derivatives of the Marginal Likelihood for Laplace's Approximation

* Derivatives of the Marginal Likelihood for EP

Cross-validation

Example

Exercises

Relationships between GPs and Other Models

Reproducing Kernel Hilbert Spaces

Regularization

* Regularization Defined by Differential Operators

Obtaining the Regularized Solution

The Relationship of the Regularization View to Gaussian Process Prediction

Spline Models

* A 1-d Gaussian Process Spline Construction

* Support Vector Machines

Support Vector Classification

Support Vector Regression

* Least-squares Classification

Probabilistic Least-squares Classification

* Relevance Vector Machines

Exercises

Theoretical Perspectives

The Equivalent Kernel

Some Specific Examples of Equivalent Kernels

* Asymptotic Analysis

Consistency

Equivalence and Orthogonality

* Average-case Learning Curves

* PAC-Bayesian Analysis

The PAC Framework

PAC-Bayesian Analysis

PAC-Bayesian Analysis of GP Classification

Comparison with Other Supervised Learning Methods

* Appendix: Learning Curve for the Ornstein-Uhlenbeck Process

Exercises

Approximation Methods for Large Datasets

Reduced-rank Approximations of the Gram Matrix

Greedy Approximation

Approximations for GPR with Fixed Hyperparameters

Subset of Regressors

The Nyström Method

Subset of Datapoints

Projected Process Approximation

Bayesian Committee Machine

Iterative Solution of Linear Systems

Comparison of Approximate GPR Methods

Approximations for GPC with Fixed Hyperparameters

* Approximating the Marginal Likelihood and its Derivatives

* Appendix: Equivalence of SR and GPR Using the Nyström Approximate Kernel

Exercises

Further Issues and Conclusions

Multiple Outputs

Noise Models with Dependencies

Non-Gaussian Likelihoods

Derivative Observations

Prediction with Uncertain Inputs

Mixtures of Gaussian Processes

Global Optimization

Evaluation of Integrals

Student's t Process

Invariances

Latent Variable Models

Conclusions and Future Directions

Appendix Mathematical Background

Joint, Marginal and Conditional Probability

Gaussian Identities

Matrix Identities

Matrix Derivatives

Matrix Norms

Cholesky Decomposition

Entropy and Kullback-Leibler Divergence

Limits

Measure and Integration

$L_p$ Spaces

Fourier Transforms

Convexity

Appendix Gaussian Markov Processes

Fourier Analysis

Sampling and Periodization

Continuous-time Gaussian Markov Processes

Continuous-time GMPs on $\mathbb{R}$

The Solution of the Corresponding SDE on the Circle

Discrete-time Gaussian Markov Processes

Discrete-time GMPs on $\mathbb{Z}$

The Solution of the Corresponding Difference Equation on $\mathbb{P}_N$

The Relationship Between Discrete-time and Sampled Continuous-time GMPs

Markov Processes in Higher Dimensions

Appendix Datasets and Code

Bibliography

Author Index

Subject Index
