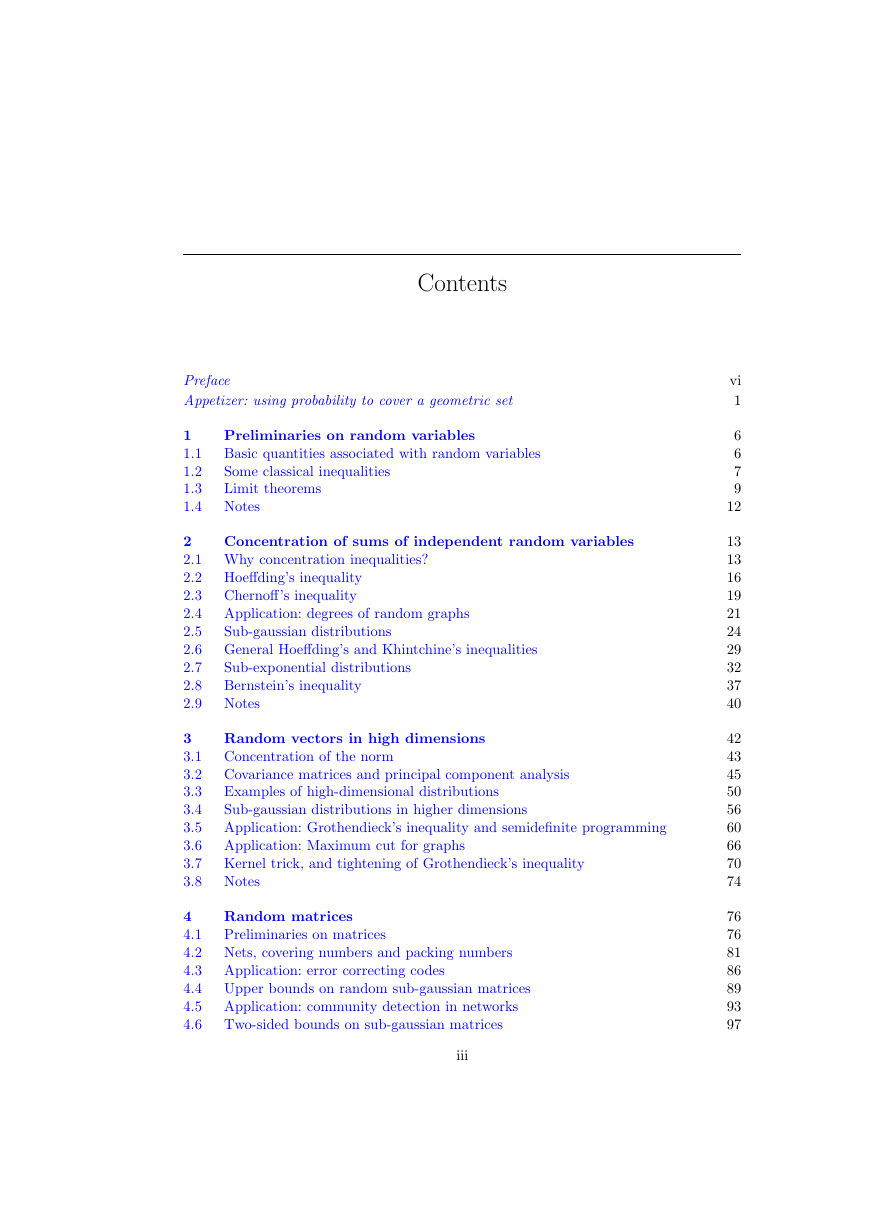

Preface

Who is this book for?

Why this book?

What is this book about?

Prerequisites

A word on exercises

Related reading

Acknowledgements

Appetizer: using probability to cover a geometric set

Preliminaries on random variables

Basic quantities associated with random variables

Some classical inequalities

Limit theorems

Notes

Concentration of sums of independent random variables

Why concentration inequalities?

Hoeffding's inequality

Chernoff's inequality

Application: degrees of random graphs

Sub-gaussian distributions

General Hoeffding's and Khintchine's inequalities

Sub-exponential distributions

Bernstein's inequality

Notes

Random vectors in high dimensions

Concentration of the norm

Covariance matrices and principal component analysis

Examples of high-dimensional distributions

Sub-gaussian distributions in higher dimensions

Application: Grothendieck's inequality and semidefinite programming

Application: Maximum cut for graphs

Kernel trick, and tightening of Grothendieck's inequality

Notes

Random matrices

Preliminaries on matrices

Nets, covering numbers and packing numbers

Application: error correcting codes

Upper bounds on random sub-gaussian matrices

Application: community detection in networks

Two-sided bounds on sub-gaussian matrices

Application: covariance estimation and clustering

Notes

Concentration without independence

Concentration of Lipschitz functions on the sphere

Concentration on other metric measure spaces

Application: Johnson-Lindenstrauss Lemma

Matrix Bernstein's inequality

Application: community detection in sparse networks

Application: covariance estimation for general distributions

Notes

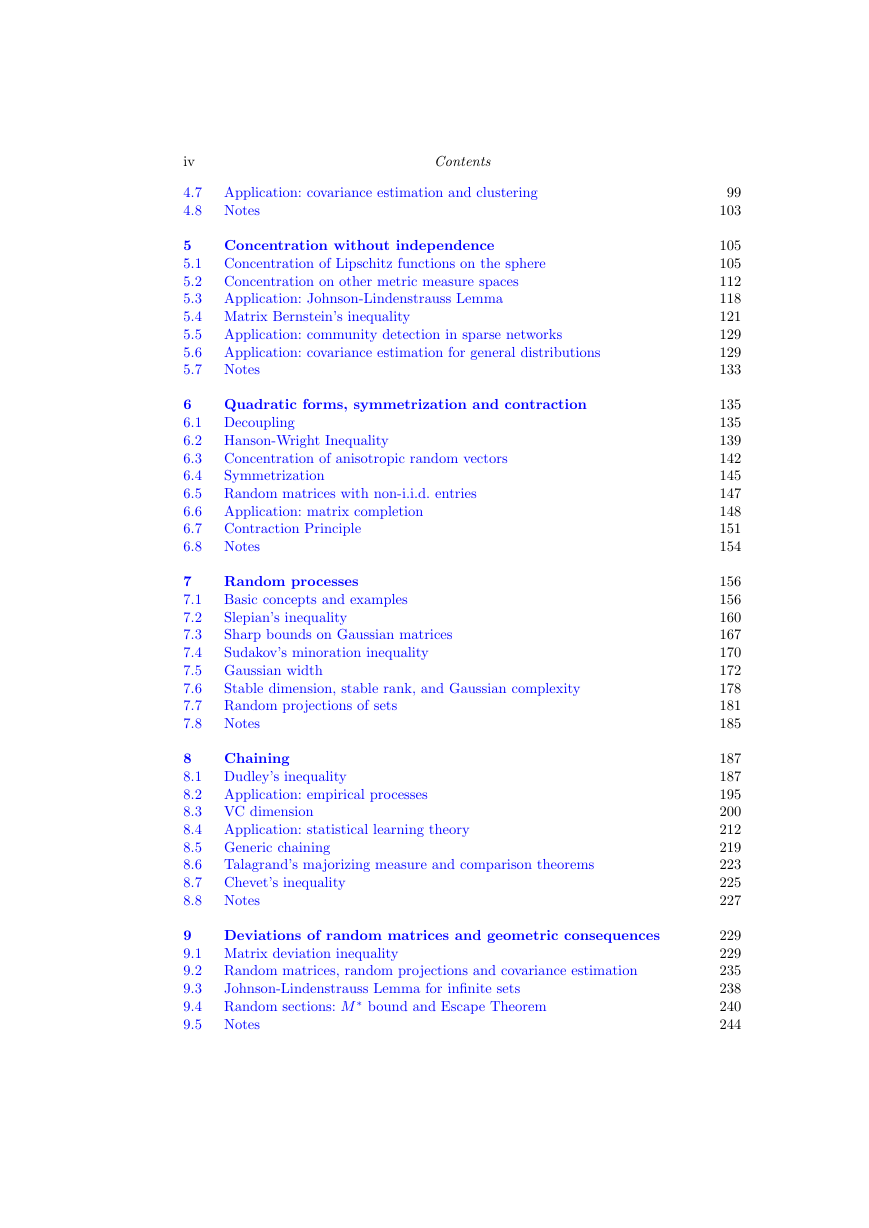

Quadratic forms, symmetrization and contraction

Decoupling

Hanson-Wright Inequality

Concentration of anisotropic random vectors

Symmetrization

Random matrices with non-i.i.d. entries

Application: matrix completion

Contraction Principle

Notes

Random processes

Basic concepts and examples

Slepian's inequality

Sharp bounds on Gaussian matrices

Sudakov's minoration inequality

Gaussian width

Stable dimension, stable rank, and Gaussian complexity

Random projections of sets

Notes

Chaining

Dudley's inequality

Application: empirical processes

VC dimension

Application: statistical learning theory

Generic chaining

Talagrand's majorizing measure and comparison theorems

Chevet's inequality

Notes

Deviations of random matrices and geometric consequences

Matrix deviation inequality

Random matrices, random projections and covariance estimation

Johnson-Lindenstrauss Lemma for infinite sets

Random sections: M* bound and Escape Theorem

Notes

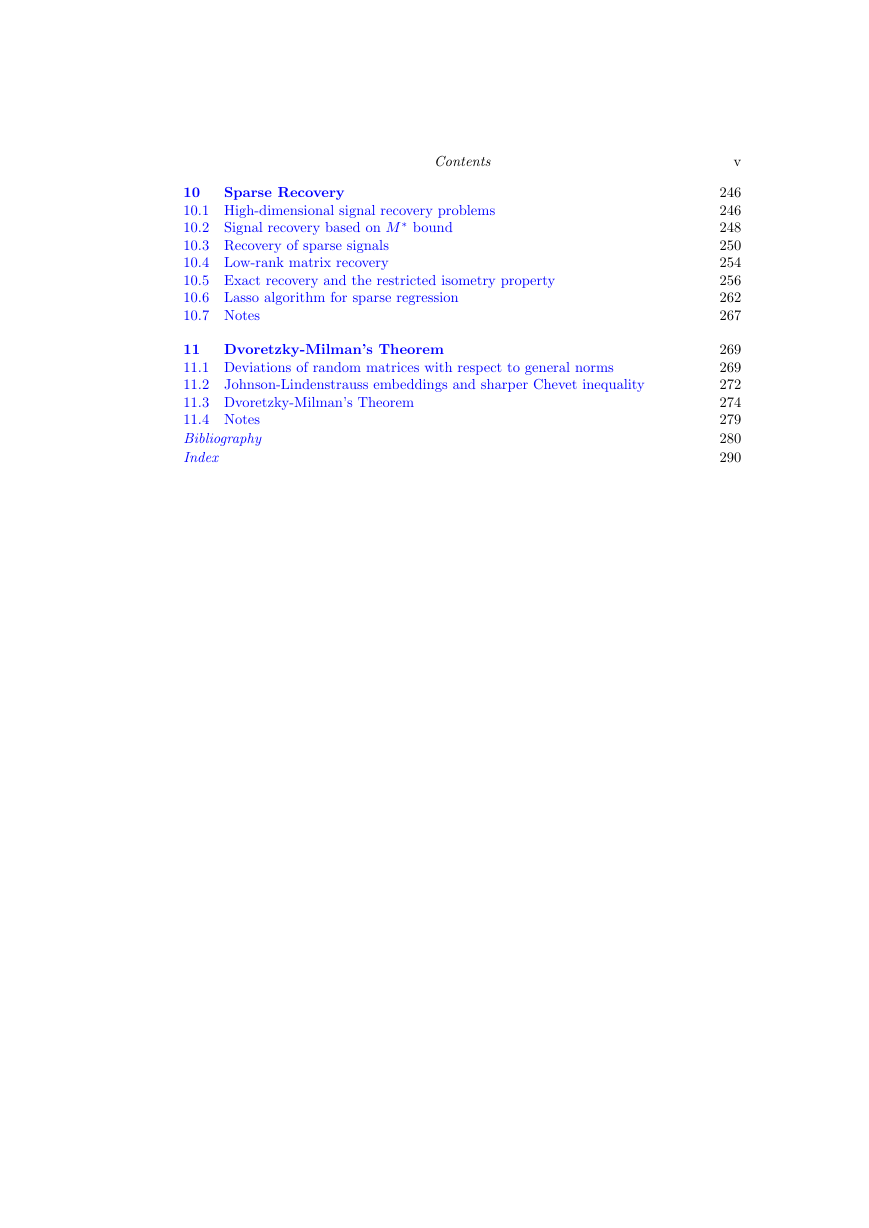

Sparse Recovery

High-dimensional signal recovery problems

Signal recovery based on M* bound

Recovery of sparse signals

Low-rank matrix recovery

Exact recovery and the restricted isometry property

Lasso algorithm for sparse regression

Notes

Dvoretzky-Milman's Theorem

Deviations of random matrices with respect to general norms

Johnson-Lindenstrauss embeddings and sharper Chevet inequality

Dvoretzky-Milman's Theorem

Notes

Bibliography

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc