A Survey on Transfer Learning

Abstract: A major assumption in many machine learning and data mining algorithms is that the training and future data must be in the same feature space and have the same distribution. However, in many real-world applications, this assumption may not hold. For example, we sometimes have a classification task in one domain of interest, but we only have sufficient training data in another domain of interest, where the latter data may be in a different feature space or follow a different data distribution. In such cases, knowledge transfer, if done successfully, would greatly improve the performance of learning by avoiding much expensive data-labeling effort. In recent years, transfer learning has emerged as a new learning framework to address this problem. This survey focuses on categorizing and reviewing the current progress on transfer learning for classification, regression, and clustering problems. In this survey, we discuss the relationship between transfer learning and other related machine learning techniques such as domain adaptation, multitask learning and sample selection bias, as well as covariate shift. We also explore some potential future issues in transfer learning research.

Index Terms: Transfer learning, survey, machine learning, data mining.

1 Introduction

Data mining and machine learning technologies have already achieved significant success in many knowledge engineering areas including classification, regression, and clustering (e.g., [1], [2]). However, many machine learning methods work well only under a common assumption: the training and test data are drawn from the same feature space and the same distribution. When the distribution changes, most statistical models need to be rebuilt from scratch using newly collected training data. In many real-world applications, it is expensive or impossible to recollect the needed training data and rebuild the models. It would be nice to reduce the need and effort to recollect the training data. In such cases, knowledge transfer or transfer learning between task domains would be desirable.

Many examples in knowledge engineering can be found where transfer learning can truly be beneficial. One example is Web-document classification [3], [4], [5], where our goal is to classify a given Web document into several predefined categories. As an example, in the area of Web-document classification (see, e.g., [6]), the labeled examples may be the university webpages that are associated with category information obtained through previous manual-labeling efforts. For a classification task on a newly created website where the data features or data distributions may be different, there may be a lack of labeled training data. As a result, we may not be able to directly apply the webpage classifiers learned on the university website to the new website. In such cases, it would be helpful if we could transfer the classification knowledge into the new domain.

The need for transfer learning may arise when the data can be easily outdated. In this case, the labeled data obtained in one time period may not follow the same distribution in a later time period. For example, in indoor WiFi localization problems, which aim to detect a user’s current location based on previously collected WiFi data, it is very expensive to calibrate WiFi data for building localization models in a large-scale environment, because a user needs to label a large collection of WiFi signal data at each location. However, the WiFi signal-strength values may be a function of time, device, or other dynamic factors. A model trained in one time period or on one device may therefore estimate locations less accurately in another time period or on another device. To reduce the recalibration effort, we might wish to adapt the localization model trained in one time period (the source domain) for a new time period (the target domain), or to adapt the localization model trained on a mobile device (the source domain) for a new mobile device (the target domain), as done in [7].

As a third example, consider the problem of sentiment classification, where our task is to automatically classify the reviews on a product, such as a brand of camera, into positive and negative views. For this classification task, we need to first collect many reviews of the product and annotate them. We would then train a classifier on the reviews with their corresponding labels. Since the distribution of review data among different types of products can be very different, to maintain good classification performance, we need to collect a large amount of labeled data in order to train the review-classification models for each product. However, this data-labeling process can be very expensive. To reduce the effort of annotating reviews for various products, we may want to adapt a classification model that is trained on some products to help learn classification models for some other products. In such cases, transfer learning can save a significant amount of labeling effort [8].

In this survey paper, we give a comprehensive overview of transfer learning for classification, regression, and clustering developed in machine learning and data mining areas. There has been a large amount of work on transfer learning for reinforcement learning in the machine learning literature (e.g., [9], [10]). However, in this paper, we only focus on transfer learning for classification, regression, and clustering problems that are related more closely to data mining tasks. By doing the survey, we hope to provide a useful resource for the data mining and machine learning community.

The rest of the survey is organized as follows: In the next four sections, we first give a general overview and define some notations we will use later. We then briefly survey the history of transfer learning, give a unified definition of transfer learning, and categorize transfer learning into three different settings (given in Table 2 and Fig. 2). For each setting, we review different approaches in detail, as given in Table 3. After that, in Section 6, we review some current research on the topic of “negative transfer,” which happens when knowledge transfer has a negative impact on target learning. In Section 7, we introduce some successful applications of transfer learning and list some published data sets and software toolkits for transfer learning research. Finally, we conclude the paper with a discussion of future work in Section 8.

2 Overview

2.1 A Brief History of Transfer Learning

Traditional data mining and machine learning algorithms make predictions on the future data using statistical models that are trained on previously collected labeled or unlabeled training data [11], [12], [13]. Semisupervised classification [14], [15], [16], [17] addresses the problem that the labeled data may be too few to build a good classifier, by making use of a large amount of unlabeled data and a small amount of labeled data. Variations of supervised and semisupervised learning for imperfect data sets have been studied; for example, Zhu and Wu [18] have studied how to deal with noisy class-label problems. Yang et al. considered cost-sensitive learning [19] when additional tests can be made to future samples. Nevertheless, most of them assume that the distributions of the labeled and unlabeled data are the same. Transfer learning, in contrast, allows the domains, tasks, and distributions used in training and testing to be different. In the real world, we observe many examples of transfer learning. For example, we may find that learning to recognize apples might help to recognize pears. Similarly, learning to play the electronic organ may help facilitate learning the piano. The study of transfer learning is motivated by the fact that people can intelligently apply knowledge learned previously to solve new problems faster or with better solutions. The fundamental motivation for transfer learning in the field of machine learning was discussed in a NIPS-95 workshop on “Learning to Learn,” which focused on the need for lifelong machine learning methods that retain and reuse previously learned knowledge.

Research on transfer learning has attracted more and more attention since 1995 under different names: learning to learn, life-long learning, knowledge transfer, inductive transfer, multitask learning, knowledge consolidation, context-sensitive learning, knowledge-based inductive bias, metalearning, and incremental/cumulative learning [20]. Among these, a learning technique closely related to transfer learning is the multitask learning framework [21], which tries to learn multiple tasks simultaneously even when they are different. A typical approach for multitask learning is to uncover the common (latent) features that can benefit each individual task.

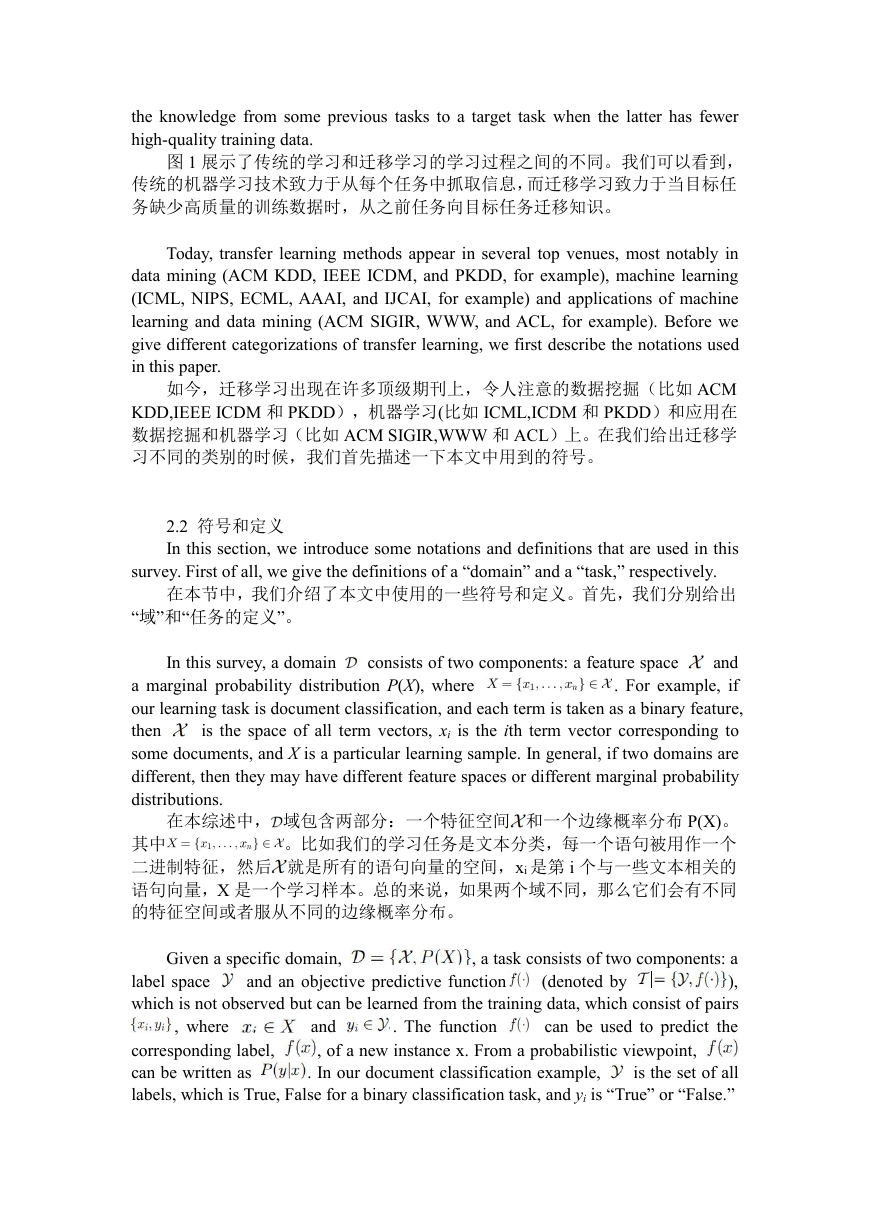

In 2005, the Broad Agency Announcement (BAA) 05-29 of the Defense Advanced Research Projects Agency (DARPA)’s Information Processing Technology Office (IPTO) gave a new mission of transfer learning: the ability of a system to recognize and apply knowledge and skills learned in previous tasks to novel tasks. In this definition, transfer learning aims to extract the knowledge from one or more source tasks and apply the knowledge to a target task. In contrast to multitask learning, rather than learning all of the source and target tasks simultaneously, transfer learning cares most about the target task. The roles of the source and target tasks are no longer symmetric in transfer learning.

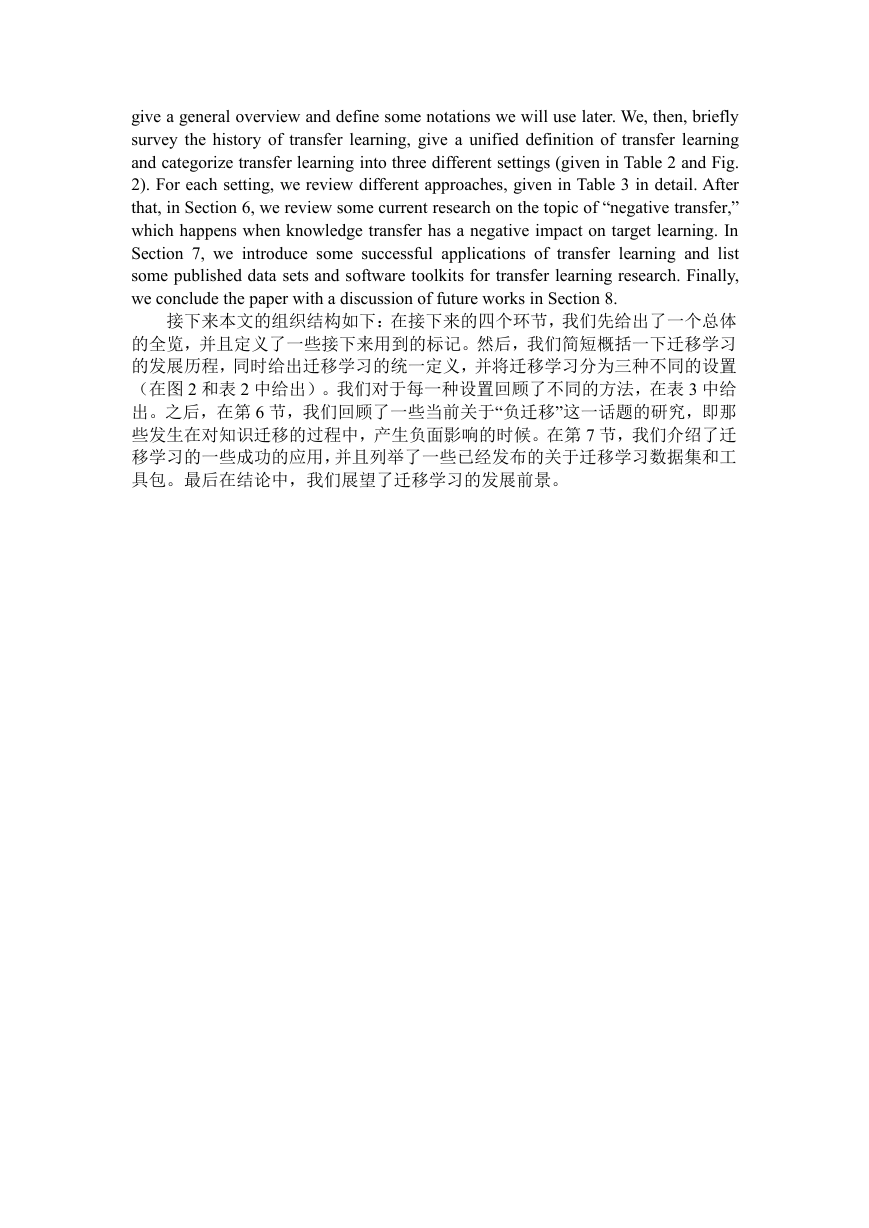

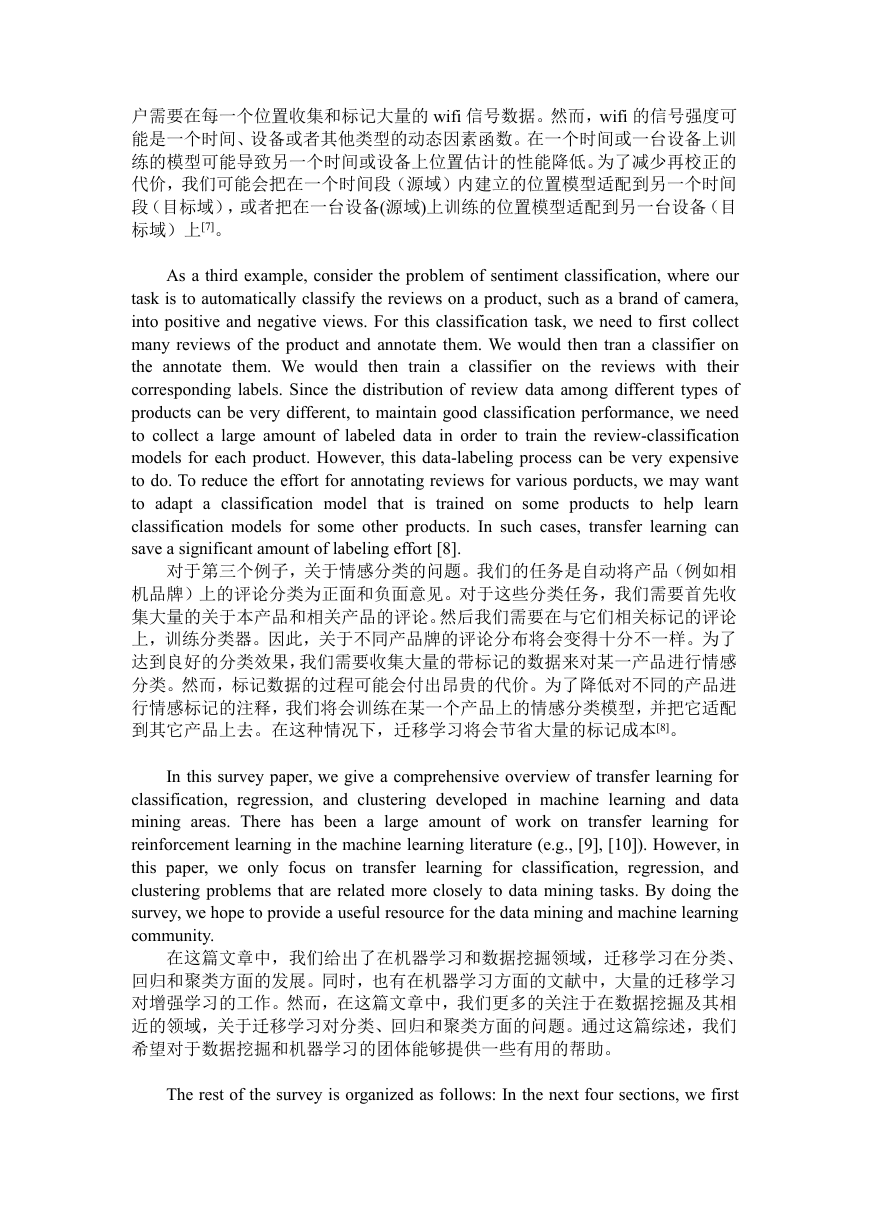

Fig. 1. Different learning processes between (a) traditional machine learning and (b) transfer learning.

Fig. 1 shows the difference between the learning processes of traditional and transfer learning techniques. As we can see, traditional machine learning techniques try to learn each task from scratch, while transfer learning techniques try to transfer the knowledge from some previous tasks to a target task when the latter has fewer high-quality training data.
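A one-parameter linear model $y \approx w x$ is enough to sketch the contrast in Fig. 1. Learning from scratch fits the target weight to the few target pairs with ridge regularization toward 0. A transfer variant instead regularizes toward a weight $w_S$ already learned on the source task. It minimizes $\sum_i (w x_i - y_i)^2 + \lambda (w - w_S)^2$, whose closed-form minimizer is $w = (\sum_i x_i y_i + \lambda w_S) / (\sum_i x_i^2 + \lambda)$. This "biased regularization" scheme is an illustration chosen here, not a method introduced by this survey. The function name `fit_weight`, the synthetic data, and $\lambda = 1$ are assumptions.

```python
def fit_weight(pairs, lam, prior=0.0):
    """Minimize sum_i (w*x_i - y_i)^2 + lam * (w - prior)^2 in closed form.

    prior=0.0 gives ordinary ridge regression (learning from scratch);
    prior=w_S pulls the target weight toward the source weight (transfer).
    """
    sxy = sum(x * y for x, y in pairs)
    sxx = sum(x * x for x, _ in pairs)
    return (sxy + lam * prior) / (sxx + lam)


# Source task: plenty of synthetic data generated by y = 2.0 * x.
source = [(x, 2.0 * x) for x in range(1, 101)]
w_s = fit_weight(source, lam=1.0)

# Target task: related (y = 2.2 * x) but with a single labeled example.
target = [(1.0, 2.2)]
w_scratch = fit_weight(target, lam=1.0)              # shrinks toward 0
w_transfer = fit_weight(target, lam=1.0, prior=w_s)  # shrinks toward w_S
print(round(w_scratch, 2), round(w_transfer, 2))     # 1.1 2.1
```

The transferred estimate lands closer to the true target weight (2.2) only because the two tasks are closely related. If they were not, the same bias toward $w_S$ could hurt, which is the negative transfer discussed in Section 6.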

Today, transfer learning methods appear in several top venues, most notably in data mining (ACM KDD, IEEE ICDM, and PKDD, for example), machine learning (ICML, NIPS, ECML, AAAI, and IJCAI, for example), and applications of machine learning and data mining (ACM SIGIR, WWW, and ACL, for example). Before we give different categorizations of transfer learning, we first describe the notations used in this paper.

2.2 Notations and Definitions

In this section, we introduce some notations and definitions that are used in this survey. First of all, we give the definitions of a “domain” and a “task,” respectively.

In this survey, a domain $\mathcal{D}$ consists of two components: a feature space $\mathcal{X}$ and a marginal probability distribution $P(X)$, where $X = \{x_1, \ldots, x_n\} \in \mathcal{X}$. For example, if our learning task is document classification, and each term is taken as a binary feature, then $\mathcal{X}$ is the space of all term vectors, $x_i$ is the $i$th term vector corresponding to some documents, and $X$ is a particular learning sample. In general, if two domains are different, then they may have different feature spaces or different marginal probability distributions.
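Here is a minimal Python sketch of a domain for the binary-term document example. The vocabulary, the helper names (`to_term_vector`, `empirical_marginal`, `make_domain`), and the toy documents are illustrative assumptions. Summarizing $P(X)$ by per-term occurrence rates is also an assumption: the true marginal is a distribution over whole term vectors, and these rates are only a simple summary of it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    """A domain D = {feature space, marginal distribution}.

    The feature space is represented by its vocabulary (one binary feature
    per term). As an assumption of this sketch, the marginal P(X) is
    summarized by the fraction of documents in which each term occurs.
    """
    vocabulary: tuple[str, ...]
    term_frequencies: tuple[float, ...]


def to_term_vector(document: str, vocabulary: tuple[str, ...]) -> tuple[int, ...]:
    # x_i: 1 if the term occurs in the document, else 0.
    words = set(document.lower().split())
    return tuple(int(term in words) for term in vocabulary)


def empirical_marginal(sample: list[tuple[int, ...]]) -> tuple[float, ...]:
    # Per-term occurrence rate over the learning sample X = {x_1, ..., x_n}.
    n = len(sample)
    return tuple(sum(column) / n for column in zip(*sample))


def make_domain(documents: list[str], vocabulary: tuple[str, ...]) -> Domain:
    sample = [to_term_vector(doc, vocabulary) for doc in documents]
    return Domain(vocabulary, empirical_marginal(sample))


vocab = ("course", "professor", "sale", "price")
university = make_domain(["Course page of professor Smith", "Professor office hours"], vocab)
shop = make_domain(["Big sale on every price", "Course materials on sale"], vocab)
print(university.term_frequencies)  # (0.5, 1.0, 0.0, 0.0)
print(shop.term_frequencies)        # (0.5, 0.0, 1.0, 0.5)
```

The two toy domains share a feature space (the same vocabulary) but have different marginals. That is one of the two ways in which domains can differ.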

Given a specific domain, $\mathcal{D} = \{\mathcal{X}, P(X)\}$, a task consists of two components: a label space $\mathcal{Y}$ and an objective predictive function $f(\cdot)$ (denoted by $\mathcal{T} = \{\mathcal{Y}, f(\cdot)\}$), which is not observed but can be learned from the training data, which consist of pairs $\{x_i, y_i\}$, where $x_i \in X$ and $y_i \in \mathcal{Y}$. The function $f(\cdot)$ can be used to predict the corresponding label, $f(x)$, of a new instance $x$. From a probabilistic viewpoint, $f(x)$ can be written as $P(y \mid x)$. In our document classification example, $\mathcal{Y}$ is the set of all labels, which is {True, False} for a binary classification task, and $y_i$ is “True” or “False.”
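The sketch below learns $f(x) \approx P(y = \text{True} \mid x)$ from labeled pairs over the label space {True, False}. It uses a Bernoulli naive Bayes model with add-one smoothing, chosen here only as a compact example learner. The survey does not prescribe any particular model, and the function name and toy features are hypothetical.

```python
import math

LABELS = (True, False)  # the label space Y for binary classification


def train_naive_bayes(pairs, num_features):
    """Fit a Bernoulli naive Bayes model on pairs (x_i, y_i).

    Returns a function f(x) that estimates P(y=True | x).
    """
    counts = {y: 0 for y in LABELS}
    feature_counts = {y: [0] * num_features for y in LABELS}
    for x, y in pairs:
        counts[y] += 1
        for j, value in enumerate(x):
            feature_counts[y][j] += value
    n = len(pairs)

    def log_joint(x, y):
        # log P(y) + sum_j log P(x_j | y), with add-one (Laplace) smoothing.
        total = math.log((counts[y] + 1) / (n + len(LABELS)))
        for j, value in enumerate(x):
            p_on = (feature_counts[y][j] + 1) / (counts[y] + 2)
            total += math.log(p_on if value else 1.0 - p_on)
        return total

    def f(x):
        scores = {y: log_joint(x, y) for y in LABELS}
        # Normalize the two joint scores (log-sum-exp) to obtain P(y=True | x).
        m = max(scores.values())
        z = sum(math.exp(s - m) for s in scores.values())
        return math.exp(scores[True] - m) / z

    return f


# Hypothetical toy features: (contains "great", contains "broken").
training = [((1, 0), True), ((1, 0), True), ((0, 1), False), ((0, 1), False)]
f = train_naive_bayes(training, num_features=2)
print(round(f((1, 0)), 3))  # 0.9
print(round(f((0, 1)), 3))  # 0.1
```

Here $f(\cdot)$ is never observed directly. It is estimated from the pairs, and its output is read as $P(y \mid x)$. A hard label is obtained by thresholding at 0.5.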

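For simplicity, in this survey, we only consider the case where there is one source domain $\mathcal{D}_S$ and one target domain $\mathcal{D}_T$, as this is by far the most popular of the research works in the literature. More specifically, we denote the source domain data as $D_S = \{(x_{S_1}, y_{S_1}), \ldots, (x_{S_{n_S}}, y_{S_{n_S}})\}$, where $x_{S_i} \in \mathcal{X}_S$ is the data instance and $y_{S_i} \in \mathcal{Y}_S$ is the corresponding class label. In our document classification example, $D_S$ can be a set of term vectors together with their associated true or false class labels. Similarly, we denote the target-domain data as $D_T = \{(x_{T_1}, y_{T_1}), \ldots, (x_{T_{n_T}}, y_{T_{n_T}})\}$, where the input $x_{T_i}$ is in $\mathcal{X}_T$ and $y_{T_i} \in \mathcal{Y}_T$ is the corresponding output. In most cases, $0 \le n_T \ll n_S$.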
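In code, $D_S$ and $D_T$ are simply lists of (instance, label) pairs. The sketch below checks that each $x_i$ lies in the feature space and each $y_i$ in the label space, then reports $n_S$ and $n_T$. Fixed-length binary term vectors as the feature space, the helper name `validate_domain_data`, and the toy sizes are assumptions made for illustration.

```python
def validate_domain_data(pairs, num_features, label_space):
    """Check every (x_i, y_i): x_i in the feature space, y_i in the label space.

    As an assumption of this sketch, the feature space is the set of binary
    term vectors of length num_features. Returns the number of pairs, n.
    """
    for x, y in pairs:
        if len(x) != num_features or any(v not in (0, 1) for v in x):
            raise ValueError(f"instance {x!r} is not in the feature space")
        if y not in label_space:
            raise ValueError(f"label {y!r} is not in the label space")
    return len(pairs)


source_data = [((1, 0, 1), True), ((0, 1, 0), False)] * 500  # D_S: many labeled pairs
target_data = [((1, 1, 0), True)]                           # D_T: very few labeled pairs
n_S = validate_domain_data(source_data, 3, {True, False})
n_T = validate_domain_data(target_data, 3, {True, False})
print(n_S, n_T)  # 1000 1
```

An empty `target_data` list ($n_T = 0$) is also valid under $0 \le n_T \ll n_S$. In that case, no labeled target data are available at all.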

We now give a unified definition of transfer learning.

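Definition 1 (Transfer Learning). Given a source domain $\mathcal{D}_S$ and learning task $\mathcal{T}_S$, a target domain $\mathcal{D}_T$ and learning task $\mathcal{T}_T$, transfer learning aims to help improve the learning of the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$, where $\mathcal{D}_S \neq \mathcal{D}_T$, or $\mathcal{T}_S \neq \mathcal{T}_T$.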

In the above definition, a domain is a pair $\mathcal{D} = \{\mathcal{X}, P(X)\}$. Thus, the condition $\mathcal{D}_S \neq \mathcal{D}_T$ implies that either $\mathcal{X}_S \neq \mathcal{X}_T$ or $P_S(X) \neq P_T(X)$. For example, in our document classification example, this means that between a source document set and a target document set, either the term features are different between the two sets (e.g., they use different languages), or their marginal distributions are different.
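Definition 1 and the condition that follows it can be written as two small predicates. One checks whether a setting qualifies as transfer learning ($\mathcal{D}_S \neq \mathcal{D}_T$ or $\mathcal{T}_S \neq \mathcal{T}_T$). The other reports which component of the domain pair differs. Several simplifications are assumptions made here: domains are compared by a vocabulary and a (term, frequency) summary of $P(X)$, and tasks by a label space and a hypothetical `predictor_name` standing in for the unobserved $f(\cdot)$.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    feature_space: frozenset  # X: here, the vocabulary of binary term features
    marginal: tuple           # P(X), summarized as (term, frequency) pairs (assumption)


@dataclass(frozen=True)
class Task:
    label_space: frozenset    # Y
    predictor_name: str       # hypothetical label standing in for f(.)


def is_transfer_learning_setting(d_s, t_s, d_t, t_t):
    """Definition 1 applies when D_S != D_T or T_S != T_T."""
    return d_s != d_t or t_s != t_t


def how_domains_differ(d_s, d_t):
    """List which component makes D_S != D_T: X_S != X_T and/or P_S(X) != P_T(X).

    When the feature spaces differ, the marginals are defined over different
    spaces, so they are reported as different as well.
    """
    reasons = []
    if d_s.feature_space != d_t.feature_space:
        reasons.append("feature space")
    if d_s.marginal != d_t.marginal:
        reasons.append("marginal distribution")
    return reasons


shop_en = Domain(frozenset({"price", "sale"}), (("price", 0.5), ("sale", 0.5)))
news_en = Domain(frozenset({"price", "sale"}), (("price", 0.9), ("sale", 0.1)))
shop_es = Domain(frozenset({"precio", "oferta"}), (("oferta", 0.5), ("precio", 0.5)))
task = Task(frozenset({True, False}), "f_topic")

print(is_transfer_learning_setting(shop_en, task, shop_en, task))  # False
print(how_domains_differ(shop_en, news_en))  # ['marginal distribution']
print(how_domains_differ(shop_en, shop_es))  # ['feature space', 'marginal distribution']
```

The English and Spanish shop domains mirror the survey's "different languages" case. The two English domains differ only in their marginal distributions.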
