Copyright

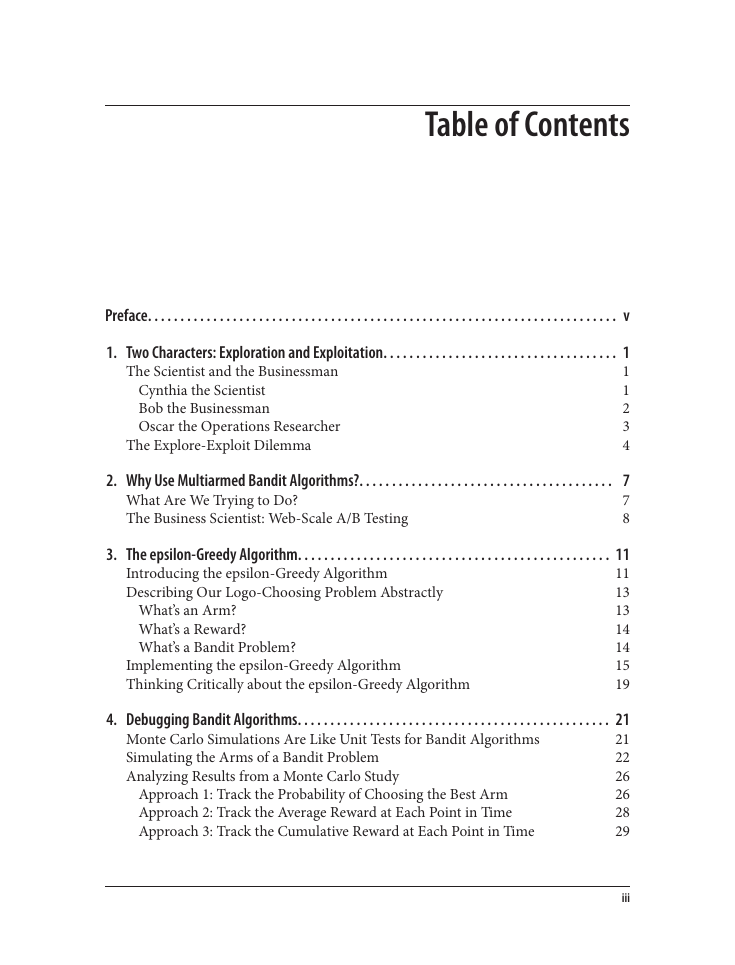

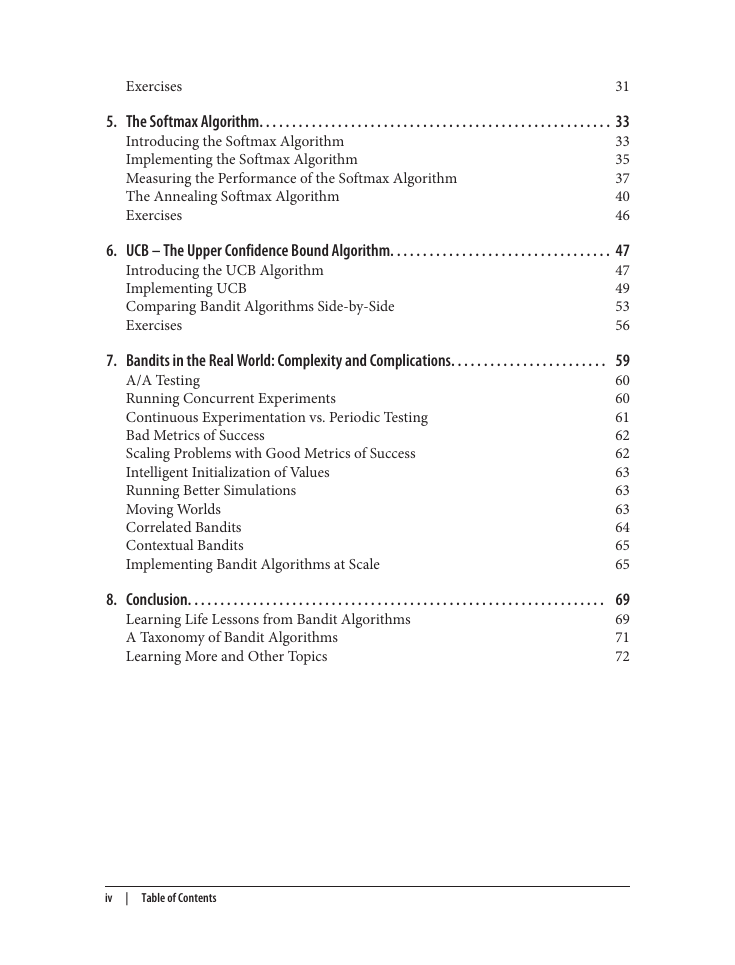

Table of Contents

Preface

Finding the Code for This Book

Dealing with Jargon: A Glossary

Conventions Used in This Book

Using Code Examples

Safari® Books Online

How to Contact Us

Acknowledgments

Chapter 1. Two Characters: Exploration and Exploitation

The Scientist and the Businessman

Cynthia the Scientist

Bob the Businessman

Oscar the Operations Researcher

The Explore-Exploit Dilemma

Chapter 2. Why Use Multiarmed Bandit Algorithms?

What Are We Trying to Do?

The Business Scientist: Web-Scale A/B Testing

Chapter 3. The epsilon-Greedy Algorithm

Introducing the epsilon-Greedy Algorithm

Describing Our Logo-Choosing Problem Abstractly

What’s an Arm?

What’s a Reward?

What’s a Bandit Problem?

Implementing the epsilon-Greedy Algorithm

Thinking Critically about the epsilon-Greedy Algorithm

Chapter 4. Debugging Bandit Algorithms

Monte Carlo Simulations Are Like Unit Tests for Bandit Algorithms

Simulating the Arms of a Bandit Problem

Analyzing Results from a Monte Carlo Study

Approach 1: Track the Probability of Choosing the Best Arm

Approach 2: Track the Average Reward at Each Point in Time

Approach 3: Track the Cumulative Reward at Each Point in Time

Exercises

Chapter 5. The Softmax Algorithm

Introducing the Softmax Algorithm

Implementing the Softmax Algorithm

Measuring the Performance of the Softmax Algorithm

The Annealing Softmax Algorithm

Exercises

Chapter 6. UCB – The Upper Confidence Bound Algorithm

Introducing the UCB Algorithm

Implementing UCB

Comparing Bandit Algorithms Side-by-Side

Exercises

Chapter 7. Bandits in the Real World: Complexity and Complications

A/A Testing

Running Concurrent Experiments

Continuous Experimentation vs. Periodic Testing

Bad Metrics of Success

Scaling Problems with Good Metrics of Success

Intelligent Initialization of Values

Running Better Simulations

Moving Worlds

Correlated Bandits

Contextual Bandits

Implementing Bandit Algorithms at Scale

Chapter 8. Conclusion

Learning Life Lessons from Bandit Algorithms

A Taxonomy of Bandit Algorithms

Learning More and Other Topics

About the Author
