线

…

…

…

…

…

…

…

…

…

…

…

…

…

名

姓

号

学

班

级

业

专

院

学

…

…

…

…

…

…

…

…

…

…

…

…

…

…

密

…

…

…

…

…

…

…

…

…

封

…

…

…

…

…

…

…

…

…

山东大学 机器学习与模式识别 课程试卷 A

2018-2019 学年 第一 学期

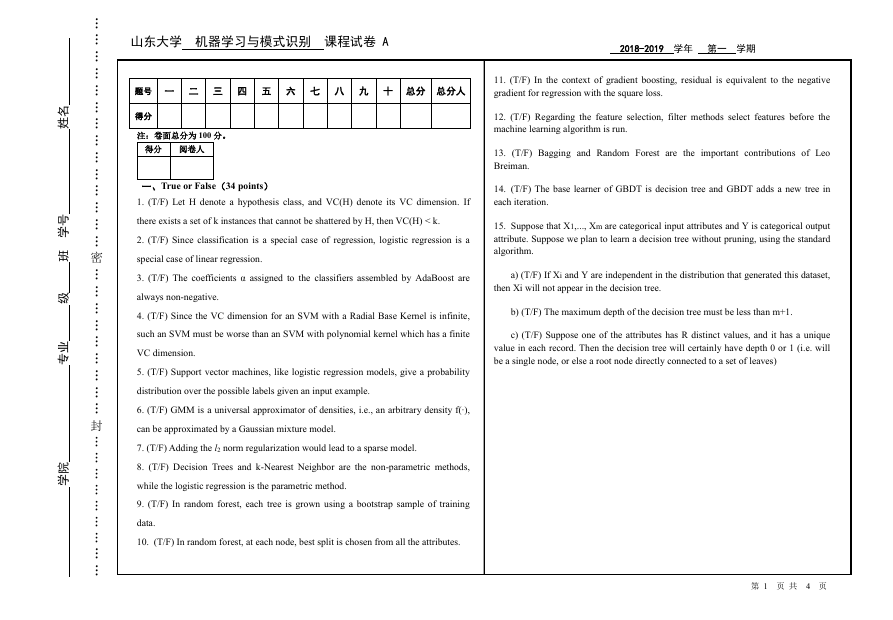

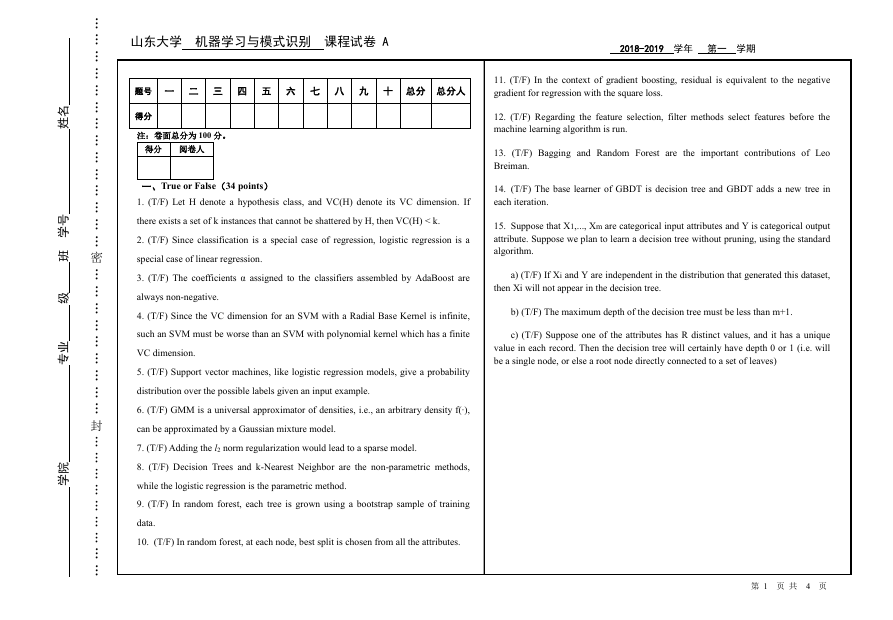

题号 一 二 三 四 五 六 七 八 九 十 总分 总分人

得分

注:卷面总分为 100 分。

得分 阅卷人

一、True or False(34 points)

1. (T/F) Let H denote a hypothesis class, and VC(H) denote its VC dimension. If

there exists a set of k instances that cannot be shattered by H, then VC(H) < k.

2. (T/F) Since classification is a special case of regression, logistic regression is a

special case of linear regression.

3. (T/F) The coefficients α assigned to the classifiers assembled by AdaBoost are

always non-negative.

4. (T/F) Since the VC dimension for an SVM with a Radial Base Kernel is infinite,

such an SVM must be worse than an SVM with polynomial kernel which has a finite

VC dimension.

5. (T/F) Support vector machines, like logistic regression models, give a probability

distribution over the possible labels given an input example.

6. (T/F) GMM is a universal approximator of densities, i.e., an arbitrary density f(∙),

can be approximated by a Gaussian mixture model.

7. (T/F) Adding the l2 norm regularization would lead to a sparse model.

8. (T/F) Decision Trees and k-Nearest Neighbor are the non-parametric methods,

while the logistic regression is the parametric method.

9. (T/F) In random forest, each tree is grown using a bootstrap sample of training

data.

10. (T/F) In random forest, at each node, best split is chosen from all the attributes.

11. (T/F) In the context of gradient boosting, residual is equivalent to the negative

gradient for regression with the square loss.

12. (T/F) Regarding the feature selection, filter methods select features before the

machine learning algorithm is run.

13. (T/F) Bagging and Random Forest are the important contributions of Leo

Breiman.

14. (T/F) The base learner of GBDT is decision tree and GBDT adds a new tree in

each iteration.

15. Suppose that X1,..., Xm are categorical input attributes and Y is categorical output

attribute. Suppose we plan to learn a decision tree without pruning, using the standard

algorithm.

a) (T/F) If Xi and Y are independent in the distribution that generated this dataset,

then Xi will not appear in the decision tree.

b) (T/F) The maximum depth of the decision tree must be less than m+1.

c) (T/F) Suppose one of the attributes has R distinct values, and it has a unique

value in each record. Then the decision tree will certainly have depth 0 or 1 (i.e. will

be a single node, or else a root node directly connected to a set of leaves)

第 1 页 共 4 页

�

线

…

…

…

…

…

…

…

…

…

…

…

…

…

名

姓

号

学

班

级

业

专

院

学

…

…

…

…

…

…

…

…

…

…

…

…

…

…

密

…

…

…

…

…

…

…

…

…

封

…

…

…

…

…

…

…

…

…

山东大学 机器学习与模式识别 课程试卷 A

2018-2019 学年 第一 学期

得分 阅卷人

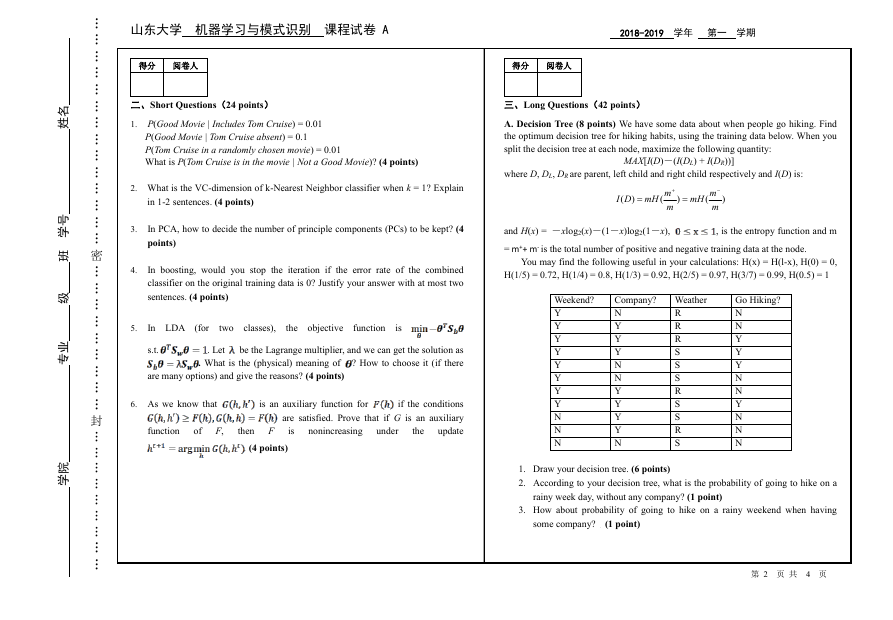

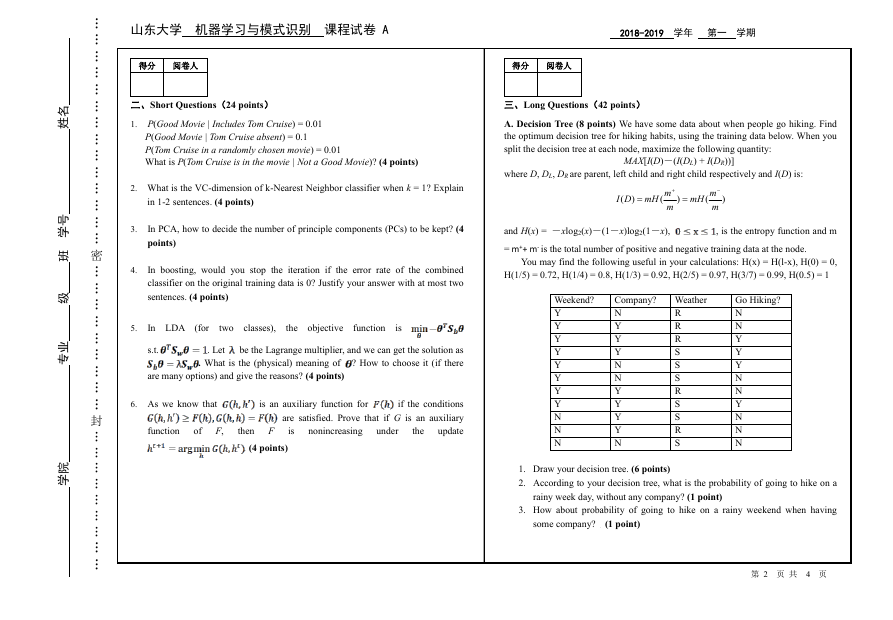

二、Short Questions(24 points)

1. P(Good Movie | Includes Tom Cruise) = 0.01

P(Good Movie | Tom Cruise absent) = 0.1

P(Tom Cruise in a randomly chosen movie) = 0.01

What is P(Tom Cruise is in the movie | Not a Good Movie)? (4 points)

2. What is the VC-dimension of k-Nearest Neighbor classifier when k = 1? Explain

in 1-2 sentences. (4 points)

In PCA, how to decide the number of principle components (PCs) to be kept? (4

points)

In boosting, would you stop the iteration if the error rate of the combined

classifier on the original training data is 0? Justify your answer with at most two

sentences. (4 points)

In LDA

the objective

two classes),

function

(for

is

3.

4.

5.

s.t.

. Let

be the Lagrange multiplier, and we can get the solution as

? How to choose it (if there

. What is the (physical) meaning of

are many options) and give the reasons? (4 points)

6. As we know that

function

of F,

is an auxiliary function for

if the conditions

are satisfied. Prove that if G is an auxiliary

update

nonincreasing

under

the

is

then F

. (4 points)

得分 阅卷人

三、Long Questions(42 points)

A. Decision Tree (8 points) We have some data about when people go hiking. Find

the optimum decision tree for hiking habits, using the training data below. When you

split the decision tree at each node, maximize the following quantity:

where D, DL, DR are parent, left child and right child respectively and I(D) is:

MAX[I(D)-(I(DL) + I(DR))]

DI

(

)

=

mmH

+

(

m

)

=

mmH

−

(

m

)

and H(x) = -xlog2(x)-(1-x)log2(1-x),

= m++ m- is the total number of positive and negative training data at the node.

, is the entropy function and m

You may find the following useful in your calculations: H(x) = H(l-x), H(0) = 0,

H(1/5) = 0.72, H(1/4) = 0.8, H(1/3) = 0.92, H(2/5) = 0.97, H(3/7) = 0.99, H(0.5) = 1

Weekend?

Y

Y

Y

Y

Y

Y

Y

Y

N

N

N

Company? Weather

N

Y

Y

Y

N

N

Y

Y

Y

Y

N

R

R

R

S

S

S

R

S

S

R

S

Go Hiking?

N

N

Y

Y

Y

N

N

Y

N

N

N

1. Draw your decision tree. (6 points)

2. According to your decision tree, what is the probability of going to hike on a

rainy week day, without any company? (1 point)

3. How about probability of going to hike on a rainy weekend when having

some company? (1 point)

第 2 页 共 4 页

�

线

…

…

…

…

…

…

…

…

…

…

…

…

…

名

姓

号

学

班

级

业

专

院

学

…

…

…

…

…

…

…

…

…

…

…

…

…

…

密

…

…

…

…

…

…

…

…

…

封

…

…

…

…

…

…

…

0

-

…

…

山东大学 机器学习与模式识别 课程试卷 A

2018-2019 学年 第一 学期

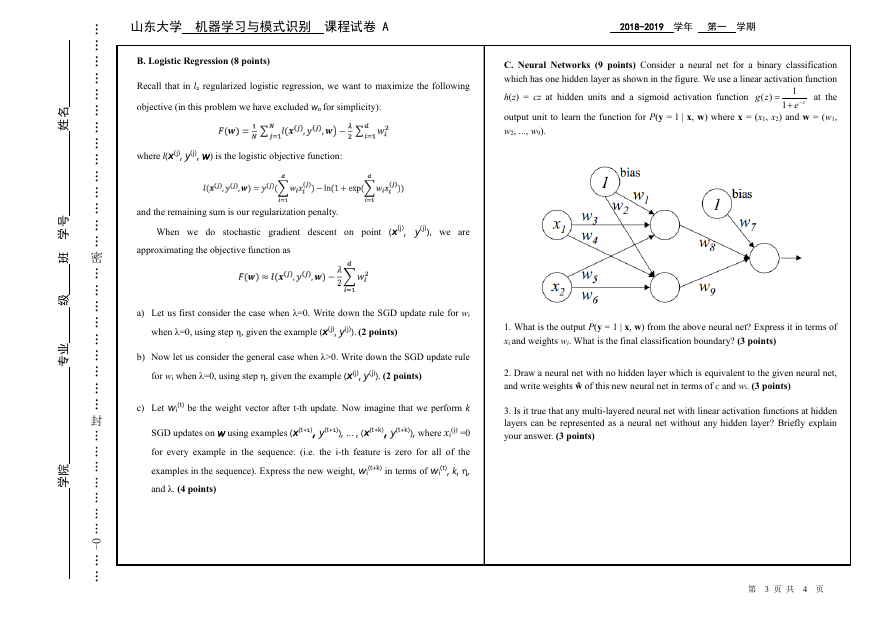

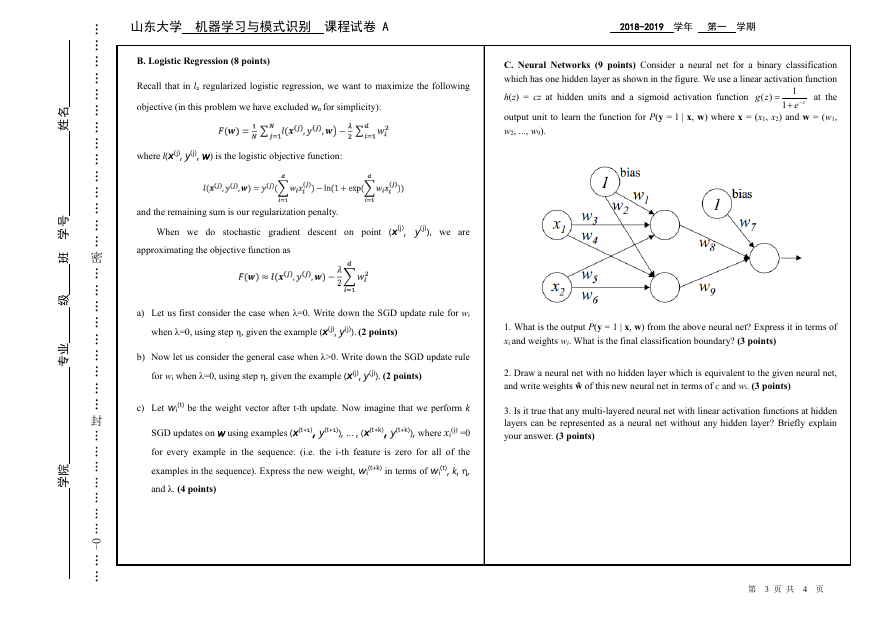

B. Logistic Regression (8 points)

Recall that in l2 regularized logistic regression, we want to maximize the following

objective (in this problem we have excluded w0 for simplicity):

where l(x(j), y(j), w) is the logistic objective function:

and the remaining sum is our regularization penalty.

When we do stochastic gradient descent on point (x(j), y(j)), we are

approximating the objective function as

a) Let us first consider the case when λ=0. Write down the SGD update rule for wi

when λ=0, using step η, given the example (x(j), y(j)). (2 points)

b) Now let us consider the general case when λ>0. Write down the SGD update rule

for wi when λ=0, using step η, given the example (x(j), y(j)). (2 points)

c) Let wi

(t) be the weight vector after t-th update. Now imagine that we perform k

SGD updates on w using examples (x(t+1), y(t+1)), … , (x(t+k), y(t+k)), where xi

(j) =0

for every example in the sequence. (i.e. the i-th feature is zero for all of the

(t), k, η,

examples in the sequence). Express the new weight, wi

and λ. (4 points)

(t+k) in terms of wi

C. Neural Networks (9 points) Consider a neural net for a binary classification

which has one hidden layer as shown in the figure. We use a linear activation function

h(z) = cz at hidden units and a sigmoid activation function

at the

zg

)(

=

1

ze

−+

1

output unit to learn the function for P(y = l | x, w) where x = (x1, x2) and w = (w1,

w2, ..., w9).

1. What is the output P(y = 1 | x, w) from the above neural net? Express it in terms of

xi and weights wi. What is the final classification boundary? (3 points)

2. Draw a neural net with no hidden layer which is equivalent to the given neural net,

and write weights ŵ of this new neural net in terms of c and wi. (3 points)

3. Is it true that any multi-layered neural net with linear activation functions at hidden

layers can be represented as a neural net without any hidden layer? Briefly explain

your answer. (3 points)

第 3 页 共 4 页

�

线

…

…

…

…

…

…

…

…

…

…

…

…

…

名

姓

号

学

班

级

业

专

院

学

…

…

…

…

…

…

…

…

…

…

…

…

…

…

密

…

…

…

…

…

…

…

…

…

封

…

…

…

…

…

…

…

…

…

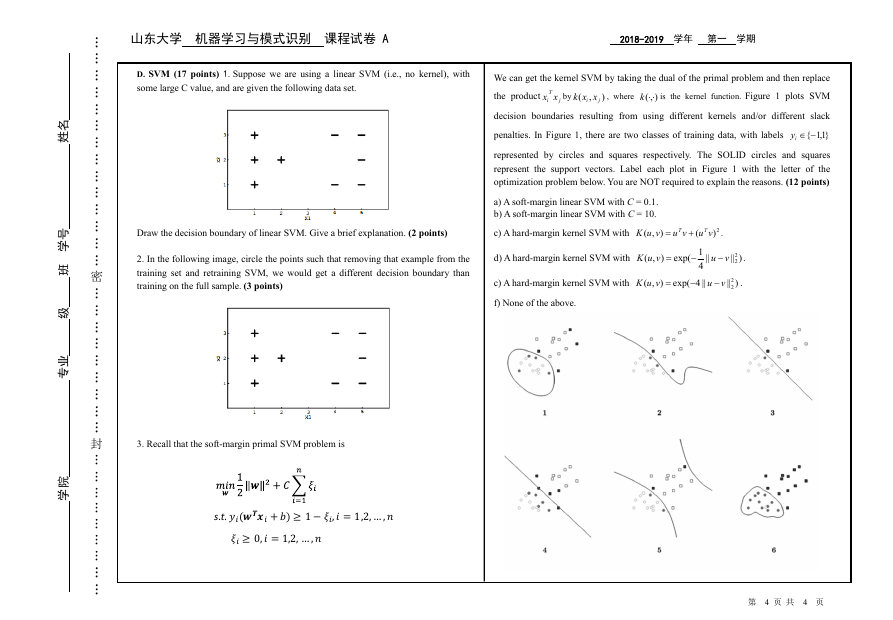

山东大学 机器学习与模式识别 课程试卷 A

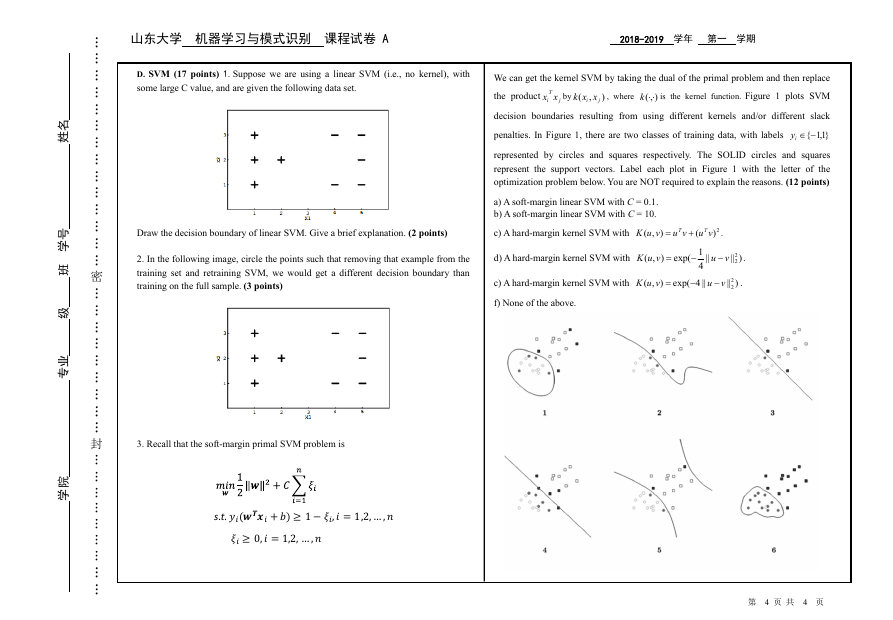

D. SVM (17 points) 1.Suppose we are using a linear SVM (i.e., no kernel), with

some large C value, and are given the following data set.

Draw the decision boundary of linear SVM. Give a brief explanation. (2 points)

2. In the following image, circle the points such that removing that example from the

training set and retraining SVM, we would get a different decision boundary than

training on the full sample. (3 points)

2018-2019 学年 第一 学期

We can get the kernel SVM by taking the dual of the primal problem and then replace

the product

is the kernel function. Figure 1 plots SVM

, where

),( k

by

)

i xxk

(

,

j

T

i xx

j

decision boundaries resulting from using different kernels and/or different slack

}1,1{−iy

penalties. In Figure 1, there are two classes of training data, with labels

represented by circles and squares respectively. The SOLID circles and squares

represent the support vectors. Label each plot in Figure 1 with the letter of the

optimization problem below. You are NOT required to explain the reasons. (12 points)

a) A soft-margin linear SVM with C = 0.1.

b) A soft-margin linear SVM with C = 10.

vuK

c) A hard-margin kernel SVM with

),(

=

d) A hard-margin kernel SVM with

vuK

),(

=

vu

T +

2)

.

exp(

2vu

)||

2

−

.

vu

(

T

1

4

||4

−

−

||

c) A hard-margin kernel SVM with

vuK

),(

=

exp(

f) None of the above.

2vu

)||

2

−

.

3. Recall that the soft-margin primal SVM problem is

s.t.

第 4 页 共 4 页

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc