3 OpenMP

3.1 Modification of Serial Code

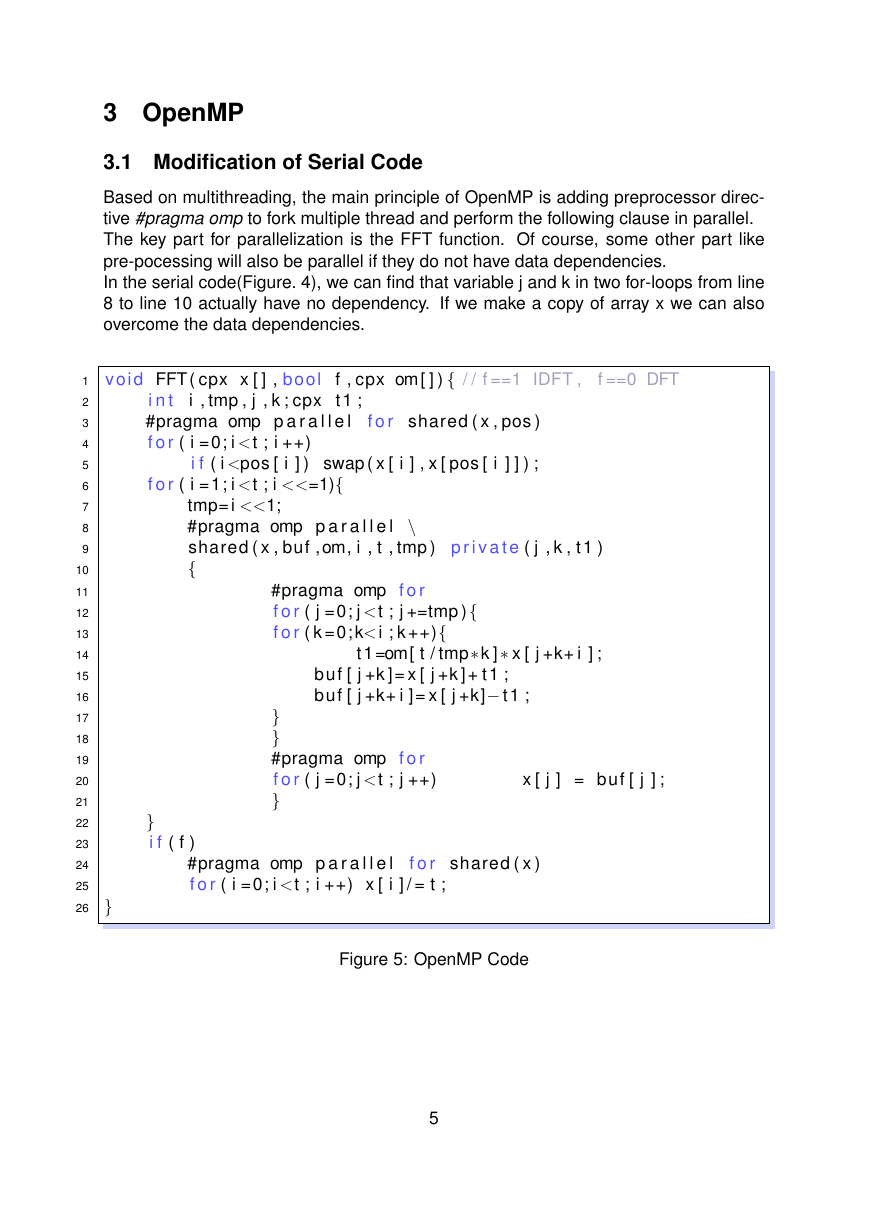

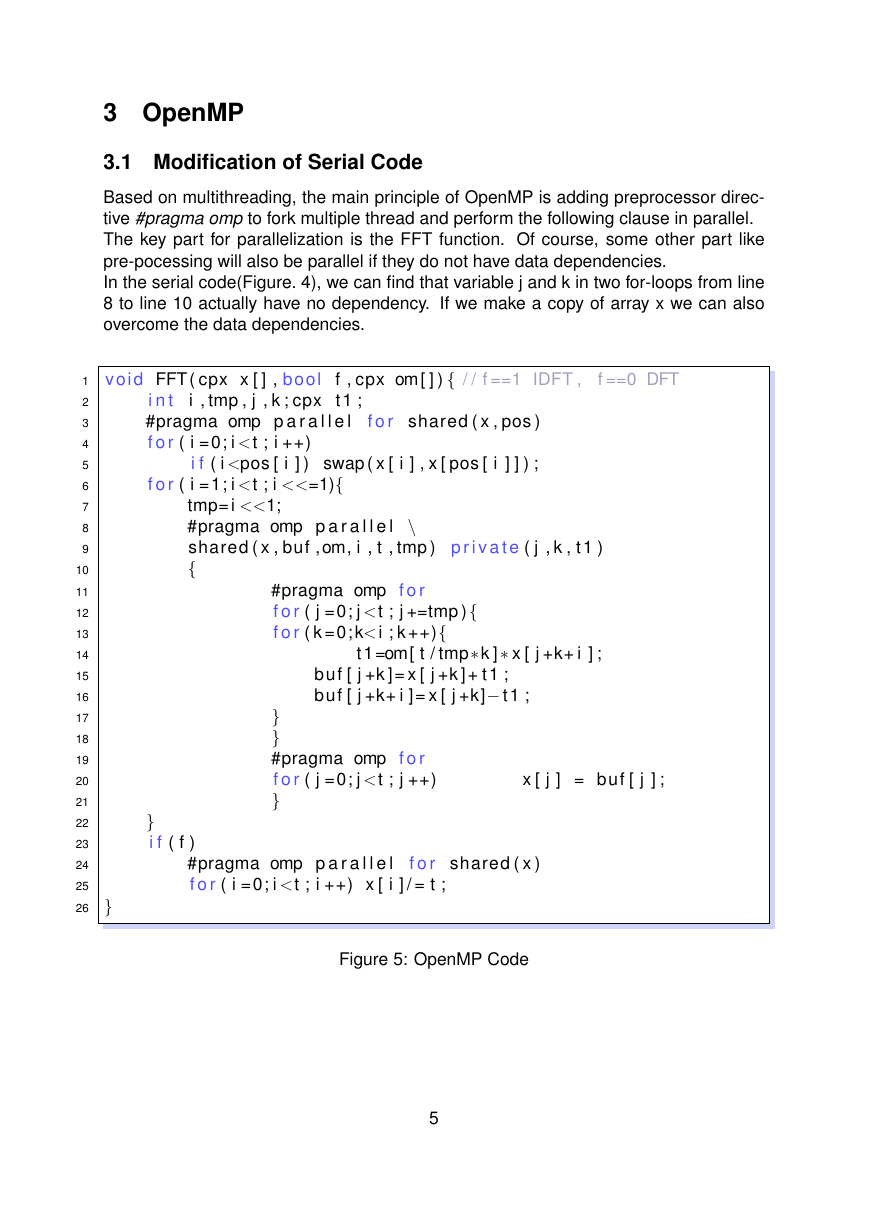

Based on multithreading, the main principle of OpenMP is adding preprocessor direc-

tive #pragma omp to fork multiple thread and perform the following clause in parallel.

The key part for parallelization is the FFT function. Of course, some other part like

pre-pocessing will also be parallel if they do not have data dependencies.

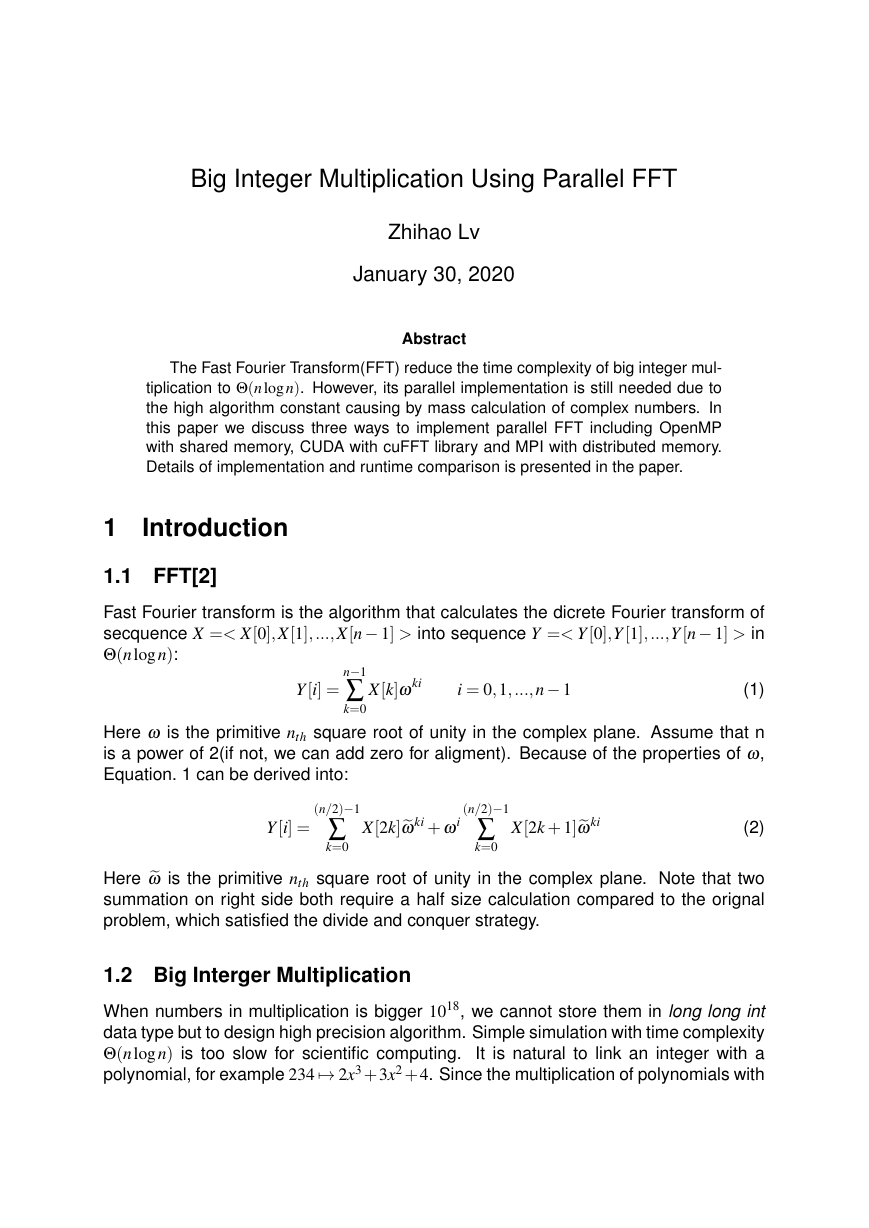

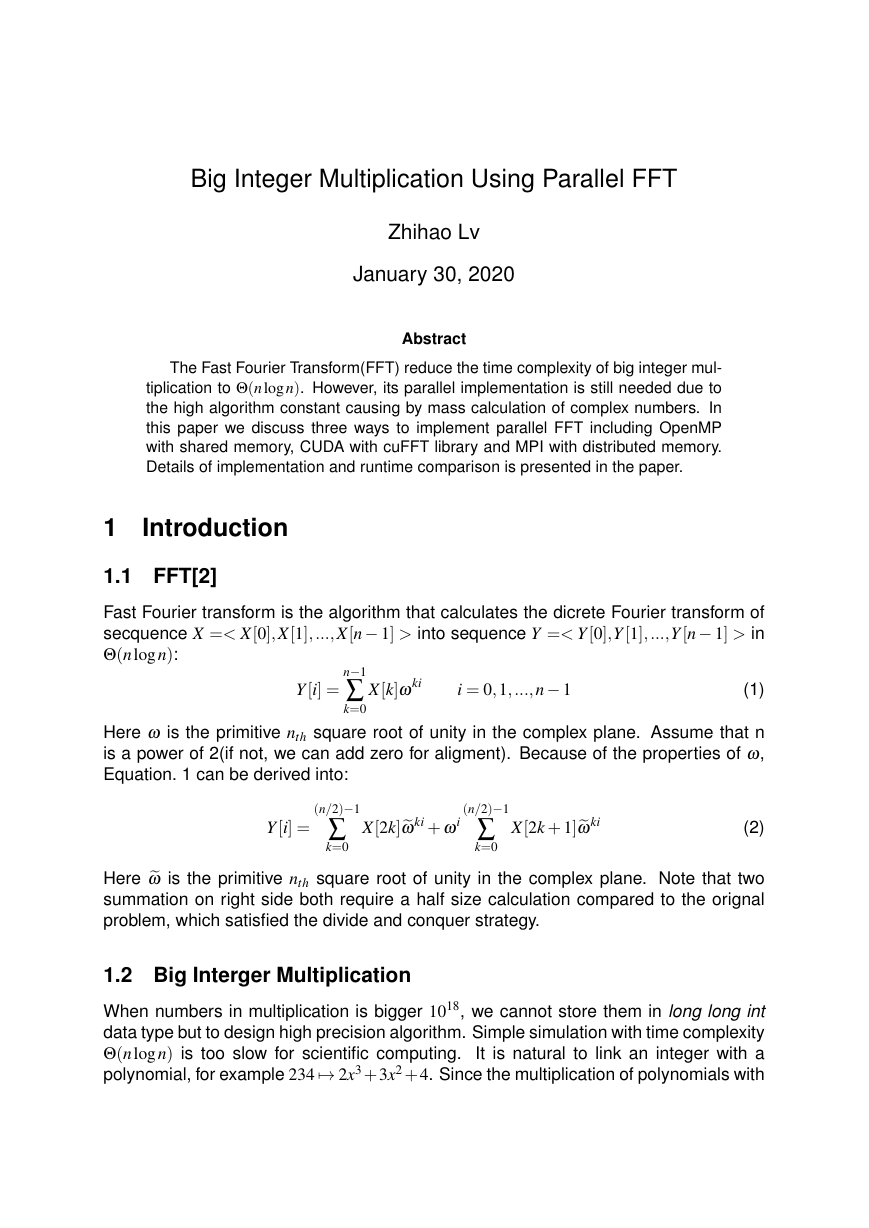

In the serial code(Figure. 4), we can find that variable j and k in two for-loops from line

8 to line 10 actually have no dependency. If we make a copy of array x we can also

overcome the data dependencies.

f , cpx om [ ] ) { / / f ==1 IDFT ,

f ==0 DFT

f o r shared ( x , pos )

i f ( i

3.2 Results

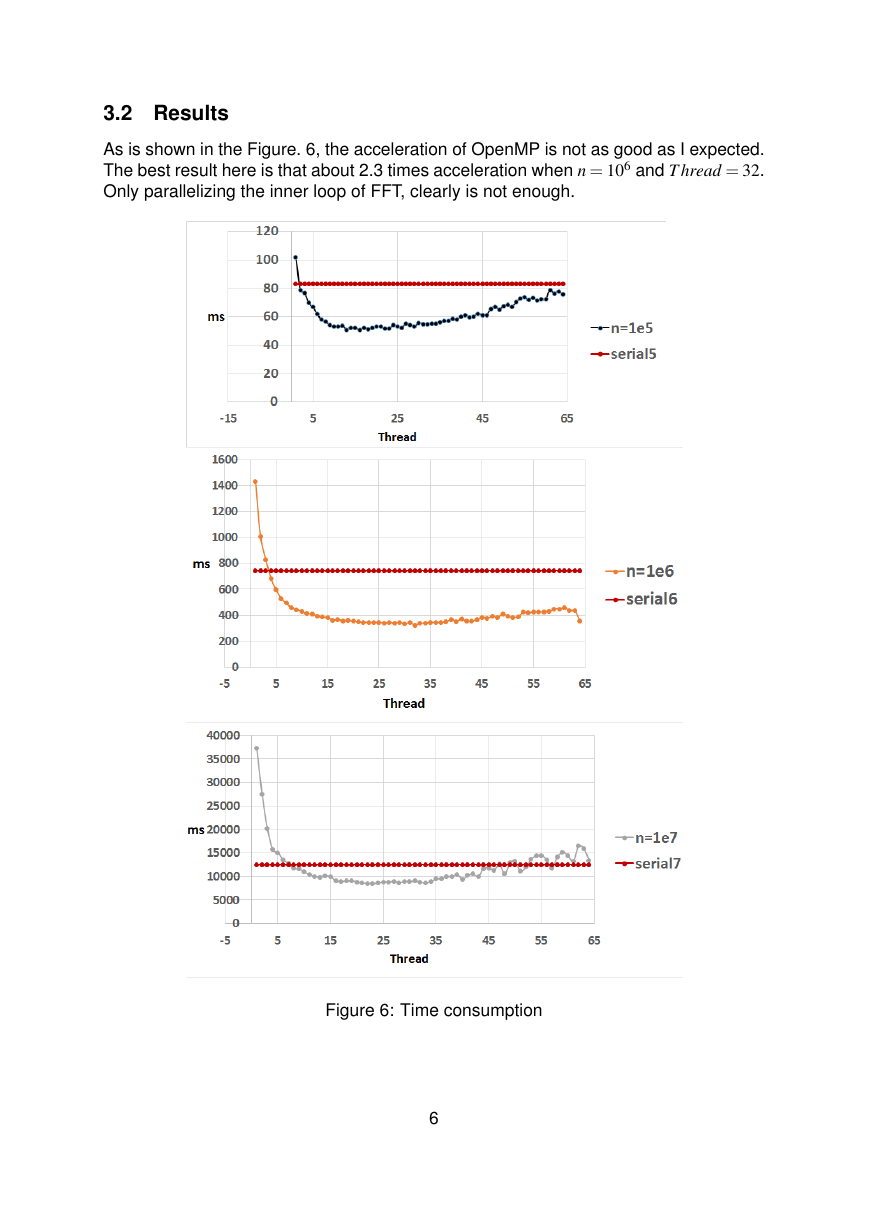

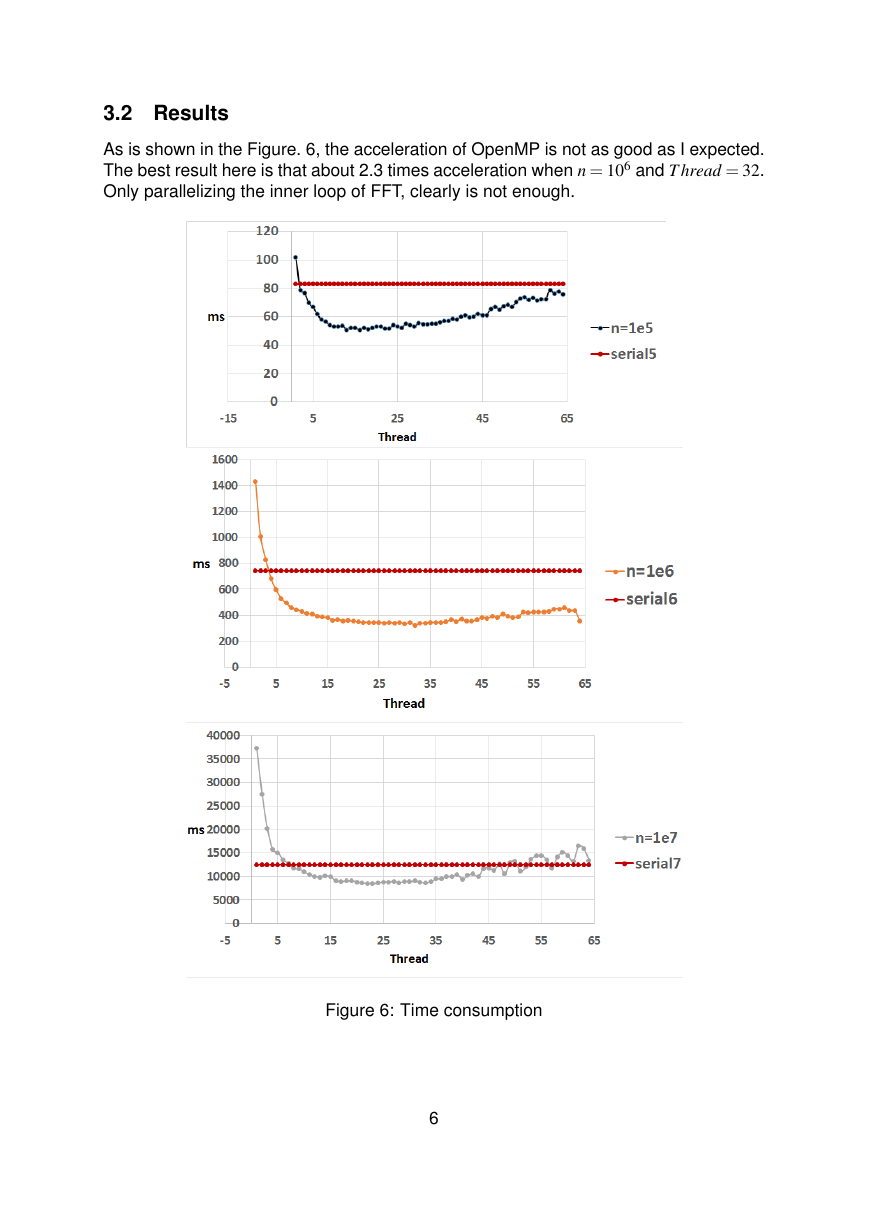

As is shown in the Figure. 6, the acceleration of OpenMP is not as good as I expected.

The best result here is that about 2.3 times acceleration when n = 106 and T hread = 32.

Only parallelizing the inner loop of FFT, clearly is not enough.

Figure 6: Time consumption

6

�

4 CUDA

4.1 cuFFT

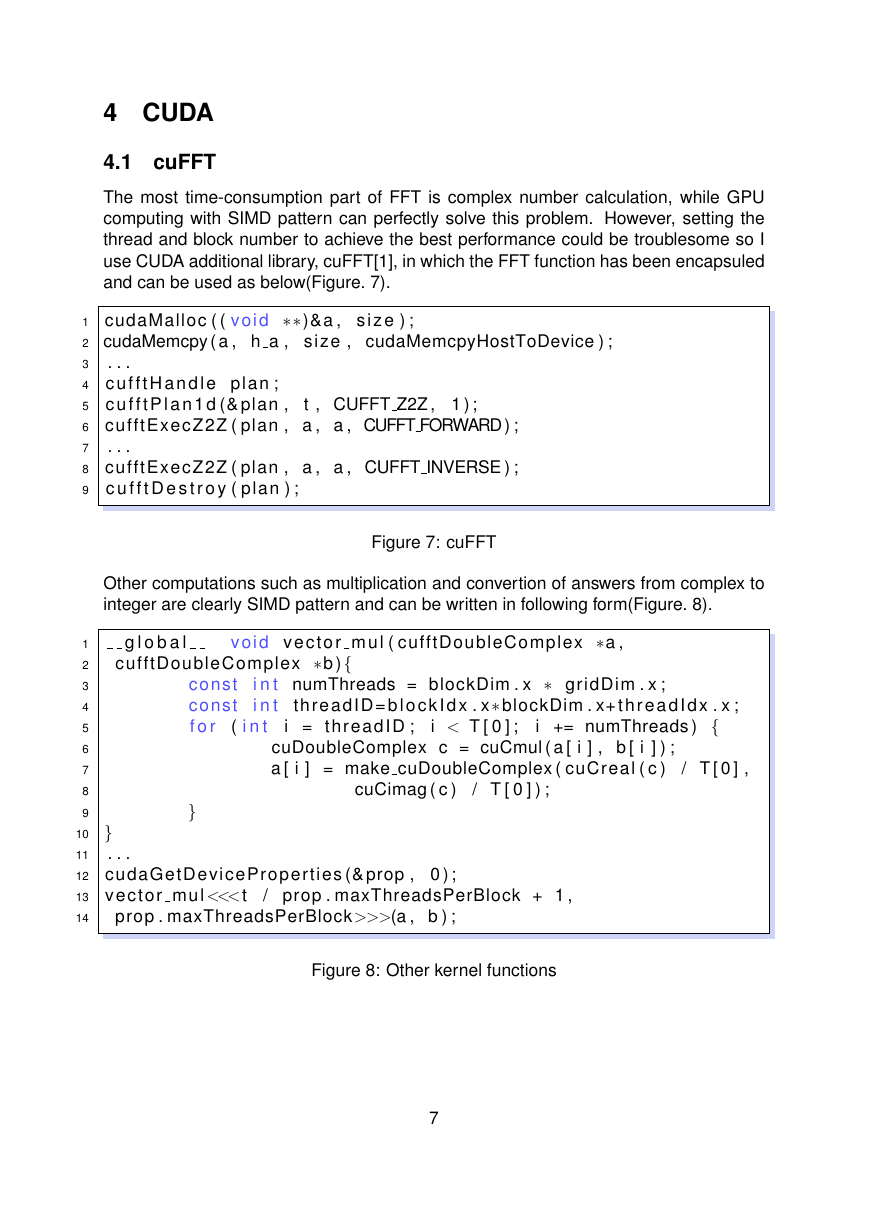

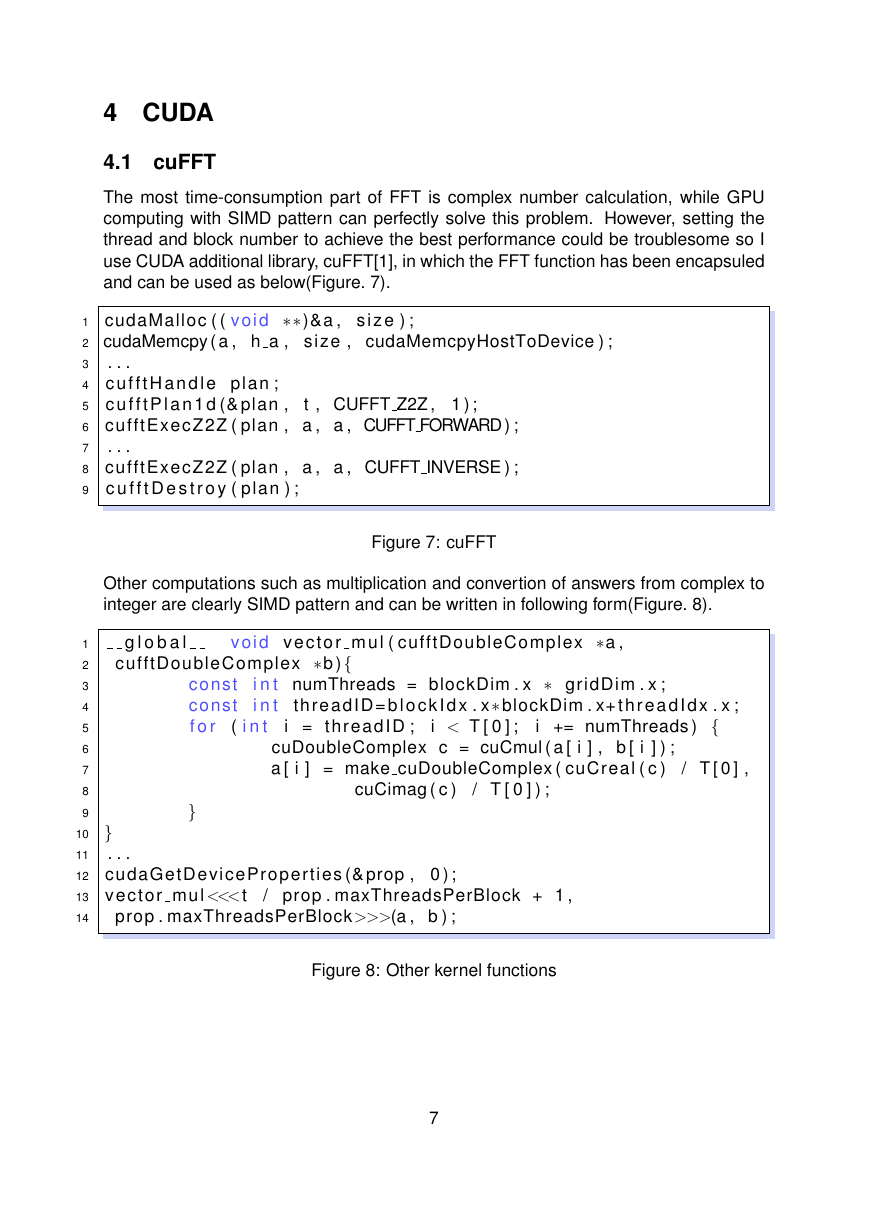

The most time-consumption part of FFT is complex number calculation, while GPU

computing with SIMD pattern can perfectly solve this problem. However, setting the

thread and block number to achieve the best performance could be troublesome so I

use CUDA additional library, cuFFT[1], in which the FFT function has been encapsuled

and can be used as below(Figure. 7).

. . .

1 cudaMalloc ( ( void ∗∗)&a , s i z e ) ;

2 cudaMemcpy ( a , h a , size , cudaMemcpyHostToDevice ) ;

3

4 c u f f t H a n d l e plan ;

5 c u f f t P l a n 1 d (& plan ,

6 cufftExecZ2Z ( plan , a , a , CUFFT FORWARD ) ;

7

8 cufftExecZ2Z ( plan , a , a , CUFFT INVERSE ) ;

9 c u f f t D e s t r o y ( plan ) ;

t , CUFFT Z2Z , 1 ) ;

. . .

Figure 7: cuFFT

Other computations such as multiplication and convertion of answers from complex to

integer are clearly SIMD pattern and can be written in following form(Figure. 8).

g l o b a l

cufftDoubleComplex ∗b ){

void vector mul ( cufftDoubleComplex ∗a ,

const

const

f o r

i n t numThreads = blockDim . x ∗ gridDim . x ;

i n t

( i n t

threadID= b l o c k I d x . x∗ blockDim . x+ threadIdx . x ;

i = threadID ;

i += numThreads ) {

i < T [ 0 ] ;

cuDoubleComplex c = cuCmul ( a [ i ] , b [ i ] ) ;

a [ i ] = make cuDoubleComplex ( cuCreal ( c )

/ T [ 0 ] ,

cuCimag ( c )

/ T [ 0 ] ) ;

1

2

3

4

5

6

7

8

9

}

10 }

. . .

11

12 cudaGetDeviceProperties (& prop , 0 ) ;

13 vector mul <<>>(a , b ) ;

/ prop . maxThreadsPerBlock + 1 ,

Figure 8: Other kernel functions

7

�

4.2 Result

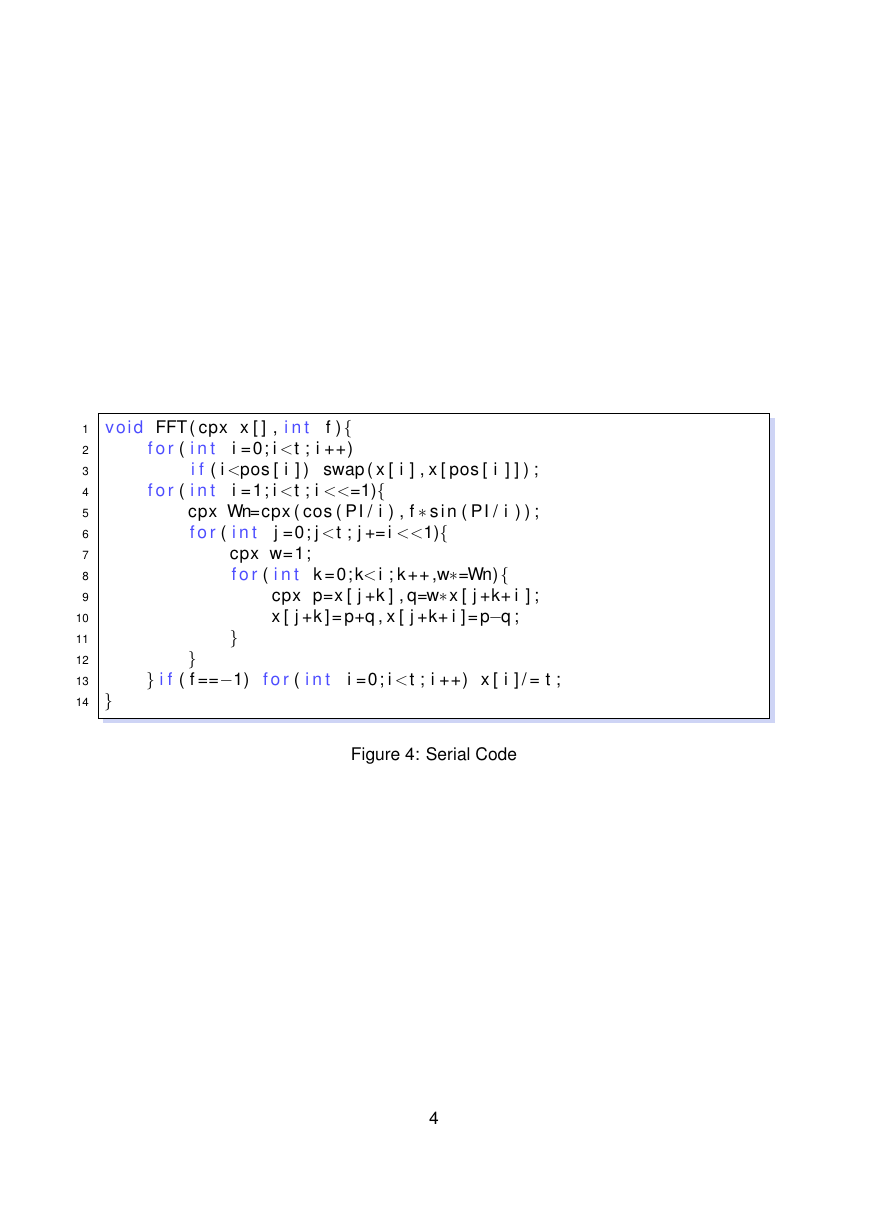

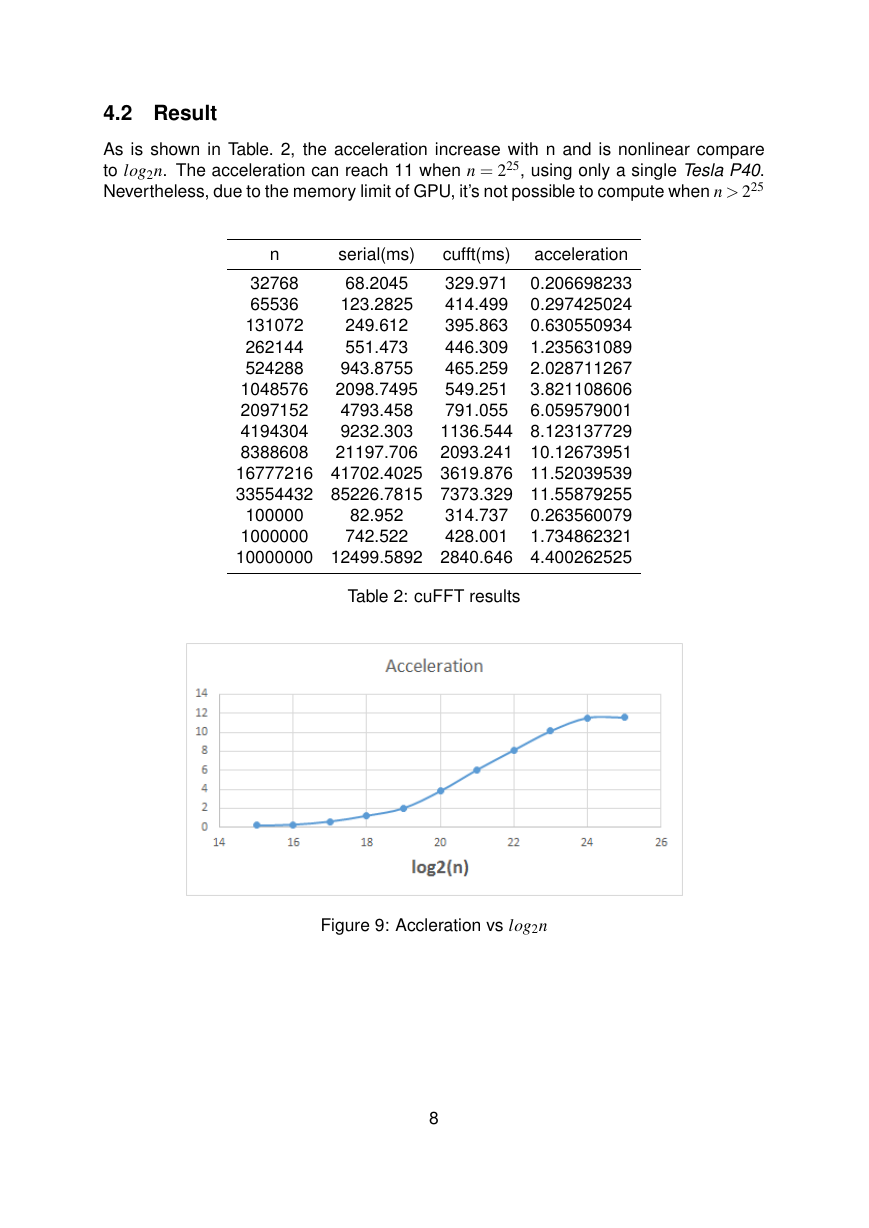

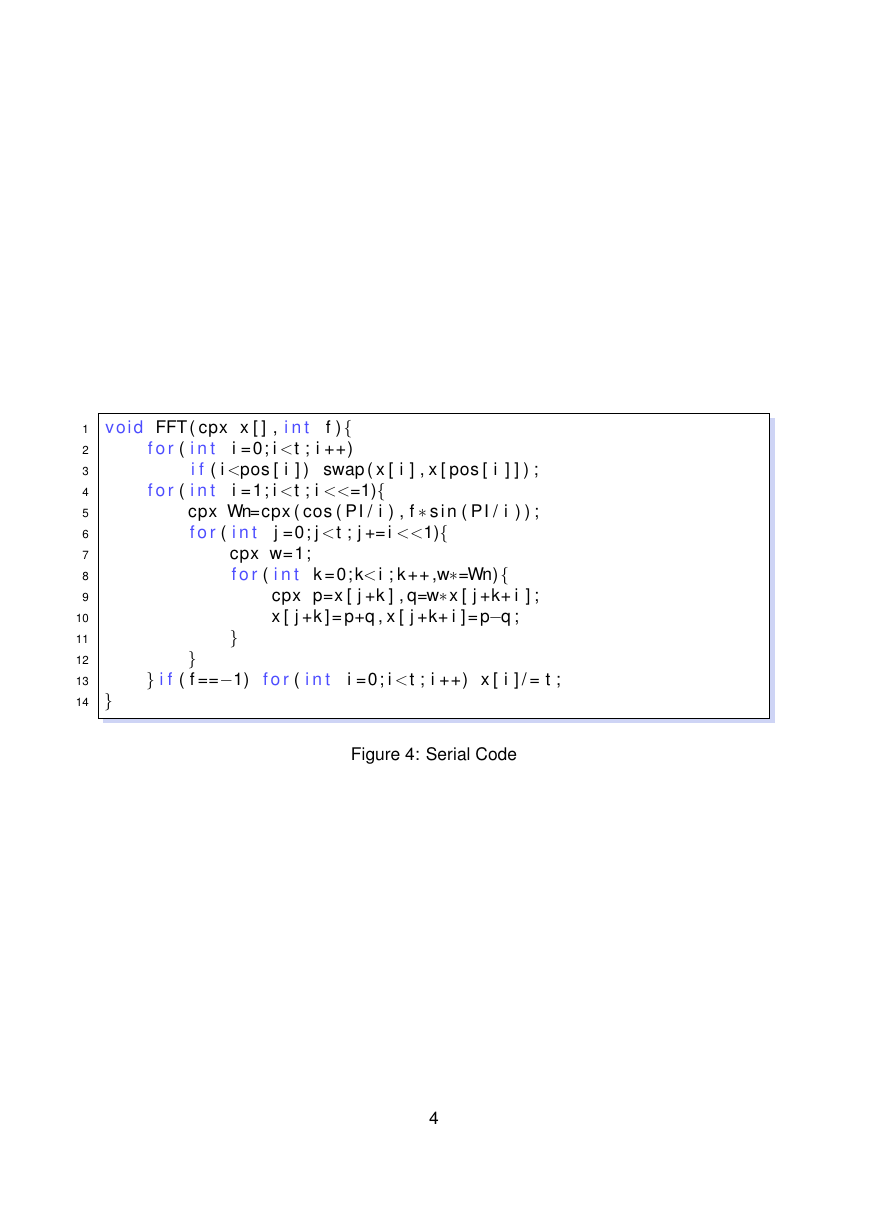

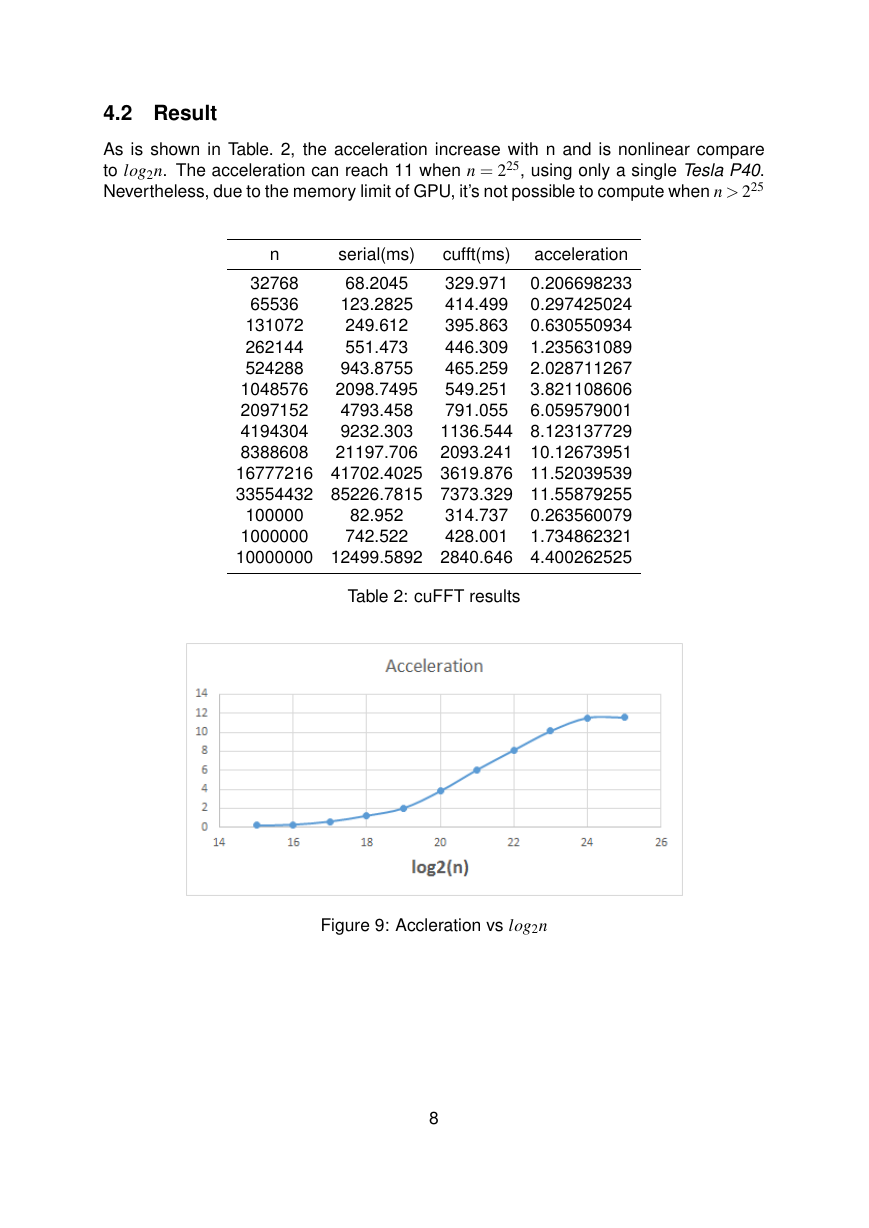

As is shown in Table. 2, the acceleration increase with n and is nonlinear compare

to log2n. The acceleration can reach 11 when n = 225, using only a single Tesla P40.

Nevertheless, due to the memory limit of GPU, it’s not possible to compute when n > 225

n

serial(ms)

68.2045

123.2825

249.612

551.473

943.8755

2098.7495

4793.458

9232.303

21197.706

cufft(ms)

acceleration

329.971

0.206698233

32768

414.499

0.297425024

65536

395.863

0.630550934

131072

446.309

1.235631089

262144

465.259

2.028711267

524288

549.251

3.821108606

1048576

791.055

6.059579001

2097152

1136.544 8.123137729

4194304

8388608

2093.241 10.12673951

16777216 41702.4025 3619.876 11.52039539

33554432 85226.7815 7373.329 11.55879255

0.263560079

100000

1000000

1.734862321

10000000 12499.5892 2840.646 4.400262525

82.952

742.522

314.737

428.001

Table 2: cuFFT results

Figure 9: Accleration vs log2n

8

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc