Vol. 9, No. 1: 25—36

An Image Inpainting Technique Based on

the Fast Marching Method

Alexandru Telea

Eindhoven University of Technology

Abstract. Digital inpainting provides a means for reconstruction of small dam-

aged portions of an image. Although the inpainting basics are straightforward, most

inpainting techniques published in the literature are complex to understand and im-

plement. We present here a new algorithm for digital inpainting based on the fast

marching method for level set applications. Our algorithm is very simple to im-

plement, fast, and produces nearly identical results to more complex, and usually

slower, known methods. Source code is available online.

1.

Introduction

Digital inpainting, the technique of reconstructing small damaged portions of

an image, has received considerable attention in recent years. Digital inpaint-

ing serves a wide range of applications, such as removing text and logos from

still images or videos, reconstructing scans of deteriorated images by remov-

ing scratches or stains, or creating artistic effects. Most inpainting methods

work as follows. First, the image regions to be inpainted are selected, usu-

ally manually. Next, color information is propagated inward from the region

boundaries, i.e., the known image information is used to fill in the missing

areas.

In order to produce a perceptually plausible reconstruction, an in-

painting technique should attempt to continue the isophotes (lines of equal

gray value) as smoothly as possible inside the reconstructed region. In other

words, the missing region should be inpainted so that the inpainted gray value

and gradient extrapolate the gray value and gradient outside this region.

25

© A K Peters, Ltd.

1086-7651/04 $0.50 per page

�

26

journal of graphics tools

Several inpainting methods are based on the above ideas. In [Bertalmio 00,

Bertalmio 01], the image smoothness information, estimated by the image

Laplacian, is propagated along the isophotes directions, estimated by the im-

age gradient rotated 90 degrees. The Total Variational (TV) model [Chan

and Shen 00a] uses an Euler-Lagrange equation coupled with anisotropic dif-

fusion to maintain the isophotes’ directions. The Curvature-Driven Diffusion

(CCD) model [Chan and Shen 00b] enhances the TV method to drive dif-

fusion along the isophotes’ directions and thus allows inpainting of thicker

regions. All above methods essentially solve a Partial Differential Equation

(PDE) that describes the color propagation inside the missing region, subject

to various heuristics that attempt to preserve the isophotes’ directions. Pre-

serving isophotes is, however desirable, never perfectly attained in practice.

The main problem is that both isophote estimation and information propaga-

tion are subject to numerical diffusion. Diffusion is desirable as it stabilizes

the PDEs to be solved, but leads inevitably to a cetain amount of blurring of

the inpainted area.

A second type of methods [Oliveira 01] repeatedly convolves a simple 3 ×

3 filter over the missing regions to diffuse known image information to the

missing pixels.

However impressive, the above methods have several drawbacks that pre-

clude their use in practice. The PDE-based methods require implementing

nontrivial iterative numerical methods and techniques, such as anisotropic

diffusion and multiresolution schemes [Bertalmio 00]. Little or no informa-

tion is given on practical implementation details such as various thresholds

or discretization methods, although some steps are mentioned as numerically

unstable. Moreover, such methods are quite slow, e.g., a few minutes for

the relatively small inpainting region shown in Figure 1.

In contrast, the

convolution-based method described in [Oliveira 01] is fast and simple to im-

plement. However, this method has no provisions for preserving the isophotes’

directions. High-gradient image areas must be selected manually before in-

painting and treated separately so as not to be blurred.

We propose a new inpainting algorithm based on propagating an image

smoothness estimator along the image gradient, similar to [Bertalmio 00]. We

estimate the image smoothness as a weighted average over a known image

neighborhood of the pixel to inpaint. We treat the missing regions as level

sets and use the fast marching method (FMM) described in [Sethian 96] to

propagate the image information. Our approach has several advantages:

• it is very simple to implement (the complete pseudocode is given here);

• it is considerably faster than other inpainting methods–processing an

800× 600 image (Figure 1) takes under three seconds on a 800 MHz PC;

• it produces very similar results as compared to the other methods;

�

Telea: An Image Inpainting Technique

27

a)

b)

Figure 1. An 800 × 600 image inpainted in less than three seconds.

• it can easily be customized to use different local inpainting strategies.

In Section 2, we describe our method. Section 3 presents several results,

details our method’s advantages and limitations in comparison to other meth-

ods, and discusses possible enhancements. Source code of a sample method

implementation is available online at the address listed at the end of the paper.

2. Our Method

This section describes our inpainting method. First, we introduce the math-

ematical model on which we base our inpainting (Section 2.1). Next, we

describe how the missing regions are inpainted using the FMM (Section 2.2).

Finally, we detail the implementation of inpainting one point on the missing

region’s boundary (Section 2.3).

2.1. Mathematical Model

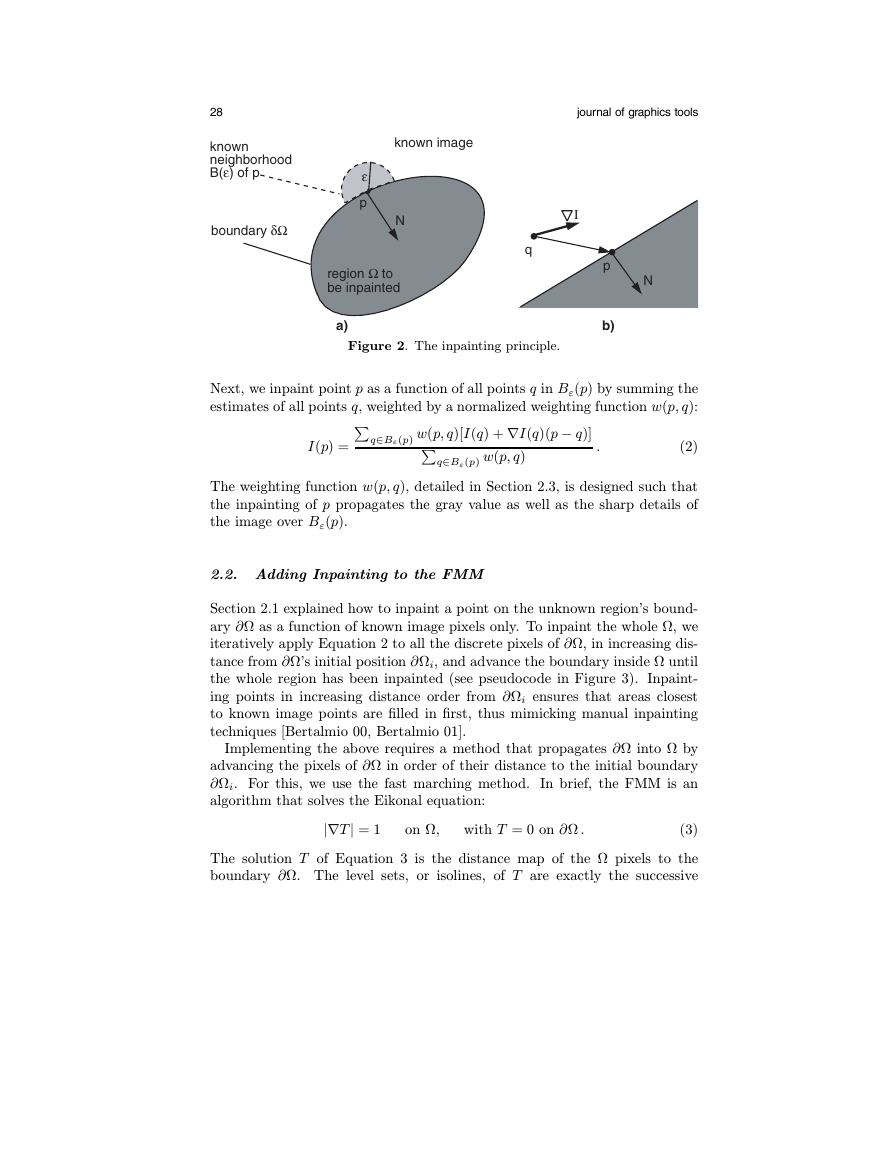

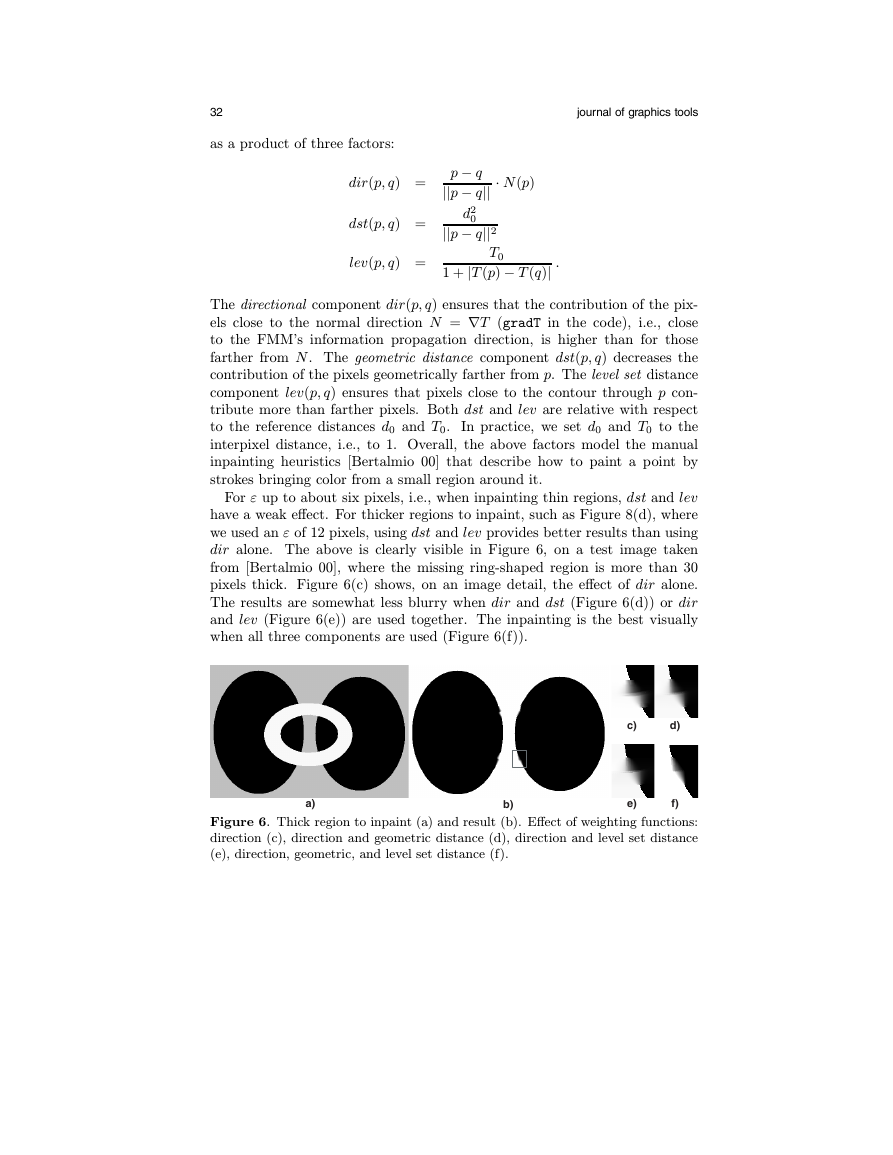

To explain our method, consider Figure 2, in which one must inpaint the

point p situated on the boundary ∂Ω of the region to inpaint Ω. Take a small

neighborhood Bε(p) of size ε of the known image around p (Figure 2(a)). As

described in [Bertalmio 00, Oliveira 01, Chan and Shen 00a], the inpainting

of p should be determined by the values of the known image points close to

p, i.e., in Bε(p). We first consider gray value images, color images being a

natural extension (see Section 2.4). For ε small enough, we consider a first

order approximation Iq(p) of the image in point p, given the image I(q) and

gradient ∇I(q) values of point q (Figure 2(b)):

Iq(p) = I(q) + ∇I(q)(p − q) .

(1)

�

28

known

neighborhood

B(ε) of p

boundary δΩ

journal of graphics tools

known image

ε

p

N

region Ω to

be inpainted

a)

I

q

N

p

b)

Figure 2. The inpainting principle.

Next, we inpaint point p as a function of all points q in Bε(p) by summing the

estimates of all points q, weighted by a normalized weighting function w(p, q):

I(p) = q∈Bε(p) w(p, q)[I(q) + ∇I(q)(p − q)]

q∈Bε(p) w(p, q)

.

(2)

The weighting function w(p, q), detailed in Section 2.3, is designed such that

the inpainting of p propagates the gray value as well as the sharp details of

the image over Bε(p).

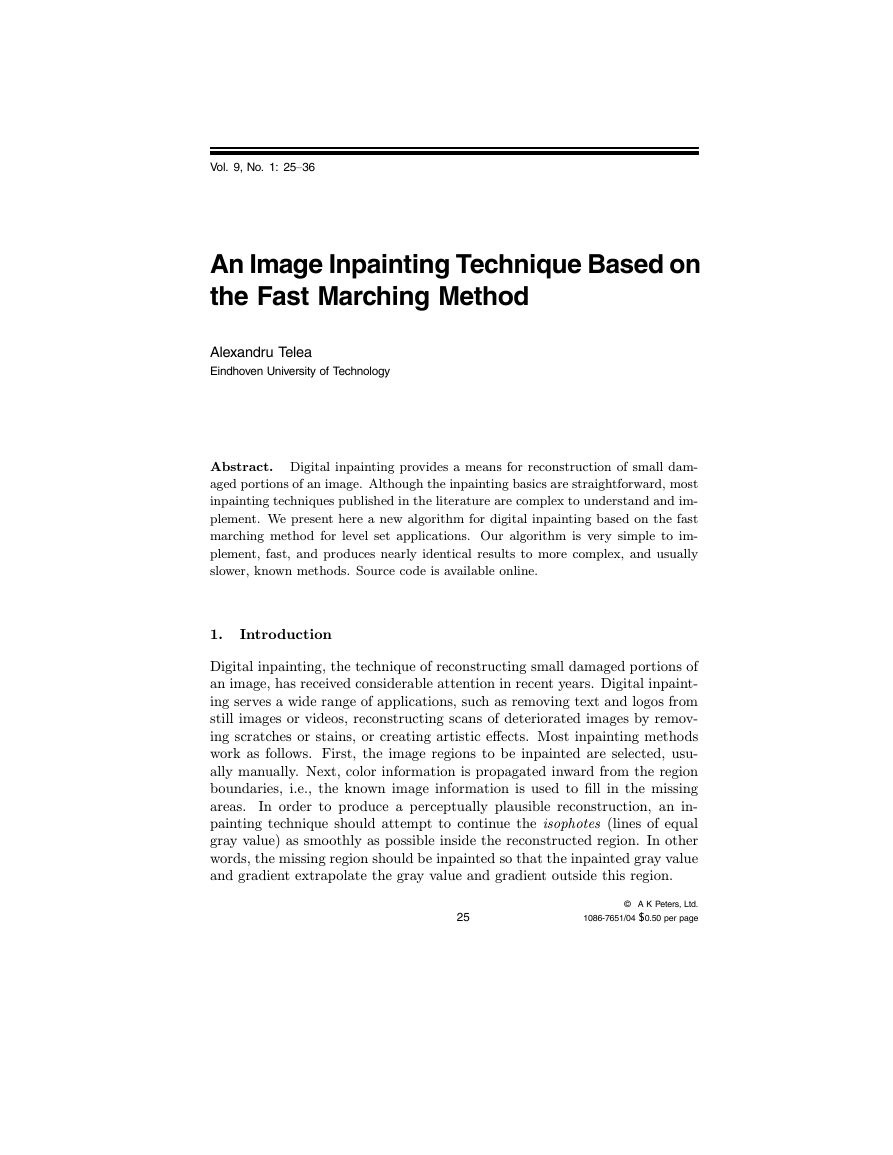

2.2. Adding Inpainting to the FMM

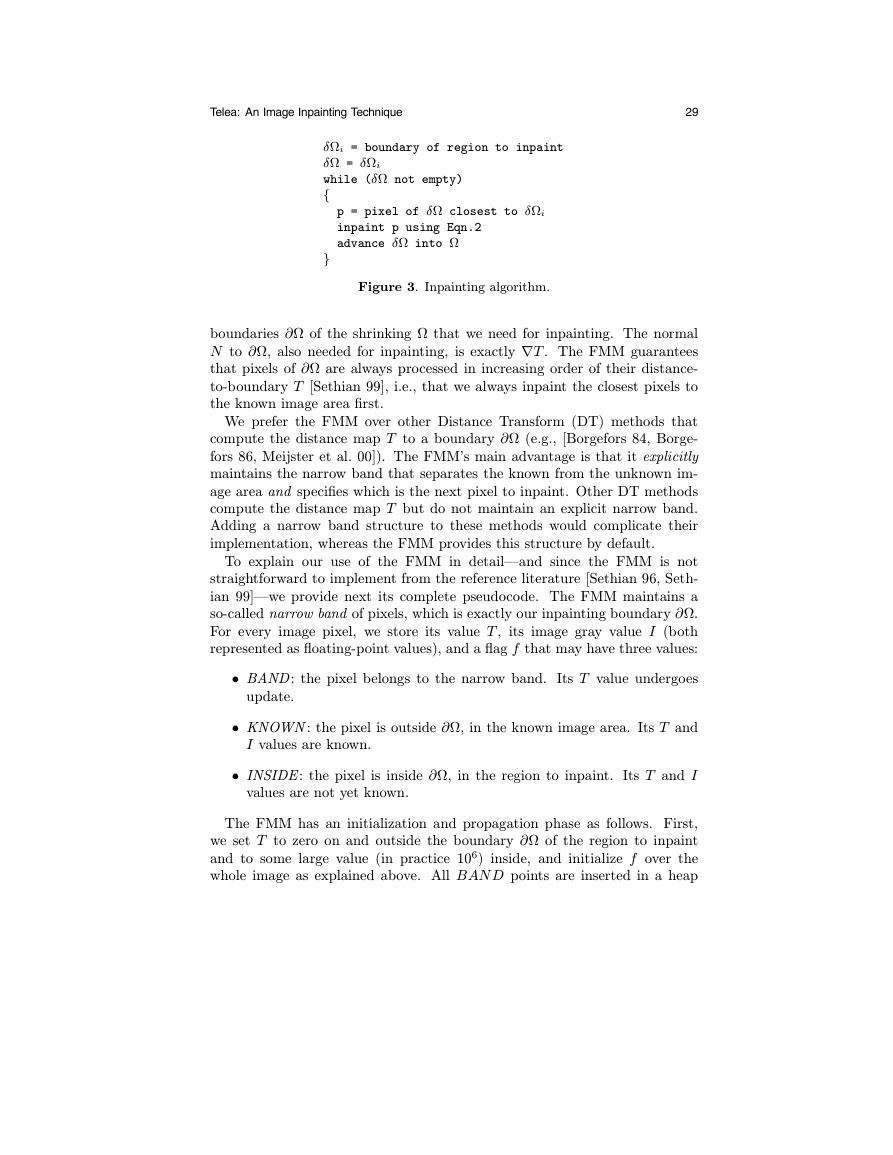

Section 2.1 explained how to inpaint a point on the unknown region’s bound-

ary ∂Ω as a function of known image pixels only. To inpaint the whole Ω, we

iteratively apply Equation 2 to all the discrete pixels of ∂Ω, in increasing dis-

tance from ∂Ω’s initial position ∂Ωi, and advance the boundary inside Ω until

the whole region has been inpainted (see pseudocode in Figure 3). Inpaint-

ing points in increasing distance order from ∂Ωi ensures that areas closest

to known image points are filled in first, thus mimicking manual inpainting

techniques [Bertalmio 00, Bertalmio 01].

Implementing the above requires a method that propagates ∂Ω into Ω by

advancing the pixels of ∂Ω in order of their distance to the initial boundary

∂Ωi. For this, we use the fast marching method. In brief, the FMM is an

algorithm that solves the Eikonal equation:

|∇T| = 1

on Ω,

with T = 0 on ∂Ω .

(3)

The solution T of Equation 3 is the distance map of the Ω pixels to the

boundary ∂Ω. The level sets, or isolines, of T are exactly the successive

�

Telea: An Image Inpainting Technique

29

δΩi = boundary of region to inpaint

δΩ = δΩi

while (δΩ not empty)

{

p = pixel of δΩ closest to δΩi

inpaint p using Eqn.2

advance δΩ into Ω

}

Figure 3. Inpainting algorithm.

boundaries ∂Ω of the shrinking Ω that we need for inpainting. The normal

N to ∂Ω, also needed for inpainting, is exactly ∇T . The FMM guarantees

that pixels of ∂Ω are always processed in increasing order of their distance-

to-boundary T [Sethian 99], i.e., that we always inpaint the closest pixels to

the known image area first.

We prefer the FMM over other Distance Transform (DT) methods that

compute the distance map T to a boundary ∂Ω (e.g., [Borgefors 84, Borge-

fors 86, Meijster et al. 00]). The FMM’s main advantage is that it explicitly

maintains the narrow band that separates the known from the unknown im-

age area and specifies which is the next pixel to inpaint. Other DT methods

compute the distance map T but do not maintain an explicit narrow band.

Adding a narrow band structure to these methods would complicate their

implementation, whereas the FMM provides this structure by default.

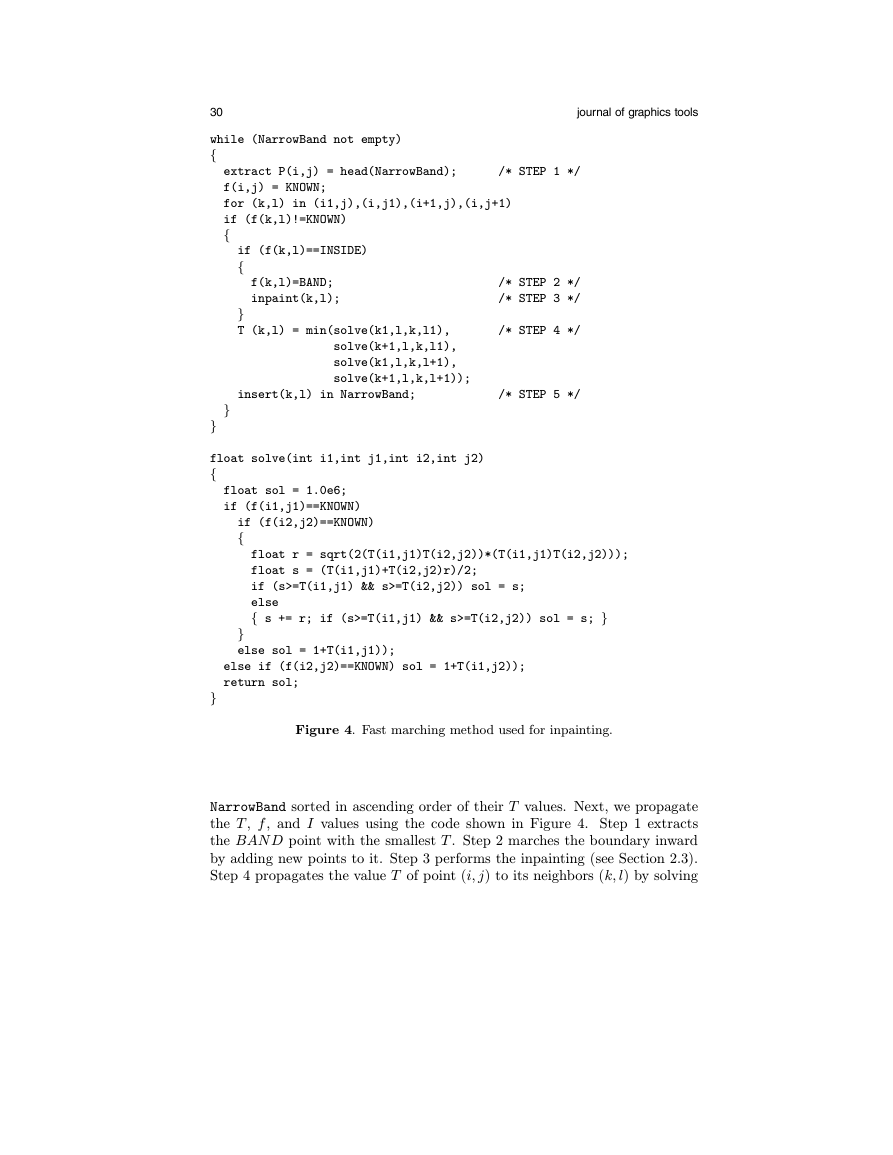

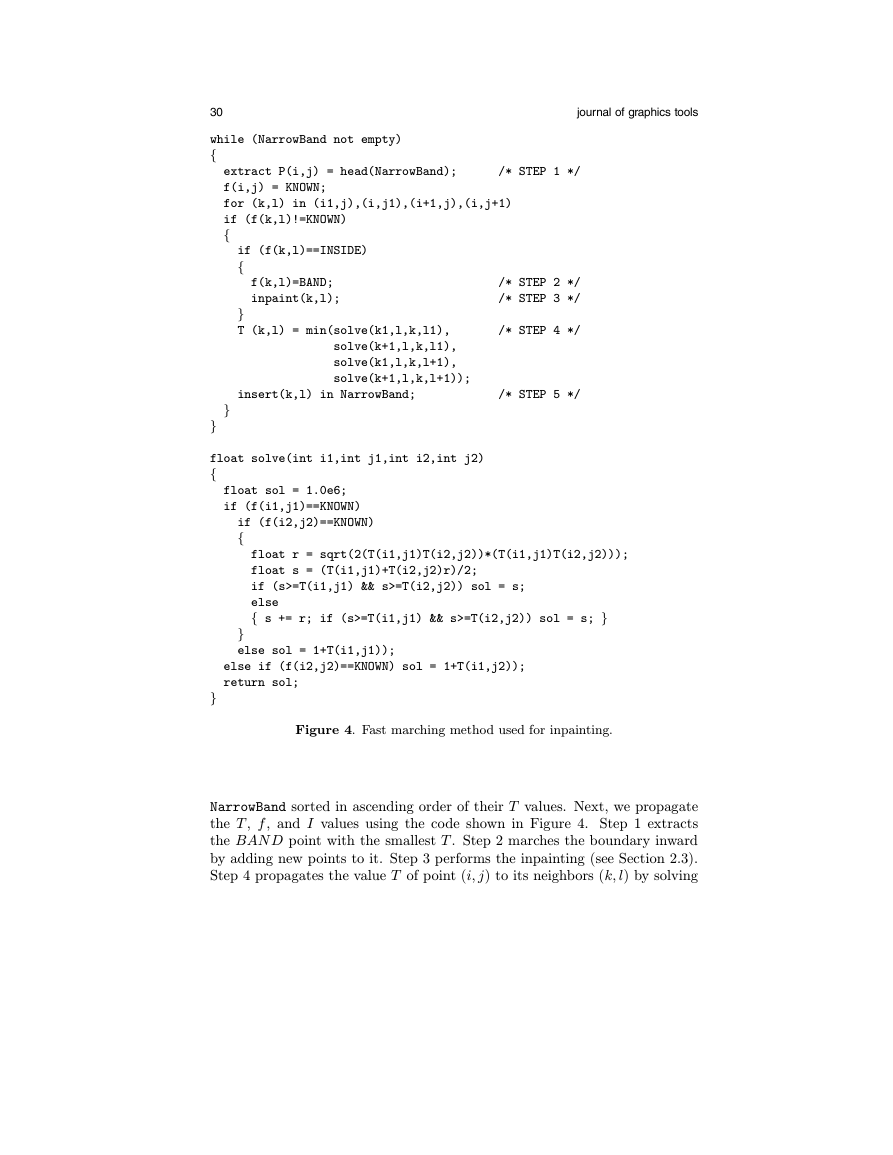

To explain our use of the FMM in detail–and since the FMM is not

straightforward to implement from the reference literature [Sethian 96, Seth-

ian 99]–we provide next its complete pseudocode. The FMM maintains a

so-called narrow band of pixels, which is exactly our inpainting boundary ∂Ω.

For every image pixel, we store its value T , its image gray value I (both

represented as floating-point values), and a flag f that may have three values:

• BAND : the pixel belongs to the narrow band. Its T value undergoes

update.

• KNOWN : the pixel is outside ∂Ω, in the known image area. Its T and

I values are known.

• INSIDE : the pixel is inside ∂Ω, in the region to inpaint. Its T and I

values are not yet known.

The FMM has an initialization and propagation phase as follows. First,

we set T to zero on and outside the boundary ∂Ω of the region to inpaint

and to some large value (in practice 106) inside, and initialize f over the

whole image as explained above. All BAN D points are inserted in a heap

�

30

journal of graphics tools

while (NarrowBand not empty)

{

extract P(i,j) = head(NarrowBand);

f(i,j) = KNOWN;

for (k,l) in (i1,j),(i,j1),(i+1,j),(i,j+1)

if (f(k,l)!=KNOWN)

{

/* STEP 1 */

if (f(k,l)==INSIDE)

{

f(k,l)=BAND;

inpaint(k,l);

}

T (k,l) = min(solve(k1,l,k,l1),

solve(k+1,l,k,l1),

solve(k1,l,k,l+1),

solve(k+1,l,k,l+1));

/* STEP 2 */

/* STEP 3 */

/* STEP 4 */

insert(k,l) in NarrowBand;

/* STEP 5 */

}

}

float solve(int i1,int j1,int i2,int j2)

{

float sol = 1.0e6;

if (f(i1,j1)==KNOWN)

if (f(i2,j2)==KNOWN)

{

float r = sqrt(2(T(i1,j1)T(i2,j2))*(T(i1,j1)T(i2,j2)));

float s = (T(i1,j1)+T(i2,j2)r)/2;

if (s>=T(i1,j1) && s>=T(i2,j2)) sol = s;

else

{ s += r; if (s>=T(i1,j1) && s>=T(i2,j2)) sol = s; }

}

else sol = 1+T(i1,j1));

else if (f(i2,j2)==KNOWN) sol = 1+T(i1,j2));

return sol;

}

Figure 4. Fast marching method used for inpainting.

NarrowBand sorted in ascending order of their T values. Next, we propagate

the T , f , and I values using the code shown in Figure 4. Step 1 extracts

the BAN D point with the smallest T . Step 2 marches the boundary inward

by adding new points to it. Step 3 performs the inpainting (see Section 2.3).

Step 4 propagates the value T of point (i, j) to its neighbors (k, l) by solving

�

Telea: An Image Inpainting Technique

the finite difference discretization of Equation 3 given by

max(D−xT,−D+xT, 0)2 + max(D−yT,−D+yT, 0)2 = 1 ,

31

(4)

where D−xT (i, j) = T (i, j)− T (i− 1, j) and D+xT (i, j) = T (i + 1, j)− T (i, j)

and similarly for y. Following the upwind idea of Sethian [Sethian 96], we

solve Equation 4 for (k, l)’s four quadrants and retain the smallest solution.

Finally, Step 5 (re)inserts (k, l) with its new T in the heap.

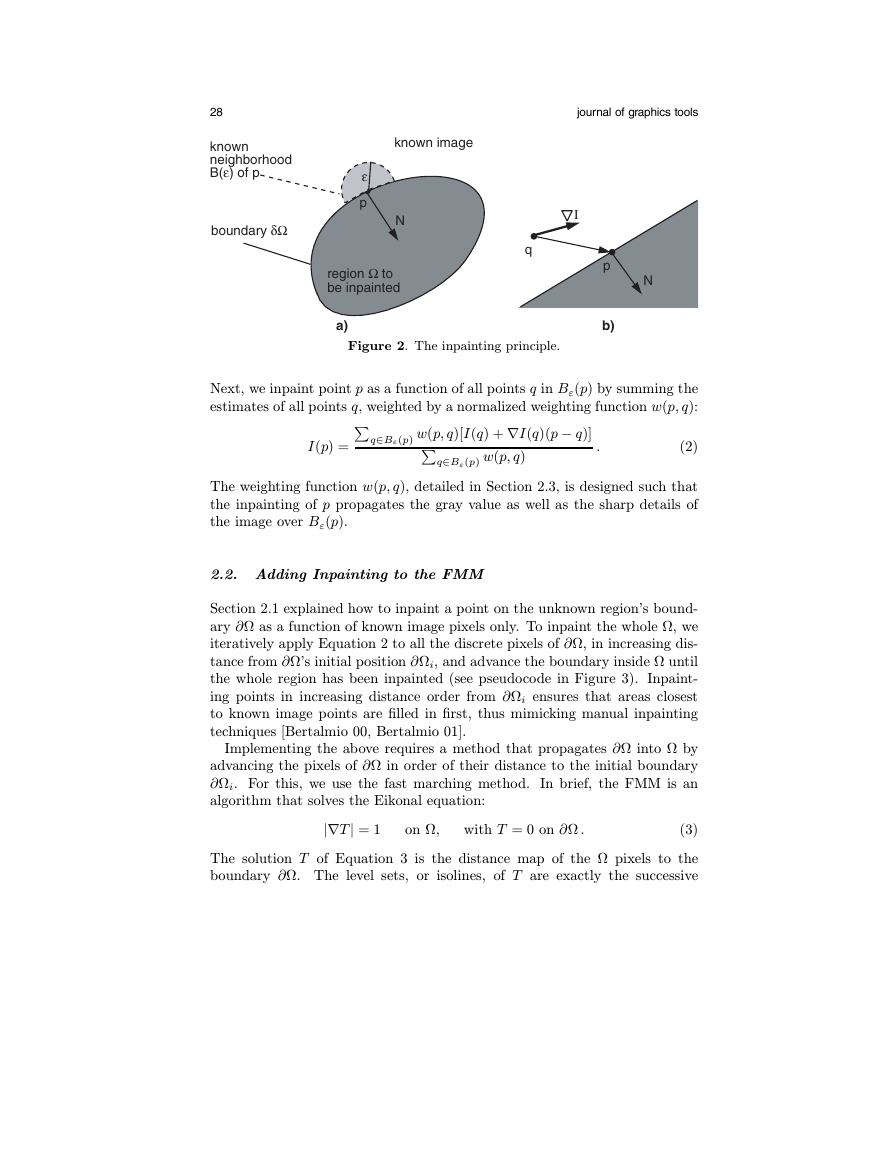

2.3.

Inpainting One Point

We consider now how to inpaint a newly discovered point (k, l), as a function

of the KN OW N points around it, following the idea described in Section 2.1.

(Step 3 in Figure 4, detailed in Figure 5). We iterate over the KN OW N points

in the neighborhood Bε of the current point (i, j) and compute I(i, j) following

Equation 2. The image gradient ∇I (gradI in the code) is estimated by central

differences. As stated in Section 2.1, the design of the weighting function

w(p, q) is crucial to propagate the sharp image details and the smooth zones as

such into the inpainted zone. We design w(p, q) = dir(p, q)· dst(p, q)· lev(p, q)

void inpaint(int i,int j)

{

for (all (k,l) in Bε(i,j) such that f(k,l)!=OUTSIDE)

{

= vector from (i,j) to (k,l);

r

dir = r * gradT(i,j)/length(r);

dst = 1/(length(r)*length(r));

lev = 1/(1+fabs(T(k,l)T(i,j)));

w

if (f(k+1,l)!=OUTSIDE && f(k1,l)!=OUTSIDE &&

= dir*dst*lev;

f(k,l+1)!=OUTSIDE && f(k,l1)!=OUTSIDE)

gradI = (I(k+1,l)I(k1,l),I(k,l+1)I(k,l1));

Ia += w * (I(k,l) + gradI * r);

s += w;

}

I(i,j) = Ia/s;

}

Figure 5. Inpainting one point.

�

32

journal of graphics tools

as a product of three factors:

dir(p, q) =

dst(p, q) =

lev(p, q) =

p − q

||p − q|| · N (p)

||p − q||2

T0

d2

0

1 + |T (p) − T (q)|

.

The directional component dir(p, q) ensures that the contribution of the pix-

els close to the normal direction N = ∇T (gradT in the code), i.e., close

to the FMM’s information propagation direction, is higher than for those

farther from N . The geometric distance component dst(p, q) decreases the

contribution of the pixels geometrically farther from p. The level set distance

component lev(p, q) ensures that pixels close to the contour through p con-

tribute more than farther pixels. Both dst and lev are relative with respect

to the reference distances d0 and T0.

In practice, we set d0 and T0 to the

interpixel distance, i.e., to 1. Overall, the above factors model the manual

inpainting heuristics [Bertalmio 00] that describe how to paint a point by

strokes bringing color from a small region around it.

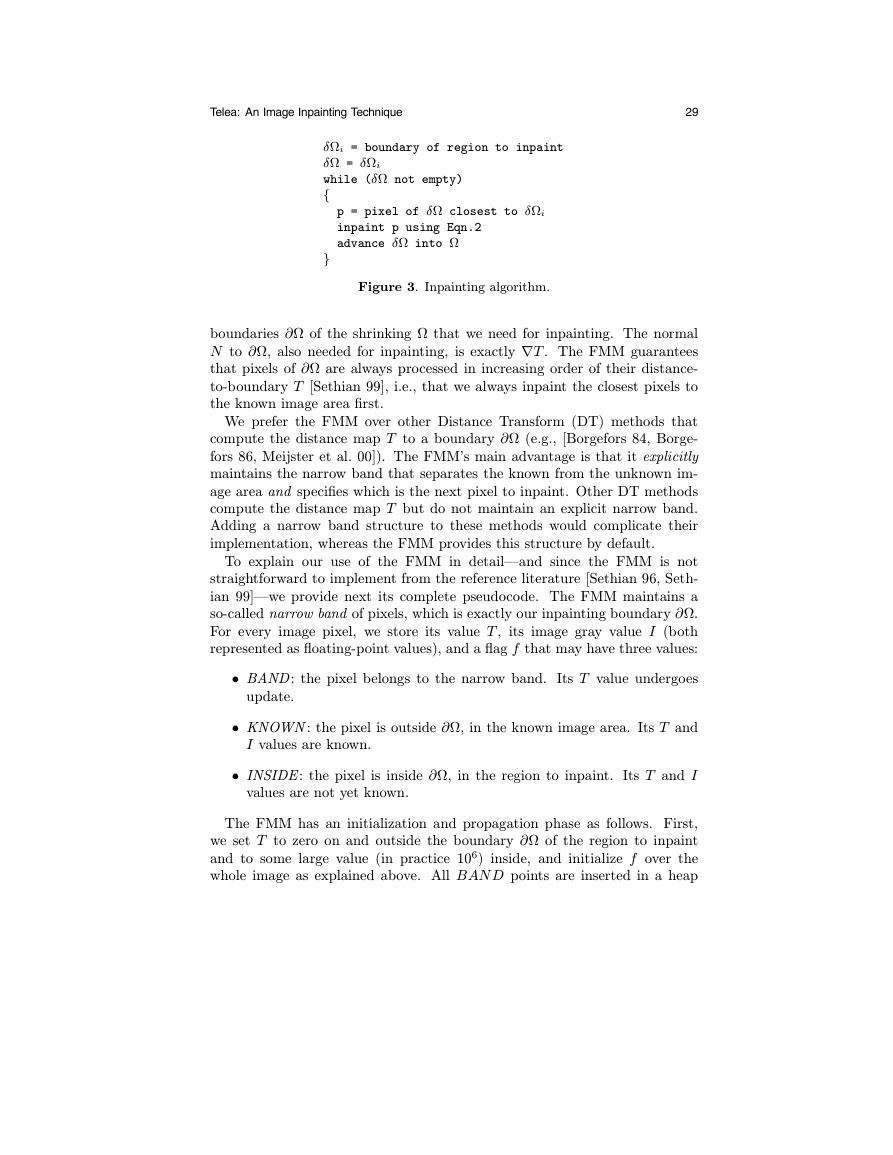

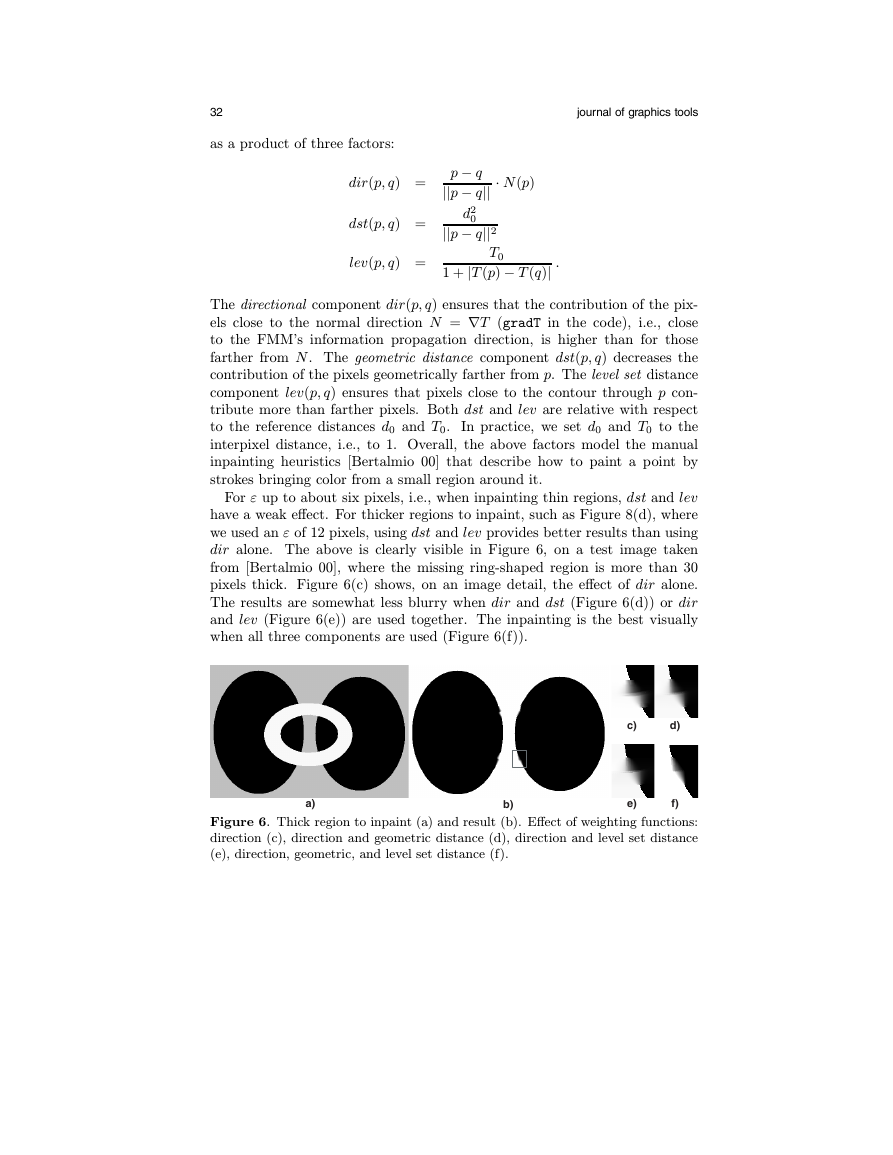

For ε up to about six pixels, i.e., when inpainting thin regions, dst and lev

have a weak effect. For thicker regions to inpaint, such as Figure 8(d), where

we used an ε of 12 pixels, using dst and lev provides better results than using

dir alone. The above is clearly visible in Figure 6, on a test image taken

from [Bertalmio 00], where the missing ring-shaped region is more than 30

pixels thick. Figure 6(c) shows, on an image detail, the effect of dir alone.

The results are somewhat less blurry when dir and dst (Figure 6(d)) or dir

and lev (Figure 6(e)) are used together. The inpainting is the best visually

when all three components are used (Figure 6(f)).

c)

d)

a)

b)

e)

f)

Figure 6. Thick region to inpaint (a) and result (b). Effect of weighting functions:

direction (c), direction and geometric distance (d), direction and level set distance

(e), direction, geometric, and level set distance (f).

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc