Machine Learning

!

!

!

!

! Srihari

Max-Sum Inference Algorithm

Sargur Srihari

srihari@cedar.buffalo.edu

1 1

�

Machine Learning

!

!

!

!

! Srihari

The max-sum algorithm

• Sum-product algorithm

– Takes joint distribution expressed as a factor graph

– Efficiently finds marginals over component variables

• Max-sum addresses two other tasks

1. Setting of the variables that has the highest

probability

2. Find value of that probability

• Algorithms are closely related

– Max-sum is an application of dynamic programming

to graphical models

2

�

Machine Learning

!

Finding latent variable values

!

!

!

! Srihari

having high probability

• Consider simple approach

– Use sum-product to obtain marginals for every

p(xi

)

variable

ix

– For each variable find value that maximizes marginal

• This would give set of values that are individually

ix

*

most probable

• However we wish to find vector that

maxx

maximizes joint distribution, i.e.

p(x)

arg

x max

x

max =

• With join probability

p(x

max =

)

xmax

p(x)

3

�

Machine Learning

!

!

!

!

! Srihari

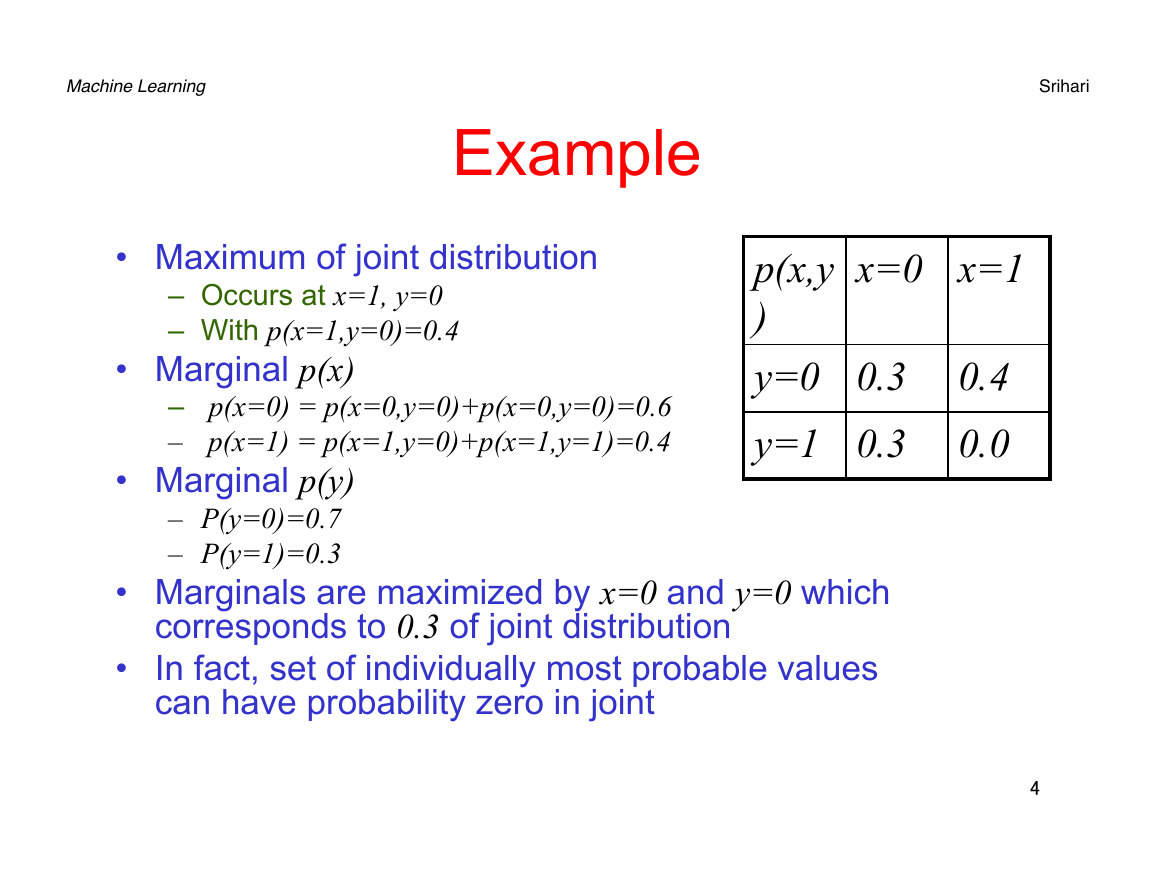

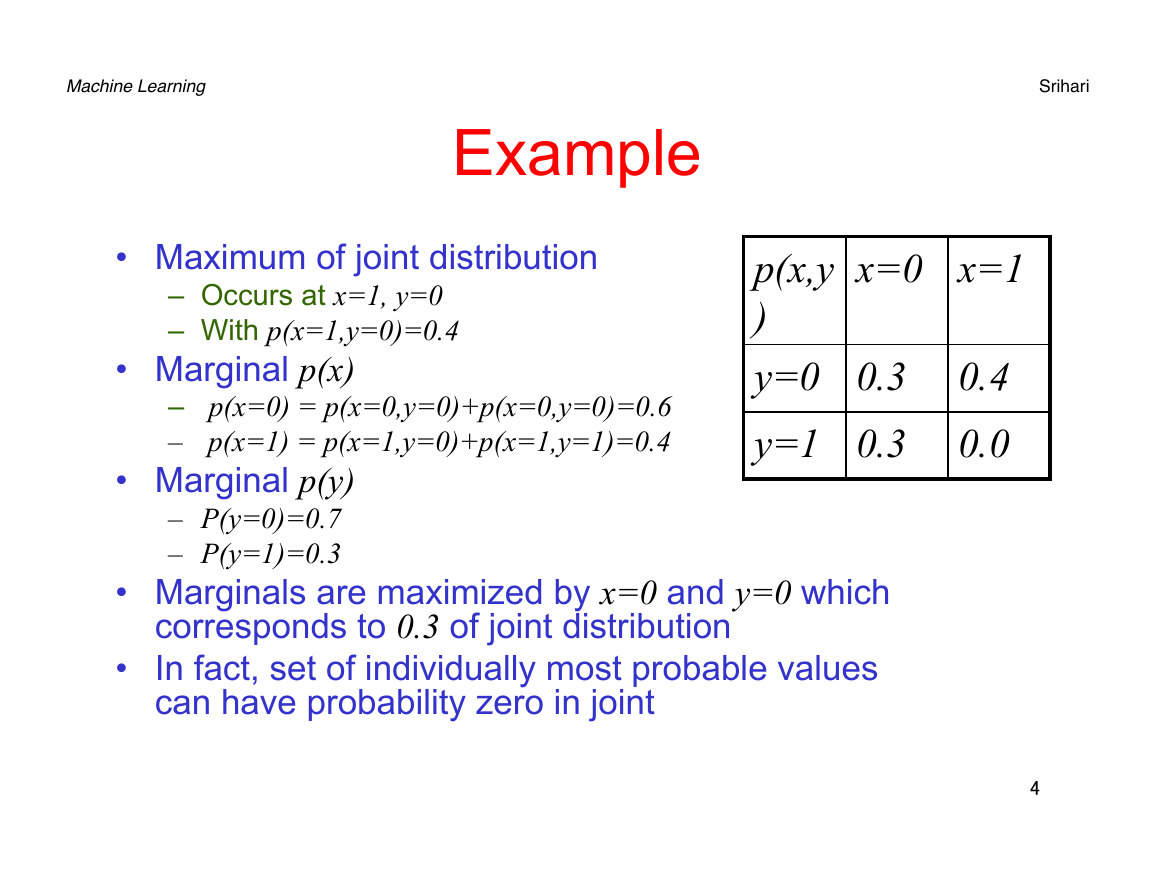

Example

• Maximum of joint distribution

– Occurs at x=1, y=0

– With p(x=1,y=0)=0.4

• Marginal p(x)

– p(x=0) = p(x=0,y=0)+p(x=0,y=0)=0.6

– p(x=1) = p(x=1,y=0)+p(x=1,y=1)=0.4

• Marginal p(y)

– P(y=0)=0.7

– P(y=1)=0.3

x=0 x=1

p(x,y

)

y=0 0.3

y=1 0.3

0.4

0.0

4

• Marginals are maximized by x=0 and y=0 which

•

corresponds to 0.3 of joint distribution

In fact, set of individually most probable values

can have probability zero in joint

�

Machine Learning

!

!

!

!

! Srihari

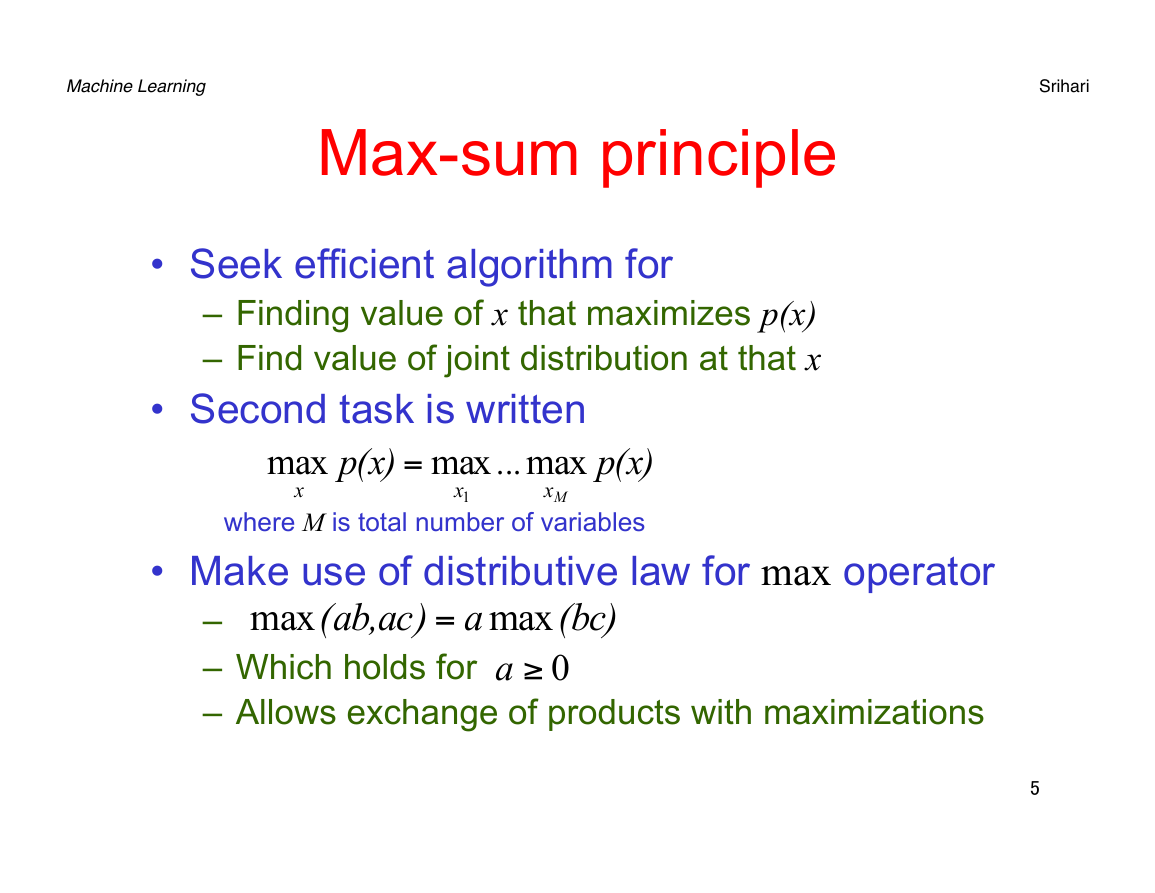

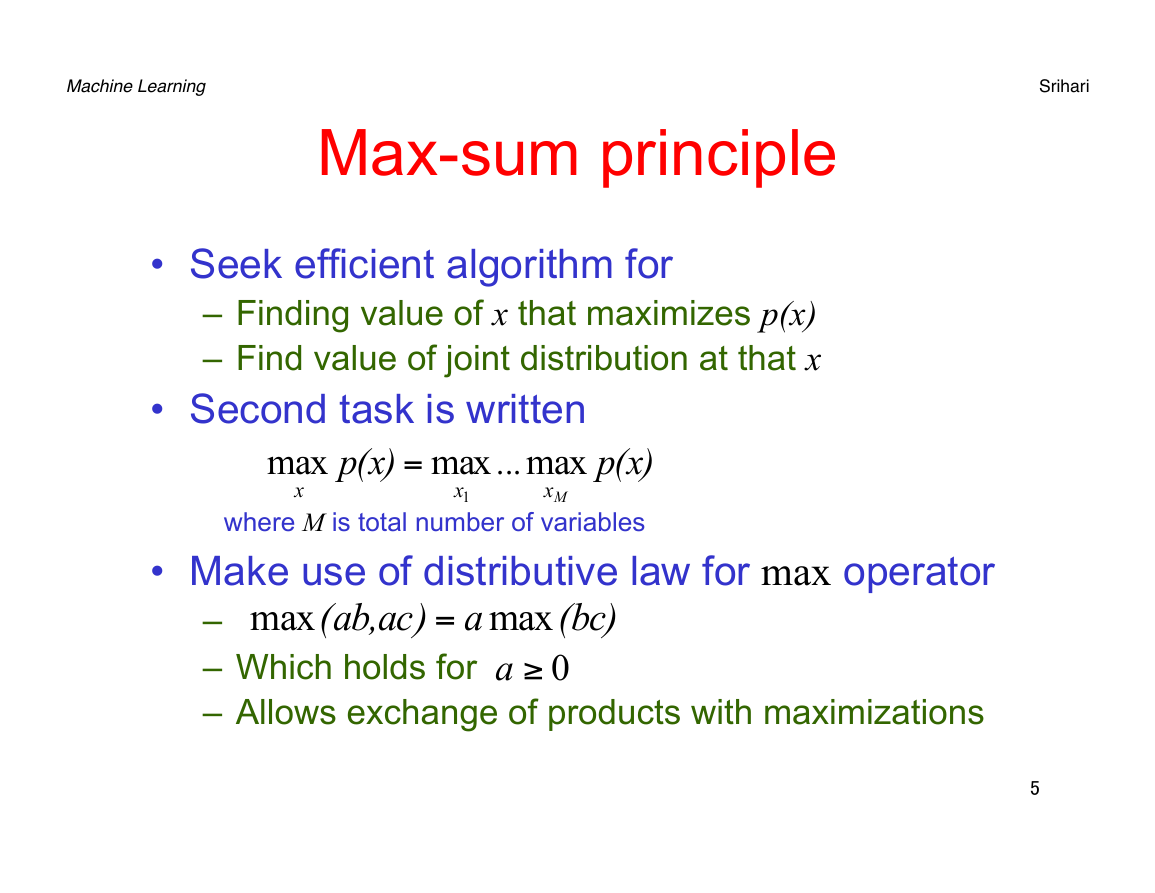

Max-sum principle

• Seek efficient algorithm for

– Finding value of x that maximizes p(x)

– Find value of joint distribution at that x

=

x

max

• Second task is written

max

Mx

p(x)

where M is total number of variables

• Make use of distributive law for max operator

max

x

1

p(x)

...

max

(ab,ac)

–

a

– Which holds for

– Allows exchange of products with maximizations

max

0≥a

(bc)

=

5

�

Machine Learning

!

!

!

!

! Srihari

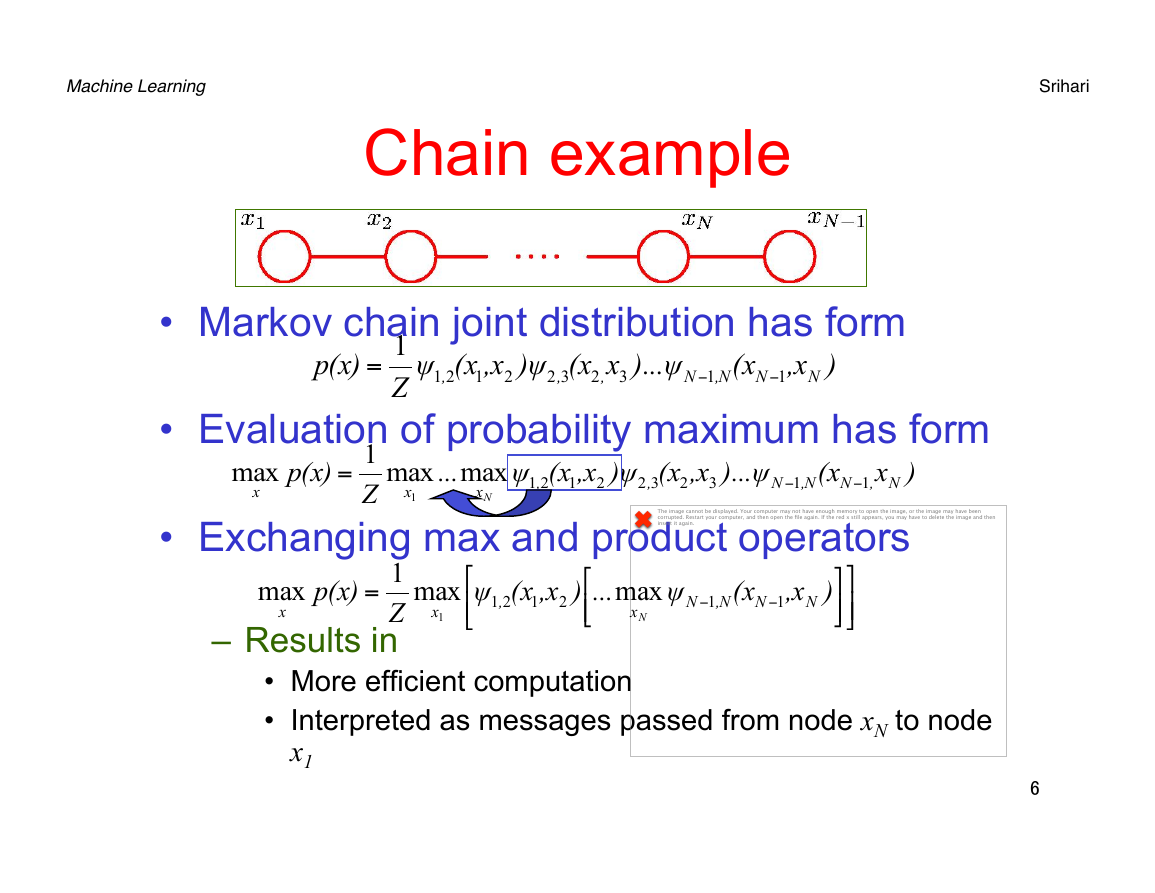

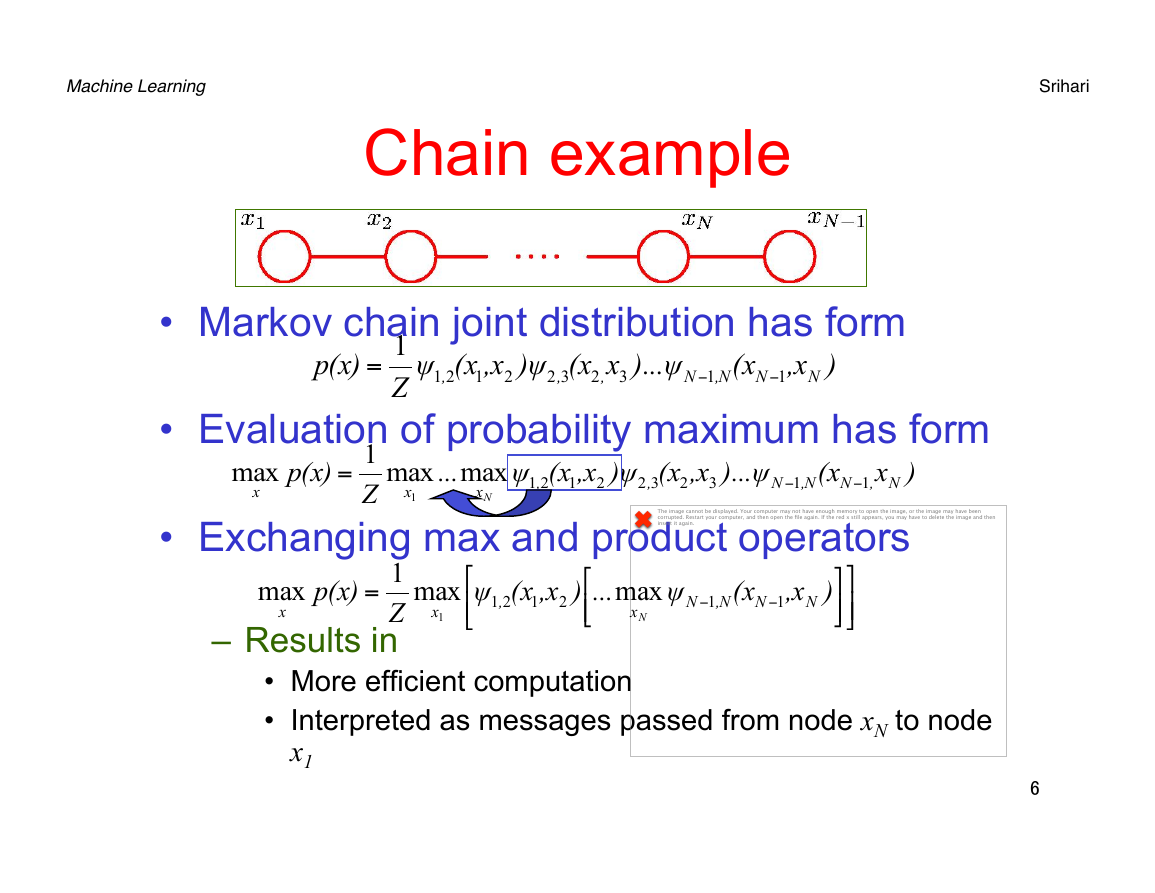

Chain example

1

Z

• Markov chain joint distribution has form

p(x)

=

(xψ

,

21

1

)ψ,x

2

(x

2

,

x

3

,

32

)...ψ

(x

N

,x

N

)

1

−

,NN

1

−

• Evaluation of probability maximum has form

=

p(x)

max

)x

N,N

• Exchanging max and product operators

(xψ

,

1

21

max

x

1

max

x

)ψ,x

)...ψ

(x

2

,x

3

,NN

1

−

(x

...

,

32

1

−

2

x

N

The image cannot be displayed. Your computer may not have enough memory to open the image, or the image may have been

corrupted. Restart your computer, and then open the file again. If the red x still appears, you may have to delete the image and then

insert it again.

1

Z

p(x)

max

1

Z

– Results in

=

x

max

x

1

⎡

(xψ

,

1

21

⎢⎣

,x

2

⎡

)

⎢⎣

...

max

x

N

ψ

(x

,x

N

N

1

−

N

1

−

,N

⎤

)

⎥⎦

⎤

⎥⎦

• More efficient computation

• Interpreted as messages passed from node xN to node

x1

6

�

Machine Learning

!

!

!

!

! Srihari

Generalization to tree factor graph

• Substitution factored graph expansion

p(x)

p(x)

∏=

s

=

(xf

)

s

s

...

max

x

1

x

max

• Into

• And exchanging maximizations with products

• Final maximization is performed over product

max

Mx

p(x)

of all messages arriving at the root node

• Could be called the max-product algorithm

7

�

Machine Learning

!

!

!

!

! Srihari

Use of log probabilities

• Products of probabilities can lead to numerical

underflow problems

• Convenient to work with logarithm of joint

distribution

• Has the effect of replacing products in max-

product algorithm with sums

• Thus we obtain the max-sum algorithm

8

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc