How to Go Really Big in AI:

Strategies & Principles for Distributed Machine Learning

Eric Xing

epxing@cs.cmu.edu

School of Computer Science

Carnegie Mellon University

Wei Dai, Qirong Ho, Jin Kyu Kim, Abhimanu Kumar, Seunghak Lee, Jinliang Wei, Pengtao Xie, Yaoliang Yu, Hao Zhang, Xun Zheng

Acknowledgement:

James Cipar, Henggang Cui,

and, Phil Gibbons, Greg Ganger, Garth Gibson

1

�

Machine Learning:

-- a view from outside

2

�

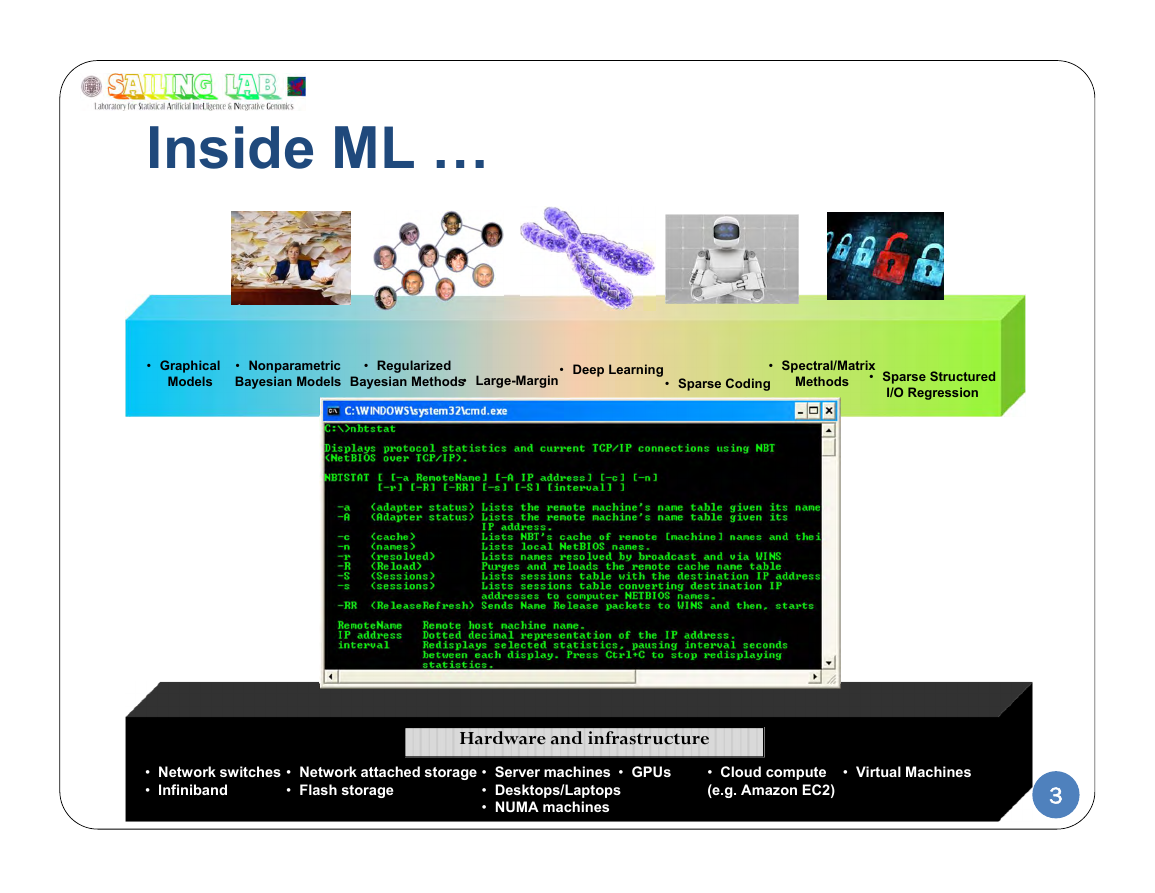

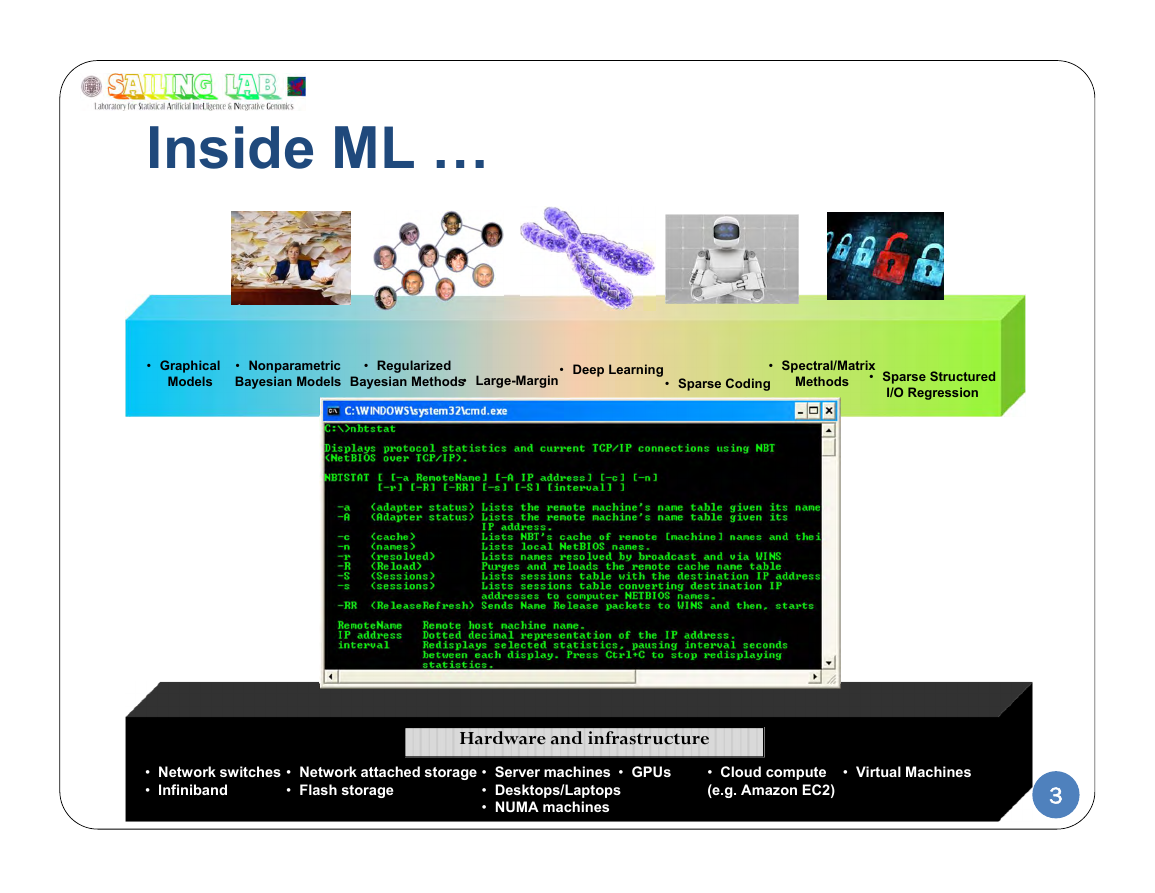

Inside ML …

• Graphical

Models

• Nonparametric

Bayesian Models

• Regularized

Bayesian Methods

• Large-Margin

• Deep Learning

• Sparse Coding

• Sparse Structured

I/O Regression

• Spectral/Matrix

Methods

• Network switches

• Infiniband

• Network attached storage

• Flash storage

• Server machines

• Desktops/Laptops

• NUMA machines

• GPUs

• Cloud compute

(e.g. Amazon EC2)

• Virtual Machines

3

Hardware and infrastructure

�

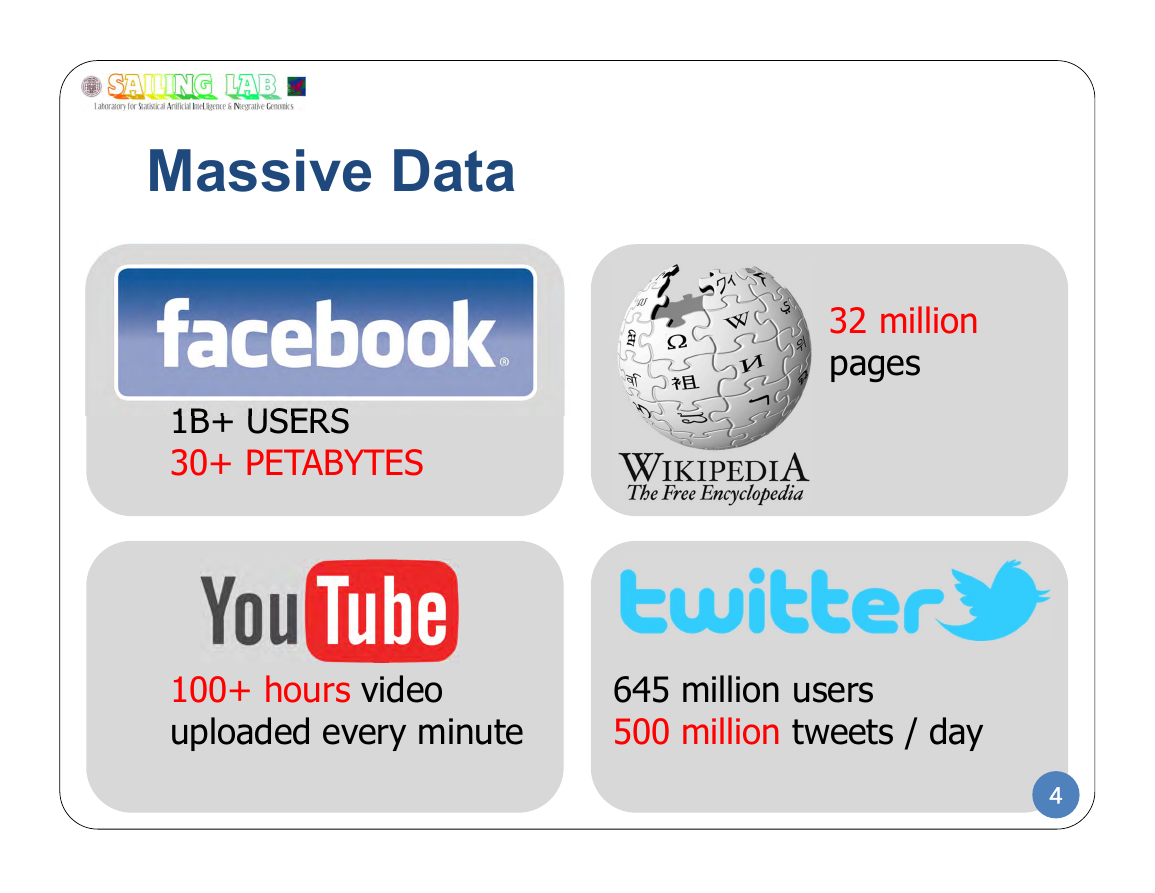

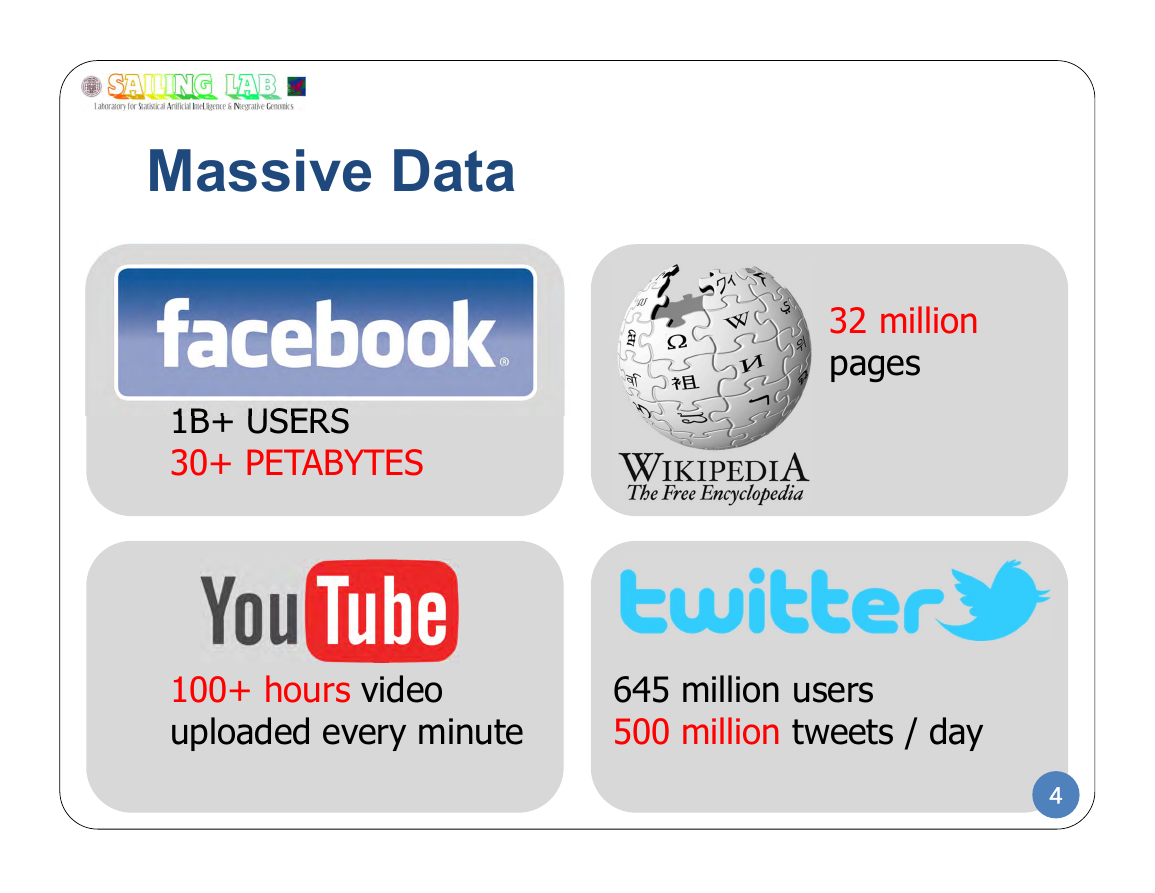

Massive Data

1B+ USERS

30+ PETABYTES

32 million

pages

100+ hours video

uploaded every minute

645 million users

500 million tweets / day

4

�

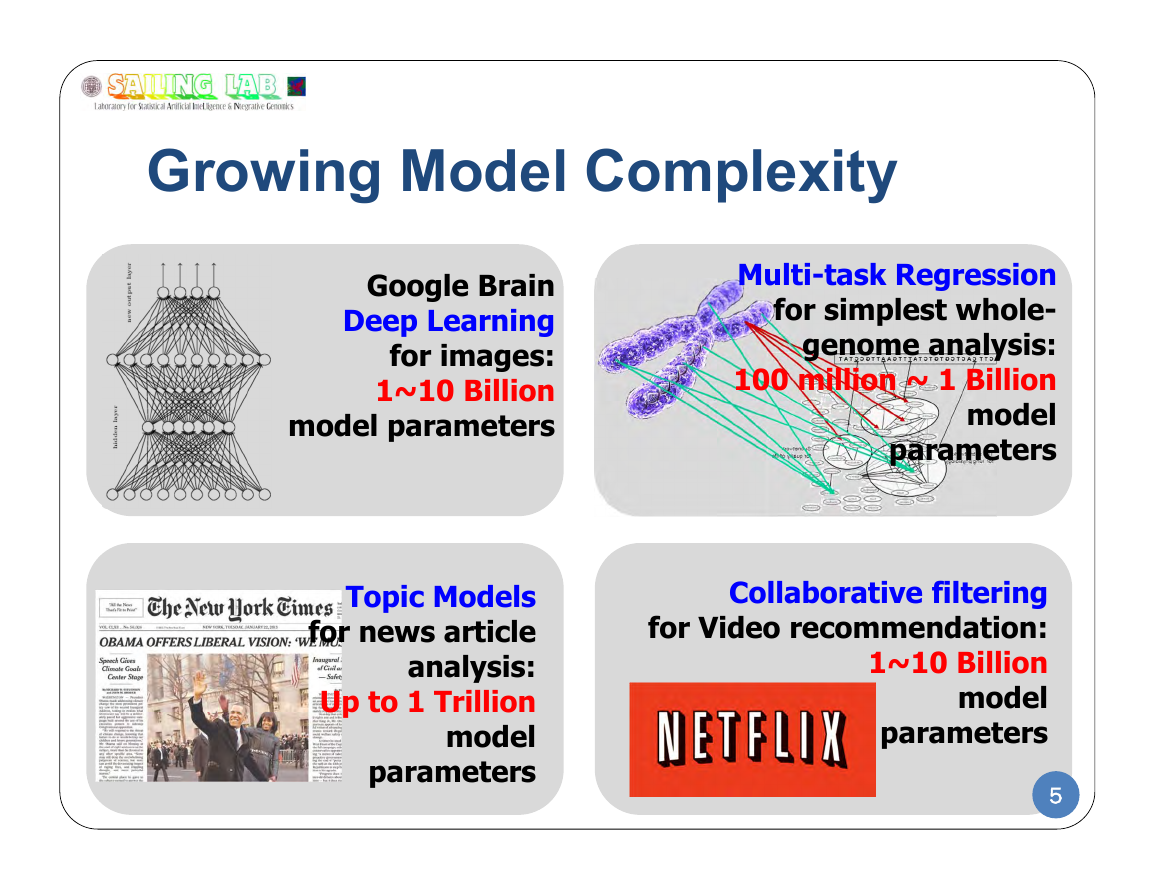

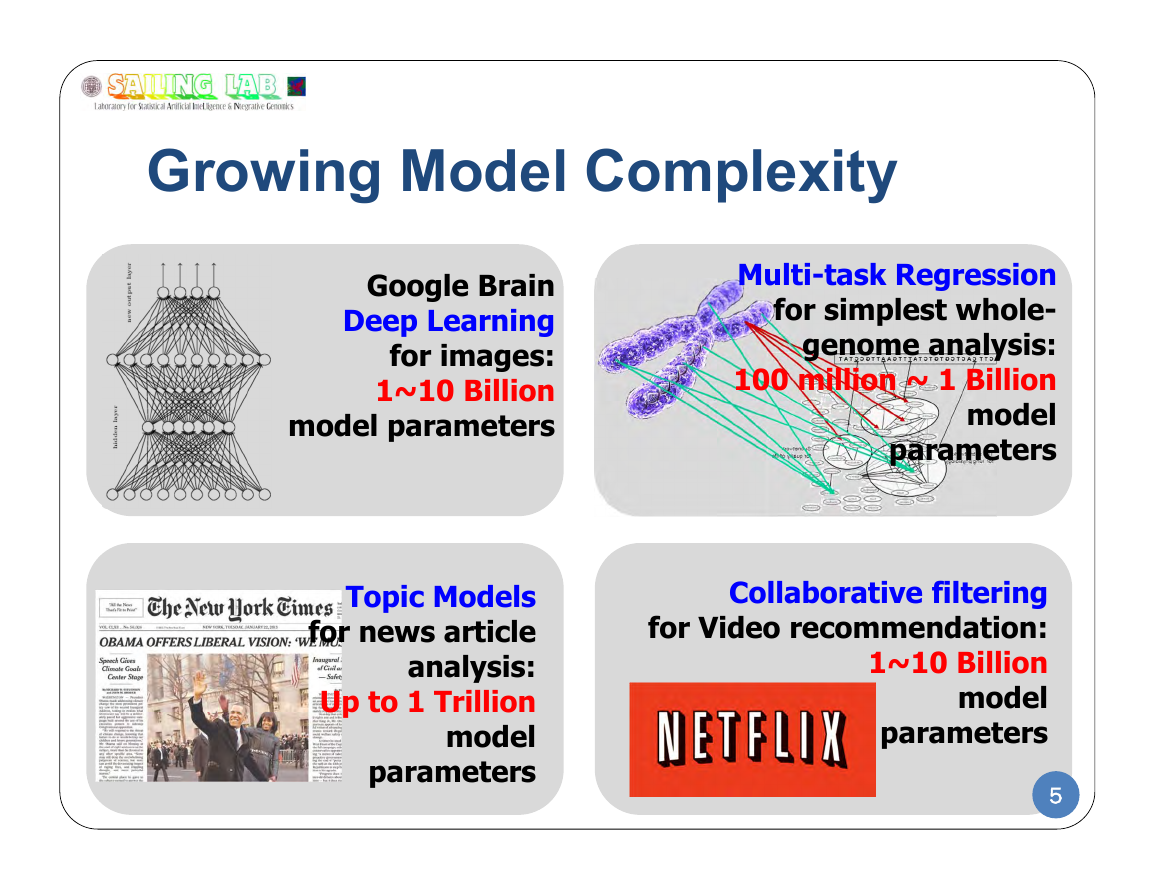

Growing Model Complexity

Google Brain

Deep Learning

for images:

1~10 Billion

model parameters

Multi-task Regression

for simplest whole-

genome analysis:

100 million ~ 1 Billion

model

parameters

Topic Models

for news article

analysis:

Up to 1 Trillion

model

parameters

Collaborative filtering

for Video recommendation:

1~10 Billion

model

parameters

5

�

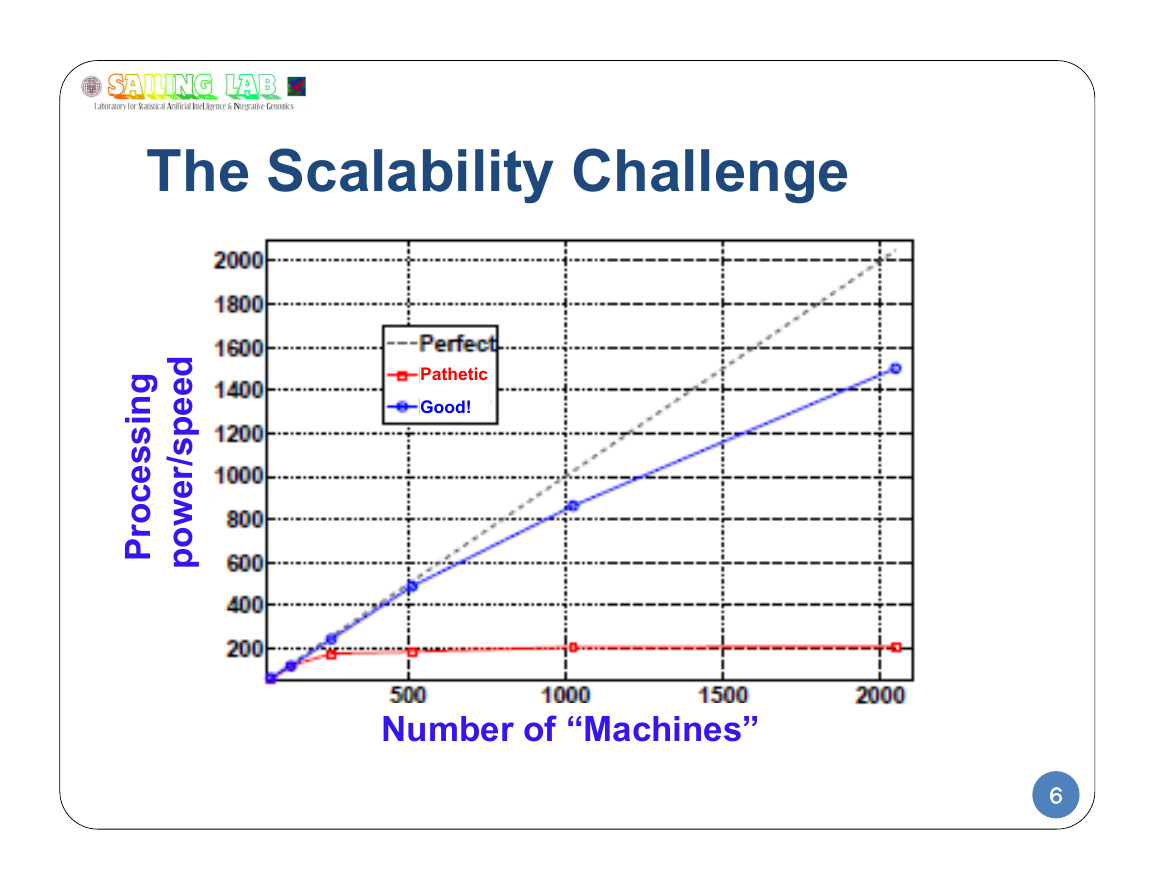

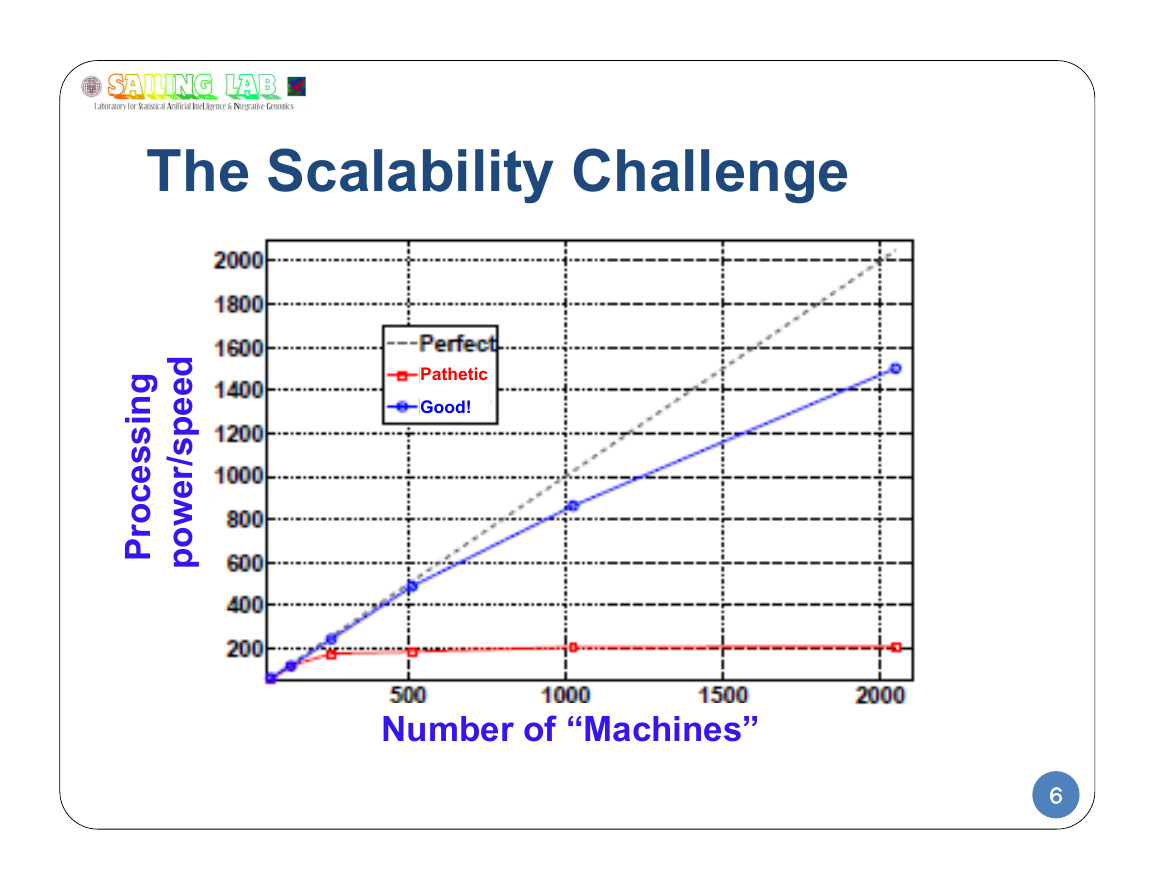

The Scalability Challenge

g

n

i

s

s

e

c

o

r

P

d

e

e

p

s

/

r

e

w

o

p

Pathetic

Good!

Number of “Machines”

6

�

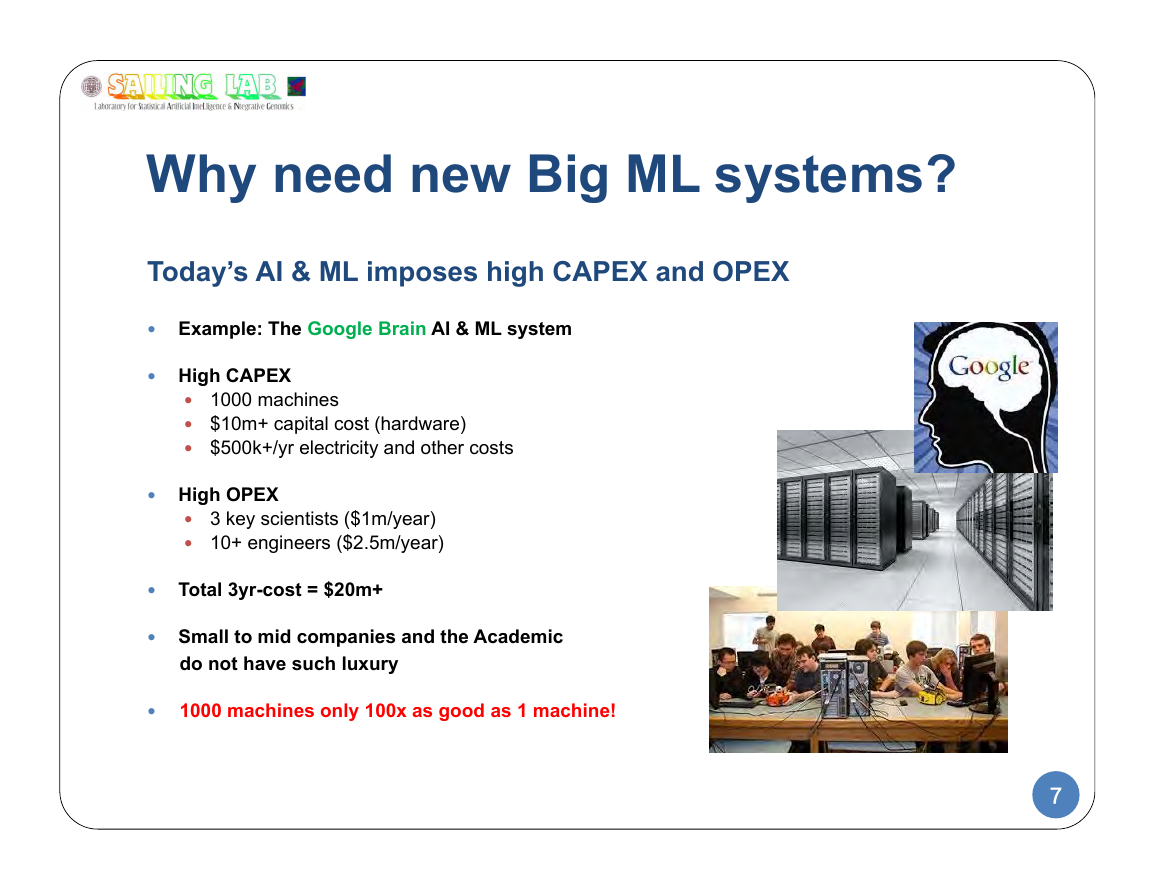

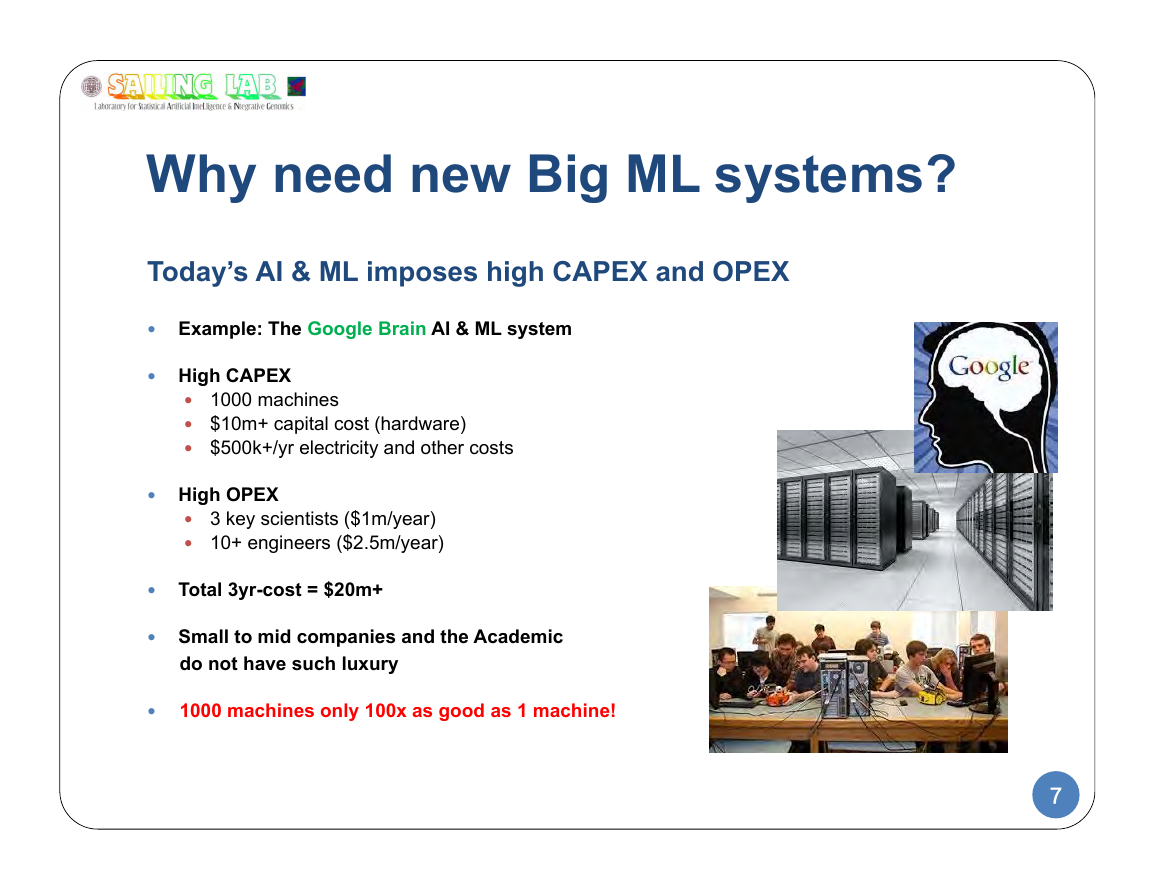

Why need new Big ML systems?

Today’s AI & ML imposes high CAPEX and OPEX

Example: The Google Brain AI & ML system

High CAPEX

1000 machines

$10m+ capital cost (hardware)

$500k+/yr electricity and other costs

High OPEX

3 key scientists ($1m/year)

10+ engineers ($2.5m/year)

Total 3yr-cost = $20m+

Small to mid companies and the Academic

do not have such luxury

1000 machines only 100x as good as 1 machine!

7

�

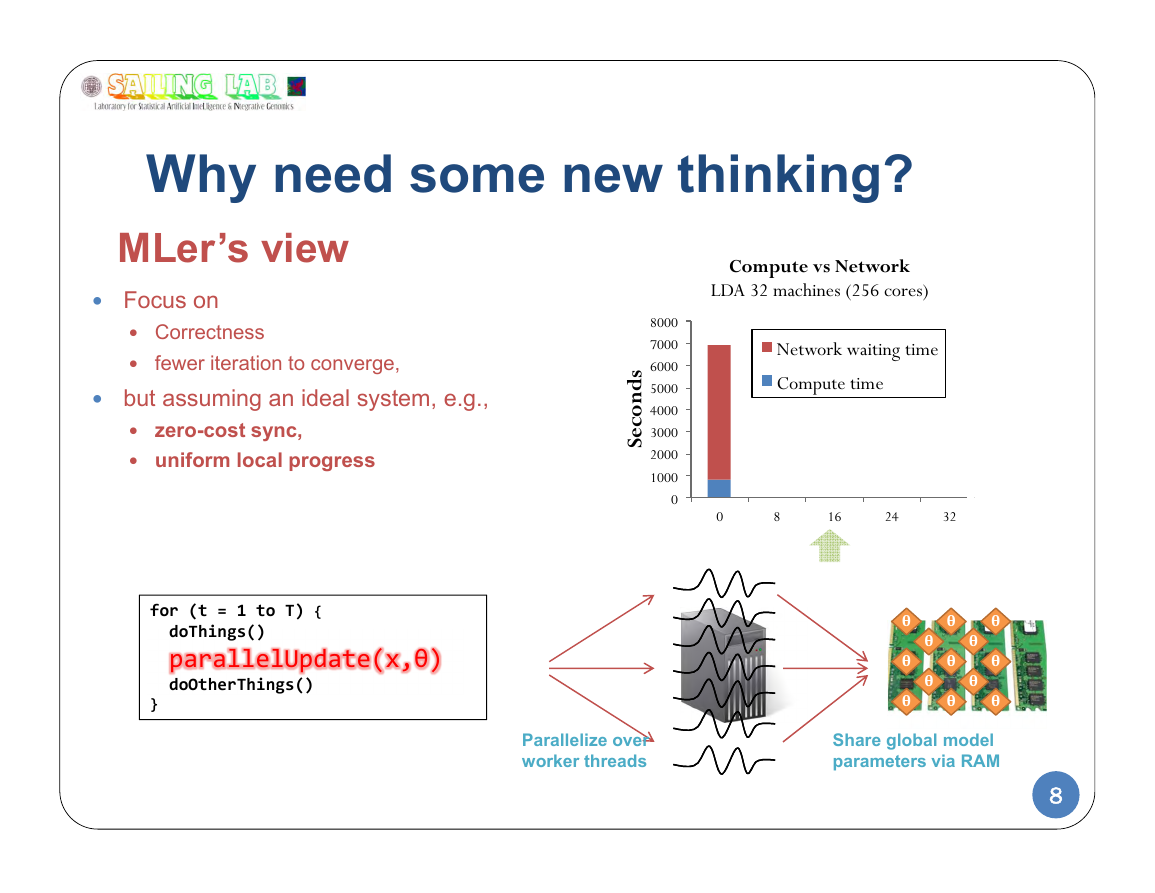

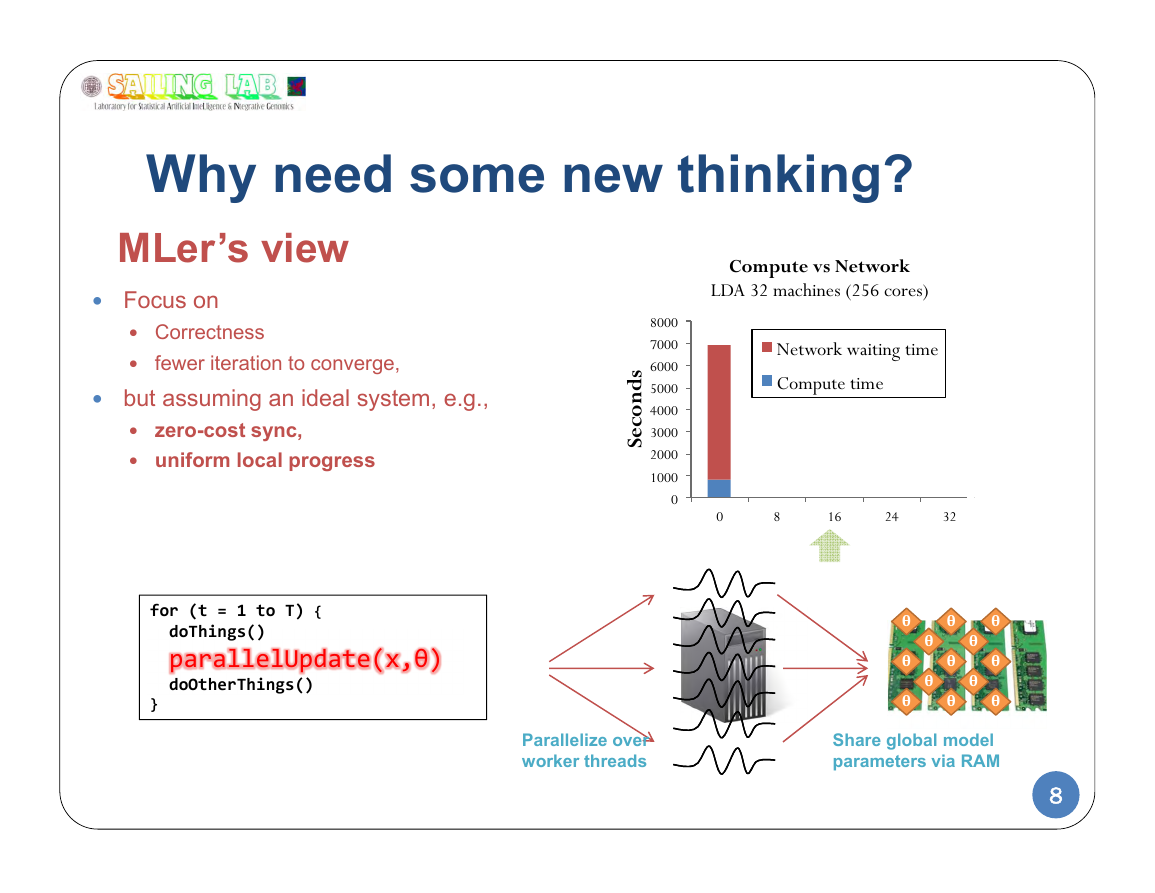

Why need some new thinking?

MLer’s view

Focus on

Compute vs Network

LDA 32 machines (256 cores)

Correctness

fewer iteration to converge,

but assuming an ideal system, e.g.,

zero-cost sync,

uniform local progress

s

d

n

o

c

e

S

8000

7000

6000

5000

4000

3000

2000

1000

0

Network waiting time

Compute time

0

8

16

24

32

for (t = 1 to T) {

doThings()

parallelUpdate(x,θ)

doOtherThings()

}

θ

θ

θ

θ

θ

θ

θ

θ

θ

θ

θ

θ

θ

Parallelize over

worker threads

Share global model

parameters via RAM

8

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc