Taming Text

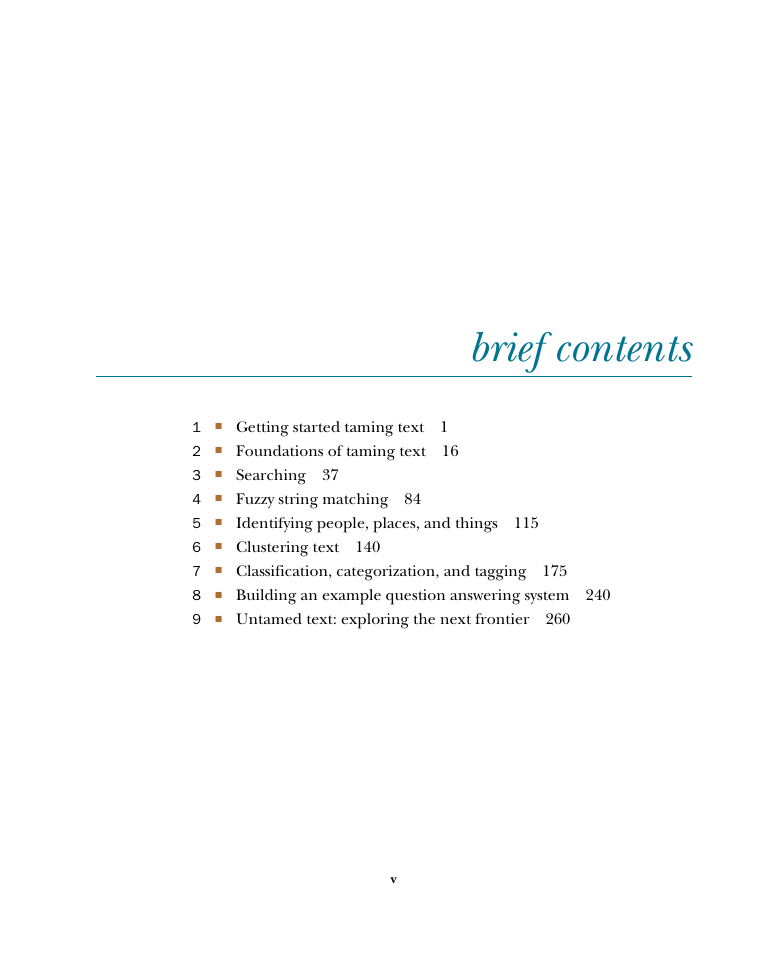

brief contents

contents

foreword

preface

acknowledgments

Grant Ingersoll

Tom Morton

Drew Farris

about this book

Who should read this book

Roadmap

Code conventions and downloads

Author Online

about the cover illustration

1 Getting started taming text

1.1 Why taming text is important

1.2 Preview: A fact-based question answering system

1.2.1 Hello, Dr. Frankenstein

1.3 Understanding text is hard

1.4 Text, tamed

1.5 Text and the intelligent app: search and beyond

1.5.1 Searching and matching

1.5.2 Extracting information

1.5.3 Grouping information

1.5.4 An intelligent application

1.6 Summary

1.7 Resources

2 Foundations of taming text

2.1 Foundations of language

2.1.1 Words and their categories

2.1.2 Phrases and clauses

2.1.3 Morphology

2.2 Common tools for text processing

2.2.1 String manipulation tools

2.2.2 Tokens and tokenization

2.2.3 Part of speech assignment

2.2.4 Stemming

2.2.5 Sentence detection

2.2.6 Parsing and grammar

2.2.7 Sequence modeling

2.3 Preprocessing and extracting content from common file formats

2.3.1 The importance of preprocessing

2.3.2 Extracting content using Apache Tika

2.4 Summary

2.5 Resources

3 Searching

3.1 Search and faceting example: Amazon.com

3.2 Introduction to search concepts

3.2.1 Indexing content

3.2.2 User input

3.2.3 Ranking documents with the vector space model

3.2.4 Results display

3.3 Introducing the Apache Solr search server

3.3.1 Running Solr for the first time

3.3.2 Understanding Solr concepts

3.4 Indexing content with Apache Solr

3.4.1 Indexing using XML

3.4.2 Extracting and indexing content using Solr and Apache Tika

3.5 Searching content with Apache Solr

3.5.1 Solr query input parameters

3.5.2 Faceting on extracted content

3.6 Understanding search performance factors

3.6.1 Judging quality

3.6.2 Judging quantity

3.7 Improving search performance

3.7.1 Hardware improvements

3.7.2 Analysis improvements

3.7.3 Query performance improvements

3.7.4 Alternative scoring models

3.7.5 Techniques for improving Solr performance

3.8 Search alternatives

3.9 Summary

3.10 Resources

4 Fuzzy string matching

4.1 Approaches to fuzzy string matching

4.1.1 Character overlap measures

4.1.2 Edit distance measures

4.1.3 N-gram edit distance

4.2 Finding fuzzy string matches

4.2.1 Using prefixes for matching with Solr

4.2.2 Using a trie for prefix matching

4.2.3 Using n-grams for matching

4.3 Building fuzzy string matching applications

4.3.1 Adding type-ahead to search

4.3.2 Query spell-checking for search

4.3.3 Record matching

4.4 Summary

4.5 Resources

5 Identifying people, places, and things

5.1 Approaches to named-entity recognition

5.1.1 Using rules to identify names

5.1.2 Using statistical classifiers to identify names

5.2 Basic entity identification with OpenNLP

5.2.1 Finding names with OpenNLP

5.2.2 Interpreting names identified by OpenNLP

5.2.3 Filtering names based on probability

5.3 In-depth entity identification with OpenNLP

5.3.1 Identifying multiple entity types with OpenNLP

5.3.2 Under the hood: how OpenNLP identifies names

5.4 Performance of OpenNLP

5.4.1 Quality of results

5.4.2 Runtime performance

5.4.3 Memory usage in OpenNLP

5.5 Customizing OpenNLP entity identification for a new domain

5.5.1 The whys and hows of training a model

5.5.2 Training an OpenNLP model

5.5.3 Altering modeling inputs

5.5.4 A new way to model names

5.6 Summary

5.7 Further reading

6 Clustering text

6.1 Google News document clustering

6.2 Clustering foundations

6.2.1 Three types of text to cluster

6.2.2 Choosing a clustering algorithm

6.2.3 Determining similarity

6.2.4 Labeling the results

6.2.5 How to evaluate clustering results

6.3 Setting up a simple clustering application

6.4 Clustering search results using Carrot2

6.4.1 Using the Carrot2 API

6.4.2 Clustering Solr search results using Carrot2

6.5 Clustering document collections with Apache Mahout

6.5.1 Preparing the data for clustering

6.5.2 K-Means clustering

6.6 Topic modeling using Apache Mahout

6.7 Examining clustering performance

6.7.1 Feature selection and reduction

6.7.2 Carrot2 performance and quality

6.7.3 Mahout clustering benchmarks

6.8 Acknowledgments

6.9 Summary

6.10 References

7 Classification, categorization, and tagging

7.1 Introduction to classification and categorization

7.2 The classification process

7.2.1 Choosing a classification scheme

7.2.2 Identifying features for text categorization

7.2.3 The importance of training data

7.2.4 Evaluating classifier performance

7.2.5 Deploying a classifier into production

7.3 Building document categorizers using Apache Lucene

7.3.1 Categorizing text with Lucene

7.3.2 Preparing the training data for the MoreLikeThis categorizer

7.3.3 Training the MoreLikeThis categorizer

7.3.4 Categorizing documents with the MoreLikeThis categorizer

7.3.5 Testing the MoreLikeThis categorizer

7.3.6 MoreLikeThis in production

7.4 Training a naive Bayes classifier using Apache Mahout

7.4.1 Categorizing text using naive Bayes classification

7.4.2 Preparing the training data

7.4.3 Withholding test data

7.4.4 Training the classifier

7.4.5 Testing the classifier

7.4.6 Improving the bootstrapping process

7.4.7 Integrating the Mahout Bayes classifier with Solr

7.5 Categorizing documents with OpenNLP

7.5.1 Regression models and maximum entropy document categorization

7.5.2 Preparing training data for the maximum entropy document categorizer

7.5.3 Training the maximum entropy document categorizer

7.5.4 Testing the maximum entropy document classifier

7.5.5 Maximum entropy document categorization in production

7.6 Building a tag recommender using Apache Solr

7.6.1 Collecting training data for tag recommendations

7.6.2 Preparing the training data

7.6.3 Training the Solr tag recommender

7.6.4 Creating tag recommendations

7.6.5 Evaluating the tag recommender

7.7 Summary

7.8 References

8 Building an example question answering system

8.1 Basics of a question answering system

8.2 Installing and running the QA code

8.3 A sample question answering architecture

8.4 Understanding questions and producing answers

8.4.1 Training the answer type classifier

8.4.2 Chunking the query

8.4.3 Computing the answer type

8.4.4 Generating the query

8.4.5 Ranking candidate passages

8.5 Steps to improve the system

8.6 Summary

8.7 Resources

9 Untamed text: exploring the next frontier

9.1 Semantics, discourse, and pragmatics: exploring higher levels of NLP

9.1.1 Semantics

9.1.2 Discourse

9.1.3 Pragmatics

9.2 Document and collection summarization

9.3 Relationship extraction

9.3.1 Overview of approaches

9.3.2 Evaluation

9.3.3 Tools for relationship extraction

9.4 Identifying important content and people

9.4.1 Global importance and authoritativeness

9.4.2 Personal importance

9.4.3 Resources and pointers on importance

9.5 Detecting emotions via sentiment analysis

9.5.1 History and review

9.5.2 Tools and data needs

9.5.3 A basic polarity algorithm

9.5.4 Advanced topics

9.5.5 Open source libraries for sentiment analysis

9.6 Cross-language information retrieval

9.7 Summary

9.8 References

index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

X

Y

Z
