Effective Python: 59 Specific Ways to Write Better Python


7. Collaboration

There are language features in Python to help you construct well-defined APIs with clear interface

boundaries. The Python community has established best practices that maximize the maintainability of

code over time. There are also standard tools that ship with Python that enable large teams to work

together across disparate environments.

Collaborating with others on Python programs requires being deliberate about how you write your code.

Even if you’re working on your own, chances are you’ll be using code written by someone else via the

standard library or open source packages. It’s important to understand the mechanisms that make it easy

to collaborate with other Python programmers.

Item 49: Write Docstrings for Every Function, Class, and Module

Documentation in Python is extremely important because of the dynamic nature of the language. Python provides built-in support for

attaching documentation to blocks of code. Unlike many other languages, the documentation from a program’s source code is directly

accessible as the program runs.

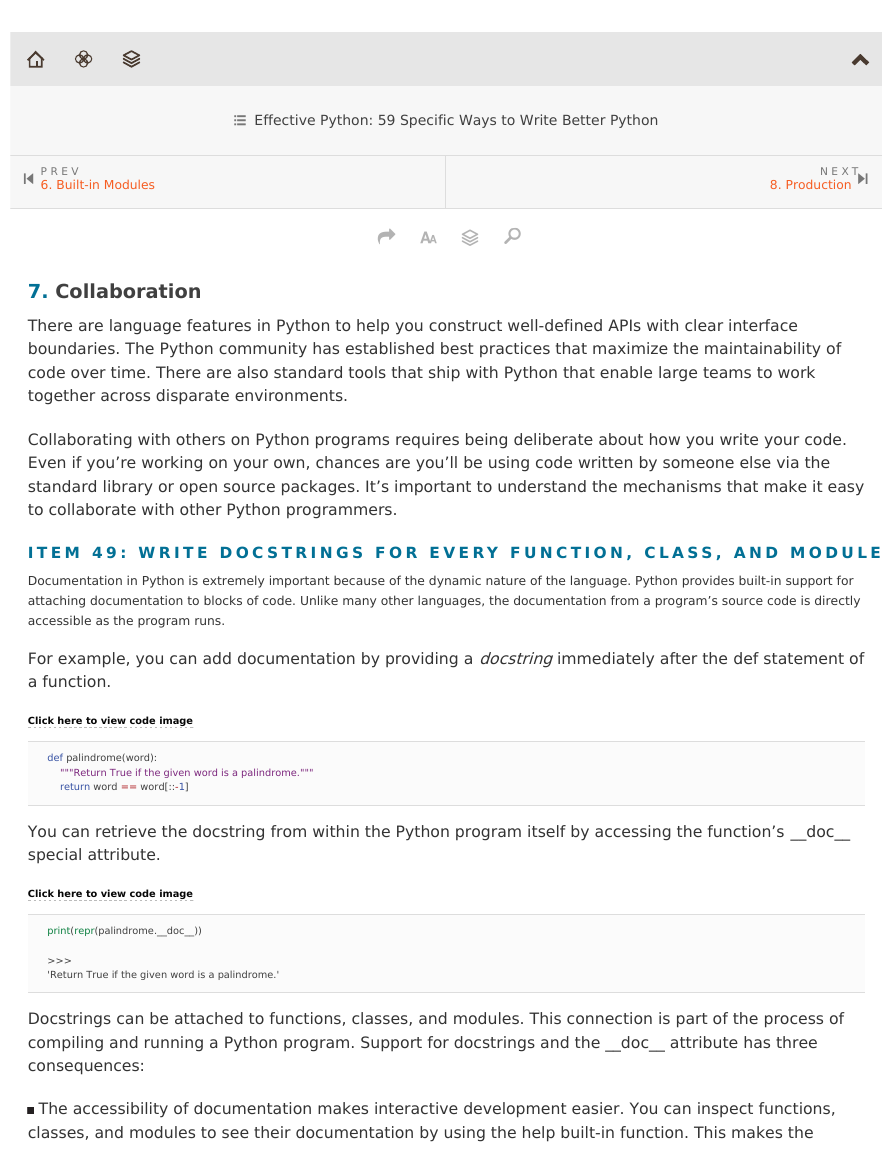

For example, you can add documentation by providing a docstring immediately after the def statement of

a function.

    def palindrome(word):
        """Return True if the given word is a palindrome."""
        return word == word[::-1]

You can retrieve the docstring from within the Python program itself by accessing the function’s __doc__

special attribute.

    print(repr(palindrome.__doc__))

    >>>
    'Return True if the given word is a palindrome.'

Docstrings can be attached to functions, classes, and modules. This connection is part of the process of

compiling and running a Python program. Support for docstrings and the __doc__ attribute has three

consequences:

The accessibility of documentation makes interactive development easier. You can inspect functions,

classes, and modules to see their documentation by using the help built-in function. This makes the


Python interactive interpreter (the Python “shell”) and tools like IPython Notebook (http://ipython.org) a

joy to use while you’re developing algorithms, testing APIs, and writing code snippets.

A standard way of defining documentation makes it easy to build tools that convert the text into more

appealing formats (like HTML). This has led to excellent documentation-generation tools for the Python

community, such as Sphinx (http://sphinx-doc.org). It’s also enabled community-funded sites like Read

the Docs (https://readthedocs.org) that provide free hosting of beautiful-looking documentation for open

source Python projects.

Python’s first-class, accessible, and good-looking documentation encourages people to write more

documentation. The members of the Python community have a strong belief in the importance of

documentation. There’s an assumption that “good code” also means well-documented code. This means

that you can expect most open source Python libraries to have decent documentation.
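As a minimal sketch of the first consequence, the snippet below reads documentation from live objects at runtime. It reuses the `palindrome` function from earlier and relies on the standard library's `inspect.getdoc`, which returns a docstring with its indentation cleaned up.

```python
import inspect


def palindrome(word):
    """Return True if the given word is a palindrome."""
    return word == word[::-1]


# inspect.getdoc reads the same __doc__ attribute shown earlier,
# but also normalizes indentation for multi-line docstrings.
summary = inspect.getdoc(palindrome)
print(summary)

# Built-in objects carry docstrings too, so the same call works on them.
print(inspect.getdoc(len))
```

In an interactive session, calling `help(palindrome)` displays this documentation in a pager-style view.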

To participate in this excellent culture of documentation, you need to follow a few guidelines when you

write docstrings. The full details are discussed online in PEP 257 (http://www.python.org/dev/peps/pep-0257/). There are a few best practices you should be sure to follow.

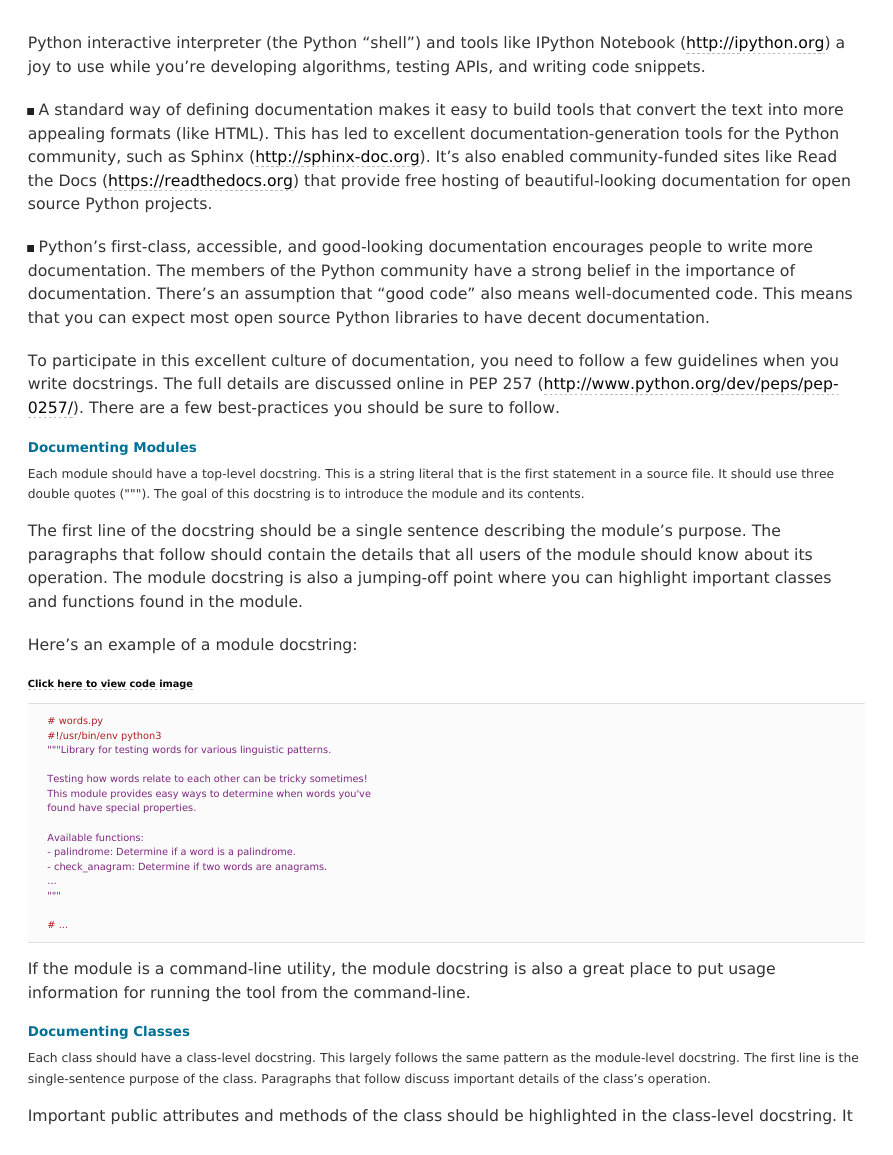

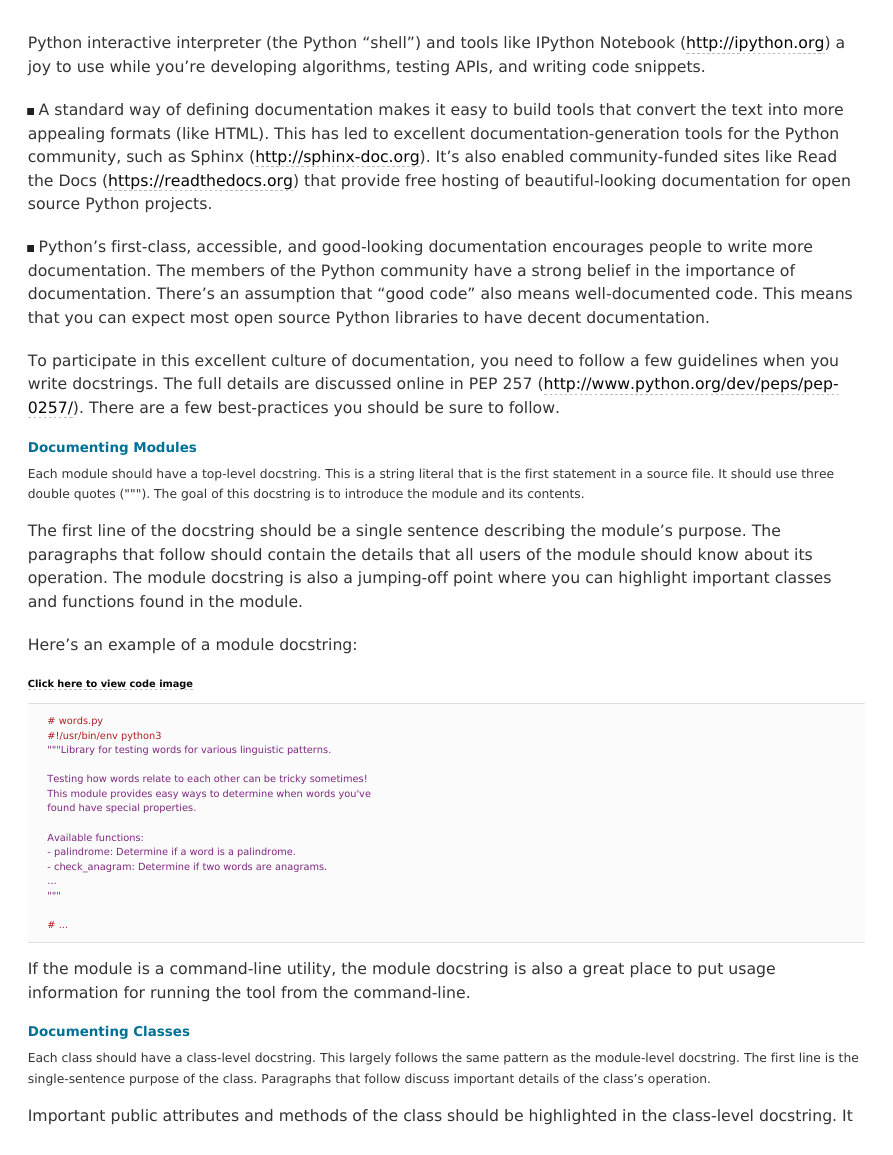

Documenting Modules

Each module should have a top-level docstring. This is a string literal that is the first statement in a source file. It should use three

double quotes ("""). The goal of this docstring is to introduce the module and its contents.

The first line of the docstring should be a single sentence describing the module’s purpose. The

paragraphs that follow should contain the details that all users of the module should know about its

operation. The module docstring is also a jumping-off point where you can highlight important classes

and functions found in the module.

Here’s an example of a module docstring:

    #!/usr/bin/env python3
    # words.py
    """Library for testing words for various linguistic patterns.

    Testing how words relate to each other can be tricky sometimes!
    This module provides easy ways to determine when words you've
    found have special properties.

    Available functions:
    - palindrome: Determine if a word is a palindrome.
    - check_anagram: Determine if two words are anagrams.
    ...
    """

    # ...

If the module is a command-line utility, the module docstring is also a great place to put usage

information for running the tool from the command line.
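One way to keep usage information in a single place is to hand the module docstring to `argparse` as the parser description. The sketch below assumes a hypothetical script named `words_cli.py`; the `build_parser` and `main` helpers are illustrative names, not part of the original example.

```python
"""Check whether each given word is a palindrome.

Usage:
    python3 words_cli.py WORD [WORD ...]
"""
import argparse


def build_parser(description=__doc__):
    """Create the command-line parser, reusing the module docstring."""
    parser = argparse.ArgumentParser(
        description=description,
        # Keep the line breaks of the docstring in the --help output.
        formatter_class=argparse.RawDescriptionHelpFormatter)
    parser.add_argument('words', nargs='+', help='words to test')
    return parser


def main(argv=None):
    """Parse the arguments and report on each word."""
    args = build_parser().parse_args(argv)
    for word in args.words:
        print(word, word == word[::-1])


# Simulate a command line instead of reading sys.argv.
main(['level', 'python'])
```

With this arrangement, running the script with `--help` shows the same text a reader sees at the top of the source file.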

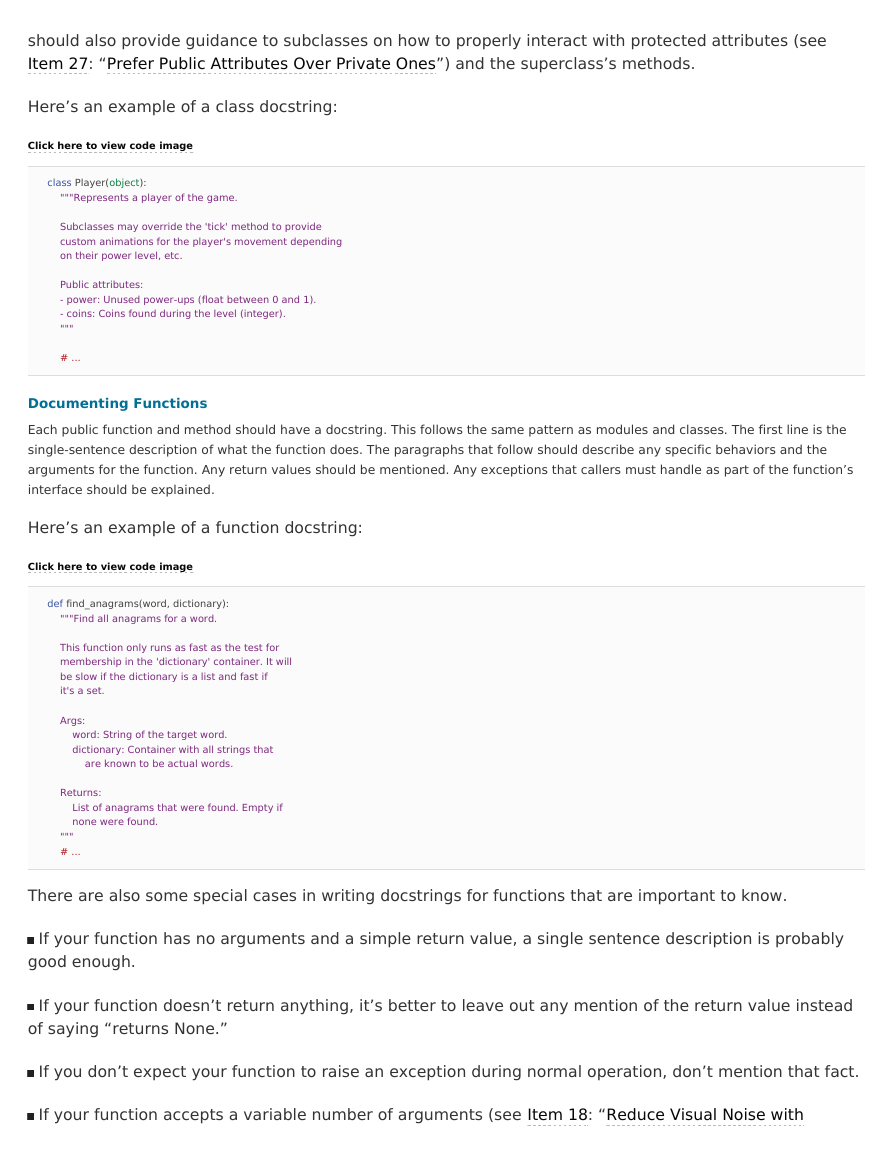

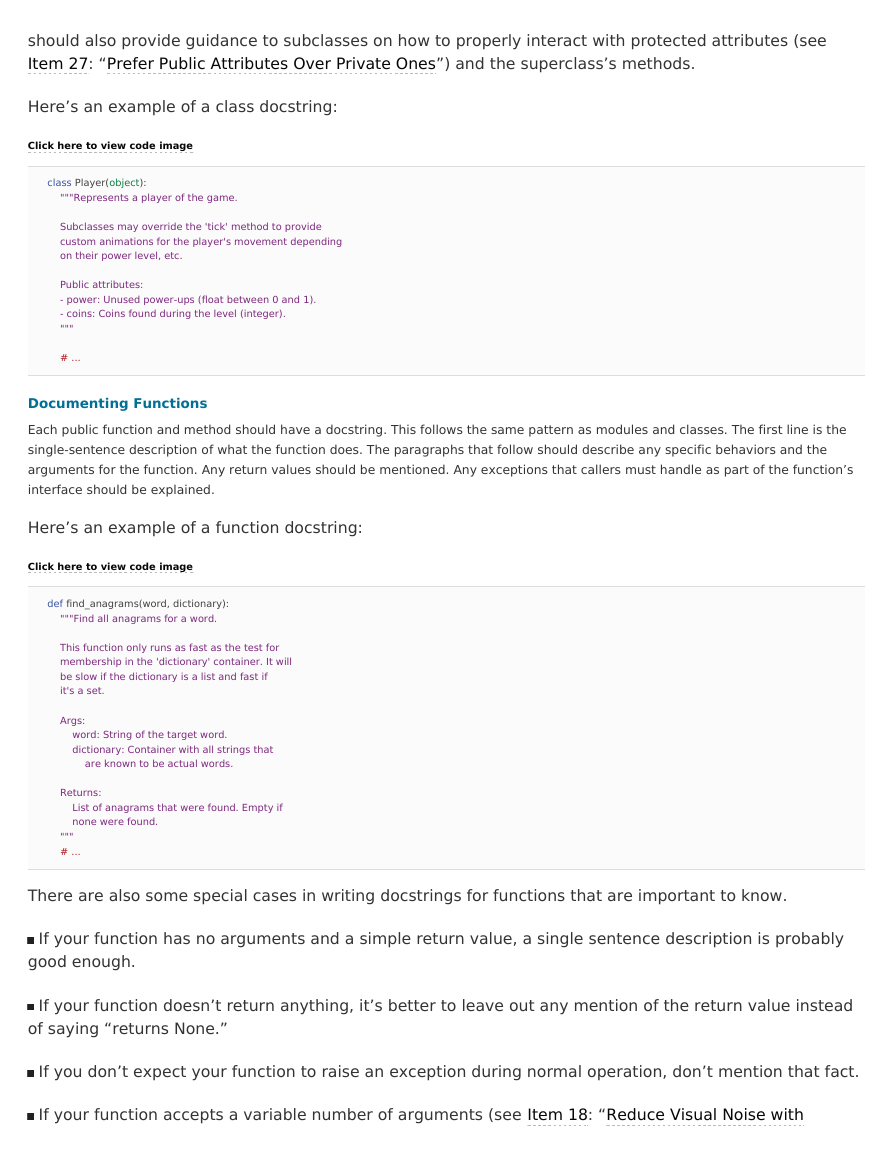

Documenting Classes

Each class should have a class-level docstring. This largely follows the same pattern as the module-level docstring. The first line is the

single-sentence purpose of the class. Paragraphs that follow discuss important details of the class’s operation.

Important public attributes and methods of the class should be highlighted in the class-level docstring. It


should also provide guidance to subclasses on how to properly interact with protected attributes (see

Item 27: “Prefer Public Attributes Over Private Ones”) and the superclass’s methods.

Here’s an example of a class docstring:

    class Player(object):
        """Represents a player of the game.

        Subclasses may override the 'tick' method to provide
        custom animations for the player's movement depending
        on their power level, etc.

        Public attributes:
        - power: Unused power-ups (float between 0 and 1).
        - coins: Coins found during the level (integer).
        """

        # ...

Documenting Functions

Each public function and method should have a docstring. This follows the same pattern as modules and classes. The first line is the

single-sentence description of what the function does. The paragraphs that follow should describe any specific behaviors and the

arguments for the function. Any return values should be mentioned. Any exceptions that callers must handle as part of the function’s

interface should be explained.

Here’s an example of a function docstring:

    def find_anagrams(word, dictionary):
        """Find all anagrams for a word.

        This function only runs as fast as the test for
        membership in the 'dictionary' container. It will
        be slow if the dictionary is a list and fast if
        it's a set.

        Args:
            word: String of the target word.
            dictionary: Container with all strings that
                are known to be actual words.

        Returns:
            List of anagrams that were found. Empty if
            none were found.
        """
        # ...

There are also some special cases in writing docstrings for functions that are important to know.

If your function has no arguments and a simple return value, a single sentence description is probably

good enough.

If your function doesn’t return anything, it’s better to leave out any mention of the return value instead

of saying “returns None.”

If you don’t expect your function to raise an exception during normal operation, don’t mention that fact.

If your function accepts a variable number of arguments (see Item 18: “Reduce Visual Noise with Variable Positional Arguments”) or keyword arguments (see Item 19: “Provide Optional Behavior with Keyword Arguments”), use *args and **kwargs in the documented list of arguments to describe their purpose.

If your function has arguments with default values, those defaults should be mentioned (see Item 20:

“Use None and Docstrings to Specify Dynamic Default Arguments”).

If your function is a generator (see Item 16: “Consider Generators Instead of Returning Lists”), then your

docstring should describe what the generator yields when it’s iterated.

If your function is a coroutine (see Item 40: “Consider Coroutines to Run Many Functions Concurrently”),

then your docstring should contain what the coroutine yields, what it expects to receive from yield

expressions, and when it will stop iteration.
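A couple of the special cases above can be sketched as follows. Both functions, `index_words` and `log`, are hypothetical helpers chosen for illustration. The first shows a generator documenting what it yields, and the second shows `*values` and a default value described in the argument list.

```python
def index_words(text):
    """Find the starting index of every word in a string.

    Args:
        text: String containing words separated by single spaces.

    Yields:
        Integer offset of the first character of each word.
    """
    if text:
        yield 0
    for index, letter in enumerate(text):
        if letter == ' ':
            yield index + 1


def log(message, *values, sep=', '):
    """Format a message followed by optional values.

    Args:
        message: String placed at the start of the line.
        *values: Any number of objects appended after the message.
        sep: String placed between the values. Defaults to ', '.

    Returns:
        The formatted line as a string.
    """
    if not values:
        return message
    return '%s: %s' % (message, sep.join(str(v) for v in values))


print(list(index_words('four score and')))
print(log('Favorite numbers', 7, 33))
```

Neither docstring mentions exceptions, because neither function is expected to raise one during normal operation.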

Note

Once you’ve written docstrings for your modules, it’s important to keep the documentation up to date. The doctest built-in module makes it easy to exercise usage

examples embedded in docstrings to ensure that your source code and its documentation don’t diverge over time.
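As a rough sketch of that idea, the docstring below embeds two interactive examples for the earlier `palindrome` function, and `doctest.run_docstring_examples` replays them. The call prints nothing when every example still matches and reports a failure when the code and its documentation have drifted apart.

```python
import doctest


def palindrome(word):
    """Return True if the given word is a palindrome.

    >>> palindrome('level')
    True
    >>> palindrome('python')
    False
    """
    return word == word[::-1]


# Replays the '>>>' examples in the docstring and compares the output.
doctest.run_docstring_examples(palindrome, {'palindrome': palindrome})
```

For a module saved to disk, running `python3 -m doctest` followed by the file name exercises every docstring example in that file.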

Things to Remember

Write documentation for every module, class, and function using docstrings. Keep them up to date as your code changes.

For modules: Introduce the contents of the module and any important classes or functions all users

should know about.

For classes: Document behavior, important attributes, and subclass behavior in the docstring following

the class statement.

For functions and methods: Document every argument, returned value, raised exception, and other

behaviors in the docstring following the def statement.

Item 50: Use Packages to Organize Modules and Provide Stable APIs

As the size of a program’s codebase grows, it’s natural for you to reorganize its structure. You split larger functions into smaller

functions. You refactor data structures into helper classes (see Item 22: “Prefer Helper Classes Over Bookkeeping with Dictionaries

and Tuples”). You separate functionality into various modules that depend on each other.

At some point, you’ll find yourself with so many modules that you need another layer in your program to

make it understandable. For this purpose, Python provides packages. Packages are modules that contain

other modules.

In most cases, packages are defined by putting an empty file named __init__.py into a directory. Once

__init__.py is present, any other Python files in that directory will be available for import using a path

relative to the directory. For example, imagine that you have the following directory structure in your

program.

main.py

mypackage/__init__.py

mypackage/models.py

mypackage/utils.py

To import the utils module, you use the absolute module name that includes the package directory’s name.

# main.py

from mypackage import utils

This pattern continues when you have package directories present within other packages (like

mypackage.foo.bar).
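To see the mechanism end to end, the following sketch builds the smallest possible package in a temporary directory and imports a module from it. The `double` function and the use of a temporary directory are assumptions made only so the example is self-contained.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root):
    """Create mypackage/__init__.py and mypackage/utils.py under root."""
    package_dir = Path(root) / 'mypackage'
    package_dir.mkdir()
    # An empty __init__.py is enough to mark the directory as a package.
    (package_dir / '__init__.py').write_text('')
    # Hypothetical module contents, for illustration only.
    (package_dir / 'utils.py').write_text(
        'def double(x):\n'
        '    return 2 * x\n')
    return package_dir


with tempfile.TemporaryDirectory() as root:
    build_demo_package(root)
    sys.path.insert(0, root)  # Make the new directory importable.
    try:
        importlib.invalidate_caches()
        utils = importlib.import_module('mypackage.utils')
        doubled = utils.double(21)
    finally:
        sys.path.remove(root)

print(utils.__name__, doubled)
```

The module's `__name__` is the absolute, dotted name, which is what keeps it distinct from any other `utils` module on the import path.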

Note

Python 3.3 introduced namespace packages, a more flexible way to define packages. Namespace packages can be composed of modules from completely separate

directories, zip archives, or even remote systems. For details on how to use the advanced features of namespace packages, see PEP 420

(http://www.python.org/dev/peps/pep-0420/).

The functionality provided by packages has two primary purposes in Python programs.

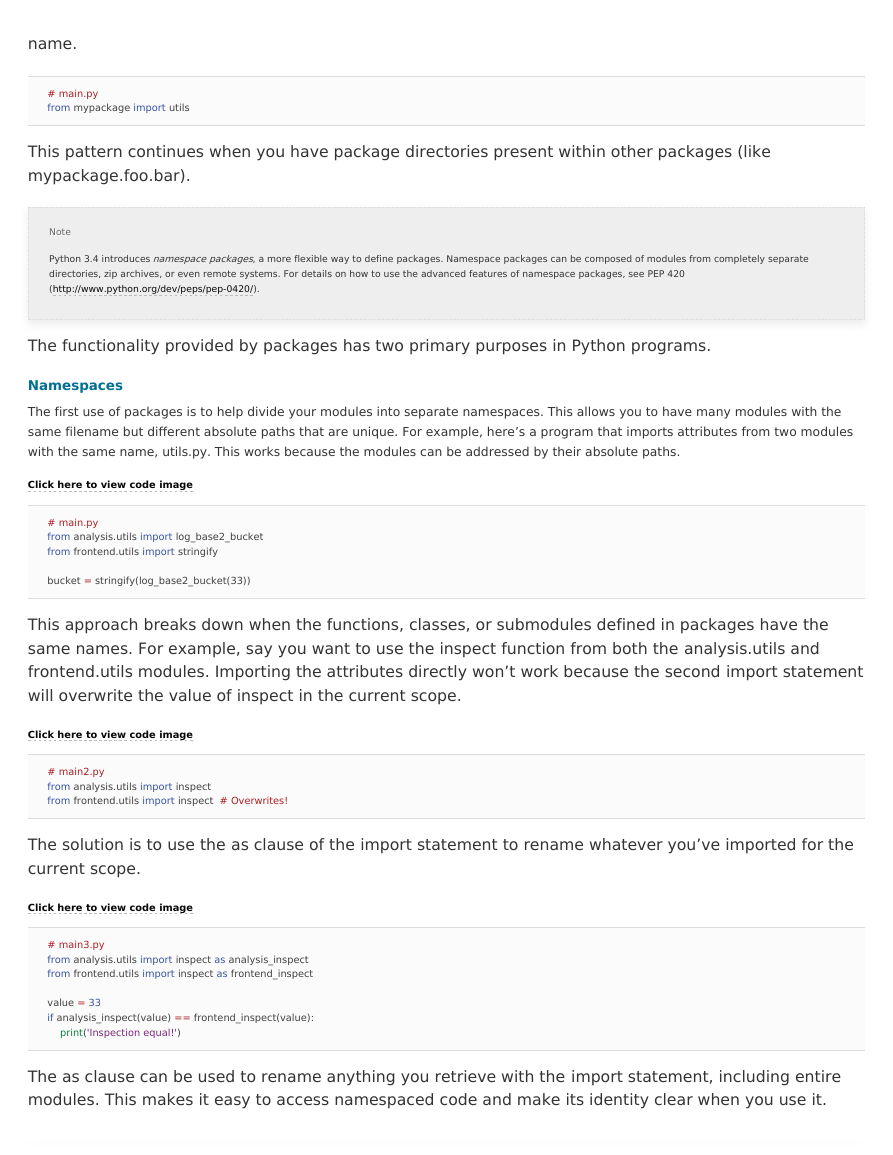

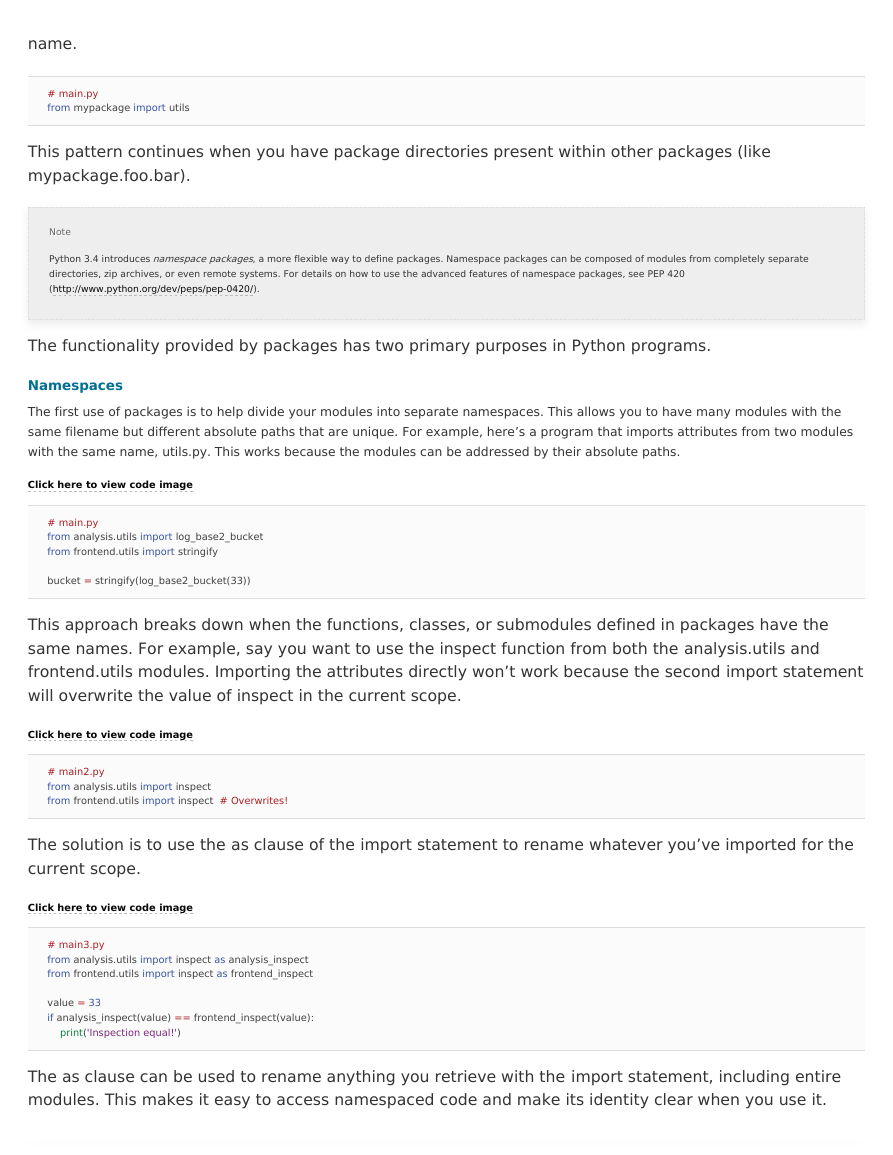

Namespaces

The first use of packages is to help divide your modules into separate namespaces. This allows you to have many modules with the

same filename but different absolute paths that are unique. For example, here’s a program that imports attributes from two modules

with the same name, utils.py. This works because the modules can be addressed by their absolute paths.

    # main.py
    from analysis.utils import log_base2_bucket
    from frontend.utils import stringify

    bucket = stringify(log_base2_bucket(33))

This approach breaks down when the functions, classes, or submodules defined in packages have the

same names. For example, say you want to use the inspect function from both the analysis.utils and

frontend.utils modules. Importing the attributes directly won’t work because the second import statement

will overwrite the value of inspect in the current scope.

    # main2.py
    from analysis.utils import inspect
    from frontend.utils import inspect  # Overwrites!

The solution is to use the as clause of the import statement to rename whatever you’ve imported for the

current scope.

    # main3.py
    from analysis.utils import inspect as analysis_inspect
    from frontend.utils import inspect as frontend_inspect

    value = 33
    if analysis_inspect(value) == frontend_inspect(value):
        print('Inspection equal!')

The as clause can be used to rename anything you retrieve with the import statement, including entire

modules. This makes it easy to access namespaced code and make its identity clear when you use it.

Note

Another approach for avoiding imported name conflicts is to always access names by their highest unique module name.

For the example above, you’d first import analysis.utils and import frontend.utils. Then, you’d access the inspect functions with the full paths of analysis.utils.inspect and

frontend.utils.inspect.

This approach allows you to avoid the as clause altogether. It also makes it abundantly clear to new readers of the code where each function is defined.
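Both techniques can be tried with the standard library, where `json` and `pickle` each define a function named `dumps`. These modules stand in for the hypothetical `analysis.utils` and `frontend.utils`, so the sketch runs without any extra files.

```python
import json
import pickle
from json import dumps as json_dumps
from pickle import dumps as pickle_dumps

record = {'bucket': 5}

# Option 1: rename each function with the 'as' clause on import.
text = json_dumps(record)
blob = pickle_dumps(record)

# Option 2: import the modules and spell out the full path at each call.
same_text = json.dumps(record)
restored = pickle.loads(pickle.dumps(record))

print(text, same_text == text, restored == record)
```

Either way, neither `dumps` overwrites the other, and a reader can tell at the call site which module each function came from.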

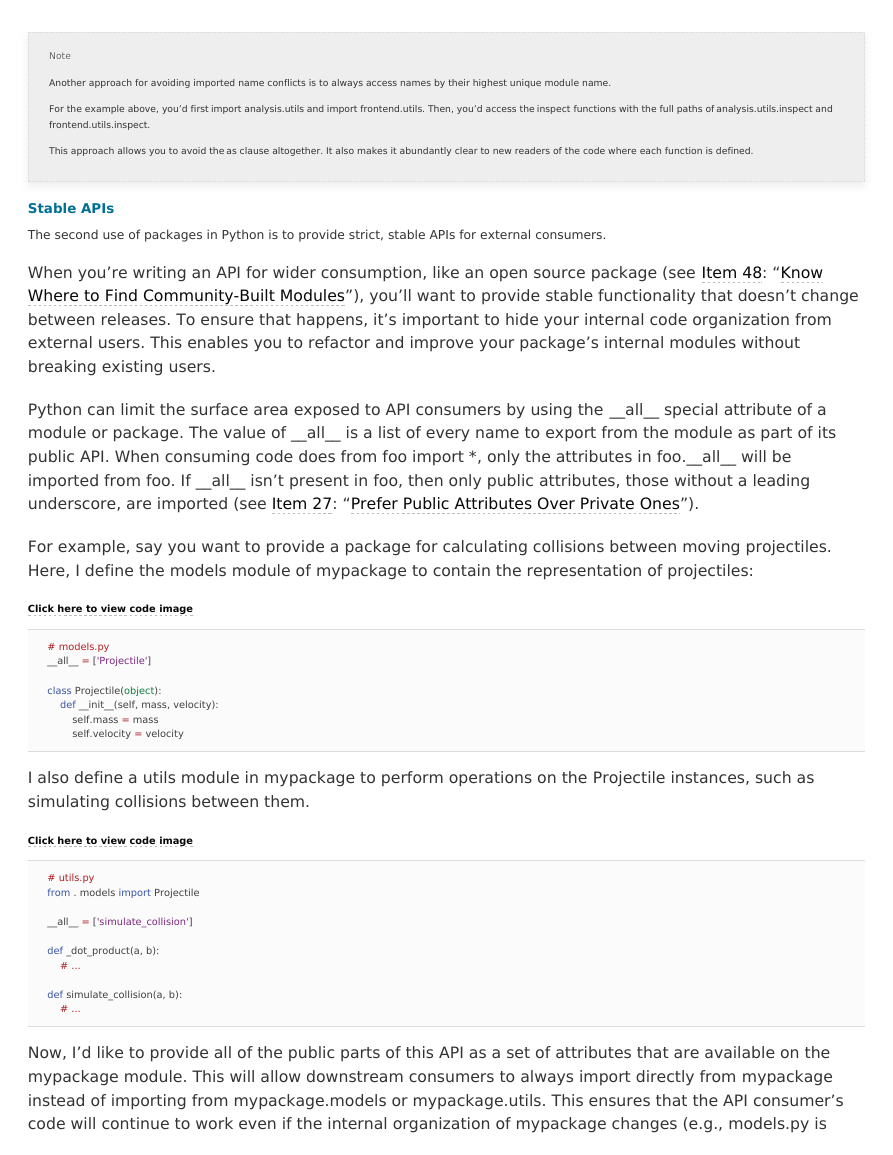

Stable APIs

The second use of packages in Python is to provide strict, stable APIs for external consumers.

When you’re writing an API for wider consumption, like an open source package (see Item 48: “Know

Where to Find Community-Built Modules”), you’ll want to provide stable functionality that doesn’t change

between releases. To ensure that happens, it’s important to hide your internal code organization from

external users. This enables you to refactor and improve your package’s internal modules without

breaking existing users.

Python can limit the surface area exposed to API consumers by using the __all__ special attribute of a

module or package. The value of __all__ is a list of every name to export from the module as part of its

public API. When consuming code does from foo import *, only the attributes in foo.__all__ will be

imported from foo. If __all__ isn’t present in foo, then only public attributes, those without a leading

underscore, are imported (see Item 27: “Prefer Public Attributes Over Private Ones”).

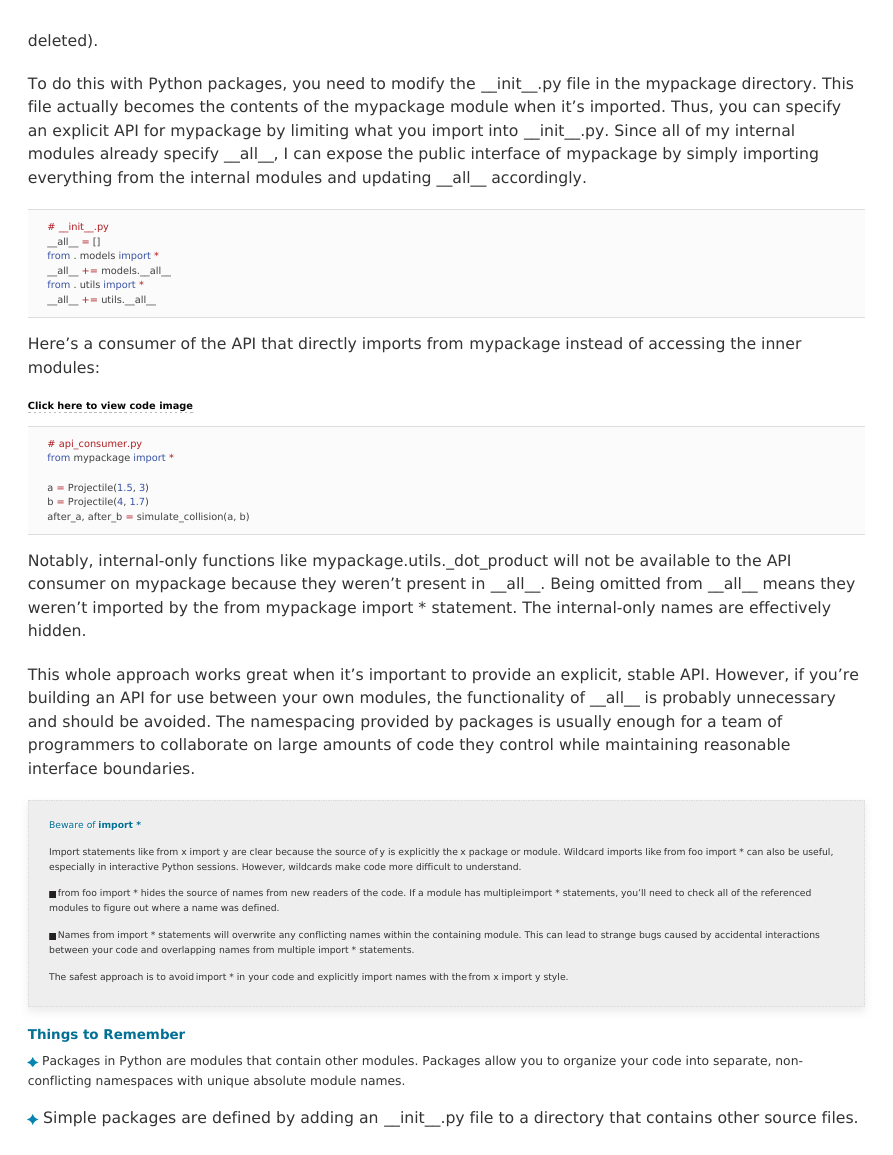

For example, say you want to provide a package for calculating collisions between moving projectiles.

Here, I define the models module of mypackage to contain the representation of projectiles:

    # models.py
    __all__ = ['Projectile']

    class Projectile(object):
        def __init__(self, mass, velocity):
            self.mass = mass
            self.velocity = velocity

I also define a utils module in mypackage to perform operations on the Projectile instances, such as

simulating collisions between them.

    # utils.py
    from .models import Projectile

    __all__ = ['simulate_collision']

    def _dot_product(a, b):
        # ...

    def simulate_collision(a, b):
        # ...

Now, I’d like to provide all of the public parts of this API as a set of attributes that are available on the

mypackage module. This will allow downstream consumers to always import directly from mypackage

instead of importing from mypackage.models or mypackage.utils. This ensures that the API consumer’s

code will continue to work even if the internal organization of mypackage changes (e.g., models.py is


deleted).

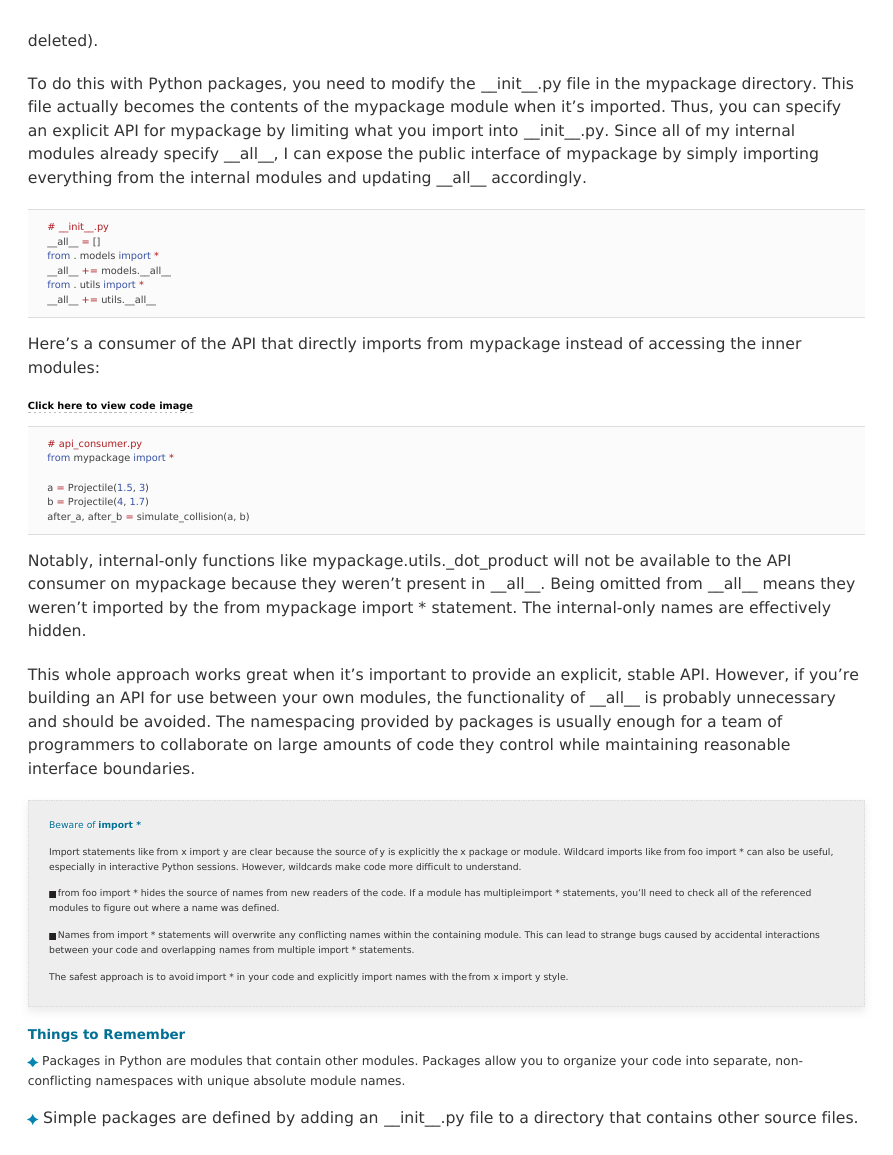

To do this with Python packages, you need to modify the __init__.py file in the mypackage directory. This

file actually becomes the contents of the mypackage module when it’s imported. Thus, you can specify

an explicit API for mypackage by limiting what you import into __init__.py. Since all of my internal

modules already specify __all__, I can expose the public interface of mypackage by simply importing

everything from the internal modules and updating __all__ accordingly.

    # __init__.py
    __all__ = []
    from .models import *
    __all__ += models.__all__
    from .utils import *
    __all__ += utils.__all__

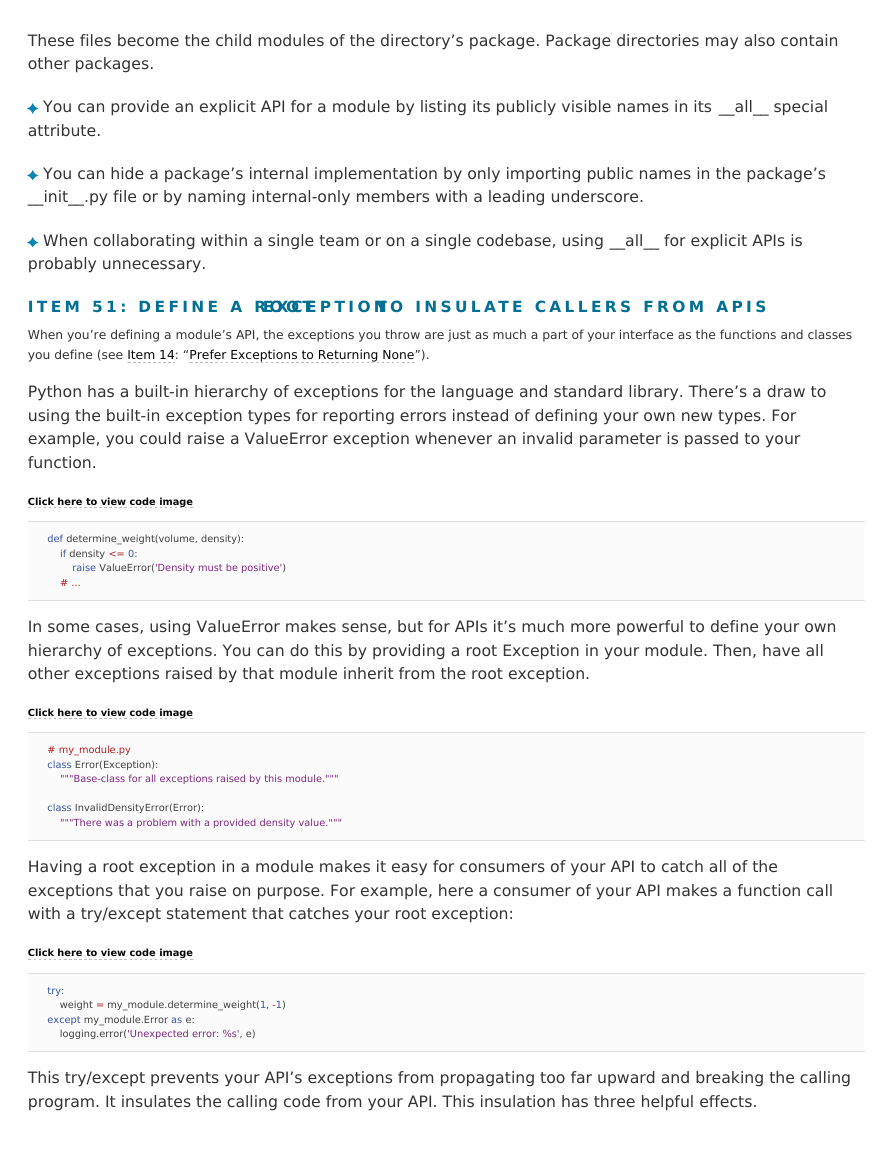

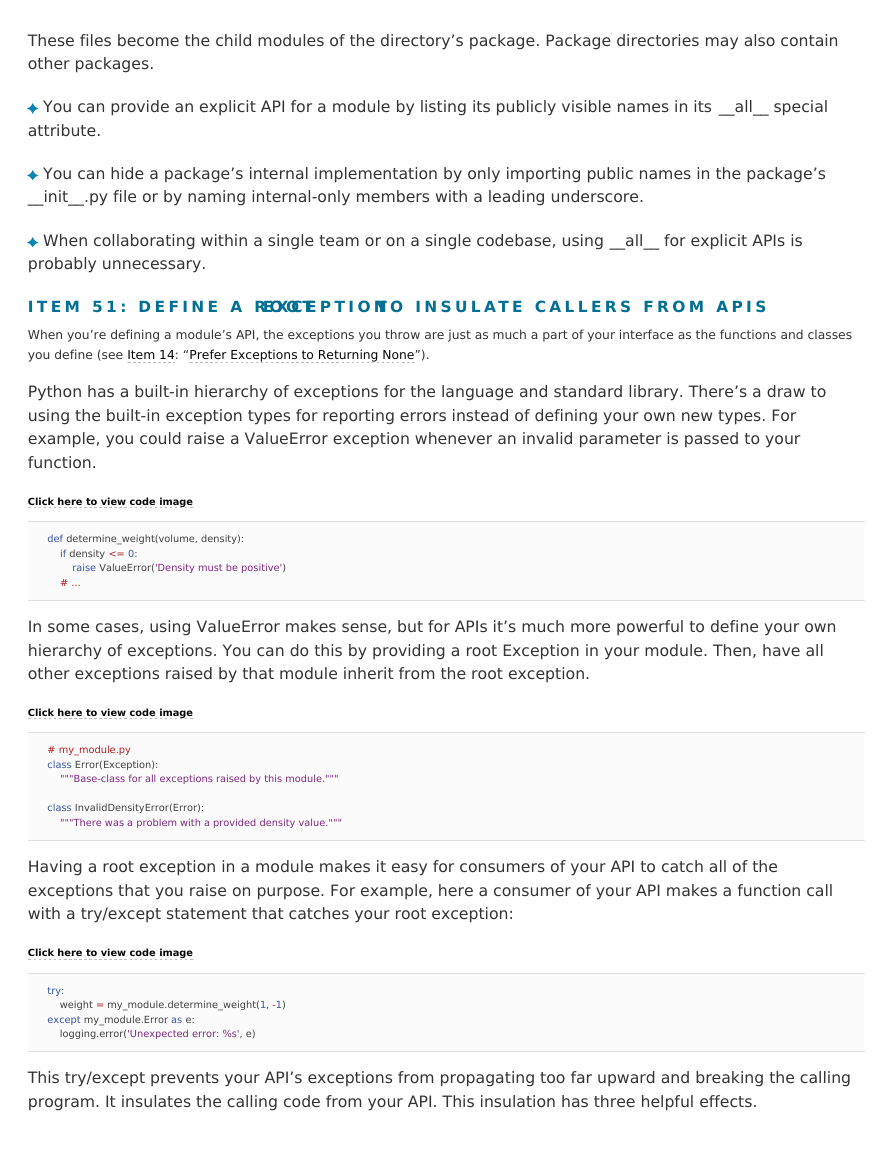

Here’s a consumer of the API that directly imports from mypackage instead of accessing the inner

modules:

    # api_consumer.py
    from mypackage import *

    a = Projectile(1.5, 3)
    b = Projectile(4, 1.7)
    after_a, after_b = simulate_collision(a, b)

Notably, internal-only functions like mypackage.utils._dot_product will not be available to the API

consumer on mypackage because they weren’t present in __all__. Being omitted from __all__ means they

weren’t imported by the from mypackage import * statement. The internal-only names are effectively

hidden.
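The hiding effect can be checked with a small experiment. The sketch below writes a throwaway copy of the three files to a temporary directory under the hypothetical name `mypackage_demo`, then lists what a wildcard import actually binds. The bodies of `_dot_product` and `simulate_collision` are placeholders assumed for illustration, since the originals are elided.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Source for a throwaway package. The function bodies are placeholders,
# not the real collision logic.
FILES = {
    'models.py': (
        "__all__ = ['Projectile']\n"
        "\n"
        "class Projectile:\n"
        "    def __init__(self, mass, velocity):\n"
        "        self.mass = mass\n"
        "        self.velocity = velocity\n"
    ),
    'utils.py': (
        "from .models import Projectile\n"
        "\n"
        "__all__ = ['simulate_collision']\n"
        "\n"
        "def _dot_product(a, b):\n"
        "    return sum(x * y for x, y in zip(a, b))\n"
        "\n"
        "def simulate_collision(a, b):\n"
        "    return a, b  # Placeholder: no physics is modeled here.\n"
    ),
    '__init__.py': (
        "__all__ = []\n"
        "from .models import *\n"
        "__all__ += models.__all__\n"
        "from .utils import *\n"
        "__all__ += utils.__all__\n"
    ),
}


def exported_names(package_name='mypackage_demo'):
    """Return the names that 'from <package> import *' would bind."""
    with tempfile.TemporaryDirectory() as root:
        package_dir = Path(root) / package_name
        package_dir.mkdir()
        for filename, source in FILES.items():
            (package_dir / filename).write_text(source)
        sys.path.insert(0, root)
        try:
            importlib.invalidate_caches()
            namespace = {}
            # Wildcard imports are only legal at module level, so run
            # the statement against a fresh namespace dictionary.
            exec('from %s import *' % package_name, namespace)
        finally:
            sys.path.remove(root)
    # Drop dunder entries such as __builtins__ that exec adds.
    return sorted(n for n in namespace if not n.startswith('__'))


names = exported_names()
print(names)
```

The private helper is still reachable through its full module path for anyone who goes looking, so `__all__` documents intent rather than enforcing access control.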

This whole approach works great when it’s important to provide an explicit, stable API. However, if you’re

building an API for use between your own modules, the functionality of __all__ is probably unnecessary

and should be avoided. The namespacing provided by packages is usually enough for a team of

programmers to collaborate on large amounts of code they control while maintaining reasonable

interface boundaries.

Beware of import *


Import statements like from x import y are clear because the source of y is explicitly the x package or module. Wildcard imports like from foo import * can also be useful,

especially in interactive Python sessions. However, wildcards make code more difficult to understand.

from foo import * hides the source of names from new readers of the code. If a module has multiple import * statements, you’ll need to check all of the referenced

modules to figure out where a name was defined.

Names from import * statements will overwrite any conflicting names within the containing module. This can lead to strange bugs caused by accidental interactions

between your code and overlapping names from multiple import * statements.

The safest approach is to avoid import * in your code and explicitly import names with the from x import y style.
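The overwriting hazard is easy to reproduce with two standard library modules that both define `sqrt`. This sketch runs the wildcard imports against a scratch namespace dictionary so the effect can be inspected. In ordinary code the same thing happens silently at module level.

```python
import cmath
import math

namespace = {}

# After the first wildcard import, sqrt refers to math.sqrt.
exec('from math import *', namespace)
first_sqrt = namespace['sqrt']

# The second wildcard import silently rebinds the same name.
exec('from cmath import *', namespace)
second_sqrt = namespace['sqrt']

print(first_sqrt is math.sqrt)
print(second_sqrt is cmath.sqrt)

# Explicit imports make the origin of each name visible and distinct.
from cmath import sqrt as complex_sqrt
from math import sqrt as real_sqrt
```

With the explicit form, both functions stay available side by side and nothing depends on the order of the import statements.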

Things to Remember

Packages in Python are modules that contain other modules. Packages allow you to organize your code into separate, non-conflicting namespaces with unique absolute module names.

Simple packages are defined by adding an __init__.py file to a directory that contains other source files.


These files become the child modules of the directory’s package. Package directories may also contain

other packages.

You can provide an explicit API for a module by listing its publicly visible names in its __all__ special

attribute.

You can hide a package’s internal implementation by only importing public names in the package’s

__init__.py file or by naming internal-only members with a leading underscore.

When collaborating within a single team or on a single codebase, using __all__ for explicit APIs is

probably unnecessary.

Item 51: Define a Root Exception to Insulate Callers from APIs

When you’re defining a module’s API, the exceptions you raise are just as much a part of your interface as the functions and classes

you define (see Item 14: “Prefer Exceptions to Returning None”).

Python has a built-in hierarchy of exceptions for the language and standard library. There’s a draw to

using the built-in exception types for reporting errors instead of defining your own new types. For

example, you could raise a ValueError exception whenever an invalid parameter is passed to your

function.

    def determine_weight(volume, density):
        if density <= 0:
            raise ValueError('Density must be positive')
        # ...

In some cases, using ValueError makes sense, but for APIs it’s much more powerful to define your own

hierarchy of exceptions. You can do this by providing a root Exception in your module. Then, have all

other exceptions raised by that module inherit from the root exception.

    # my_module.py
    class Error(Exception):
        """Base-class for all exceptions raised by this module."""

    class InvalidDensityError(Error):
        """There was a problem with a provided density value."""

Having a root exception in a module makes it easy for consumers of your API to catch all of the

exceptions that you raise on purpose. For example, here a consumer of your API makes a function call

with a try/except statement that catches your root exception:

    import logging

    import my_module

    try:
        weight = my_module.determine_weight(1, -1)
    except my_module.Error as e:
        logging.error('Unexpected error: %s', e)
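Putting the pieces into one file gives a runnable sketch of the pattern. Here `determine_weight` raises the module-specific `InvalidDensityError` instead of `ValueError`. The `volume * density` calculation and the `describe_weight` caller are assumptions added for illustration, since the original function body is elided.

```python
class Error(Exception):
    """Base-class for all exceptions raised by this module."""


class InvalidDensityError(Error):
    """There was a problem with a provided density value."""


def determine_weight(volume, density):
    """Return the weight for a volume, rejecting non-positive densities."""
    if density <= 0:
        raise InvalidDensityError('Density must be positive')
    return volume * density  # Placeholder calculation, assumed here.


def describe_weight(volume, density):
    """Hypothetical caller that is insulated by catching the root Error."""
    try:
        return 'weight=%r' % determine_weight(volume, density)
    except Error as e:
        # Any exception the module raises on purpose lands here.
        return 'rejected: %s' % e


print(describe_weight(2, 3))
print(describe_weight(1, -1))
```

Because `InvalidDensityError` inherits from `Error`, the caller does not need to know about each specific exception type for the handler to catch it.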

This try/except prevents your API’s exceptions from propagating too far upward and breaking the calling

program. It insulates the calling code from your API. This insulation has three helpful effects.
