Random Forests and Ferns

David Capel

�

The Multi-class Classification Problem

Fergus, Zisserman and Perona

276

276

Fergus, Zisserman and Perona

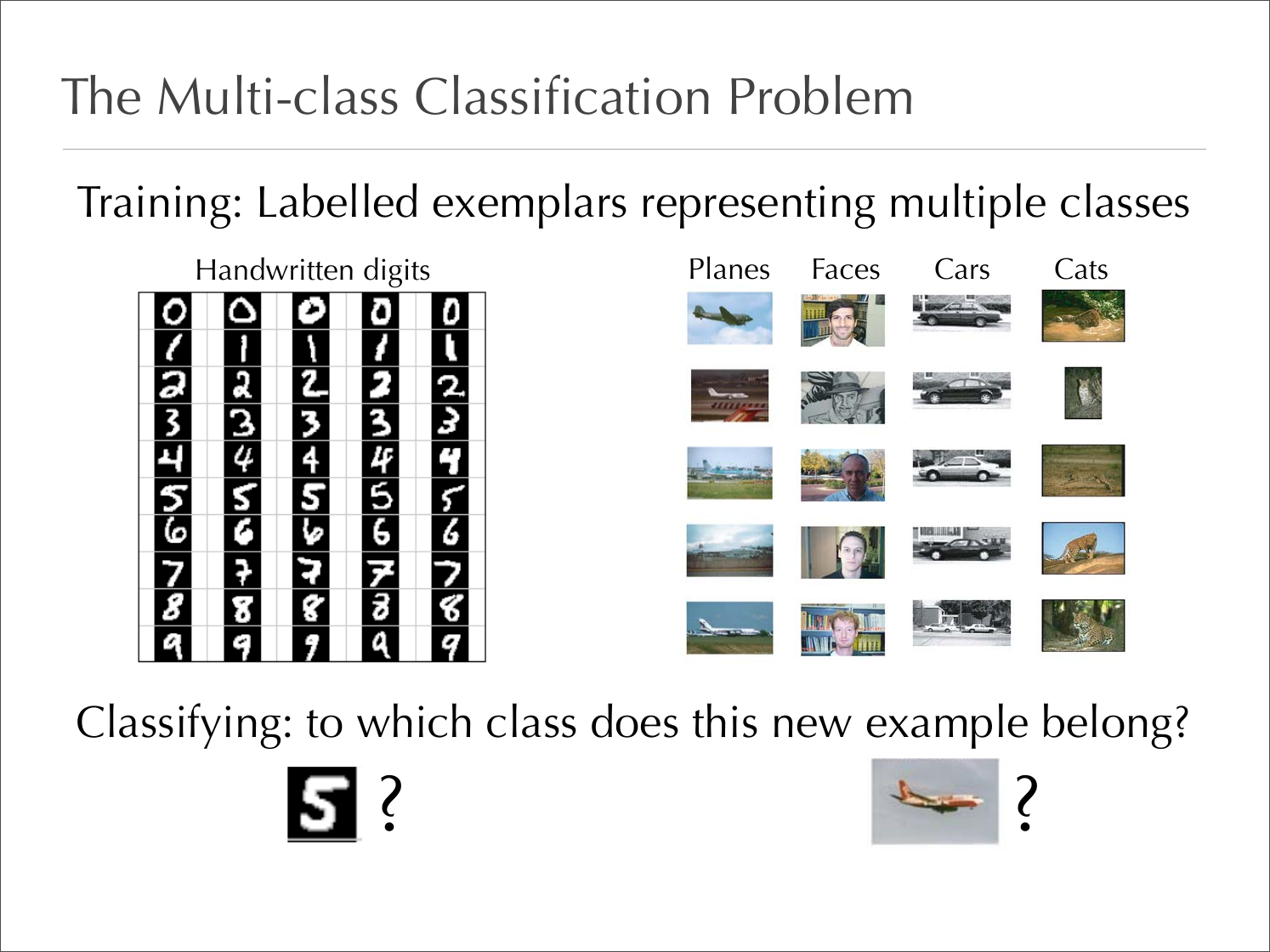

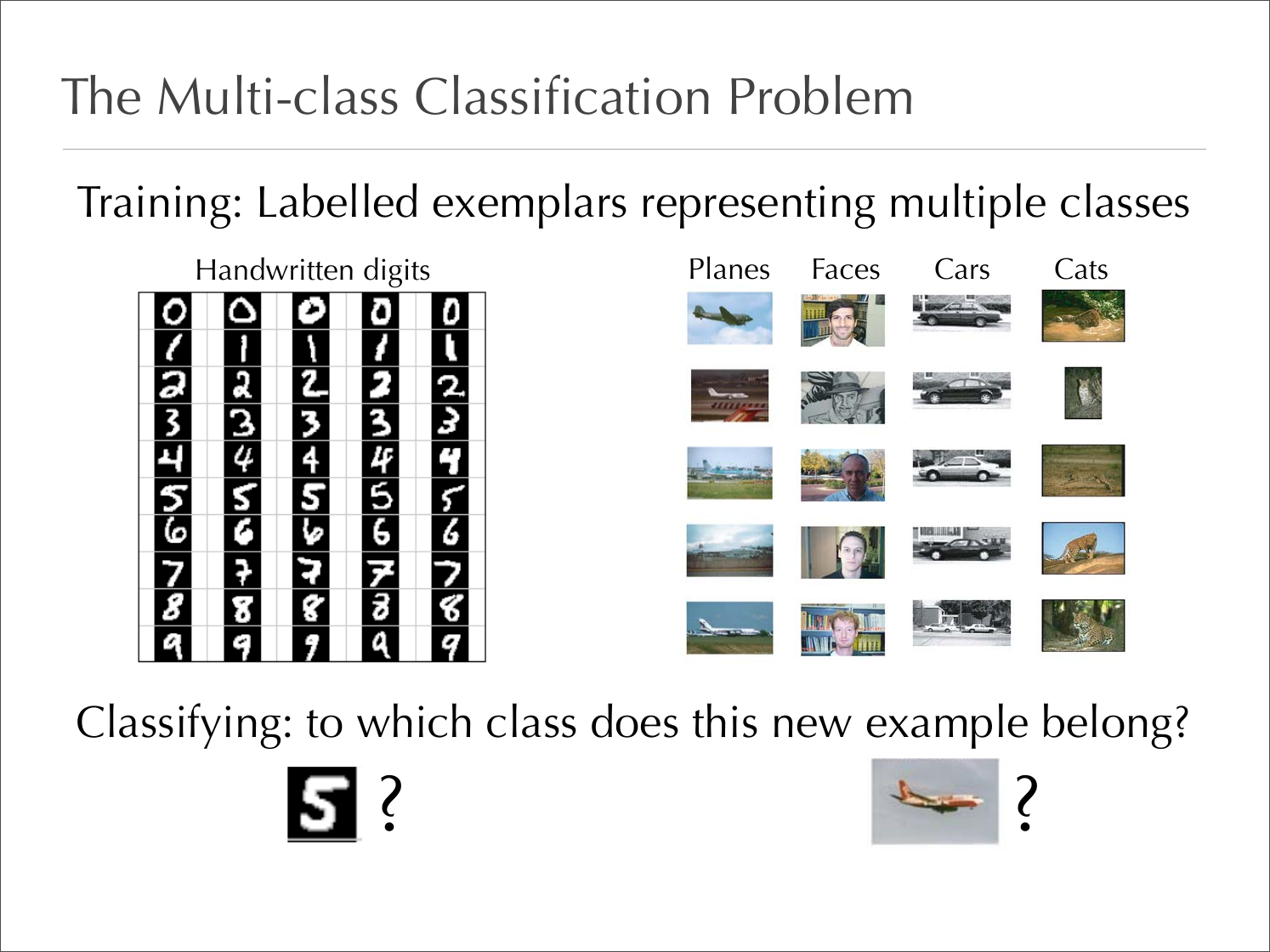

Training: Labelled exemplars representing multiple classes

Handwritten digits

Planes

Faces

Cars

Cats

276

Fergus, Zisserman and Perona

Classifying: to which class does this new example belong?

?

?

Figure 1. Some sample images from the datasets. Note the large variation in scale in, for example, the cars (rear) database. These datasets are

from both http://www.vision. caltech.edu/html-files/archive.html and http://www.robots. ox.ac.uk vgg/data/, except for the Cars (Side) from

(http://l2r.cs.uiuc.edu/ cogcomp/ index research.html) and Spotted Cats from the Corel Image library. A Powerpoint presentation of the figures

Figure 1. Some sample images from the datasets. Note the large variation in scale in, for example, the cars (rear) database. These datasets are

from both http://www.vision. caltech.edu/html-files/archive.html and http://www.robots. ox.ac.uk vgg/data/, except for the Cars (Side) from

(http://l2r.cs.uiuc.edu/ cogcomp/ index research.html) and Spotted Cats from the Corel Image library. A Powerpoint presentation of the figures

�

The Multi-class Classification Problem

• A classifier is a mapping H from feature vectors f to discrete

class labels C

f = (f1, f2, ..., fN)

C ∈ {c1, c2, ..., cK}

H : f → C

P (C = ci|f1, f2, ..., fN)

• Numerous choices of feature space F are possible, e.g.

H(f) = arg max

P (C = ci|f1, f2, ..., fN)

i

Dm = (f m, Cm)

for m = 1...M

Raw pixel values

Gij =

Texton histograms

1

2! 1

n"k

(d2

ik + d2

kj) − d2

ij −

Color histograms Oriented filter banks

kl#

d2

1

n2"kl

�

C ∈ {c1, c2, ..., cK}

H : f → C

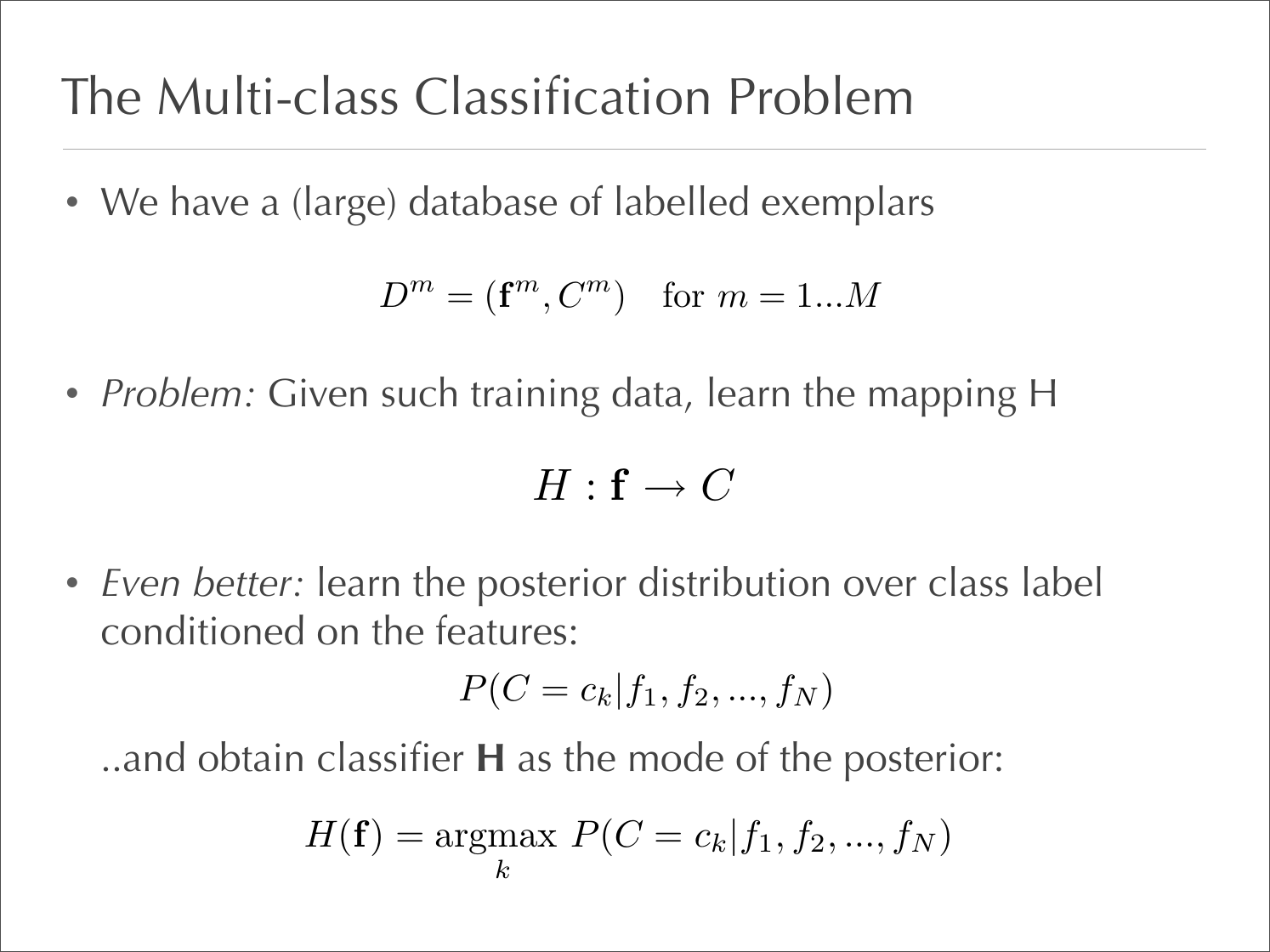

The Multi-class Classification Problem

P (C = ci|f1, f2, ..., fN)

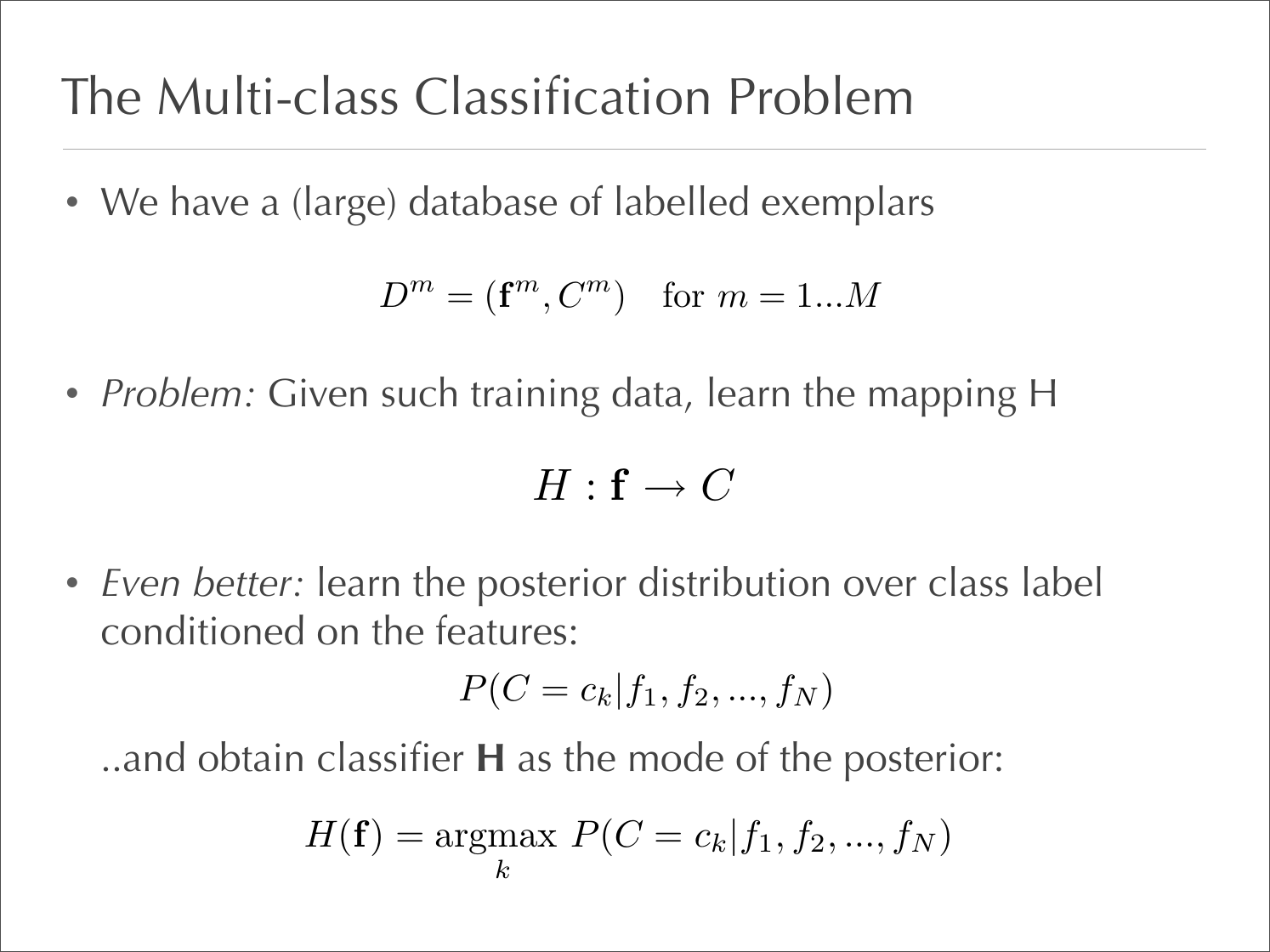

• We have a (large) database of labelled exemplars

P (C = ci|f1, f2, ..., fN)

H(f) = arg max

i

Dm = (f m, Cm)

for m = 1...M

• Problem: Given such training data, learn the mapping H

1

Gij =

• Even better: learn the posterior distribution over class label

H(f) = arg max

conditioned on the features:

..and obtain classifier H as the mode of the posterior:

1

kl#

d2

f = (f1, f2, ..., fN)

2! 1

n"k

n2"kl

C ∈ {c1, c2, ..., cK}

kj) − d2

ik + d2

(d2

ij −

H : f → C

f = (f1, f2, ..., fN)

P (C = ci|f1, f2, ..., fN)

C ∈ {c1, c2, ..., cK}

f = (f1, f2, ..., fN)

err(y) ="ij $Gij − y!i yj%2

C ∈ {c1, c2, ..., cK}

i

P (C = ck|f1, f2, ..., fN)

P (C = ck|f1, f2, ..., fN)

yαi =&λαvαi

2! 1

n"k

P (C = ci|f1, f2, ..., fN)

H : f → C

H : f → C

P (C = ck|f1, f2, ..., fN)

for m = 1...M

P (C = ck|f1, f2, ..., fN)

for m = 1...M

1

kj) − d2

Dm = (f m, Cm)

ik + d2

n2"kl

H(f) = argmax

Dm = (f m, Cm)

H(f) = argmax

k

for α =1,2,...,d

ij −

(d2

k

kl#

d2

1

Gij =

�

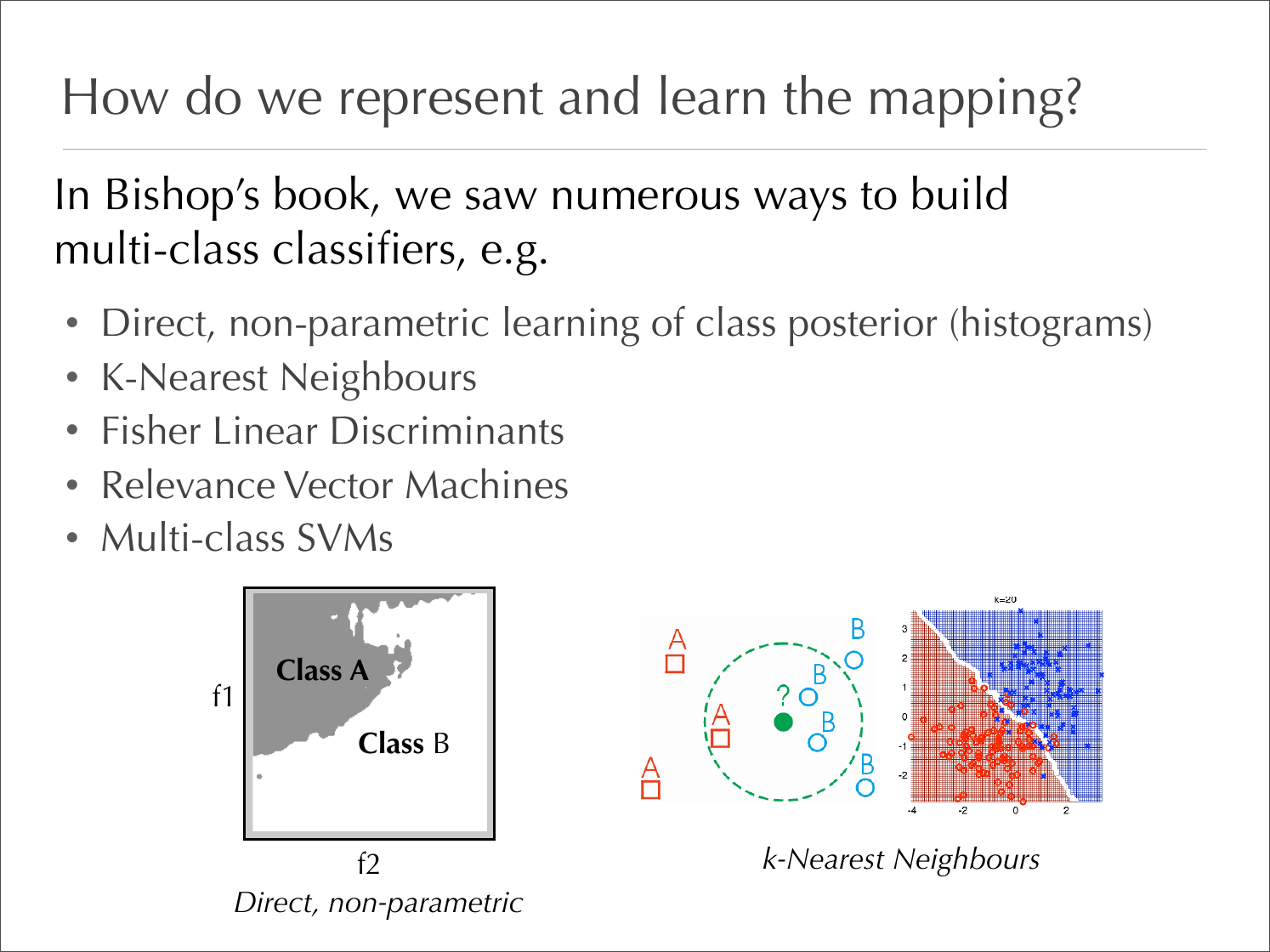

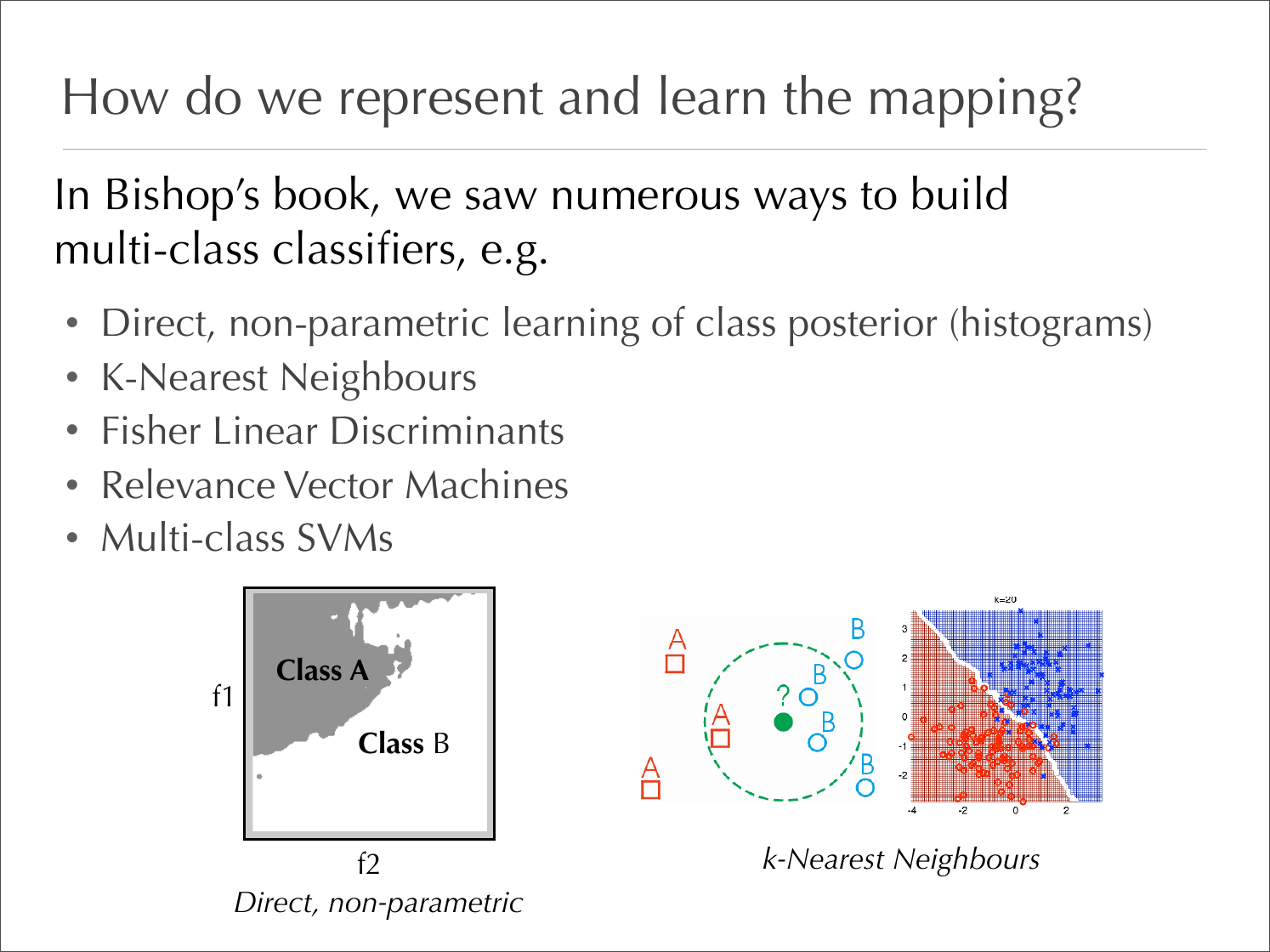

How do we represent and learn the mapping?

In Bishop’s book, we saw numerous ways to build

multi-class classifiers, e.g.

• Direct, non-parametric learning of class posterior (histograms)

• K-Nearest Neighbours

• Fisher Linear Discriminants

• Relevance Vector Machines

• Multi-class SVMs

y

t

i

s

n

e

n

t

f1

i

l

i

e

x

p

e

g

d

E

Class A

Class B

0.2 0.4 0.6 0.8

1

1 ! Specificity

(c)

Center pixel intensity

Direct, non-parametric

f2

(d)

k-Nearest Neighbours

�

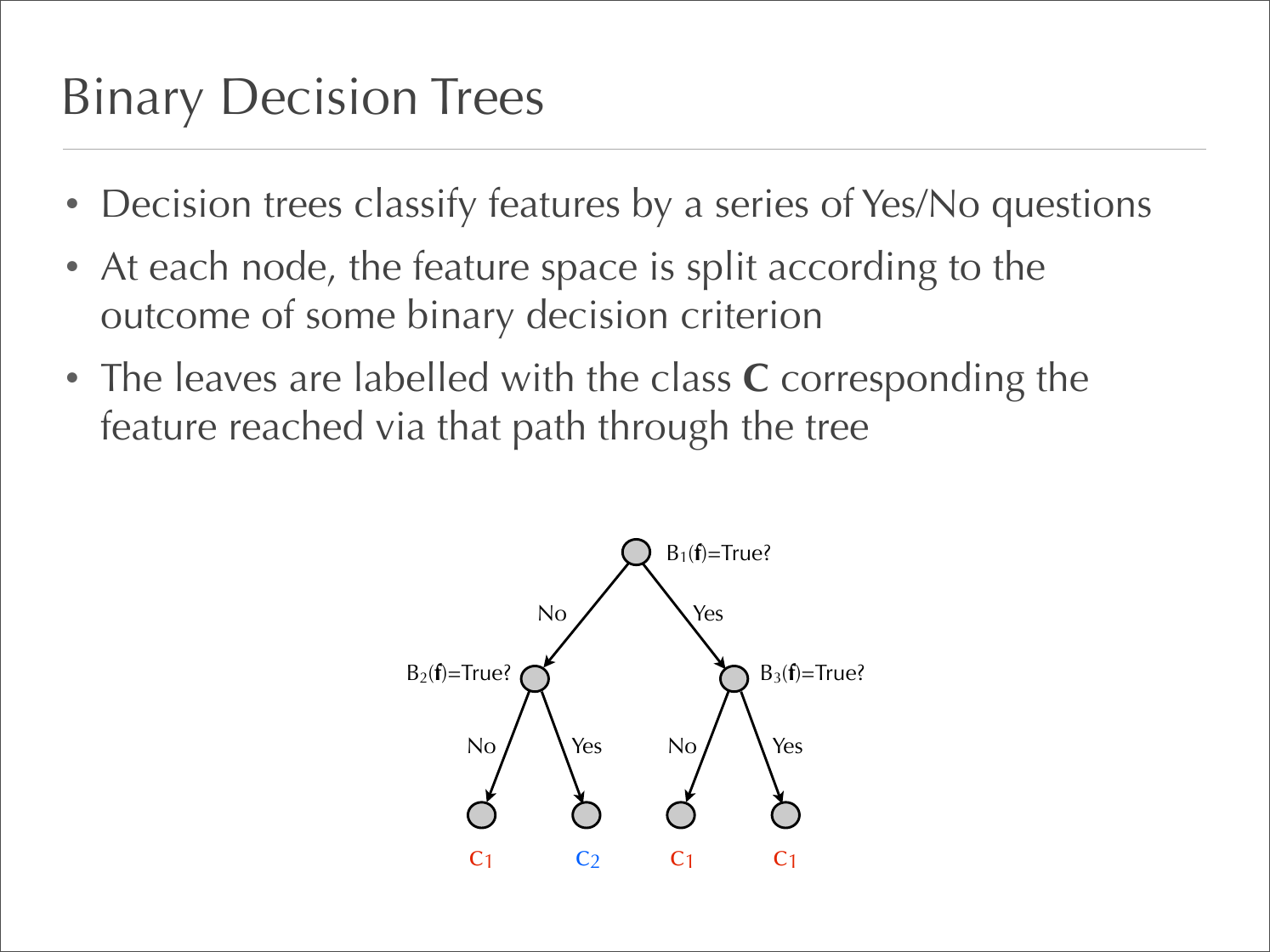

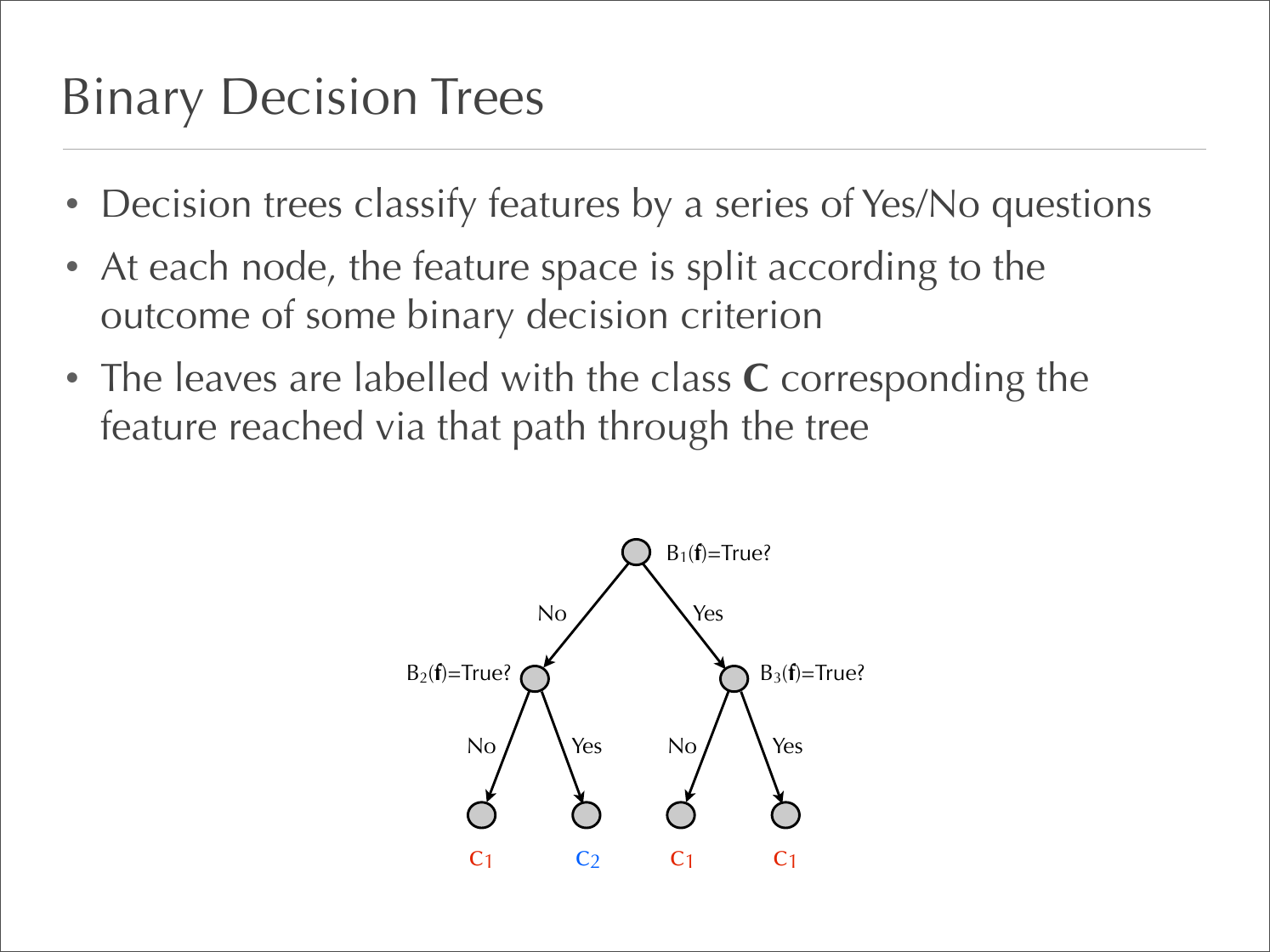

Binary Decision Trees

• Decision trees classify features by a series of Yes/No questions

• At each node, the feature space is split according to the

outcome of some binary decision criterion

• The leaves are labelled with the class C corresponding the

feature reached via that path through the tree

B1(f)=True?

No

Yes

B2(f)=True?

B3(f)=True?

No

Yes

No

Yes

c1

c2

c1

c1

�

Binary Decision Trees

• Decision trees classify features by a series of Yes/No questions

• At each node, the feature space is split according to the

outcome of some binary decision criterion

• The leaves are labelled with the class C corresponding the

feature reached via that path through the tree

f

B1(f)=True?

B1(f)=True?

No

Yes

B2(f)=True?

B3(f)=True?

No

Yes

No

Yes

c1

c2

c1

c1

�

Binary Decision Trees

• Decision trees classify features by a series of Yes/No questions

• At each node, the feature space is split according to the

outcome of some binary decision criterion

• The leaves are labelled with the class C corresponding the

feature reached via that path through the tree

f

B1(f)=True?

B1(f)=True?

No

Yes

Yes

B2(f)=True?

B3(f)=True?

B3(f)=True?

No

Yes

No

Yes

c1

c2

c1

c1

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc