Applied Numerical Linear Algebra

应用数值线性代数

[美]James W. Demmel 著

SIAM 1997

�

Contents

1 Introduction

1.1 Basic Notation . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.2 Standard Problems of Numerical Linear Algebra . . . . . . . .

1.3 General Techniques . . . . . . . . . . . . . . . . . . . . . . . . .

1.3.1 Matrix Factorizations

. . . . . . . . . . . . . . . . . . .

1.3.2 Perturbation Theory and Condition Numbers . . . . . .

1.3.3 Efiects of Roundofi Error on Algorithms . . . . . . . . .

1.3.4 Analyzing the Speed of Algorithms . . . . . . . . . . . .

1.3.5 Engineering Numerical Software

. . . . . . . . . . . . .

1.4 Example: Polynomial Evaluation . . . . . . . . . . . . . . . . .

1.5 Floating Point Arithmetic . . . . . . . . . . . . . . . . . . . . .

1.6 Polynomial Evaluation Revisited . . . . . . . . . . . . . . . . .

1.7 Vector and Matrix Norms . . . . . . . . . . . . . . . . . . . . .

1.8 References and Other Topics for Chapter 1 . . . . . . . . . . .

1.9 Questions for Chapter 1 . . . . . . . . . . . . . . . . . . . . . .

2 Linear Equation Solving

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.1

2.2 Perturbation Theory . . . . . . . . . . . . . . . . . . . . . . . .

2.2.1 Relative Perturbation Theory . . . . . . . . . . . . . . .

2.3 Gaussian Elimination . . . . . . . . . . . . . . . . . . . . . . . .

2.4 Error Analysis

. . . . . . . . . . . . . . . . . . . . . . . . . . .

2.4.1 The Need for Pivoting . . . . . . . . . . . . . . . . . . .

2.4.2 Formal Error Analysis of Gaussian Elimination . . . . .

2.4.3 Estimating Condition Numbers . . . . . . . . . . . . . .

2.4.4 Practical Error Bounds

. . . . . . . . . . . . . . . . . .

Improving the Accuracy of a Solution . . . . . . . . . . . . . .

2.5.1

Single Precision Iterative Reflnement . . . . . . . . . . .

2.5.2 Equilibration . . . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . .

2.6.1 Basic Linear Algebra Subroutines (BLAS) . . . . . . . .

2.6.2 How to Optimize Matrix Multiplication . . . . . . . . .

2.6.3 Reorganizing Gaussian Elimination to use Level 3 BLAS

2.6.4 More About Parallelism and Other Performance Issues .

2.7 Special Linear Systems . . . . . . . . . . . . . . . . . . . . . . .

2.7.1 Real Symmetric Positive Deflnite Matrices . . . . . . . .

2.6 Blocking Algorithms for Higher Performance

2.5

1

1

1

2

3

4

4

5

6

7

9

15

19

23

24

31

31

32

35

38

44

45

46

50

54

60

61

62

63

65

67

72

75

76

76

v

�

vi

Symmetric Indeflnite Matrices

. . . . . . . . . . . . . .

2.7.2

2.7.3 Band Matrices

. . . . . . . . . . . . . . . . . . . . . . .

2.7.4 General Sparse Matrices . . . . . . . . . . . . . . . . . .

2.7.5 Dense Matrices Depending on Fewer Than O(n2) Pa-

rameters . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.8 References and Other Topics for Chapter 2 . . . . . . . . . . .

2.9 Questions for Chapter 2 . . . . . . . . . . . . . . . . . . . . . .

79

79

83

90

92

93

3 Linear Least Squares Problems

101

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

3.1

3.2 Matrix Factorizations That Solve the Linear Least Squares Prob-

lem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

3.2.1 Normal Equations

. . . . . . . . . . . . . . . . . . . . . 106

3.2.2 QR Decomposition . . . . . . . . . . . . . . . . . . . . . 107

3.2.3

Singular Value Decomposition . . . . . . . . . . . . . . . 109

3.3 Perturbation Theory for the Least Squares Problem . . . . . . 117

3.4 Orthogonal Matrices . . . . . . . . . . . . . . . . . . . . . . . . 118

3.4.1 Householder Transformations . . . . . . . . . . . . . . . 119

3.4.2 Givens Rotations . . . . . . . . . . . . . . . . . . . . . . 121

3.4.3 Roundofi Error Analysis for Orthogonal Matrices . . . . 123

3.4.4 Why Orthogonal Matrices? . . . . . . . . . . . . . . . . 124

. . . . . . . . . . . . . 125

3.5 Rank-Deflcient Least Squares Problems

3.5.1

3.5.2

Solving Rank-Deflcient Least Squares Problems Using

the SVD . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

Solving Rank-Deflcient Least Squares Problems Using

QR with Pivoting

. . . . . . . . . . . . . . . . . . . . . 130

3.6 Performance Comparison of Methods for Solving Least Squares

Problems

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

3.7 References and Other Topics for Chapter 3 . . . . . . . . . . . 134

3.8 Questions for Chapter 3 . . . . . . . . . . . . . . . . . . . . . . 134

4 Nonsymmetric Eigenvalue Problems

139

4.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

4.2 Canonical Forms . . . . . . . . . . . . . . . . . . . . . . . . . . 140

4.2.1 Computing Eigenvectors from the Schur Form . . . . . . 148

4.3 Perturbation Theory . . . . . . . . . . . . . . . . . . . . . . . . 148

4.4 Algorithms for the Nonsymmetric Eigenproblem . . . . . . . . 153

4.4.1 Power Method . . . . . . . . . . . . . . . . . . . . . . . 154

4.4.2

Inverse Iteration . . . . . . . . . . . . . . . . . . . . . . 155

4.4.3 Orthogonal Iteration . . . . . . . . . . . . . . . . . . . . 156

4.4.4 QR Iteration . . . . . . . . . . . . . . . . . . . . . . . . 159

4.4.5 Making QR Iteration Practical

. . . . . . . . . . . . . . 163

4.4.6 Hessenberg Reduction . . . . . . . . . . . . . . . . . . . 164

4.4.7 Tridiagonal and Bidiagonal Reduction . . . . . . . . . . 166

�

vii

4.4.8 QR Iteration with Implicit Shifts . . . . . . . . . . . . . 167

4.5 Other Nonsymmetric Eigenvalue Problems . . . . . . . . . . . . 173

4.5.1 Regular Matrix Pencils and Weierstrass Canonical Form 173

4.5.2

Singular Matrix Pencils and the Kronecker

Canonical Form . . . . . . . . . . . . . . . . . . . . . . . 179

4.5.3 Nonlinear Eigenvalue Problems . . . . . . . . . . . . . . 182

4.6 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

4.7 References and Other Topics for Chapter 4 . . . . . . . . . . . 187

4.8 Questions for Chapter 4 . . . . . . . . . . . . . . . . . . . . . . 187

5 The Symmetric Eigenproblem and Singular Value Decompo-

195

sition

5.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

5.2 Perturbation Theory . . . . . . . . . . . . . . . . . . . . . . . . 197

5.2.1 Relative Perturbation Theory . . . . . . . . . . . . . . . 207

5.3 Algorithms for the Symmetric Eigenproblem . . . . . . . . . . . 210

5.3.1 Tridiagonal QR Iteration . . . . . . . . . . . . . . . . . 212

5.3.2 Rayleigh Quotient Iteration . . . . . . . . . . . . . . . . 215

5.3.3 Divide-and-Conquer . . . . . . . . . . . . . . . . . . . . 217

5.3.4 Bisection and Inverse Iteration . . . . . . . . . . . . . . 228

5.3.5

Jacobi’s Method . . . . . . . . . . . . . . . . . . . . . . 232

5.3.6 Performance Comparison . . . . . . . . . . . . . . . . . 236

5.4 Algorithms for the Singular Value Decomposition . . . . . . . . 237

5.4.1 QR Iteration and Its Variations for the Bidiagonal SVD 242

5.4.2 Computing the Bidiagonal SVD to High Relative Accuracy246

5.4.3

Jacobi’s Method for the SVD . . . . . . . . . . . . . . . 249

5.5 Difierential Equations and Eigenvalue Problems . . . . . . . . . 254

5.5.1 The Toda Lattice . . . . . . . . . . . . . . . . . . . . . . 255

5.5.2 The Connection to Partial Difierential Equations . . . . 260

5.6 References and Other Topics for Chapter 5 . . . . . . . . . . . 260

5.7 Questions for Chapter 5 . . . . . . . . . . . . . . . . . . . . . . 261

6 Iterative Methods for Linear Systems

265

6.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

6.2 On-line Help for Iterative Methods . . . . . . . . . . . . . . . . 266

6.3 Poisson’s Equation . . . . . . . . . . . . . . . . . . . . . . . . . 267

6.3.1 Poisson’s Equation in One Dimension . . . . . . . . . . 267

6.3.2 Poisson’s Equation in Two Dimensions . . . . . . . . . . 270

6.3.3 Expressing Poisson’s Equation with Kronecker Products 274

6.4 Summary of Methods for Solving Poisson’s Equation . . . . . . 277

6.5 Basic Iterative Methods . . . . . . . . . . . . . . . . . . . . . . 279

6.5.1

Jacobi’s Method . . . . . . . . . . . . . . . . . . . . . . 281

6.5.2 Gauss{Seidel Method . . . . . . . . . . . . . . . . . . . 282

6.5.3

Successive Overrelaxation . . . . . . . . . . . . . . . . . 283

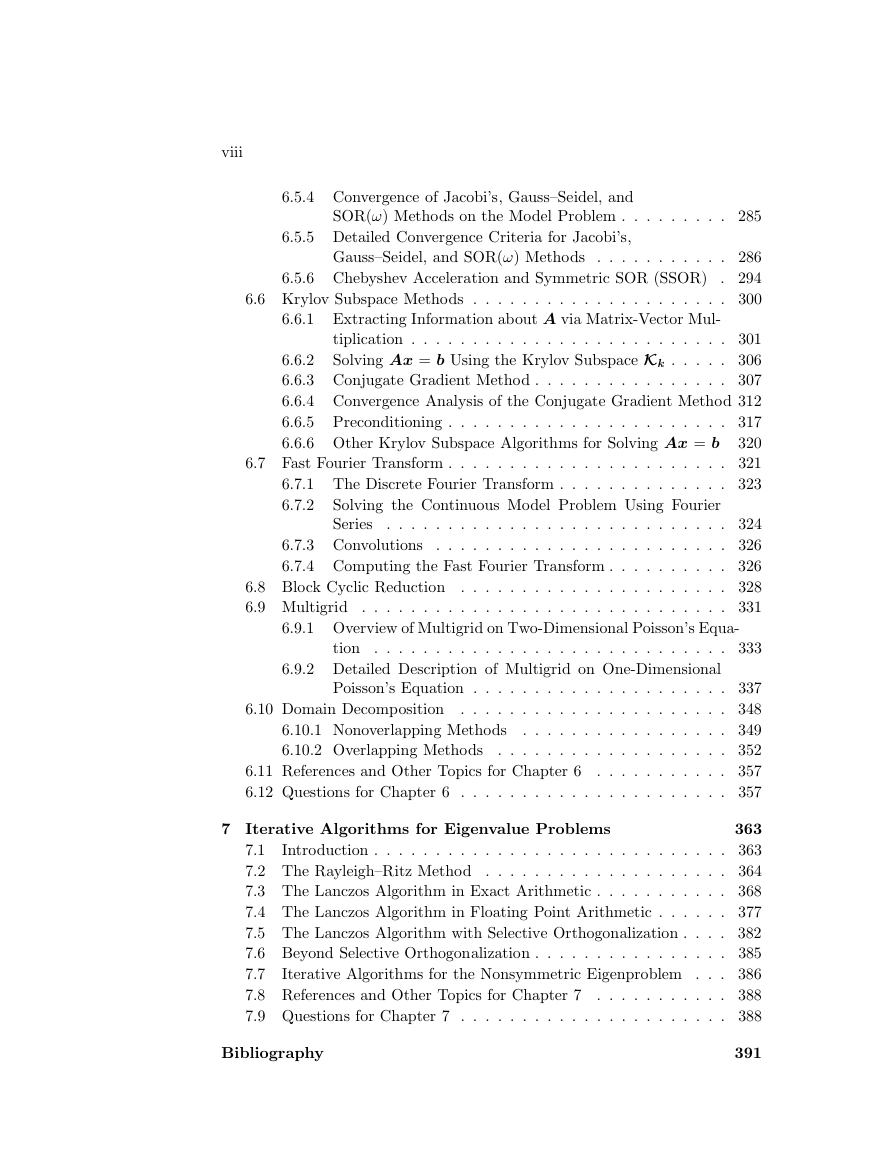

�

viii

6.5.4 Convergence of Jacobi’s, Gauss{Seidel, and

SOR(!) Methods on the Model Problem . . . . . . . . . 285

6.5.5 Detailed Convergence Criteria for Jacobi’s,

Gauss{Seidel, and SOR(!) Methods . . . . . . . . . . . 286

. 294

6.6 Krylov Subspace Methods . . . . . . . . . . . . . . . . . . . . . 300

6.5.6 Chebyshev Acceleration and Symmetric SOR (SSOR)

6.6.1 Extracting Information about A via Matrix-Vector Mul-

tiplication . . . . . . . . . . . . . . . . . . . . . . . . . . 301

Solving Ax = b Using the Krylov Subspace Kk . . . . . 306

6.6.2

6.6.3 Conjugate Gradient Method . . . . . . . . . . . . . . . . 307

6.6.4 Convergence Analysis of the Conjugate Gradient Method 312

6.6.5 Preconditioning . . . . . . . . . . . . . . . . . . . . . . . 317

6.6.6 Other Krylov Subspace Algorithms for Solving Ax = b

320

6.7 Fast Fourier Transform . . . . . . . . . . . . . . . . . . . . . . . 321

6.7.1 The Discrete Fourier Transform . . . . . . . . . . . . . . 323

6.7.2

Solving the Continuous Model Problem Using Fourier

Series

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 324

. . . . . . . . . . . . . . . . . . . . . . . . 326

6.7.3 Convolutions

6.7.4 Computing the Fast Fourier Transform . . . . . . . . . . 326

6.8 Block Cyclic Reduction . . . . . . . . . . . . . . . . . . . . . . 328

6.9 Multigrid . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

6.9.1 Overview of Multigrid on Two-Dimensional Poisson’s Equa-

tion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 333

6.9.2 Detailed Description of Multigrid on One-Dimensional

Poisson’s Equation . . . . . . . . . . . . . . . . . . . . . 337

6.10 Domain Decomposition . . . . . . . . . . . . . . . . . . . . . . 348

. . . . . . . . . . . . . . . . . 349

. . . . . . . . . . . . . . . . . . . 352

6.11 References and Other Topics for Chapter 6 . . . . . . . . . . . 357

6.12 Questions for Chapter 6 . . . . . . . . . . . . . . . . . . . . . . 357

6.10.1 Nonoverlapping Methods

6.10.2 Overlapping Methods

7 Iterative Algorithms for Eigenvalue Problems

363

7.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

7.2 The Rayleigh{Ritz Method . . . . . . . . . . . . . . . . . . . . 364

7.3 The Lanczos Algorithm in Exact Arithmetic . . . . . . . . . . . 368

7.4 The Lanczos Algorithm in Floating Point Arithmetic . . . . . . 377

7.5 The Lanczos Algorithm with Selective Orthogonalization . . . . 382

7.6 Beyond Selective Orthogonalization . . . . . . . . . . . . . . . . 385

7.7

Iterative Algorithms for the Nonsymmetric Eigenproblem . . . 386

7.8 References and Other Topics for Chapter 7 . . . . . . . . . . . 388

7.9 Questions for Chapter 7 . . . . . . . . . . . . . . . . . . . . . . 388

Bibliography

391

�

Index

ix

411

�

Preface to the 1996 Edition

The following are natural goals for an aspiring textbook writer of a book like

this one:

1. It should be attractive to flrst year graduate students from a variety of

engineering and scientiflc disciplines.

2. The text should be self-contained, assuming only a good undergraduate

background in linear algebra.

3. The students should learn the mathematical basis of the fleld, as well as

how to build or flnd good numerical software.

4. Students should acquire practical knowledge for solving real problems

e–ciently.

In particular, they should know what the state-of-the-art

techniques are in each area, or when to look for them and where to flnd

them.

5. It should all flt in one semester, since that is what most students have

available for this subject.

The flfth goal is perhaps the hardest to manage. The flrst edition of these

notes was 215 pages, which did flt into one ambitious semester. This edition

has more than doubled in length, which is certainly too much material for

even a heroic semester. The new material reects the growth in research in

the fleld in the last few years, including my own. It also reects requests from

colleagues for sections on certain topics that were treated lightly or not at all

in the flrst edition. Notable additions include

† a class homepage with Matlab source code for examples and homework

problems in the text, and pointers to other on-line software and text-

books;

† more pointers in the text to software for all problems, including a sum-

mary of available software for solving sparse linear systems using direct

methods;

† a new chapter on Krylov subspace methods for eigenvalue problems;

† a section on domain decomposition, including both overlapping and nonover-

lapping methods;

xi

�

xii

Preface

† sections on \relative perturbation theory" and corresponding high-accuracy

algorithms for eigenproblems, like Jacobi and qd;

† more detailed performance comparisons of competing least squares and

symmetric eigenvalue algorithms; and

† new homework problems, including many contributed by Zhaojun Bai.

A reasonable one-semester curriculum could consist of the following chap-

ters and sections:

† all of Chapter 1;

† Chapter 2, excluding sections 2.2.1, 2.4.3, 2.5, 2.6.3, and 2.6.4;

† Chapter 3, excluding section 3.5;

† Chapter 4, up to and including section 4.4.5;

† Chapter 5, excluding sections 5.2.1, 5.3.5, 5.4 and 5.5; and

† Chapter 6, excluding sections 6.3.3, 6.5.5, 6.5.6, 6.6.6, 6.7.2, 6.7.3, 6.7.4,

6.8, 6.9.2, and 6.10.

Homework problems are marked Easy, Medium or Hard, according to their

di–culty. Problems involving signiflcant amounts of programming are marked

\programming".

Many people have helped contribute to this text, notably Zhaojun Bai,

Alan Edelman, Velvel Kahan, Richard Lehoucq, Beresford Parlett, and many

anonymous referees, all of whom made detailed comments on various parts of

the text. Table 2.2 is taken from the PhD thesis of my student Xiaoye Li. Alan

Edelman at MIT and Martin Gutknecht at ETH Zurich provided hospitable

surroundings at their institutions while this flnal edition was being prepared.

Many students at Courant, Berkeley, Kentucky and MIT have listened to and

helped debug this material over the years, and deserve thanks. Finally, Kathy

Yelick has contributed scientiflc comments, latex consulting, and moral support

over more years than either of us expected this project to take.

James Demmel

MIT

September, 1996

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc