MatConvNet

Convolutional Neural Networks for MATLAB

Andrea Vedaldi

Karel Lenc

Ankush Gupta


Abstract

MatConvNet is an implementation of Convolutional Neural Networks (CNNs) for MATLAB. The toolbox is designed with an emphasis on simplicity and flexibility. It exposes the building blocks of CNNs as easy-to-use MATLAB functions, providing routines for computing linear convolutions with filter banks, feature pooling, and many more. In this manner, MatConvNet allows fast prototyping of new CNN architectures; at the same time, it supports efficient computation on CPU and GPU, allowing complex models to be trained on large datasets such as ImageNet ILSVRC. This document provides an overview of CNNs and how they are implemented in MatConvNet, and gives the technical details of each computational block in the toolbox.

Contents

1 Introduction to MatConvNet
    1.1 Getting started
    1.2 MatConvNet at a glance
    1.3 Documentation and examples
    1.4 Speed
    1.5 Acknowledgments
2 Neural Network Computations
    2.1 Overview
    2.2 Network structures
        2.2.1 Sequences
        2.2.2 Directed acyclic graphs
    2.3 Computing derivatives with backpropagation
        2.3.1 Derivatives of tensor functions
        2.3.2 Derivatives of function compositions
        2.3.3 Backpropagation networks
        2.3.4 Backpropagation in DAGs
        2.3.5 DAG backpropagation networks
3 Wrappers and pre-trained models
    3.1 Wrappers
        3.1.1 SimpleNN
        3.1.2 DagNN
    3.2 Pre-trained models
    3.3 Learning models
    3.4 Running large scale experiments
4 Computational blocks
    4.1 Convolution
    4.2 Convolution transpose (deconvolution)
    4.3 Spatial pooling
    4.4 Activation functions
    4.5 Spatial bilinear resampling
    4.6 Normalization
        4.6.1 Local response normalization (LRN)
        4.6.2 Batch normalization
        4.6.3 Spatial normalization
        4.6.4 Softmax
    4.7 Categorical losses
        4.7.1 Classification losses
        4.7.2 Attribute losses
    4.8 Comparisons
        4.8.1 p-distance
5 Geometry
    5.1 Preliminaries
    5.2 Simple filters
        5.2.1 Pooling in Caffe
    5.3 Convolution transpose
    5.4 Transposing receptive fields
    5.5 Composing receptive fields
    5.6 Overlaying receptive fields
6 Implementation details
    6.1 Convolution
    6.2 Convolution transpose
    6.3 Spatial pooling
    6.4 Activation functions
        6.4.1 ReLU
        6.4.2 Sigmoid
    6.5 Spatial bilinear resampling
    6.6 Normalization
        6.6.1 Local response normalization (LRN)
        6.6.2 Batch normalization
        6.6.3 Spatial normalization
        6.6.4 Softmax
    6.7 Categorical losses
        6.7.1 Classification losses
        6.7.2 Attribute losses
    6.8 Comparisons
        6.8.1 p-distance
Bibliography

Chapter 1

Introduction to MatConvNet

MatConvNet is a MATLAB toolbox implementing Convolutional Neural Networks (CNNs) for computer vision applications. Since the breakthrough work of [7], CNNs have had a major impact in computer vision, and image understanding in particular, essentially replacing traditional image representations such as the ones implemented in our own VLFeat [11] open source library.

While most CNNs are obtained by composing simple linear and non-linear filtering operations such as convolution and rectification, their implementation is far from trivial. The reason is that CNNs need to be learned from vast amounts of data, often millions of images, requiring very efficient implementations. Like most CNN libraries, MatConvNet achieves this by using a variety of optimizations and, chiefly, by supporting computations on GPUs.

Numerous other machine learning, deep learning, and CNN open source libraries exist. To cite some of the most popular ones: CudaConvNet,1 Torch,2 Theano,3 and Caffe.4 Many of these libraries are well supported, with dozens of active contributors and large user bases. Why, then, create yet another library?

The key motivation for developing MatConvNet was to provide an environment particularly friendly and efficient for researchers to use in their investigations.5 MatConvNet achieves this by its deep integration in the MATLAB environment, which is one of the most popular development environments in computer vision research as well as in many other areas. In particular, MatConvNet exposes as simple MATLAB commands CNN building blocks such as convolution, normalisation and pooling (chapter 4); these can then be combined and extended with ease to create CNN architectures. While many such blocks use optimised CPU and GPU implementations written in C++ and CUDA (section 1.4), MATLAB's native support for GPU computation means that it is often possible to write new blocks in MATLAB directly while maintaining computational efficiency. Compared to writing new CNN components using lower level languages, this is an important simplification that can significantly accelerate testing new ideas. Using MATLAB also provides a bridge towards other areas; for instance, MatConvNet was recently used by the University of Arizona in planetary science, as summarised in this NVIDIA blogpost.6

1 https://code.google.com/p/cuda-convnet/

2 http://cilvr.nyu.edu/doku.php?id=code:start

3 http://deeplearning.net/software/theano/

4 http://caffe.berkeleyvision.org

5 While from a user perspective MatConvNet currently relies on MATLAB, the library is being developed with a clean separation between MATLAB code and the C++ and CUDA core; therefore, in the future the library may be extended to allow processing convolutional networks independently of MATLAB.

MatConvNet can learn large CNN models such as AlexNet [7] and the very deep networks of [9] from millions of images. Pre-trained versions of several of these powerful models can be downloaded from the MatConvNet home page.7 While powerful, MatConvNet remains simple to use and install. The implementation is fully self-contained, requiring only MATLAB and a compatible C++ compiler (using the GPU code requires the freely-available CUDA DevKit and a suitable NVIDIA GPU). As demonstrated in fig. 1.1 and section 1.1, it is possible to download, compile, and install MatConvNet using three MATLAB commands. Several fully-functional examples demonstrating how small and large networks can be learned are included. Importantly, several standard pre-trained networks can be immediately downloaded and used in applications. A manual with a complete technical description of the toolbox is maintained along with the toolbox.8 These features make MatConvNet useful in an educational context too.9

MatConvNet is open source, released under a BSD-like license. It can be downloaded from http://www.vlfeat.org/matconvnet as well as from GitHub.10

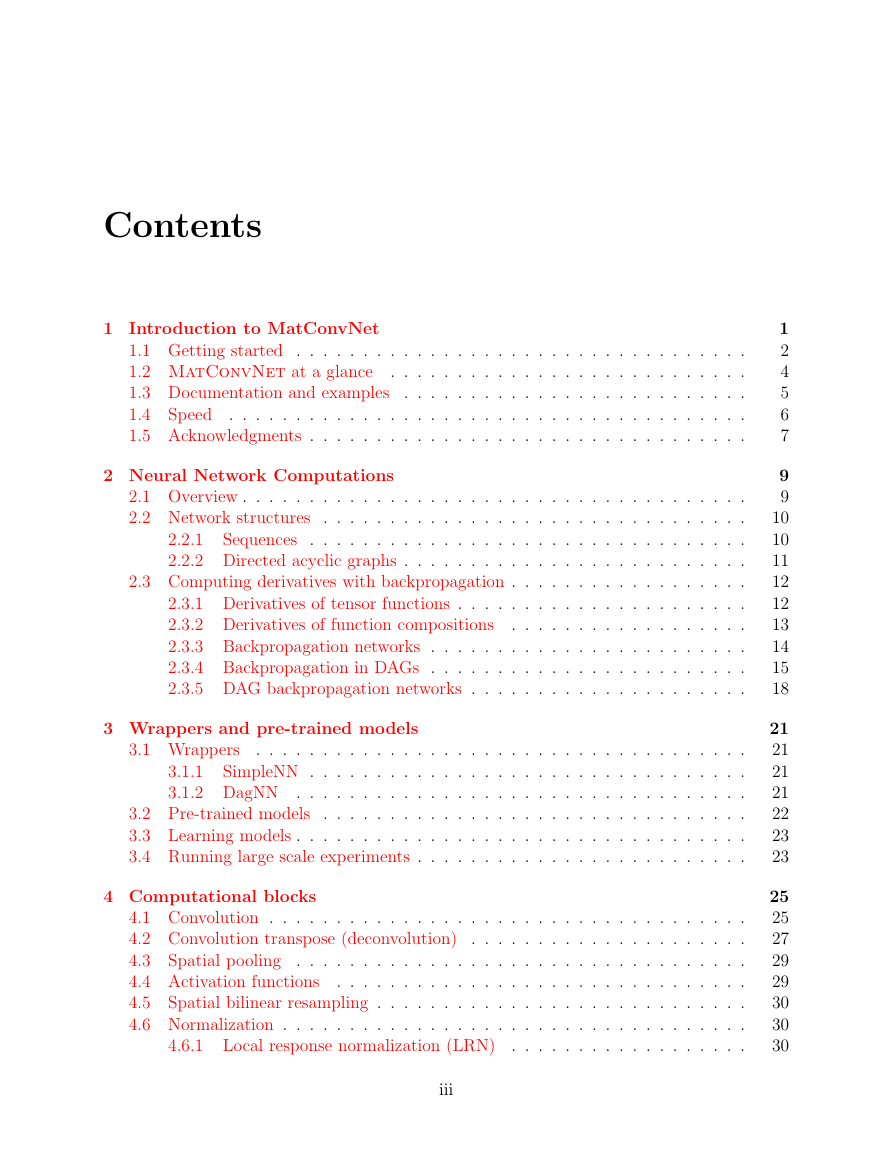

1.1 Getting started

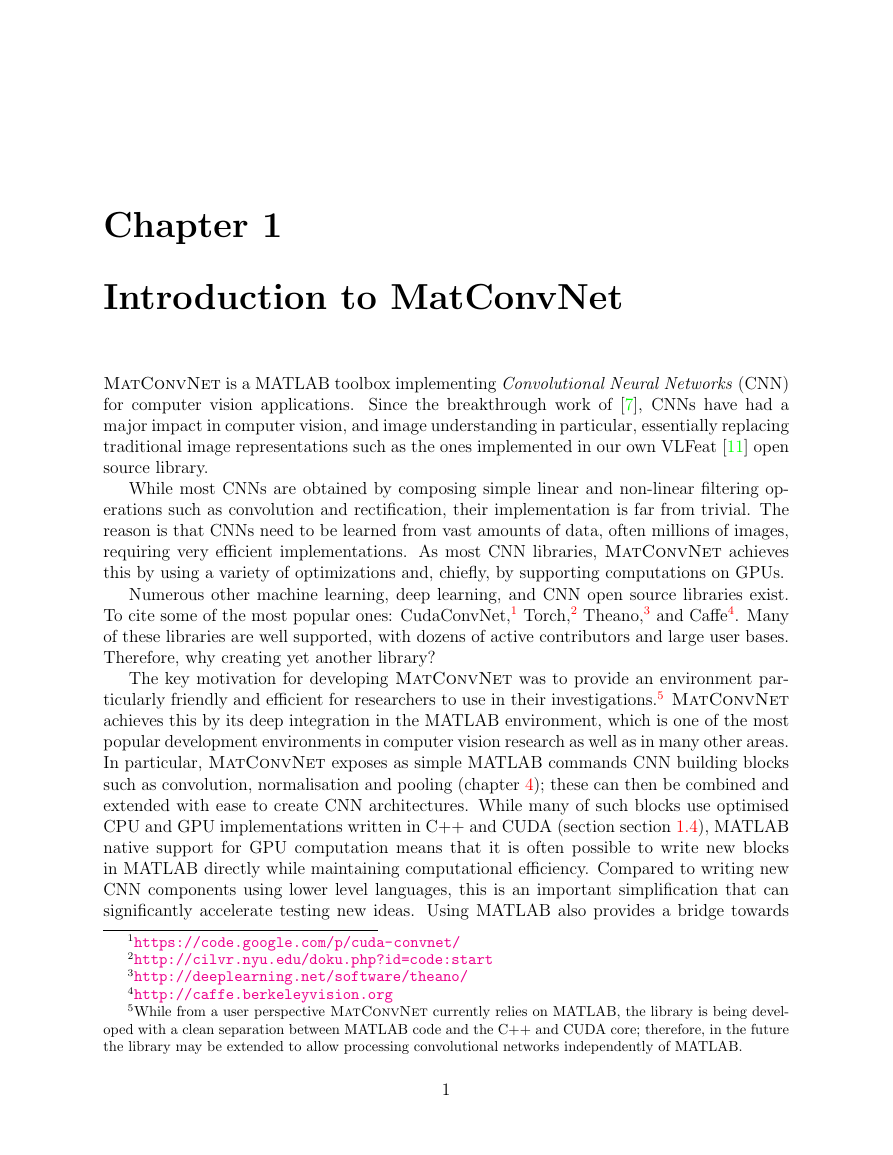

MatConvNet is simple to install and use. Fig. 1.1 provides a complete example that classifies an image using a latest-generation deep convolutional neural network. The example includes downloading MatConvNet, compiling the package, downloading a pre-trained CNN model, and evaluating the latter on one of MATLAB's stock images.

The key command in this example is vl_simplenn, a wrapper that takes as input the CNN net and the pre-processed image im_ and produces as output a structure res of results. This particular wrapper can be used to model networks that have a simple structure, namely a chain of operations. Examining the code of vl_simplenn (edit vl_simplenn in MatConvNet), we note that the wrapper transforms the data sequentially, applying a number of MATLAB functions as specified by the network configuration. These functions, discussed in detail in chapter 4, are called "building blocks" and constitute the backbone of MatConvNet.

6 http://devblogs.nvidia.com/parallelforall/deep-learning-image-understanding-planetary-science/

7 http://www.vlfeat.org/matconvnet/

8 http://www.vlfeat.org/matconvnet/matconvnet-manual.pdf

9 An example laboratory experience based on MatConvNet can be downloaded from http://www.robots.ox.ac.uk/~vgg/practicals/cnn/index.html.

10 http://www.github.com/matconvnet

```matlab
% install and compile MatConvNet (run once)
untar(['http://www.vlfeat.org/matconvnet/download/' ...
       'matconvnet-1.0-beta12.tar.gz']) ;
cd matconvnet-1.0-beta12
run matlab/vl_compilenn

% download a pre-trained CNN from the web (run once)
urlwrite(...
  'http://www.vlfeat.org/matconvnet/models/imagenet-vgg-f.mat', ...
  'imagenet-vgg-f.mat') ;

% setup MatConvNet
run matlab/vl_setupnn

% load the pre-trained CNN
net = load('imagenet-vgg-f.mat') ;

% load and preprocess an image
im = imread('peppers.png') ;
im_ = imresize(single(im), net.meta.normalization.imageSize(1:2)) ;
im_ = im_ - net.meta.normalization.averageImage ;

% run the CNN
res = vl_simplenn(net, im_) ;

% show the classification result
scores = squeeze(gather(res(end).x)) ;
[bestScore, best] = max(scores) ;
figure(1) ; clf ; imagesc(im) ;
title(sprintf('%s (%d), score %.3f',...
  net.classes.description{best}, best, bestScore)) ;
```

Figure 1.1: A complete example including downloading, installing, compiling, and running MatConvNet to classify one of MATLAB's stock images using a large CNN pre-trained on ImageNet.

[Figure output: the input image displayed with the title "bell pepper (946), score 0.704".]

While most blocks implement simple operations, what makes them non-trivial is their efficiency (section 1.4) as well as support for backpropagation (section 2.3) to allow learning CNNs. Next, we demonstrate how to use one such building block directly. For the sake of the example, consider convolving an image with a bank of linear filters. Start by reading an image in MATLAB, say using im = single(imread('peppers.png')), obtaining an H × W × D array im, where D = 3 is the number of colour channels in the image. Then create a bank of K = 16 random filters of size 3 × 3 using f = randn(3,3,3,16,'single'). Finally, convolve the image with the filters by using the command y = vl_nnconv(im,f,[]). This results in an array y with K channels, one for each of the K filters in the bank.
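As a minimal sketch, the convolution steps just described can be collected into a short script. It assumes that MatConvNet has been compiled and that vl_setupnn has been run, as in fig. 1.1. The final size check is added here for illustration only and relies on vl_nnconv being called with its default options (no padding, unit stride).

```matlab
% Sketch only: assumes MatConvNet is compiled and on the MATLAB path.
im = single(imread('peppers.png')) ;   % H x W x 3 array in single precision
f  = randn(3, 3, 3, 16, 'single') ;    % bank of K = 16 random 3 x 3 x 3 filters
y  = vl_nnconv(im, f, []) ;            % empty third argument: no biases

% With no padding and unit stride, each spatial dimension shrinks by
% (filter size - 1) = 2, and there is one output channel per filter.
expectedSize = [size(im,1) - 2, size(im,2) - 2, 16] ;
disp(isequal(size(y), expectedSize)) ;
```

Replacing randn with hand-designed filters (for example an edge detector replicated across the three colour channels) makes the effect of each output channel easier to inspect visually.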

While users are encouraged to make use of the blocks directly to create new architectures, MatConvNet provides wrappers such as vl_simplenn for standard CNN architectures such as AlexNet [7] or Network-in-Network [8]. Furthermore, the library provides numerous examples (in the examples/ subdirectory), including code to learn a variety of models on the MNIST, CIFAR, and ImageNet datasets. All these examples use the examples/cnn_train training code, which is an implementation of stochastic gradient descent (section 3.3). While this training code is perfectly serviceable and quite flexible, it remains in the examples/ subdirectory as it is somewhat problem-specific. Users are welcome to implement their own optimisers.

1.2 MatConvNet at a glance

MatConvNet has a simple design philosophy. Rather than wrapping CNNs around complex

layers of software, it exposes simple functions to compute CNN building blocks, such as linear

convolution and ReLU operators, directly as MATLAB commands. These building blocks are

easy to combine into complete CNNs and can be used to implement sophisticated learning

algorithms. While several real-world examples of small and large CNN architectures and

training routines are provided, it is always possible to go back to the basics and build your

own, using the efficiency of MATLAB in prototyping. Often no C coding is required at all

to try new architectures. As such, MatConvNet is an ideal playground for research in

computer vision and CNNs.
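To illustrate how building blocks combine, the following sketch chains a convolution, a ReLU, and a max-pooling block by hand. The tensor sizes and random data are arbitrary choices made for this example, and the script assumes MatConvNet is compiled and on the MATLAB path; vl_nnrelu and vl_nnpool are the activation and pooling blocks of the toolbox.

```matlab
% Sketch only: a three-block chain on random data (sizes are illustrative).
x = randn(32, 32, 3, 1, 'single') ;    % one 32 x 32 image with 3 channels
f = randn(5, 5, 3, 8, 'single') ;      % 8 filters of size 5 x 5 x 3
b = zeros(1, 8, 'single') ;            % one bias per filter

y1 = vl_nnconv(x, f, b) ;              % convolution: 28 x 28 x 8
y2 = vl_nnrelu(y1) ;                   % rectification: same size, negatives set to 0
y3 = vl_nnpool(y2, 2, 'stride', 2) ;   % 2 x 2 max pooling, stride 2: 14 x 14 x 8

disp(size(y3)) ;
```

Because each block is an ordinary MATLAB function, intermediate results such as y1 and y2 can be inspected or plotted directly while prototyping.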

MatConvNet contains the following elements:

• CNN computational blocks. A set of optimized routines computing fundamental building blocks of a CNN. For example, a convolution block is implemented by y=vl_nnconv(x,f,b) where x is an image, f a filter bank, and b a vector of biases (section 4.1). The derivatives are computed as [dzdx,dzdf,dzdb] = vl_nnconv(x,f,b,dzdy) where dzdy is the derivative of the CNN output w.r.t. y (section 4.1). Chapter 4 describes all the blocks in detail.

• CNN wrappers. MatConvNet provides a simple wrapper, suitably invoked by vl_simplenn, that implements a CNN with a linear topology (a chain of blocks). It also provides a much more flexible wrapper supporting networks with arbitrary topologies, encapsulated in the dagnn.DagNN MATLAB class.

• Example applications. MatConvNet provides several examples of learning CNNs with stochastic gradient descent, on CPU or GPU, using MNIST, CIFAR10, and ImageNet data.

• Pre-trained models. MatConvNet provides several state-of-the-art pre-trained CNN models that can be used off-the-shelf, either to classify images or to produce image encodings in the spirit of Caffe or DeCAF.
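The forward and backward calling conventions of the convolution block listed above can be sketched as follows. The input sizes and the random derivative dzdy are placeholders chosen for this example (in a real network dzdy would come from the blocks that follow), and the script assumes MatConvNet is compiled and on the MATLAB path.

```matlab
% Sketch only: forward and backward modes of the convolution block.
x = randn(16, 16, 3, 2, 'single') ;    % batch of 2 images, 16 x 16 x 3 each
f = randn(3, 3, 3, 4, 'single') ;      % 4 filters of size 3 x 3 x 3
b = randn(1, 4, 'single') ;            % one bias per filter

% Forward mode: compute the block output.
y = vl_nnconv(x, f, b) ;               % 14 x 14 x 4 x 2

% Backward mode: dzdy is the derivative of the network output z w.r.t. y.
% Here it is random, standing in for what later blocks would supply.
dzdy = randn(size(y), 'single') ;
[dzdx, dzdf, dzdb] = vl_nnconv(x, f, b, dzdy) ;

% Each derivative has as many elements as the quantity it refers to.
disp(isequal(size(dzdx), size(x))) ;
disp(isequal(size(dzdf), size(f))) ;
disp(numel(dzdb) == numel(b)) ;
```

Passing the extra dzdy argument is what switches the block from computing its output to computing derivatives, so the same function serves both passes of backpropagation.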

