�

Automatic Speech and Speaker Recognition

Au tomatic Speech and Speaker R ecognition: Large Margin and Ker n el Methods Edited by Joseph Keshet

and Samy Bengio © 2009 John Wiley & Sons, Ltd. ISBN: 978-0-470-69683-5

Automatic Speech and

Speaker Recognition

Large Margin and Kernel Methods

Joseph Keshet

IDIAP Research Institute, Martigny, Switzerland

Samy Bengio

Google Inc., Mountain View, CA, USA

A John Wiley and Sons, Ltd, Publication

�

This edition first published 2009

c 2009 John Wiley & Sons Ltd

Registered office

John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ,

United Kingdom

For details of our global editorial offices, for customer services and for information about how to apply

for permission to reuse the copyright material in this book please see our website at www.wiley.com.

The right of the author to be identified as the author of this work has been asserted in accordance with

the Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or

transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or

otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior

permission of the publisher.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print

may not be available in electronic books.

Designations used by companies to distinguish their products are often claimed as trademarks. All

brand names and product names used in this book are trade names, service marks, trademarks or

registered trademarks of their respective owners. The publisher is not associated with any product or

vendor mentioned in this book. This publication is designed to provide accurate and authoritative

information in regard to the subject matter covered. It is sold on the understanding that the publisher is

not engaged in rendering professional services. If professional advice or other expert assistance is

required, the services of a competent professional should be sought.

Library of Congress Cataloging-in-Publication Data

Automatic speech and speaker recognition : large margin and kernel methods /

edited by Joseph Keshet, Samy Bengio.

p. cm.

Includes bibliographical references and index.

ISBN 978-0-470-69683-5 (cloth)

1. Automatic speech recognition. I. Keshet, Joseph. II. Bengio, Samy.

TK7895.S65A983 2009

006.4’54–dc22

A catalogue record for this book is available from the British Library.

ISBN 9780470696835 (H/B)

Set in 10/12pt Times by Sunrise Setting Ltd, Torquay, UK.

Printed in Singapore by Markono Print Media Pte Ltd.

2008038551

�

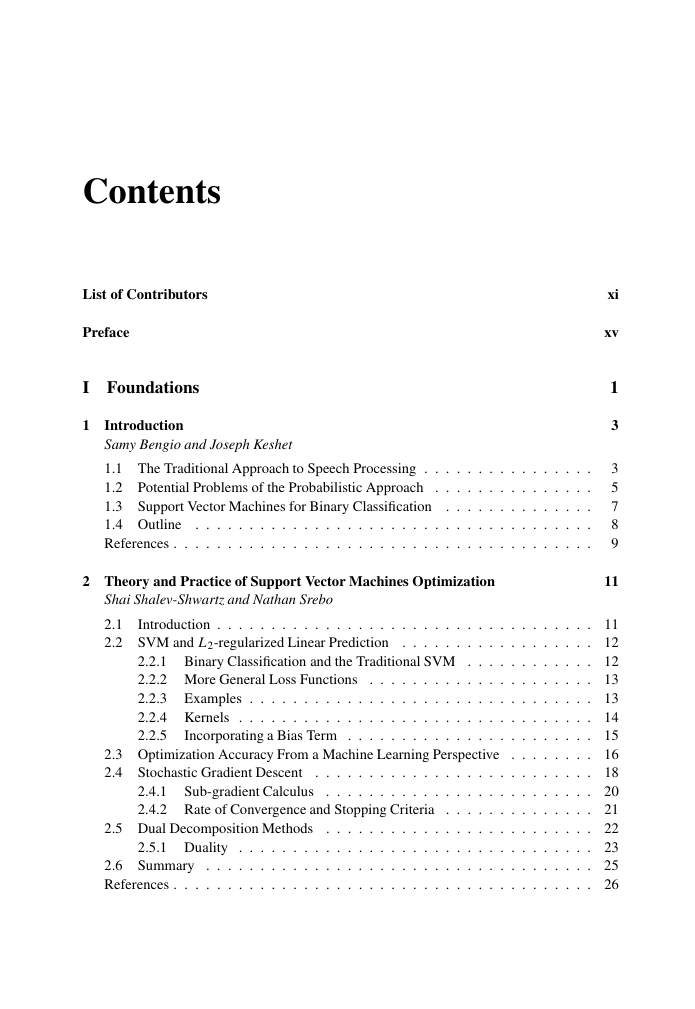

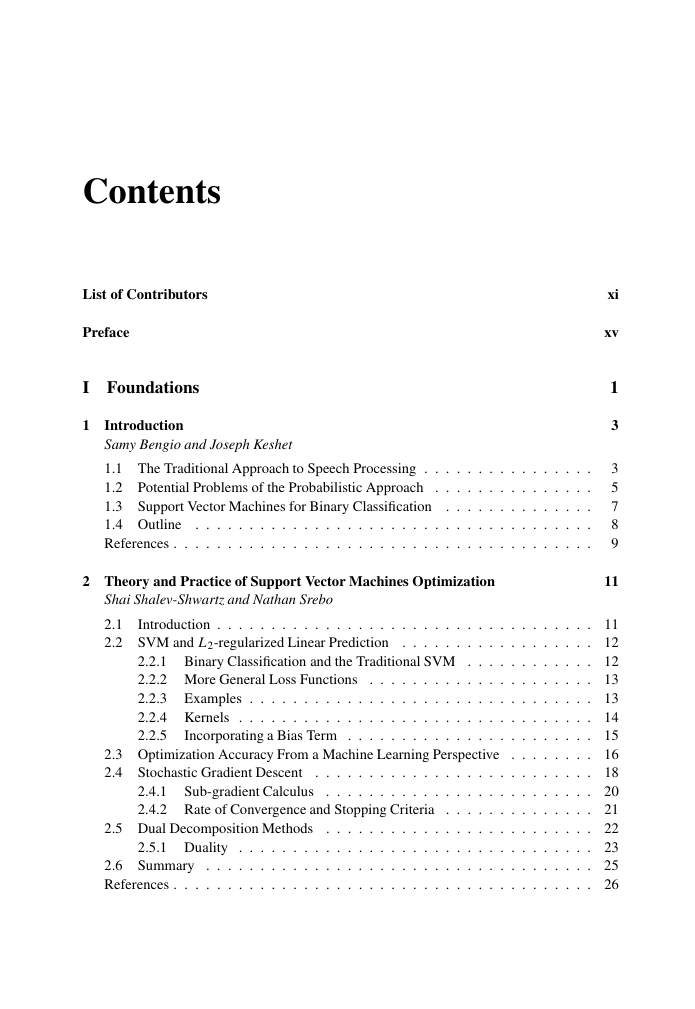

Contents

List of Contributors

Preface

I Foundations

1

Introduction

Samy Bengio and Joseph Keshet

1.1 The Traditional Approach to Speech Processing .

. . . .

1.2 Potential Problems of the Probabilistic Approach . . . .

1.3 Support Vector Machines for Binary Classification . . .

. . . .

1.4 Outline

References .

. . . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

2 Theory and Practice of Support Vector Machines Optimization

Shai Shalev-Shwartz and Nathan Srebo

xi

xv

1

3

3

5

7

8

9

11

. . .

. . .

. . . .

. . . .

. . . .

. . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

Introduction .

. . . .

. . . .

2.2.1 Binary Classification and the Traditional SVM .

. . . .

2.2.2 More General Loss Functions

2.2.3 Examples . .

. . . .

. . . .

2.2.4 Kernels . . .

2.2.5

. . . .

. .

. . . .

. . . .

Incorporating a Bias Term . . . .

. . . .

. . . .

. . . .

. . . .

. . . .

2.1

2.2 SVM and L2-regularized Linear Prediction . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

2.3 Optimization Accuracy From a Machine Learning Perspective . . . .

2.4 Stochastic Gradient Descent

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

2.4.1

. . . .

2.4.2 Rate of Convergence and Stopping Criteria . . .

. . . .

. . . .

. . . .

. . . .

2.5.1 Duality . . .

. . . .

. . . .

2.6 Summary . .

References .

. . . .

2.5 Dual Decomposition Methods

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

Sub-gradient Calculus

. . .

. . .

. . .

. . .

. .

. . . .

. . . .

. . . .

. . . .

. . . . 11

. . . . 12

. . . . 12

. . . . 13

. . . . 13

. . . . 14

. . . . 15

. . . . 16

. . . . 18

. . . . 20

. . . . 21

. . . . 22

. . . . 23

. . . . 25

. . . . 26

�

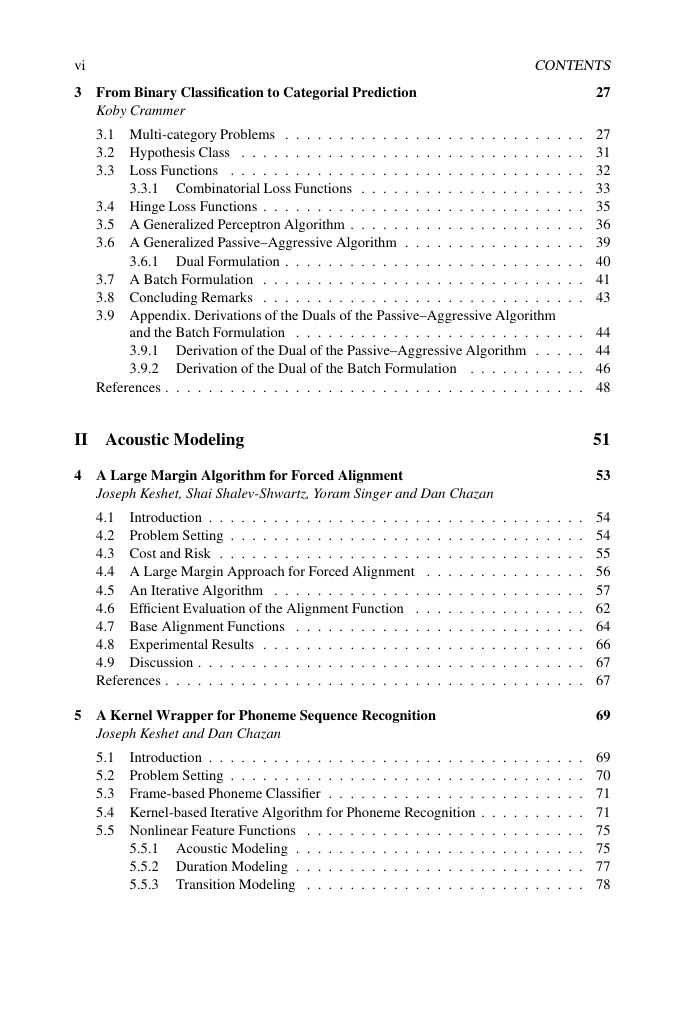

vi

3 From Binary Classification to Categorial Prediction

CONTENTS

27

. . .

. . . .

. . .

. . . .

. . . .

. . . .

. . . .

Koby Crammer

3.1 Multi-category Problems . .

. . .

3.2 Hypothesis Class

3.3 Loss Functions

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

3.3.1 Combinatorial Loss Functions . . .

. . . .

3.4 Hinge Loss Functions .

. . . .

3.5 A Generalized Perceptron Algorithm . . . .

. . . .

3.6 A Generalized Passive–Aggressive Algorithm . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

3.7 A Batch Formulation .

3.8 Concluding Remarks .

. . . .

3.9 Appendix. Derivations of the Duals of the Passive–Aggressive Algorithm

. . . .

and the Batch Formulation .

3.9.1 Derivation of the Dual of the Passive–Aggressive Algorithm . .

. . . .

3.9.2 Derivation of the Dual of the Batch Formulation . . . .

. . . .

. . . .

3.6.1 Dual Formulation . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

References . .

. . . .

. . . .

. . . .

. . .

. . . .

. . .

. . . .

. . . .

. . . .

. . . .

. . .

. . . .

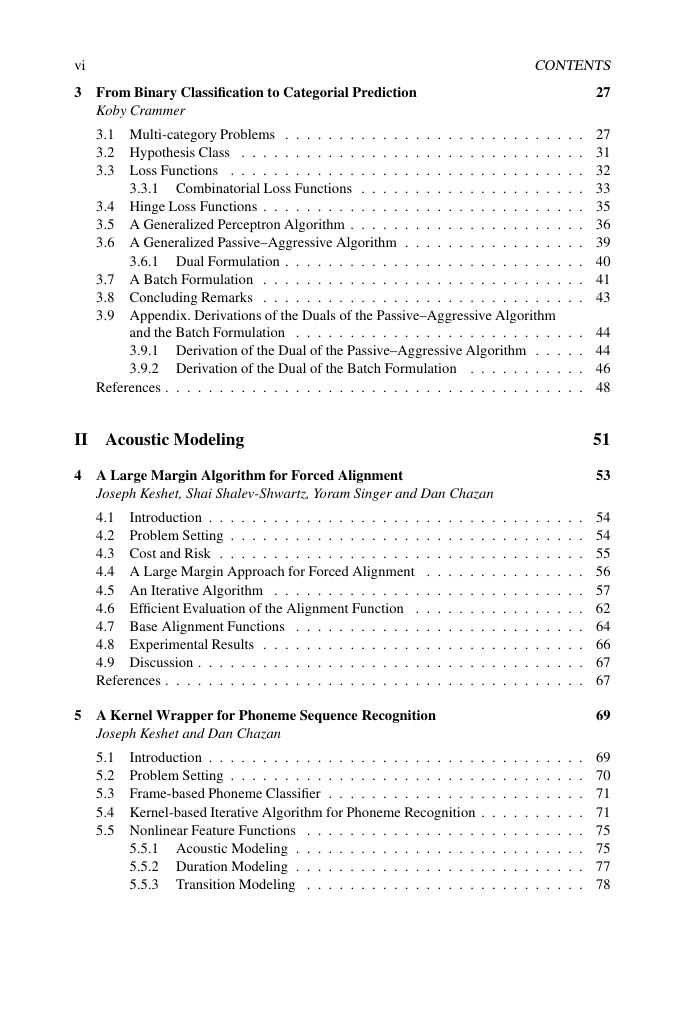

II Acoustic Modeling

4 A Large Margin Algorithm for Forced Alignment

. . . 27

. . . 31

. . . 32

. . . 33

. . . 35

. . . 36

. . . 39

. . . 40

. . . 41

. . . 43

. . . 44

. . . 44

. . . 46

. . . 48

51

53

. . .

. . .

. . .

Introduction . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

Joseph Keshet, Shai Shalev-Shwartz, Yoram Singer and Dan Chazan

. . . .

4.1

. . . .

. . . .

4.2 Problem Setting . . . .

. . . .

4.3 Cost and Risk .

. . . .

.

4.4 A Large Margin Approach for Forced Alignment

4.5 An Iterative Algorithm . . .

. . . .

4.6 Efficient Evaluation of the Alignment Function . .

4.7 Base Alignment Functions .

. . . .

. . . .

. . .

4.8 Experimental Results .

. . . .

. . .

. . . .

4.9 Discussion . . .

References . .

. . . .

. . . .

. . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . 54

. . . 54

. . . 55

. . . 56

. . . 57

. . . 62

. . . 64

. . . 66

. . . 67

. . . 67

5 A Kernel Wrapper for Phoneme Sequence Recognition

69

. . .

. . .

Introduction . .

Joseph Keshet and Dan Chazan

. . . .

5.1

. . . .

. . . .

. . . .

5.2 Problem Setting . . . .

. . . .

5.3 Frame-based Phoneme Classifier . .

. . . .

5.4 Kernel-based Iterative Algorithm for Phoneme Recognition . . .

. . . .

5.5 Nonlinear Feature Functions . . . .

. . . .

. . . .

5.5.1 Acoustic Modeling .

. . . .

5.5.2 Duration Modeling .

. . . .

5.5.3 Transition Modeling . . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . 69

. . . 70

. . . 71

. . . 71

. . . 75

. . . 75

. . . 77

. . . 78

�

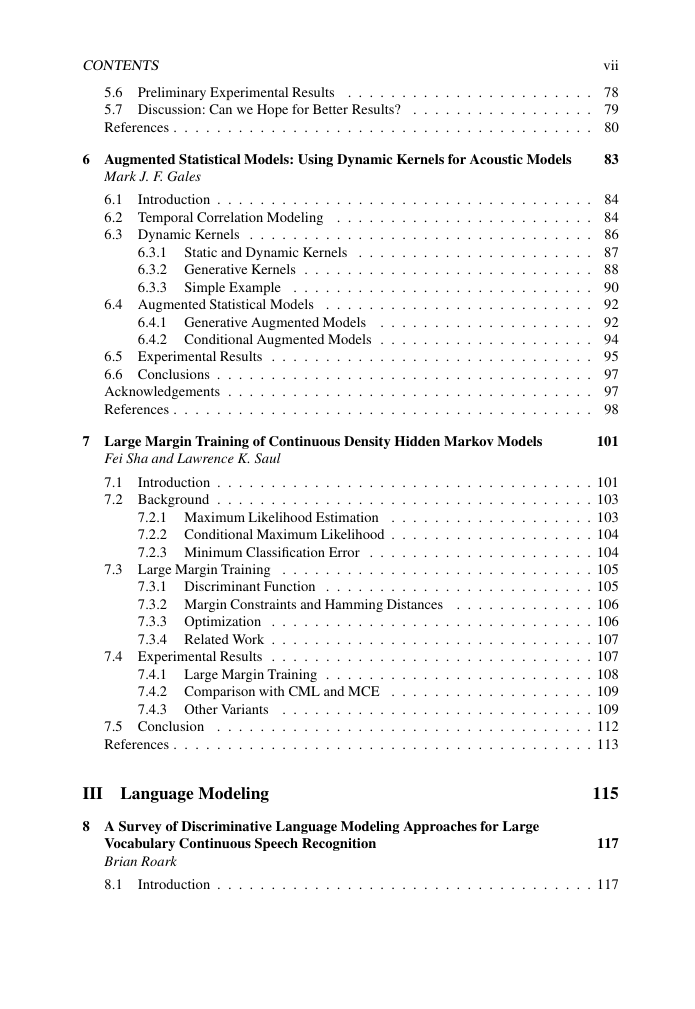

CONTENTS

5.6 Preliminary Experimental Results

. . . .

5.7 Discussion: Can we Hope for Better Results? . .

. . . .

References .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

vii

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . . 78

. . . . 79

. . . . 80

6 Augmented Statistical Models: Using Dynamic Kernels for Acoustic Models

83

. . .

. . .

. . . .

Introduction .

Mark J. F. Gales

6.1

. . . .

6.2 Temporal Correlation Modeling .

6.3 Dynamic Kernels . .

. . . .

6.3.1

6.3.2 Generative Kernels . . . .

. . . .

6.3.3

. .

. . . .

. . . .

. . . .

Static and Dynamic Kernels . . .

. . . .

. . . .

. . . .

6.4.1 Generative Augmented Models

.

6.4.2 Conditional Augmented Models .

. . . .

. . . .

. . . .

. . . .

6.5 Experimental Results . . .

. . .

6.6 Conclusions .

. . . .

. . .

Acknowledgements . . . .

References .

. . . .

. . .

6.4 Augmented Statistical Models

. . . .

. . . .

. . . .

. . . .

Simple Example .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . . 84

. . . . 84

. . . . 86

. . . . 87

. . . . 88

. . . . 90

. . . . 92

. . . . 92

. . . . 94

. . . . 95

. . . . 97

. . . . 97

. . . . 98

7 Large Margin Training of Continuous Density Hidden Markov Models

101

. . .

. . .

. . . .

. . . .

. . . .

. . . .

Fei Sha and Lawrence K. Saul

7.1

Introduction .

7.2 Background .

. . . .

. . . .

. .

. . . .

. . . .

7.3 Large Margin Training . .

. . . .

. . . .

7.2.1 Maximum Likelihood Estimation . . . .

7.2.2 Conditional Maximum Likelihood . . . .

7.2.3 Minimum Classification Error

. . . .

. . . .

. . . .

. . . .

7.3.1 Discriminant Function . .

7.3.2 Margin Constraints and Hamming Distances

7.3.3 Optimization . . .

7.3.4 Related Work . . .

7.4 Experimental Results . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

7.4.1 Large Margin Training . .

. . . .

7.4.2 Comparison with CML and MCE . . . .

. . . .

7.4.3 Other Variants

. . . .

. . . .

. . . .

. . . .

7.5 Conclusion .

References .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . . 101

. . . . 103

. . . . 103

. . . . 104

. . . . 104

. . . . 105

. . . . 105

. . . . 106

. . . . 106

. . . . 107

. . . . 107

. . . . 108

. . . . 109

. . . . 109

. . . . 112

. . . . 113

III Language Modeling

8 A Survey of Discriminative Language Modeling Approaches for Large

115

117

Vocabulary Continuous Speech Recognition

Brian Roark

8.1

Introduction .

. . . .

. . . .

. . .

. . . .

. . . .

. . . .

. . .

. . . .

. . . . 117

�

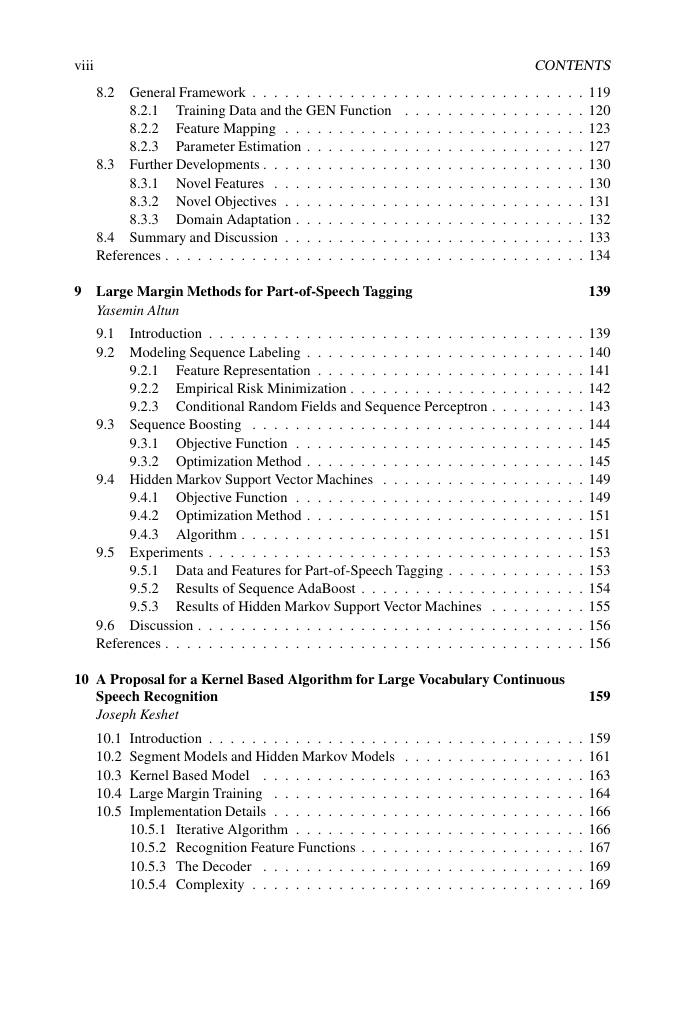

viii

. . .

. . . .

. . . .

8.3 Further Developments .

8.2 General Framework . .

. . . .

8.2.1 Training Data and the GEN Function . . .

. . . .

8.2.2

. . . .

8.2.3

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

Feature Mapping . .

. . . .

Parameter Estimation . . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

8.3.1 Novel Features . . .

8.3.2 Novel Objectives . .

8.3.3 Domain Adaptation .

8.4 Summary and Discussion . .

References . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

CONTENTS

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . 119

. . . 120

. . . 123

. . . 127

. . . 130

. . . 130

. . . 131

. . . 132

. . . 133

. . . 134

9 Large Margin Methods for Part-of-Speech Tagging

. . . .

. . .

Introduction . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

9.3 Sequence Boosting . .

Yasemin Altun

. . . .

9.1

9.2 Modeling Sequence Labeling . . . .

Feature Representation . . .

. . . .

. . .

9.3.1 Objective Function .

. . . .

9.3.2 Optimization Method . . . .

. . . .

. . . .

. . . .

9.4 Hidden Markov Support Vector Machines .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

9.2.1

. . . .

9.2.2 Empirical Risk Minimization . . . .

. . . .

9.2.3 Conditional Random Fields and Sequence Perceptron . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

. . .

. . . .

9.5.1 Data and Features for Part-of-Speech Tagging . .

9.5.2 Results of Sequence AdaBoost . . .

. . . .

. . .

9.5.3 Results of Hidden Markov Support Vector Machines . .

. . . .

. . . .

9.4.1 Objective Function .

. . . .

9.4.2 Optimization Method . . . .

. . . .

9.4.3 Algorithm . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

9.6 Discussion . . .

. . . .

References . .

9.5 Experiments . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

139

. . . 139

. . . 140

. . . 141

. . . 142

. . . 143

. . . 144

. . . 145

. . . 145

. . . 149

. . . 149

. . . 151

. . . 151

. . . 153

. . . 153

. . . 154

. . . 155

. . . 156

. . . 156

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

10 A Proposal for a Kernel Based Algorithm for Large Vocabulary Continuous

. . .

. . . .

. . . .

. . . .

Speech Recognition

Joseph Keshet

10.1 Introduction . .

. . . .

10.2 Segment Models and Hidden Markov Models . . .

. . . .

10.3 Kernel Based Model

. . . .

. . .

. . . .

. . . .

10.4 Large Margin Training . . .

. . . .

. . . .

10.5 Implementation Details . . .

. . . .

10.5.1 Iterative Algorithm .

. . . .

10.5.2 Recognition Feature Functions . . .

. . . .

. . . .

. . . .

10.5.3 The Decoder

.

10.5.4 Complexity . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . .

. . .

.

159

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . .

. . . 159

. . . 161

. . . 163

. . . 164

. . . 166

. . . 166

. . . 167

. . . 169

. . . 169

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc