World Journal of Engineering and Technology, 2017, 5, 81-92

http://www.scirp.org/journal/wjet

ISSN Online: 2331-4249

ISSN Print: 2331-4222

Vision-Based Vehicle Detection for a Forward

Collision Warning System

Din-Chang Tseng, Ching-Chun Huang

Institute of Computer Science and Information Engineering, National Central University, Taiwan

How to cite this paper: Tseng, D.-C. and

Huang, C.-C. (2017) Vision-Based Vehicle

Detection for a Forward Collision Warning

System. World Journal of Engineering and

Technology, 5, 81-92.

https://doi.org/10.4236/wjet.2017.53B010

Received: July 11, 2017

Accepted: August 8, 2017

Published: August 11, 2017

Abstract

A weather-adaptive forward collision warning (FCW) system is presented that applies local features for vehicle detection and global features for vehicle verification. First, horizontal and vertical edge maps are calculated separately. Second, the edge maps are thresholded with an adaptive threshold value to adapt to brightness variation. Third, the edge points are linked to generate possible objects. Fourth, the objects are judged based on edge response, location, and symmetry to generate vehicle candidates. Finally, a method based on principal component analysis (PCA) is proposed to verify the vehicle candidates. The proposed FCW system has the following properties: 1) the edge extraction is adaptive to various lighting conditions; 2) the local features are mutually processed to improve the reliability of vehicle detection; 3) the hierarchical schemes of vehicle detection enhance the adaptability to various weather conditions; 4) the PCA-based verification can strictly eliminate the candidate regions without vehicle appearance.

Keywords

Forward Collision Warning (FCW), Advanced Driver Assistance System

(ADAS), Weather Adaptive, Principal Component Analysis (PCA)

1. Introduction

To avoid traffic accidents and to decrease injuries and fatalities, many advanced driver assistance systems (ADASs) have been developed to help drivers pay more attention to road situations, such as forward collision warning (FCW), lane departure warning (LDW), and blind spot detection (BSD). Based on our previous studies [1] [2] [3], this study focuses on developing an FCW system.


Vision-based FCW systems have been studied extensively [4] [5] [6] [7]. Sun et al. [4] and Sivaraman et al. [5] generally divided vision-based vehicle detection methods into three categories: knowledge-based, motion-based, and stereo-based methods. Knowledge-based methods integrate many features of vehicles to recognize preceding vehicles in images. Most of these methods are implemented in two steps: hypothesis generation (HG) and hypothesis verification (HV) [4]. The HG step detects all possible vehicle candidates in an image; the vehicle candidates are then confirmed by the HV step to ensure correct detections.

Because vehicles are rigid and have a specific appearance, many features such as edges, shadow, symmetry, texture, color, and intensity can be observed in images. The features used in knowledge-based methods can be further divided into global features and local features according to whether the vehicle appearance is considered when using the features. A usable FCW system should be stable in various weather conditions and in various background scenes. Furthermore, the system should achieve a high detection rate with few false alarms by utilizing both local and global features.

The weather adaptability of edge information is much better than that of other local features such as shadow and symmetry. The contour of a vehicle can be represented by horizontal and vertical edges. However, local edge features are not strict enough to describe vehicle appearance. Appearance-based verification is stricter and is needed to support vehicle detection based on edge information.

We here propose an FCW system that combines the advantages of global features and local features to improve the reliability of vehicle detection. The local features are invariant to vehicle direction, lighting condition, and partial occlusion. The global features are used to search for objects in the image based on the whole vehicle configuration. In the detection stage, as many vehicle candidates as possible are located according to the local features to avoid missing targets; in the verification stage, each candidate is confirmed as a real target by the global features as strictly as possible to reduce false alarms.

The proposed FCW system detects vehicles relying on edges; therefore, the proposed system can work well under various weather conditions. First, the lane detector [1] [2] is applied to limit the search region for preceding vehicles. Then an adaptive threshold is used to obtain bi-level horizontal and vertical edges in the region. A vehicle candidate is generated from a valid horizontal edge and geometrically constrained vertical edges at the two ends of the horizontal edge. In the verification stage, the vehicle candidates are verified by vehicle appearance using principal component analysis (PCA). A principal component set is obtained by applying PCA to a set of canonical vehicle images. These components capture the dominant appearance of vehicle-like regions; therefore, the regions of vehicle-like objects can be well reconstructed by the principal components. The closer an image is to its reconstruction image, the higher the probability that the object in the image is a vehicle.


2. Vehicle Detection

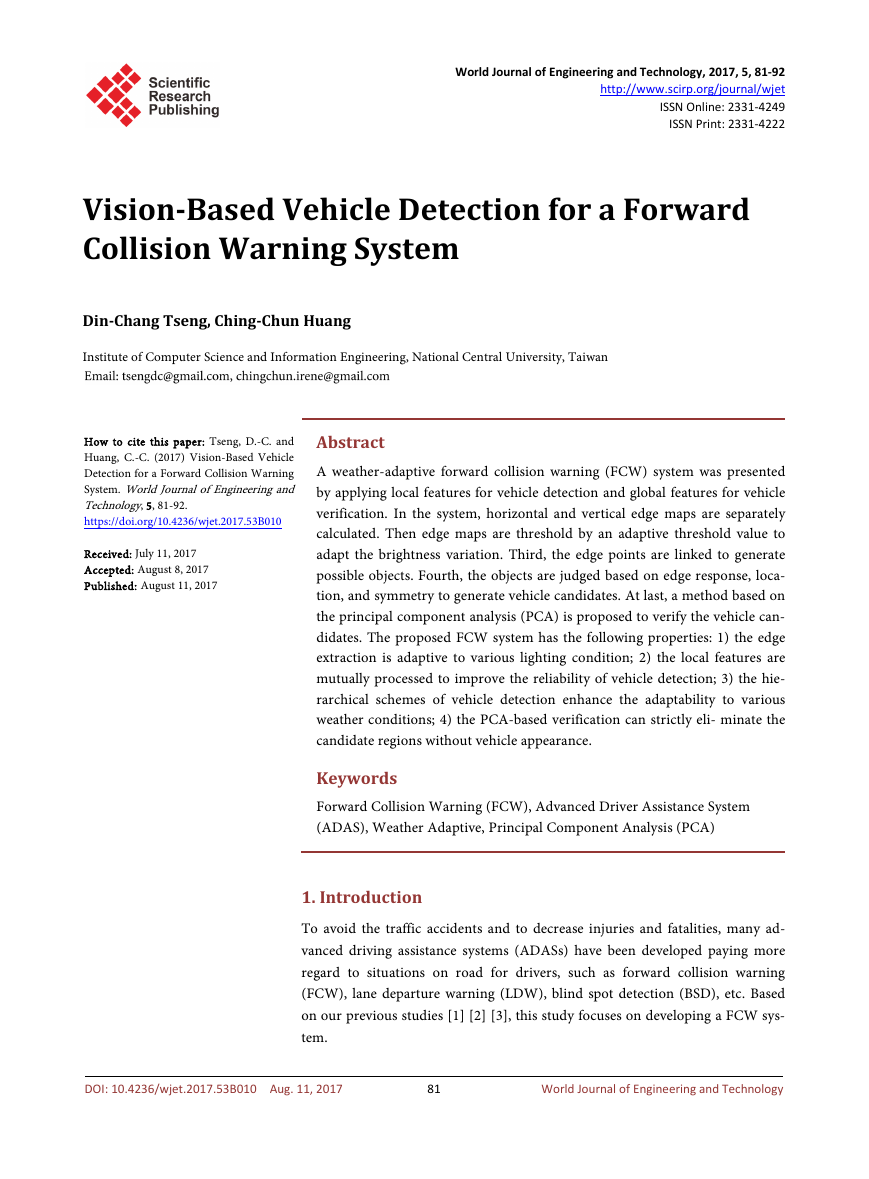

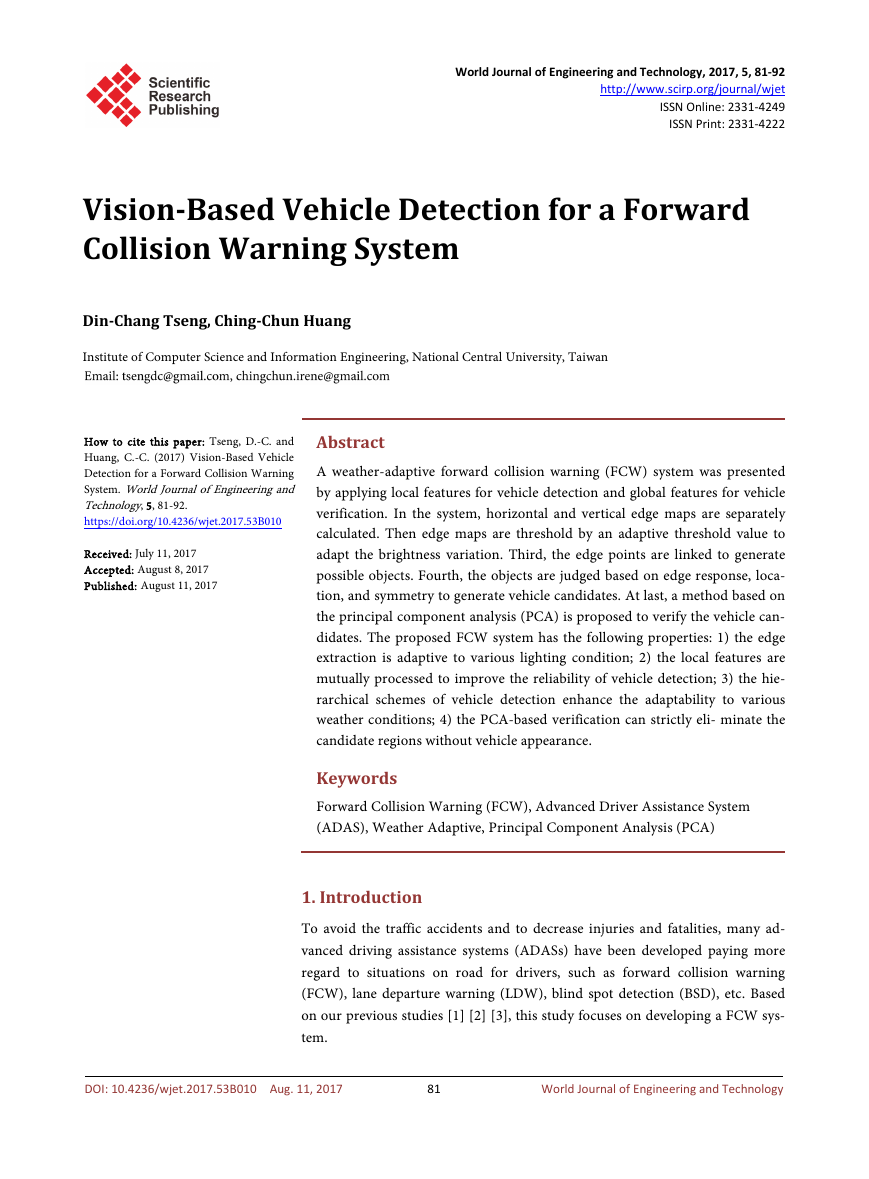

Edge information is an important feature for detecting vehicles in an image, especially the horizontal edges of underneath shadows. In the detection stage, vehicle candidates are generated based on local edge features. First, the appropriate edge points are extracted in the region of interest (ROI) defined by lane marks, as shown in Figure 1. Then, the significant horizontal edges indicating vehicle locations are refined. The negative horizontal edges (NHE) are considered to belong to underneath shadows. The positive horizontal edges (PHE) are mostly formed by the bumper, windshield, and roof of vehicles. For each kind of horizontal edge, a specific procedure to find the vertical borders of vehicles is applied around each horizontal edge. Finally, the horizontal edges and vertical borders are used to find the bounding boxes of vehicle candidates.
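The edge-point extraction described above can be sketched as follows. This is only an illustration under stated assumptions, not the authors' implementation: the central-difference operator, the threshold rule (mean plus `k` standard deviations of the edge response inside the ROI), the sign convention used to separate positive and negative horizontal edges, and the names `edge_point_maps` and `k` are all hypothetical.

```python
import numpy as np

def edge_point_maps(gray, k=0.5):
    """Return binary (vertical, positive-horizontal, negative-horizontal) edge point maps.

    gray : 2D array holding the ROI intensities.
    k    : hypothetical sensitivity factor of the adaptive threshold.
    """
    gray = np.asarray(gray, dtype=float)
    # Horizontal edges respond to intensity changes between neighboring rows.
    dy = np.zeros_like(gray)
    dy[1:-1, :] = gray[2:, :] - gray[:-2, :]
    # Vertical edges respond to intensity changes between neighboring columns.
    dx = np.zeros_like(gray)
    dx[:, 1:-1] = gray[:, 2:] - gray[:, :-2]

    def adaptive(response):
        # Assumed rule: the threshold follows the statistics of the current ROI.
        return response.mean() + k * response.std()

    mag_dx, mag_dy = np.abs(dx), np.abs(dy)
    vep = mag_dx > adaptive(mag_dx)
    t = adaptive(mag_dy)
    # Assumed sign convention: dy < 0 means the row below is darker than the row above.
    p_hep = (dy > 0) & (mag_dy > t)
    n_hep = (dy < 0) & (mag_dy > t)
    return vep, p_hep, n_hep
```

Because the threshold is recomputed from each ROI, a globally darker or brighter frame shifts the threshold along with the edge responses, which is the kind of brightness adaptation the text refers to.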

The horizontal edges are expected to belong to underneath shadows or vehicle bodies. Considering the influence of sunlight, the horizontal edges belonging to underneath shadows are further divided into moderate and long edges by edge width. The procedures for searching the paired vertical borders are designed according to the specific properties of the different kinds of horizontal edges. In the proposed vehicle detection, three detection methods (Case-1, Case-2, and Case-3) are designed to generate vehicle candidates based on the horizontal edges of moderate underneath shadows, long underneath shadows, and vehicle bodies, respectively. In clear weather with little influence from sunlight, the underneath shadows are distinct and the shadow widths are similar to the vehicle width; the Case-1 method, based on the horizontal edges of moderate underneath shadows, is adequate to generate candidates. In sunny weather with lengthened shadows, it is very likely that only one vertical border is found by the Case-1 method; the Case-2 method is based on the horizontal edges of long underneath shadows and searches for the two corresponding vertical borders in a larger region, as shown in Figure 2. In bad weather disturbed by water spray and reflection, the underneath shadows are unreliable and, in the worst case, not observable; the Case-3 method, based on vehicle bodies, is applied in a different way to recover the cases missed by the Case-1 and Case-2 methods.

Figure 1. Two examples of ROI setting based on the detected lane marks.

Figure 2. An example of the vertical characteristics in a long underneath shadow area.

If the only vertical edge of the NHE is at the right side, it is taken as the right boundary of the window. The left boundaries of all windows are respectively set at the x-positions which are $0.3\,w_{lane}$ to $0.8\,w_{lane}$ away from the right boundaries, and vice versa. The symmetry of the vertical edge points in the window is calculated by

$$\mathrm{symmetry} = \frac{\sum_{j=1}^{h} \sum_{i=1}^{w/2} eng(x_i, y_j)}{0.5\,w\,h}, \tag{1}$$

where $h$ and $w$ are the height and the width of the window, $x_i$ and $y_j$ are the x- and y-locations in the window, and $eng(x_i, y_j)$ is the energy of symmetry at $(x_i, y_j)$ defined by

$$eng(x_i, y_j) = \begin{cases} 1, & \text{if } I_{ep}(x_i, y_j) = I_{ep}(x_{w-i}, y_j) = 1 \\ 0, & \text{otherwise} \end{cases}, \tag{2}$$

where $I_{ep}(x, y)$ is the value of the edge point map $I_{ep}$ at $(x, y)$, and $I_{ep}$ is one of the vertical, positive horizontal, and negative horizontal edge point maps, denoted $I_{vep}$, $I_{p\_hep}$, and $I_{n\_hep}$, respectively.
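The symmetry measure of Equations (1) and (2) can be illustrated with a short sketch. The function name `symmetry` and the 0-based mirroring of column `i` with column `w-1-i` are assumptions made for this example; the exact pairing at the window centre is not specified in the text.

```python
import numpy as np

def symmetry(window):
    """Symmetry score of a binary edge point window, following Eqs. (1)-(2).

    window : 2D array of 0/1 values with shape (h, w).
    """
    window = np.asarray(window).astype(bool)
    h, w = window.shape
    half = w // 2
    left = window[:, :half]
    # Mirror the window so that column i is paired with column w-1-i
    # (0-based indexing; this pairing is an assumption of the sketch).
    right_mirrored = window[:, ::-1][:, :half]
    # eng = 1 only where both the point and its mirror are edge points.
    eng = left & right_mirrored
    return eng.sum() / (0.5 * w * h)
```

A window whose edge points are perfectly mirrored about the vertical centre line and fill both halves scores 1, while a window with edge points on one side only scores 0.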

The horizontal edges in Case-3 are edges of the vehicle body, though the components to which they belong are unknown. The y-positions of these horizontal edges cannot indicate the y-positions of the candidate bottoms; they only give a hint of the vehicle's location. In the image, two vertical edges can be detected at the vehicle sides, which are similar to each other in length and y-position. Therefore, two characteristic vertical edges are extracted from the areas around the two endpoints of the horizontal edge, respectively. The distance and the y-position overlap between the two vertical edges are used to determine the candidate bottom.

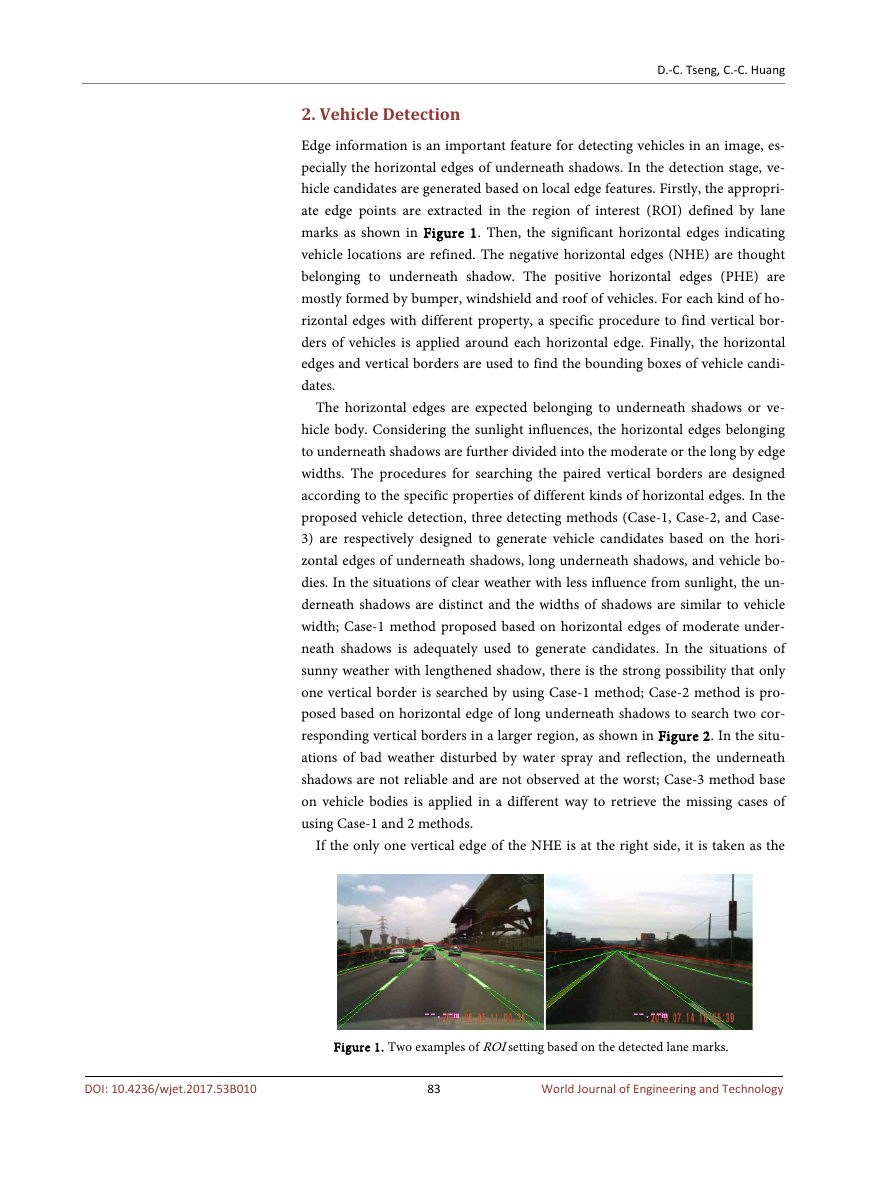

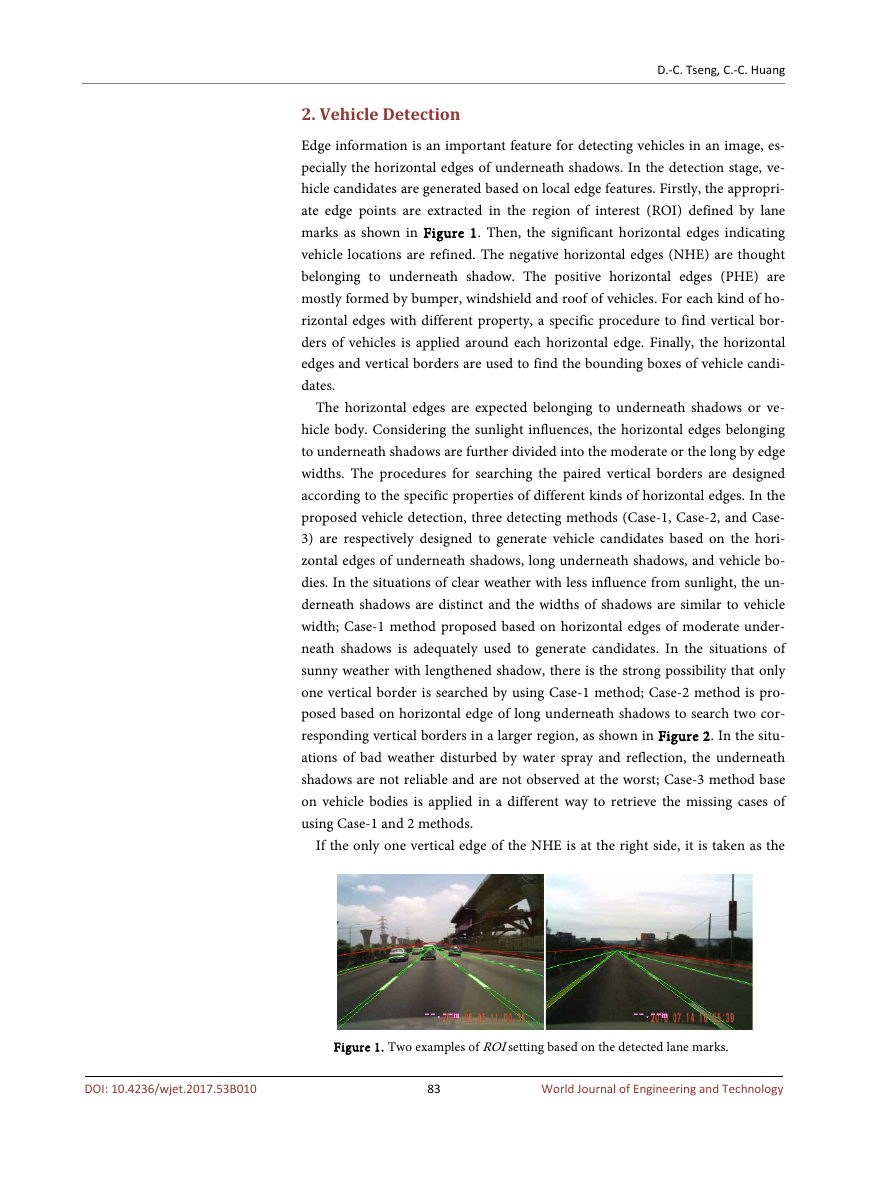

First, the areas for searching the vertical edges are set to extend upward and downward from the horizontal edge endpoints, as shown by the gray regions in Figure 3. According to the candidate bottoms, the images of the vehicle candidates are extracted with a height/width ratio of 1. In the detection result, the candidate locations are represented by “U”-shaped bounding boxes. The bottom of a “U”-shaped bounding box is the candidate bottom. The left and right margins of the bounding box are defined as the vertical line segments from $(x_l, y_l)$ to $(x_l, y_l + w)$ and from $(x_r, y_r)$ to $(x_r, y_r + w)$, respectively, where $w$ is the width of the candidate bottom, that is, $w = x_r - x_l$. Each horizontal edge is examined individually to determine whether it belongs to a vehicle candidate. Around one vehicle target, there might be many bounding boxes set according to horizontal edges at different y-positions. To delete the repetitions, the candidate closest to the host vehicle in a candidate group is reserved for the remaining processes. That is, the bounding box with the smallest y-position in the image is reserved.

Figure 3. The searching areas for vertical edges and the longest vertical edges in each area.
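The “U”-shaped box construction and the repetition removal can be sketched as below. The text does not say how candidates are grouped, so the grouping rule here (horizontal overlap of at least `min_overlap` of the narrower bottom) is purely an assumption, as are the names `Candidate`, `overlap_ratio`, and `remove_repetitions`. The sketch also assumes a horizontal bottom, so a single `y` serves for both ends, and bottoms of non-zero width.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    x_l: float  # left end of the candidate bottom
    x_r: float  # right end of the candidate bottom
    y: float    # y-position of the candidate bottom

    def u_shape(self):
        """Left margin, bottom, and right margin of the U-shaped box."""
        w = self.x_r - self.x_l  # height equals width (ratio 1)
        left = ((self.x_l, self.y), (self.x_l, self.y + w))
        bottom = ((self.x_l, self.y), (self.x_r, self.y))
        right = ((self.x_r, self.y), (self.x_r, self.y + w))
        return left, bottom, right

def overlap_ratio(a, b):
    """Horizontal overlap divided by the narrower bottom width."""
    inter = min(a.x_r, b.x_r) - max(a.x_l, b.x_l)
    return max(0.0, inter) / min(a.x_r - a.x_l, b.x_r - b.x_l)

def remove_repetitions(candidates, min_overlap=0.5):
    """Keep, per group of overlapping candidates, the one with the smallest y."""
    kept = []
    # Visiting candidates in order of increasing y means the first member
    # of each group to be seen is the one closest to the host vehicle.
    for cand in sorted(candidates, key=lambda c: c.y):
        if all(overlap_ratio(cand, k) < min_overlap for k in kept):
            kept.append(cand)
    return kept
```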

3. Vehicle Verification

In the verification stage, the vehicle candidates are verified by applying PCA reconstruction. The reconstruction images are obtained according to the images of pre-trained eigenvehicles. Then, image comparison methods are used to evaluate the likelihood that the vehicle candidates are vehicles.

3.1. The Principle and Procedure of PCA-based Verification

Principal component analysis (PCA) is a statistical technique used to analyze and simplify a data set. Each principal component (eigenvector) is obtained with a corresponding data variance (eigenvalue). The larger the corresponding variance is, the more important the data information contained in the principal component is. To reduce data dimensionality, the principal components are sorted by the corresponding variance, and the original data are projected onto the m principal components with the largest variances. PCA is widely applied to image recognition and compression.

3.2. Eigenvehicle Generation

The images of the eigenvectors obtained by applying PCA to vehicle images are called “eigenvehicles”. To generate a set of eigenvehicles, a training set of preceding-vehicle images cropped from on-road driving videos is prepared. The on-road driving videos are captured under various weather conditions; the training images are collected with various vehicle types, at various distances from the host vehicle, and in the host lane and adjacent lanes. The cropped images of preceding vehicles are first resized to 32 × 32. For each resized image, the edge map is produced and then normalized so that the sum of the edge values equals 1. The normalized edge maps used as the training images are represented as vectors $\Gamma_1, \Gamma_2, \ldots, \Gamma_M$ of size $N$, where $M$ is the number of training images and $N = 32 \times 32$.
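A minimal sketch of eigenvehicle training from normalized edge maps is given below. It assumes the edge maps are already computed and resized; using an SVD of the centered data instead of an explicit covariance eigen-decomposition is a choice made for this example, and the names `normalize_edge_map` and `train_eigenvehicles` are hypothetical.

```python
import numpy as np

def normalize_edge_map(edge_map):
    """Flatten an edge map and scale it so that its values sum to 1."""
    v = np.asarray(edge_map, dtype=float).ravel()
    total = v.sum()
    return v / total if total > 0 else v

def train_eigenvehicles(edge_maps, n_components):
    """Return (mean vector, eigenvehicles as rows, eigenvalues), sorted by variance."""
    gammas = np.stack([normalize_edge_map(e) for e in edge_maps])  # shape (M, N)
    psi = gammas.mean(axis=0)
    centered = gammas - psi
    # The right singular vectors of the centered data are the eigenvectors of
    # its covariance matrix, obtained without forming the N x N matrix.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    eigenvalues = s ** 2 / len(gammas)
    return psi, vt[:n_components], eigenvalues[:n_components]
```

Each returned row can be reshaped to 32 × 32 and displayed as an image, which is how a set of leading eigenvehicles would typically be visualized.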

Only the leading eigenvehicles are applied in the verification of vehicle candidates. The sixteen leading eigenvehicles, those with the largest eigenvalues, are shown in Figure 4.

Eigenvehicles are easily influenced by background content, foreground size, illumination variation, and imaging orientation. To solve most of these problems, the original vehicle images of the training set are manually identified and segmented to fit the vehicle contour. The color and illumination factors are also eliminated by using the edge map and applying normalization to it.

3.3. Evaluation by the Reconstruction Error

For each vehicle candidate, the original image is first resized to 32 × 32 and transformed into a normalized edge map $\Gamma$. The mean image $\Psi$ is subtracted from the edge map $\Gamma$, and the difference is then projected onto the $M'$ leading eigenvehicles. Each projection weight is calculated by

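$$w_i = u_i^T (\Gamma - \Psi), \tag{3}$$

where $u_i$ is the $i$-th eigenvehicle and $i = 1, 2, \ldots, M'$. The reconstruction image $\hat{\Gamma}$ of the normalized edge map $\Gamma$ is then obtained by

$$\hat{\Gamma} = \sum_{i=1}^{M'} w_i u_i + \Psi. \tag{4}$$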

The vehicle space is the subspace spanned by the eigenvehicles, and the reconstruction image is the closest point to $\Gamma$ in the vehicle space. The reconstruction error of $\Gamma$ can be calculated simply by the Euclidean distance (ED),

$$d = \lVert \Gamma - \hat{\Gamma} \rVert. \tag{5}$$
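The projection, reconstruction, and Euclidean reconstruction error of Equations (3) to (5) can be sketched in a few lines. The function name `reconstruction_error` and the array layout (eigenvehicles stored as orthonormal rows) are assumptions of this example.

```python
import numpy as np

def reconstruction_error(gamma, psi, eigenvehicles):
    """Project gamma (Eq. 3), rebuild it (Eq. 4), and return (gamma_hat, ED) (Eq. 5).

    gamma         : normalized edge map as a vector of size N.
    psi           : mean vector of size N.
    eigenvehicles : array of shape (M', N) whose rows u_i are orthonormal.
    """
    diff = gamma - psi
    weights = eigenvehicles @ diff               # w_i = u_i^T (gamma - psi)
    gamma_hat = eigenvehicles.T @ weights + psi  # sum_i w_i u_i + psi
    return gamma_hat, float(np.linalg.norm(gamma - gamma_hat))
```

A candidate lying inside the vehicle space is reconstructed almost exactly, so its distance is close to zero; any component orthogonal to the eigenvehicles is lost in the reconstruction and appears as error. A candidate would then be accepted when the distance falls below a threshold chosen by the user.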

Figure 4. The top 16 eigenvehicles of the training set.

The reconstruction images are usually contaminated by considerable noise. For a more accurate comparison between $\Gamma$ and $\hat{\Gamma}$, the comparison method should be more robust to noise and neighboring variations. The images can be regarded as 3D models by taking the intensity at the image coordinate (x, y) to be the height of the model bin (x, y). The 3D models can be compared to each other by a cross-bin comparison measurement such as the quadratic-form distance [8], the Earth Mover’s Distance (EMD) [9], or the diffused distance (DD) [10]. The Earth Mover’s Distance is calculated as

$$\mathrm{EMD}(H_1, H_2) = \frac{\sum_{i=1}^{m} \sum_{j=1}^{n} d_{ij} f_{ij}}{\sum_{i=1}^{m} \sum_{j=1}^{n} f_{ij}}, \tag{6}$$

where $H_1$ and $H_2$ are the two compared histograms, $f_{ij}$ is the work flow from bin $i$ to bin $j$, and $d_{ij}$ is the ground distance between bins $i$ and $j$. The diffused distance is calculated by

$$K(H_1, H_2) = \sum_{l=0}^{L} \lvert d_l(x) \rvert, \tag{7}$$

where $d_0(x) = H_1(x) - H_2(x)$ and $d_l(x) = [d_{l-1}(x) * \phi(x, \sigma)] \downarrow_2$ are different layers of the diffused temperature field with $l = 1, 2, \ldots, L$, $\phi(x, \sigma)$ is a Gaussian filter of standard deviation $\sigma$, and the notation “$\downarrow_2$” denotes half-size down-sampling.
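The two cross-bin measurements can be illustrated with a small sketch. Several simplifications are assumed here and are not taken from the paper: `emd_1d` covers only the special case of 1D histograms with equal total mass and ground distance |i - j|, where the optimal flow cost equals the L1 distance between the cumulative histograms; `diffusion_distance` takes the L1 norm of each layer, uses zero padding at the borders, and has hypothetical default values for `levels` and `sigma`.

```python
import numpy as np

def emd_1d(h1, h2):
    """EMD between two 1D histograms of equal total mass, ground distance |i - j|."""
    h1 = np.asarray(h1, dtype=float)
    h2 = np.asarray(h2, dtype=float)
    # Cost of the optimal flow, divided by the total flow as in Eq. (6).
    return float(np.abs(np.cumsum(h1 - h2)).sum() / h1.sum())

def gaussian_kernel(sigma):
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    return k / k.sum()

def smooth(field, sigma):
    """Separable Gaussian smoothing of a 2D field with zero padding."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2

    def conv(v):
        # Padding by r and using "valid" keeps the output as long as the input.
        return np.convolve(np.pad(v, r), k, mode="valid")

    rows = np.apply_along_axis(conv, 1, field)
    return np.apply_along_axis(conv, 0, rows)

def diffusion_distance(h1, h2, levels=3, sigma=0.5):
    """Sum of the L1 norms of the layers d_0 ... d_L, in the spirit of Eq. (7)."""
    d = np.asarray(h1, dtype=float) - np.asarray(h2, dtype=float)
    total = np.abs(d).sum()
    for _ in range(levels):
        d = smooth(d, sigma)[::2, ::2]  # Gaussian filtering, then half-size down-sampling
        total += np.abs(d).sum()
    return float(total)
```

Both measures grow with the spatial displacement between two patterns, whereas a bin-by-bin Euclidean distance treats a one-pixel shift and a large shift alike; this is the robustness to neighboring variations mentioned above.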

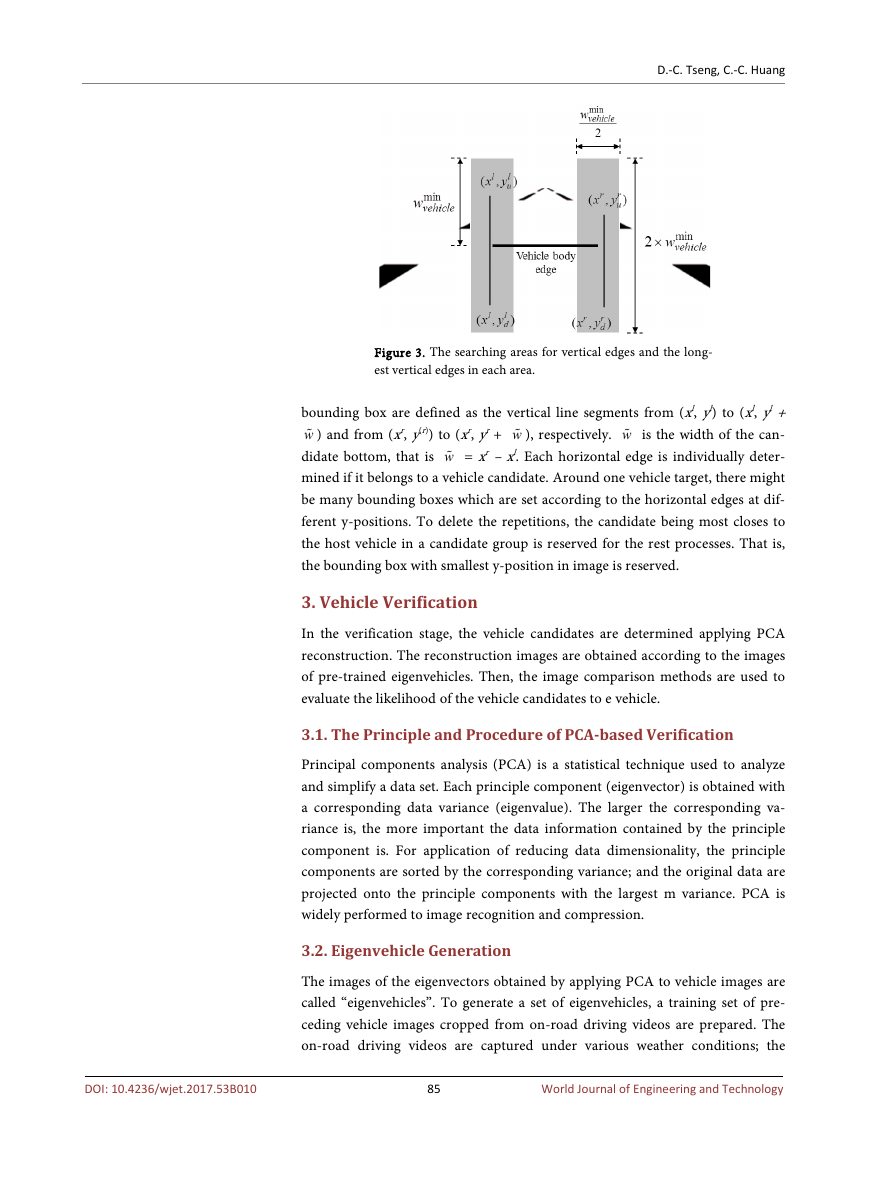

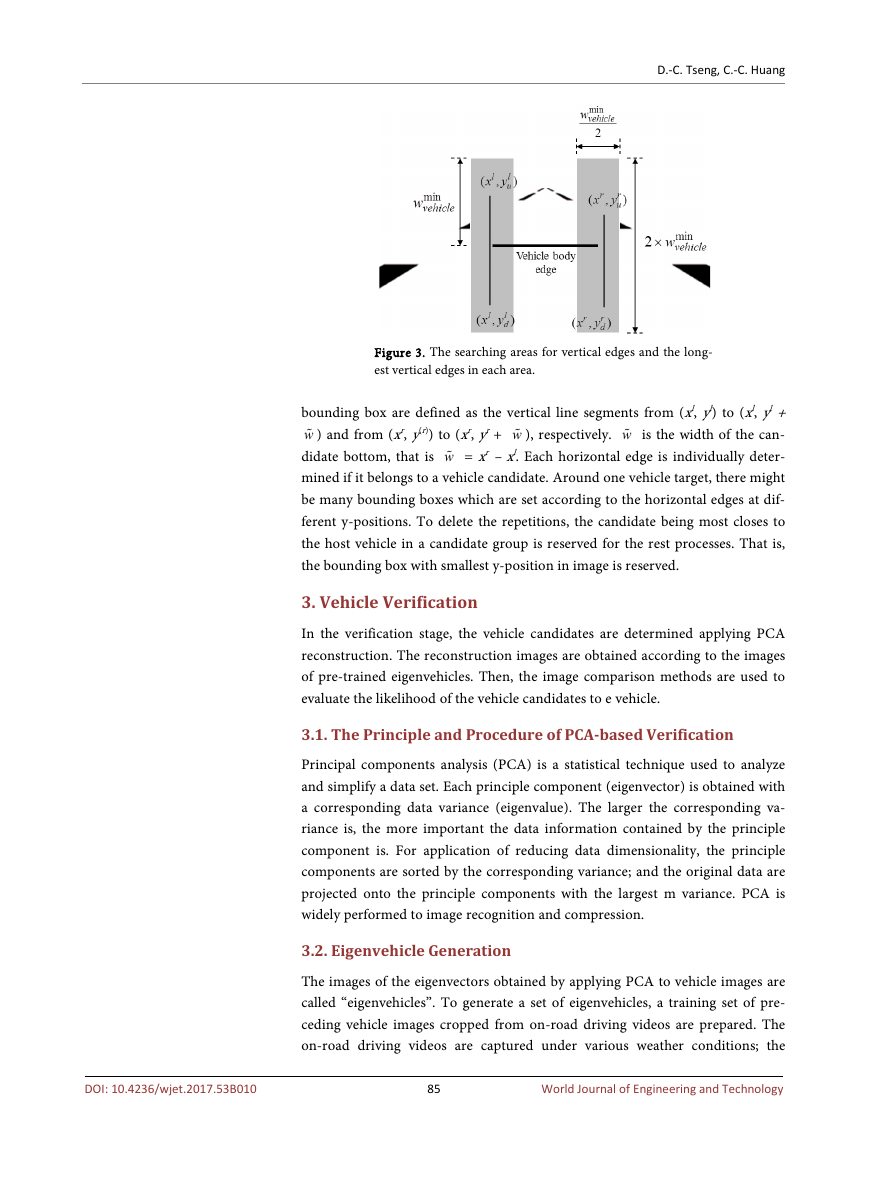

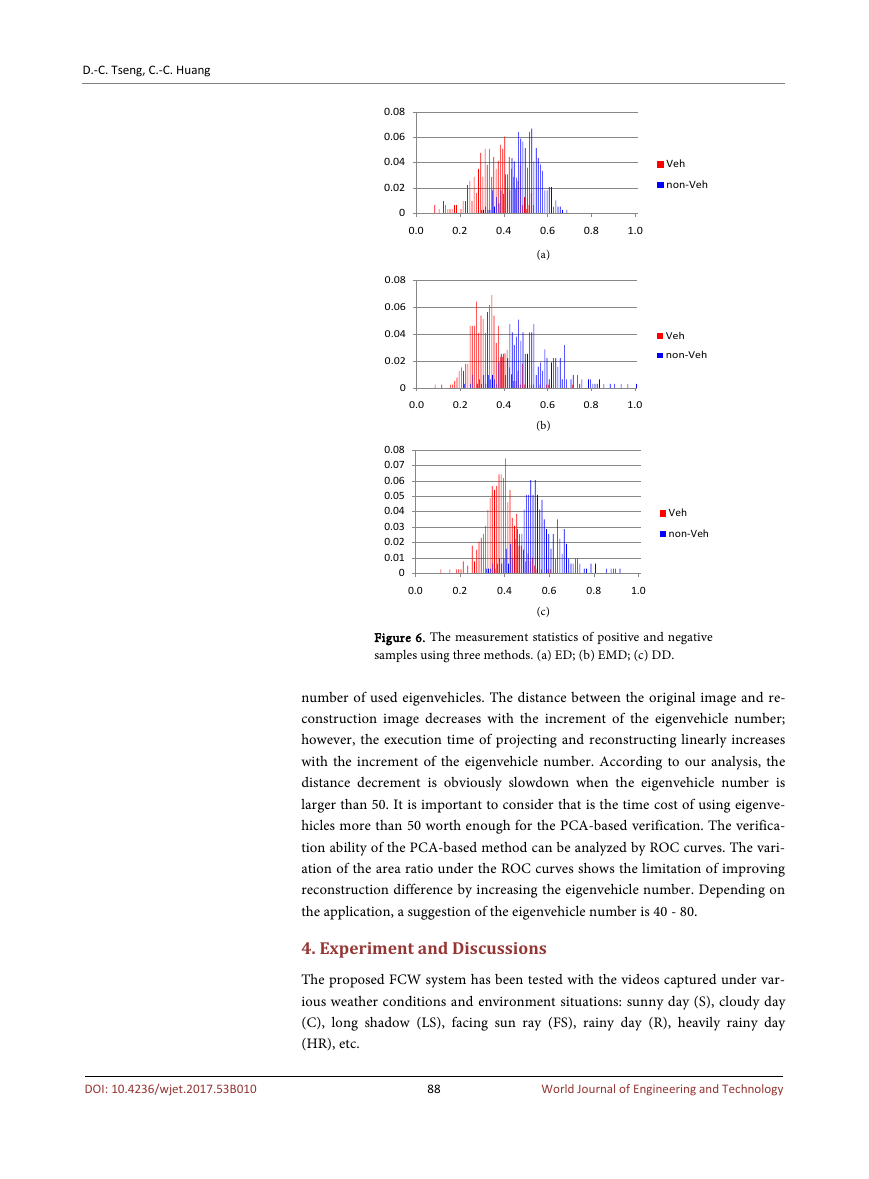

The recognition abilities of ED, EMD, and DD can be compared by receiver operating characteristic (ROC) curves to decide the appropriate measurement for the proposed vehicle verification, as shown in Figure 5. Each ROC curve is evaluated with 500 positive and 500 negative samples. The ROC curves are plotted with the false positive rate on the x-axis and the true positive rate on the y-axis by changing the threshold for judging a sample as a vehicle. The statistics of positive and negative samples measured using ED, EMD, and DD are shown in Figure 6, where the x-axis is the measured distance and the y-axis is the accumulation of samples. The threshold on the measured distance is swept from the minimum to the maximum distance value to calculate the ratios of positive and negative samples recognized as vehicles.
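The threshold sweep that produces an ROC curve can be sketched as follows. The function names and the decision rule (a sample is judged a vehicle when its distance is at most the threshold) are assumptions of this example; the sample distances would come from ED, EMD, or DD.

```python
def roc_curve(pos_dist, neg_dist):
    """Return (fpr, tpr) lists obtained by sweeping the distance threshold.

    pos_dist : distances measured for vehicle samples.
    neg_dist : distances measured for non-vehicle samples.
    """
    thresholds = sorted(set(pos_dist) | set(neg_dist))
    fpr, tpr = [0.0], [0.0]
    for t in thresholds:
        # A sample is accepted as a vehicle when its distance is <= t.
        tpr.append(sum(d <= t for d in pos_dist) / len(pos_dist))
        fpr.append(sum(d <= t for d in neg_dist) / len(neg_dist))
    return fpr, tpr

def area_under_curve(fpr, tpr):
    """Trapezoidal area under the ROC curve."""
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2.0
               for i in range(1, len(fpr)))
```

An area close to 1 means the distance separates vehicles from non-vehicles well, while an area near 0.5 means it does no better than chance.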

Figure 5. The ROC curves of the distance measurements: ED, EMD, and DD.

Figure 6. The measurement statistics of positive and negative samples (Veh and non-Veh) using three methods. (a) ED; (b) EMD; (c) DD.

The verification based on PCA reconstruction is strongly influenced by the number of eigenvehicles used. The distance between the original image and the reconstruction image decreases as the number of eigenvehicles increases; however, the execution time of projection and reconstruction increases linearly with the number of eigenvehicles. According to our analysis, the decrease in distance slows markedly when the number of eigenvehicles exceeds 50. It is therefore important to consider whether the time cost of using more than 50 eigenvehicles is worthwhile for the PCA-based verification. The verification ability of the PCA-based method can be analyzed by ROC curves. The variation of the area ratio under the ROC curves shows the limit of improving the reconstruction difference by increasing the number of eigenvehicles. Depending on the application, the suggested number of eigenvehicles is 40 to 80.
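The trade-off between the number of eigenvehicles and the reconstruction distance can be explored with a sketch like the one below. It assumes eigenvehicles stored as orthonormal rows sorted by decreasing eigenvalue; the function name `mean_error_per_count` is hypothetical, and the sketch does not reproduce the paper's measurements.

```python
import numpy as np

def mean_error_per_count(samples, psi, eigenvehicles, counts):
    """Mean Euclidean reconstruction error for each number of leading eigenvehicles.

    samples       : array (K, N) of normalized edge maps.
    psi           : mean vector of size N.
    eigenvehicles : array (M, N), orthonormal rows sorted by decreasing eigenvalue.
    counts        : iterable of eigenvehicle numbers to evaluate.
    """
    diffs = samples - psi
    weights = diffs @ eigenvehicles.T  # all projection weights at once
    errors = []
    for m in counts:
        # Reconstruct with only the m leading components.
        recon = weights[:, :m] @ eigenvehicles[:m]
        errors.append(float(np.linalg.norm(diffs - recon, axis=1).mean()))
    return errors
```

Plotting the returned errors against `counts` shows where the curve flattens; since projection and reconstruction each cost on the order of m × N operations per candidate, the flattening point indicates where extra eigenvehicles stop paying for their run time.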

4. Experiment and Discussions

The proposed FCW system has been tested with videos captured under various weather conditions and environmental situations: sunny day (S), cloudy day (C), long shadow (LS), facing sun rays (FS), rainy day (R), heavily rainy day (HR), etc.
