4

1

0

2

t

c

O

8

]

E

N

.

s

c

[

4

v

8

2

8

7

.

4

0

4

1

:

v

i

X

r

a

Deep Learning in Neural Networks: An Overview

Technical Report IDSIA-03-14 / arXiv:1404.7828 v4 [cs.NE] (88 pages, 888 references)

J¨urgen Schmidhuber

The Swiss AI Lab IDSIA

Istituto Dalle Molle di Studi sull’Intelligenza Artificiale

University of Lugano & SUPSI

Galleria 2, 6928 Manno-Lugano

Switzerland

8 October 2014

Abstract

In recent years, deep artificial neural networks (including recurrent ones) have won numerous

contests in pattern recognition and machine learning. This historical survey compactly summarises

relevant work, much of it from the previous millennium. Shallow and deep learners are distin-

guished by the depth of their credit assignment paths, which are chains of possibly learnable, causal

links between actions and effects. I review deep supervised learning (also recapitulating the history

of backpropagation), unsupervised learning, reinforcement learning & evolutionary computation,

and indirect search for short programs encoding deep and large networks.

LATEX source: http://www.idsia.ch/˜juergen/DeepLearning8Oct2014.tex

Complete BIBTEX file (888 kB): http://www.idsia.ch/˜juergen/deep.bib

Preface

This is the preprint of an invited Deep Learning (DL) overview. One of its goals is to assign credit

to those who contributed to the present state of the art. I acknowledge the limitations of attempting

to achieve this goal. The DL research community itself may be viewed as a continually evolving,

deep network of scientists who have influenced each other in complex ways. Starting from recent DL

results, I tried to trace back the origins of relevant ideas through the past half century and beyond,

sometimes using “local search” to follow citations of citations backwards in time. Since not all DL

publications properly acknowledge earlier relevant work, additional global search strategies were em-

ployed, aided by consulting numerous neural network experts. As a result, the present preprint mostly

consists of references. Nevertheless, through an expert selection bias I may have missed important

work. A related bias was surely introduced by my special familiarity with the work of my own DL

research group in the past quarter-century. For these reasons, this work should be viewed as merely a

snapshot of an ongoing credit assignment process. To help improve it, please do not hesitate to send

corrections and suggestions to juergen@idsia.ch.

1

�

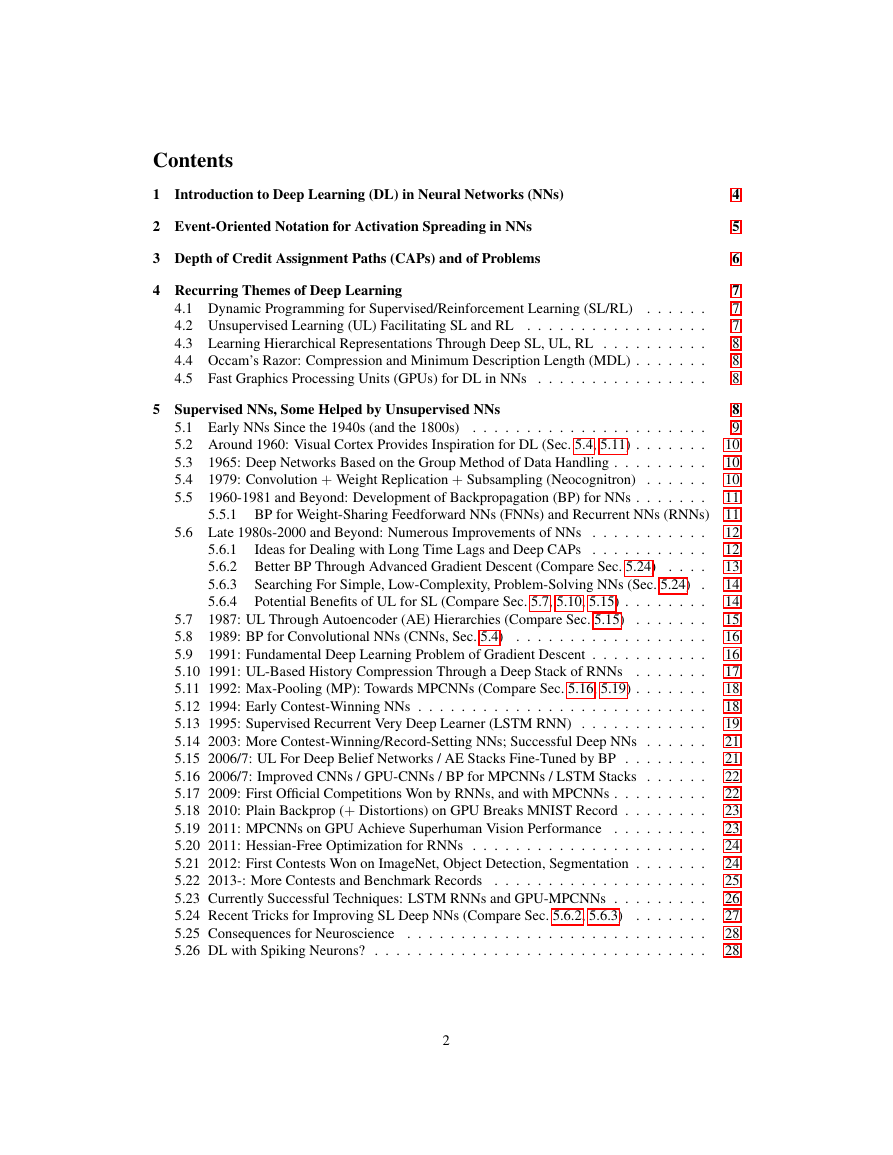

Contents

1 Introduction to Deep Learning (DL) in Neural Networks (NNs)

2 Event-Oriented Notation for Activation Spreading in NNs

3 Depth of Credit Assignment Paths (CAPs) and of Problems

4 Recurring Themes of Deep Learning

4.1 Dynamic Programming for Supervised/Reinforcement Learning (SL/RL)

. . . . . .

4.2 Unsupervised Learning (UL) Facilitating SL and RL . . . . . . . . . . . . . . . . .

4.3 Learning Hierarchical Representations Through Deep SL, UL, RL . . . . . . . . . .

4.4 Occam’s Razor: Compression and Minimum Description Length (MDL) . . . . . . .

4.5 Fast Graphics Processing Units (GPUs) for DL in NNs . . . . . . . . . . . . . . . .

5 Supervised NNs, Some Helped by Unsupervised NNs

.

5.1 Early NNs Since the 1940s (and the 1800s)

. . . . . . . . . . . . . . . . . . . . . .

5.2 Around 1960: Visual Cortex Provides Inspiration for DL (Sec. 5.4, 5.11) . . . . . . .

1965: Deep Networks Based on the Group Method of Data Handling . . . . . . . . .

5.3

5.4

1979: Convolution + Weight Replication + Subsampling (Neocognitron)

. . . . .

1960-1981 and Beyond: Development of Backpropagation (BP) for NNs . . . . . . .

5.5

5.5.1 BP for Weight-Sharing Feedforward NNs (FNNs) and Recurrent NNs (RNNs)

5.6 Late 1980s-2000 and Beyond: Numerous Improvements of NNs . . . . . . . . . . .

Ideas for Dealing with Long Time Lags and Deep CAPs . . . . . . . . . . .

5.6.1

. . . .

5.6.2 Better BP Through Advanced Gradient Descent (Compare Sec. 5.24)

Searching For Simple, Low-Complexity, Problem-Solving NNs (Sec. 5.24)

.

5.6.3

Potential Benefits of UL for SL (Compare Sec. 5.7, 5.10, 5.15) . . . . . . . .

5.6.4

. . . . . . .

1987: UL Through Autoencoder (AE) Hierarchies (Compare Sec. 5.15)

5.7

1989: BP for Convolutional NNs (CNNs, Sec. 5.4)

. . . . . . . . . . . . . . . . . .

5.8

1991: Fundamental Deep Learning Problem of Gradient Descent . . . . . . . . . . .

5.9

5.10 1991: UL-Based History Compression Through a Deep Stack of RNNs

. . . . . . .

5.11 1992: Max-Pooling (MP): Towards MPCNNs (Compare Sec. 5.16, 5.19) . . . . . . .

5.12 1994: Early Contest-Winning NNs . . . . . . . . . . . . . . . . . . . . . . . . . . .

5.13 1995: Supervised Recurrent Very Deep Learner (LSTM RNN)

. . . . . . . . . . . .

5.14 2003: More Contest-Winning/Record-Setting NNs; Successful Deep NNs . . . . . .

5.15 2006/7: UL For Deep Belief Networks / AE Stacks Fine-Tuned by BP . . . . . . . .

5.16 2006/7: Improved CNNs / GPU-CNNs / BP for MPCNNs / LSTM Stacks . . . . . .

5.17 2009: First Official Competitions Won by RNNs, and with MPCNNs . . . . . . . . .

5.18 2010: Plain Backprop (+ Distortions) on GPU Breaks MNIST Record . . . . . . . .

5.19 2011: MPCNNs on GPU Achieve Superhuman Vision Performance . . . . . . . . .

5.20 2011: Hessian-Free Optimization for RNNs . . . . . . . . . . . . . . . . . . . . . .

5.21 2012: First Contests Won on ImageNet, Object Detection, Segmentation . . . . . . .

5.22 2013-: More Contests and Benchmark Records

. . . . . . . . . . . . . . . . . . . .

5.23 Currently Successful Techniques: LSTM RNNs and GPU-MPCNNs . . . . . . . . .

5.24 Recent Tricks for Improving SL Deep NNs (Compare Sec. 5.6.2, 5.6.3)

. . . . . . .

5.25 Consequences for Neuroscience . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5.26 DL with Spiking Neurons? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4

5

6

7

7

7

8

8

8

8

9

10

10

10

11

11

12

12

13

14

14

15

16

16

17

18

18

19

21

21

22

22

23

23

24

24

25

26

27

28

28

2

�

6 DL in FNNs and RNNs for Reinforcement Learning (RL)

6.1 RL Through NN World Models Yields RNNs With Deep CAPs . . . . . . . . . . . .

6.2 Deep FNNs for Traditional RL and Markov Decision Processes (MDPs) . . . . . . .

6.3 Deep RL RNNs for Partially Observable MDPs (POMDPs) . . . . . . . . . . . . . .

6.4 RL Facilitated by Deep UL in FNNs and RNNs . . . . . . . . . . . . . . . . . . . .

6.5 Deep Hierarchical RL (HRL) and Subgoal Learning with FNNs and RNNs . . . . . .

6.6 Deep RL by Direct NN Search / Policy Gradients / Evolution . . . . . . . . . . . . .

6.7 Deep RL by Indirect Policy Search / Compressed NN Search . . . . . . . . . . . . .

6.8 Universal RL .

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

.

.

.

.

.

29

29

30

31

31

31

32

33

33

34

35

7 Conclusion and Outlook

8 Acknowledgments

Abbreviations in Alphabetical Order

AE: Autoencoder

AI: Artificial Intelligence

ANN: Artificial Neural Network

BFGS: Broyden-Fletcher-Goldfarb-Shanno

BNN: Biological Neural Network

BM: Boltzmann Machine

BP: Backpropagation

BRNN: Bi-directional Recurrent Neural Network

CAP: Credit Assignment Path

CEC: Constant Error Carousel

CFL: Context Free Language

CMA-ES: Covariance Matrix Estimation ES

CNN: Convolutional Neural Network

CoSyNE: Co-Synaptic Neuro-Evolution

CSL: Context Senistive Language

CTC : Connectionist Temporal Classification

DBN: Deep Belief Network

DCT: Discrete Cosine Transform

DL: Deep Learning

DP: Dynamic Programming

DS: Direct Policy Search

EA: Evolutionary Algorithm

EM: Expectation Maximization

ES: Evolution Strategy

FMS: Flat Minimum Search

FNN: Feedforward Neural Network

FSA: Finite State Automaton

GMDH: Group Method of Data Handling

GOFAI: Good Old-Fashioned AI

GP: Genetic Programming

GPU: Graphics Processing Unit

GPU-MPCNN: GPU-Based MPCNN

HMM: Hidden Markov Model

HRL: Hierarchical Reinforcement Learning

HTM: Hierarchical Temporal Memory

HMAX: Hierarchical Model “and X”

LSTM: Long Short-Term Memory (RNN)

MDL: Minimum Description Length

MDP: Markov Decision Process

MNIST: Mixed National Institute of Standards

and Technology Database

MP: Max-Pooling

MPCNN: Max-Pooling CNN

NE: NeuroEvolution

NEAT: NE of Augmenting Topologies

NES: Natural Evolution Strategies

NFQ: Neural Fitted Q-Learning

NN: Neural Network

OCR: Optical Character Recognition

PCC: Potential Causal Connection

PDCC: Potential Direct Causal Connection

PM: Predictability Minimization

POMDP: Partially Observable MDP

RAAM: Recursive Auto-Associative Memory

RBM: Restricted Boltzmann Machine

ReLU: Rectified Linear Unit

RL: Reinforcement Learning

RNN: Recurrent Neural Network

R-prop: Resilient Backpropagation

SL: Supervised Learning

SLIM NN: Self-Delimiting Neural Network

SOTA: Self-Organising Tree Algorithm

SVM: Support Vector Machine

TDNN: Time-Delay Neural Network

TIMIT: TI/SRI/MIT Acoustic-Phonetic Continu-

ous Speech Corpus

UL: Unsupervised Learning

WTA: Winner-Take-All

3

�

1 Introduction to Deep Learning (DL) in Neural Networks (NNs)

Which modifiable components of a learning system are responsible for its success or failure? What

changes to them improve performance? This has been called the fundamental credit assignment prob-

lem (Minsky, 1963). There are general credit assignment methods for universal problem solvers that

are time-optimal in various theoretical senses (Sec. 6.8). The present survey, however, will focus on

the narrower, but now commercially important, subfield of Deep Learning (DL) in Artificial Neural

Networks (NNs).

A standard neural network (NN) consists of many simple, connected processors called neurons,

each producing a sequence of real-valued activations. Input neurons get activated through sensors per-

ceiving the environment, other neurons get activated through weighted connections from previously

active neurons (details in Sec. 2). Some neurons may influence the environment by triggering actions.

Learning or credit assignment is about finding weights that make the NN exhibit desired behavior,

such as driving a car. Depending on the problem and how the neurons are connected, such behavior

may require long causal chains of computational stages (Sec. 3), where each stage transforms (of-

ten in a non-linear way) the aggregate activation of the network. Deep Learning is about accurately

assigning credit across many such stages.

Shallow NN-like models with few such stages have been around for many decades if not centuries

(Sec. 5.1). Models with several successive nonlinear layers of neurons date back at least to the 1960s

(Sec. 5.3) and 1970s (Sec. 5.5). An efficient gradient descent method for teacher-based Supervised

Learning (SL) in discrete, differentiable networks of arbitrary depth called backpropagation (BP) was

developed in the 1960s and 1970s, and applied to NNs in 1981 (Sec. 5.5). BP-based training of deep

NNs with many layers, however, had been found to be difficult in practice by the late 1980s (Sec. 5.6),

and had become an explicit research subject by the early 1990s (Sec. 5.9). DL became practically fea-

sible to some extent through the help of Unsupervised Learning (UL), e.g., Sec. 5.10 (1991), Sec. 5.15

(2006). The 1990s and 2000s also saw many improvements of purely supervised DL (Sec. 5). In the

new millennium, deep NNs have finally attracted wide-spread attention, mainly by outperforming al-

ternative machine learning methods such as kernel machines (Vapnik, 1995; Sch¨olkopf et al., 1998)

in numerous important applications. In fact, since 2009, supervised deep NNs have won many official

international pattern recognition competitions (e.g., Sec. 5.17, 5.19, 5.21, 5.22), achieving the first

superhuman visual pattern recognition results in limited domains (Sec. 5.19, 2011). Deep NNs also

have become relevant for the more general field of Reinforcement Learning (RL) where there is no

supervising teacher (Sec. 6).

Both feedforward (acyclic) NNs (FNNs) and recurrent (cyclic) NNs (RNNs) have won contests

(Sec. 5.12, 5.14, 5.17, 5.19, 5.21, 5.22). In a sense, RNNs are the deepest of all NNs (Sec. 3)—they

are general computers more powerful than FNNs, and can in principle create and process memories

of arbitrary sequences of input patterns (e.g., Siegelmann and Sontag, 1991; Schmidhuber, 1990a).

Unlike traditional methods for automatic sequential program synthesis (e.g., Waldinger and Lee, 1969;

Balzer, 1985; Soloway, 1986; Deville and Lau, 1994), RNNs can learn programs that mix sequential

and parallel information processing in a natural and efficient way, exploiting the massive parallelism

viewed as crucial for sustaining the rapid decline of computation cost observed over the past 75 years.

The rest of this paper is structured as follows. Sec. 2 introduces a compact, event-oriented notation

that is simple yet general enough to accommodate both FNNs and RNNs. Sec. 3 introduces the

concept of Credit Assignment Paths (CAPs) to measure whether learning in a given NN application is

of the deep or shallow type. Sec. 4 lists recurring themes of DL in SL, UL, and RL. Sec. 5 focuses

on SL and UL, and on how UL can facilitate SL, although pure SL has become dominant in recent

competitions (Sec. 5.17–5.23). Sec. 5 is arranged in a historical timeline format with subsections on

important inspirations and technical contributions. Sec. 6 on deep RL discusses traditional Dynamic

Programming (DP)-based RL combined with gradient-based search techniques for SL or UL in deep

4

�

NNs, as well as general methods for direct and indirect search in the weight space of deep FNNs and

RNNs, including successful policy gradient and evolutionary methods.

2 Event-Oriented Notation for Activation Spreading in NNs

Throughout this paper, let i, j, k, t, p, q, r denote positive integer variables assuming ranges implicit

in the given contexts. Let n, m, T denote positive integer constants.

An NN’s topology may change over time (e.g., Sec. 5.3, 5.6.3). At any given moment, it can

be described as a finite subset of units (or nodes or neurons) N = {u1, u2, . . . ,} and a finite set

H ⊆ N × N of directed edges or connections between nodes. FNNs are acyclic graphs, RNNs cyclic.

The first (input) layer is the set of input units, a subset of N. In FNNs, the k-th layer (k > 1) is the set

of all nodes u ∈ N such that there is an edge path of length k − 1 (but no longer path) between some

input unit and u. There may be shortcut connections between distant layers. In sequence-processing,

fully connected RNNs, all units have connections to all non-input units.

The NN’s behavior or program is determined by a set of real-valued, possibly modifiable, param-

eters or weights wi (i = 1, . . . , n). We now focus on a single finite episode or epoch of information

processing and activation spreading, without learning through weight changes. The following slightly

unconventional notation is designed to compactly describe what is happening during the runtime of

the system.

ft(nett) with real-valued nett =

During an episode, there is a partially causal sequence xt(t = 1, . . . , T ) of real values that I call

events. Each xt is either an input set by the environment, or the activation of a unit that may directly

depend on other xk(k < t) through a current NN topology-dependent set int of indices k representing

incoming causal connections or links. Let the function v encode topology information and map such

event index pairs (k, t) to weight indices. For example, in the non-input case we may have xt =

xkwv(k,t)

(multiplicative case), where ft is a typically nonlinear real-valued activation function such as tanh.

In many recent competition-winning NNs (Sec. 5.19, 5.21, 5.22) there also are events of the type

xt = maxk∈int(xk); some network types may also use complex polynomial activation functions

(Sec. 5.3). xt may directly affect certain xk(k > t) through outgoing connections or links represented

through a current set outt of indices k with t ∈ ink. Some of the non-input events are called output

events.

xkwv(k,t) (additive case) or nett =

k∈int

k∈int

Note that many of the xt may refer to different, time-varying activations of the same unit in

sequence-processing RNNs (e.g., Williams, 1989, “unfolding in time”), or also in FNNs sequentially

exposed to time-varying input patterns of a large training set encoded as input events. During an

episode, the same weight may get reused over and over again in topology-dependent ways, e.g., in

RNNs, or in convolutional NNs (Sec. 5.4, 5.8). I call this weight sharing across space and/or time.

Weight sharing may greatly reduce the NN’s descriptive complexity, which is the number of bits of

information required to describe the NN (Sec. 4.4).

In Supervised Learning (SL), certain NN output events xt may be associated with teacher-given,

real-valued labels or targets dt yielding errors et, e.g., et = 1/2(xt−dt)2. A typical goal of supervised

NN training is to find weights that yield episodes with small total error E, the sum of all such et. The

hope is that the NN will generalize well in later episodes, causing only small errors on previously

unseen sequences of input events. Many alternative error functions for SL and UL are possible.

SL assumes that input events are independent of earlier output events (which may affect the en-

vironment through actions causing subsequent perceptions). This assumption does not hold in the

broader fields of Sequential Decision Making and Reinforcement Learning (RL) (Kaelbling et al.,

1996; Sutton and Barto, 1998; Hutter, 2005; Wiering and van Otterlo, 2012) (Sec. 6). In RL, some

of the input events may encode real-valued reward signals given by the environment, and a typical

5

�

goal is to find weights that yield episodes with a high sum of reward signals, through sequences of

appropriate output actions.

Sec. 5.5 will use the notation above to compactly describe a central algorithm of DL, namely,

backpropagation (BP) for supervised weight-sharing FNNs and RNNs. (FNNs may be viewed as

RNNs with certain fixed zero weights.) Sec. 6 will address the more general RL case.

3 Depth of Credit Assignment Paths (CAPs) and of Problems

To measure whether credit assignment in a given NN application is of the deep or shallow type, I

introduce the concept of Credit Assignment Paths or CAPs, which are chains of possibly causal links

between the events of Sec. 2, e.g., from input through hidden to output layers in FNNs, or through

transformations over time in RNNs.

Let us first focus on SL. Consider two events xp and xq (1 ≤ p < q ≤ T ). Depending on the

application, they may have a Potential Direct Causal Connection (PDCC) expressed by the Boolean

predicate pdcc(p, q), which is true if and only if p ∈ inq. Then the 2-element list (p, q) is defined to

be a CAP (a minimal one) from p to q. A learning algorithm may be allowed to change wv(p,q) to

improve performance in future episodes.

More general, possibly indirect, Potential Causal Connections (PCC) are expressed by the re-

cursively defined Boolean predicate pcc(p, q), which in the SL case is true only if pdcc(p, q), or if

pcc(p, k) for some k and pdcc(k, q). In the latter case, appending q to any CAP from p to k yields a

CAP from p to q (this is a recursive definition, too). The set of such CAPs may be large but is finite.

Note that the same weight may affect many different PDCCs between successive events listed by a

given CAP, e.g., in the case of RNNs, or weight-sharing FNNs.

Suppose a CAP has the form (. . . , k, t, . . . , q), where k and t (possibly t = q) are the first succes-

sive elements with modifiable wv(k,t). Then the length of the suffix list (t, . . . , q) is called the CAP’s

depth (which is 0 if there are no modifiable links at all). This depth limits how far backwards credit

assignment can move down the causal chain to find a modifiable weight.1

Suppose an episode and its event sequence x1, . . . , xT satisfy a computable criterion used to

decide whether a given problem has been solved (e.g., total error E below some threshold). Then

the set of used weights is called a solution to the problem, and the depth of the deepest CAP within

the sequence is called the solution depth. There may be other solutions (yielding different event

sequences) with different depths. Given some fixed NN topology, the smallest depth of any solution

is called the problem depth.

Sometimes we also speak of the depth of an architecture: SL FNNs with fixed topology imply a

problem-independent maximal problem depth bounded by the number of non-input layers. Certain

SL RNNs with fixed weights for all connections except those to output units (Jaeger, 2001; Maass

et al., 2002; Jaeger, 2004; Schrauwen et al., 2007) have a maximal problem depth of 1, because only

the final links in the corresponding CAPs are modifiable. In general, however, RNNs may learn to

solve problems of potentially unlimited depth.

Note that the definitions above are solely based on the depths of causal chains, and agnostic to the

temporal distance between events. For example, shallow FNNs perceiving large “time windows” of

input events may correctly classify long input sequences through appropriate output events, and thus

solve shallow problems involving long time lags between relevant events.

At which problem depth does Shallow Learning end, and Deep Learning begin? Discussions with

DL experts have not yet yielded a conclusive response to this question. Instead of committing myself

1An alternative would be to count only modifiable links when measuring depth. In many typical NN applications this would

not make a difference, but in some it would, e.g., Sec. 6.1.

6

�

to a precise answer, let me just define for the purposes of this overview: problems of depth > 10

require Very Deep Learning.

The difficulty of a problem may have little to do with its depth. Some NNs can quickly learn

to solve certain deep problems, e.g., through random weight guessing (Sec. 5.9) or other types of

direct search (Sec. 6.6) or indirect search (Sec. 6.7) in weight space, or through training an NN first

on shallow problems whose solutions may then generalize to deep problems, or through collapsing

sequences of (non)linear operations into a single (non)linear operation (but see an analysis of non-

trivial aspects of deep linear networks, Baldi and Hornik, 1994, Section B). In general, however,

finding an NN that precisely models a given training set is an NP-complete problem (Judd, 1990;

Blum and Rivest, 1992), also in the case of deep NNs (S´ıma, 1994; de Souto et al., 1999; Windisch,

2005); compare a survey of negative results (S´ıma, 2002, Section 1).

Above we have focused on SL. In the more general case of RL in unknown environments, pcc(p, q)

is also true if xp is an output event and xq any later input event—any action may affect the environment

and thus any later perception. (In the real world, the environment may even influence non-input events

computed on a physical hardware entangled with the entire universe, but this is ignored here.) It is

possible to model and replace such unmodifiable environmental PCCs through a part of the NN that

has already learned to predict (through some of its units) input events (including reward signals) from

former input events and actions (Sec. 6.1). Its weights are frozen, but can help to assign credit to

other, still modifiable weights used to compute actions (Sec. 6.1). This approach may lead to very

deep CAPs though.

Some DL research is about automatically rephrasing problems such that their depth is reduced

(Sec. 4). In particular, sometimes UL is used to make SL problems less deep, e.g., Sec. 5.10. Often

Dynamic Programming (Sec. 4.1) is used to facilitate certain traditional RL problems, e.g., Sec. 6.2.

Sec. 5 focuses on CAPs for SL, Sec. 6 on the more complex case of RL.

4 Recurring Themes of Deep Learning

4.1 Dynamic Programming for Supervised/Reinforcement Learning (SL/RL)

One recurring theme of DL is Dynamic Programming (DP) (Bellman, 1957), which can help to fa-

cilitate credit assignment under certain assumptions. For example, in SL NNs, backpropagation itself

can be viewed as a DP-derived method (Sec. 5.5). In traditional RL based on strong Markovian as-

sumptions, DP-derived methods can help to greatly reduce problem depth (Sec. 6.2). DP algorithms

are also essential for systems that combine concepts of NNs and graphical models, such as Hidden

Markov Models (HMMs) (Stratonovich, 1960; Baum and Petrie, 1966) and Expectation Maximization

(EM) (Dempster et al., 1977; Friedman et al., 2001), e.g., (Bottou, 1991; Bengio, 1991; Bourlard and

Morgan, 1994; Baldi and Chauvin, 1996; Jordan and Sejnowski, 2001; Bishop, 2006; Hastie et al.,

2009; Poon and Domingos, 2011; Dahl et al., 2012; Hinton et al., 2012a; Wu and Shao, 2014).

4.2 Unsupervised Learning (UL) Facilitating SL and RL

Another recurring theme is how UL can facilitate both SL (Sec. 5) and RL (Sec. 6). UL (Sec. 5.6.4)

is normally used to encode raw incoming data such as video or speech streams in a form that is more

convenient for subsequent goal-directed learning. In particular, codes that describe the original data in

a less redundant or more compact way can be fed into SL (Sec. 5.10, 5.15) or RL machines (Sec. 6.4),

whose search spaces may thus become smaller (and whose CAPs shallower) than those necessary for

dealing with the raw data. UL is closely connected to the topics of regularization and compression

(Sec. 4.4, 5.6.3).

7

�

4.3 Learning Hierarchical Representations Through Deep SL, UL, RL

Many methods of Good Old-Fashioned Artificial Intelligence (GOFAI) (Nilsson, 1980) as well as

more recent approaches to AI (Russell et al., 1995) and Machine Learning (Mitchell, 1997) learn

hierarchies of more and more abstract data representations. For example, certain methods of syn-

tactic pattern recognition (Fu, 1977) such as grammar induction discover hierarchies of formal rules

to model observations. The partially (un)supervised Automated Mathematician / EURISKO (Lenat,

1983; Lenat and Brown, 1984) continually learns concepts by combining previously learnt concepts.

Such hierarchical representation learning (Ring, 1994; Bengio et al., 2013; Deng and Yu, 2014) is also

a recurring theme of DL NNs for SL (Sec. 5), UL-aided SL (Sec. 5.7, 5.10, 5.15), and hierarchical RL

(Sec. 6.5). Often, abstract hierarchical representations are natural by-products of data compression

(Sec. 4.4), e.g., Sec. 5.10.

4.4 Occam’s Razor: Compression and Minimum Description Length (MDL)

Occam’s razor favors simple solutions over complex ones. Given some programming language, the

principle of Minimum Description Length (MDL) can be used to measure the complexity of a so-

lution candidate by the length of the shortest program that computes it (e.g., Solomonoff, 1964;

Kolmogorov, 1965b; Chaitin, 1966; Wallace and Boulton, 1968; Levin, 1973a; Solomonoff, 1978;

Rissanen, 1986; Blumer et al., 1987; Li and Vit´anyi, 1997; Gr¨unwald et al., 2005). Some methods

explicitly take into account program runtime (Allender, 1992; Watanabe, 1992; Schmidhuber, 1997,

2002); many consider only programs with constant runtime, written in non-universal programming

languages (e.g., Rissanen, 1986; Hinton and van Camp, 1993). In the NN case, the MDL princi-

ple suggests that low NN weight complexity corresponds to high NN probability in the Bayesian

view (e.g., MacKay, 1992; Buntine and Weigend, 1991; Neal, 1995; De Freitas, 2003), and to high

generalization performance (e.g., Baum and Haussler, 1989), without overfitting the training data.

Many methods have been proposed for regularizing NNs, that is, searching for solution-computing

but simple, low-complexity SL NNs (Sec. 5.6.3) and RL NNs (Sec. 6.7). This is closely related to

certain UL methods (Sec. 4.2, 5.6.4).

4.5 Fast Graphics Processing Units (GPUs) for DL in NNs

While the previous millennium saw several attempts at creating fast NN-specific hardware (e.g., Jackel

et al., 1990; Faggin, 1992; Ramacher et al., 1993; Widrow et al., 1994; Heemskerk, 1995; Korkin et al.,

1997; Urlbe, 1999), and at exploiting standard hardware (e.g., Anguita et al., 1994; Muller et al., 1995;

Anguita and Gomes, 1996), the new millennium brought a DL breakthrough in form of cheap, multi-

processor graphics cards or GPUs. GPUs are widely used for video games, a huge and competitive

market that has driven down hardware prices. GPUs excel at the fast matrix and vector multiplications

required not only for convincing virtual realities but also for NN training, where they can speed up

learning by a factor of 50 and more. Some of the GPU-based FNN implementations (Sec. 5.16–5.19)

have greatly contributed to recent successes in contests for pattern recognition (Sec. 5.19–5.22), image

segmentation (Sec. 5.21), and object detection (Sec. 5.21–5.22).

5 Supervised NNs, Some Helped by Unsupervised NNs

The main focus of current practical applications is on Supervised Learning (SL), which has domi-

nated recent pattern recognition contests (Sec. 5.17–5.23). Several methods, however, use additional

Unsupervised Learning (UL) to facilitate SL (Sec. 5.7, 5.10, 5.15). It does make sense to treat SL and

8

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc