sterling.com

Welcome to Sterling Software

Efficient C++: Performance Programming Techniques

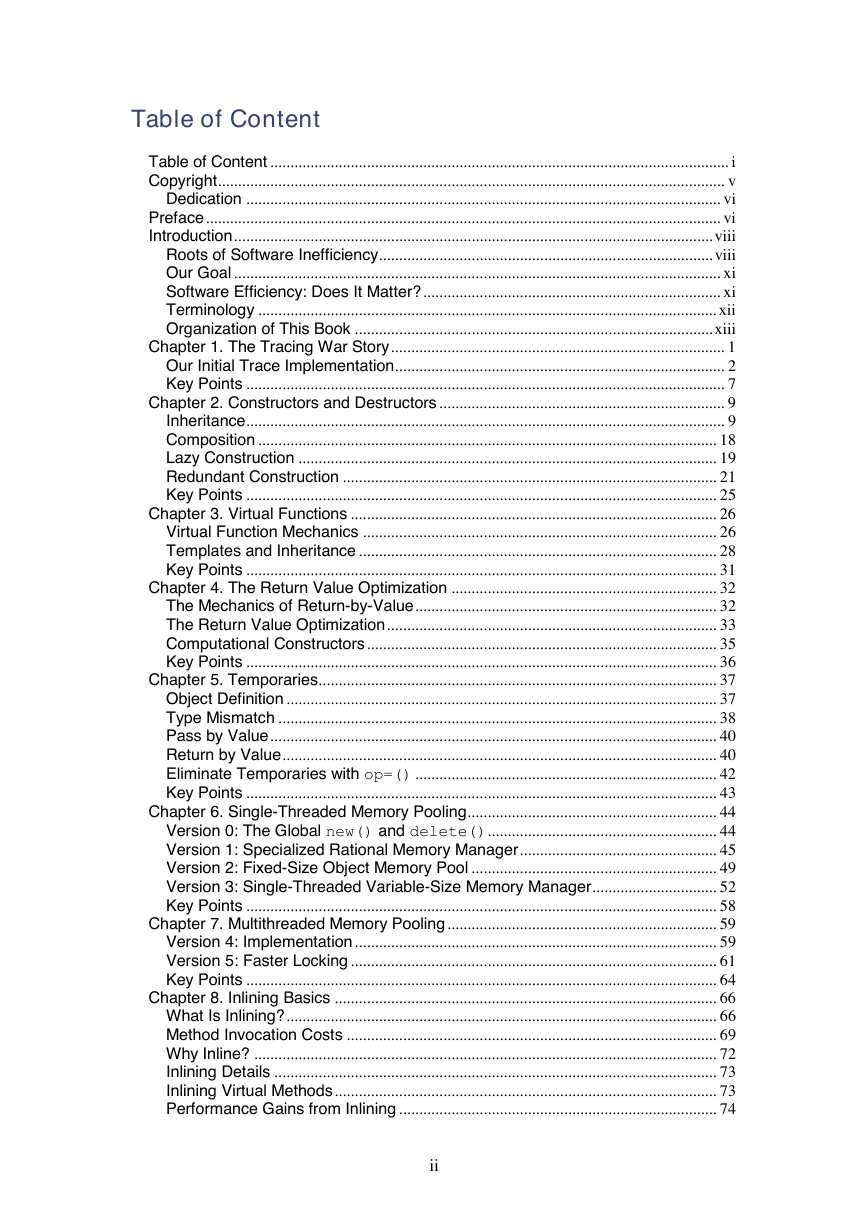

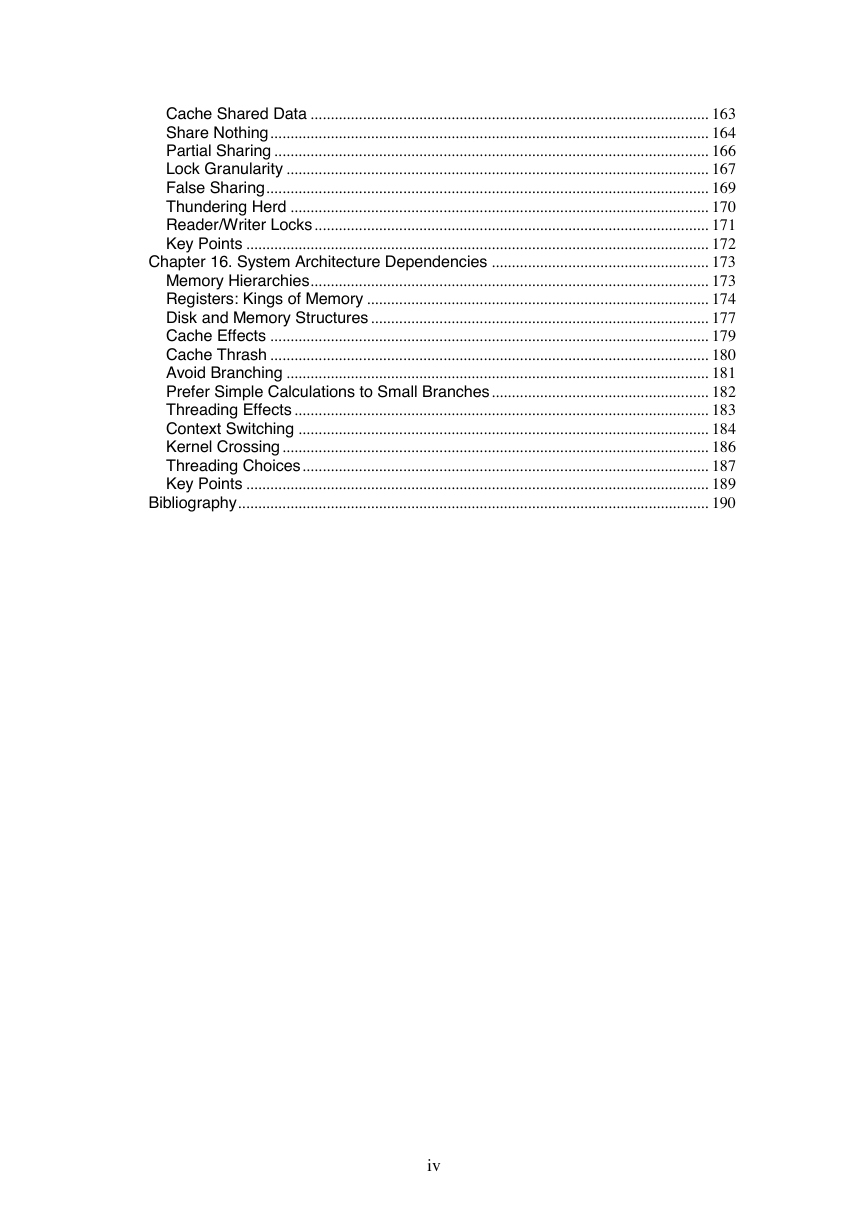

Table of Contents

Copyright

Dedication

Preface

Introduction

Roots of Software Inefficiency

Figure 1. High-level classification of software performance.

Figure 2. Refinement of the design performance view.

Figure 3. Refinement of the coding performance view.

Our Goal

Software Efficiency: Does It Matter?

Terminology

Organization of This Book

Chapter 1. The Tracing War Story

Our Initial Trace Implementation

What Went Wrong

Figure 1.1. The performance cost of the Trace object.

The Recovery Plan

Figure 1.2. Impact of eliminating one string object.

Figure 1.3. Impact of conditional creation of the string member.

Key Points

Chapter 2. Constructors and Destructors

Inheritance

Figure 2.1. The cost of inheritance in this example.

Composition

Lazy Construction

Redundant Construction

Figure 2.2. Overhead of a silent initialization is negligible in this particular scenario.

Figure 2.3. More significant impact of silent initialization.

Key Points

Chapter 3. Virtual Functions

Virtual Function Mechanics

Templates and Inheritance

Hard Coding

Inheritance

Templates

Key Points

Chapter 4. The Return Value Optimization

The Mechanics of Return-by-Value

The Return Value Optimization

Figure 4.1. The speed-up of RVO.

Computational Constructors

Key Points

Chapter 5. Temporaries

Object Definition

Type Mismatch

Pass by Value

Return by Value

Eliminate Temporaries with op=()

Key Points

Chapter 6. Single-Threaded Memory Pooling

Version 0: The Global new() and delete()

Version 1: Specialized Rational Memory Manager

Figure 6.1. A free list of Rational objects.

Figure 6.2. Global new() and delete() compared to a Rational memory pool.

Version 2: Fixed-Size Object Memory Pool

Figure 6.3. Adding a template memory pool for generic objects.

Version 3: Single-Threaded Variable-Size Memory Manager

Figure 6.4. Variable-size memory free list.

Figure 6.5. A variable-size memory pool is naturally slower than fixed-size.

Key Points

Chapter 7. Multithreaded Memory Pooling

Version 4: Implementation

Figure 7.1. Comparing multithreaded to single-threaded memory pooling.

Version 5: Faster Locking

Figure 7.2. Multithreaded memory pool using faster locks.

Figure 7.3. Comparing the various flavors of memory pooling.

Key Points

Chapter 8. Inlining Basics

What Is Inlining?

Method Invocation Costs

Figure 8.1. Call frame register mapping.

Why Inline?

Inlining Details

Inlining Virtual Methods

Performance Gains from Inlining

Key Points

Chapter 9. Inlining—Performance Considerations

Cross-Call Optimization

Why Not Inline?

Development and Compile-Time Inlining Considerations

Profile-Based Inlining

Table 9.1. The Inlining Decision Matrix

Inlining Rules

Singletons

Trivials

Key Points

Chapter 10. Inlining Tricks

Conditional Inlining

Selective Inlining

Recursive Inlining

Inlining with Static Local Variables

Architectural Caveat: Multiple Register Sets

Key Points

Chapter 11. Standard Template Library

Asymptotic Complexity

Insertion

Figure 11.1. Speed of insertion.

Figure 11.2. Object insertion speed.

Figure 11.3. Comparing object to pointer insertion.

Figure 11.4. Comparing list to vector insertion.

Figure 11.5. Vector insertion with and without capacity reservation.

Figure 11.6. Inserting at the front.

Deletion

Figure 11.7. Comparing list to vector deletion.

Figure 11.8. Deleting elements at the front.

Traversal

Figure 11.9. Container traversal speed.

Find

Figure 11.10. Container search speed.

Figure 11.11. Comparing generic find() to member find().

Function Objects

Figure 11.12. Comparing function objects to function pointers.

Better than STL?

Figure 11.13. Comparing STL speed to home-grown code.

Key Points

Chapter 12. Reference Counting

Figure 12.1. Duplicating resources.

Implementation Details

Figure 12.2. A simple design for a reference-counted Widget class.

Figure 12.3. Adding inheritance and smart pointer to the reference-counting design.

Figure 12.4. BigInt assignment speed.

Figure 12.5. BigInt creation speed.

Preexisting Classes

Figure 12.6. Reference-counting a pre-existing BigInt.

Figure 12.7. BigInt creation speed.

Concurrent Reference Counting

Figure 12.8. Multithreaded BigInt assignment speed.

Figure 12.9. Multithreaded BigInt creation speed.

Key Points

Chapter 13. Coding Optimizations

Caching

Precompute

Reduce Flexibility

80-20 Rule: Speed Up the Common Path

Lazy Evaluation

Useless Computations

System Architecture

Memory Management

Library and System Calls

Compiler Optimization

Key Points

Chapter 14. Design Optimizations

Design Flexibility

Caching

Web Server Timestamps

Data Expansion

The Common Code Trap

Efficient Data Structures

Lazy Evaluation

getpeername()

Useless Computations

Obsolete Code

Key Points

Chapter 15. Scalability

Figure 15.1. Single processor architecture.

Figure 15.2. Threads are the scheduling entities.

The SMP Architecture

Figure 15.3. The SMP Architecture.

Amdahl's Law

Figure 15.4. Potential speedup is limited.

Figure 15.5. A specific design of one Web server.

Multithreaded and Synchronization Terminology

Break Up a Task into Multiple Subtasks

Cache Shared Data

Share Nothing

Partial Sharing

Figure 15.6. A single shared resource.

Figure 15.7. Breaking up a single shared resource.

Lock Granularity

False Sharing

Thundering Herd

Reader/Writer Locks

Key Points

Chapter 16. System Architecture Dependencies

Memory Hierarchies

Table 16.1. Memory Access Speed

Registers: Kings of Memory

Disk and Memory Structures

Cache Effects

Cache Thrash

Avoid Branching

Prefer Simple Calculations to Small Branches

Threading Effects

Context Switching

Kernel Crossing

Threading Choices

Key Points

Bibliography
