User’s Guide for SNOPT Version 7:

Software for

Large-Scale Nonlinear Programming∗

Philip E. GILL

Department of Mathematics

University of California, San Diego, La Jolla, CA 92093-0112, USA

Walter MURRAY and Michael A. SAUNDERS

Systems Optimization Laboratory

Department of Management Science and Engineering

Stanford University, Stanford, CA 94305-4026, USA

June 16, 2008

Abstract

SNOPT is a general-purpose system for constrained optimization. It minimizes a

linear or nonlinear function subject to bounds on the variables and sparse linear or

nonlinear constraints. It is suitable for large-scale linear and quadratic programming

and for linearly constrained optimization, as well as for general nonlinear programs.

SNOPT finds solutions that are locally optimal, and ideally any nonlinear functions

should be smooth and users should provide gradients. It is often more widely useful.

For example, local optima are often global solutions, and discontinuities in the function

gradients can often be tolerated if they are not too close to an optimum. Unknown

gradients are estimated by finite differences.

SNOPT uses a sequential quadratic programming (SQP) algorithm. Search di-

rections are obtained from QP subproblems that minimize a quadratic model of the

Lagrangian function subject to linearized constraints. An augmented Lagrangian merit

function is reduced along each search direction to ensure convergence from any starting

point.

On large problems, SNOPT is most efficient if only some of the variables enter

nonlinearly, or there are relatively few degrees of freedom at a solution (i.e., many

constraints are active). SNOPT requires relatively few evaluations of the problem

functions. Hence it is especially effective if the objective or constraint functions (and

their gradients) are expensive to evaluate.

The source code is re-entrant and suitable for any machine with a Fortran compiler

(or the f2c translator and a C compiler). SNOPT may be called from a driver program

in Fortran, C, or Matlab. It can also be used as a stand-alone package, reading data

in the MPS format used by commercial mathematical programming systems.

Keywords: optimization, large-scale nonlinear programming, nonlinear constraints,

SQP methods, limited-storage quasi-Newton updates, Fortran software, C software.

pgill@ucsd.edu

walter@stanford.edu

saunders@stanford.edu

http://www.cam.ucsd.edu/~peg

http://www.stanford.edu/~walter

http://www.stanford.edu/~saunders

∗Partially supported by National Science Foundation grants DMI-9204208, DMI-9500668, CCR-9988205,

and CCR-0306662, and Office of Naval Research grants N00014-96-1-0274 and N00014-02-1-0076.

�

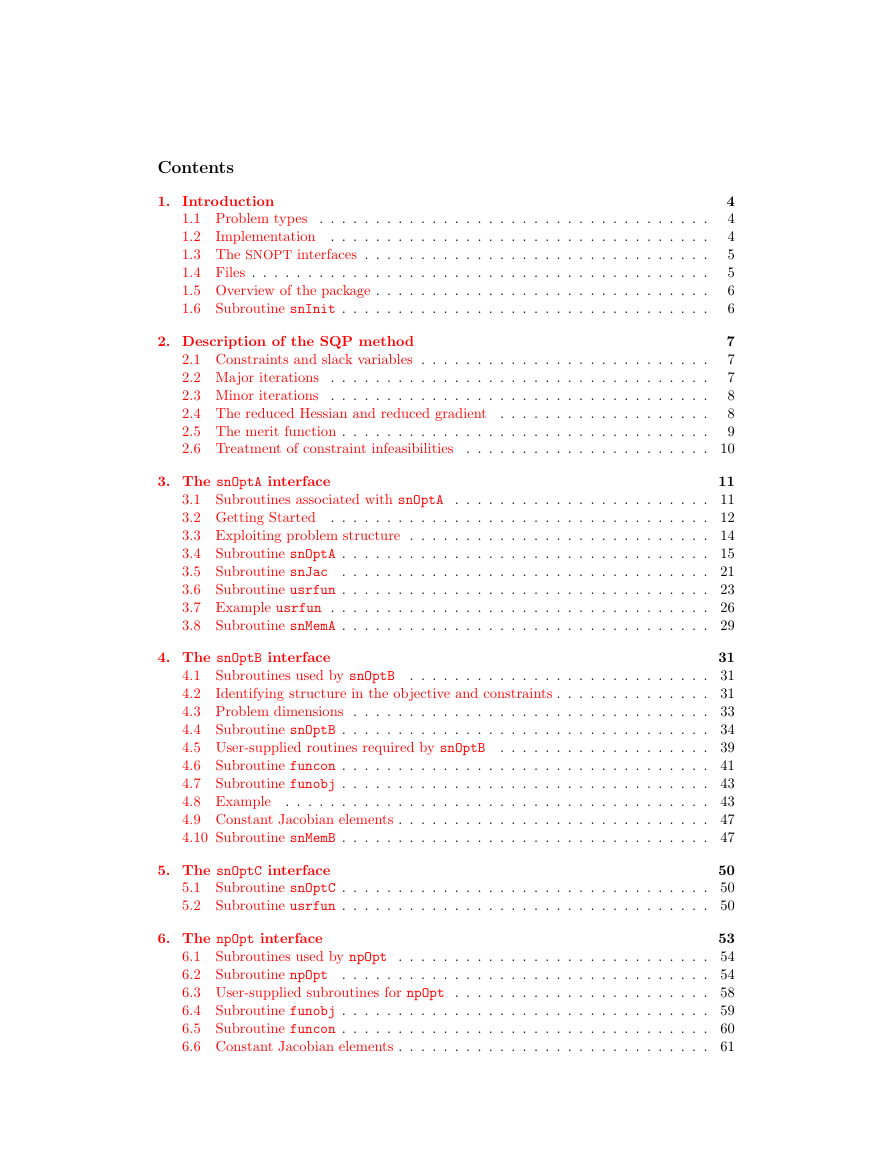

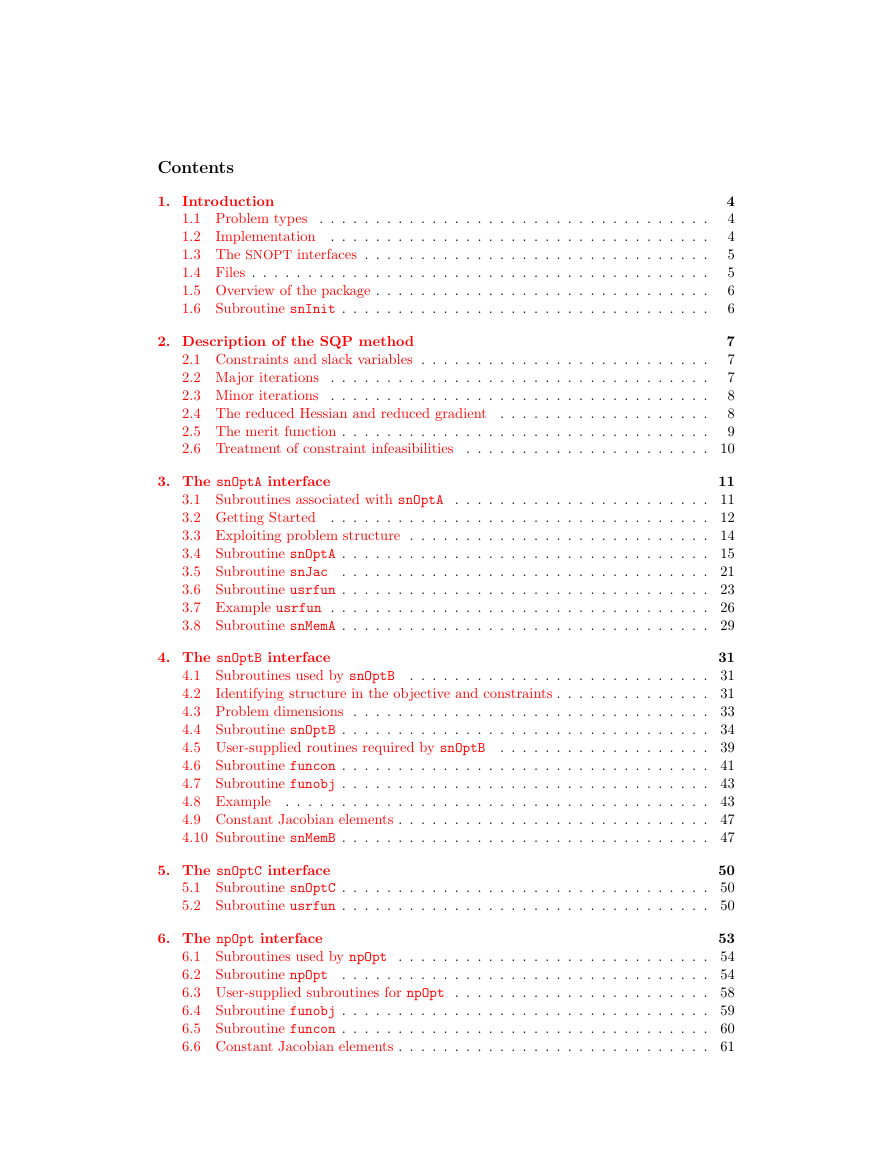

Contents

1. Introduction

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.1 Problem types

1.2

Implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.3 The SNOPT interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.4 Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.5 Overview of the package . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.6 Subroutine snInit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4

4

4

5

5

6

6

2. Description of the SQP method

2.1 Constraints and slack variables . . . . . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.2 Major iterations

2.3 Minor iterations

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.4 The reduced Hessian and reduced gradient

. . . . . . . . . . . . . . . . . . .

2.5 The merit function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.6 Treatment of constraint infeasibilities

7

7

7

8

8

9

. . . . . . . . . . . . . . . . . . . . . . 10

3. The snOptA interface

11

3.1 Subroutines associated with snOptA . . . . . . . . . . . . . . . . . . . . . . . 11

3.2 Getting Started . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.3 Exploiting problem structure . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

3.4 Subroutine snOptA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

3.5 Subroutine snJac . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.6 Subroutine usrfun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.7 Example usrfun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.8 Subroutine snMemA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4. The snOptB interface

31

4.1 Subroutines used by snOptB . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

4.2

Identifying structure in the objective and constraints . . . . . . . . . . . . . . 31

4.3 Problem dimensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.4 Subroutine snOptB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.5 User-supplied routines required by snOptB . . . . . . . . . . . . . . . . . . . 39

4.6 Subroutine funcon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

4.7 Subroutine funobj . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

4.8 Example

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

4.9 Constant Jacobian elements . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

4.10 Subroutine snMemB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

5. The snOptC interface

50

5.1 Subroutine snOptC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

5.2 Subroutine usrfun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

6. The npOpt interface

53

6.1 Subroutines used by npOpt . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

6.2 Subroutine npOpt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

6.3 User-supplied subroutines for npOpt . . . . . . . . . . . . . . . . . . . . . . . 58

6.4 Subroutine funobj . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

6.5 Subroutine funcon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

6.6 Constant Jacobian elements . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

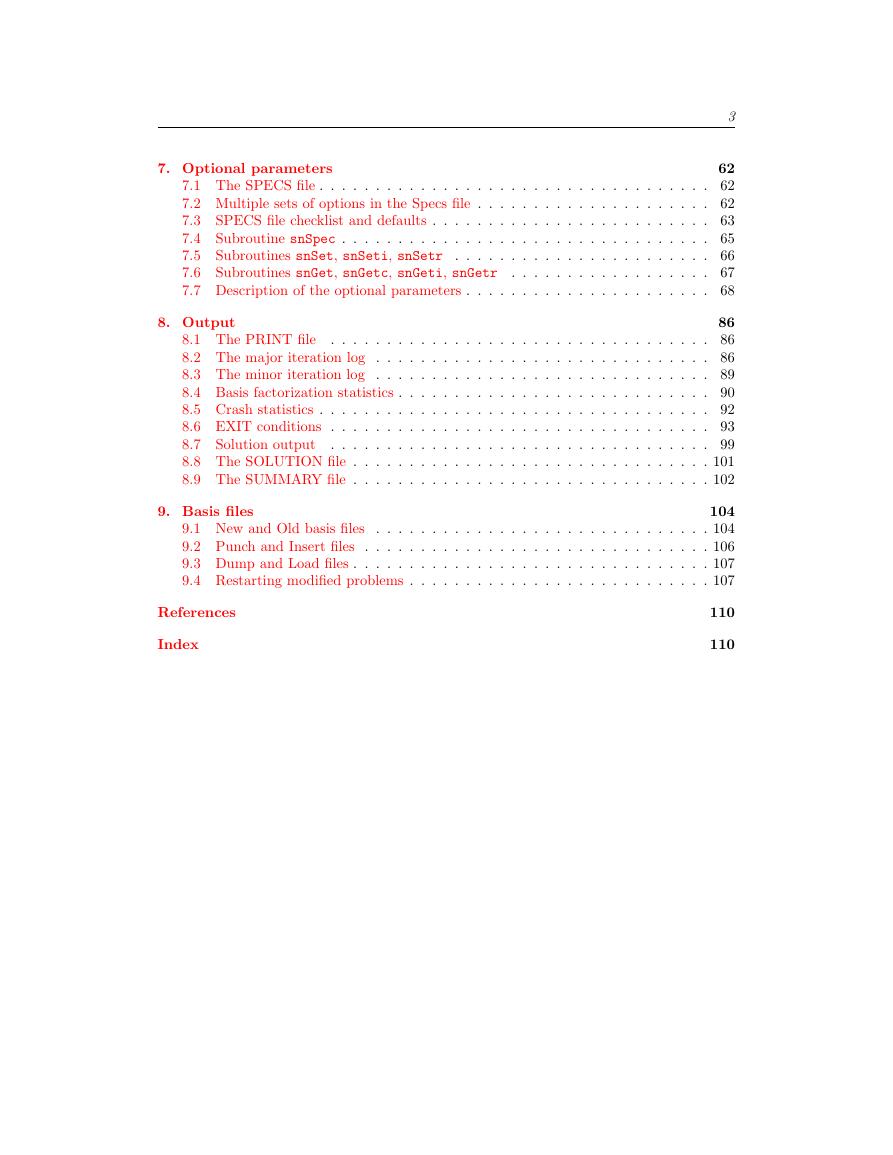

�

3

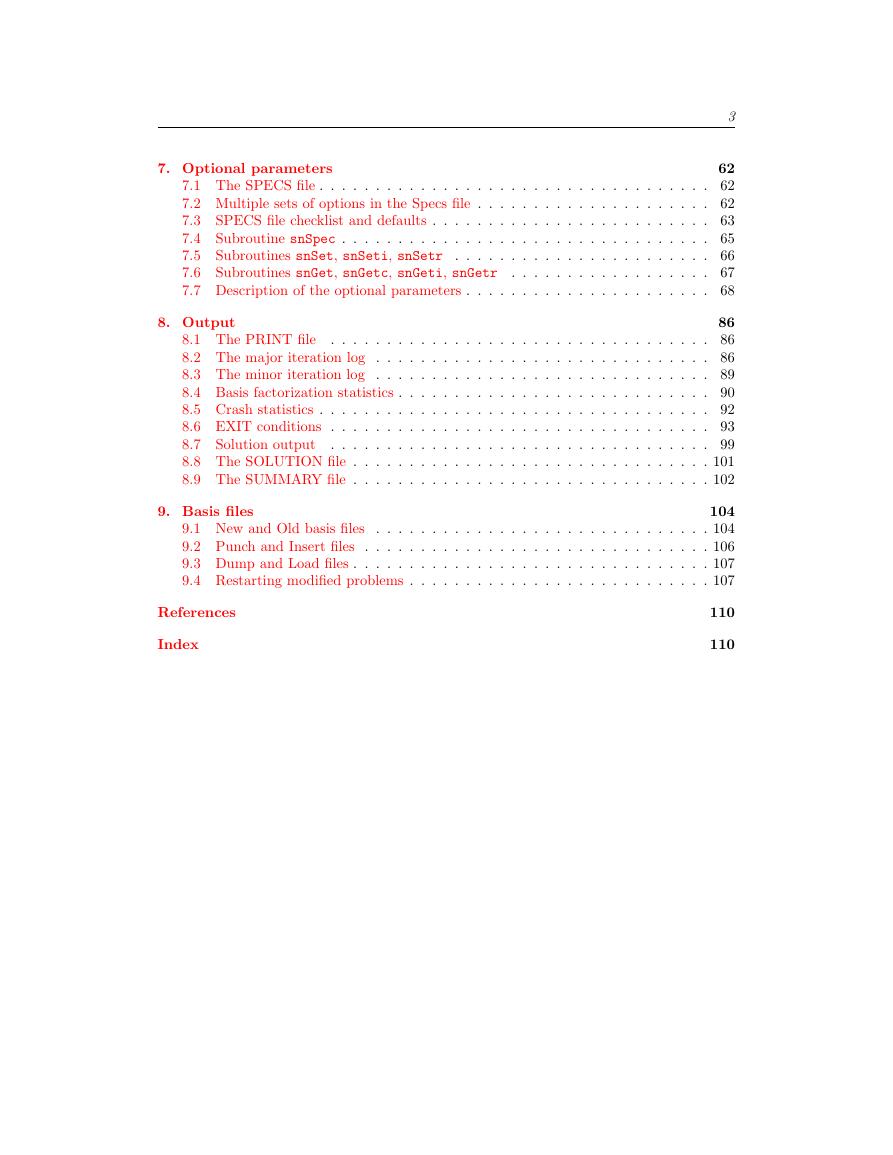

7. Optional parameters

62

7.1 The SPECS file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

7.2 Multiple sets of options in the Specs file . . . . . . . . . . . . . . . . . . . . . 62

7.3 SPECS file checklist and defaults . . . . . . . . . . . . . . . . . . . . . . . . . 63

7.4 Subroutine snSpec . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

7.5 Subroutines snSet, snSeti, snSetr . . . . . . . . . . . . . . . . . . . . . . . 66

7.6 Subroutines snGet, snGetc, snGeti, snGetr . . . . . . . . . . . . . . . . . . 67

7.7 Description of the optional parameters . . . . . . . . . . . . . . . . . . . . . . 68

8. Output

86

8.1 The PRINT file

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

8.2 The major iteration log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

8.3 The minor iteration log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

8.4 Basis factorization statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

8.5 Crash statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

8.6 EXIT conditions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

8.7 Solution output

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

8.8 The SOLUTION file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

8.9 The SUMMARY file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

9. Basis files

104

9.1 New and Old basis files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

9.2 Punch and Insert files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

9.3 Dump and Load files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

9.4 Restarting modified problems . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

References

Index

110

110

�

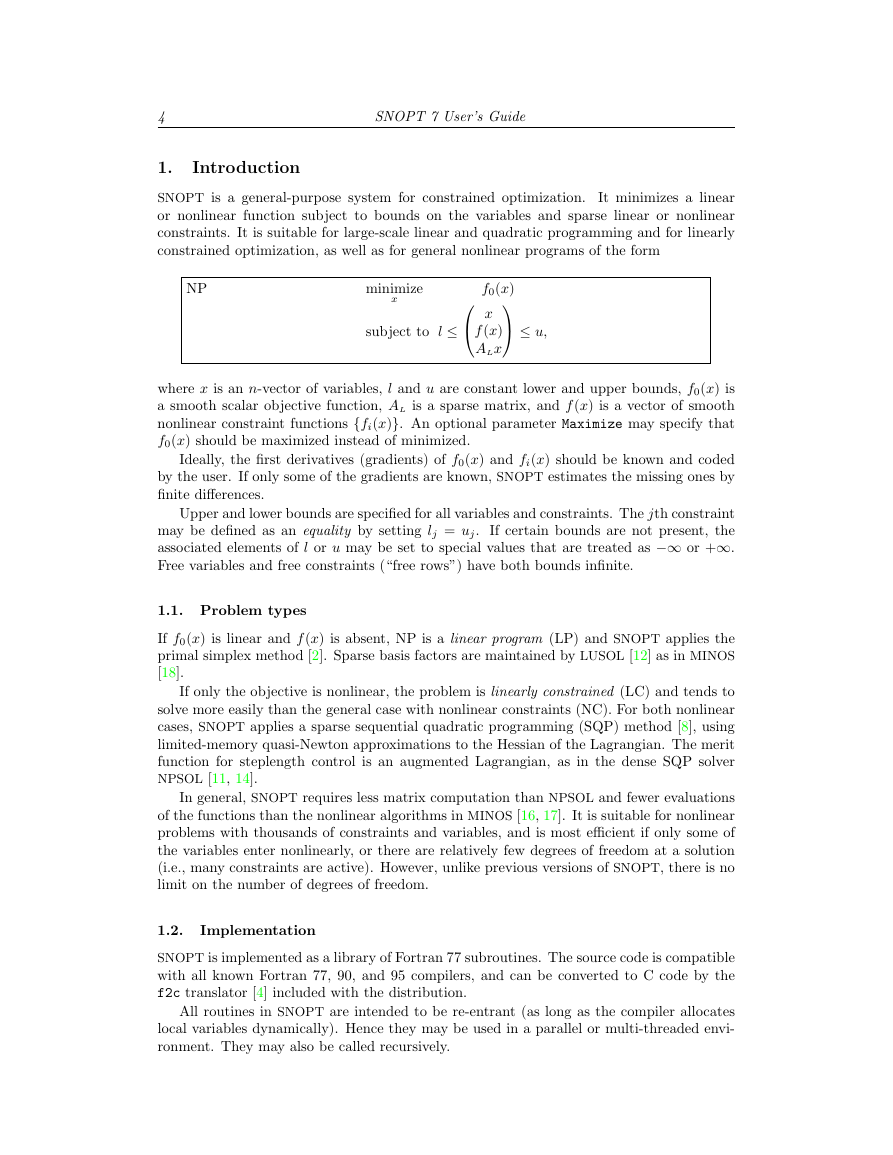

4

SNOPT 7 User’s Guide

1.

Introduction

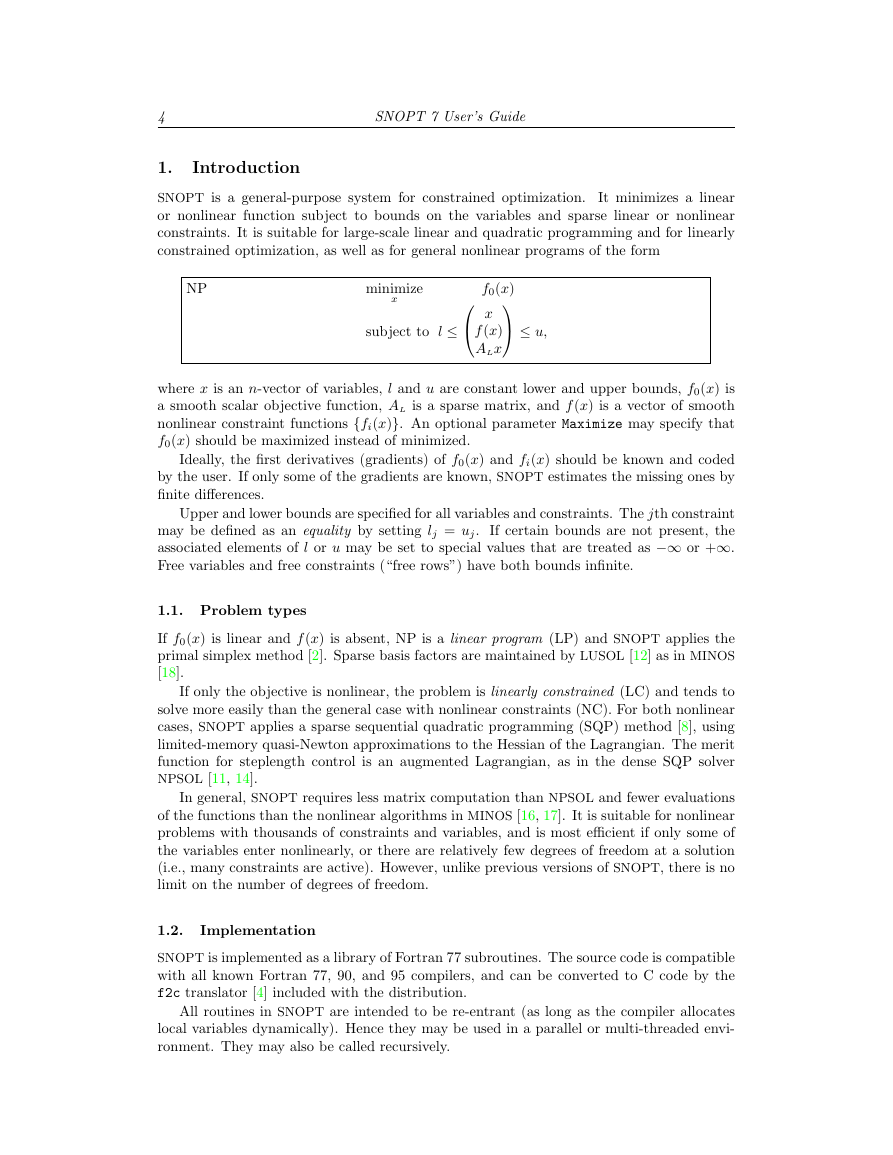

SNOPT is a general-purpose system for constrained optimization.

It minimizes a linear

or nonlinear function subject to bounds on the variables and sparse linear or nonlinear

constraints. It is suitable for large-scale linear and quadratic programming and for linearly

constrained optimization, as well as for general nonlinear programs of the form

NP

minimize

x

subject to l ≤

f0(x)

≤ u,

x

f(x)

ALx

where x is an n-vector of variables, l and u are constant lower and upper bounds, f0(x) is

a smooth scalar objective function, AL is a sparse matrix, and f(x) is a vector of smooth

nonlinear constraint functions {fi(x)}. An optional parameter Maximize may specify that

f0(x) should be maximized instead of minimized.

Ideally, the first derivatives (gradients) of f0(x) and fi(x) should be known and coded

by the user. If only some of the gradients are known, SNOPT estimates the missing ones by

finite differences.

Upper and lower bounds are specified for all variables and constraints. The jth constraint

may be defined as an equality by setting lj = uj. If certain bounds are not present, the

associated elements of l or u may be set to special values that are treated as −∞ or +∞.

Free variables and free constraints (“free rows”) have both bounds infinite.

1.1. Problem types

If f0(x) is linear and f(x) is absent, NP is a linear program (LP) and SNOPT applies the

primal simplex method [2]. Sparse basis factors are maintained by LUSOL [12] as in MINOS

[18].

If only the objective is nonlinear, the problem is linearly constrained (LC) and tends to

solve more easily than the general case with nonlinear constraints (NC). For both nonlinear

cases, SNOPT applies a sparse sequential quadratic programming (SQP) method [8], using

limited-memory quasi-Newton approximations to the Hessian of the Lagrangian. The merit

function for steplength control is an augmented Lagrangian, as in the dense SQP solver

NPSOL [11, 14].

In general, SNOPT requires less matrix computation than NPSOL and fewer evaluations

of the functions than the nonlinear algorithms in MINOS [16, 17]. It is suitable for nonlinear

problems with thousands of constraints and variables, and is most efficient if only some of

the variables enter nonlinearly, or there are relatively few degrees of freedom at a solution

(i.e., many constraints are active). However, unlike previous versions of SNOPT, there is no

limit on the number of degrees of freedom.

1.2.

Implementation

SNOPT is implemented as a library of Fortran 77 subroutines. The source code is compatible

with all known Fortran 77, 90, and 95 compilers, and can be converted to C code by the

f2c translator [4] included with the distribution.

All routines in SNOPT are intended to be re-entrant (as long as the compiler allocates

local variables dynamically). Hence they may be used in a parallel or multi-threaded envi-

ronment. They may also be called recursively.

�

1.

Introduction

5

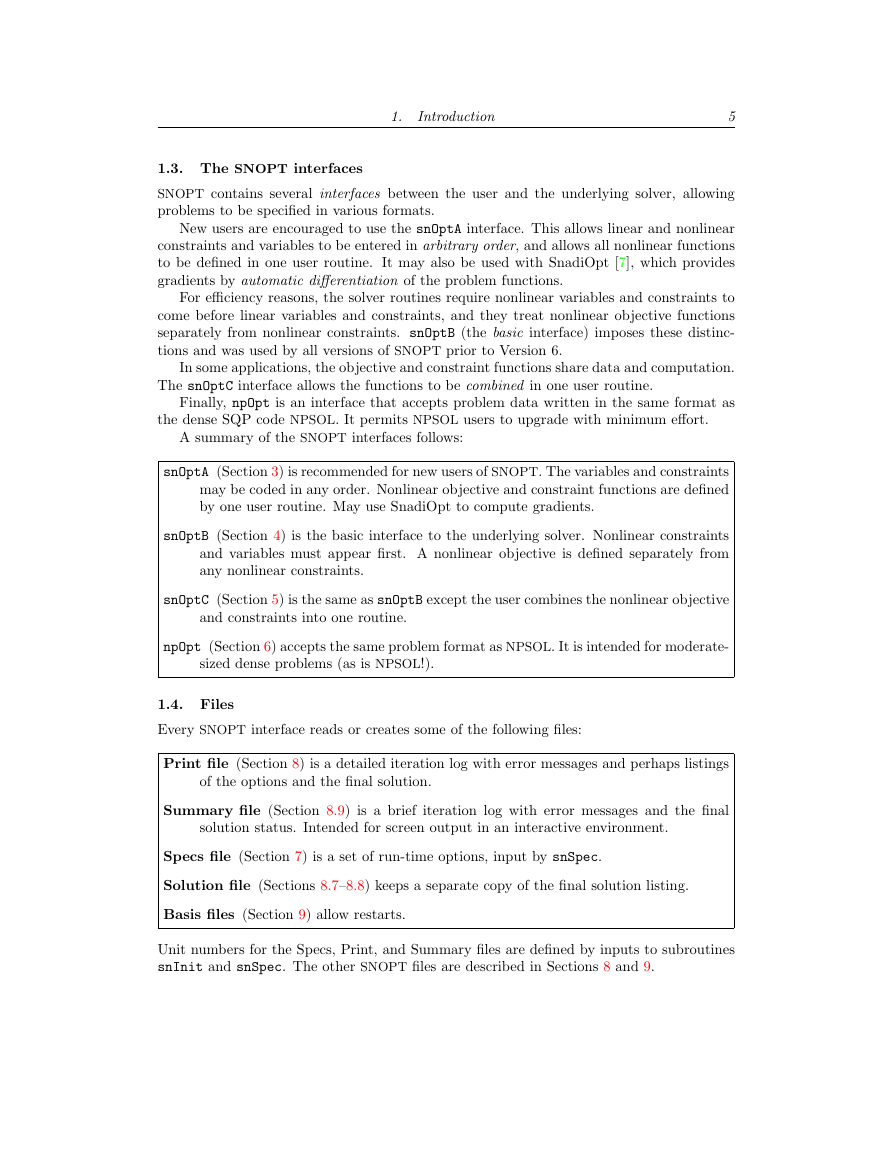

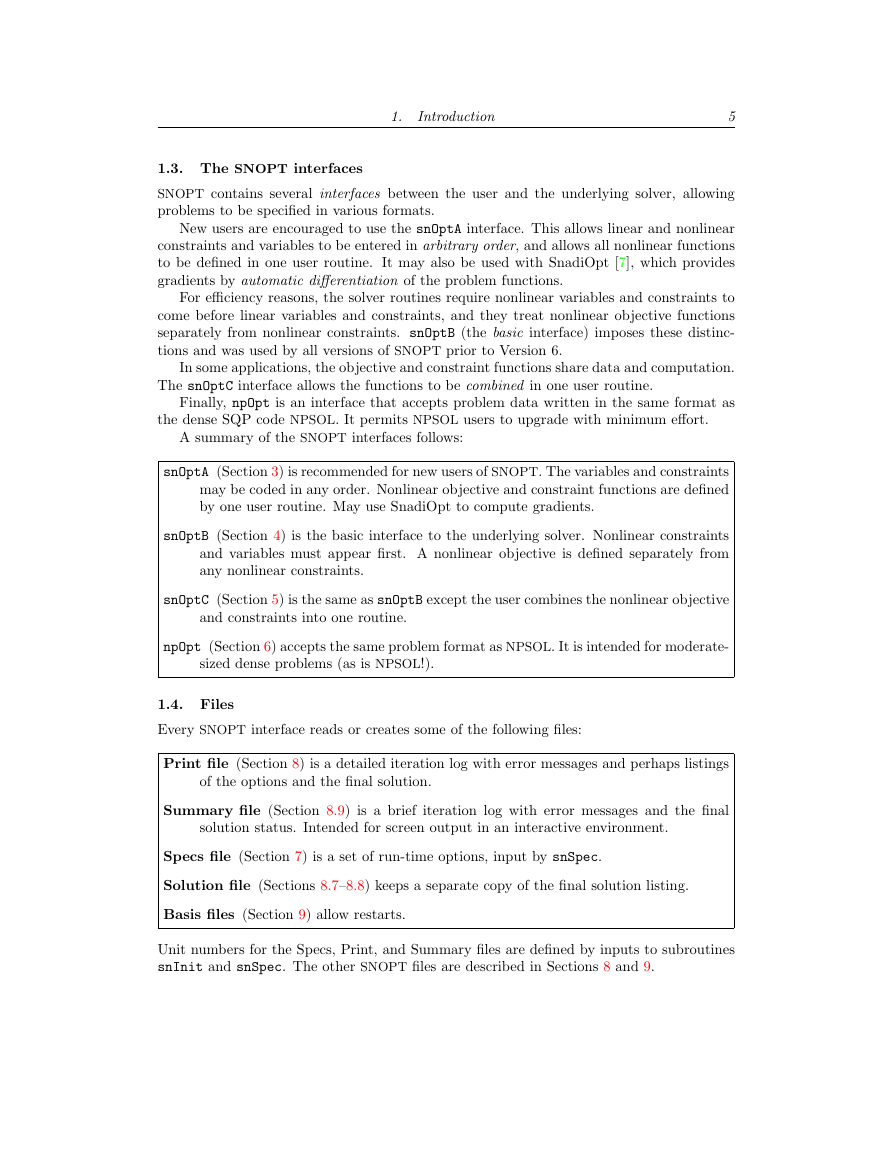

1.3. The SNOPT interfaces

SNOPT contains several interfaces between the user and the underlying solver, allowing

problems to be specified in various formats.

New users are encouraged to use the snOptA interface. This allows linear and nonlinear

constraints and variables to be entered in arbitrary order, and allows all nonlinear functions

to be defined in one user routine. It may also be used with SnadiOpt [7], which provides

gradients by automatic differentiation of the problem functions.

For efficiency reasons, the solver routines require nonlinear variables and constraints to

come before linear variables and constraints, and they treat nonlinear objective functions

separately from nonlinear constraints. snOptB (the basic interface) imposes these distinc-

tions and was used by all versions of SNOPT prior to Version 6.

In some applications, the objective and constraint functions share data and computation.

The snOptC interface allows the functions to be combined in one user routine.

Finally, npOpt is an interface that accepts problem data written in the same format as

the dense SQP code NPSOL. It permits NPSOL users to upgrade with minimum effort.

A summary of the SNOPT interfaces follows:

snOptA (Section 3) is recommended for new users of SNOPT. The variables and constraints

may be coded in any order. Nonlinear objective and constraint functions are defined

by one user routine. May use SnadiOpt to compute gradients.

snOptB (Section 4) is the basic interface to the underlying solver. Nonlinear constraints

and variables must appear first. A nonlinear objective is defined separately from

any nonlinear constraints.

snOptC (Section 5) is the same as snOptB except the user combines the nonlinear objective

and constraints into one routine.

npOpt (Section 6) accepts the same problem format as NPSOL. It is intended for moderate-

sized dense problems (as is NPSOL!).

1.4. Files

Every SNOPT interface reads or creates some of the following files:

Print file (Section 8) is a detailed iteration log with error messages and perhaps listings

of the options and the final solution.

Summary file (Section 8.9) is a brief iteration log with error messages and the final

solution status. Intended for screen output in an interactive environment.

Specs file (Section 7) is a set of run-time options, input by snSpec.

Solution file (Sections 8.7–8.8) keeps a separate copy of the final solution listing.

Basis files (Section 9) allow restarts.

Unit numbers for the Specs, Print, and Summary files are defined by inputs to subroutines

snInit and snSpec. The other SNOPT files are described in Sections 8 and 9.

�

6

SNOPT 7 User’s Guide

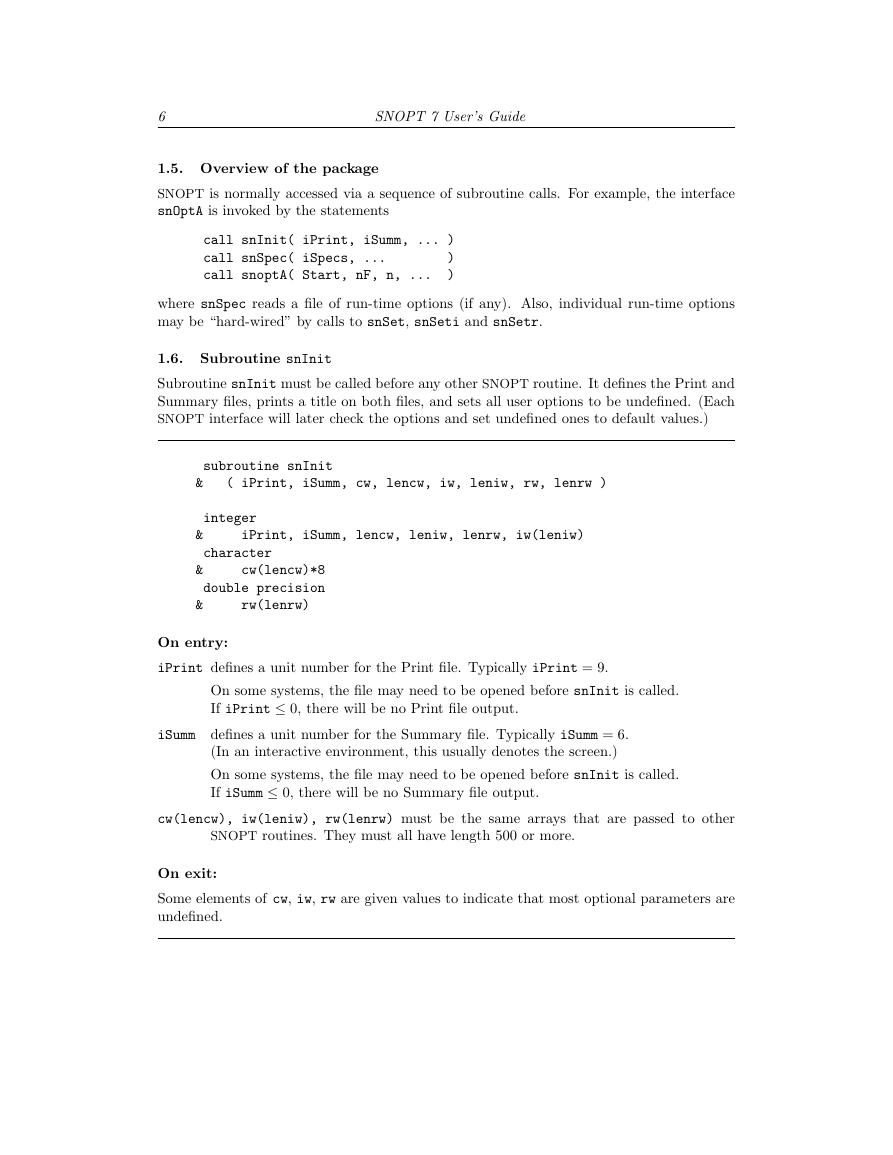

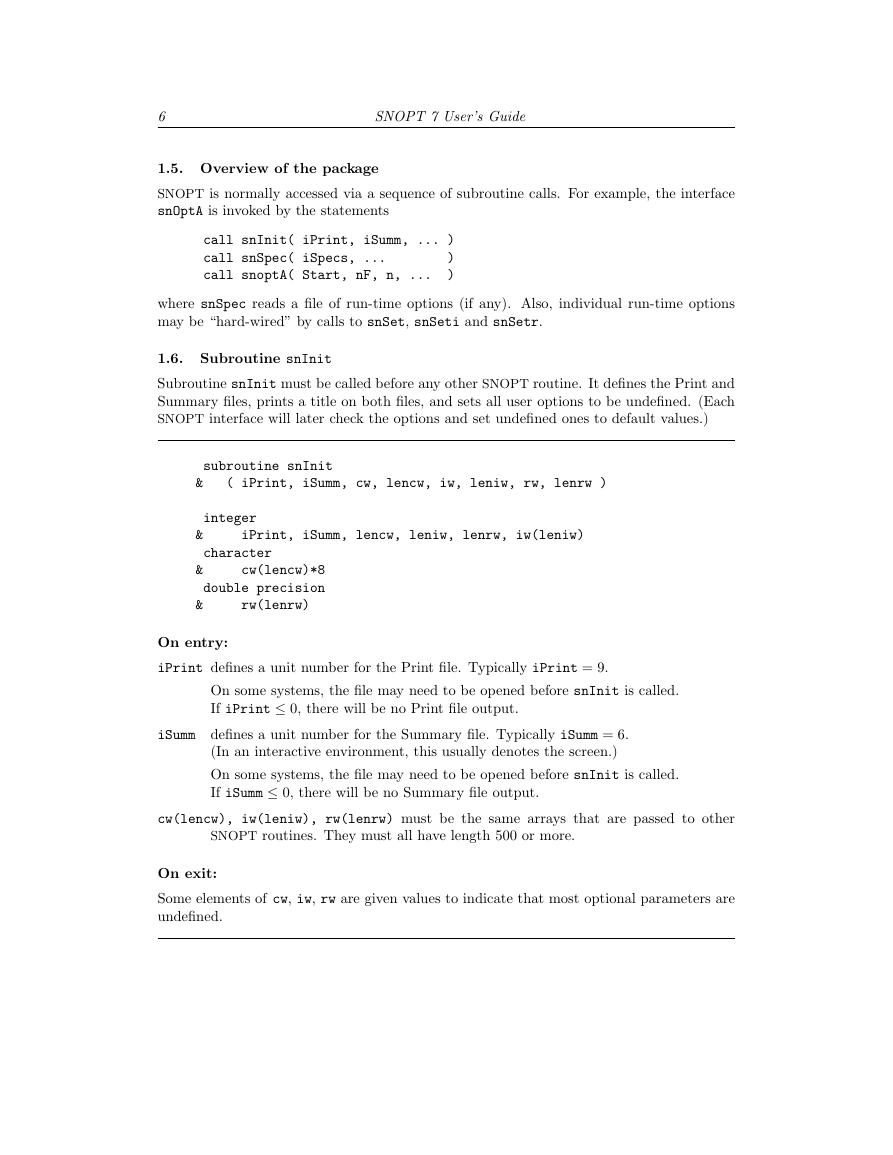

1.5. Overview of the package

SNOPT is normally accessed via a sequence of subroutine calls. For example, the interface

snOptA is invoked by the statements

call snInit( iPrint, iSumm, ... )

call snSpec( iSpecs, ...

)

)

call snoptA( Start, nF, n, ...

where snSpec reads a file of run-time options (if any). Also, individual run-time options

may be “hard-wired” by calls to snSet, snSeti and snSetr.

1.6. Subroutine snInit

Subroutine snInit must be called before any other SNOPT routine. It defines the Print and

Summary files, prints a title on both files, and sets all user options to be undefined. (Each

SNOPT interface will later check the options and set undefined ones to default values.)

subroutine snInit

&

( iPrint, iSumm, cw, lencw, iw, leniw, rw, lenrw )

integer

&

iPrint, iSumm, lencw, leniw, lenrw, iw(leniw)

character

&

cw(lencw)*8

double precision

&

rw(lenrw)

On entry:

iPrint defines a unit number for the Print file. Typically iPrint = 9.

On some systems, the file may need to be opened before snInit is called.

If iPrint ≤ 0, there will be no Print file output.

iSumm defines a unit number for the Summary file. Typically iSumm = 6.

(In an interactive environment, this usually denotes the screen.)

On some systems, the file may need to be opened before snInit is called.

If iSumm ≤ 0, there will be no Summary file output.

cw(lencw), iw(leniw), rw(lenrw) must be the same arrays that are passed to other

SNOPT routines. They must all have length 500 or more.

On exit:

Some elements of cw, iw, rw are given values to indicate that most optional parameters are

undefined.

�

2. Description of the SQP method

7

2. Description of the SQP method

Here we summarize the main features of the SQP algorithm used in SNOPT and introduce

some terminology used in the description of the library routines and their arguments. The

SQP algorithm is fully described by Gill, Murray and Saunders [9].

2.1. Constraints and slack variables

Problem NP contains n variables in x. Let m be the number of components of f(x) and ALx

combined. The upper and lower bounds on those terms define the general constraints of the

problem. SNOPT converts the general constraints to equalities by introducing a set of slack

variables s = (s1, s2, . . . , sm)T . For example, the linear constraint 5 ≤ 2x1 + 3x2 ≤ +∞ is

replaced by 2x1 + 3x2 − s1 = 0 together with the bounded slack 5 ≤ s1 ≤ +∞. Problem

NP can be written in the equivalent form

f0(x)

minimize

f(x)

ALx

x,s

subject to

x

s

− s = 0,

l ≤

≤ u.

The general constraints become the equalities f(x) − sN = 0 and ALx − sL = 0, where sL

and sN are the linear and nonlinear slacks.

2.2. Major iterations

The basic structure of the SQP algorithm involves major and minor iterations. The major

iterations generate a sequence of iterates {xk} that satisfy the linear constraints and converge

to a point that satisfies the nonlinear constraints and the first-order conditions for optimality.

At each xk a QP subproblem is used to generate a search direction toward what will be the

next iterate xk+1. The constraints of the subproblem are formed from the linear constraints

ALx − sL = 0 and the linearized constraint

f(xk) + f0(xk)(x − xk) − sN = 0,

where f0(xk) denotes the Jacobian matrix, whose elements are the first derivatives of f(x)

evaluated at xk. The QP constraints therefore comprise the m linear constraints

where x and s are bounded above and below by u and l as before. If the m × n matrix A

and m-vector b are defined as

f0(xk)x − sN

= −f(xk) + f0(xk)xk,

− sL = 0,

ALx

f0(xk)

AL

A =

and b =

−f(xk) + f0(xk)xk

,

0

then the QP subproblem can be written as

QPk

x,s

minimize

q(x, xk) = gT

subject to Ax − s = b,

x

k(x − xk) + 1

l ≤

2(x − xk)THk(x − xk)

≤ u,

s

where q(x, xk) is a quadratic approximation to a modified Lagrangian function [8]. The

matrix Hk is a quasi-Newton approximation to the Hessian of the Lagrangian. A BFGS

update is applied after each major iteration. If some of the variables enter the Lagrangian

linearly the Hessian will have some zero rows and columns. If the nonlinear variables appear

first, then only the leading n1 rows and columns of the Hessian need be approximated, where

n1 is the number of nonlinear variables.

�

8

SNOPT 7 User’s Guide

2.3. Minor iterations

Solving the QP subproblem is itself an iterative procedure. Here, the iterations of the QP

solver SQOPT [10] form the minor iterations of the SQP method.

method similar to that in MINOS [16].

SQOPT uses a reduced-Hessian active-set method implemented as a reduced-gradient

At each minor iteration, the constraints Ax − s = b are partitioned into the form

BxB + SxS + N xN = b,

where the basis matrix B is square and nonsingular, and the matrices S, N are the remaining

columns of ( A −I ). The vectors xB, xS, xN are the associated basic, superbasic, and nonbasic

variables components of (x, s).

The term active-set method arises because the nonbasic variables xN are temporarily

frozen at their upper or lower bounds, and their bounds are considered to be active. Since

the general constraints are satisfied also, the set of active constraints takes the form

B S N

0@xB

xS

xN

1A =

I

b

xN

,

where xN represents the current values of the nonbasic variables. (In practice, nonbasic vari-

ables are sometimes frozen at values strictly between their bounds.) The reduced-gradient

method chooses to move the superbasic variables in a direction that will improve the objec-

tive function. The basic variables “tag along” to keep Ax− s = b satisfied, and the nonbasic

variables remain unaltered until one of them is chosen to become superbasic.

At a nonoptimal feasible point (x, s) we seek a search direction p such that (x, s) + p

remains on the set of active constraints yet improves the QP objective. If the new point is

to be feasible, we must have BpB + SpS + N pN = 0 and pN = 0. Once pS is specified, pB is

uniquely determined from the system BpB = −SpS. It follows that the superbasic variables

may be regarded as independent variables that are free to move in any desired direction.

The number of superbasic variables (nS say) therefore indicates the number of degrees of

freedom remaining after the constraints have been satisfied. In broad terms, nS is a measure

of how nonlinear the problem is. In particular, nS need not be more than one for linear

problems.

2.4. The reduced Hessian and reduced gradient

The dependence of p on pS may be expressed compactly as p = ZpS, where Z is a matrix

that spans the null space of the active constraints:

0@−B−1S

1A

where P permutes the columns of ( A −I ) into the orderB S N. Minimizing q(x, xk)

I

0

Z = P

(2.1)

with respect to pS now involves a quadratic function of pS:

gTZpS + 1

2 pT

S Z THZpS,

where g and H are expanded forms of gk and Hk defined for all variables (x, s). This is a

quadratic with Hessian Z THZ (the reduced Hessian) and constant vector Z Tg (the reduced

gradient). If the reduced Hessian is nonsingular, pS is computed from the system

Z THZpS = −Z Tg.

(2.2)

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc