�

OPTIMIZATION THEORY AND

METHODS

Nonlinear Programming

Springer Optimization and Its Applications

VOLUME 1

Managing Editor

Panos M. Pardalos (University of Florida)

Editor—Combinatorial Optimization

Ding-Zhu Du (University of Texas at Dallas)

Advisory Board

J. Birge (University of Chicago)

C.A. Floudas (Princeton University)

F. Giannessi (University of Pisa)

H.D. Sherali (Virginia Polytechnic and State University)

T. Terlaky (McMaster University)

Y. Ye (Stanford University)

Aims and Scope

Optimization has been expanding in all directions at an astonishing rate

during the last few decades. New algorithmic and theoretical techniques have

been developed, the diffusion into other disciplines has proceeded at a rapid

pace, and our knowledge of all aspects of the field has grown even more

profound. At the same time, one of the most striking trends in optimization is

the constantly increasing emphasis on the interdisciplinary nature of the field.

Optimization has been a basic tool in all areas of applied mathematics,

engineering, medicine, economics and other sciences.

The series Springer Optimization and Its Applications publishes

undergraduate and graduate textbooks, monographs and state-of-the-art

expository works that focus on algorithms for solving optimization problems

and also study applications involving such problems. Some of the topics

covered include nonlinear optimization (convex and nonconvex), network

stochastic optimization, optimal control, discrete

flow problems,

optimization, multi-objective programming, description of

software

packages, approximation techniques and heuristic approaches.

�

OPTIMIZATION THEORY AND

METHODS

Nonlinear Programming

By

WENYU SUN

Nanjing Normal University, Nanjing, China

YA-XIANG YUAN

Chinese Academy of Science, Beijing, China

1 3

�

Library of Congress Control Number: 2005042696

Printed on acid-free paper.

O 2006 Springer Science+Business Media, LLC

All rights reserved. This work may not be translated or copied in whole or in part without the written

permission of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York, NY

10013, USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in

connection with any form of information storage and retrieval, electronic adaptation, computer software,

or by similar or dissimilar methodology now known or hereafter developed is forbidden.

The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are

not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject

to proprietary rights.

Printed in the United States of America.

�

Contents

Preface

1 Introduction

1.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.2 Mathematics Foundations . . . . . . . . . . . . . . . . . . . .

1.2.1 Norm . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.2.2

Inverse and Generalized Inverse of a Matrix . . . . . .

1.2.3 Properties of Eigenvalues

. . . . . . . . . . . . . . . .

1.2.4 Rank-One Update . . . . . . . . . . . . . . . . . . . .

1.2.5 Function and Differential

. . . . . . . . . . . . . . . .

1.3 Convex Sets and Convex Functions . . . . . . . . . . . . . . .

1.3.1 Convex Sets . . . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . . .

1.3.2 Convex Functions

1.3.3

. . . . . . . .

Separation and Support of Convex Sets

1.4 Optimality Conditions for Unconstrained Case

. . . . . . . .

1.5 Structure of Optimization Methods . . . . . . . . . . . . . . .

Exercises

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

xi

1

1

2

3

9

12

17

22

31

32

36

50

57

63

68

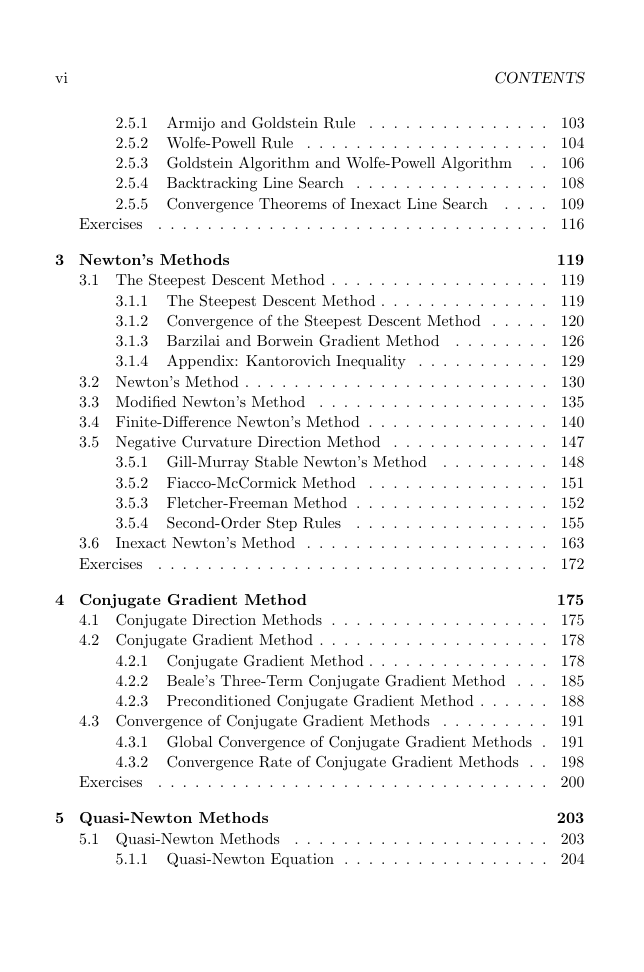

2 Line Search

71

71

2.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . .

74

2.2 Convergence Theory for Exact Line Search . . . . . . . . . .

84

2.3 Section Methods

. . . . . . . . . . . . . . . . . . . . . . . . .

84

2.3.1 The Golden Section Method . . . . . . . . . . . . . . .

87

2.3.2 The Fibonacci Method . . . . . . . . . . . . . . . . . .

89

Interpolation Method . . . . . . . . . . . . . . . . . . . . . . .

89

2.4.1 Quadratic Interpolation Methods . . . . . . . . . . . .

2.4.2 Cubic Interpolation Method . . . . . . . . . . . . . . .

98

Inexact Line Search Techniques . . . . . . . . . . . . . . . . . 102

2.4

2.5

�

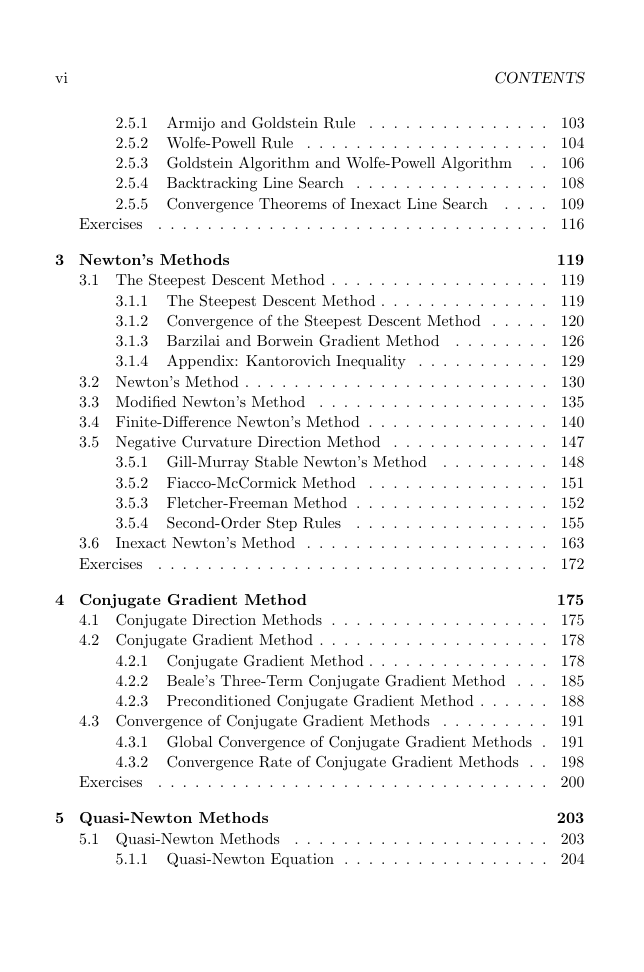

vi

CONTENTS

2.5.1 Armijo and Goldstein Rule . . . . . . . . . . . . . . . 103

2.5.2 Wolfe-Powell Rule . . . . . . . . . . . . . . . . . . . . 104

2.5.3 Goldstein Algorithm and Wolfe-Powell Algorithm . . 106

2.5.4 Backtracking Line Search . . . . . . . . . . . . . . . . 108

2.5.5 Convergence Theorems of Inexact Line Search . . . . 109

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Exercises

3 Newton’s Methods

119

3.1 The Steepest Descent Method . . . . . . . . . . . . . . . . . . 119

3.1.1 The Steepest Descent Method . . . . . . . . . . . . . . 119

3.1.2 Convergence of the Steepest Descent Method . . . . . 120

3.1.3 Barzilai and Borwein Gradient Method . . . . . . . . 126

3.1.4 Appendix: Kantorovich Inequality . . . . . . . . . . . 129

3.2 Newton’s Method . . . . . . . . . . . . . . . . . . . . . . . . . 130

3.3 Modified Newton’s Method . . . . . . . . . . . . . . . . . . . 135

3.4 Finite-Difference Newton’s Method . . . . . . . . . . . . . . . 140

3.5 Negative Curvature Direction Method . . . . . . . . . . . . . 147

3.5.1 Gill-Murray Stable Newton’s Method . . . . . . . . . 148

3.5.2 Fiacco-McCormick Method . . . . . . . . . . . . . . . 151

3.5.3 Fletcher-Freeman Method . . . . . . . . . . . . . . . . 152

3.5.4

. . . . . . . . . . . . . . . . 155

Inexact Newton’s Method . . . . . . . . . . . . . . . . . . . . 163

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Second-Order Step Rules

3.6

Exercises

4 Conjugate Gradient Method

175

4.1 Conjugate Direction Methods . . . . . . . . . . . . . . . . . . 175

4.2 Conjugate Gradient Method . . . . . . . . . . . . . . . . . . . 178

4.2.1 Conjugate Gradient Method . . . . . . . . . . . . . . . 178

4.2.2 Beale’s Three-Term Conjugate Gradient Method . . . 185

4.2.3 Preconditioned Conjugate Gradient Method . . . . . . 188

. . . . . . . . . 191

4.3.1 Global Convergence of Conjugate Gradient Methods . 191

4.3.2 Convergence Rate of Conjugate Gradient Methods . . 198

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

4.3 Convergence of Conjugate Gradient Methods

Exercises

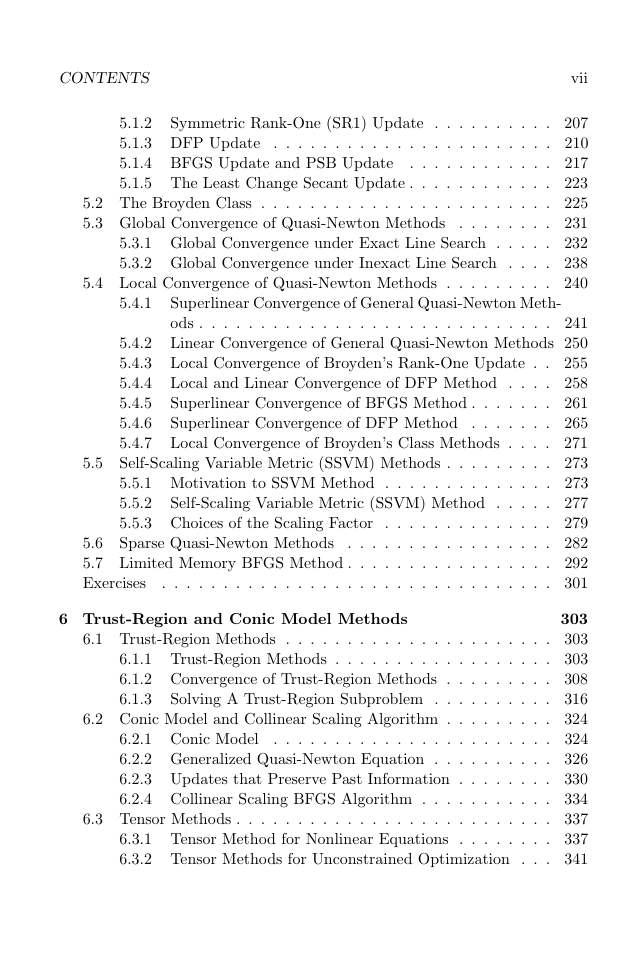

5 Quasi-Newton Methods

5.1 Quasi-Newton Methods

203

. . . . . . . . . . . . . . . . . . . . . 203

5.1.1 Quasi-Newton Equation . . . . . . . . . . . . . . . . . 204

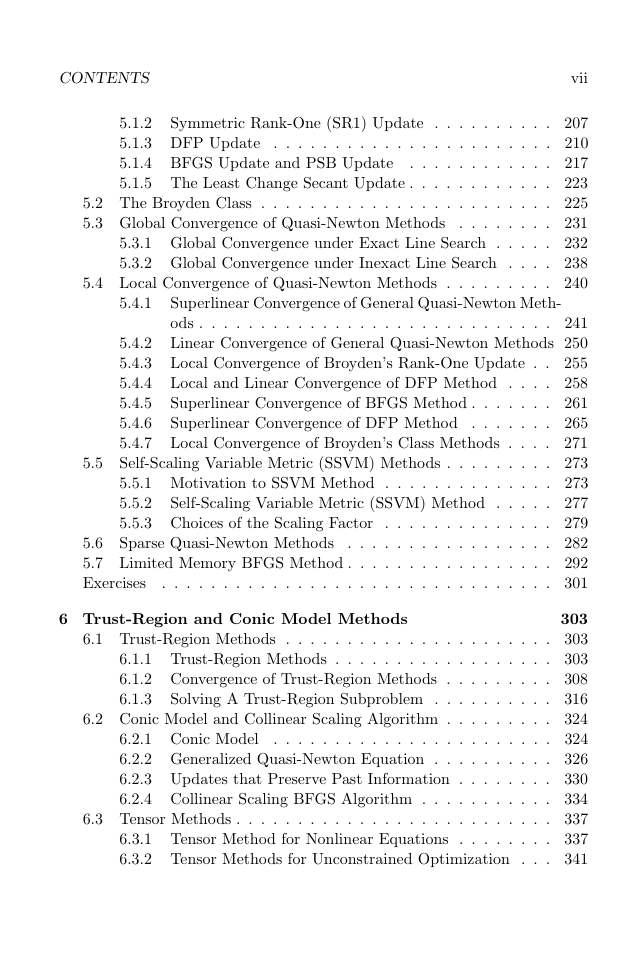

�

CONTENTS

vii

5.1.2

Symmetric Rank-One (SR1) Update . . . . . . . . . . 207

5.1.3 DFP Update . . . . . . . . . . . . . . . . . . . . . . . 210

5.1.4 BFGS Update and PSB Update

. . . . . . . . . . . . 217

5.1.5 The Least Change Secant Update . . . . . . . . . . . . 223

5.2 The Broyden Class . . . . . . . . . . . . . . . . . . . . . . . . 225

5.3 Global Convergence of Quasi-Newton Methods

. . . . . . . . 231

5.3.1 Global Convergence under Exact Line Search . . . . . 232

5.3.2 Global Convergence under Inexact Line Search . . . . 238

5.4 Local Convergence of Quasi-Newton Methods . . . . . . . . . 240

5.4.1

Superlinear Convergence of General Quasi-Newton Meth-

ods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

5.4.2 Linear Convergence of General Quasi-Newton Methods 250

5.4.3 Local Convergence of Broyden’s Rank-One Update . . 255

5.4.4 Local and Linear Convergence of DFP Method . . . . 258

Superlinear Convergence of BFGS Method . . . . . . . 261

5.4.5

5.4.6

Superlinear Convergence of DFP Method . . . . . . . 265

5.4.7 Local Convergence of Broyden’s Class Methods . . . . 271

5.5 Self-Scaling Variable Metric (SSVM) Methods . . . . . . . . . 273

5.5.1 Motivation to SSVM Method . . . . . . . . . . . . . . 273

5.5.2

Self-Scaling Variable Metric (SSVM) Method . . . . . 277

5.5.3 Choices of the Scaling Factor . . . . . . . . . . . . . . 279

5.6 Sparse Quasi-Newton Methods

. . . . . . . . . . . . . . . . . 282

5.7 Limited Memory BFGS Method . . . . . . . . . . . . . . . . . 292

Exercises

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

6 Trust-Region and Conic Model Methods

303

6.1 Trust-Region Methods . . . . . . . . . . . . . . . . . . . . . . 303

6.1.1 Trust-Region Methods . . . . . . . . . . . . . . . . . . 303

6.1.2 Convergence of Trust-Region Methods . . . . . . . . . 308

6.1.3

Solving A Trust-Region Subproblem . . . . . . . . . . 316

6.2 Conic Model and Collinear Scaling Algorithm . . . . . . . . . 324

6.2.1 Conic Model

. . . . . . . . . . . . . . . . . . . . . . . 324

6.2.2 Generalized Quasi-Newton Equation . . . . . . . . . . 326

6.2.3 Updates that Preserve Past Information . . . . . . . . 330

6.2.4 Collinear Scaling BFGS Algorithm . . . . . . . . . . . 334

6.3 Tensor Methods . . . . . . . . . . . . . . . . . . . . . . . . . . 337

6.3.1 Tensor Method for Nonlinear Equations . . . . . . . . 337

6.3.2 Tensor Methods for Unconstrained Optimization . . . 341

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc