Cover

Copyright

Contents at a Glance

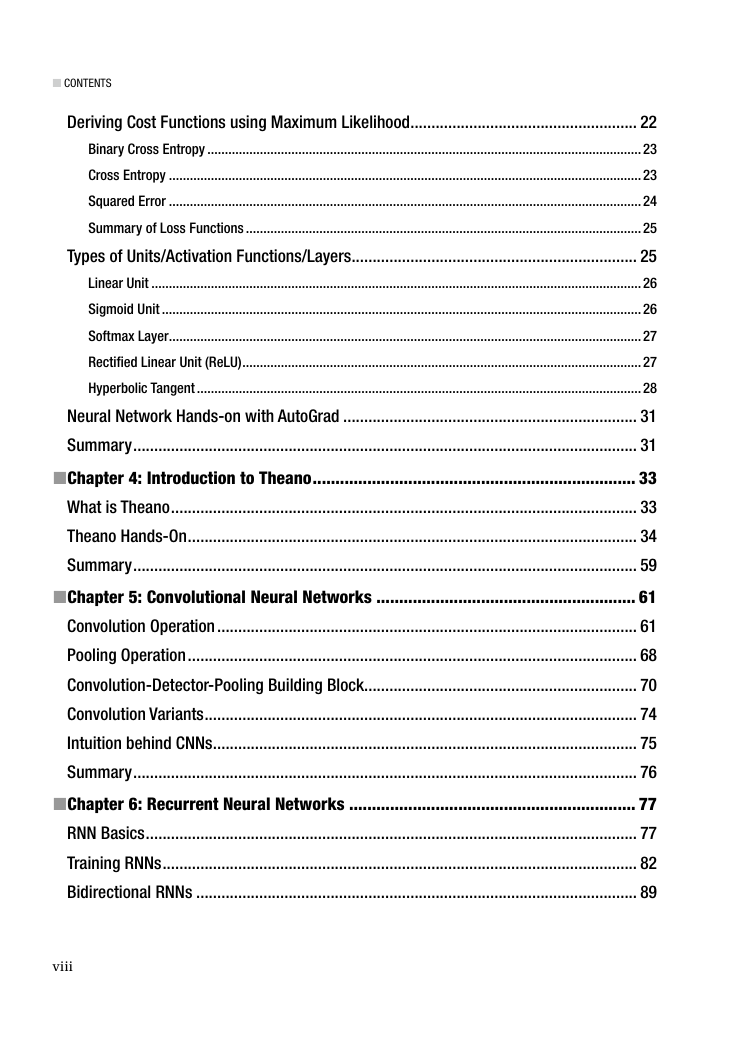

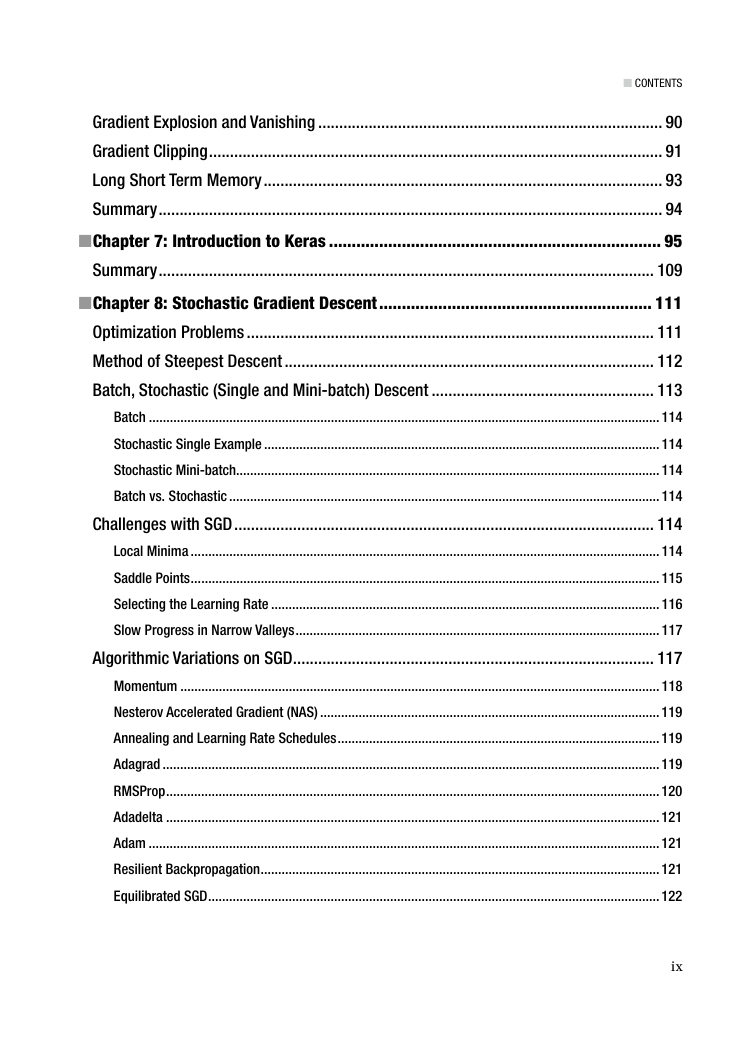

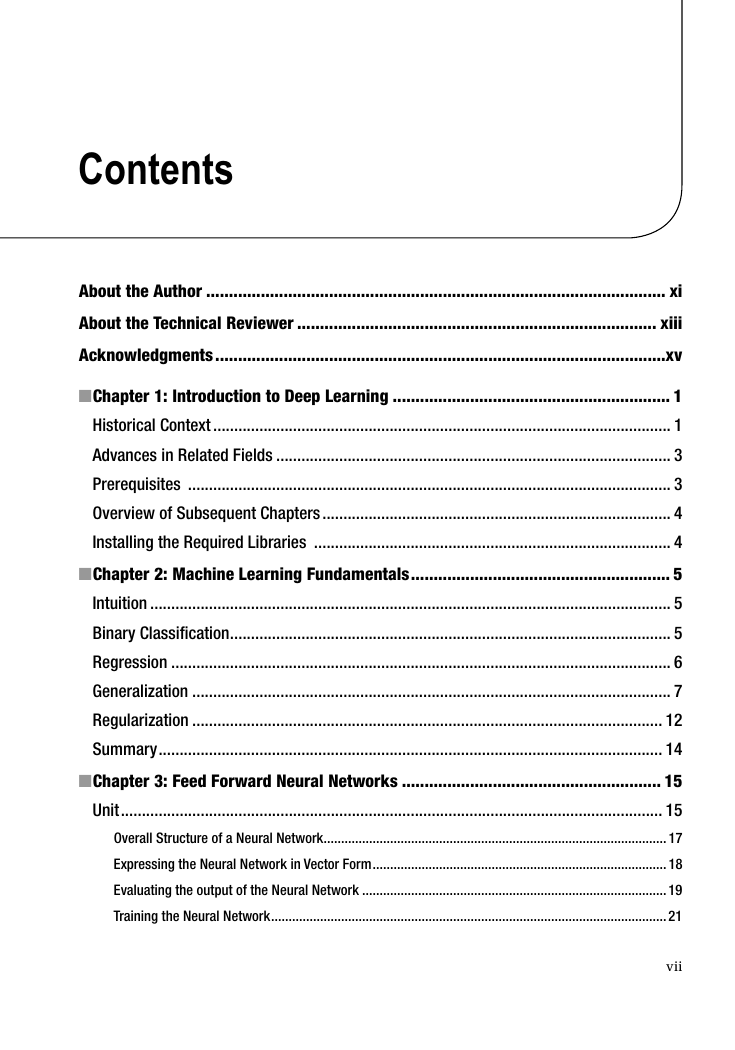

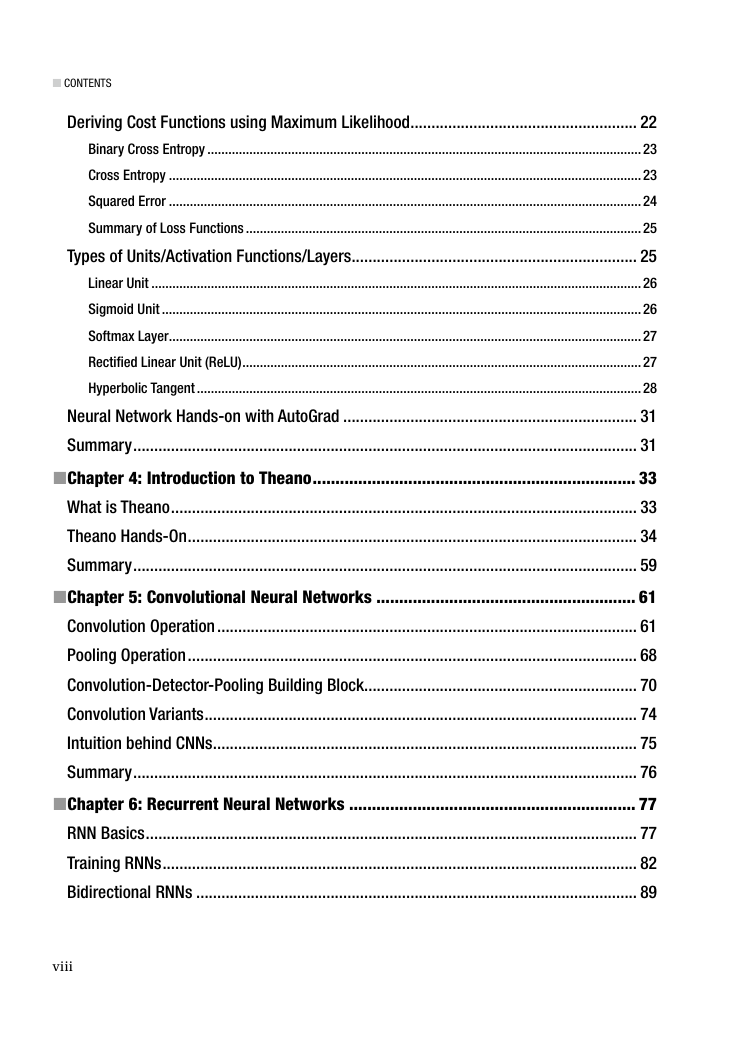

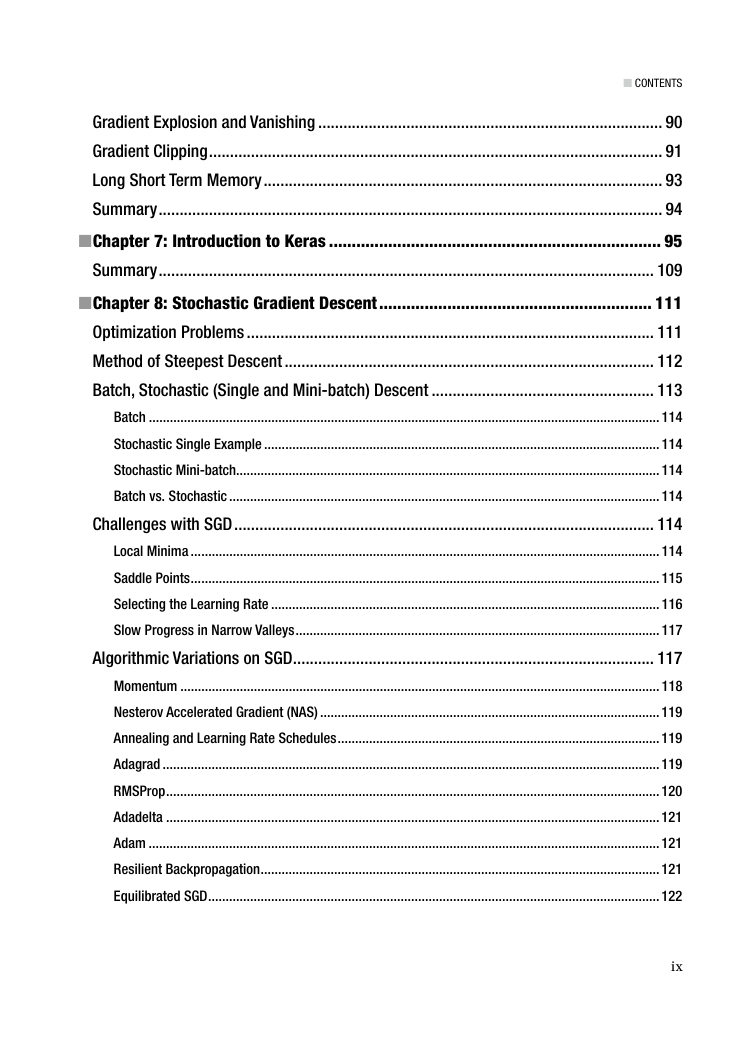

Contents

About the Author

1: Introduction to Deep Learning

Historical Context

Advances in Related Fields

Prerequisites

Overview of Subsequent Chapters

Installing the Required Libraries

2: Machine Learning Fundamentals

Intuition

Binary Classification

Regression

Generalization

Regularization

Summary

3: Feed Forward Neural Networks

Unit

Overall Structure of a Neural Network

Expressing the Neural Network in Vector Form

Evaluating the Output of the Neural Network

Training the Neural Network

Deriving Cost Functions using Maximum Likelihood

Binary Cross Entropy

Cross Entropy

Squared Error

Summary of Loss Functions

Types of Units/Activation Functions/Layers

Linear Unit

Sigmoid Unit

Softmax Layer

Rectified Linear Unit (ReLU)

Hyperbolic Tangent

Neural Network Hands-on with Autograd

Summary

4: Introduction to Theano

What is Theano

Theano Hands-On

Summary

5: Convolutional Neural Networks

Convolution Operation

Pooling Operation

Convolution-Detector-Pooling Building Block

Convolution Variants

Intuition behind CNNs

Summary

6: Recurrent Neural Networks

RNN Basics

Training RNNs

Bidirectional RNNs

Gradient Explosion and Vanishing

Gradient Clipping

Long Short Term Memory

Summary

7: Introduction to Keras

Summary

8: Stochastic Gradient Descent

Optimization Problems

Method of Steepest Descent

Batch, Stochastic (Single and Mini-batch) Descent

Batch

Stochastic Single Example

Stochastic Mini-batch

Batch vs. Stochastic

Challenges with SGD

Local Minima

Saddle Points

Selecting the Learning Rate

Slow Progress in Narrow Valleys

Algorithmic Variations on SGD

Momentum

Nesterov Accelerated Gradient (NAG)

Annealing and Learning Rate Schedules

Adagrad

RMSProp

Adadelta

Adam

Resilient Backpropagation

Equilibrated SGD

Tricks and Tips for using SGD

Preprocessing Input Data

Choice of Activation Function

Preprocessing Target Value

Initializing Parameters

Shuffling Data

Batch Normalization

Early Stopping

Gradient Noise

Parallel and Distributed SGD

Hogwild

Downpour

Hands-on SGD with Downhill

Summary

9: Automatic Differentiation

Numerical Differentiation

Symbolic Differentiation

Automatic Differentiation Fundamentals

Forward/Tangent Linear Mode

Reverse/Cotangent/Adjoint Linear Mode

Implementation of Automatic Differentiation

Source Code Transformation

Operator Overloading

Hands-on Automatic Differentiation with Autograd

Summary

10: Introduction to GPUs

Summary

Index
