DARPA XAI Literature Review


Explanation in Human-AI Systems:

A Literature Meta-Review

Synopsis of Key Ideas and Publications

and

Bibliography for Explainable AI

Prepared by Task Area 2

Shane T. Mueller

Michigan Technological University

Robert R. Hoffman, William Clancey, Abigail Emrey

Institute for Human and Machine Cognition

Gary Klein

MacroCognition, LLC

DARPA XAI Program

February 2019


Explanation in Human-AI Systems

Executive Summary

This is an integrative review that addresses the question, "What makes for a good explanation?" with reference to AI systems. Pertinent literatures are vast, so this review is necessarily selective. That said, most of the key concepts and issues are expressed in this Report. The Report encapsulates the history of computer science efforts to create systems that explain and instruct (intelligent tutoring systems and expert systems). It describes the explainability issues and challenges in modern AI, and presents capsule views of the leading psychological theories of explanation. Certain articles stand out by virtue of their particular relevance to XAI, and their methods, results, and key points are highlighted.

It is recommended that AI/XAI researchers be encouraged to include in their research reports fuller details on their empirical or experimental methods, in the fashion of experimental psychology research reports: details on Participants, Instructions, Procedures, Tasks, Dependent Variables (operational definitions of the measures and metrics), Independent Variables (conditions), and Control Conditions.

The papers reviewed in this Report offer methodological guidance for the evaluation of XAI systems. The Report also highlights some noteworthy considerations: the differences between global and local explanations; the need to evaluate the performance of the human-machine work system (and not just the performance of the AI or the performance of the users); and the need to recognize that experiment procedures tacitly impose on the user the burden of self-explanation.

Corrective/contrastive user tasks support self-explanation or explanation-as-exploration. Tasks that involve human-AI interactivity and co-adaptation, such as bug or oddball detection, hold promise for XAI evaluation since they too conform to the notions of "explanation-as-exploration" and explanation as a co-adaptive dialog process. Tasks that involve predicting the AI's determinations, combined with post-experimental interviews, hold promise for the study of mental models in the XAI context.


Preface

This Report is an expansion of a previous Report on the DARPA XAI Program, which was titled "Literature Review and Integration of Key Ideas for Explainable AI," and was dated February 2018. This new version integrates nearly 200 additional references that have since been identified.

This Report includes a new section titled "Review of Human Evaluation of XAI Systems." This section focuses on reports, many of them recent, on projects in which human-machine AI or XAI systems underwent some sort of empirical evaluation. This new section is particularly relevant to the empirical and experimental activities in the DARPA XAI Program.

Acknowledgements

Contributions to this Report were made by Sara Tan and Brittany Nelson of Michigan Technological University, and Jared Van Dam of the Institute for Human and Machine Cognition.

This material is approved for public release. Distribution is unlimited. This material is based on research sponsored by the Air Force Research Lab (AFRL) under agreement number FA8650-17-2-7711. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon.

Disclaimer

The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of AFRL or the U.S. Government.


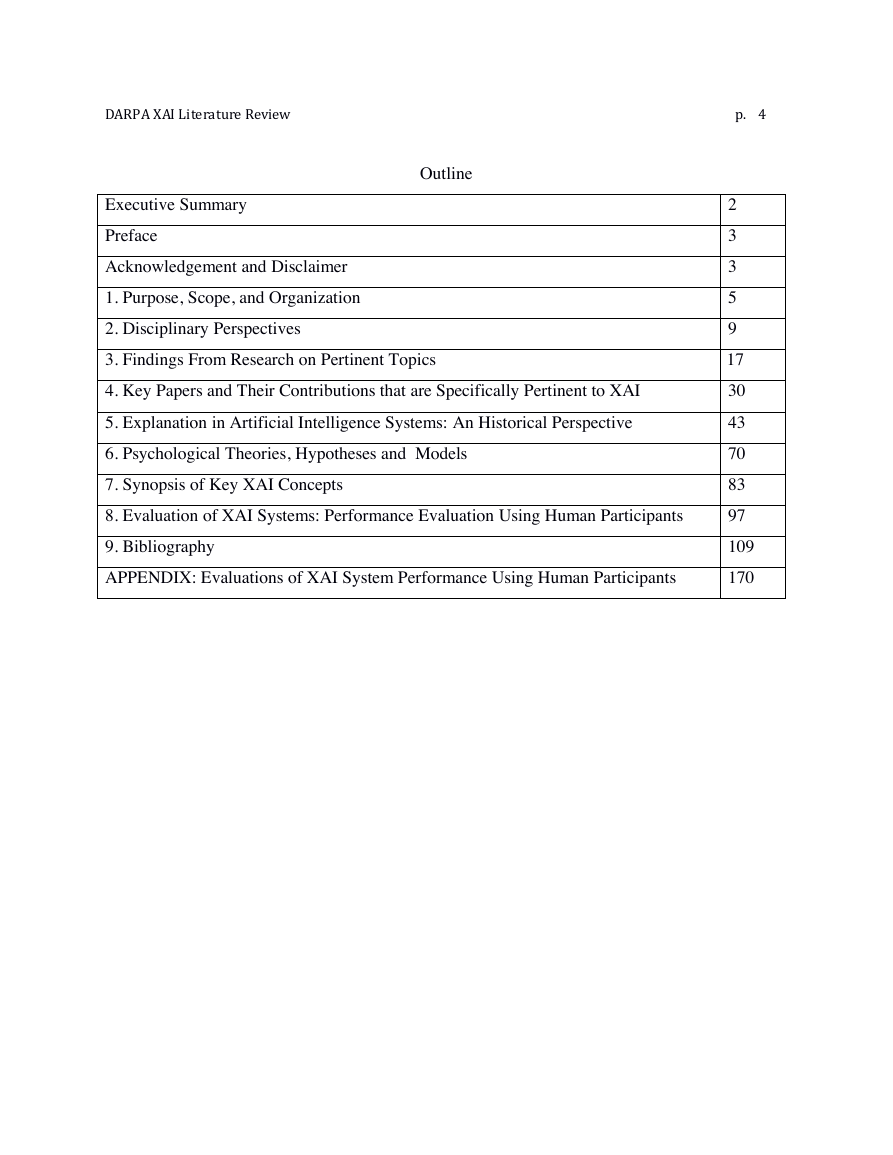

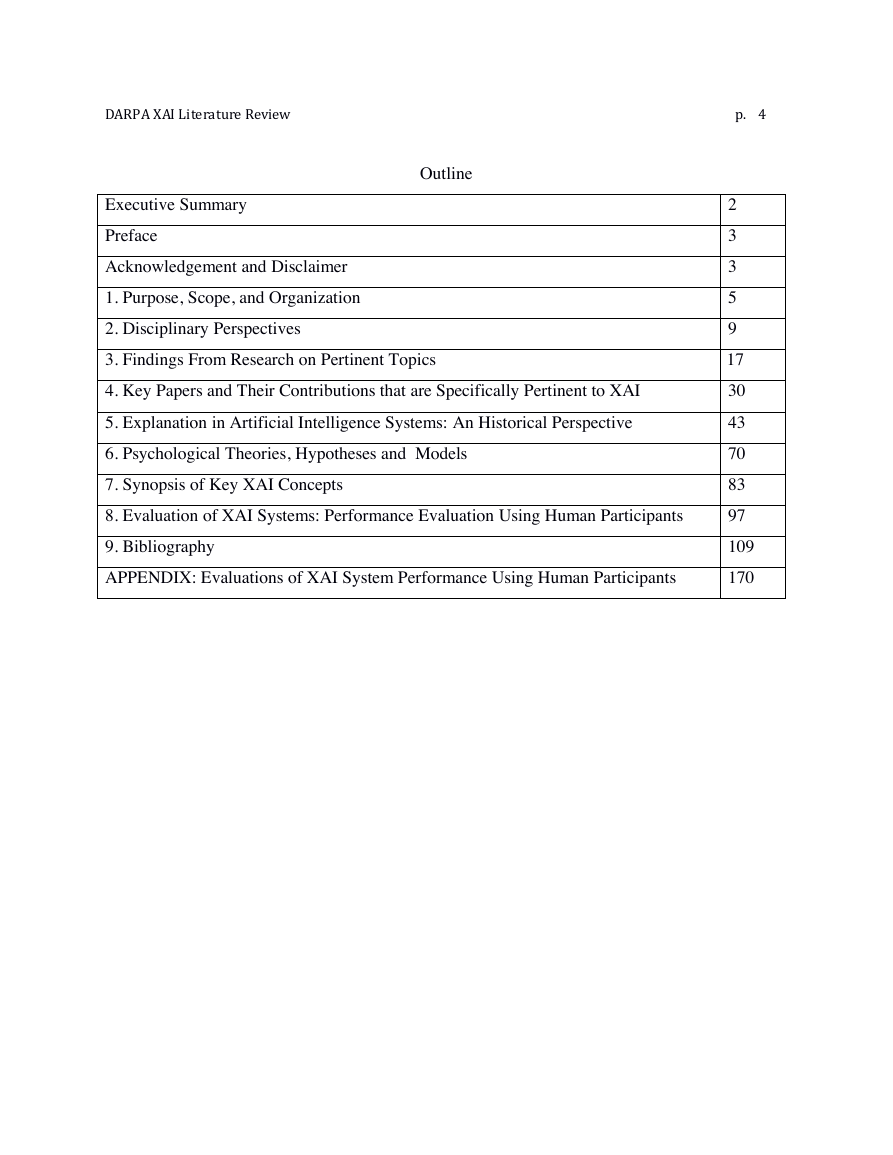

Outline

Executive Summary

Preface

Acknowledgement and Disclaimer

1. Purpose, Scope, and Organization

2. Disciplinary Perspectives

3. Findings From Research on Pertinent Topics

4. Key Papers and Their Contributions that are Specifically Pertinent to XAI

5. Explanation in Artificial Intelligence Systems: An Historical Perspective

6. Psychological Theories, Hypotheses and Models

7. Synopsis of Key XAI Concepts

8. Evaluation of XAI Systems: Performance Evaluation Using Human Participants

9. Bibliography

APPENDIX: Evaluations of XAI System Performance Using Human Participants


1. Purpose, Scope, and Organization

The purpose of this document is to distill from existing scientific literatures the key recent ideas that pertain to the DARPA XAI Program.

Importance of the Topic

For decision makers who rely upon analytics and data science, explainability is a real issue. If the computational system relies on a simple decision model such as logistic regression, they can understand it and, because it seems reasonable and fair, convince the executives who have to sign off on the system. They can justify the analytical results to shareholders, regulators, and others. But for "Deep Nets" and "Machine Learning" systems, they can no longer do this. There is a need to find ways to explain the system to decision makers so that they know their decisions are going to be reasonable; simply invoking a neurological metaphor might not be sufficient. The goals of explanation involve persuasion, but persuasion comes only as a consequence of understanding how the AI works, the mistakes the system can make, and the safety measures surrounding it.
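To make the contrast concrete, the following minimal sketch illustrates why a logistic regression model is comparatively easy to justify: its log-odds are a sum of per-feature terms (weight times value) plus an intercept, so each input's contribution to a particular decision can be read off directly. The loan-approval framing, the feature names, and the weights and intercept are hypothetical illustrations, not drawn from any system discussed in this Report.

```python
import math

# Hypothetical model parameters, for illustration only (not from any real system).
INTERCEPT = -1.0
WEIGHTS = {"income_10k": 0.4, "debt_ratio": -2.0, "years_employed": 0.1}


def explain(applicant):
    """Score one applicant with a logistic regression model.

    Returns the predicted probability and each feature's additive
    contribution (weight * value) to the log-odds.
    """
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) link
    return probability, contributions


if __name__ == "__main__":
    prob, contrib = explain({"income_10k": 5.0, "debt_ratio": 0.5, "years_employed": 3.0})
    # List features from most to least influential on this one decision.
    for name, value in sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name:>15}: {value:+.2f}")
    print(f"approval probability: {prob:.2f}")
```

For the hypothetical applicant, the contributions are +2.0 (income), -1.0 (debt ratio), and about +0.3 (years employed), giving log-odds of about 0.3 and an approval probability of roughly 0.57. A deep net does not offer such a direct per-feature reading of its decisions, which is the gap XAI methods try to address.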

... current efforts face unprecedented difficulties: contemporary models are more complex and less interpretable than ever; [AI systems are] used for a wider array of tasks, and are more pervasive in everyday life than in the past; and [AI is] increasingly allowed to make (and take) more autonomous decisions (and actions). Justifying these decisions will only become more crucial, and there is little doubt that this field will continue to rise in prominence and produce exciting and much needed work in the future (Biran and Cotton, 2017, p. 4).

This quotation brings into relief the importance of XAI. Governments and the general public are expressing concern about the emerging "black box society." A proposed regulation before the European Union (Goodman and Flaxman, 2016) prohibits "automatic processing" unless users' rights are safeguarded. Users have a "right to an explanation" concerning algorithm-created decisions that are based on personal information. Future laws may restrict AI, which represents a challenge to industry.

The importance of explanation, and especially explanation in AI, has been emphasized over the past decades, with considerable discussion of the explainability of Deep Nets and Machine Learning systems in both the technical literature and the recent popular press (Alang, 2017; Bornstein, 2016; Champlin, Bell, and Schocken, 2017; Clancey, 1986a; Cooper, 2004; Core, et al., 2006; Harford, 2014; Hawkins, 2017; Kim, 2018; Kuang, 2017; Marcus, 2017; Monroe, 2018; Nott, 2017; Pavlus, 2017; Pinker, 2017; Schwiep, 2017; Sheh and Monteath, 2018; Voosen, 2017; Wang, et al., 2019; Weinberger, 2017).

Reports and opinion pieces in the past several years have discussed social justice, equity, and fairness issues that are implicated by "inscrutable" AI (Adler, et al., 2018; Amodei, et al., 2016; Bellotti and Edwards, 2001; Bostrom and Yudkowsky, 2014; Dwork, et al., 2012; Fallon and Blaha, 2018; Hajian, et al., 2015; Hayes and Shah, 2017; Joseph, et al., 2016a,b; Kroll, et al., 2016; Lum and Isaac, 2016; Otte, 2013; Sweeney, 2013; Tate, et al., 2016; Varshney and Alemzadeh, 2017; Wachter, Mittelstadt, and Russell, 2017).

One of the clearest statements about explainability issues was provided by Ed Felten (Felten, 2017). He identified four social issues: confidentiality, complexity, unreasonableness, and injustice. First, an algorithm may be confidential or a trade secret, or revealing it may pose a security risk. This barrier to explanation is known to create inequity in automated decision processes, including loans, hiring, insurance, and prison sentencing and release; because the algorithms are legally secret, it is difficult for outsiders to identify their biases. Second, an algorithm may be well understood but highly complex, so that a clear understanding by a layperson is not possible. This is an area where XAI approaches might be helpful, as they may be able to deliberately create alternative algorithms that are easier to explain. A third challenge described by Felten is unreasonableness: algorithms that use rationally justifiable information to make decisions that are nevertheless unreasonable, discriminatory, or unfair. Finally, he identified injustice as a challenge: we may understand how an algorithm works but still want an explanation of how its decisions are consistent with a legal or moral code.

Scope of This Review

A thorough analysis of the subject of explanation would have to cover literatures spanning the entire history of Western philosophy from Aristotle onward. While this Report does call out key concepts mined from the diverse literatures and disciplines, the focus is on explanation in the context of AI systems. An explanation facility for intelligent systems can play a role in situations where the system provides information and explanations in order to help the user make decisions and take actions. The focus of this Report is on contexts in which the AI makes a determination or reaches conclusions that then have to be explained to the user. AI approaches that pertain to Explainable AI include rule-based systems (e.g., Mycin), which also make determinations or reach conclusions based on predicate calculus. However, the specific focus of the XAI Program is Deep Net (DN) and Machine Learning (ML) systems.

Some of the articles cited in this Report could be sufficiently integrated by identifying their key ideas, methods, or findings. Many articles were read in detail by at least one of the TA-2 Team's researchers. The goal was to create a compendium rather than an annotated bibliography. In other words, this Report does not exhaustively summarize each individual publication; rather, it synthesizes across publications in order to highlight key concepts. That said, certain articles do stand out by virtue of their particular relevance to XAI, and their key points are highlighted across the sections of this Report.

Organization of This Review

We look at the pertinent literatures from three primary perspectives: key concepts, research, and history. We start in Section 2 by looking at the pertinent literatures from the perspective of the traditional disciplines (i.e., computer science, philosophy, psychology, human factors). Next, we present the key research findings and ideas on topics that are pertinent to explanation, such as causal reasoning, abduction, and concept formation (Section 3). The research that pertains specifically to XAI is encapsulated in Section 4. Section 5 presents an historical perspective on approaches to explanation in AI: the trends and the methods as they developed over time. The key ideas and research findings (Sections 2 through 5) are distilled in Section 6, in the form of a glossary of ideas that seem most pertinent to XAI. Next, in Section 7 we point to the reports of research that specifically addresses the topic of explanation in AI applications. Section 8 is new (compared to the February 2018 release of this XAI literature review). This Section focuses on reports in which AI explanation systems were evaluated in research with human participants.
